prometheus-数据展示之grafana部署和数据源配置

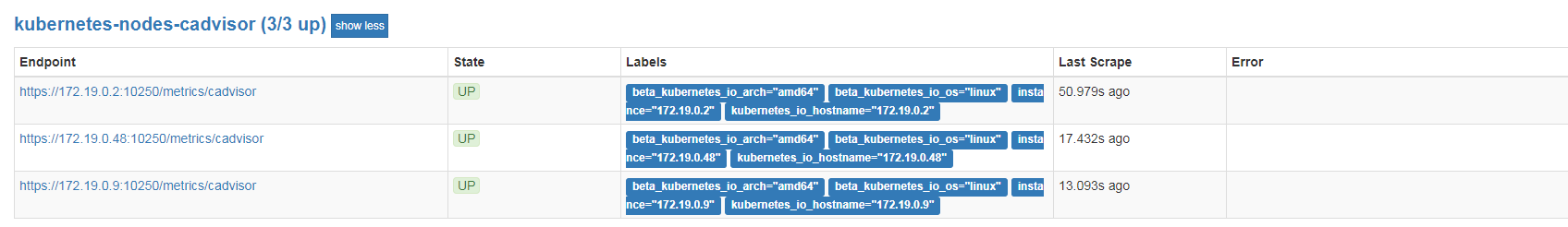

1、监控pods 。 prometheus再部署以后,会自带cAdvisor。结果如下:

2、K8S集群状态监控。需要使用kube-state-metrics插件。部署以后

kubernetes.io/cluster-service: "true" 会自动启用监控对象,无需配置

[root@VM_0_48_centos prometheus]# cat kube-state-metrics-deployment.yaml apiVersion: apps/v1 kind: Deployment metadata: name: kube-state-metrics namespace: kube-system labels: k8s-app: kube-state-metrics kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile version: v1.3.0 spec: selector: matchLabels: k8s-app: kube-state-metrics version: v1.3.0 replicas: 1 template: metadata: labels: k8s-app: kube-state-metrics version: v1.3.0 annotations: scheduler.alpha.kubernetes.io/critical-pod: '' spec: priorityClassName: system-cluster-critical serviceAccountName: kube-state-metrics containers: - name: kube-state-metrics image: quay.io/coreos/kube-state-metrics:v1.3.0 ports: - name: http-metrics containerPort: 8080 - name: telemetry containerPort: 8081 readinessProbe: httpGet: path: /healthz port: 8080 initialDelaySeconds: 5 timeoutSeconds: 5 - name: addon-resizer image: k8s.gcr.io/addon-resizer:1.8.5 resources: limits: cpu: 100m memory: 30Mi requests: cpu: 100m memory: 30Mi env: - name: MY_POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: MY_POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: config-volume mountPath: /etc/config command: - /pod_nanny - --config-dir=/etc/config - --container=kube-state-metrics - --cpu=100m - --extra-cpu=1m - --memory=100Mi - --extra-memory=2Mi - --threshold=5 - --deployment=kube-state-metrics volumes: - name: config-volume configMap: name: kube-state-metrics-config --- # Config map for resource configuration. apiVersion: v1 kind: ConfigMap metadata: name: kube-state-metrics-config namespace: kube-system labels: k8s-app: kube-state-metrics kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile data: NannyConfiguration: |- apiVersion: nannyconfig/v1alpha1 kind: NannyConfiguration

部署结果展示:

[root@VM_0_48_centos prometheus]# kubectl get svc -n kube-system|grep kube-state-metrics kube-state-metrics ClusterIP 10.0.0.164 <none> 8080/TCP,8081/TCP 3d

3、node宿主机监控。由于node_exporter 需要监控宿主机状态,不建议采用容器部署。建议采用二进制部署。解压后。

[root@VM_0_48_centos prometheus]# cat /usr/lib/systemd/system/node_exporter.service [Unit] Description=prometheus [Service] Restart=on-failure ExecStart=/opt/prometheus/node_exporter/node_exporter ####需要改为自己的解压后的目录 [Install] WantedBy=multi-user.target

[root@VM_0_48_centos prometheus]# ps -ef|grep node_exporter root 15748 1 0 09:47 ? 00:00:08 /opt/prometheus/node_exporter/node_exporter root 16560 4032 0 18:47 pts/0 00:00:00 grep --color=auto node_exporter [root@VM_0_48_centos prometheus]#

4、prometheus启用监控目标

[root@VM_0_48_centos prometheus]# cat prometheus-configmap.yaml # Prometheus configuration format https://prometheus.io/docs/prometheus/latest/configuration/configuration/ apiVersion: v1 kind: ConfigMap metadata: name: prometheus-config namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: EnsureExists data: prometheus.yml: | scrape_configs: - job_name: prometheus static_configs: - targets: - localhost:9090 - job_name: node #####启动node_exporter static_configs: - targets: - 172.19.0.48:9100 - 172.19.0.14:9100 - 172.19.0.9:9100 - 172.19.0.2:9100 - job_name: kubernetes-apiservers kubernetes_sd_configs: - role: endpoints relabel_configs: - action: keep regex: default;kubernetes;https source_labels: - __meta_kubernetes_namespace - __meta_kubernetes_service_name - __meta_kubernetes_endpoint_port_name scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt insecure_skip_verify: true bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token - job_name: kubernetes-nodes-kubelet kubernetes_sd_configs: - role: node relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt insecure_skip_verify: true bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token - job_name: kubernetes-nodes-cadvisor kubernetes_sd_configs: - role: node relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __metrics_path__ replacement: /metrics/cadvisor scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt insecure_skip_verify: true bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token - job_name: kubernetes-service-endpoints kubernetes_sd_configs: - role: endpoints relabel_configs: - action: keep regex: true source_labels: - __meta_kubernetes_service_annotation_prometheus_io_scrape - action: replace regex: (https?) source_labels: - __meta_kubernetes_service_annotation_prometheus_io_scheme target_label: __scheme__ - action: replace regex: (.+) source_labels: - __meta_kubernetes_service_annotation_prometheus_io_path target_label: __metrics_path__ - action: replace regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 source_labels: - __address__ - __meta_kubernetes_service_annotation_prometheus_io_port target_label: __address__ - action: labelmap regex: __meta_kubernetes_service_label_(.+) - action: replace source_labels: - __meta_kubernetes_namespace target_label: kubernetes_namespace - action: replace source_labels: - __meta_kubernetes_service_name target_label: kubernetes_name - job_name: kubernetes-services kubernetes_sd_configs: - role: service metrics_path: /probe params: module: - http_2xx relabel_configs: - action: keep regex: true source_labels: - __meta_kubernetes_service_annotation_prometheus_io_probe - source_labels: - __address__ target_label: __param_target - replacement: blackbox target_label: __address__ - source_labels: - __param_target target_label: instance - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: - __meta_kubernetes_namespace target_label: kubernetes_namespace - source_labels: - __meta_kubernetes_service_name target_label: kubernetes_name - job_name: kubernetes-pods kubernetes_sd_configs: - role: pod relabel_configs: - action: keep regex: true source_labels: - __meta_kubernetes_pod_annotation_prometheus_io_scrape - action: replace regex: (.+) source_labels: - __meta_kubernetes_pod_annotation_prometheus_io_path target_label: __metrics_path__ - action: replace regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 source_labels: - __address__ - __meta_kubernetes_pod_annotation_prometheus_io_port target_label: __address__ - action: labelmap regex: __meta_kubernetes_pod_label_(.+) - action: replace source_labels: - __meta_kubernetes_namespace target_label: kubernetes_namespace - action: replace source_labels: - __meta_kubernetes_pod_name target_label: kubernetes_pod_name alerting: alertmanagers: - static_configs: - targets: ["alertmanager:80"] rule_files: - "/etc/config/alert_rules/*.yml"

5、结果展示

6、grafana 部署

docker run -d --name=grafana -p 3000:3000 grafana/grafana [root@VM_0_48_centos prometheus]# docker ps -a|grep grafana 6ea8d2852906 grafana/grafana "/run.sh" 5 hours ago Up 5 hours 0.0.0.0:3000->3000/tcp grafana [root@VM_0_48_centos prometheus]#

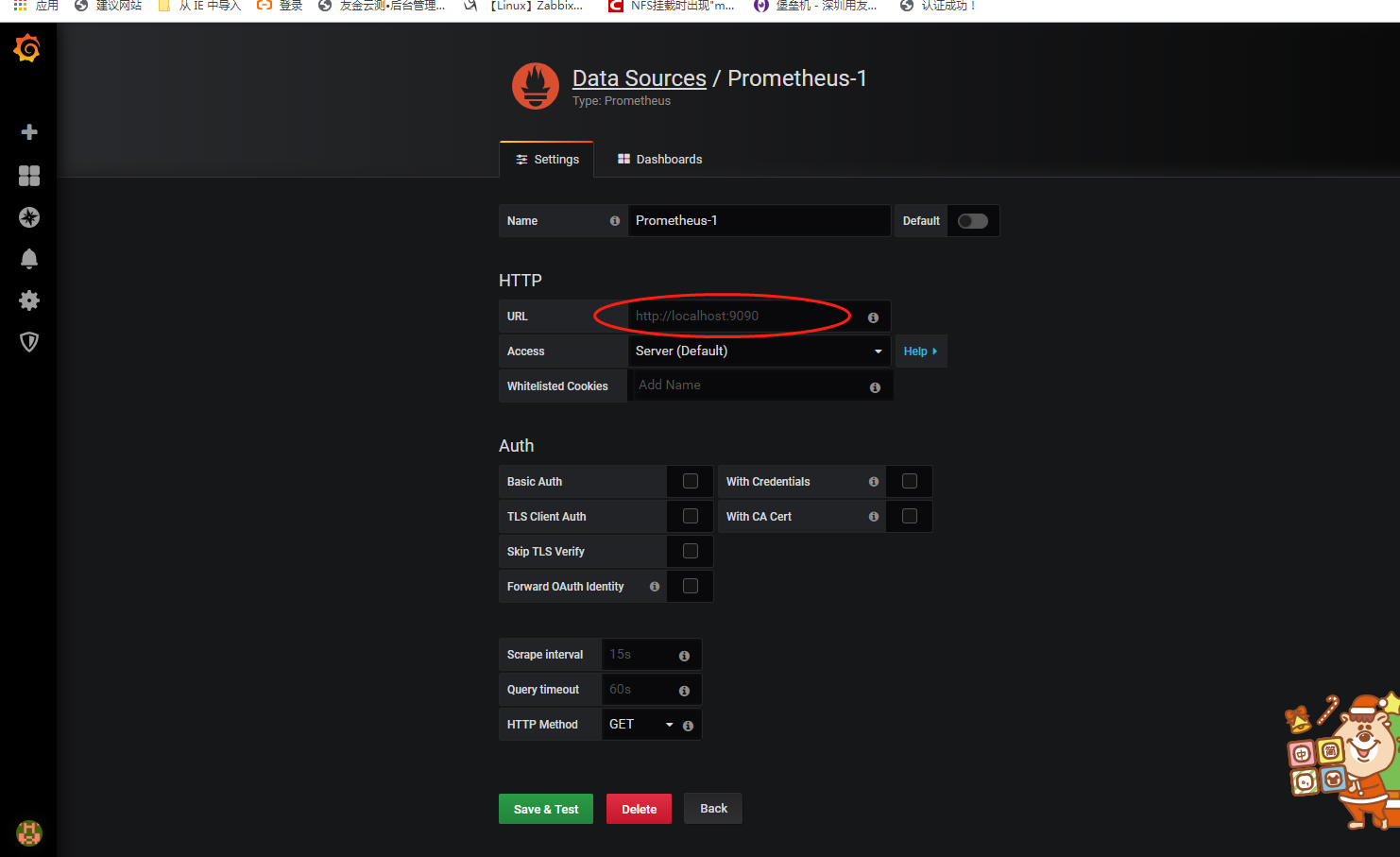

6、登录grafana 配置数据源展示结果

7 、导入展示

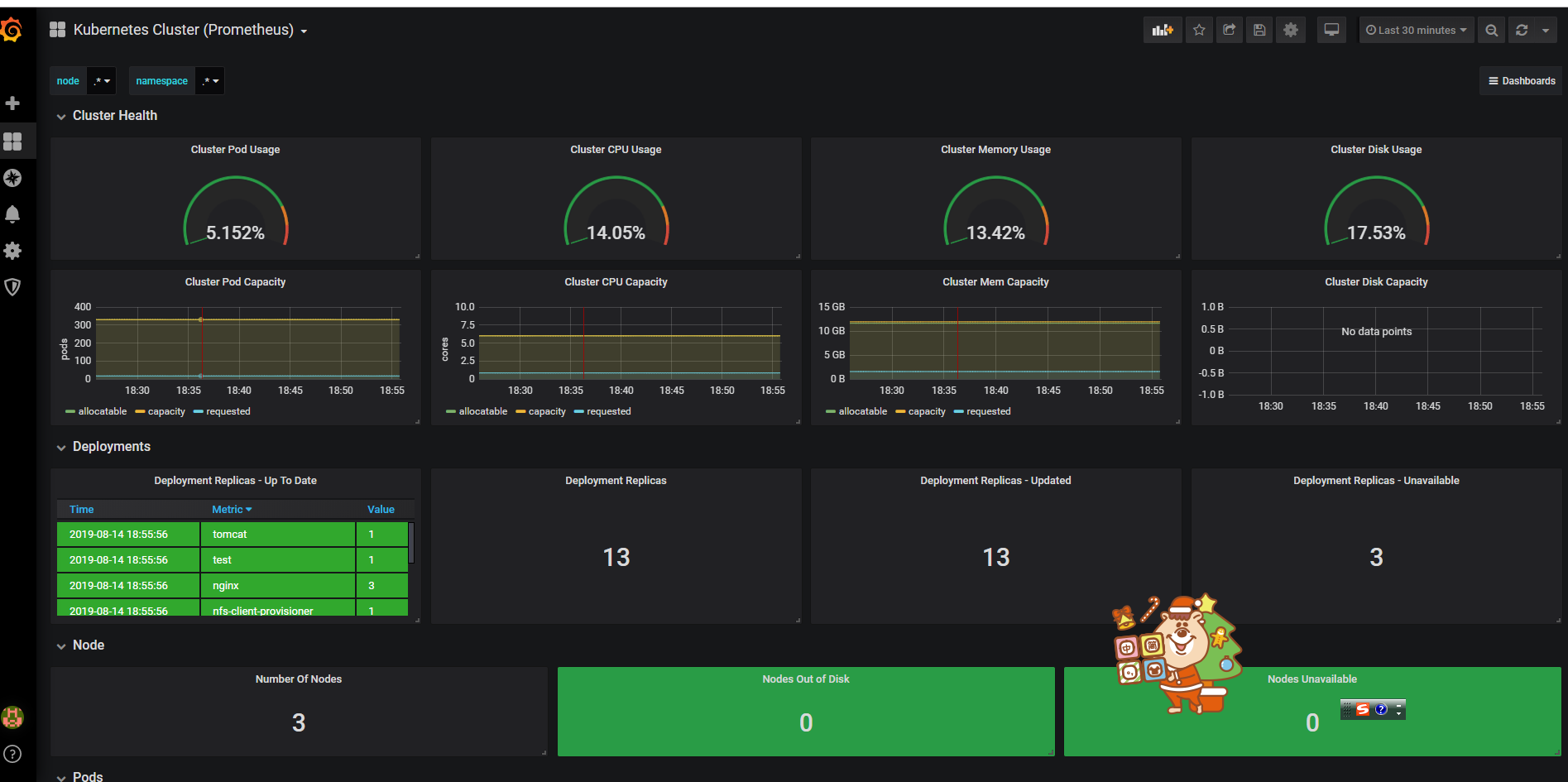

推荐模板: •集群资源监控:3119 •资源状态监控:6417 •Node监控:9276

8、结果展示:

良禽择木而栖 贤臣择主而侍

浙公网安备 33010602011771号

浙公网安备 33010602011771号