k8s-静态PV和动态PV

1、pv 简单介绍

PersistenVolume(PV):对存储资源创建和使用的抽象,使得存储作为集群中的资源管理 PV分为静态和动态,动态能够自动创建PV • PersistentVolumeClaim(PVC):让用户不需要关心具体的Volume实现细节 容器与PV、PVC之间的关系,可以如下图所示: 总的来说,PV是提供者,PVC是消费者,消费的过程就是绑定。 参考网址:1、https://www.cnblogs.com/weifeng1463/p/10037803.html 2、https://blog.csdn.net/qq_25611295/article/details/86065053

2、nfs 搭建:

1 2 3 4 5 6 7 8 9 10 11 | yum install nfs-utils vim /etc/exports/data/k8s/ 172.16.1.0/24(sync,rw,no_root_squash) systemctl start nfs; systemctl start rpcbind systemctl enable nfs测试:yum install nfs-utilsshowmount -e 172.16.1.131 |

3、PersistentVolume 静态绑定 (手工创建PV、PVC)

[root@VM_0_48_centos prometheus]# cat mypv.yaml apiVersion: v1 kind: PersistentVolume metadata: name: pv001 spec: capacity: storage: 10Gi accessModes: - ReadWriteMany nfs: path: /data/k8s server: 172.19.0.14 [root@VM_0_48_centos prometheus]# cat mypvc.yaml ###会根据大小和类型自动匹配到上面的PV kind: PersistentVolumeClaim apiVersion: v1 metadata: namespace: kube-system name: prometheus-claim spec: accessModes: - ReadWriteMany resources: requests: storage: 10Gi [root@VM_0_48_centos prometheus]# kubectl get pv,pvc -n kube-system NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE persistentvolume/pv001 10Gi RWX Retain Bound kube-system/prometheus-claim 17m NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE persistentvolumeclaim/prometheus-claim Bound pv001 10Gi RWX

4、PersistentVolume 静态PVC使用案例

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 | apiVersion: apps/v1kind: StatefulSetmetadata: name: prometheus namespace: kube-system labels: k8s-app: prometheus kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile version: v2.2.1spec: serviceName: "prometheus" replicas: 1 podManagementPolicy: "Parallel" updateStrategy: type: "RollingUpdate" selector: matchLabels: k8s-app: prometheus template: metadata: labels: k8s-app: prometheus annotations: scheduler.alpha.kubernetes.io/critical-pod: '' spec: priorityClassName: system-cluster-critical serviceAccountName: prometheus initContainers: - name: "init-chown-data" image: "busybox:latest" imagePullPolicy: "IfNotPresent" command: ["chown", "-R", "65534:65534", "/data"] volumeMounts: - name: prometheus-data mountPath: /data subPath: "" containers: - name: prometheus-server-configmap-reload image: "jimmidyson/configmap-reload:v0.1" imagePullPolicy: "IfNotPresent" args: - --volume-dir=/etc/config - --webhook-url=http://localhost:9090/-/reload volumeMounts: - name: config-volume mountPath: /etc/config readOnly: true resources: limits: cpu: 10m memory: 10Mi requests: cpu: 10m memory: 10Mi - name: prometheus-server image: "prom/prometheus:v2.2.1" imagePullPolicy: "IfNotPresent" args: - --config.file=/etc/config/prometheus.yml - --storage.tsdb.path=/data - --web.console.libraries=/etc/prometheus/console_libraries - --web.console.templates=/etc/prometheus/consoles - --web.enable-lifecycle ports: - containerPort: 9090 readinessProbe: httpGet: path: /-/ready port: 9090 initialDelaySeconds: 30 timeoutSeconds: 30 livenessProbe: httpGet: path: /-/healthy port: 9090 initialDelaySeconds: 30 timeoutSeconds: 30 # based on 10 running nodes with 30 pods each resources: limits: cpu: 200m memory: 1000Mi requests: cpu: 200m memory: 1000Mi volumeMounts: - name: config-volume mountPath: /etc/config - name: prometheus-data mountPath: /data subPath: "" terminationGracePeriodSeconds: 300 volumes: - name: config-volume configMap: name: prometheus-config - name: prometheus-data persistentVolumeClaim: #申明使用静态PVC永久化存储 claimName: prometheus-claim |

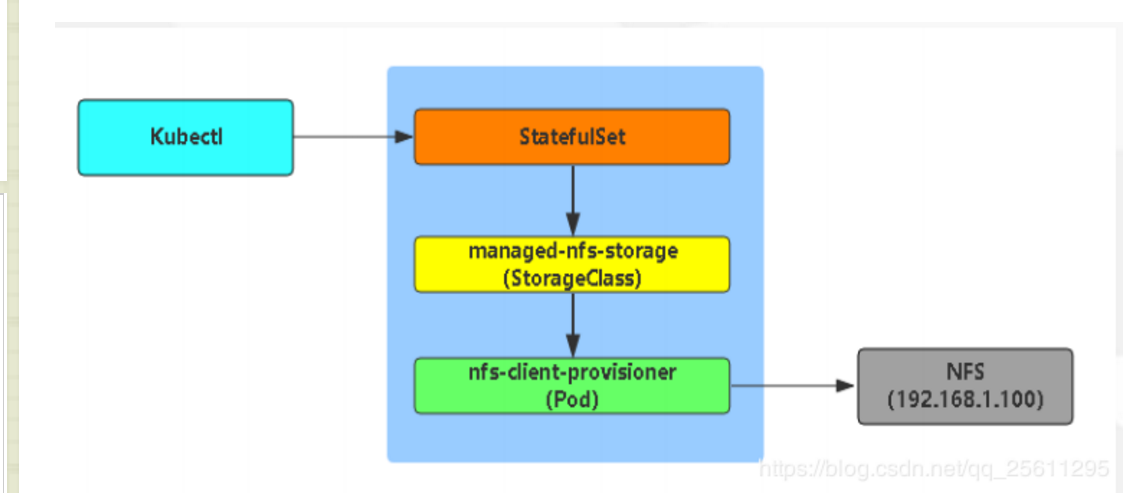

5、动态PV,K8S调用资源对象自动创建PV。生产环境常用

当我们k8s业务上来的时候,大量的pvc,此时我们人工创建匹配的话,工作量就会非常大了,需要动态的自动挂载相应的存储。

我们需要使用到StorageClass,来对接存储,靠他来自动关联pvc,并创建pv。 Kubernetes支持动态供给的存储插件: https://kubernetes.io/docs/concepts/storage/storage-classes/ 因为NFS不支持动态存储,所以我们需要借用这个存储插件。 nfs动态相关部署可以参考: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

6、存储对象申明和授权。

定义一个storage [root@VM_0_48_centos prometheus]# cat storageclass-nfs.yaml apiVersion: storage.k8s.io/v1beta1 kind: StorageClass metadata: name: managed-nfs-storage provisioner: fuseim.pri/ifs 因为storage自动创建pv需要经过kube-apiserver,所以要进行授权 [root@VM_0_48_centos prometheus]# cat storageclass-rbac.yaml apiVersion: v1 kind: ServiceAccount metadata: name: nfs-client-provisioner --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: nfs-client-provisioner-runner rules: - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "create", "delete"] - apiGroups: [""] resources: ["persistentvolumeclaims"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["storageclasses"] verbs: ["get", "list", "watch"] - apiGroups: [""] resources: ["events"] verbs: ["list", "watch", "create", "update", "patch"] --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: run-nfs-client-provisioner subjects: - kind: ServiceAccount name: nfs-client-provisioner namespace: default roleRef: kind: ClusterRole name: nfs-client-provisioner-runner apiGroup: rbac.authorization.k8s.io 部署一个自动创建pv的服务 [root@VM_0_48_centos prometheus]# cat prometheus-statefulset.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: prometheus namespace: kube-system labels: k8s-app: prometheus kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile version: v2.2.1 spec: serviceName: "prometheus" replicas: 1 podManagementPolicy: "Parallel" updateStrategy: type: "RollingUpdate" selector: matchLabels: k8s-app: prometheus template: metadata: labels: k8s-app: prometheus annotations: scheduler.alpha.kubernetes.io/critical-pod: '' spec: priorityClassName: system-cluster-critical serviceAccountName: prometheus initContainers: - name: "init-chown-data" image: "busybox:latest" imagePullPolicy: "IfNotPresent" command: ["chown", "-R", "65534:65534", "/data"] volumeMounts: - name: prometheus-data mountPath: /data subPath: "" containers: - name: prometheus-server-configmap-reload image: "jimmidyson/configmap-reload:v0.1" imagePullPolicy: "IfNotPresent" args: - --volume-dir=/etc/config - --webhook-url=http://localhost:9090/-/reload volumeMounts: - name: config-volume mountPath: /etc/config readOnly: true resources: limits: cpu: 10m memory: 10Mi requests: cpu: 10m memory: 10Mi - name: prometheus-server image: "prom/prometheus:v2.2.1" imagePullPolicy: "IfNotPresent" args: - --config.file=/etc/config/prometheus.yml - --storage.tsdb.path=/data - --web.console.libraries=/etc/prometheus/console_libraries - --web.console.templates=/etc/prometheus/consoles - --web.enable-lifecycle ports: - containerPort: 9090 readinessProbe: httpGet: path: /-/ready port: 9090 initialDelaySeconds: 30 timeoutSeconds: 30 livenessProbe: httpGet: path: /-/healthy port: 9090 initialDelaySeconds: 30 timeoutSeconds: 30 # based on 10 running nodes with 30 pods each resources: limits: cpu: 200m memory: 1000Mi requests: cpu: 200m memory: 1000Mi volumeMounts: - name: config-volume mountPath: /etc/config - name: prometheus-data mountPath: /data subPath: "" terminationGracePeriodSeconds: 300 volumes: - name: config-volume configMap: name: prometheus-config - name: prometheus-data persistentVolumeClaim: claimName: prometheus-claim

7、效果测试

[root@VM_0_48_centos prometheus]# cat test.yaml apiVersion: v1 kind: Service metadata: name: nginx labels: app: nginx spec: ports: - port: 80 name: web clusterIP: None selector: app: nginx --- apiVersion: apps/v1 kind: StatefulSet metadata: name: web spec: serviceName: "nginx" replicas: 3 selector: matchLabels: app: nginx template: metadata: labels: app: nginx spec: containers: - name: nginx image: nginx ports: - containerPort: 80 name: web volumeMounts: - name: www mountPath: /usr/share/nginx/html volumeClaimTemplates: - metadata: name: www spec: accessModes: [ "ReadWriteOnce" ] storageClassName: "managed-nfs-storage" resources: requests: storage: 1Gi kubectl exec -it web-0 sh # cd /usr/share/nginx/html # touch 1.txt

良禽择木而栖 贤臣择主而侍

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· AI与.NET技术实操系列:基于图像分类模型对图像进行分类

· go语言实现终端里的倒计时

· 如何编写易于单元测试的代码

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 25岁的心里话

· 闲置电脑爆改个人服务器(超详细) #公网映射 #Vmware虚拟网络编辑器

· 基于 Docker 搭建 FRP 内网穿透开源项目(很简单哒)

· 零经验选手,Compose 一天开发一款小游戏!

· 一起来玩mcp_server_sqlite,让AI帮你做增删改查!!