Notes on a Simple Web Scraper (Douban / dytt)

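I've been stumbling through Python for a month. Now that I've reached regular expressions, I can finally write something fun, so here is a write-up: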

---

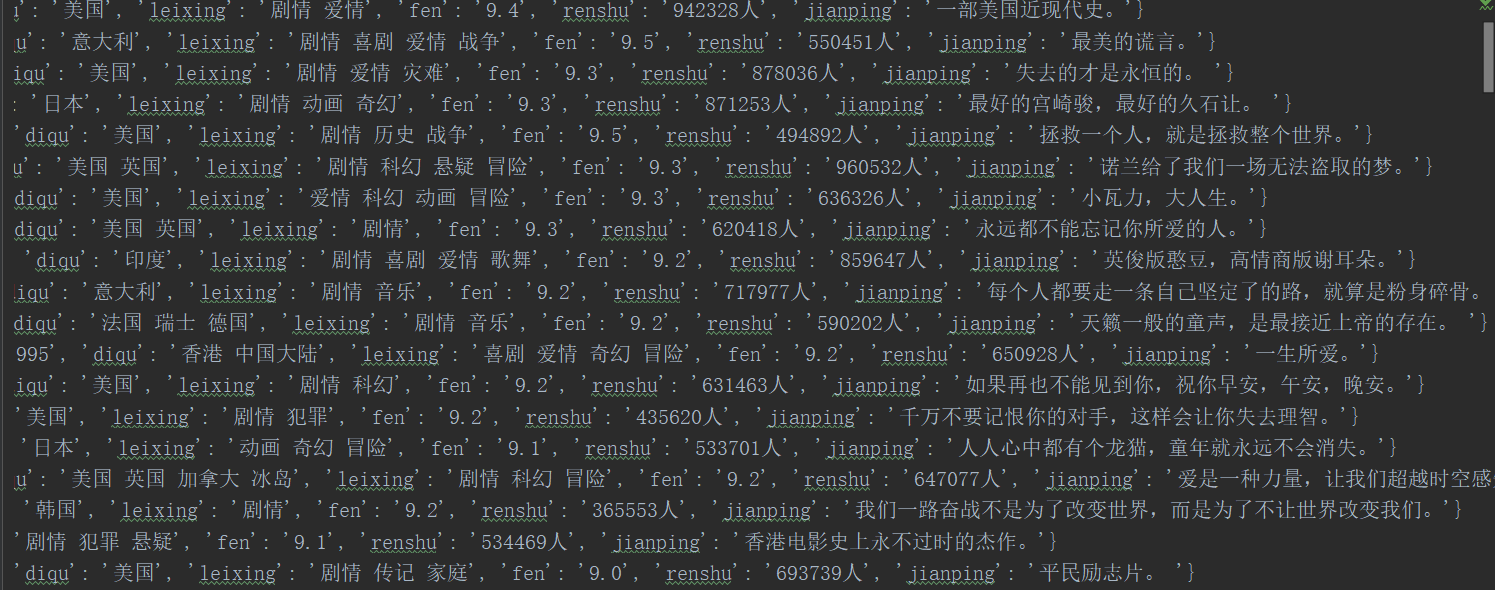

1. Scraping the Douban Movie Top 250 list

Environment:

PyCharm Professional 2018.2.4

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)]

Result:

Code:

```python
from urllib.request import Request, urlopen
import re
# import ssl  # uncomment if you run into certificate-verification errors
# ssl._create_default_https_context = ssl._create_unverified_context


# The regex rule. re.S (DOTALL) lets "." also match newlines, so ".*?" can span lines.
# The page separates the director/year/region/genre fields with the HTML entity &nbsp;,
# so the pattern matches that entity literally.
obj = re.compile(r'<div class="item">.*?<span class="title">(?P<name>.*?)</span>.*?导演:(?P<daoyan>.*?)&nbsp;.*?'
                 r'主演:(?P<zhuyan>.*?)<br>\n (?P<shijian>.*?)&nbsp;/&nbsp;(?P<diqu>.*?)&nbsp;'
                 r'/&nbsp;(?P<leixing>.*?)\n.*?<span class="rating_num" property="v:average">(?P<fen>.*?)</span>.*?<span>'
                 r'(?P<renshu>.*?)评价</span>.*?<span class="inq">(?P<jianping>.*?)</span>', re.S)


# Download a page and decode it as UTF-8.
# Douban tends to reject urllib's default User-Agent (e.g. with HTTP 418), so send a browser-like one.
def getContent(url):
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    return urlopen(req).read().decode("utf-8")


# Match the page content; this is a generator that yields one dict per movie.
def parseContent(content):
    for el in obj.finditer(content):
        # keys are the group names (name, daoyan, zhuyan, ...); strip the page's indentation
        yield {key: value.strip() for key, value in el.groupdict().items()}


# Top 250 = 10 pages of 25 movies; "start" is the offset of each page.
with open("douban_movie.txt", mode="a", encoding="utf-8") as f:
    for i in range(10):
        url = "https://movie.douban.com/top250?start=%s&filter=" % (i * 25)
        print(url)
        for el in parseContent(getContent(url)):
            f.write(str(el) + "\n")  # write to the txt file; remember the newline
        # f.write("==============================================\n")  # uncomment to check page breaks
```
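One weakness of a single long pattern built from `.*?` and `re.S`: if an entry happens to lack a field (say the one-line quote in `<span class="inq">`), the lazy `.*?` keeps scanning into the next movie, so two entries get merged into one match and a movie silently disappears. Below is a minimal sketch of a sturdier variant, assuming the same `<div class="item">` markup: split the page into per-movie chunks first, then search each field on its own so that a missing field simply becomes `None`. The sample HTML and the names `naive`, `FIELDS` and `parse_items` are made up for illustration, and only three fields are covered.

```python
import re

# Made-up sample: Movie A has no one-line quote, Movie B does.
sample = '''
<div class="item"><span class="title">Movie A</span>
<span class="rating_num" property="v:average">9.7</span></div>
<div class="item"><span class="title">Movie B</span>
<span class="rating_num" property="v:average">9.5</span>
<span class="inq">Quote for B</span></div>
'''

# One long pattern in the style of the scraper: re.S lets .*? run across items.
naive = re.compile(r'<span class="title">(?P<name>.*?)</span>.*?'
                   r'<span class="inq">(?P<jianping>.*?)</span>', re.S)
print([m.groupdict() for m in naive.finditer(sample)])
# → [{'name': 'Movie A', 'jianping': 'Quote for B'}]  (A and B merged, B's name lost)

# Hypothetical per-field patterns, applied to one movie's chunk at a time.
FIELDS = {
    "name": re.compile(r'<span class="title">(.*?)</span>'),
    "fen": re.compile(r'<span class="rating_num" property="v:average">(.*?)</span>'),
    "jianping": re.compile(r'<span class="inq">(.*?)</span>'),
}


def parse_items(html):
    """Yield one dict per <div class="item">; a missing field becomes None."""
    for chunk in html.split('<div class="item">')[1:]:  # [0] is the text before the first item
        row = {}
        for key, pattern in FIELDS.items():
            m = pattern.search(chunk)
            row[key] = m.group(1).strip() if m else None
        yield row


for row in parse_items(sample):
    print(row)
# → {'name': 'Movie A', 'fen': '9.7', 'jianping': None}
# → {'name': 'Movie B', 'fen': '9.5', 'jianping': 'Quote for B'}
```

Splitting on the literal `<div class="item">` string is itself an assumption about the page markup, but it keeps to the `re`-only approach used in this post.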

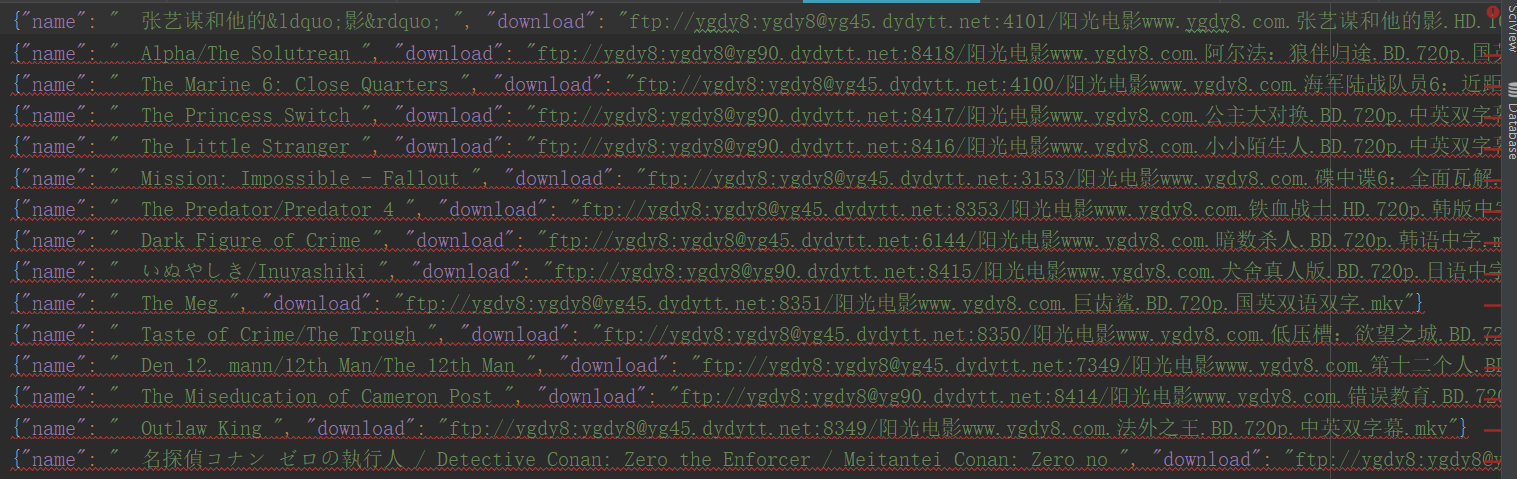

2. Scraping the latest movies and their download links from a certain site (dytt)

Environment:

PyCharm Professional 2018.2.4

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)]

Result:

Code:

```python
from urllib.parse import urljoin
from urllib.request import urlopen
import json
import re

url = "https://www.dytt8.net/"


# Download a page; the site is GBK-encoded, and errors="ignore" skips any stray invalid byte.
def fetch(page_url):
    return urlopen(page_url).read().decode("gbk", errors="ignore")


# Regexes: links in the "latest movies" list on the home page,
# then the title and the download link on each detail page.
# \s* tolerates the (possibly full-width) spaces inside the "片 名" label.
obj = re.compile(r"最新电影下载</a>]<a href='(?P<url1>.*?)'>")
obj1 = re.compile(r'<div id="Zoom">.*?<br />◎片\s*名(?P<name>.*?)<br />'
                  r'.*?bgcolor="#fdfddf"><a href="(?P<download>.*?)">', re.S)


def get_content(content):
    # One JSON object per line (JSON Lines), not one big JSON document.
    with open("movie_dytt.json", mode="w", encoding="utf-8") as f:
        for el in obj.finditer(content):
            sub_url = urljoin(url, el.group("url1"))  # build the detail-page URL
            m = obj1.search(fetch(sub_url))
            if m is None:  # detail page with a different layout: skip it instead of raising IndexError
                continue
            s = json.dumps({"name": m.group("name").strip(), "download": m.group("download")},
                           ensure_ascii=False)
            f.write(s + "\n")
            f.flush()  # save progress after every movie


get_content(fetch(url))  # fetch the home page and run
```
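Two details of the dytt script can be checked offline. Building the detail-page URL by plain concatenation (`url + href`) doubles the slash when the base ends in `/` and the href starts with one, whereas `urllib.parse.urljoin` resolves the relative link properly. And because the script writes one JSON object per line to `movie_dytt.json`, the file is really JSON Lines: a single `json.loads` over the whole text fails, so it has to be read back line by line. The link and the records below are invented for illustration.

```python
import io
import json
from urllib.parse import urljoin

base = "https://www.dytt8.net/"
href = "/html/gndy/dyzz/example.html"  # made-up relative link

print(base + href)          # → https://www.dytt8.net//html/gndy/dyzz/example.html
print(urljoin(base, href))  # → https://www.dytt8.net/html/gndy/dyzz/example.html

# Write two made-up records the way the scraper does: one JSON object per line.
buf = io.StringIO()
for rec in [{"name": "电影甲", "download": "ftp://example/a.mkv"},
            {"name": "电影乙", "download": "ftp://example/b.mkv"}]:
    buf.write(json.dumps(rec, ensure_ascii=False) + "\n")
text = buf.getvalue()

# The whole file is not one JSON document...
try:
    json.loads(text)
except json.JSONDecodeError as e:
    print("json.loads failed:", e.msg)  # → json.loads failed: Extra data

# ...so read it back one line at a time.
records = [json.loads(line) for line in text.splitlines() if line]
print(len(records), records[1]["name"])  # → 2 电影乙
```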