Theory of Computation

Model of Computation

Mathematical Logic

Def. Formal logical system consist of

- An alphabet of symbols

- A set of finite strings of these symbols, called well-formed formula (wf.)

- A set of well-formed formulas, called axioms

- A finite set of "rules of deduction"

Def. Perfect Logical System:

- Soundness

- Consistency

- Completeness

Thm.[Gödel's completeness theorem] We have a complete first-order logic system.

Thm.[Gödel's incompleteness theorem] We cannot have a complete first-order arithmetic system.

Def. tautology/contradiction: a statement form that is always true/false.

Def. \(A\) logically implies \(B\) iff \(A\rightarrow B\) is tautology. \(A\) is logically equivalent to \(B\) iff \(A\leftrightarrow B\) is tautology.

Def. Rules for substitution:Replace the statement variables of a tautology with any statement form, the result will still be a tautology.

Thm. Every statement form is logically equivalent to a disjunctive/conjunctive normal form: $$(\vee_i(\wedge_j Q_{ij})),(\wedge_i(\vee_j Q_{ij})).$$

Thm. Adequate sets of connective: \(\{\neg, \wedge\}, \{\neg, \vee\}, \{\neg, \rightarrow\},\{\text{NOR}\}, \{\text{NAND}\}.\)

Statement logic

Formal Statement Calculus - L

Components:

- Alphabet of symbols

- Finite strings of these symbols, called well-formed formula (wf.)

Axioms:

- (L1) \(A \rightarrow (B \rightarrow A)\)

- (L2) \((A \rightarrow (B \rightarrow C)) \rightarrow ((A \rightarrow B) \rightarrow (A \rightarrow C))\)

- (L3) \(((\neg A) \rightarrow (\neg B)) \rightarrow (B \rightarrow A)\)

Rules of Deduction:

- Modus ponens (MP) From \(A\) and \(A \rightarrow B\), \(B\) is a direct consequence

\[\begin{aligned} (1)\ & (A\to ((B\to A)\to A)) & (L1) \\ (2)\ & ((A\to ((B\to A)\to A)) \to ((A\to (B\to A))\to (A\to A))) & (L2) \\ (3)\ & ((A\to (B\to A))\to (A\to A)) & (MP\ (1) (2)) \\ (4)\ & (A\to (B\to A)) & (L1) \\ (5)\ & (A\to A) & (MP\ (3) (4)) \end{aligned} \]

\[\begin{aligned} (1)\ & A & (\Gamma) \\ (2)\ & (A\to (B\to A)) & (L1) \\ (3)\ & (B\to A) & (MP\ (1) (2)) \\ (4)\ & (B\to (A\to C)) & (\Gamma) \\ (5)\ & ((B\to (A\to C))\to ((B\to A)\to (B\to C))) & (L2) \\ (6)\ & ((B\to A)\to (B\to C)) & (MP\ (4) (5)) \\ (7)\ & (B\to C) & (MP\ (3) (6)) \end{aligned}\]

The deduction theorem: \(\Gamma\cup \{A\} \vdash_L B\) iff \(\Gamma \vdash_L (A\to B)\).

“Hypothetical Syllogism” (HS) $${(A\to B), (B\to C)} \vdash_L (A\to C).$$ Proof by the deduction theorem.

\[\begin{aligned} (1)\ & (\neg B\to(\neg A\to \neg B)) & (L1) \\ (2)\ & ((\neg A\to \neg B)\to (B\to A)) & (L3) \\ (3)\ & (\neg B\to(B\to A)) & (HS(1)(2)) \\ \end{aligned}\]

\[\begin{aligned} (1)\ & (\neg A \to A) & (\text{LI}) \\ (2)\ & (\neg A \to (\neg (\neg A \to A) \to \neg A)) & (\text{L3}) \\ (3)\ & \neg (\neg A \to A) \to \neg A & (\text{from (2), (3) HS}) \\ (4)\ & (\neg A \to (A \to \neg (\neg A \to A))) & (\text{L2}) \\ (5)\ & (\neg A \to (A \to \neg (\neg A \to A))) \to ((\neg A \to A) \to (\neg A \to \neg (\neg A \to A))) & (\text{L2}) \\ (6)\ & (\neg A \to A) \to (\neg A \to \neg (\neg A \to A)) & (\text{from (4), (5) MP}) \\ (7)\ & (\neg A \to \neg (\neg A \to A)) & (\text{from (1), (6) MP}) \\ (8)\ & (\neg A \to \neg (\neg A \to A)) \to ((\neg A \to A) \to A) & (\text{L3}) \\ (9)\ & ((\neg A \to A) \to A) & (\text{from (7), (8) MP}) \\ (10)\ & A & (\text{from (1), (9) MP}) \end{aligned} \]

Def. A valuation of \(L\) is just an assignment of truth values to the variables of wfs.

Def. An extension of \(L\) is a formal system obtained by enlarging the set of axioms so that all theorems of \(L\) remain theorems.

Thm. Let \(L^{*}\) be a consistent extension of \(L\) and let \(A\) be a wf. which is not a theorem of \(L^*\). Then \(L^{**}\), obtained from \(L^*\) by including \((\neg A)\) as an additional axiom, is also consistent.

If \(L^{**}= L^{*}\cup\{\neg A\}\) is not consistent, then \(\vdash_{L^{**}} A\) , so \(\{\neg A\}\vdash_{L^*}A\). Thus \(\vdash_{L^*}(\neg A\to A)\) by the deduction theorem. Since \(\vdash_{L^*}((\neg A\to A)\to A)\), we have \(\vdash_{L^*} A\) , comes to a contradiction.

For all wfs. \(A_0,A_1,...\), we add to \(L_0=L\) one by one:

- If \(\vdash_{L_i}A_i\), then \(L_{i+1}=L_i\),

- Else \(L_{i+1}=L_i\cup\{\neg A_i\}\).

Finally let \(L^*=\bigcup_i L_i\). We can see \(L^*\) is a consistent complete extension of \(L\).

Let \(L^*\) be a complete consistent extension of \(L\), then there is a valuation in which each theorem of \(L^*\) takes value T. Proof: we check the two operations \(\neg\) and \(\to\).

First-order logic

- Term:

- variables and constants are terms

- if \(t_1,t_2, ..., t_n\) are terms, then \(f_i^n(t_1,t_2,..., t_n)\) is a term

- Atomic formula:

- if \(t_1,t_2, ..., t_n\) are terms, then \(A_i^n(t_1,t_2, ..., t_n)\) is an atomic formula

- Well-formed formula:

- every atomic formula

- if \(A\) and \(B\) are wfs., so are \((\neg A)\), \((A\to B)\) and \((\forall x_i)A\), where \(x_i\) is any variable

Def. In \((\forall x_i)A\), we say that \(A\) is the scope of the quantifier.

Def. An occurrence of \(x_i\) in a wf. is said to be bound if it occurs within the scope of a \((\forall x_i)\) in the wf. or if it is the \(x_i\) in a \((\forall x_i)\). Otherwise it is free.

Def(!!!). A term \(t\) is free for \(x_i\) in \(a\) if \(x_i\) doesn't occur free in \(A\) within the scope of a \((\forall x_j)\), where \(x_j\) is any variable occurring in \(t\). Then \(((\forall x_i) A(x_i)\to A(t))\) is true. i.e., \(t\) can be substituted for free occurrence of \(x_i\) in \(A\) without interactions with quantifiers in \(A\).

Def. An interpretation \(I\) of \(\mathcal{L}\) is a non-empty set \(D_I\) (the domain of \(I\)) together with a collection of distinguished elements, a collection of functions on \(D_I\), and a collection of relations on \(D_I\).

Def. A valuation in \(I\) is a function \(v\) from the set of variables of \(\mathcal{L}\) to the set \(D_I\).

Def. A wf. \(A\) is true in an interpretation in \(I\) if every valuation in \(I\) satisfies \(A\).

Def. A wf. \(A\) is logically valid / contradictory if \(A\) is true/false in every interpretation of \(\mathcal{L}\).

Def. A wf. \(A\) of \(\mathcal{L}\) is closed if no variable occurs free in \(A\).

Formal system \(K_{\mathcal{L}}\)

Axioms

- (K1) \(A \rightarrow (B \rightarrow A)\).

- (K2) \(((A \rightarrow (B \rightarrow C)) \rightarrow ((A \rightarrow B) \rightarrow (A \rightarrow C)))\).

- (K3) \(((\neg A \rightarrow (B \rightarrow \neg B)) \rightarrow (B \rightarrow A))\).

- (K4) \(((\forall x) A \rightarrow A)\), if \(x\) does not occur free in \(A\).

- (K5) \(((\forall x) A(x) \rightarrow A(t))\), if \(t\) is a term in \(\mathcal{L}\) which is free for \(x\) in \(A(x)\).

- (K6) \(((\forall x)(A \rightarrow B) \rightarrow (A \rightarrow (\forall x) B))\), if \(A\) contains no free occurrence of \(x\).

Rules

- Modus ponens: from \(A\) and \(A \rightarrow B\), deduce \(B\).

- Generalisation: from \(A\), deduce \((\forall x)A\), where \(x\) is any variable.

Thm. If \(\Gamma\cup\{A\}\vdash_K B\), and \(A\) is a closed wf., then \(\Gamma\vdash_K(A\to B)\).

\[\begin{aligned} (1)\ & (\forall x_i)\,A(x_i)\to A(x_j) & (\text{K5})\\ (2)\ & (\forall x_j)\Bigl((\forall x_i)A(x_i)\to A(x_j)\Bigr) & (\text{Generalisation rule})\\ (3)\ & (\forall x_j)\Bigl((\forall x_i)A(x_i)\to A(x_j)\Bigr)\to\Bigl((\forall x_i)A(x_i)\to(\forall x_j)A(x_j)\Bigr) & (\text{K6})\\ (4)\ & (\forall x_i)A(x_i)\to(\forall x_j)A(x_j) & (\text{MP}(2),(3)) \end{aligned} \]

\[\begin{aligned} (1)\ & (\forall x_i)(A \to B) \to (A \to B) & (\text{K4 or K5})\\ (2)\ & (A \to B) \to (\neg B \to \neg A) & (\text{K3})\\ (3)\ & (\forall x_i)(A \to B) \to (\neg B \to \neg A) & (\text{HS})\\ (4)\ & (\forall x_i)( (\forall x_i)(A \to B) ) \to (\neg B \to \neg A) & (\text{Generalisation})\\ (5)\ & (\forall x_i)( (\forall x_i)(A \to B) ) \to (\neg B \to \neg A) \to ((\forall x_i)(A \to B) \to (\forall x_i)(\neg B \to \neg A)) & (\text{K6})\\ (6)\ & (\forall x_i)(A \to B) \to (\forall x_i)(\neg B \to \neg A) & (\text{MP})\\ (7)\ & (\forall x_i)(\neg B \to \neg A) \to (\neg B \to (\forall x_i) \neg A) & (\text{K6})\\ (8)\ & \neg B \to (\forall x_i) \neg A & (\text{K3})\\ (9)\ & (\forall x_i)(A \to B) \to (\neg (\forall x_i) \neg A \to B) & (\text{2 HS steps}) \end{aligned} \]

Def.\((Q_1x_{i1})(Q_2x_{i2})...(Q_kx_{ik})D\) is a prenex form, where \(D\) is a wf. of \(\mathcal{L}\) with no quantifiers, and each \(Q_j\) is either \(\forall\) or \(\exists\).

-

If \(x_i\) does not occur free in \(A\), then

- \(\vdash_K (\forall x_i)(A \to B) \leftrightarrow (A \to (\forall x_i)B)\)

- \(\vdash_K (\exists x_i)(A \to B) \leftrightarrow (A \to (\exists x_i)B)\)

-

If \(x_i\) does not occur free in \(B\), then

- \(\vdash_K (\forall x_i)(A \to B) \leftrightarrow ((\exists x_i)A \to B)\)

- \(\vdash_K (\exists x_i)(A \to B) \leftrightarrow ((\forall x_i)A \to B)\)

Thm. Any wf. 𝓐 is equivalent to a wf. 𝓑 in prenex form.

Def. A wf. in prenex form is a \(\Pi_n\)- form if it starts with a universal quantifier and has \(n-1\) alternations of quantifiers.

A wf. in prenex form is a \(\Sigma_n\)- form if it starts with an existential quantifier and has \(n-1\) alternations of quantifiers.

Thm. Substitution: let \(A\) and \(B\) are closed wfs. of \(L\), and suppose that \(B_0\) arises from the wf. \(A_0\) by substituting \(B\) for one or more occurrences of \(A\) in \(A_0\). Then

Finite Automata & Context Free Grammar

DFA, NFA and Regular language

Language, machine and DFA

Def. Formal Languages:

- Alphabet \(\Sigma\)

- String \(\Sigma^*=\{\varepsilon\}\cup\Sigma\cup\Sigma^2\cup...\)

- Language \(L\subseteq \Sigma^*\)

Def. Machine is a function \(M(x)=y\) where \(x\in \Sigma,y\in \{\text{accept},\text{reject}\}\). \(L(M)= \{ x|M \text{ accepts } w\} .\)

Def. Deterministic Finite Automata is a \(5\)-tuple (\(Q,\Sigma,\delta,q,F\)):

- \(Q\) is a finite set of states.

- \(\Sigma\) is a finite set of symbols.

- \(\delta:Q\times \Sigma\to Q\) is the transition function

- \(q\in Q\) is the start state

- \(F\subseteq Q\) is the set of accepting states

This is the syntax definition. Or you can directly define as "the machine accepts string \(w\)" (semantics).

Def. DFA \(M\) accepts \(w=w_1w_2...w_n\) iff \(\exists (r_0,r_1,...,r_n)\) where \(r_0=q,r_i=\delta(r_{i-1},w_i),r_n\in F\).

Def. Regular Languages: The set of all languages recognized by some DFA.

Closure properties

Thm. The class of regular languages is closed under complementation.

Pf. \(M'\) identical to \(M\), except with final states \(Q-F\).

Thm. The class of regular languages is closed under intersection.

Pf. \(M_3=(Q_1\times Q_2,\Sigma,\delta_3,(q_1,q_2),F_1\times F_2)\) where \(\delta_3((r_1,r_2),a)=(\delta_1(r_1,a),\delta_2(r_2,a))\).

Concatenation is hard.

Nondeterministic Finite Automata

Def. Nondeterministic Finite Automata is a \(5\)-tuple (\(Q,\Sigma,\delta,q,F\)):

- \(Q\) is a finite set of states.

- \(\Sigma\) is a finite set of symbols.

- \(\delta:Q\times \Sigma_{\varepsilon}\to 2^Q\) is the transition function

- \(q\in Q\) is the start state

- \(F\subseteq Q\) is the set of accepting states

Def. NFA \(M\) accepts \(w\) iff \(w\) can be written as \(x_1x_2...,x_m\) by adding any number of \(\varepsilon\) (\(w\) is a subsequence of \(x\)) s.t. \(\exists (r_0,r_1,...,r_m)\) where \(r_0=q,r_i\in\delta(r_{i-1},x_i),r_m\in F\).

Equivalence of NFA and DFA

Thm. For any DFA \(M\), there is a NFA \(N\) such that \(L(M) = L(N)\).

Trivial.

Thm. For any NFA \(N\), there is a DFA \(M\) such that \(L(N) = L(M)\).

Pf. For any NFA \(N = (Q,\Sigma,\delta,q,F)\), define a DFA \(M=(Q',\Sigma,\delta',q',F')\):

- \(Q'=2^Q\)

- \(\delta'(R, a)=E(\bigcup\limits_{r\in R}\delta(r,a))\)

- \(q'=E(q)\)

- \(F'=\{R|R\cap F\neq\emptyset\}\)

here \(E(R)=\{r\in Q| r \text{ is reachable from }R\text{ using zero or more }\varepsilon-\text{transitions}\}\).

Closure properties

NFA is closed under union, concatenation and star.

Regular Expressions

Def. A Regular expression is a string over \(\Sigma, \emptyset, \varepsilon,\cup,\circ ,^*,(,)\)

Def. Generalized NFAs: Transitions are REGEXPs, not just symbols from \(\Sigma_\varepsilon\).

Rem. GNFA only has one accept state. The accept state can't transfer to other state and the start state can't be transfered from other state.

Thm. REGEXPs equivalent to NFAs.

Pf. Only need to prove that every language recognized by an NFA can be represented as a REGEXP. Converse is obvious. Showing GNFA \(\subseteq\) REGEXP is sufficient, since NFA \(\subseteq\) GNFA.

The high-level idea is to reduce the size of GNFAs. If we want to get rid of a state \(q_{rip}\in Q\), for any \(q_i,q_j\in Q\), replace \(\delta(q_i,q_j)\) by

We can delete all non-start/accept states one by one. Until it only have with 2 states, obviously it's equivalent to a REGEXP.

CFG and PDA

Context Free Grammar

Def. A Context-free grammar (CFG) is a 4-tuple \((V,\Sigma,R,S)\):

- \(V\) is a set of variables;

- \(\Sigma\) is a set of terminals;

- \(R\) is a set of rules (a variable and a string in \((V\cup \Sigma)^*\));

- \(S\in V\) is the start variable.

Semantics:

-

\(uAv\Rightarrow uwv\) (\(uAv\) yields \(uwv\)) if \(A\rightarrow w\) is a rule \(r\in R\);

-

\(u\Rightarrow^*v\) (\(u\) derives \(v\)) if \(\exists u_1,u_2,...,u_k\) s.t.

\[u\Rightarrow u_1\Rightarrow u_2\Rightarrow...\Rightarrow u_k\Rightarrow v. \]

**Def. ** The language of a CGF \(G=(V,\Sigma,R,S)\) is defined as:

Ex. \(L=\{0^n1^n| n\ge 0\}\) is not regular, but is context-free (\(S\rightarrow0S1\mid \varepsilon\)).

Thm. Every regular language is context-free.

Pf. Given a DFA \(A = (Q,\Sigma,\delta,q_0,F)\), we need to find an equivalent CFG \(A’ = (V,\Sigma',R,S)\) s.t. \(L(A)=L(A')\). Make a variable \(R_i\) in \(A'\) for every state \(q_i\) in \(A\). Add the rule \(R_i\to aR_j\) to \(A’\) if \(\delta(q_i, a)=q_j\) in \(A\). \(R_0\) is the start variable if \(q_0\) is the start state. Add the rule \(R_i\to \varepsilon\) if \(q_i\) is an accept state in \(A\).

We can use Parse Tree to represents the rules used to generate a string.

Def. A grammar is called ambiguous if a string has two distinct parse trees.

Leftmost derivation: at every step the leftmost remaining variable is the one replaced. Ambiguous equivalent to having two different leftmost derivations.

Ex (Polish notation). \(\text{EXPR} \rightarrow a\mid b\mid c;\text{EXPR}\rightarrow+\text{ EXPR }\text{EXPR}\mid -\text{ EXPR }\text{EXPR}\mid \times\text{ EXPR }\text{EXPR}\mid /\text{ EXPR }\text{EXPR}.\)

Thm. Checking whether a CFG is ambiguous is undecidable.

Def. A CFL is called inherently ambiguous if every CFG for the language is ambiguous.

Ex. \(L=\{w\mid w=a^ib^jc^k,i,j,k\ge 1\wedge (i=j\vee i=k)\}\) is inherently ambiguous.

Thm. CFLs are closed under union, concatenation and kleene star.

Rem. CFLs are NOT closed under intersection.

Pushdown Automaton

Def. A pushdown automaton is a 6-tuple \((Q, \Sigma, \Gamma, \delta, q_0, F)\):

- \(Q\) is a finite set of states;

- \(\Sigma\) is the input alphabet;

- \(\Gamma\) is the stack alphabet;

- \(q_0\in Q\) is the initial state;

- \(F\subseteq Q\) is a set of final states;

- \(\delta: Q\times \Sigma_{\varepsilon}\times\Gamma_\varepsilon\to 2^{Q\times \Gamma_\varepsilon}\) is the transition function.

where \(\Sigma_{\varepsilon}=\Sigma \cup \{\varepsilon\},\Gamma_\varepsilon=\Gamma\cup\{\varepsilon\}\).

It accepts string \(w\in\Sigma^*\) if

- \(w\) can be written as \(x_1x_2...x_m\) where \(x_i\in\Sigma_\varepsilon\);

- \(\exists r_0,r_1,...,r_m\in Q\);

- \(\exists s_0,s_1,...,s_m\in\Gamma^*\)

such that

- \(r_0=q_0,s_0=\varepsilon\);

- \((r_i,b)\in\delta(r_{i-1},x_i,a)\), where \(s_{i-1}=at\) and \(s_i=bt\) for \(1\le i\le m\);

- \(r_m\in F\).

Thm. A language \(L\) is context-free if and only if it is accepted by some pushdown automaton.

Pf. CFGs \(\to\) PDAs: Push \(\$\) and \(S\) onto stack, then repeat the following steps:

- If the top of the stack is a variable \(A\): Choose a rule \(A\to \alpha\) and substitute \(A\) with \(\alpha\);

- If the top of the stack is a terminal \(a\): Read next input symbol and compare to \(a\).

- If they don’t match, reject;

- Otherwise, pop \(a\);

- If top of stack is \(\$\), go to accept state.

PDAs \(\to\) CFGs: First, we simplify the PDA s.t.

- It has a single accept state \(q_f\);

- It empties its stack before accepting;

- Each transition is either a push, or a pop.

For PDA \(P=(Q, \Sigma, \Gamma, \delta, q_0, F)\) satisfying above conditions, construct the CFG \(G=(V,\Sigma',R,S)\):

-

\(V=\{A_{pq}|\forall p,q\in Q\}\) where \(A_{pq}=\{x:x \text{ leads from state }p\text{ with an empty stack to state q with an empty stack}\}\).

-

\(S=A_{0f}\).

-

\(R=\{A_{pq}\to A_{pr}A_{rq}|\forall p,q,r\in Q\}\cup \{A_{pq}\to aA_{rs}b|\forall p,q,r,s\in Q,t\in\Gamma,(r,t)\in\delta(p,a,\varepsilon),(q,\varepsilon)\in\delta(s,b,t)\}\cup \{A_{pp}\to\varepsilon|\forall p\in Q\}.\)

We can show that \(L(P)=L(G)\).

Pumping Lemma

Nonregular Languages

Ex. \(\{0^n1^n|n\ge 0\}\) is nonregular. For any \(k\) states DFA \(M\), by Pigeonhole principle, there exists \(0\le i\neq j\le k\) s.t. \(M\) is on the same state after reading \(0^i\) or \(0^j\). Thus if \(M\) accepts \(0^i1^i\), it must accepts \(0^j1^i\).

Thm (Pumping Lemma). If \(A\) is a regular language, then there is a number \(p\) (the pumping length) where, if \(s\) is any string in \(A\) of length at least \(p\), then \(s\) can be divided into \(s=xyz\), satisfying

- For each \(i\ge 0\), \(xy^iz\in A\)

- \(|y|>0\)

- \(|xy|\le p\)

Pf: Let $ M = (Q, \Sigma, \delta, q_{\text{start}}, F) $ be a DFA recognizing $ A $ and $ p $ be the number of states of $ M $. For any string \(s\) s.t. $ |s| \geq p $, let $ q_0 = q_{\text{start}}, q_i = \delta(q_{i-1}, s[i])$. By pigeonhole principle, there exist $ q_i = q_j $ (\(i \neq j\)). So let $$ x = s[1, \ldots, i], y = s[i+1, \ldots, j], z = s[j+1, \ldots, p] $$ (\(z\) can be empty). $ xy^i $ will also reach $ q_i $, so if $ xyz \in A $, then $ xy^i z \in A $.

Ex. \(F=\{ww|w\in\{0,1\}^*\}\). \(s=0^p10^p1\in F\) but \(xy^2z=0^{p+i}10^p1\notin F\).

\(D=\{1^{n^2}|n\ge 0\}\). \(s=1^{p^2}\in D\) but \(xy^2z=1^{\le p^2+p}\notin F\).

\(\{0^i1^j|i>j\}\) is not regular.

Non context-free Languages

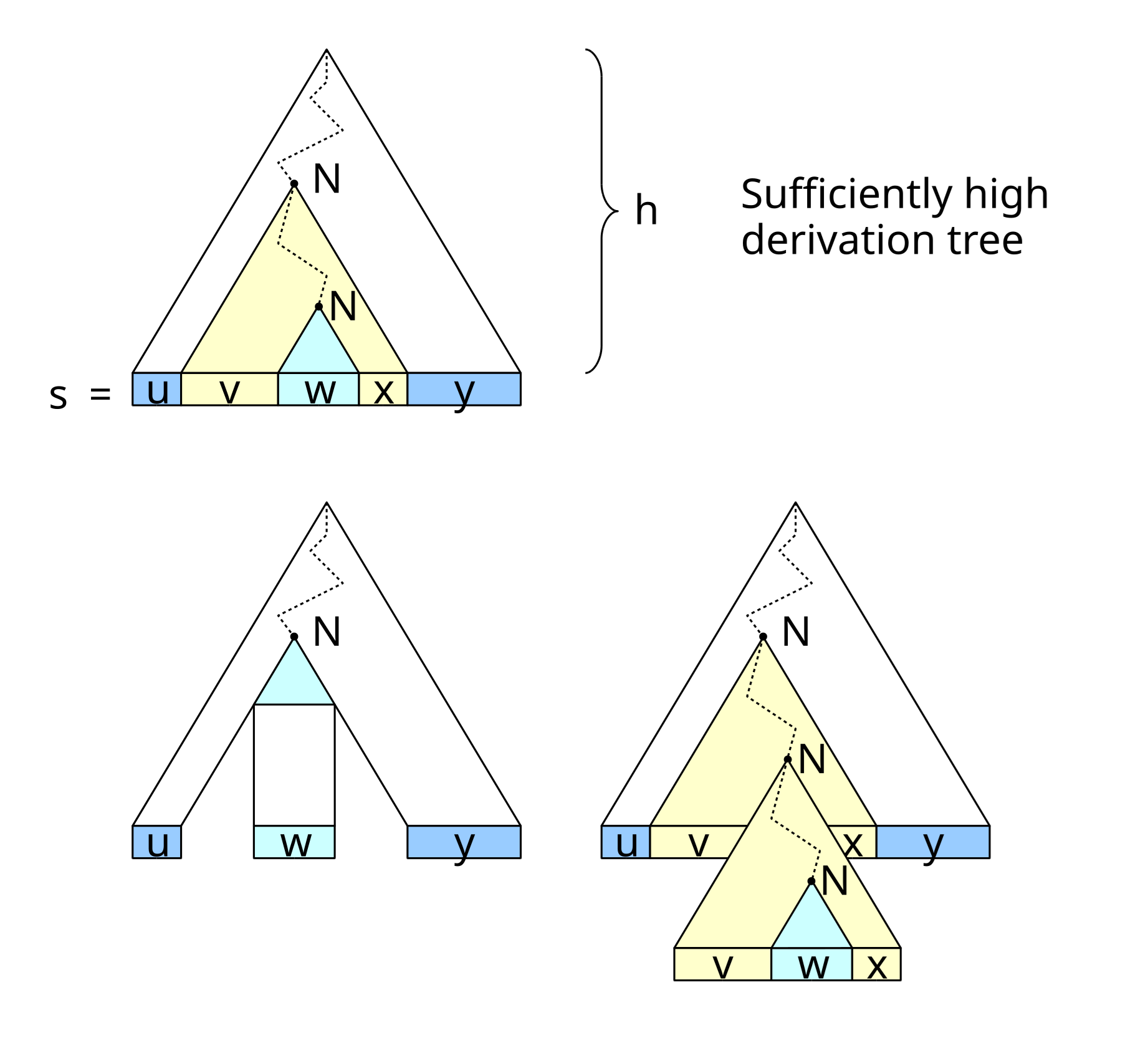

Thm. If \(A\) is a context-free language, then there is a number \(p\) (the pumping length) where, if \(s\) is any string in \(A\) of length at least \(p\), then \(s\) may be divided into \(5\) pieces \(s=uvxyz\), satisfying:

- For each \(i\ge 0\), \(uv^ixy^iz\in A\);

- \(|vy|>0\);

- \(|vxy|\le p\).

Pf. Let \(v\) be the number of variables, \(b\) be the maximum number of symbols in the right-hand side of the rules. Let \(p=b_{v+1}\). Assume \(s\) is any string in \(A\) of length at least \(p\). Consider a parse tree of \(s\), by pigeonhole principle, there exists a path of length at least \(v+1\). Furthermore, we can find two nodes with the same label on this path. Denote them as \(x\) and \(y\), assume \(x\) is an ancestor of \(y\). Let \(x\) be the subtree of \(y\) and \(vxy\) be the subtree of \(x\).

Ex. \(\{a^nb^nc^n|n\ge 0\}\) and \(\{ww|w\in\{0,1\}^*\}\) is not context-free.

For the former, consider \(z=a^pb^pc^p\). Since \(|vxy|\le p\), \(vxy\) cannot contain all three of the symbols \(a,b,c\), because there are \(p\) \(b\)s. So \(vxy\) either does not have any \(a\)s or does not have any \(b\)s or does not have any \(c\)s. Suppose, w.l.o.g., \(vxy\) does have any \(a\)s. Then \(uv^0 x y^0 z = uxz\) contains more \(a\)s than either \(b\)s or \(c\)s. Hence \(uxz\notin L\).

For the latter, consider \(s=0^p1^p0^p1^p\).

Turing Machine

DTM, NTM, and Variants

Standard Turing machine

Def. A Turing machine is a 7-tuple:

- \(Q\) is the set of states

- \(\Sigma\) is the input alphabet

- \(\Gamma\) is the tape alphabet

- \(\delta:Q\times\Gamma\to Q\times \Gamma\times\{L,R\}\) is the transition function

- \(q_{\text{start}}\in Q\)

- \(q_{\text{accept}}\in Q\)

- \(q_{\text{reject}}\in Q\)

satisfying \(\Sigma\cup\{\perp\}\subseteq\Gamma,q_{\text{accept}}\neq q_{\text{reject}}\).

Def. A configuration is a list of the tape contents, head position, and state.

Def. \(L(M) = \{w\in\Sigma* \mid \text{starting with }w\text{ on the first }|w|\text{ cells of the tape, }M\text{ will eventually enter the accept state}\}.\)

Def.

- \(M\) recognizes a language \(A\) if \(L(M)=A\). A language is recursively enumerable (or recognizable) if some Turing machine recognizes it.

- \(M\) decides \(A\) if \(L(M)=A\) and \(M\) always halts. A language is recursive (or decidable) if some Turing machine decides it.

- \(L\) is co-recognizable if \(L\) is recognizable.

Thm. A language is decidable iff it is recognizable and co-recognizable.

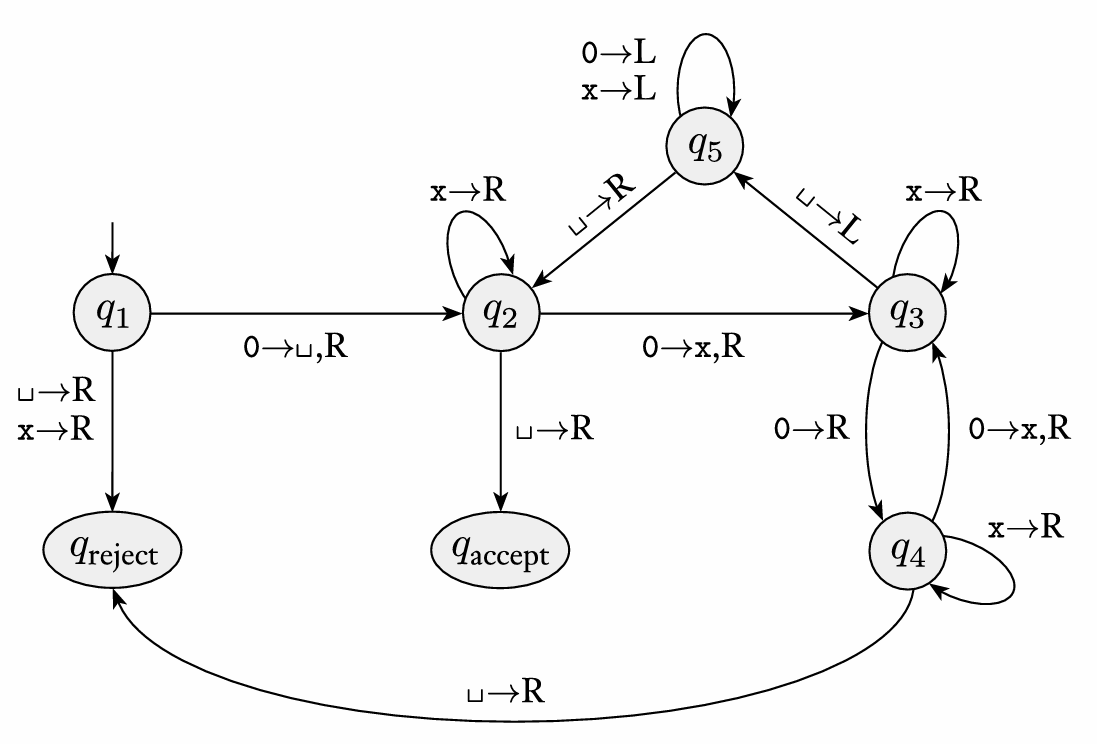

Ex. \(L=\{0^{2^n}|n\ge 0\}\).

Thm. The set of Turing machines is countable.

Def. Universal Turing machine is a Turing machine that can simulate an arbitrary Turing machine on arbitrary input.

Thm. Universal Turing machine exists.

Pf. Can be implemented by a 3-tape TM:

- The first one stores the description of the input machine.

- The second one stores the tape content.

- The third one stores the state.

Rem. There is a universal TM with 2 states and a 3-symbol alphabet. It's the smallest universal TM.

We will introduce several variants of TMs, and see that these variants are all equivalent to standard TM.

Multitape Turing Machines

Update the configuration on all the tapes simultaneously.

Thm. A language is Turing-recognizable if and only if some multitape Turing machine recognizes it.

Pf. The following simulation algorithm immediately prove the 2-tape case. (The head is at the first cell marked •)

-

Add a • to the first symbol 0/1

-

Write a '#' at the end of the input

-

Write a ⊥• after the #

-

Repeat:

-

Note the symbol \(x\)• in the current cell.

-

Move the head to the second • , note the symbol \(y\)• in that cell.

-

If the left head tries to move to the #, shift # and all of tape 2 to the right.

-

Apply the transition \(\delta(q,x,y) = (q’,x’,y’,m,m’)\) to the second •.

-

…then to the first.

-

Stop if \(q' = q_{\text{accept}}\) or \(q_{\text{reject}}\).

-

Nondeterministic T.M.s

The NTM accepts a string if there is SOME sequence of guesses that yields an accepting configuration.

Thm. A language is Turing-recognizable if and only if some nondeterministic Turing machine recognizes it.

Pf. It will be shown that how to simulate an NTM with a 3-tape TM. The information on 3 tapes:

- Tape 1 holds the input (don’t write anything else here)

- Tape 2 is a simulation tape

- Tape 3 holds the nondeterministic guesses

Do the breadth-first search:

-

Initially tape 1 contains the input, tapes 2 and 3 are empty

-

Copy tape 1 to tape 2

-

Use tape 2 to simulate the NTM with the input. Use tape 3 as choices for all branches. If no more symbols on tape 3, or if the nondeterministic choice is invalid, or the NTM reject, goes to step 4. If it accepts, accept the input.

-

Replace the string on tape 3 with the lexicographically next string. Go to step 2. (the string on tape 3 becomes longer and longer)

We call a nondeterministic Turing machine a decider if all branches halt on all inputs.

Thm. A language is decidable if and only if some nondeterministic Turing machine decides it.

TM with outputs

TM with an extra "write-only tape": whenever print, cannot erase

Def. Enumerator is a Turing machine with a printer.

Thm. A language is Turing-recognizable if and only if some enumerator generates it.

Pf. \(\Rightarrow\) Enumerate the step number \(i\) and a string \(s_j\), run the first \(i\) steps of \(M(s_j)\).

\(\Leftarrow\) Run the enumerator, every time that it outputs a string, compare it with the input string.

Reducibility

Gödel’s Entscheidungsproblem

Def. The Entscheidungsproblem asks for an algorithm that takes as input a statement of a first-order logic (possibly with a finite number of axioms beyond the usual axioms of first-order logic) and answers "Yes" or "No" according to whether the statement is universally valid, i.e., valid in every structure satisfying the axioms.

Thm. A general solution to the Entscheidungsproblem is impossible.

Def. Let \(A_{\text{TM}}=\{\langle M,w\rangle\mid M \text{ is a TM and }M\text{ accepts }w\}.\)

Thm. \(A_{\text{TM}}\) is recognizable, but not decidable.

Pf. We prove the latter by contradiction.

Assume \(A_{\text{TM}}\) is decidable, then there is a TM \(H\) that decides \(A_{\text{TM}}\). Construct a procedure \(D\) with input <\(M\)> (<\(M\)> is the description of a TM) :

D(<M>):

if H(<M,<M>>) accepts

return reject;

else

return accept;

Note that \(D\) always halts since \(H\) always halts. We can see that \(D(\text{<} D\text{>})\) always halts.

Prop. \(A_{\text{TM}}\) is not co-recognizable.

Halting Problem

Def. \(\text{HALT}_{\text{TM}} = \{\langle M,w\rangle | \text{TM } M \text{ halts on input }w\}.\)

Thm. \(\text{HALT}_{\text{TM}}\) is not decidable.

Pf. Reduction from \(A_{\text{TM}}\).

Def. Oracle Turing Machine (OTM): a TM capable of querying an oracle

- multitape TM \(M\) with special “query” tape

- and special states \(q_{\text{query}}, q_{\text{yes}}, q_{\text{no} }\)

- on input \(x\), with oracle language \(A\)

- \(M^A\) runs as usual, except…

- when \(M^A\) enters state \(q_{\text{query}}\):

- \(y = \text{contents of query tape}\)

- \(y \in A\) ⇒ transition to \(q_{\text{yes}}\).

- \(y \notin A\) ⇒ transition to \(q_{\text{no}}\).

Def. Language \(A\) is Turing reducible to \(B\), if an oracle TM \(M^B\) decides \(A\), write \(A\le_TB\).

Thm. If \(A\le_T B\) and \(B\) is decidable, then \(A\) is decidable.

Ex. We have \(A_{\text{TM}}\le_T\text{HALT}_{\text{TM}}\). From \(A_{\text{TM}}\) is undecidable, \(\text{HALT}_{\text{TM}}\) is also undecidable.

Thm [Kleene’s s-m-n Theorem]. Suppose we have a TM \(M(x_1,…,x_m, y_1,…,y_n)\) whose input is composed of \(m+n\) variables. There is a TM \(T(\text{<}M\text{>}, x_1,…,x_m)\) when given \(M, x_1,…,x_m\), outputs a TM \(M’\) such that \(M'(y_1,…,y_n)=M(x_1,…,x_m, y_1,…,y_n)\).

Ex. For a set of language \(S\), if exists \(L(M_0)\in S\) and \(L(M_1)\notin S\), then \(\mathcal{L}=\{M|L(M)\in S\}\) is undecidable. To prove \(\text{HALT}_{\text{TM}}\le_T L\), consider construct a TM \(T\) with input \(z\):

Input 〈M, w〉, where M is a TM and w is a string

Simulate M on input w

if M halts on w

return M0(z);

else

return M1(z);

Clearly, \(L(T)\in \mathcal{L}\) iff \(\langle M,w\rangle\in\text{HALT}_{\text{TM}}\).

The Post Correspondence Problem

Def. The Post Correspondence Problem (PCP) : Given a set of dominos, find a sequence of these dominos so that the string we get by reading off the symbols on the top is the same as the string of symbols on the bottom. In other words,

Thm. \(PCP\) is undecidable.

Pf. Consider reduction from \(A_{\text{TM}}\). W.l.o.g., assuming TM \(M\) erase its tape and one of the PCP dominos is marked as the starting domino.

Let \(\left[\dfrac{\#}{\#q_0w\#}\right]\) be the starting domino. \(\left[\dfrac{qa}{bq'}\right]\) for all \(\delta(q,a)=(q',b,R)\) and \(\left[\dfrac{cqa}{q'ba}\right]\) for all \(\delta(q,a)=(q',b,L)\) and \(c\). \(\left[\dfrac{a}{a}\right]\) for all \(a\). \(\left[\dfrac{☐\#}{\#}\right],\left[\dfrac{\#q_a}{\varepsilon}\right],\left[\dfrac{☐}{\varepsilon}\right]\).

Reductions via computation histories

Ex. \(ALL_{\text{CFG}}=\{\braket{G}:G\text{ is a CFG that generates all strings}\}\) is undecidable.

Consider the following reduction from \(A_{\text{TM}}\) to \(ALL_{\text{CFG}}\): For input \(\braket{M},w\), construct a CFG G s.t. \(M\) accepts \(w\) iff \(G\) does not generate its computation histories, and \(G\) generates all strings otherwise.

How to achieve it? Construct a PDA \(D\) that accepts strings of computation histories meeting one of the following conditions:

- Do not start with an starting configuration;

- Do not end with an accepting configuration;

- Some \(C_i\) does not yield \(C_{i+1}\) legally.

In order to make the language to be context-free, the strings accepted is arranged as:

Thus \(ALL_{\text{CFG}}\) is undecidable.

Thm. For context-free grammars:

- Universality, equivalence and ambiguity is undecidable;

- Emptiness, finiteness, membership is decidable.

Mapping reducibility

Def. A function is a recursive function if some Turing machine \(M\), on every input \(w\), halts with just \(f(w)\) on its tape.

Def. Language \(A\) is mapping reducible to language \(B\), if there is a recursive function \(f\), such that \(w\in A \Leftrightarrow f(w)\in B\), denoted \(A\le_m B\). The function \(f\) is called the reduction of \(A\) to \(B\). (also called “many-one reduction”)

Thm. If \(A\le_m B\) and \(B\) is decidable, then \(A\) is decidable.

Thm. If \(A\le_m B\) and \(B\) is Turing-recognizable, then \(A\) is Turing-recognizable.

Ex. \(EQ_{\text{TM}}=\{\braket{M_1,M_2}| M_1\text{ and }M_2\text{ are TMs and }L(M_1)=L(M_2)\}.\)

By Turing reducibility, we show that \(EQ_{\text{TM}}\) is undecidable.

By mapping reducibility, we show that \(EQ_{\text{TM}}\) is neither recognizable nor co-recognizable, using the following reduction:

f(<M,w>):

Define M1 which rejects all inputs

Define M2 which simulates M on input w

Output <M1,M2>

Def. \(\text{TIME}(t(n))\): all languages that are decidable by an \(O(t(n))\) time deterministic Turing machine.

\(\text{NTIME}(t(n))\): all languages that are decidable by an \(O(t(n))\) time nondeterministic Turing machine.

Def. \(\text{P}=\bigcup_k\text{TIME}(n^k),\text{NP}=\bigcup_k\text{NTIME}(n^k)\).

For proving NP-completeness, we use polynomial-time many-one reductions.

Recursion Theorem

Thm [Recursion Theorem]. Let \(t:\mathbb{N}\to\mathbb{N}\) be a recursive function. There is a TM \(F\) for which \(t(\braket{F})\) is the code of a Turing machine equivalent to \(F\).

Pf. Consider let \(\braket{F}=D(\braket{V})\), \(D\) and \(V\) is given as follows:

D(<M>) {

output the code of the Turing machine G:

G(y) {

run the machine with code M(<M>) on y;

}

}

V(<M>) {

output t(D(<M>));

}

We can see that \(F\) is decribed as follows:

F(y) {

run the machine with code V(<V>) on y;

}

Thus $$\braket{F}=V(\braket{V})=t(D(\braket{V}))=t(\braket{F}).$$

Thm [Kleene’s recursion theorem]. Let \(t\) be a recursive function \(t: \mathbb{N}\times\mathbb{N}\to\mathbb{N}\). There is a Turing machine \(R: \mathbb{N}\to\mathbb{N}\), where $$R(x)=t(\braket{R},x).$$

Pf. Define the recursive function \(s: \mathbb{N}\to\mathbb{N}\) as follows (by s-m-n theorem), and apply recursion theorem on \(s\).

s(x) {

output the code of the TM on input y computing t(x,y);

}

Thus $$R(x)= \text{``simulating the TM with code }s(\braket{R})\text{ on }y''=t(\braket{R},y).$$

Ex. Another proof of ATM is undecidable.

Pf. Suppose \(H(\braket{M,w})\) decides \(A_{\text{TM}}\):

B(w):

Obtain, via the recursion theorem, own description <B>

Run H(<B,w>)

Accept if H rejects, reject if H accepts

Ex. \(\text{MIN}_{\text{TM}} =\{\braket{M}| M\text{ is a “minimal” TM, that is, no TM with a shorter encoding recognizes the same language}\}\) is not recursively enumerable.

Pf. Assume \(\text{MIN}_{\text{TM}}\) is r.e., then there is an enumerator \(E\) which lists all strings in it.

R(w):

Obtain <R>;

Run E, producing list <M1>, <M2>, ... of all minimal TMs

until you find some <Mi> with |<Mi>| strictly greater than |<R>|;

Return Mi(w);

Then \(R\) is equivalent to \(M_i\), but shorter than \(M_i\), a contradiction.

Def. A quine is a non-empty computer program produces a copy of its own source code which takes no input and as its only output.

Thm. There is a quine. (By recursion theorem.)

*Kolmogorov complexity

Def. The minimum description \(d(x)\) of \(x\) is the shortest string \(\braket{M,w}\) where TM \(M\) on input \(w\) halts with \(x\) on its tape.

Def. The Kolmogorov complexity \(K(x)=|d(x)|.\)

Thm. For all \(x\), \(K(x^n)≤|x|+c·\log n\), for some constant \(c\).

Def. A string \(x\) is \(c\)-compressible if \(K(x)≤|x|-c\). If \(x\) is not \(1\)-compressible, we say that \(x\) is incompressible.

Thm. Incompressible strings of every length exist.

Thm. $$\text{Pr}_{x\in{0,1}^n} [K(x)\ge |x|-c]\ge 1-2^{-c}.$$

Pf. \(\#(\text{binary string of length }n)=2^n, \#(\text{description of length}<n-c)\le 2^{n-c}-1.\)

Thus $$\text{Pr}_{x\in{0,1}^n } [K(x)< n-c]\le\frac{2^{n-c} -1}{2^n }<2^{-c}.$$

Def. \(\text{COMPRESS}=\{(x,c)| K(x)≤c\}\).

Thm. COMPRESS is undecidable.

Pf. Suppose COMPRESS is decidable, construct a machine \(M\):

M(n):

For all y in lexicographical order:

If (y,n) ∉ COMPRESS, print y and halt

We can see that the output \(y\) is the first string of Kolmogorov complexity \(>n\).

But \(M(n)\) prints \(y\), and \(K(y)≤ |\braket{M,n}|=c+\log n\), a contradiction for large enough \(n\).

Thm [Chaitin's incompleteness theorem]. Suppose in a formal system \(F\):

- Any mathematical statement describable in English can also be described within \(F\).

- Proofs are convincing: given \((S,P)\), it is decidable to check that \(P\) is a proof of \(S\) in \(F\).

Then for large enough \(L\), for all \(x\), \(K(x)>L\) is not provable.

Pf. Suppose we can prove \(K(x)>L\) for some \(x\). Pick the smallest such proof \(P\) which proves \(K(y)>L\). Consider the machine \(R\):

R(L):

For all list of w.f. Q in lexicographical order:

Check whether Q is a valid argument

Check whether the last statement of Q is K(y)>L

If yes, output y and halts

\(R\) generates \(y\) where \(|\braket{R,L}|=c+\log L\).

Complexity

Cell-Probe Model

Def [Bounds for complexity]. If we have found an algorithm with time (space) complexity \(O(f(n))\) for a language \(L\), \(O(f(n))\) is an upper bound of the time (space) complexity for \(L\); If we have proved that any algorithm for \(L\) needs \(\Omega (f(n))\) time (space), \(\Omega (f(n))\) is a lower bound of the time (space) complexity for \(L\).

“Were-you-last?” Game

Def. “Were-you-last?” Game:

- \(m\) players of Dream Team are taken to a game room one by one (they don’t know the order);

- When a player leaves the game room, he is asked if he was the last of the \(m\) players to go there and must answer correctly;

- Inside the game room, there are many boxes. Every player can open at most \(t\) boxes.

- He can put a pebble in an empty box or remove the pebble from a full box.

- He must close a box before opening the next one.

- The Dream Team win if all players answer correctly. Assume there is their adversary, Hannibal, who knows their strategies, arranging the order of their entrance to the game room.

What is the smallest \(t\) so that Dream Team has a winning strategy?

Rem. First try: \(t=\Theta(\log_2m)\) is trivial. Just maintain a counter. Can we make \(t\) smaller?

Thm. Dream Team has a winning strategy with \(t=\Theta(\log\log m)\).

Pf. Represents the counter as “blocks” of 0s and 1s. Maintain:

- type of last block number;

- number of blocks;

- size of each block.

Each entry above can be specified using \(≤ \lceil \log\log m\rceil\)bits. We only need to change blocks in the end. So

The last problem is that how can the first player set the counter to \(m\). Just assume you are always working on \(C’=C\oplus m\), or using first \(\log m\) players to set the counter to \(m-\log m\).

Def. A sunflower is a collection of sets \(S_1,…,S_p\) so that \(S_i\cap S_j\) is the same for each pair of different sets \(S_i\) and \(S_j\).

Lem [Sunflower lemma]. Let \(\mathcal{F}=\{S_1,…,S_m\}\) be a collection of sets each of cardinality \(\le l\). If \(m>(p-1)^{l+1} l!\), then the collection contains a sunflower with \(p\) sets.

Pf. Induction on \(l\): for \(l=1\), \(p\) sets of different single elements form a flower. For \(l\ge 2\), take a maximal collection \(A_1,…,A_t\) of pairwise disjoint members in \(\mathcal{F}\).

-

If \(t\ge p\), they form a flower with empty core.

-

If \(t<p\), let \(B=A_1\cup ...\cup A_t\), then \(|B|\le (p-1)l\) and \(B\) intersects every set in \(\mathscr{F}.\)

Thus some element \(x\in B\) must be contained in

\[\ge \frac{(p-1)^{l+1}l!}{(p-1)l}=(p-1)^l(l-1)! \]sets in \(\mathscr{F}\). Delete \(x\) from them, by induction hypothesis, these sets form a sunflower with \(p\) sets. Add \(x\) to them to get a sunflower in \(\mathscr{F}\).

Thm. If \(t ≤ 0.4 \log\log m\), the Dream Team lose.

Pf. Define \(S_i\) to be the set of all bits that player \(i\) may read/write. Then \(|S_i|\le l= 2^t-1.\)

When \(t=0.4\log\log m\), we have \(l<(\log m)^{0.4}\). Furthermore, let \(p=2^l+1\),

By Sunflower lemma, we can find a sunflower with \(p>\) sets.

Hannibal first arrange other \(m-p\) players, then these \(p\) players. Clearly, only the center of the sunflower is meaningful for these players.

On the one hand, the possible number of status for the center is not greater than \(2^l\). On the other hand, \(p>2^l\), so there must be two players \(i\) and \(j\) will leave the same status. They cannot distinguish \(1,...,i,i+1,...,j,j+1,...,p\) from \(1,...,i,j+1,...,p\).

Rem. A simple counter needs \(O(\log m)\) space and update time. Such a block counter needs \(O(\log m\log\log m)\) space and \(\Theta(\log\log m)\) update time.

Comparison-based Sorting

Def. Comparison-based sorting algorithm are algorithms that can only use comparison of pairs of elements to gain order information about a sequence.

Rem. A lower bound on the number of comparisons will be a lower bound on the complexity of any comparison-based sorting algorithm.

Thm. \(\Omega(n \log n)\) is a lower bound of any comparison sort.

Pf. Consider a comparison-based sorting algorithm that enables to sort \(n\) distinct numbers. The decision tree of this algorithm is a binary tree and has at least \(n!\) leaf nodes, since there are \(n!\) possible permutations. Thus the depth of the tree is \(\ge \log_2(n!).\)

By Stirling’s approximation, $$\log_2(n!)=\Theta(\log_2(\sqrt{2\pi n}\left(\frac{n}{e}\right)^n))=\Theta(n\log n).$$

Thus, the time lower bound for comparison-based sorting algorithm is \(\Theta(n \log n)\).

Rem. The best worst-case complexity so far is \(\Theta(n \log n)\) (merge sort and heapsort). Thus they are optimal under this model. In fact, we can beat the lower bound if we don’t base our sort on comparisons.

Information Retrieval Problem

Def. Cell probe model is a model of computation where the cost of a computation is measured by the total number of memory accesses to a random access memory. All other computations are not counted and are considered to be free.

Rem. Dynamic data structures:

- Preprocessing phase: Given the input data, we can write in memory cells;

- Update: The “input data” can be changed by a small amount each time;

- Query phase: Determine whether a query datum is included in the input data.

Def. Information Retrieval Problem:

- Input: a set \(S\) of integers;

- Query: an integer \(x\), if \(x\in S\)?

Formally states, we want to minimize \(\text{Cost}(\mathcal{T},\mathcal{S})\), which equals to the number of probes in the worst case with table structure \(\mathcal{T}\) (how a particular set \(S\) be placed in the table) and search strategy \(\mathcal{S}\) (how to find a key \(K\) in the table). Let \(n=|S|,S\subseteq [M]\).

Def. Ramsey number \(R(n_1,...,n_c)\) is the smallest number \(n\) such that there exists cliques with color \(i\) of size \(n_i\) for some \(i\), in all \(K_n\) where every edge is colored with \(c\) different colors.

Thm [Ramsey Theorem]. \(R(n_1,...,n_c)\) exists for all \(c,n_1,...,n_c\in\mathbb{N}\).

Pf. Prove the following statements.

- \(R(2,n)=R(n,2)=n\).

- \(R(r,s)\le R(r-1,s)+R(r,s-1).\)

- \(R(n_1,...,n_c)\le R(n_1,...,n_{c-2},R(n_{c-1},n_c)).\)

Lem. When \(n\ge 2\) and \(M\ge 2n-1\), if the table structure always stores keys in some fixed permutation, then \(\lceil \log(n+1)\rceil\) probes are needed in worst-case for any search strategy.

Pf. Only need to prove the case that the table structure always stores keys in a sorted order. Consider the binary search structure.

Thm. For all \(n\), there is an \(N(n)\) such that we need \(\lceil \log(n+1)\rceil\) probes for \(M≥N(n)\).

Pf. Let \(\mathcal{A}=\{S\subseteq [1,...,m]:|S|=n\}\). For all permutation \(\sigma\), let

By Ramsey theorem extension to hypergraph, when \(M\) large enough, there is a subset \(\Gamma\subseteq [1,..,M]\) and \(|\Gamma|=2n-1\), such that \(\exists \sigma,\forall S\subseteq \Gamma\) and \(|S|=n\), \(S\in \mathcal{A}_{\sigma}.\)

By lemma, we need \(\lceil \log(n+1)\rceil\) probes when all the elements are in \(\Gamma\).

Rem. \(\lceil \log(n+1)\rceil\) is also an upper bound. Sorted table & binary search gives it.

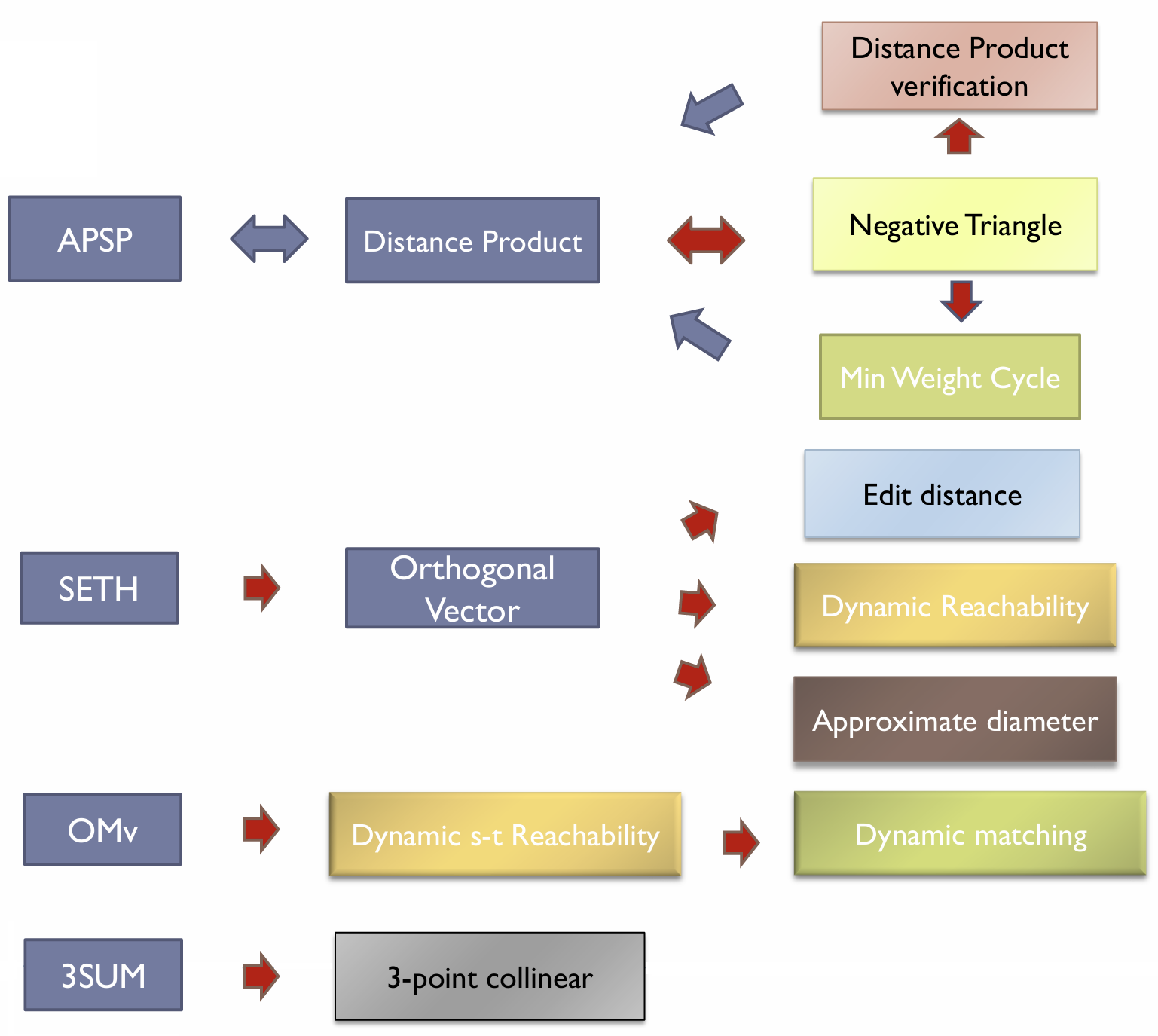

Dynamic Partial Sum

Def. Let \(\mathbb{G}\) be a group. The dynamic partial sum problem asks to maintain an array \(A[1,...,n]\) of group elements, under

-

\(\text{UPDATE}(k,\Delta):\) modify \(A[k]\leftarrow \Delta\), where \(\Delta\in \mathbb{G}\);

-

\(\text{SUM}(k):\) returns the partial sum \(\sum\limits_{i=1}^k A[i].\)

Assume \(|\mathbb{G}|=2^{\delta}\)and the word size for computer is \(w\ge \log n\).

Thm [Pătraşcu, Demaine 2004]. Any data structure requires an average running time of \(\Omega(\frac{\delta}{w}\log n)\) per updates and queries.

Pf. We design a “bad” sequence: Initially \(A[1…n]=(0,…,0)\). Choose a random permutation \(\pi\in S_n\) and a uniformly random sequence \(\braket{\Delta_1,...,\Delta_n}\in\mathbb{G}^n.\) Do the following:

for t=1,2,...,n:

UPDATE(𝜋(t),𝚫(t))

SUM(𝜋(t))

Let $ IL(t_0, t_1, t_2)$ be the number of transitions between a value \(\pi(i)\) with \(i<t_1\), and a consecutive value \(\pi(j)\) with \(j>t_1\). Suppose \(t_2-t_1=t_1-t_0=k\), we have

Let \(\Delta^*\) be the updates not in the range \([t_0,t_1]\), suppose it's fixed to some arbitrary \(\mathbf{\Delta^*}\), then

since the answers depend on \(IL(t_0, t_1, t_2)\) independent random variables uniformly chosen from \(\mathbb{G}\).

Let \(IT(t_0,t_1,t_2)\) be the set of memory locations which

- were read at a time \(t_r\in[t_1,t_2]\);

- were written at a time \(t_w\in[t_0,t_1]\), and not overwritten during \([t_{w+1},t_r]\).

The above entropy is upper bounded by the average length of the information transfer encoding, which contains:

-

the cardinality \(|IT(t_0, t_1, t_2)|\);

-

the address of each cell in \(IT(t_0,t_1,t_2)\);

-

the contents of each cell at time \(t_1\).

We have

Combining (1) and (2) gives

Finally, consider the total \(IT\), which is the sum of all vertices of the segment tree:

Thus $$E[\sum_v|IT(v)|]=\Theta(\frac{\delta}{w}n\log n).$$

Rem. Proving lower bound: design a hard instance and analyze its information transfer

Rem. The upper bound given by segment tree meets the lower bound.

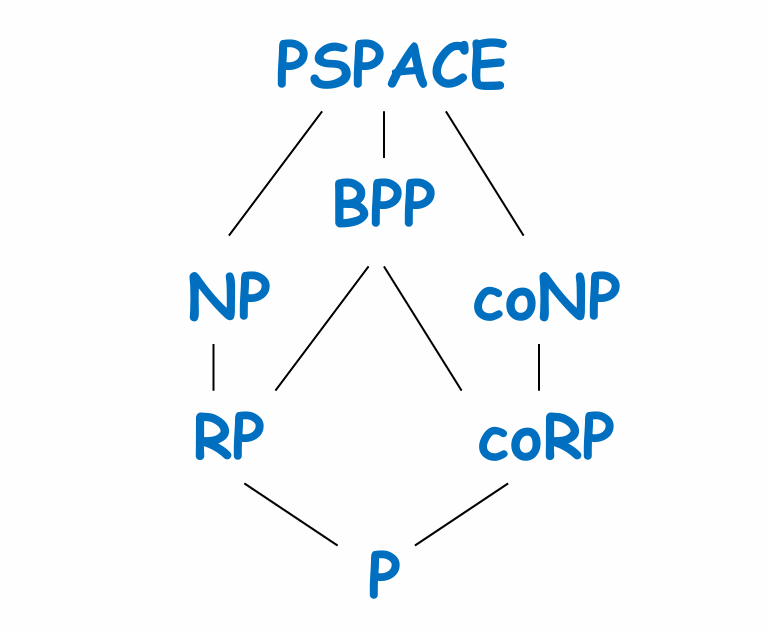

Deterministic & Nondeterministic

Time Complexity

Def (Time Complexity). Let \(M\) be a deterministic Turing machine that halts on all inputs. The running time or time complexity of \(M\) is the function \(f: \mathbb{N}\to\mathbb{N}\), where \(f(n)\) is the maximum number of steps that \(M\) uses on any input of length \(n\).

Def. \(\mathsf{TIME}(t(n))\): all languages that are decidable by an \(O(t(n))\) time deterministic Turing machine.

\(\mathsf{NTIME}(t(n))\): all languages that are decidable by an \(O(t(n))\) time nondeterministic Turing machine.

Def.

The Church-Turing thesis says all these models, e.g., Turing Machine and java, are equivalent in power... but not in running time!

Cobham-Edmonds thesis. For any realistic models of computation \(M_1\) and \(M_2\), \(M_1\) can be simulated on \(M_2\) with at most polynomial slowdown.

Rem. Quantum computation does NOT change the Church-Turing thesis, that is, what is computable. But it does seem to change what is computable in polynomial time.

Space Complexity

Def (Space Complexity Classes). For any function \(f: \mathbb{N}\to\mathbb{N}\), we define

Rem. Here we consider 3-tape machines:

- Input tape is read-only

- Work tape can read and write

- Output tape is write-only.

Only the size of the work tape is counted for complexity purposes.

Ex. \(\mathsf{SAT}\in\mathsf{SPACE}(n)\).

Logarithmic Space

Def (Logarithmic Space Classes).

Fact.

-

\(\mathsf{TIME}(f(n))\subseteq\mathsf{SPACE}(f(n)).\)

Since a machine that uses \(f(n)\) time can use at most \(f(n)\) space.

-

\(\mathsf{SPACE}(f(n))\subseteq\mathsf{TIME}(2^{O(f(n))}).\)

A machine uses \(O(f(n))\) space can have at most \(2^{O(f(n))}\) configurations. A TM that halts may not repeat a configuration, so the total time to “list” all configurations is \(f(n)\cdot 2^{O(f(n))} = 2^{O(f(n))}\).

-

\(\mathsf{NTIME}(f(n))\subseteq\mathsf{SPACE}(f(n)).\)

We can enumerate all possible \(O(f(n))\) “guesses” (of size \(O(f(n))\)) in \(O(f(n))\) space.

-

\(\mathsf{NSPACE}(f(n))\subseteq\mathsf{TIME}(2^{O(f(n))}).\)

Construct the configuration graph with \(2^{O(f(n))}\) vertices. Check whether accepting configurations are reachable from start configuration.

Thm. The following inclusions hold:

NL-completeness

Def (Log-Space Reductions). \(A\) is log-space reducible to \(B\), written \(A \leq_L B\), if there exists a log space TM M that, given input \(w\), outputs \(f(w)\) s.t. \(w \in A\) iff \(f(w) \in B\).

Thm. If \(A_1\le_L A_2\) and \(A_2\in \mathsf{L}\), then \(A_1\in \mathsf{L}\).

Pf. Let \(M\) be a TM for \(A_2\). On input \(x\), simulate \(M\) on \(f(x)\) and whenever \(M\) need to read the \(i^{\text{th}}\) bit of \(x\), run \(f\) on \(x\) to send the \(i^{\text{th}}\) of \(f(x)\) to \(M\). Since \(i\) is bounded by \(\text{poly}(n)\), record it only takes \(O(\log n)\) bit.

Thm. More generally, if \(f\) and \(g\) are log-space computable functions, \(f \circ g\) is also log-space computable.

Pf. First we can see \(g'(x, i)\), the \(i^{\text{th}}\) bit of \(g(x)\), is computable in log-space. We just wait until the \(i^{\text{th}}\) bit comes out. Size of \(g(x)\) polynomial of \(|x|\), so size of \(i\) is \(O(\log |x|)\). To compute \(f(g(x))\), directly simulate the function \(f\), whenever \(f\) reads the \(i^{\text{th}}\) bit on \(g(x)\), call \(g'(x, i)\).

OPEN PROLEM. Is NP-Completeness closed under log-space reduction?

Def (NL-Completeness). A language \(B\) is \(\mathsf{NL}\)-hard if for every \(A\in\mathsf{NL}, A\leq_LB\). A language \(B\) is \(\mathsf{NL}\)-Complete if \(B∈\mathsf{NL}\) and \(B\) is \(\mathsf{NL}\)-hard.

Ex. PATH (st-connectivity):

- Instance: A directed graph \(G\) and two vertices \(s,t\in V\)

- Problem: To decide if there is a path from \(s\) to \(t\) in \(G\)

First, the following machine decides PATH so immediately PATH \(\in\mathsf{NL}\):

Start at s

for i = 1, ..., |V|:

Non-deterministically choose a neighbor and jump to it

if you get to t:

return accept

return reject

Then we can show that PATH is \(\mathsf{NL}\)-Complete by reduction. Any \(\mathsf{NL}\) language \(A\), assume \(M\) is a NTM that decides \(A\). For the computation of \(M\) on an input \(x\), it can be described by a graph \(G_{M,x}\):

- Each vertex corresponds to a configurations of \(M\)

- \((u,v)\in E\) if \(M\) can move from \(u\) to \(v\) in one step

- \(s\) corresponds to the start configuration, \(t\) corresponds to the accept configuration (w.l.o.g., we can assume all NTM’s have exactly one accepting configuration)

Clearly, \(M\) accepts \(x\) iff there is a path from \(s\) to \(t\) in \(G_{M,x}\). Also this graph is log-space computable from \(M,x\). So we have a log-space reduction from \(A\) to PATH. Thus PATH is \(\mathsf{NL}\)-Complete. Since PATH is \(\mathsf{P}\), immediately we have:

Prop. \(\mathsf{NL}\subseteq\textsf{P}\).

Thm (Savitch's Theorem). \(\textsf{NL}\subseteq\mathsf{SPACE}(\log^2n).\)

Pf. Only need to show PATH \(\in\mathsf{SPACE}(\log^2n)\).

bool PATH(a,b,d) { //whether there is path from a to b with distance ≤d

if there is an edge from a to b then

return TRUE

else {

if d=1 return FALSE

for every vertex v {

if PATH(a,v, ⎣d/2⎦) and PATH(v,b, ⎡d/2⎤) then

return TRUE

}

return FALSE

}

}

In the above algorithm, we need to maintain a recursion stack. In this stack, each call takes \(O(\log n)\) space (to keep track of \(v\) and \(d\)), and \(O(\log n)\) recursion depth, so \(O(\log^2 n)\) space in total. Thus PATH can be decided by a deterministic TM in \(O(\log^2n)\) space.

Rem. For undirected version (USTCON), it's \(\mathsf{L}\). [Reingold, STOC'05]

Ex. NON-PATH:

- Instance: A directed graph \(G\) and two vertices \(s,t∈V\)

- Problem: To decide if there is no path from \(s\) to \(t\)

Clearly, NON-PATH is \(\mathsf{coNL}\)-Complete.

Thm [Immerman/Szelepcsenyi, 1987].

Pf. Let's prove it by showing NON-PATH is \(\mathsf{NL}\). Consider the following machine :

bool NON_PATH(G, s, t):

count ← 1

for l ← 1 to n:

count ← Number_of_Reachable_Vertices(s, l, count)

if R(s, t, n, count) == 1:

return false

return true

The next question is how to count the number of reachable vertices. Here is a log-space nondeterministic algorithm for it:

int Is_v_reachable(s, v, l, count):

for each u ≠ v:

b ← guess if u is reachable from s in l steps

newcount ← newcount + b

if b = 1:

guess a path from s to u of length ≤ l

if we don’t reach u:

return reject

if newcount == count:

return 0

if newcount == count - 1:

guess a path to v

if we find it:

return 1

else:

return reject

else:

return reject

int Number_of_Reachable_Vertices(s, l, bef):

aft ← 0

for each v:

for each u:

if (u, v) ∈ E and R(s, u, l-1, bef):

aft = aft + 1

break

return aft

Polynomial Time/Space

Padding Argument

Thm. If \(\mathsf{P=NP}\), then \(\mathsf{EXP=NEXP}\).

Pf. We need to show \(\mathsf{NEXP\subseteq EXP}\). For a language \(L\in\mathsf{ NEXP}\), we know there is some NTM \(M\) decides \(L\) in \(2^{n^c}\)time. Let

where \(1\) is a symbol not in \(L\). Clearly, \(L'\) can be decides by a polynomial time NTM. By assumption, \(L'\in\mathsf{P}\). Thus \(L\in\mathsf{EXP}\).

Rem. This proof technique is called padding argument.

Exploit it, we can generalize Savitch's Theorem.

Def. A function \(f:\mathbb{N\to N}\), where \(f(n) \ge \log n\), is called space constructible, if the function that maps \(1^n\) to the binary representation of \(f(n)\) is computable in space \(O(f(n))\).

A function \(f:\mathbb{N\to N}\), where \(f(n) \ge n\log n\), is called time constructible, if the function that maps \(1^n\) to the binary representation of \(f(n)\) is computable in time \(O(f(n))\).

Thm. For any space constructible function \(S(n)\ge \log (n)\),

Pf. Apply padding argument on \(\textsf{NL}\subseteq\mathsf{SPACE}(\log^2n)\):

For \(L\in\mathsf{NSPACE}(S(n))\), suppose NTM \(N\) decides \(L\), let

Construct the following TM \(N'\):

Count backwards the number of 0's and check there are 2^S(|x|) such

Run M_L on x

We can see that \(N'\) decides \(L'\) in \(O(S(|x|))\) space. Thus \(L'\in \mathsf{NL}\subseteq \mathsf{SPACE}(\log^2n).\) Immediately, there is a DTM \(D'\) decides \(L'\) in \(O(\log^2 n)=O(S(n)^2)\) space. Since \(f:x\to x\#0^{2^{S(|x|)}}\) can be computed in \(O(S(|x|))\) space, we derive \(L\in \mathsf{SPACE}(S(n)^2)\).

Cor.

Pf. Clearly, \(\mathsf{PSPACE\subseteq NPSPACE}\). By Savitch's theorem, \(\mathsf{NPSPACE\subseteq PSPACE}\).

Cor. For any space constructible function \(S(n)\ge \log (n)\),

Pf. Apply padding argument on \(\textsf{NL=coNL}\).

PSPACE-completeness

Def. A language \(B\) is \(\mathsf{PSPACE}\)-hard if for every \(A \in \mathsf{PSPACE}\), \(A \leq_p B\). A language \(B\) is \(\mathsf{PSPACE}\)-Complete if \(B \in \mathsf{PSPACE}\) and \(B\) is \(\mathsf{PSPACE}\)-hard.

Ex. TQBF (true quantified Boolean formula):

- Instance: A fully quantified Boolean formula \(\phi\) (can be written in prenex form)

- Problem: To decide if \(\phi\) is true

First, let's show TQBF \(\in\mathsf{PSPACE}\). We'll describe a poly-space algorithm \(A\) for evaluating \(\phi\):

A:

If 𝜙 has no quantifiers: evaluate it

If 𝜙=∀x(𝜓(x)):

call A on 𝜓(0) and on 𝜓(1)

Accept if both are true

If 𝜙=∃x(𝜓(x)):

call A on 𝜓(0) and on 𝜓(1)

Accept if either are true

The total space needed is polynomial in the number of variables (the depth of the recursion).

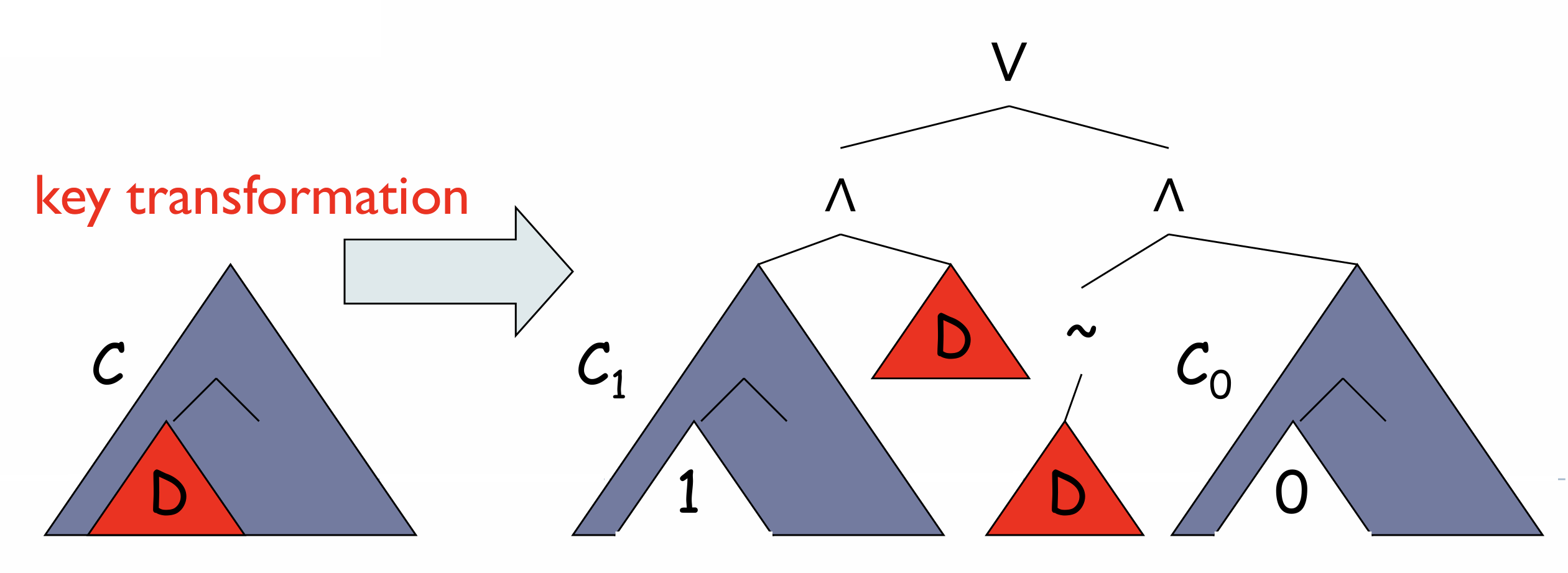

Next, show TQBF is \(\mathsf{PSPACE}\)-hard:

Given a poly-space TM \(M\) for a language \(L \in \mathsf{PSPACE}\), and an input \(x\), we construct a QBF \(\psi\) of polynomial size s.t. \(\psi\) is true iff \(M\) accepts \(x\).

In the TM \(M\) for configurations \(u, v\) and an integer \(t\), define \(\psi(u, v, t) = \text{true}\) iff \(v\) is reachable from \(u\) within \(t\) steps

-

\(\psi(u, v, 1)\) is efficiently computable

-

For \(t > 1\),

\[\begin{align*} \psi(u, v, t) =&\ (\exists m)(\forall (a,b)\in\{(u,m),(m,v)\})\psi(a,b, t/2)\\ =&\ (\exists m)(\forall a)(\forall b) [((a=u \wedge b=m)\vee (a=m\wedge b=v))\to \psi(a, b, t/2)] \end{align*} \]where \(m\) is another configuration.

Let \(S(t)\) be the size of \(\psi(u,v,t)\). Then

since \(\mathsf{PSPACE\subseteq EXPTIME}\) so \(\log t=O(|x|)\).

We can see that \(M\) accepts \(x\) iff \(\psi_{M,x}=\psi(c_{\text{start}},c_{\text{accept}},h)\) is true. Since \(\psi\) is constructible in poly-time, any \(\mathsf{PSPACE}\) language is poly-time reducible to TQBF. So far, TQBF is \(\mathsf{PSPACE}\)-Complete.

Ex. Formula Game: The game contains two players \(A\) and \(E\), with a Boolean formula \(\psi\) with variables \(x_1 ,x_2 ,...,x_n\). They will play alternately: \(A\) selects the value of \(x_1\), \(E\) selects the value of \(x_2\), \(A\) selects the value of \(x_3\), \(E\) selects the value of \(x_4\)... Finally, \(A\) wins if \(\psi\) is false, \(E\) wins if \(\psi\) is true.

We can see that \(E\) has winning strategy iff

is true, which is in the form of TQBF.

In fact, the Formula Game is \(\mathsf{PSPACE}\)-complete even if \(\psi\) is a 3-CNF. Since for any \(\psi(x)\), we can find a 3-CNF \(\phi(x,y)\) s.t. \(\psi(x)\) is true iff \((\exists y)\phi(x,y)\) is true.

Ex. Generalized geography game:

In a directed graph with a start node \(s\): Initially token is at \(s\). Player 1 and 2 alternatively choose the next node, if the token is currently at \(v\), it can choose \(w\) if \((v, w)\) is an edge. No repetition is allowed. When a player cannot move on, it loses. Who has a winning strategy here?

\(\text{GG} = {\langle G, b \rangle \mid \text{Player 1 has a winning strategy for the Generalized Geography game played on graph } G \text{ starting at node } b }.\)

Clearly, \(\text{GG}\) is in \(\mathsf{PSPACE}\). And we can reduce the Formula Game for 3CNF to GG game as follows:

Thus GG is also \(\mathsf{PSPACE}\)-complete.

Ex. Gomoku (five-in-a-row): Players alternate turns placing a stone of their color on an empty intersection. Black plays first. The winner is the first player to form an unbroken line of five stones of their color horizontally, vertically, or diagonally.

Gomoku is also \(\mathsf{PSPACE}\)-complete. [Stefan Reisch, 1980]

Lem. If \(M(x)\) takes \(f(|x|)\) time, to simulate \(M\) on a universal TM takes \(\Theta(f(|x|)\log f(|x|))\) steps.

Thm (Time Hierarchy Theorem). For any time constructible function \(f: \mathbb{N} \rightarrow \mathbb{N}\), there exists a language \(\mathcal{L}\) that is decidable in time \(O(f(n))\), but not in

Pf. Consider following TM:

D(<M>):

Simulate M with input <M> for c⋅f(n=|<M>|) steps;

if accepts then reject;

else accept;

We know that \(L(D)\) is in \(\mathsf{TIME}(f(n))\). Suppose \(L(D)\) is in \(\mathsf{TIME}\left(\frac{f(n)}{\log f(n)}\right)\), that is, there is a machine \(M'\) deciding \(L(D)\) in \(O\left(\frac{f(n)}{\log f(n)}\right)\) time. Consider \(D(\langle M'\rangle)=M'(\langle M'\rangle)\). We can make \(|\langle M'\rangle|\) not too small and \(c\) large enough, so the simulation can finish in \(cf(n)\) steps, leads to a contradiction with \(D(\langle M'\rangle)=M'(\langle M'\rangle)\).

Thm (Space Hierarchy Theorem). For any space constructible function \(f: \mathbb{N} \rightarrow \mathbb{N}\), there exists a language \(\mathcal{L}\) that is decidable in space \(O(f(n))\), but not in space \(o(f(n))\)

Cor. If \(f(n)\) and \(g(n)\) are space constructible functions, and \(f(n)=o(g(n))\), then \(\mathsf{SPACE}(f) \varsubsetneqq \mathsf{SPACE}(g)\).

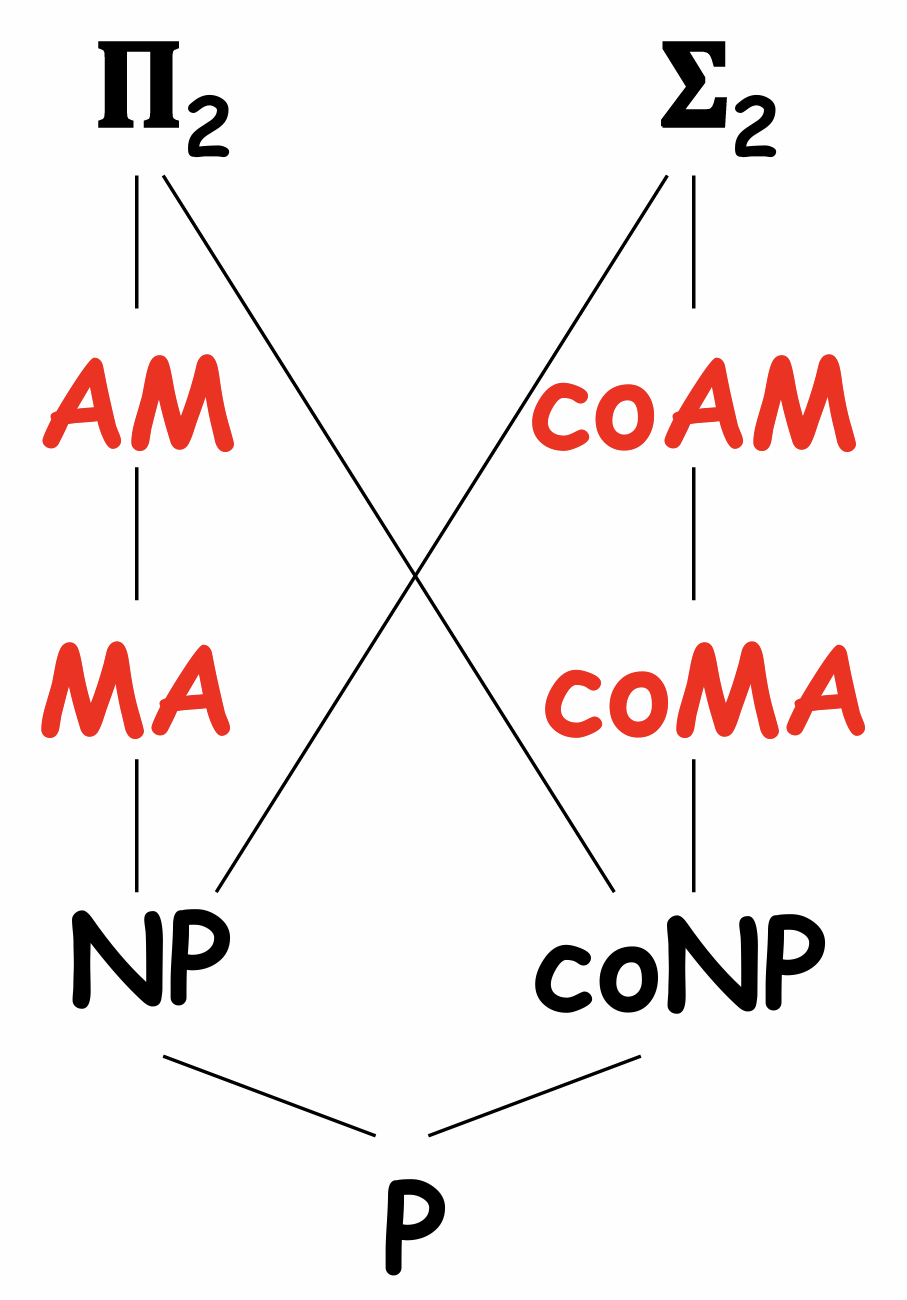

Polynomial-Time Hierarchy

In \(\mathsf{NP}\), most problems are either in \(\mathsf{P}\) or in \(\mathsf{NP}\)-Complete. Also, there are some problems we don't know whether they are in \(\mathsf{P}\) or in \(\mathsf{NP}\)-Complete.

Bold conjecture: every problem of \(\mathsf{NP}\) is either in \(\mathsf{P}\) or \(\mathsf{NP}\)-Complete?

Thm (Ladner 1975). Suppose that \(\mathsf{P} \neq \mathsf{NP}\), then there exists \(\mathsf{NP}\)-intermediate problems (in \(\mathsf{NP} \setminus \mathsf{P}\) and not \(\mathsf{NP}\)-Complete).

Pf. Define \(M_i\) to be the TM with code \(i\). Add a clock to \(M_i\) so that it runs in at most \(n^i\) steps (If it doesn't terminate after \(n^i\) steps, just reject). So we can enumerate (TMs deciding) all languages in \(\mathsf{P}\) by enumerating all polynomial time machines.

Similarly, we can also enumerate all \(\mathsf{NP}\)-hard languages: Enumerate TMs \(f_i\) computing all polynomial-time functions in \(n^i\) steps, then the NTM \(N_i\) that decides the language which SAT reduces to via \(f_i\) as the reduction virtually exists. So we can also enumerate all languages that is \(\mathsf{NP}\)-hard.

Now let's construct \(A\in\mathsf{NP}\) that is neither in \(\mathsf{P}\) nor \(\mathsf{NP}\)-hard. Recall that \(\{M_i\}\) enumerate \(\mathsf{P}\) and \(\{N_i\}\) enumerate \(\mathsf{NP}\)-hard.

-

For any \(M_i\) and any \(x\), we can always find some \(z\ge x\) on which \(M_i\) and SAT differ. (Otherwise SAT is in \(\mathsf{P}\).)

-

For any \(N_i\) and any \(x\), we can always find some \(z\ge x\) on which \(M_i\) and TRIV differ. (TRIV \(=\emptyset\))

Let \(g(n)\) be a carefully constructed function. Consider a language \(A=\{x|x\in\text{SAT and }g(|x|)\text{ is even}\}\), in other words,

- If \(g(|x|)\) is even, \(A(x)=\text{SAT}(x)\).

- If \(g(|x|)\) is odd, \(A(x)=\text{TRIV}(x)\).

Our aim: all \(M_i\) and \(N_i\) doesn’t decide \(A\) and \(A\in\mathsf{NP}\). Let \(g(0)=g(1)=2\). If \(\log^{g(n)}n\ge n\) then let \(g(n+1)=g(n)\), otherwise:

-

If \(g(n)=2i\): Find \(z\) s.t. \(|z|<\log n\), \(g(|z|)\) even and \(\text{SAT}(z)\neq M_i(z)\). If found, \(g(n+1)=g(n)+1\); otherwise, \(g(n+1)=g(n)\).

-

If \(g(n)=2i+1\): Find \(z\) s.t. \(|z|<\log n\), either

- \(g(|f_i(z)|)\) is odd and \(z\in\text{SAT}\), so \(f_i(z)\in L(N_i)\) but \(f_i(z)\notin A\).

- \(g(|f_i(z)|)\) is even and \(\text{SAT}(z)\neq \text{SAT}(f_i(z))\), so \(N_i(f_i(z))\neq A(f_i(z))\).

If found, \(g(n+1)=g(n)+1\); otherwise, \(g(n+1)=g(n)\).

Clearly, \(g\) can be computed in polynomial time so \(A\in\mathsf{NP}\). Besides, all \(M_i\) and \(N_i\) doesn’t decide \(A\).

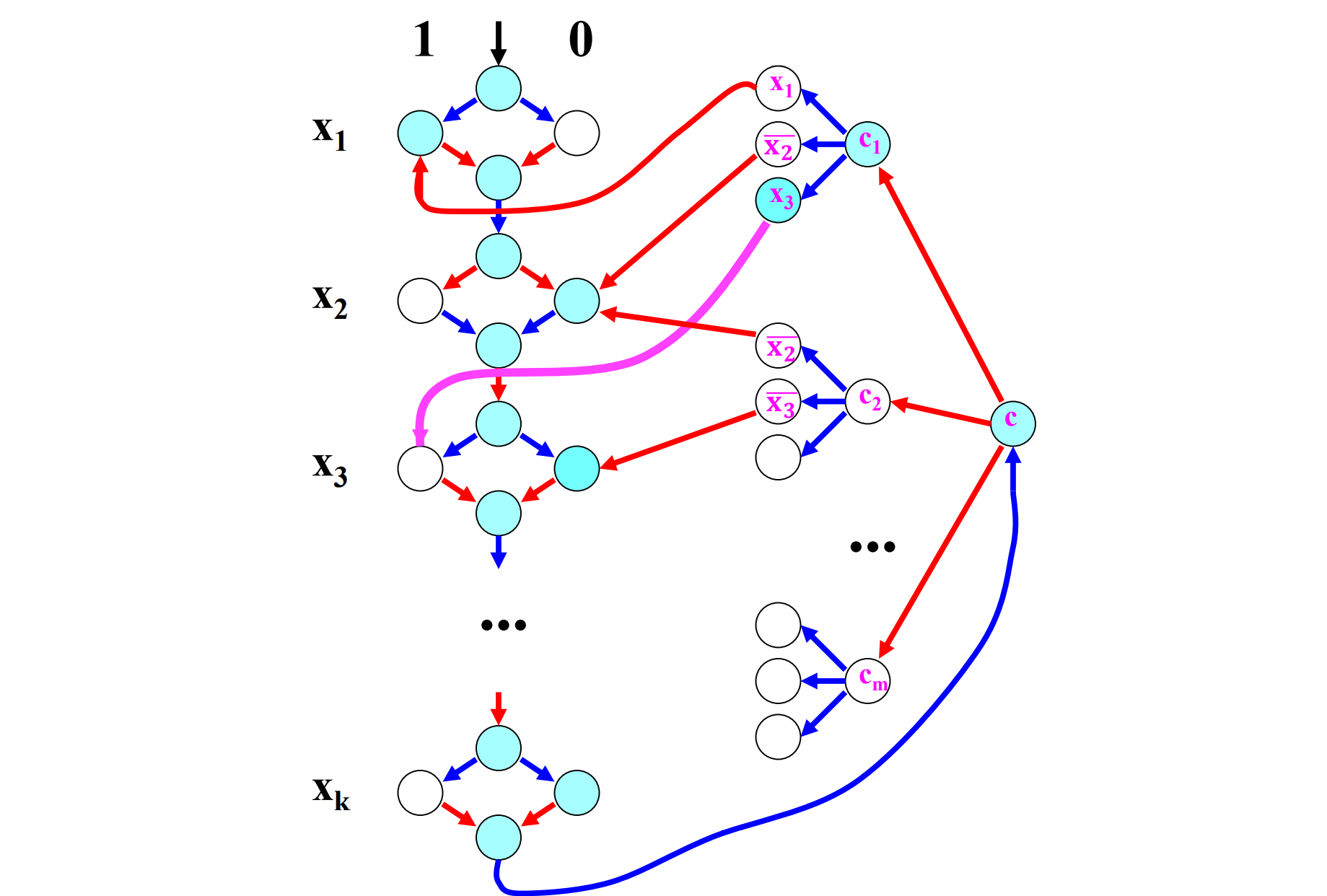

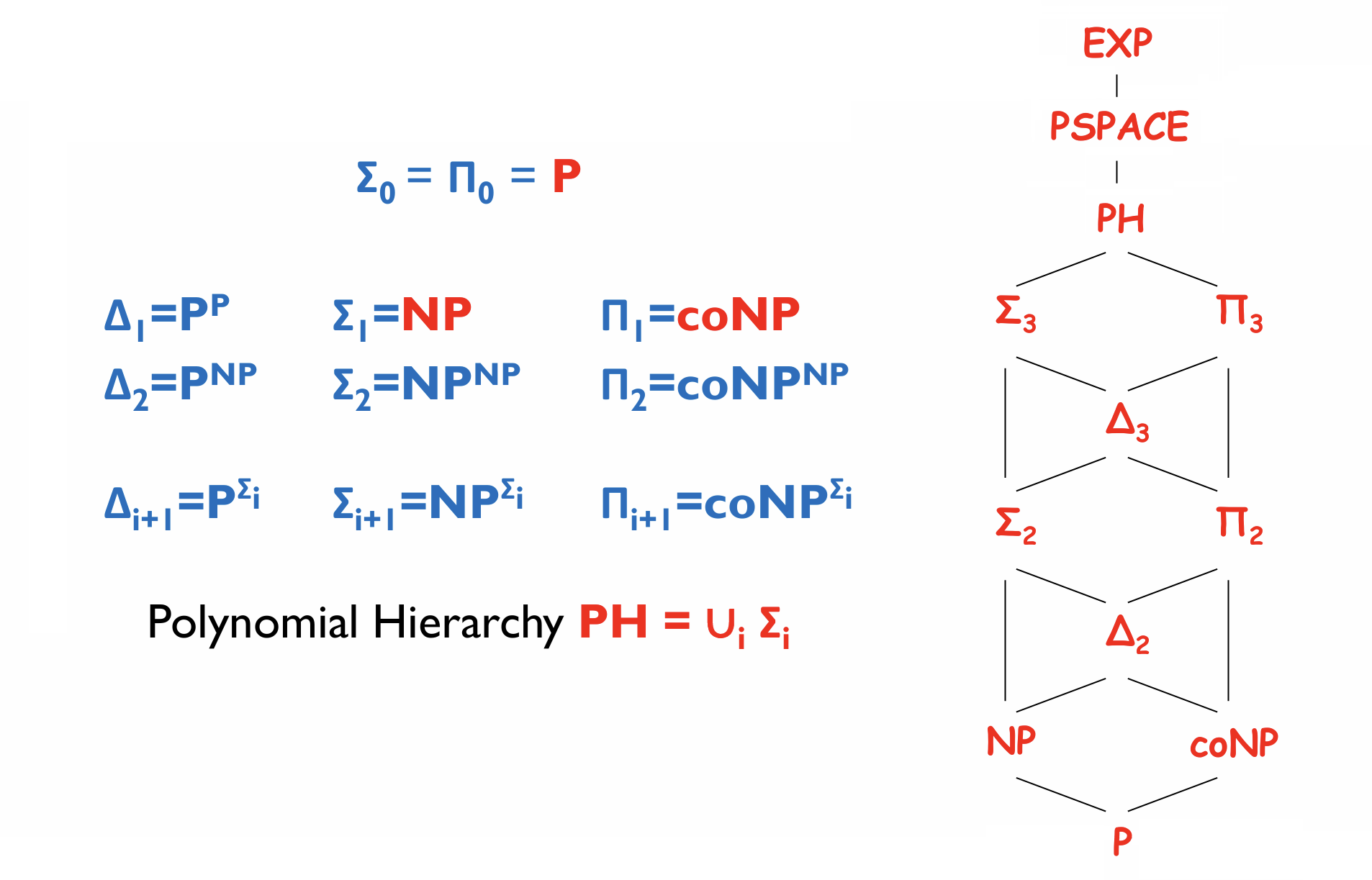

Def (Polynomial Hierarchy). For \(i\ge 1\), a language \(L\) is in \(\Sigma_i^p\) if there exists a polynomial time TM \(M\) and a polynomial \(q\) s.t.

where \(Q_i\) is \(\exists\) when \(i\) is odd; is \(\forall\) when \(i\) is even. (sometimes we can omit superscript "\(p\)"). Define \(\mathsf{PH}=\bigcup_i\Sigma_i^p\).

Symmetrically, \(\Pi_i^p=\mathsf{co}-\Sigma_i^p\): for \(i\ge 1\), a language \(L\) is in \(\Pi_i^p\) if there exists a polynomial time TM \(M\) and a polynomial \(q\) s.t.

where \(Q_i\) is \(\forall\) when \(i\) is odd; is \(\exists\) when \(i\) is even.

Ex. \(\Sigma_0^p=\mathsf{P},\Sigma_1^p=\mathsf{NP}\).

Fact.

- \(\mathsf{PH}=\bigcup_i\Pi_i^p\) since \(\Sigma_i^p\subseteq\Pi_{i+1}^p\subseteq\Sigma_{i+2}^p\).

- \(\mathsf{PH\subseteq PSPACE}\).

We can define it by oracle Turing machines. Shorthand:

-

Let \(\mathsf{C}\) be a complexity class and \(A\) be a language. Define

\[\mathsf{C}^A=\{L\text{ decided by OTM }M\text{ with oracle }A\text{ with }M\text{ ``in'' }\mathsf{C}\} \] -

Let \(\mathsf{C}\) be a complexity class and \(M\) be a OTM. Define

\[M^{\mathsf{C}}=\{L\text{ decided by OTM }M\text{ with oracle }A\text{ with }A\in C\} \] -

Both together: Let \(\mathsf{C,D}\) be complexity classes. Define

\[\mathsf{C}^{\mathsf{D}}=\{L\text{ decided by OTM ``in'' }\mathsf{C}\text{ with oracle language from } \mathsf{D}\} \]

Ex. \(\Sigma_2=\mathsf{NP}^{\mathsf{NP}},\Pi_2=\mathsf{coNP}^{\mathsf{NP}}\).

Thm (Collapse of hierarchy). If \(\mathsf{P=NP}\), then \(\mathsf{PH=P}\), that is, the hierarchy collapses to \(\mathsf{P}\).

Pf. Assume \(\Sigma_1^P=\mathsf{NP=P}\), let's prove \(\Sigma_i^p=\mathsf{P}\) by induction on \(i\).

Suppose \(\Sigma_{i-1}^p=\mathsf{P}\), we will show \(\Sigma_i^p=\mathsf{P}\). For a language in \(\Sigma_i^p\):

Define \(L'\):

Then \(L'\in\Pi_{i-1}^p=\text{co}\Sigma_{i-1}^p=\mathsf{P}\). Since \(x\in L\Leftrightarrow \exists u_1\in\{0,1\}^{q(|x|)}[(x,u_1)\in L']\), \(L\in\mathsf{NP=P}\).

Rem. Similarly, if \(\Sigma_i^p=\Pi_i^p\), \(\mathsf{PH}=\Sigma_i^p.\)

Relativization

Many proofs and techniques we have seen are relativize: they hold after replacing all TMs with oracle TMs that have access to an oracle \(A\). (including diagonalization). e.g. \(\mathsf{L}^A \subseteq \mathsf{P}^{A}\) for all oracles \(A\), and \(\mathsf{P}^{A} \neq \mathsf{EXP}^{A}\) for all oracles \(A\).

Can we solve \(\mathsf{P}\text{ v.s. }\mathsf{NP}\) using such kind of proof?

Thm (Baker, Gill, and Solovay, 1975). There exists

- oracle \(A\) for which \(\mathsf{P}^A = \mathsf{NP}^A\);

- oracle \(B\) for which \(\mathsf{P}^B \neq \mathsf{NP}^B\).

Pf. For \(\mathsf{P}^A = \mathsf{NP}^A\), need \(A\) to be powerful. \(A=\text{TQBF}\) works, since

and we know \(\mathsf{PSPACE=NPSPACE}.\)

For \(\mathsf{P}^B \neq \mathsf{NP}^B\), define another language that depends on \(B\):

Obviously, \(L(B)\in\mathsf{NP}^B\) whatever \(B\) is. We will find a language \(B\) s.t. \(L(B)\notin\mathsf{P}^B\). Consider following process:

-

Initially, \(B,X=\emptyset\). (\(X\) is used to collect strings that are excluded from \(B\), so \(B\cap X=\emptyset\).)

-

At stage \(i\), let \(M_i\) be the \(\left(i-\lfloor\sqrt{i}\rfloor^2\right)\)-th deterministic OTMs:

-

select \(n\) so that \(n>|x|\) for all \(x \in B\) and \(x \in X\) currently

-

simulate \(M_i\left(1^n\right)\) for \(n^{\log n}\) steps

-

when \(M_i\) makes an oracle query \(q\):

-

if \(|q|<n\), answer using \(B\)

-

if \(|q| \geq n\), answer "no"; add \(q\) to \(X\)

-

-

if simulated \(M_i\) accepts \(1^n\), add all \(\left\{0, 1\right\}^n\) to \(X\) (so \(1^n \notin L(B)\))

-

if simulated \(M_i\) rejects \(1^n\), add \(\left\{0, 1\right\}^n \backslash X\) to \(B\)

-

Therefore, \(L(B)\notin\mathsf{TIME}(n^{\log n})^B\), so \(L(B)\notin \mathsf{P}^B\).

Rem. This theorem tells us that resolving \(\mathsf{P}\text{ v.s. }\mathsf{NP}\) requires a non-relativizing proof.

Exponential Time

Def (Alternating Turing machines). An alternating Turing machine is a nondeterministic TM with an additional feature. Its states, except for the accept and reject states, are divided into universal states and existential states. When we run an alternating TM on an input string, we label each node of its nondeterministic computation tree with \(\wedge\) or \(\vee\), depending on whether the corresponding configuration contains a universal or existential state. We determine acceptance by designating a node to be accepting if it is labeled with \(\wedge\) and all of its children are accepting or if it is labeled with \(\vee\) and at least one of its children is accepting.

Def. We can define classes of languages that are decided by certain alternating TM:

Claim. For \(f(n)\ge n\), \(\mathsf{ATIME}(f(n)) \subseteq \mathsf{SPACE}(f(n))\).

Pf. Do a DFS to explore all possible branches.

Claim. For \(f(n)\ge n\), \(\mathsf{SPACE}(f(n)) \subseteq \mathsf{ATIME}(f(n)^2)\).

Pf. In the TM \(M\), For configurations \(u, v\) and an integer \(t\), define \(\psi(u, v, t)=\text{true}\) iff \(v\) is reachable from \(u\) within \(t\) steps. For \(t>1\),

So the depth of the recursion is \(\log 2^{O(f(n))}=O(f(n))\), and generating each configuration \(m\) takes \(O(f(n))\) time, so the total time is \(O(f(n)^2)\).

Claim. For \(f(n) \ge \log n\), \(\mathsf{ASPACE}(f(n))=\mathsf{TIME}(2^{O(f(n))})\).

Pf. \(\mathsf{ASPACE}(f(n))\subseteq\mathsf{TIME}(2^{O(f(n))})\): Since the space is \(O(f(n))\), the number of all configurations is \(2^{O(f(n))}\), simply enumerate all the possible configurations and construct the computation tree.

\(\mathsf{TIME}(2^{O(f(n))})\subseteq\mathsf{ASPACE}(f(n))\): For a machine takes \(2^{O(f(n))}\) time, consider the table for computation history. Let \(C[i,j]\) denote the \(j\)-th cell in time \(i\) in the configuration table. Notice that \(C[i,j]\) only depends on \(C[i-1,j-1],C[i-1,j],C[i-1,j+1]\). So we can construct the following algorithm:

Guess(i, j, d) { \\Guess the C[i,j]=d

for all (a, b, c) that can yield d {

if all of

Guess(i-1, j-1, a),

Guess(i-1, j, b),

Guess(i-1, j+1, c)

are true, return true;

}

return false;

}

Finally, we can check \(\text{Guess}(n, j, q_{\text{accept}})\) for all cell \(j\) in the final time \(n\). We only need to store the pointer, i.e., \(i,j,d\), so the space is \(\log 2^{O(f(n))}=O(f(n)).\)

Thm (Stockmeyer & Chandra 1979). Variants of Boolean formula games which are closer to board games are \(\mathsf{EXPTIME}\)-Complete. For example, Game 1:

- Given a Boolean formula \(\psi(x_1,...,x_m,y_1,...,y_m,t)(\psi(X,Y,t))\). Each time player 1 set \(t=1\) and set \(x_1,…, x_m(X)\) to any values, and player 2 set \(t=0\) and set \(y_1,…, y_m (Y)\) to any values. Player 1 moves first, no pass. A player loses if \(\psi\) is false after its move. Let \(\alpha\) be the initial value for \(Y\).

Pf. Recall that \(\mathsf{APSPACE=EXPTIME}\). We only need to show that Game 1 is in \(\mathsf{APSPACE}\)-Complete. Suppose \(M\) is an alternating TM in polynomial space, w.l.o.g.,

-

The initial state is existential.

-

Existential states yield universal states, and universal states yield existential states.

-

Accepting states are universal, and rejecting states are existential.

Consider the configurations of \(M\) with length polynomial in \(n\). Define \(\text{NEXT}(C, D)\) where \(C, D\) are configurations of \(M\):

- When \(C \vdash_M D\), \(\text{NEXT}(C, D) = 1\).

- If \(C\) is an accepting/rejecting configuration, \(\text{NEXT}(C, D) = 0\) for all \(D\).

Construct a Boolean formula as

and let \(\alpha\) be the initial configuration of \(M\) on input \(w\). In Game 1, player 1 sets \(t = 1\) and chooses \(X\), then \(\psi = \text{NEXT}(Y, X)\); player 2 sets \(t = 0\) and chooses \(Y\), then \(\psi = \text{NEXT}(X, Y)\). So Player 1 is always in existential states, and Player 2 is always in universal states. Every player aims to select a halting configuration: Player 1 aims to select an accepting state; Player 2 aims to select a rejecting state.

Therefore, Player 1 wins if and only if \(M\) accepts \(w\).

We can reduce Game 1 to other games.

Thm (Fraenkel & Lichtenstein, 1981). \(n\times n\) chess is \(\mathsf{EXPTIME}\)-Complete.

Thm (J. M. Robson, 1984). \(n\times n\) checkers is \(\mathsf{EXPTIME}\)-Complete.

Def. A pebble game is a quadruple \(G = (X, R, S, t)\) where:

- \(X\) is a finite set of nodes;

- \(R \subseteq \{(x, y, z): x, y, z \text{ are distinct elements in } X\}\) is set of rules;

- \(S \subseteq X\) is the places of initial pebbles;

- \(t \in X\) is the terminal node.

Two players play the game. They alternatively move pebbles using any rule in \(R\). Let \(A\) be the current places of pebbles, if \((x, y, z) \in R\) is a rule, and \(x, y \in A\) but \(z \notin A\), then the player can turn \(A\) into \((A-\{x\}) \cup \{z\}\) in one turn. The winner is the first player who can put a pebble on the terminal node, or who can make the other player unable to move.

Thm (Kasai, Adachi, Iwata, 1978) . 2-person “pebble game” is \(\mathsf{EXPTIME}\)-Complete.

Pf. Reduction from \(\mathsf{APSPACE}\) to this pebble game. In alternating TM, if \(\delta(q, a)\) contains \((q', a', d)\) where \(q, q'\) are states, \(a, a'\) are tape symbols, \(d= \pm 1\) is direction, make nodes in pebble game:

and rules:

Def. Succinct circuit SAT: given \(i\) and \(k\), a circuit outputs the index and type of the \(k\)-th adjacent gate of the \(i\)-th gate. input/output of length \(n\), polynomial circuit size.

Thm. Succinct circuit SAT is \(\mathsf{NEXP}\)-complete.

Pf. As in the proof of \(\mathsf{NP}\)-completeness of SAT, we can use a poly-size circuit to generate that formula:

Thm. Go with Japanese ko rule is \(\mathsf{EXPTIME}\)-Complete.

Thm. Minesweeper is \(\mathsf{NP}\)-Complete.

Randomized Computation

Probabilistic TM

Def (Probabilistic Turing Machine). Deterministic TM with additional read-only tape containing “coin flips”. Formally, there are (at least) three tapes:

- \(1^{\text{st}}\) Tape holds the input;

- \(2^{\text{nd}}\) Tape (also known as the random tape) is covered randomly (and independently) with 0’s and 1’s, with \(\frac{1}{2}\) probability of a \(0\) and \(\frac{1}{2}\) probability of a \(1\);

- \(3^{\text{rd}}\) Tape is used as the working tape.

Def (Monte Carlo). A Monte Carlo algorithm is a randomized algorithm whose running time is deterministic, but whose output may be incorrect with a certain (typically small) probability.

Def (Las Vegas). A Las Vegas algorithm is a randomized algorithm that always gives correct results; it gambles only with the resources used for the computation.

Additive Spanner

Def. A \(c\)-additive spanner \(S\) in \(G\) is a spanning subgraph of \(G\) which satisfies two crucial properties:

- sparse;

- for all \(u, v \in G\), \(d_S(u, v) \leq d_G(u, v) + c\).

Here we consider undirected unweighted graphs.

Thm. Any undirected unweighted graph has a \(2\)-additive spanner with \(O(n^{3/2})\) edges.

Pf. The construction of the spanner \(S\):

S=Ø

Randomly select n^{1/2} vertices, call the set W

S includes the shortest path trees from all vertices in W

for all vertices u:

if there is an edge (u, w) s.t. w ∈ W:

add (u, w) in S

else:

include all edges (u, v) in S

For a vertex \(u\), if the degree of \(u\) is \(\Omega(n^{1/2})\), w.h.p. it is adjacent to a vertex in \(W\). So \(|S|=O(n^{3/2})\).

Consider the shortest path from \(u\) to \(v\) in \(G\):

-

If \(u\) or \(v\) is in \(W\), the shortest path must also be in \(S\), it is trivial

-

Otherwise if the first edge \((u, u') \in S\), recursively consider the \((u', v)\)

-

Otherwise if the first edge \((u, u') \notin S\), \(u\) is adjacent to a \(w \in W\). We can see that \((u, w)\) and a shortest path from \(w\) to \(v\) is in \(S\). And it's not much longer than the shortest path from \(u\) to \(v\):

\[d(w, v) \leq d(u, v) + d(w, u) = d(u, v) + 1 \]so

\[d_S(u, v) \leq d(u, w) + d(w, v) = d(w, v) + 1 \leq d(u, v) + 2. \]

Thm. Any undirected unweighted graph has a \(4\)-additive spanner with \(\tilde{O}(n^{7/5})\) edges.

Pf. \(N(w)\): neighbors of \(w\). Heavy vertices: vertices with degree \(>n^{2/5}\).

Randomly select n^{2/5}*log n vertices, call the set X;

Randomly select n^{3/5}*log n vertices, call the set Y;

S includes the shortest path trees from all vertices in X

for all vertices u:

if there is an edge (u,w) s.t. w ∈ Y,

add (u,w) in S

else

include all edges (u,v) in S

for every pair of v1, v2 ∈ Y

for all shortest paths from u1 ∈ N(v1) to u2 ∈ N(v2) with < n^{1/5} heavy vertices

add the shortest one to S, as well as edges (u1, v1) and (u2, v2)

Heavy u is adjacent to a w ∈ Y

S includes all edges associated with u

Thm [Baswana, Kavitha, Mehlhorn, and Pettie, 2005]. Any undirected unweighted graph has a \(6\)-additive spanner with \(O(n^{4/3})\) edges.

Thm. There is no constant additive spanner with \(O(n^{4/3-\varepsilon})\) edges.

Primality test

Checking whether an integer \(N\) is a prime number. Expected a algorithm polynomial of the length of the number.

Thm (Fermat’s little theorem). Let \(\mathbb{Z}_p=\{0,1,...,p-1\}\) and \(\mathbb{Z}_p^+=\{1,...,p-1\}\) for any \(p\). If \(p\) is a prime,

Rem. In fact, \(\mathbb{Z}^+_p\) is a group under multiplication module \(p\), so the order of any element is a factor of the order of the group.

Rem. Intuitively, we want to use Fermat’s little theorem to test primality, that is, randomly select \(a\in\mathbb{Z}^+_p\), and check whether \(a^{p-1}\equiv 1\pmod p\). Unfortunately, the converse of Fermat’s little theorem is not always true!

Def. A Carmichael number is a composite number \(n\) which satisfies that \(\forall a\in \mathbb{Z}_n^+\) that are relatively prime to \(n\), \(a^{n-1}\equiv 1\pmod n\).

Thm. Let \(C(X)\) denotes the number of Carmichael numbers \(\le X\), \(C(X)>X^{0.333367}\).

Thm. Let \(p\) is an odd prime, for an even number \(k\) and any \(a \in Z_p^+\), if \(a^k \equiv 1 \pmod{p}\), then \(a^{k/2} \equiv \pm 1 \pmod{p}\).

Pf. Let \(b = a^{k/2} \bmod p\). Since \(b^2 = c \cdot p + 1\) for an integer \(c\), \((b+1)(b-1) = c \cdot p\), so \(b\) can only be \(1\) or \(p-1\).

Alg.

Randomly select a ∈ Z_N^+

Compute a^{N-1} mod N, if not 1, reject

Let k = N - 1

while (k is even) do

k = k/2

if (a^k mod N = -1), accept

if (a^k mod N ≠ 1 or -1), reject

accept

Thm. The above algorithm can test primality with high probability.

Pf. First, let's show if \(N\) is an odd composite, there must be an \(a \in \mathbb{Z}_N^+\) which is relatively prime to \(N\) s.t. the algorithm rejects.

- If \(N = p^i\), consider \(t = 1 + p^{i-1}\), then \(t^N \equiv 1 \pmod{N}\), so \(t^{N-1} \neq 1 \pmod{N}\),

- Otherwise, \(N = q \cdot r\), find an \(h^k \equiv -1 \pmod{N}\) with largest \(k = (N-1)/2^i\). By the Chinese remainder theorem, there exists a \(t\) such that:

therefore,

so \(t^{2k} \equiv 1 \pmod{N}\) but \(t^k \not\equiv \pm 1 \pmod{N}\).

Furthermore, we can show that at least one half of elements \(\mathbb{Z}_N^+\) can make the algorithm reject.

For \(t \in \mathbb{Z}_N^+\) that are not relatively prime to \(N\), of course reject, so we only consider numbers that are relatively prime to \(N\).

For such a \(t \in Z_N^+\) we get above, if we have two \(a, b \in \mathbb{Z}_N^+\) which make the algorithm accept so \(a^k \equiv \pm 1 \pmod{N}\), \(a^{2k} \equiv 1 \pmod{N}\), and the same as \(b\). Then \(a \cdot t\) and \(b \cdot t\) are distinct elements that makes the algorithm reject since \((a \cdot t)^k \not\equiv \pm 1 \pmod{N}\), \((a \cdot t)^{2k} \equiv 1 \pmod{N}\), the same as \(b \cdot t\). If \(a \cdot t \equiv b \cdot t \pmod{N}\), \((a-b) t = c \cdot N\) for some integer \(c\), which is impossible.

Thus, #accept elements \(\leq\) #reject elements.

Randomized Complexity Classes

Def (Bounded-error Probabilistic Poly-time, BPP). \(L \in \mathsf{BPP}\) if there is a probabilistic polynomial time (p.p.t.) TM \(M\):

Rem. Why \(\frac{2}{3}\)? In fact can be any constant between \((\frac{1}{2},1]\). (as long as it is independent of the input)

Given \(L\), and p.p.t. TM \(M\):

- \(x \in L \Rightarrow \text{Pr}_y[M(x, y) \text{ accepts}] \geq 1/2+\varepsilon\)

- \(x \notin L \Rightarrow \text{Pr}_y[M(x, y) \text{ rejects}] \geq 1/2+\varepsilon\)