Weibo Index (微指数) Crawler

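While building a crawler for Weibo Index, I ran into a problem. I looked for material online, and what I found turned out to contain errors.

After some investigation, I worked out a collection method that does work.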

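1. The collection page: after you search for a keyword, the page redirects to the index page and shows the index for that keyword.

2. Capture the traffic with Fiddler to locate the data source. The data is loaded as JSON.

3. Fetch the JSON data: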

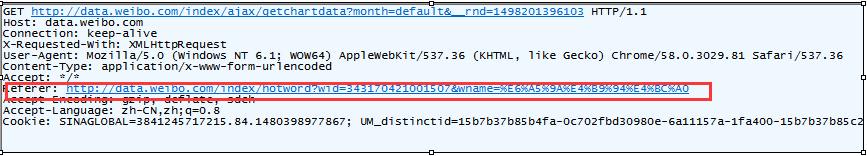

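Looking at how the data is loaded, the response appears to be determined by the Referer address in the request headers. Parsing the redirected address shows that wid and wname are the values we need to obtain first; together they make up the address we request.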
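As a minimal sketch of that parsing step, the snippet below pulls wid and wname out of a hotword page URL using only the standard library. The helper name `parse_index_url` is hypothetical, and the example URL is assembled from the Referer format and the wid for 欢乐颂 that appear later in this post.

```python
from urllib.parse import urlsplit, parse_qs, quote


def parse_index_url(url):
    """Return (wid, wname) from a hotword page URL; a missing value becomes None."""
    query = parse_qs(urlsplit(url).query)
    wid = query.get("wid", [None])[0]
    wname = query.get("wname", [None])[0]  # parse_qs percent-decodes the keyword
    return wid, wname


# Example URL assembled for illustration (wid taken from the 欢乐颂 request URL).
example = "http://data.weibo.com/index/hotword?wid=1091324230349&wname=" + quote("欢乐颂")
print(parse_index_url(example))
```

If wid is missing from the URL, the helper returns None for it, which makes that case easy to detect before any request is sent.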

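4. Verify with code. These are the same steps you can find online:

a. Get the wid and the matching search keyword

b. Build the URL for the JSON data

c. Request the data

5. Code written this way returns the index for 欢乐颂 (Ode to Joy) no matter what keyword is searched, so this method does not work. Note that the request URL itself carries no wid; the keyword appears only in the Referer.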

```python
# coding=utf-8
import requests
from urllib.parse import quote


class xl():
    def pc(self, name):
        url_name = quote(name)
        headers = {
            'Host': 'data.weibo.com',
            'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.11; rv:46.0) Gecko/20100101 Firefox/46.0',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3',
            'Accept-Encoding': 'gzip, deflate',
            'Content-Type': 'application/x-www-form-urlencoded',
            'X-Requested-With': 'XMLHttpRequest',
            # The keyword is passed only through the Referer
            'Referer': 'http://data.weibo.com/index/hotword?wname=' + url_name,
            'Cookie': 'UOR=www.baidu.com,data.weibo.com,www.baidu.com; SINAGLOBAL=1213237876483.9214.1464074185942; ULV=1464183246396:2:2:2:3463179069239.6826.1464183246393:1464074185944; DATA=usrmdinst_12; _s_tentry=www.baidu.com; Apache=3463179069239.6826.1464183246393; WBStore=8ca40a3ef06ad7b2|undefined; PHPSESSID=3mn5oie7g3cm954prqan14hbg5',
            'Connection': 'keep-alive'
        }
        # The request URL has no wid parameter
        r = requests.get(
            "http://data.weibo.com/index/ajax/getchartdata?month=default&__rnd=1464188164238",
            headers=headers)
        return r.text


x = xl()
print(x.pc("欢乐颂"))
```

6. The fix: go back to the search page and search for the keyword again in the box outlined in red, which refreshes the page.

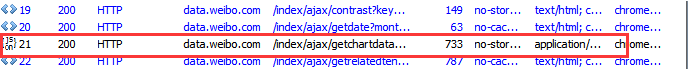

7. The data is actually loaded in four steps. The real request URL for "欢乐颂" is:

http://data.weibo.com/index/ajax/getchartdata?wid=1091324230349&sdate=2017-05-23&edate=2017-06-22&__rnd=1498202175662
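To make the difference concrete, here is a small sketch that compares the query string of the failing request from step 5 with the real one above. Both URLs are copied from this post. Reading `__rnd` as a Unix timestamp in milliseconds is an assumption based on its magnitude, not something the site documents.

```python
from datetime import date, datetime, timezone
from urllib.parse import urlsplit, parse_qs

failing = "http://data.weibo.com/index/ajax/getchartdata?month=default&__rnd=1464188164238"
working = ("http://data.weibo.com/index/ajax/getchartdata"
           "?wid=1091324230349&sdate=2017-05-23&edate=2017-06-22&__rnd=1498202175662")


def query_params(url):
    """Flatten the query string into a {name: value} dict (first value wins)."""
    return {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}


old, new = query_params(failing), query_params(working)

# Parameters that only the working URL carries.
missing = sorted(set(new) - set(old))

# Length of the requested window in days (edate minus sdate).
span = date.fromisoformat(new["edate"]) - date.fromisoformat(new["sdate"])

# Assumption: __rnd is milliseconds since the Unix epoch.
rnd_time = datetime.fromtimestamp(int(new["__rnd"]) / 1000, tz=timezone.utc)

print(missing)
print(span.days)
print(rnd_time.date())
```

The working URL identifies the keyword explicitly through wid and pins the date window with sdate and edate, while the failing one leaves both for the server to decide.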

8. Once you have the dates and the wid that go into this URL, you can fetch the data. No cookie is needed.

```python
# coding=utf-8
import cProfile

import requests
from urllib.parse import quote


def search_name(name):
    url_format = "http://data.weibo.com/index/ajax/hotword?word={}&flag=nolike&_t=0"
    cookie_header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.81 Safari/537.36",
        "Referer": "http://data.weibo.com/index?sudaref=www.google.com"
    }
    urlname = quote(name)
    # Look up the wid for the keyword
    first_requests = url_format.format(urlname)
    codes = requests.get(first_requests, headers=cookie_header).json()
    ids = codes["data"]["id"]
    header = {
        "Connection": "keep-alive",
        "Accept-Encoding": "gzip, deflate, sdch",
        "Accept": "*/*",
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.81 Safari/537.36",
        "Accept-Language": "zh-CN,zh;q=0.8",
        "Referer": "http://data.weibo.com/index/hotword?wid={}&wname={}".format(ids, urlname),
        "Content-Type": "application/x-www-form-urlencoded",
        "Host": "data.weibo.com"
    }
    # Get the date range
    date_url = "http://data.weibo.com/index/ajax/getdate?month=1&__rnd=1498190033389"
    dc = requests.get(date_url, headers=header).json()
    edate, sdate = dc["edate"], dc["sdate"]
    # Fetch and return the chart data
    codes = requests.get(
        "http://data.weibo.com/index/ajax/getchartdata?wid={}&sdate={}&edate={}".format(ids, sdate, edate),
        headers=header).json()
    return codes


if __name__ == "__main__":
    cProfile.run('search_name("天津")')
    # print(search_name("天涯"))
```
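The URL assembly in step 8 can also be sketched on its own, without touching the network. The helper name `build_chart_url` is hypothetical. Generating `__rnd` from the current time in milliseconds is an assumption: the code above omits `__rnd` from the final request, so the server may not require it at all.

```python
import time
from urllib.parse import urlencode

BASE = "http://data.weibo.com/index/ajax/getchartdata"


def build_chart_url(wid, sdate, edate, rnd=None):
    """Assemble the getchartdata URL; rnd defaults to the current time in ms."""
    if rnd is None:
        # Assumption: __rnd is a cache-busting millisecond timestamp.
        rnd = int(time.time() * 1000)
    params = [("wid", wid), ("sdate", sdate), ("edate", edate), ("__rnd", rnd)]
    return BASE + "?" + urlencode(params)


# Rebuild the 欢乐颂 request URL from step 7 using its own parameter values.
print(build_chart_url("1091324230349", "2017-05-23", "2017-06-22", rnd=1498202175662))
```

Passing the parameters as an ordered list keeps the query string in the same order as the captured request, which makes it easy to compare against what Fiddler shows.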

9. If you spot any mistakes, corrections are welcome.

浙公网安备 33010602011771号
