class lightgbm.LGBMClassifier(boosting_type='gbdt', num_leaves=31, max_depth=-1, learning_rate=0.1, n_estimators=10, max_bin=255, subsample_for_bin=200000, objective=None, min_split_gain=0.0, min_child_weight=0.001, min_child_samples=20, subsample=1.0, subsample_freq=1, colsample_bytree=1.0, reg_alpha=0.0, reg_lambda=0.0, random_state=None, n_jobs=-1, silent=True, **kwargs)

| Parameter | Type (default) | Description | Tuning notes |
| --- | --- | --- | --- |
| boosting_type | (string, optional (default="gbdt")) | "gbdt": Gradient Boosting Decision Tree; "dart": Dropouts meet Multiple Additive Regression Trees; "goss": Gradient-based One-Side Sampling; "rf": Random Forest | |
| num_leaves | (int, optional (default=31)) | Maximum number of leaves in each base learner (tree) | Keep num_leaves <= 2^max_depth, since a tree of depth d has at most 2^d leaves |
| max_depth | (int, optional (default=-1)) | Maximum depth of each base learner; -1 means no limit | If the model overfits, lower max_depth first |
| learning_rate | (float, optional (default=0.1)) | Boosting learning rate | |
| n_estimators | (int, optional (default=10)) | Number of base learners (boosted trees) | |
| max_bin | (int, optional (default=255)) | Maximum number of bins that feature values are bucketed into, i.e. the number of bins k of each feature histogram | |
| subsample_for_bin | (int, optional (default=200000)) | Number of samples used to construct the bins | |
| objective | (string, callable or None, optional (default=None)) | Learning objective, or a custom objective function. If None: 'regression' for LGBMRegressor, 'binary' or 'multiclass' for LGBMClassifier, 'lambdarank' for LGBMRanker | |
| min_split_gain | (float, optional (default=0.)) | Minimum loss reduction required to make a further split on a leaf node | |
| min_child_weight | (float, optional (default=1e-3)) | Minimum sum of instance weights (hessian) needed in a child (leaf) | |
| min_child_samples | (int, optional (default=20)) | Minimum number of samples (data points) needed in a leaf | |
| subsample | (float, optional (default=1.)) | Fraction of the training rows sampled for training (row subsampling ratio) | |
| subsample_freq | (int, optional (default=1)) | Frequency of subsampling: resample every k iterations; <=0 disables it. Has an effect only when subsample < 1.0 | |
| colsample_bytree | (float, optional (default=1.)) | Subsample ratio of columns when constructing each tree | |
| reg_alpha | (float, optional (default=0.)) | L1 regularization term on weights | |
| reg_lambda | (float, optional (default=0.)) | L2 regularization term on weights | |
| random_state | (int or None, optional (default=None)) | Random number seed | |
| silent | (bool, optional (default=True)) | If True, do not print messages while running boosting | |
| n_jobs | (int, optional (default=-1)) | Number of parallel threads | |
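Several of the parameters above (reg_alpha, reg_lambda, min_split_gain, min_child_weight, min_child_samples) act at the moment a tree decides whether to split a leaf. The following is a simplified sketch of that decision, assuming the standard second-order gain used in gradient-boosted trees (written without the constant 1/2 factor) and an L1 soft-threshold on the gradient sum. It is not LightGBM's actual code, and LeafStats, leaf_score, split_gain and accept_split are hypothetical names. Here min_child_weight is compared with each child's hessian sum, matching its description in the table.

```python
from dataclasses import dataclass


@dataclass
class LeafStats:
    """Gradient statistics of the samples that fall into one leaf (hypothetical type)."""
    grad_sum: float  # G: sum of first-order gradients
    hess_sum: float  # H: sum of second-order gradients (hessians)
    count: int       # number of samples in the leaf


def threshold_l1(g: float, reg_alpha: float) -> float:
    """Soft-threshold the gradient sum by the L1 penalty (reg_alpha)."""
    if g > reg_alpha:
        return g - reg_alpha
    if g < -reg_alpha:
        return g + reg_alpha
    return 0.0


def leaf_output(leaf: LeafStats, reg_alpha: float = 0.0, reg_lambda: float = 0.0) -> float:
    """Regularized leaf value: -T(G) / (H + reg_lambda)."""
    return -threshold_l1(leaf.grad_sum, reg_alpha) / (leaf.hess_sum + reg_lambda)


def leaf_score(leaf: LeafStats, reg_alpha: float, reg_lambda: float) -> float:
    """Loss reduction attributed to a leaf: T(G)^2 / (H + reg_lambda)."""
    t = threshold_l1(leaf.grad_sum, reg_alpha)
    return t * t / (leaf.hess_sum + reg_lambda)


def split_gain(parent: LeafStats, left: LeafStats, right: LeafStats,
               reg_alpha: float = 0.0, reg_lambda: float = 0.0) -> float:
    """Gain of replacing `parent` by the two children `left` and `right`."""
    return (leaf_score(left, reg_alpha, reg_lambda)
            + leaf_score(right, reg_alpha, reg_lambda)
            - leaf_score(parent, reg_alpha, reg_lambda))


def accept_split(parent: LeafStats, left: LeafStats, right: LeafStats, *,
                 min_split_gain: float = 0.0, min_child_weight: float = 1e-3,
                 min_child_samples: int = 20, reg_alpha: float = 0.0,
                 reg_lambda: float = 0.0) -> bool:
    """Check the per-leaf constraints first, then require gain > min_split_gain."""
    for child in (left, right):
        if child.count < min_child_samples or child.hess_sum < min_child_weight:
            return False
    return split_gain(parent, left, right, reg_alpha, reg_lambda) > min_split_gain


parent = LeafStats(grad_sum=-10.0, hess_sum=10.0, count=100)
left = LeafStats(grad_sum=-8.0, hess_sum=4.0, count=40)
right = LeafStats(grad_sum=-2.0, hess_sum=6.0, count=60)
print(accept_split(parent, left, right))                        # True
print(accept_split(parent, left, right, min_child_samples=50))  # False: left has 40 samples
```

With these made-up numbers the unregularized gain is 20/3 (about 6.67); setting reg_lambda=1.0 lowers it to 1648/385 (about 4.28), so a large enough min_split_gain would then reject the same split.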

######################################################################################################

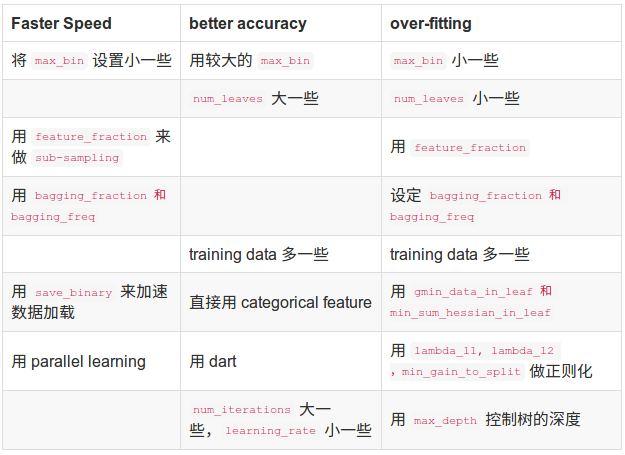

下表对应了Faster Spread,better accuracy,over-fitting三种目的时,可以调整的参数:
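A minimal sketch of how the three goals could translate into constructor arguments. It assumes the figure follows the groups in LightGBM's official parameter-tuning guide (bagging, feature sub-sampling and a small max_bin for speed; a larger max_bin, a smaller learning rate with more trees, and more leaves for accuracy; shallower trees, leaf constraints and regularization against over-fitting), mapped onto the sklearn names from the parameter table above. DEFAULTS, PRESETS and build_params are hypothetical names, and every preset value is an arbitrary illustration, not a recommendation.

```python
# Constructor defaults, copied from the LGBMClassifier signature at the top of this post.
DEFAULTS = {
    "num_leaves": 31, "max_depth": -1, "learning_rate": 0.1, "n_estimators": 10,
    "max_bin": 255, "min_child_samples": 20, "min_child_weight": 1e-3,
    "subsample": 1.0, "subsample_freq": 1, "colsample_bytree": 1.0,
    "reg_alpha": 0.0, "reg_lambda": 0.0, "min_split_gain": 0.0,
}

# Hypothetical presets: each one only moves parameters in the direction
# suggested for that goal; the numbers themselves are arbitrary.
PRESETS = {
    # Faster speed: row bagging, feature sub-sampling, fewer histogram bins.
    "faster_speed": {"subsample": 0.8, "colsample_bytree": 0.8, "max_bin": 63},
    # Better accuracy: more bins, smaller learning rate with more trees, more leaves.
    "better_accuracy": {"max_bin": 511, "learning_rate": 0.05,
                        "n_estimators": 200, "num_leaves": 63},
    # Over-fitting: shallower trees, bigger leaves, sub-sampling, regularization.
    "over_fitting": {"max_bin": 63, "max_depth": 4, "min_child_samples": 50,
                     "min_child_weight": 1.0, "subsample": 0.8,
                     "colsample_bytree": 0.8, "reg_alpha": 0.1,
                     "reg_lambda": 1.0, "min_split_gain": 0.01},
}


def build_params(goal: str) -> dict:
    """Merge a preset over the defaults and keep num_leaves <= 2**max_depth."""
    params = {**DEFAULTS, **PRESETS[goal]}
    if params["max_depth"] > 0:
        # A tree of depth d has at most 2**d leaves, so a larger num_leaves has no effect.
        params["num_leaves"] = min(params["num_leaves"], 2 ** params["max_depth"])
    return params


print(build_params("over_fitting")["num_leaves"])  # 16, capped by max_depth=4
```

The resulting dict could then be unpacked into the constructor, e.g. LGBMClassifier(**build_params("over_fitting")). Lowering max_depth in the over-fitting preset also pulls num_leaves down, which matches the note in the table that max_depth is the first thing to reduce.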

###########################################################################################

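Class attributes:

| Attribute | Type | Description |
| --- | --- | --- |
| n_features_ | int | Number of features |
| classes_ | array of shape = [n_classes] | Array of class labels (classification only) |
| n_classes_ | int | Number of classes (classification only) |
| best_score_ | dict or None | Best score of the fitted model |
| best_iteration_ | int or None | Best iteration of the fitted model, if early_stopping_rounds was specified |
| objective_ | string or callable | The concrete objective used while fitting this model |
| booster_ | Booster | The underlying Booster of this model |
| evals_result_ | dict or None | Evaluation results, if validation sets (eval_set) were specified |
| feature_importances_ | array of shape = [n_features] | Feature importances |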
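best_iteration_ and best_score_ are filled in by early stopping. A minimal sketch of the stopping rule, assuming a single validation set and a single metric (LightGBM tracks every metric on every eval_set entry, and best_score_ is a dict rather than a number). early_stop is a hypothetical helper, and the scores in the example are made up.

```python
def early_stop(scores, early_stopping_rounds, higher_is_better=False):
    """Replay the early-stopping rule over per-round validation scores.

    Returns (best_iteration, best_score, rounds_trained), where
    best_iteration is a 1-based round number.
    """
    best_iter, best_score = 0, None
    for i, score in enumerate(scores, start=1):
        improved = best_score is None or (
            score > best_score if higher_is_better else score < best_score)
        if improved:
            best_iter, best_score = i, score  # new best round
        elif i - best_iter >= early_stopping_rounds:
            return best_iter, best_score, i   # no improvement for N rounds: stop
    return best_iter, best_score, len(scores)


# Hypothetical validation log loss after each round (lower is better).
val_logloss = [0.60, 0.52, 0.47, 0.45, 0.46, 0.455, 0.47, 0.44]
print(early_stop(val_logloss, early_stopping_rounds=3))  # (4, 0.45, 7)
```

With a patience of 3, training stops after round 7 and never reaches the better score at round 8; with a patience of 5 it would. That trade-off is what early_stopping_rounds controls.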

###########################################################################################

Class methods:

fit(X, y, sample_weight=None, init_score=None, eval_set=None, eval_names=None, eval_sample_weight=None, eval_init_score=None, eval_metric='logloss', early_stopping_rounds=None, verbose=True, feature_name='auto', categorical_feature='auto', callbacks=None)

| Parameter | Type | Description |
| --- | --- | --- |
| X | array-like or sparse matrix of shape = [n_samples, n_features] | Input feature matrix |
| y | array-like of shape = [n_samples] | The target values (class labels in classification, real numbers in regression) |
| sample_weight | array-like of shape = [n_samples] or None, optional (default=None) | Weights of the training samples; these can be built with np.where |
| init_score | array-like of shape = [n_samples] or None, optional (default=None) | Initial score of the training data |
| group | array-like of shape = [n_samples] or None, optional (default=None) | Group data of the training data (used for ranking; not part of the LGBMClassifier.fit signature above) |
| eval_set | list or None, optional (default=None) | A list of (X, y) tuple pairs to use as validation sets for early stopping |
| eval_names | list of strings or None, optional (default=None) | Names of eval_set |
| eval_sample_weight | list of arrays or None, optional (default=None) | Weights of the eval data |
| eval_init_score | list of arrays or None, optional (default=None) | Initial score of the eval data |
| eval_group | list of arrays or None, optional (default=None) | Group data of the eval data (ranking only) |
| eval_metric | string, list of strings, callable or None, optional (default="logloss") | Built-in metric name(s) such as "mae", "mse", ..., or a custom callable |
| early_stopping_rounds | int or None, optional (default=None) | Activates early stopping: training stops once the validation score has not improved for this many consecutive rounds |
| verbose | bool, optional (default=True) | If True and an evaluation set is used, print the evaluation progress |
| feature_name | list of strings or 'auto', optional (default="auto") | If 'auto' and the data is a pandas DataFrame, the DataFrame column names are used |
| categorical_feature | list of strings or int, or 'auto', optional (default="auto") | If 'auto' and the data is a pandas DataFrame, pandas categorical columns are used |
| callbacks | list of callback functions or None, optional (default=None) | List of callback functions applied at each iteration |
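eval_metric also accepts a callable. A minimal sketch of one, assuming the sklearn-API convention in which the function receives (y_true, y_pred) and returns a tuple (eval_name, eval_result, is_higher_better), and assuming y_pred holds positive-class probabilities for a binary task. binary_error_at is a hypothetical helper; the is_higher_better flag tells early stopping which direction counts as an improvement.

```python
def binary_error_at(threshold=0.5):
    """Build a custom eval_metric callable (hypothetical helper).

    The returned function follows func(y_true, y_pred) ->
    (eval_name, eval_result, is_higher_better), with y_pred assumed to hold
    positive-class probabilities.
    """
    def error_rate(y_true, y_pred):
        # Count samples whose thresholded prediction disagrees with the label.
        wrong = sum(int((p > threshold) != (t == 1)) for t, p in zip(y_true, y_pred))
        return f"error@{threshold}", wrong / len(y_true), False  # lower is better

    return error_rate


metric = binary_error_at(0.5)
print(metric([0, 1, 1, 0], [0.2, 0.8, 0.4, 0.6]))  # ('error@0.5', 0.5, False)
```

Under these assumptions it would be passed as clf.fit(X_train, y_train, eval_set=[(X_val, y_val)], eval_metric=binary_error_at(0.5)), where clf, X_train, y_train, X_val and y_val are placeholders.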

###############################################################################################

predict_proba(X, raw_score=False, num_iteration=0)

| Parameter | Type | Description |
| --- | --- | --- |
| X | array-like or sparse matrix of shape = [n_samples, n_features] | Input feature matrix |
| raw_score | bool, optional (default=False) | Whether to predict raw scores |
| num_iteration | int, optional (default=0) | Limit the number of iterations used in the prediction; 0 (the default) uses all trees |

Returns: predicted_probability, the predicted probability of each class for each sample.

Return type: array-like of shape = [n_samples, n_classes]
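With raw_score=True the prediction returns untransformed scores instead of probabilities. A minimal sketch of the mapping between the two, assuming the 'binary' objective with LightGBM's default sigmoid scale of 1.0 and the softmax-based 'multiclass' objective (the one-vs-all 'multiclassova' objective would apply a per-class sigmoid instead). sigmoid, binary_proba and softmax are hypothetical helper names.

```python
import math


def sigmoid(raw: float) -> float:
    """Binary objective: P(class 1) from a raw score, written to avoid overflow."""
    if raw >= 0:
        return 1.0 / (1.0 + math.exp(-raw))
    e = math.exp(raw)
    return e / (1.0 + e)


def binary_proba(raw_scores):
    """One [P(class 0), P(class 1)] row per sample, like predict_proba's layout."""
    return [[1.0 - sigmoid(r), sigmoid(r)] for r in raw_scores]


def softmax(raw_scores):
    """Multiclass objective: one raw score per class -> class probabilities."""
    m = max(raw_scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in raw_scores]
    total = sum(exps)
    return [e / total for e in exps]


print(binary_proba([0.0]))       # [[0.5, 0.5]]
print(softmax([1.0, 2.0, 3.0]))  # sums to 1; the largest score gets the largest share
```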

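Parameters for handling class imbalance:

1. A simple approach is to set is_unbalance=True or to set scale_pos_weight; use only one of the two, not both. With is_unbalance=True, the classes are reweighted by their sample counts: relative to a weight of 1 for positive samples, each negative sample gets weight (number of positive samples) / (number of negative samples). This parameter can only be used for binary classification.

2. Custom evaluation function: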

https://cloud.tencent.com/developer/article/1357671
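A minimal sketch of the weighting in item 1, assuming labels encoded as 0 (negative) and 1 (positive). balanced_weights builds per-sample weights with the positive-to-negative ratio described there, which could be passed as sample_weight to fit instead of setting is_unbalance; scale_pos_weight_for returns n_negative / n_positive, a commonly used starting value for scale_pos_weight rather than anything this post prescribes. Both helper names are hypothetical.

```python
from collections import Counter


def balanced_weights(y):
    """Positive samples keep weight 1.0; each negative gets n_pos / n_neg.

    Equivalent to np.where(y == 1, 1.0, n_pos / n_neg) on a NumPy array.
    """
    counts = Counter(y)
    n_pos, n_neg = counts[1], counts[0]
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    neg_weight = n_pos / n_neg
    return [1.0 if label == 1 else neg_weight for label in y]


def scale_pos_weight_for(y):
    """Heuristic starting value for scale_pos_weight: n_neg / n_pos."""
    counts = Counter(y)
    return counts[0] / counts[1]


y = [0, 0, 0, 0, 0, 0, 1, 1]
weights = balanced_weights(y)  # each negative weight is 2/6, so both classes total 2.0
print(scale_pos_weight_for(y))  # 3.0
```

In the signature at the top of this post, is_unbalance and scale_pos_weight are not named arguments, so they would be passed through **kwargs, e.g. LGBMClassifier(is_unbalance=True).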

Summary of how LightGBM works:

http://www.cnblogs.com/gczr/p/9024730.html

Paper translations: https://blog.csdn.net/u010242233/article/details/79769950, https://zhuanlan.zhihu.com/p/42939089

How categorical features are handled: https://blog.csdn.net/anshuai_aw1/article/details/83275299

Comparison of CatBoost, LightGBM and XGBoost:

https://blog.csdn.net/LrS62520kV/article/details/79620615

浙公网安备 33010602011771号