Web Scraping Notes: The Requests Library in Detail (Day 2)

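What is Requests

Requests is an HTTP library written in Python. It is built on urllib3 and released under the Apache 2 license. It is far more convenient than urllib, saves a great deal of work, and covers everyday HTTP testing needs.

In one sentence: it is a simple, easy-to-use HTTP library for Python.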

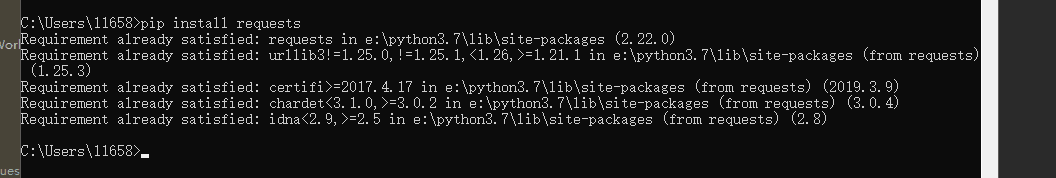

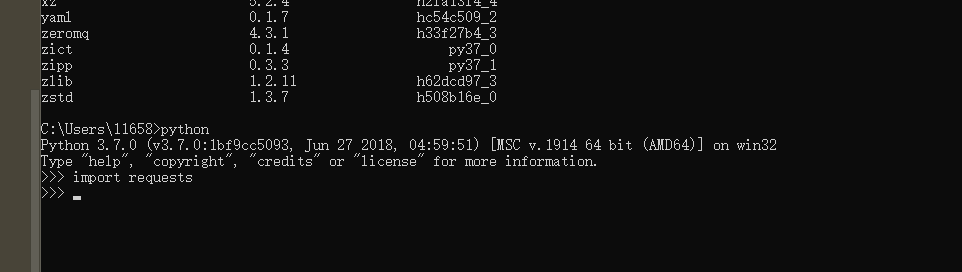

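Install Requests: `pip install requests`

If `import requests` runs in the Python interpreter without an error, the installation succeeded.

A first example

```python
import requests

response = requests.get('https://www.baidu.com')
print(type(response))        # <class 'requests.models.Response'>
print(response.status_code)  # status code
print(type(response.text))   # <class 'str'>
print(response.text)         # response body as text
print(response.cookies)      # cookies set by the server
```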

Request methods

```python
import requests

print(requests.post('http://httpbin.org/post'))      # POST
print(requests.put('http://httpbin.org/put'))        # PUT
print(requests.delete('http://httpbin.org/delete'))  # DELETE
print(requests.head('http://httpbin.org/get'))       # HEAD: headers only, no body
print(requests.options('http://httpbin.org/get'))    # OPTIONS
```
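Each helper above (`requests.post`, `requests.put`, ...) is a shortcut for `requests.request(method, url, **kwargs)`. The minimal sketch below builds one request per method with `requests.Request(...).prepare()` but does not send it, so the method and URL can be inspected offline. The `/anything` URL is only illustrative.

```python
import requests

URL = "http://httpbin.org/anything"  # illustrative URL; nothing is sent

# Build one prepared request per HTTP method without touching the network.
prepared = [
    requests.Request(m, URL).prepare()
    for m in ("get", "post", "put", "delete", "head", "options")
]

for p in prepared:
    # Requests normalizes the method name to upper case.
    print(p.method, p.url)
```

To actually send one of these, `requests.request("PUT", URL)` behaves like `requests.put(URL)`.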

How to use Requests in practice

Basic GET requests

Basic usage

```python
import requests

response = requests.get('http://httpbin.org/get')
print(response.text)  # httpbin echoes back the request headers, origin IP and URL
```

GET request with parameters

```python
import requests

response = requests.get('http://httpbin.org/get?name=germey&age=22')
print(response.text)
```

Parameters can also be passed as a dict through `params`:

```python
import requests

data = {
    'name': 'xiaohu',
    'age': '21'
}
response = requests.get('http://httpbin.org/get', params=data)  # encoded as ?name=xiaohu&age=21
print(response.text)
```
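This sketch builds the request without sending it and prints the final URL, which shows how a `params` dict becomes the query string. The extra keys (`tags`, `debug`, `page`) are hypothetical. A list value repeats the key, a `None` value is dropped, and any query string already in the URL is kept, with the params appended after it.

```python
import requests

# params values may be strings, lists (repeated keys) or None (dropped).
params = {"name": "xiaohu", "age": "21", "tags": ["a", "b"], "debug": None}
prepared = requests.Request("GET", "http://httpbin.org/get", params=params).prepare()
print(prepared.url)
# http://httpbin.org/get?name=xiaohu&age=21&tags=a&tags=b

# An existing query string in the URL is kept and the params are appended.
mixed = requests.Request(
    "GET", "http://httpbin.org/get?page=2", params={"name": "xiaohu"}
).prepare()
print(mixed.url)
# http://httpbin.org/get?page=2&name=xiaohu
```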

Parsing JSON

```python
import requests

response = requests.get('http://httpbin.org/get')
print(type(response.text))    # <class 'str'>
print(response.json())        # parse the JSON body
print(type(response.json()))  # <class 'dict'>
```

What is the difference between `json.loads(response.text)` and calling `response.json()` directly? For a JSON response the result is the same dict.

```python
import requests
import json

response = requests.get('http://httpbin.org/get')
print(type(response.text))
print(response.json())
print(json.loads(response.text))  # same result as response.json()
print(type(response.json()))      # <class 'dict'>
```
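Here is an offline version of the comparison. It assumes a hypothetical local server that stands in for httpbin's `/get` and returns a small JSON object. `trust_env = False` stops proxy environment variables from intercepting the loopback request.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class EchoJSONHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for httpbin's /get: replies with a small JSON object."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "ok": True}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence the default per-request logging


server = HTTPServer(("127.0.0.1", 0), EchoJSONHandler)  # port 0: pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()

session = requests.Session()
session.trust_env = False  # ignore proxy environment variables for the local server
response = session.get(f"http://127.0.0.1:{server.server_port}/get?name=xiaohu")

via_method = response.json()           # decoded by Requests
via_loads = json.loads(response.text)  # decoded manually from the text body
print(via_method == via_loads, type(via_method))

server.shutdown()
server.server_close()
```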

Getting binary data

```python
import requests

response = requests.get('https://github.com/favicon.ico')
print(type(response.text), type(response.content))  # str vs bytes: .content is the raw binary body
print(response.text)     # the bytes decoded as text, garbled for an image
print(response.content)
```

Saving the downloaded image

```python
import requests

response = requests.get('https://github.com/favicon.ico')
with open('favicon', 'wb') as f:  # binary write mode; the with block closes the file
    f.write(response.content)
```

Adding headers: when these notes were written, this site returned a 500 error to requests that lacked a browser-like User-Agent.

```python
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
}
response = requests.get("https://www.zhihu.com/explore", headers=headers)
print(response.text)
```
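Why the header matters: by default Requests identifies itself as `python-requests/<version>`, which some sites reject. The sketch below prepares a request through a `Session`, the same way `requests.get` does internally, and prints the User-Agent that would be sent with and without the custom header. Nothing is sent over the network.

```python
import requests

headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36"
}
url = "https://www.zhihu.com/explore"
session = requests.Session()

# prepare_request merges the session's default headers, as a real send would.
default_req = session.prepare_request(requests.Request("GET", url))
browser_req = session.prepare_request(requests.Request("GET", url, headers=headers))

print(default_req.headers["User-Agent"])  # python-requests/<version>
print(browser_req.headers["User-Agent"])  # the browser-style string above
```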

Basic POST requests

```python
import requests

data = {
    'name': 'xiaohu',
    'age': '21',
    'job': 'IT'
}
response = requests.post('http://httpbin.org/post', data=data)  # sent as form fields
print(response.text)
```

```python
import requests

data = {
    'name': 'xiaohu',
    'age': '21',
    'job': 'IT'
}
headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
}
response = requests.post('http://httpbin.org/post', data=data, headers=headers)
print(response.json())  # httpbin echoes the form fields back as JSON
```
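`data=` sends the dict as an HTML-form body. Requests also accepts `json=`, which serializes the dict as JSON instead. The offline sketch below prepares both variants and prints the body and Content-Type that Requests would send.

```python
import json

import requests

payload = {"name": "xiaohu", "age": "21", "job": "IT"}
url = "http://httpbin.org/post"

form = requests.Request("POST", url, data=payload).prepare()     # form-encoded body
as_json = requests.Request("POST", url, json=payload).prepare()  # JSON body

print(form.headers["Content-Type"])         # application/x-www-form-urlencoded
print(form.body)                            # name=xiaohu&age=21&job=IT
print(as_json.headers["Content-Type"])      # application/json
print(json.loads(as_json.body) == payload)  # True
```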

Responses

Response attributes

```python
import requests

response = requests.get('http://www.jianshu.com')
print(type(response.status_code), response.status_code)  # status code
print(type(response.headers), response.headers)          # response headers
print(type(response.cookies), response.cookies)          # cookies
print(type(response.url), response.url)                  # final URL after any redirects
print(type(response.history), response.history)          # redirect history
```
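The call above depends on a live site. As an offline sketch, a hypothetical local server redirects `/old` to `/new`, which shows what `url`, `headers` and `history` hold after a redirect. `trust_env = False` keeps proxy environment variables away from the loopback request.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class RedirectHandler(BaseHTTPRequestHandler):
    """Hypothetical local site: /old redirects to /new, /new returns plain text."""

    def do_GET(self):
        if self.path == "/old":
            self.send_response(302)
            self.send_header("Location", "/new")
            self.send_header("Content-Length", "0")
            self.end_headers()
            return
        body = b"final page"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


server = HTTPServer(("127.0.0.1", 0), RedirectHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

session = requests.Session()
session.trust_env = False  # talk to the local server directly, not via env proxies
response = session.get(f"http://127.0.0.1:{server.server_port}/old")

print(response.status_code, response.url)         # 200 and the /new URL
print(response.headers["content-type"])           # header lookup is case-insensitive
print([r.status_code for r in response.history])  # the intermediate 302 response

server.shutdown()
server.server_close()
```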

Advanced usage

File upload

```python
import requests

with open('favicon', 'rb') as f:  # the file saved in the earlier example
    files = {'file': f}
    response = requests.post('http://httpbin.org/post', files=files)
print(response.text)
```
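`files=` makes Requests build a `multipart/form-data` body. In the sketch below, each value is a `(filename, content, content type)` tuple. The four bytes are placeholder data, not a real icon. The request is only prepared, so its encoded form can be inspected without uploading anything.

```python
import requests

# (filename, content, content type); the bytes are placeholder data.
files = {"file": ("favicon.ico", b"\x00\x00\x01\x00", "image/x-icon")}
prepared = requests.Request("POST", "http://httpbin.org/post", files=files).prepare()

print(prepared.headers["Content-Type"])            # multipart/form-data; boundary=...
print(b'filename="favicon.ico"' in prepared.body)  # True
```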

Getting cookies

```python
import requests

response = requests.get('https://www.baidu.com')
print(response.cookies)        # a RequestsCookieJar
print(type(response.cookies))
for key, value in response.cookies.items():
    print(f'{key}={value}')
```
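`response.cookies` is a `RequestsCookieJar`, and the same class can be filled by hand and passed back through `cookies=`. The sketch below reuses the cookie name and value from the session example further down. It converts the jar to a plain dict and shows the `Cookie` header that Requests would send, without contacting the site.

```python
import requests
from requests.cookies import RequestsCookieJar
from requests.utils import dict_from_cookiejar

jar = RequestsCookieJar()
jar.set("number", "1165872335", domain="httpbin.org", path="/")

print(jar.get("number"))         # 1165872335
print(dict_from_cookiejar(jar))  # {'number': '1165872335'}

# Passing the jar via cookies= turns it into a Cookie request header.
prepared = requests.Request("GET", "http://httpbin.org/cookies", cookies=jar).prepare()
print(prepared.headers["Cookie"])  # number=1165872335
```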

Maintaining a session

```python
import requests

requests.get('http://httpbin.org/cookies/set/number/1165872335')  # ask the site to set a cookie
response = requests.get('http://httpbin.org/cookies')             # then read the cookies back
print(response.text)  # empty: the two calls are independent, like two separate browsers,
                      # so the cookie set by the first is not sent with the second
```

Improved version

```python
import requests

s = requests.Session()  # one Session behaves like one browser
s.get('http://httpbin.org/cookies/set/number/1165872335')  # set the cookie...
response = s.get('http://httpbin.org/cookies')             # ...and read it back in the same session
print(response.text)  # now contains the cookie
```
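A `Session` keeps a cookie jar and default headers, and it merges both into every request it prepares. The offline sketch below compares a request prepared through a session with a standalone one. The User-Agent value `my-crawler/0.1` is hypothetical.

```python
import requests

session = requests.Session()
session.headers.update({"User-Agent": "my-crawler/0.1"})  # hypothetical UA string
session.cookies.set("number", "1165872335")

# prepare_request merges the session's headers and cookie jar into the request.
with_session = session.prepare_request(requests.Request("GET", "http://httpbin.org/cookies"))
without_session = requests.Request("GET", "http://httpbin.org/cookies").prepare()

print(with_session.headers.get("Cookie"))     # number=1165872335
print(with_session.headers["User-Agent"])     # my-crawler/0.1
print(without_session.headers.get("Cookie"))  # None
```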

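Certificate verification

```python
import requests
import urllib3

# Suppress the InsecureRequestWarning that verify=False would otherwise emit
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

response = requests.get('https://www.12306.cn', verify=False)  # skip certificate verification
print(response.status_code)
```

To present a client certificate, pass `cert` as a (certificate file, key file) tuple. This is meant for servers that require client authentication; it does not replace verification of the server's certificate.

```python
import requests

response = requests.get('https://www.12306.cn', cert=('/path/server.crt', '/path/key'))  # client cert and private key
print(response.status_code)
```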

Proxy settings

```python
# SOCKS proxies need the optional extra: pip install requests[socks]
import requests

proxies = {
    "http": "socks5://127.0.0.1:8080",
    "https": "socks5://127.0.0.1:8080",
}

response = requests.get('https://www.taobao.com', proxies=proxies)
print(response.status_code)
```
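How Requests picks an entry from `proxies`: a `scheme://host` key takes priority over a plain scheme key. The sketch below uses `select_proxy`, a helper in `requests.utils` that Requests relies on internally. It is not part of the main documented API, so treat its location as version-dependent. The per-host override and its address are hypothetical.

```python
from requests.utils import select_proxy

proxies = {
    "http": "socks5://127.0.0.1:8080",
    "https": "socks5://127.0.0.1:8080",
    # Hypothetical per-host override: scheme://host keys win over plain scheme keys.
    "https://www.taobao.com": "http://127.0.0.1:3128",
}

print(select_proxy("https://www.taobao.com/", proxies))  # http://127.0.0.1:3128
print(select_proxy("https://example.com/", proxies))     # socks5://127.0.0.1:8080
print(select_proxy("ftp://example.com/", proxies))       # None
```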

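Timeout settings

```python
import requests
from requests.exceptions import Timeout  # covers both ConnectTimeout and ReadTimeout

try:
    # timeout=0.2: give up if connecting, or waiting for data, takes longer than 0.2 s
    response = requests.get('https://www.httpbin.org/get', timeout=0.2)
    print(response.status_code)
except Timeout:
    print("timeout")
```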
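`timeout` also accepts a `(connect, read)` tuple. The sketch below assumes a hypothetical local endpoint that waits 1 s before answering. With a 0.2 s read timeout, the connection succeeds but reading the response fails with `ReadTimeout`. `trust_env = False` keeps proxy environment variables out of the loopback request.

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

import requests


class SlowHandler(BaseHTTPRequestHandler):
    """Hypothetical slow endpoint: waits 1 s before answering."""

    def do_GET(self):
        time.sleep(1)
        try:
            self.send_response(200)
            self.send_header("Content-Length", "0")
            self.end_headers()
        except OSError:
            pass  # the client has usually given up and closed the socket by now

    def log_message(self, *args):
        pass  # keep the console quiet


server = ThreadingHTTPServer(("127.0.0.1", 0), SlowHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

session = requests.Session()
session.trust_env = False  # reach the local server directly
try:
    # (connect timeout, read timeout) in seconds
    session.get(f"http://127.0.0.1:{server.server_port}/", timeout=(3, 0.2))
    outcome = "ok"
except requests.exceptions.ConnectTimeout:
    outcome = "connect timeout"
except requests.exceptions.ReadTimeout:
    outcome = "read timeout"
print(outcome)

server.shutdown()
server.server_close()
```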

Authentication

```python
import requests
from requests.auth import HTTPBasicAuth

r = requests.get('http://127.27.34.24:9001', auth=HTTPBasicAuth('user', '123'))
print(r.status_code)

# Alternative: a (user, password) tuple is shorthand for HTTPBasicAuth
r = requests.get('http://127.27.34.24:9001', auth=('user', '123'))
print(r.status_code)
```
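Basic authentication is an `Authorization: Basic <base64(user:password)>` header. The offline sketch below prepares the request both ways and checks that the tuple form and `HTTPBasicAuth` produce the same header. The address is the one from the example above, and nothing is sent to it.

```python
import base64

import requests
from requests.auth import HTTPBasicAuth

url = "http://127.27.34.24:9001"  # address from the example above; nothing is sent

tuple_auth = requests.Request("GET", url, auth=("user", "123")).prepare()
class_auth = requests.Request("GET", url, auth=HTTPBasicAuth("user", "123")).prepare()
expected = "Basic " + base64.b64encode(b"user:123").decode("ascii")

print(tuple_auth.headers["Authorization"])  # Basic dXNlcjoxMjM=
print(tuple_auth.headers["Authorization"] == class_auth.headers["Authorization"] == expected)
```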

Exception handling

```python
import requests
from requests.exceptions import ReadTimeout, HTTPError, RequestException, ConnectionError

try:
    response = requests.get('http://httpbin.org/get', timeout=0.2)
    response.raise_for_status()  # turn 4xx/5xx status codes into HTTPError
    print(response.status_code)
except ReadTimeout:
    print('Timeout')
except HTTPError:
    print('Http error')
except ConnectionError:
    print('Connection Error')
except RequestException:  # base class: catches anything not handled above, so it goes last
    print('error')
```
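The order of the `except` clauses follows the exception hierarchy: the specific classes come first and `RequestException`, their common base, comes last. A quick offline check of that hierarchy follows. Importing the module as `exc` avoids shadowing Python's built-in `ConnectionError`.

```python
import requests.exceptions as exc

# Each pair: (subclass, expected base class)
checks = [
    (exc.ReadTimeout, exc.Timeout),
    (exc.ConnectTimeout, exc.Timeout),
    (exc.ConnectTimeout, exc.ConnectionError),
    (exc.Timeout, exc.RequestException),
    (exc.ConnectionError, exc.RequestException),
    (exc.HTTPError, exc.RequestException),
    (exc.RequestException, IOError),
]
for sub, base in checks:
    print(f"{sub.__name__} is a {base.__name__}: {issubclass(sub, base)}")
```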