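Setting Up a ZooKeeper Cluster

ZooKeeper download page: https://zookeeper.apache.org/releases.html

Building the ZooKeeper Image

Upload the archive to the server and extract it:

Link: https://pan.baidu.com/s/1z9j57t1joScozOjxnqzB3w

Extraction code: hkc9

Pull the slim_java:8 image and push it to Harbor (ZooKeeper needs a JDK environment):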

    nerdctl pull elevy/slim_java:8
    nerdctl tag elevy/slim_java:8 harbor.wyh.net/test-sy/slim_java:8
    nerdctl push harbor.wyh.net/test-sy/slim_java:8

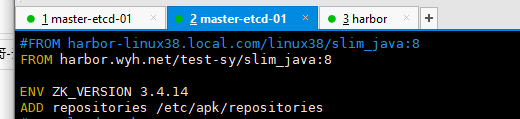

Edit the Dockerfile so that its base image (the FROM line) points to harbor.wyh.net/test-sy/slim_java:8.
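The Dockerfile edit can also be scripted. The following is a minimal sketch, assuming a single-stage Dockerfile whose base image is set by a line starting with `FROM`; `set_base_image` is a hypothetical helper name, not part of the downloaded archive.

```shell
#!/usr/bin/env bash
# set_base_image FILE IMAGE
# Rewrite every line that starts with "FROM " so it references IMAGE.
# Assumption: a single-stage Dockerfile without "AS <name>" build stages.
set_base_image() {
  local dockerfile="$1" image="$2" tmp
  tmp="$(mktemp)"
  # '#' is used as the sed delimiter because image references contain '/'.
  sed "s#^FROM .*#FROM ${image}#" "$dockerfile" > "$tmp" || return 1
  # Copy the content back instead of moving the temp file, so the
  # Dockerfile keeps its original permissions.
  cat "$tmp" > "$dockerfile"
  rm -f "$tmp"
}

# Usage (run in the directory that holds the Dockerfile):
#   set_base_image Dockerfile harbor.wyh.net/test-sy/slim_java:8
```

Checking the result with `head -n 1 Dockerfile` before building is a cheap way to confirm the rewrite did what was intended.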

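Edit build-command.sh (the image build script) so that it builds the image and pushes it to Harbor. The heredoc delimiter is quoted ('EOF') so that $1 and ${TAG} are written into the script literally rather than being expanded by the current shell:

    cat <<'EOF' >build-command.sh
    #!/bin/bash
    # Usage: bash build-command.sh <tag>
    TAG=$1
    #docker build -t harbor.magedu.net/magedu/zookeeper:${TAG} .
    #sleep 1
    #docker push harbor.magedu.net/magedu/zookeeper:${TAG}
    nerdctl build -t harbor.wyh.net/test-sy/zookeeper:${TAG} .
    nerdctl push harbor.wyh.net/test-sy/zookeeper:${TAG}
    EOF
    chmod +x build-command.sh
    bash build-command.sh v20220817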

Running the Service

On the storage server (the NFS shared directory), create the directories that ZooKeeper will use:

    mkdir -p /data/kubernetes/wyh/{zookeeper-datadir-1,zookeeper-datadir-2,zookeeper-datadir-3}

Create the PVs:

    cat <<EOF >zookeeper-pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-datadir-pv-1
    spec:
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteOnce
      nfs:
        server: 192.168.213.21
        path: /data/kubernetes/wyh/zookeeper-datadir-1
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-datadir-pv-2
    spec:
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteOnce
      nfs:
        server: 192.168.213.21
        path: /data/kubernetes/wyh/zookeeper-datadir-2
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-datadir-pv-3
    spec:
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteOnce
      nfs:
        server: 192.168.213.21
        path: /data/kubernetes/wyh/zookeeper-datadir-3
    EOF
    kubectl apply -f zookeeper-pv.yaml

Create the PVCs and bind each one to its PV:

    cat <<EOF >zookeeper-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-datadir-pvc-1
      namespace: wyh-ns
    spec:
      accessModes:
        - ReadWriteOnce
      volumeName: zookeeper-datadir-pv-1
      resources:
        requests:
          storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-datadir-pvc-2
      namespace: wyh-ns
    spec:
      accessModes:
        - ReadWriteOnce
      volumeName: zookeeper-datadir-pv-2
      resources:
        requests:
          storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-datadir-pvc-3
      namespace: wyh-ns
    spec:
      accessModes:
        - ReadWriteOnce
      volumeName: zookeeper-datadir-pv-3
      resources:
        requests:
          storage: 5Gi
    EOF
    kubectl apply -f zookeeper-pvc.yaml
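Before deploying, it is worth confirming that every claim actually reached the Bound phase. The sketch below is one possible check; `list_pvc_phases` and `all_bound` are hypothetical helper names, and the only external call is `kubectl get pvc` with a JSONPath output template.

```shell
#!/usr/bin/env bash
# Print one "name phase" line for every PVC in the given namespace.
list_pvc_phases() {
  kubectl get pvc -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}'
}

# Read "name phase" lines on stdin. Succeed only if at least one claim
# was listed and every listed claim is in the Bound phase.
all_bound() {
  awk 'BEGIN { n = 0; bad = 0 }
       NF >= 2 { n++; if ($2 != "Bound") { bad++; print "not bound: " $1 } }
       END { exit ((n == 0 || bad > 0) ? 1 : 0) }'
}

# Usage:
#   list_pvc_phases wyh-ns | all_bound && echo "all claims are bound"
```

A claim that stays Pending usually points to a mismatch between the PVC and its PV (name, capacity, or access mode), which `kubectl describe pvc` will show in its events.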

Create the ZooKeeper deployment manifest:

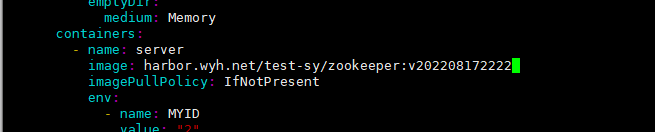

    cat <<EOF >zookeeper-deploy.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper
      namespace: wyh-ns
    spec:
      ports:
        - name: client
          port: 2181
      selector:
        app: zookeeper
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper1
      namespace: wyh-ns
    spec:
      type: NodePort
      ports:
        - name: client
          port: 2181
          nodePort: 32181
        - name: followers
          port: 2888
        - name: election
          port: 3888
      selector:
        app: zookeeper
        server-id: "1"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper2
      namespace: wyh-ns
    spec:
      type: NodePort
      ports:
        - name: client
          port: 2181
          nodePort: 32182
        - name: followers
          port: 2888
        - name: election
          port: 3888
      selector:
        app: zookeeper
        server-id: "2"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper3
      namespace: wyh-ns
    spec:
      type: NodePort
      ports:
        - name: client
          port: 2181
          nodePort: 32183
        - name: followers
          port: 2888
        - name: election
          port: 3888
      selector:
        app: zookeeper
        server-id: "3"
    ---
    kind: Deployment
    #apiVersion: extensions/v1beta1
    apiVersion: apps/v1
    metadata:
      name: zookeeper1
      namespace: wyh-ns
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "1"
        spec:
          # A single volumes list: a repeated "volumes" key is invalid YAML.
          # data and wal are declared but not mounted by the container.
          volumes:
            - name: data
              emptyDir: {}
            - name: wal
              emptyDir:
                medium: Memory
            - name: zookeeper-datadir-pvc-1
              persistentVolumeClaim:
                claimName: zookeeper-datadir-pvc-1
          containers:
            - name: server
              image: harbor.wyh.net/test-sy/zookeeper:v20220817
              imagePullPolicy: IfNotPresent
              env:
                - name: MYID
                  value: "1"
                - name: SERVERS
                  value: "zookeeper1,zookeeper2,zookeeper3"
                - name: JVMFLAGS
                  value: "-Xmx2G"
              ports:
                - containerPort: 2181
                - containerPort: 2888
                - containerPort: 3888
              volumeMounts:
                - mountPath: "/zookeeper/data"
                  name: zookeeper-datadir-pvc-1
    ---
    kind: Deployment
    #apiVersion: extensions/v1beta1
    apiVersion: apps/v1
    metadata:
      name: zookeeper2
      namespace: wyh-ns
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "2"
        spec:
          volumes:
            - name: data
              emptyDir: {}
            - name: wal
              emptyDir:
                medium: Memory
            - name: zookeeper-datadir-pvc-2
              persistentVolumeClaim:
                claimName: zookeeper-datadir-pvc-2
          containers:
            - name: server
              image: harbor.wyh.net/test-sy/zookeeper:v20220817
              imagePullPolicy: IfNotPresent
              env:
                - name: MYID
                  value: "2"
                - name: SERVERS
                  value: "zookeeper1,zookeeper2,zookeeper3"
                - name: JVMFLAGS
                  value: "-Xmx2G"
              ports:
                - containerPort: 2181
                - containerPort: 2888
                - containerPort: 3888
              volumeMounts:
                - mountPath: "/zookeeper/data"
                  name: zookeeper-datadir-pvc-2
    ---
    kind: Deployment
    #apiVersion: extensions/v1beta1
    apiVersion: apps/v1
    metadata:
      name: zookeeper3
      namespace: wyh-ns
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "3"
        spec:
          volumes:
            - name: data
              emptyDir: {}
            - name: wal
              emptyDir:
                medium: Memory
            - name: zookeeper-datadir-pvc-3
              persistentVolumeClaim:
                claimName: zookeeper-datadir-pvc-3
          containers:
            - name: server
              image: harbor.wyh.net/test-sy/zookeeper:v20220817
              imagePullPolicy: IfNotPresent
              env:
                - name: MYID
                  value: "3"
                - name: SERVERS
                  value: "zookeeper1,zookeeper2,zookeeper3"
                - name: JVMFLAGS
                  value: "-Xmx2G"
              ports:
                - containerPort: 2181
                - containerPort: 2888
                - containerPort: 3888
              volumeMounts:
                - mountPath: "/zookeeper/data"
                  name: zookeeper-datadir-pvc-3
    EOF
    kubectl apply -f zookeeper-deploy.yaml
    kubectl get pod -n wyh-ns | grep zookeeper

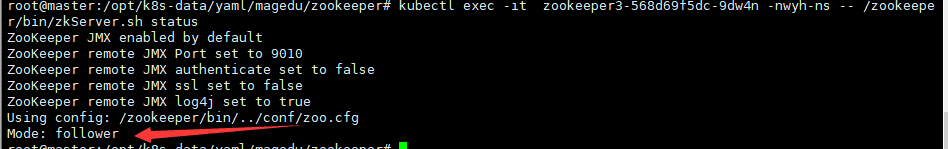
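The manifest passes `MYID` and `SERVERS` to each container, and the per-node Services expose ports 2888 and 3888. The image's entrypoint script is not shown in these notes, so the following is only a sketch of what such a script plausibly does: turn `SERVERS` into the `server.N=host:2888:3888` lines that zoo.cfg expects. `gen_server_lines` is a hypothetical name, and the assumption that N is the 1-based position in the list is mine.

```shell
#!/usr/bin/env bash
# gen_server_lines "host1,host2,..."
# Emit one zoo.cfg line per host: server.N=host:2888:3888
# 2888 is the follower (quorum) port and 3888 the election port.
# Assumption: N is the host's 1-based position and must equal that pod's MYID.
gen_server_lines() {
  local servers="$1" host id=1
  local IFS=','            # split the list on commas inside this function only
  for host in $servers; do
    echo "server.${id}=${host}:2888:3888"
    id=$((id + 1))
  done
}

gen_server_lines "zookeeper1,zookeeper2,zookeeper3"
```

Under this assumption the host names are the Service names zookeeper1 to zookeeper3, which is why each pod needs its own Service: the peers reach each other through cluster DNS rather than pod IPs.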

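Verifying the Cluster

    kubectl exec -it zookeeper3-568d69f5dc-9dw4n -n wyh-ns -- /zookeeper/bin/zkServer.sh status

The output reports Mode: follower for this node. A standalone server would report Mode: standalone, so the presence of a follower shows that the nodes have formed a cluster.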
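Pod names change on every rollout, so checking each node by hand gets tedious. The sketch below loops over all pods labelled `app=zookeeper` and prints each one's role; `zk_mode` and `zk_cluster_modes` are hypothetical helper names, and the script path is the one used in the command above.

```shell
#!/usr/bin/env bash
# Extract the role (leader, follower, standalone) from the output of
# `zkServer.sh status` read on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p' | tr -d '\r'
}

# Print "<pod> <role>" for every ZooKeeper pod in the namespace.
zk_cluster_modes() {
  local ns="$1" pod
  for pod in $(kubectl get pods -n "$ns" -l app=zookeeper -o name); do
    echo "${pod#pod/} $(kubectl exec -n "$ns" "$pod" -- \
      /zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode)"
  done
}

# Usage:
#   zk_cluster_modes wyh-ns
```

A healthy three-node cluster should list exactly one leader and two followers. A pod that prints no role at all is either not running or has not joined the quorum yet.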

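Test whether a new leader is elected when the current leader fails. Node 2 is the leader at this point, so node 2 is the one to break.

In zookeeper-deploy.yaml, change the image of the zookeeper2 Deployment to one that does not exist, then re-apply the manifest:

    kubectl apply -f zookeeper-deploy.yaml
    kubectl get pod -n wyh-ns | grep zookeeper

Check which node is the leader now, using the same zkServer.sh status command on the remaining pods.

Node 3 has become the leader, so re-election works and the cluster is set up correctly.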

######################################################################################################

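ZooKeeper cluster: ZooKeeper works on a majority (quorum) mechanism. More than half of the nodes in the cluster must be alive for it to keep serving, which is why clusters use an odd number of nodes (at least three): an even-sized cluster tolerates no more failures than the odd-sized cluster with one node fewer.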
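The arithmetic behind the odd-number recommendation is small enough to compute directly. This sketch uses two helper functions whose names are made up for illustration.

```shell
#!/usr/bin/env bash
# Smallest number of live nodes that is strictly more than half of $1.
quorum_size() { echo $(( $1 / 2 + 1 )); }

# How many nodes may fail while a quorum of the remaining nodes survives.
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 3 4 5; do
  echo "nodes=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
# nodes=3 quorum=2 tolerated_failures=1
# nodes=4 quorum=3 tolerated_failures=1
# nodes=5 quorum=3 tolerated_failures=2
```

Going from three nodes to four raises the quorum without raising the number of failures the cluster survives, so the fourth node adds cost but no extra fault tolerance.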

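Node ID (myid): the number assigned to each node, which uniquely identifies it within the cluster.

Transaction ID (zxid): ZooKeeper assigns a transaction ID to every state-changing request. The leader allocates all of them, so they are globally unique and strictly increasing.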
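As background that the notes above do not spell out: a zxid is a 64-bit number whose high 32 bits hold the leader epoch and whose low 32 bits hold a counter within that epoch. The sketch below splits a zxid into the two parts; the function names and the sample value are made up for illustration.

```shell
#!/usr/bin/env bash
# High 32 bits: the epoch, which increases each time a new leader takes over.
zxid_epoch() { echo $(( $1 >> 32 )); }

# Low 32 bits: the per-epoch transaction counter.
zxid_counter() { echo $(( $1 & 0xffffffff )); }

zxid=0x200000005   # sample value: epoch 2, fifth transaction of that epoch
echo "epoch=$(zxid_epoch "$zxid") counter=$(zxid_counter "$zxid")"
# epoch=2 counter=5
```

Because the epoch sits in the high bits, any transaction from a newer leader compares greater than every transaction from an older one, which keeps zxids increasing across leader changes.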

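Server roles in the cluster:

- leader: the primary node
- follower: a secondary node
- observer: an observer node

Node states:

- LOOKING: the node is looking for a leader, meaning an election is in progress
- FOLLOWING: the node is a follower
- LEADING: the node is the leader
- OBSERVING: the node is an observer (it does not take part in election voting)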

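When elections happen:

- When the ZooKeeper cluster is first initialized
- When the leader fails or becomes unreachable

Preconditions for an election:

- More than half of the cluster's nodes must be running. A 3-node cluster needs at least 2 healthy nodes, and a 5-node cluster needs at least 3.
- The nodes are in the LOOKING state, which means the cluster currently has no leader.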

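Initial election. As an example, take a cluster of five nodes, a (myid=1), b (myid=2), c (myid=3), d (myid=4) and e (myid=5), started in that order:

- a votes for itself. It has 1 vote, which is not more than half, so a stays in LOOKING.
- b votes for itself and then exchanges vote information with a. a sees that b's myid is larger than its own and switches its vote to b. a now has 0 votes and b has 2, which is still not more than half, so both a and b stay in LOOKING.
- c starts an election. In the same way, a and b give their votes to c. c now has 3 votes, which is more than half, so c switches to LEADING and a and b switch to FOLLOWING.
- d starts an election. Because a and b are no longer in LOOKING, d has only its own vote. The minority yields to the majority, so d also gives its vote to c and becomes FOLLOWING.
- e behaves the same way as d.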
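The walkthrough above can be sketched as a small simulation. It is a deliberately simplified model and not ZooKeeper's actual election code: it assumes nodes start one at a time in myid order, every node starts with the same (empty) transaction history, and each LOOKING node votes for the largest myid it has seen. `simulate_startup_election` is a hypothetical name.

```shell
#!/usr/bin/env bash
# simulate_startup_election TOTAL
# Nodes 1..TOTAL start one after another; print each step and the leader.
simulate_startup_election() {
  local total="$1"
  local quorum=$(( total / 2 + 1 ))
  local leader=0 started votes
  for (( started = 1; started <= total; started++ )); do
    if (( leader != 0 )); then
      # A leader already exists, so the newcomer simply follows it.
      echo "node $started starts: leader is $leader, node $started becomes FOLLOWING"
      continue
    fi
    # All started nodes are LOOKING and vote for the largest myid seen,
    # which is the node that has just started.
    votes=$started
    if (( votes >= quorum )); then
      leader=$started
      echo "node $started starts: $votes votes, quorum $quorum reached, becomes LEADING"
    else
      echo "node $started starts: $votes votes, quorum $quorum not reached, stays LOOKING"
    fi
  done
  echo "leader=$leader"
}

simulate_startup_election 5
```

With five nodes the last line printed is `leader=3`, matching the example where c wins. With three nodes the same model gives `leader=2`, though pods in Kubernetes may start in any order, so the real outcome can differ.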

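Re-election after a leader failure:

- The zxid is compared first: the node with the largest zxid, and therefore the most recent data, becomes the leader.
- If the zxids are equal, the myid is compared and the node with the larger myid wins.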
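The two comparison rules can be written as a short ordering function. This is a sketch of the rule as stated above, not ZooKeeper's implementation; `vote_wins` and `pick_leader` are hypothetical names, and candidates are passed as `myid:zxid` pairs with decimal zxids.

```shell
#!/usr/bin/env bash
# vote_wins ZXID_A MYID_A ZXID_B MYID_B
# Succeeds if candidate A beats candidate B: larger zxid first,
# larger myid as the tie-breaker.
vote_wins() {
  local zxid_a="$1" myid_a="$2" zxid_b="$3" myid_b="$4"
  if (( zxid_a != zxid_b )); then
    (( zxid_a > zxid_b ))
  else
    (( myid_a > myid_b ))
  fi
}

# pick_leader "myid:zxid" ...
# Print the myid of the candidate that wins against all the others.
pick_leader() {
  local best_id="" best_zxid="" cand id zxid
  for cand in "$@"; do
    id="${cand%%:*}"
    zxid="${cand##*:}"
    if [[ -z "$best_id" ]] || vote_wins "$zxid" "$id" "$best_zxid" "$best_id"; then
      best_id="$id"
      best_zxid="$zxid"
    fi
  done
  echo "$best_id"
}

# Leader 2 has failed; nodes 1 and 3 remain.
pick_leader 1:100 3:100   # same zxid, so the larger myid wins: prints 3
pick_leader 1:101 3:100   # node 1 has newer data, so it wins: prints 1
```

The first call mirrors the failover test earlier in these notes, where node 3 took over after node 2 was broken.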