Assignment 2

Task 1

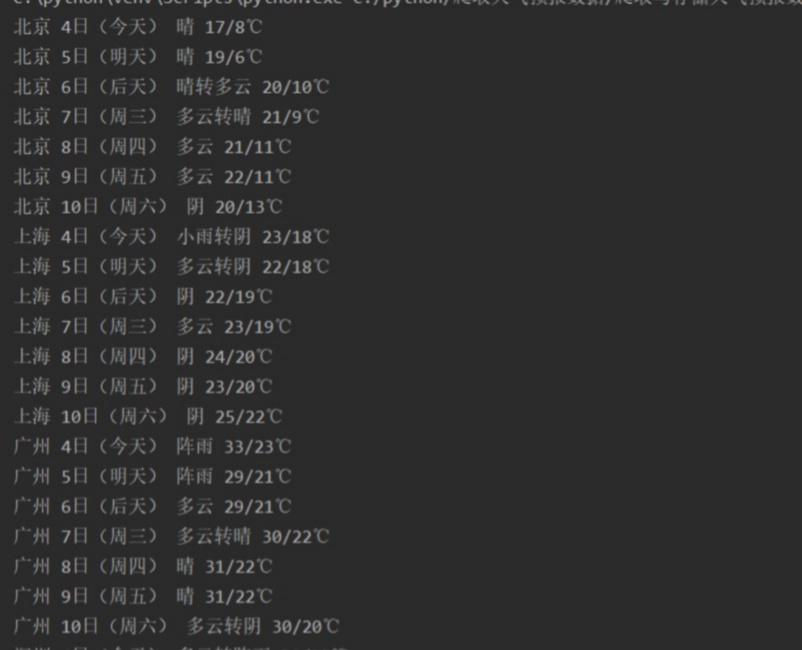

(1) Scrape the 7-day weather forecast for a given set of cities from the China Weather Network (http://www.weather.com.cn) and save it to a database.

```python
from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


class WeatherDB:
    def openDB(self):
        self.con = sqlite3.connect("weathers.db")
        self.cursor = self.con.cursor()
        try:
            # First run: create the table, keyed on (city, date)
            self.cursor.execute("create table weathers (wNum varchar(16),wCity varchar(16),"
                                "wDate varchar(16),wWeather varchar(64),wTemp varchar(32),"
                                "constraint pk_weather primary key (wCity,wDate))")
        except sqlite3.OperationalError:
            # Table already exists: clear the rows left by the previous run
            self.cursor.execute("delete from weathers")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, num, city, date, weather, temp):
        try:
            self.cursor.execute("insert into weathers (wNum,wCity,wDate,wWeather,wTemp) "
                                "values (?,?,?,?,?)", (num, city, date, weather, temp))
        except Exception as err:
            print(err)

    def show(self):
        self.cursor.execute("select * from weathers")
        rows = self.cursor.fetchall()
        print("%-16s%-16s%-16s%-32s%-16s" % ("No.", "City", "Date", "Weather", "Temperature"))
        for row in rows:
            print("%-16s%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3], row[4]))


class WeatherForecast:
    def __init__(self):
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) "
                          "Gecko/2008072421 Minefield/3.0.2pre"
        }
        # City name -> weather.com.cn city code
        self.cityCode = {"北京": "101010100", "上海": "101020100",
                         "广州": "101280101", "深圳": "101280601"}

    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + " code cannot be found")
            return
        url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req).read()
            # Detect the page encoding (UTF-8 or GBK) and decode it to str
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            soup = BeautifulSoup(dammit.unicode_markup, "lxml")
            lis = soup.select("ul[class='t clearfix'] li")  # one <li> per forecast day
            num = 0
            for li in lis:
                try:
                    num = num + 1
                    date = li.select("h1")[0].text
                    weather = li.select('p[class="wea"]')[0].text
                    temp = (li.select('p[class="tem"] span')[0].text + "/"
                            + li.select('p[class="tem"] i')[0].text)
                    print(num, city, date, weather, temp)
                    self.db.insert(num, city, date, weather, temp)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def process(self, cities):
        self.db = WeatherDB()
        self.db.openDB()
        for city in cities:
            self.forecastCity(city)
        self.db.show()
        self.db.closeDB()


ws = WeatherForecast()
ws.process(["北京", "上海", "广州", "深圳"])
print("Done")
```
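The code locates each forecast day with the BeautifulSoup selectors `ul[class='t clearfix'] li`, `h1`, `p[class="wea"]` and `p[class="tem"] span` / `i`. To make that assumed page structure explicit without network access or `lxml`, here is a minimal standard-library sketch using `html.parser`. The HTML snippet and the `ForecastParser` class are hypothetical: the markup is a simplified stand-in inferred from those selectors, not copied from weather.com.cn.

```python
from html.parser import HTMLParser


class ForecastParser(HTMLParser):
    """Hypothetical stdlib sketch: collect (date, weather, temp) from each
    <li> inside <ul class="t clearfix">, mirroring the selectors in the code."""

    def __init__(self):
        super().__init__()
        self.in_list = False  # inside <ul class="t clearfix">
        self.in_tem = False   # inside <p class="tem">
        self.field = None     # key in self.current that incoming text belongs to
        self.current = {}
        self.days = []

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class") or ""
        if tag == "ul" and cls == "t clearfix":
            self.in_list = True
        if not self.in_list:
            return
        if tag == "li":
            self.current = {"date": "", "wea": "", "high": "", "low": ""}
        elif tag == "h1":
            self.field = "date"
        elif tag == "p":
            self.in_tem = cls == "tem"
            self.field = "wea" if cls == "wea" else None
        elif self.in_tem and tag == "span":  # like 'p[class="tem"] span'
            self.field = "high"
        elif self.in_tem and tag == "i":     # like 'p[class="tem"] i'
            self.field = "low"

    def handle_endtag(self, tag):
        if not self.in_list:
            return
        if tag == "p":
            self.in_tem = False
        elif tag == "li":
            c = self.current
            # Join as "high/low"; fall back to the low alone if no <span> was present
            temp = c["high"] + "/" + c["low"] if c["high"] else c["low"]
            self.days.append((c["date"], c["wea"], temp))
        elif tag == "ul":
            self.in_list = False
        self.field = None

    def handle_data(self, data):
        if self.in_list and self.field:
            self.current[self.field] += data.strip()


# Hypothetical, simplified markup assumed from the selectors; not real page content
SAMPLE = """
<ul class="t clearfix">
  <li><h1>7日(今天)</h1><p class="wea">晴</p>
      <p class="tem"><span>30</span>/<i>22℃</i></p>
      <p class="win"><i>3-4级</i></p></li>
  <li><h1>8日(明天)</h1><p class="wea">多云</p>
      <p class="tem"><i>21℃</i></p></li>
</ul>
"""

parser = ForecastParser()
parser.feed(SAMPLE)
for day in parser.days:
    print(day)
```

Tracking `in_tem` matters because, in this assumed markup, an `<i>` also appears in the wind paragraph; the CSS selector `p[class="tem"] i` likewise only matches the one inside the temperature paragraph.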

Reflections

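The code for this task is mainly based on a worked example from the textbook: I studied the code given in the book, reproduced it, and learned how to work with a database (SQLite).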
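The textbook code creates the table inside a try/except and deletes every row at the start of each run. As a hedged alternative (a swapped-in technique, not the textbook's approach), SQLite can express both steps directly: `create table if not exists` avoids the exception, and `insert or replace` overwrites a row whose primary key `(wCity, wDate)` already exists. The helper name `save_forecasts` is hypothetical, and an in-memory database is used so the sketch runs without creating `weathers.db`.

```python
import sqlite3


def save_forecasts(con, rows):
    """Hypothetical helper: store (num, city, date, weather, temp) rows,
    overwriting any existing row with the same (city, date)."""
    # "if not exists" replaces the try/except around "create table"
    con.execute(
        "create table if not exists weathers ("
        "wNum varchar(16), wCity varchar(16), wDate varchar(16), "
        "wWeather varchar(64), wTemp varchar(32), "
        "constraint pk_weather primary key (wCity, wDate))"
    )
    # "insert or replace" deletes the conflicting row and inserts the new one
    con.executemany(
        "insert or replace into weathers (wNum, wCity, wDate, wWeather, wTemp) "
        "values (?, ?, ?, ?, ?)",
        rows,
    )
    con.commit()


con = sqlite3.connect(":memory:")  # in-memory database, nothing written to disk
save_forecasts(con, [("1", "北京", "7日(今天)", "晴", "30/22℃")])
save_forecasts(con, [("1", "北京", "7日(今天)", "多云", "28/21℃")])  # same key: updated
for row in con.execute("select * from weathers"):
    print(row)
con.close()
```

With this design, re-running the crawler keeps one row per city and date instead of wiping the table first; the trade-off is that days no longer listed on the site are never removed.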

Task 2

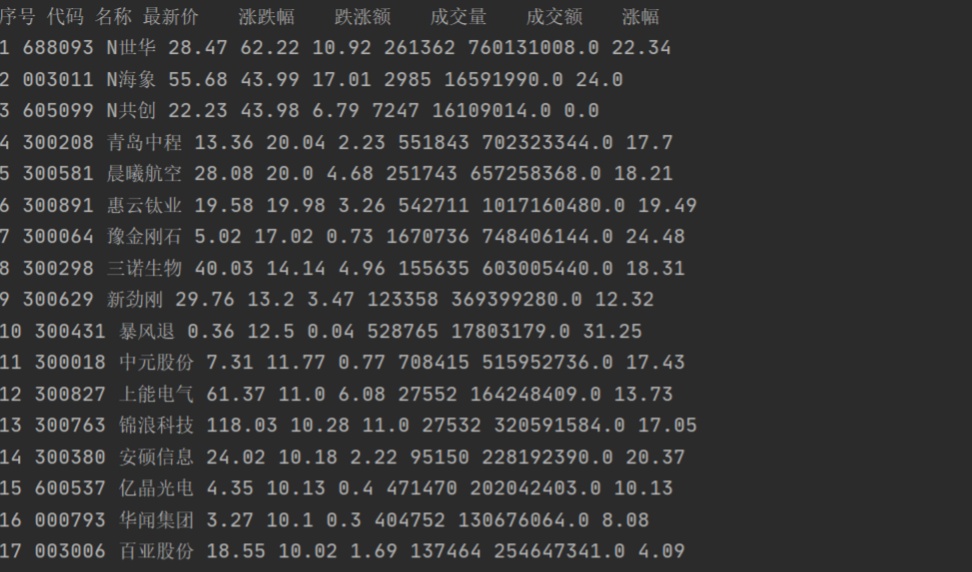

(1) Use the requests and BeautifulSoup libraries to crawl stock information from a target site.

```python
import re
import requests


def getHtml(fs, pn):
    """Fetch one page of the stock list; fs selects the board, pn is the page number."""
    url = ("http://56.push2.eastmoney.com/api/qt/clist/get"
           "?cb=jQuery112409968248217612661_1601548126340&pn=" + str(pn)
           + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs="
           + fs + "&fields=f12,f14,f2,f3,f4,f5,f6,f7")
    r = requests.get(url)
    # The response is JSONP; capture the contents of the "diff" array
    pat = r'"diff":\[\{(.*?)\}\]'
    return re.compile(pat, re.S).findall(r.text)


def getOnePageStock(sort, fs, pn):
    """Print one page of stocks numbered from sort; return the next serial number."""
    data = getHtml(fs, pn)
    datas = data[0].split("},{")  # one string per stock
    for item in datas:
        # Assumes fields arrive as f2..f7, then f12, f14; strip quotes and split
        line = item.replace('"', "").split(",")
        # code, name, latest, change %, change, volume, turnover, amplitude
        print(sort, line[6][4:], line[7][4:], line[0][3:], line[1][3:], line[2][3:],
              line[3][3:], line[4][3:], line[5][3:])
        sort += 1
    return sort


def main():
    print("No.\tCode\tName\tLatest\tChange %\tChange\tVolume\tTurnover\tAmplitude")
    sort = 1  # running serial number
    pn = 1    # page number
    # Boards to crawl and their fs filter values
    fs = {
        "SH & SZ A-shares": "m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23",
        "Shanghai A-shares": "m:1+t:2,m:1+t:23",
        "Shenzhen A-shares": "m:0+t:6,m:0+t:13,m:0+t:80",
        "New listings": "m:0+f:8,m:1+f:8",
        "SME Board": "m:0+t:13",
        "ChiNext": "m:0+t:80",
        "STAR Market": "m:1+t:23",
    }
    for board in fs:
        sort = getOnePageStock(sort, fs[board], pn)


main()
```
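The code extracts the `diff` array with a regular expression, then splits the text on commas and slices off the `f2:`/`f12:` prefixes, which breaks if the field order changes or a value contains a comma. A hedged alternative is to strip the JSONP `callback(...)` wrapper and decode the body with `json`. The sample response and the stock name in it are made up, in the shape the code's regex expects (`"data"` containing a `"diff"` list); the field-to-label mapping follows the column header printed by the code and is an assumption, as is the helper name `parse_jsonp`.

```python
import json
import re


def parse_jsonp(text):
    """Hypothetical helper: strip a JSONP wrapper like callback({...}); and decode the JSON."""
    match = re.search(r"^\s*[\w$.]+\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


# Made-up response in the shape the original regex expects (not real data)
sample = ('jQuery1124_1({"rc":0,"data":{"total":1,"diff":['
          '{"f2":10.5,"f3":1.25,"f4":0.13,"f5":1000,"f6":1050000.0,"f7":2.1,'
          '"f12":"000001","f14":"示例股份"}]}});')

# Assumed meaning of each requested field, in the order the code prints them
FIELDS = {"f12": "code", "f14": "name", "f2": "latest", "f3": "change_pct",
          "f4": "change", "f5": "volume", "f6": "turnover", "f7": "amplitude"}

for item in parse_jsonp(sample)["data"]["diff"]:
    print({label: item[key] for key, label in FIELDS.items()})
```

Looking fields up by key means the output no longer depends on the order in which the server lists them.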

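Reflections: Unlike the previous assignments, this task required fetching data that the page loads through JavaScript, and I learned to use a packet-capture tool to find the request that returns that data.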
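Once the packet-capture tool reveals the data request, its query string shows which parameters drive the result. A minimal sketch that splits the URL used in `getHtml` into parameters with `urllib.parse` (page 1, with `fs` set to one of the board filters from `main`). The meanings in the comment (`pz` as page size, `fields` as the returned columns) are assumptions; only `pn` as the page number comes from the code's own comment. The helper name `query_params` is hypothetical.

```python
from urllib.parse import parse_qs, urlsplit


def query_params(url):
    """Hypothetical helper: split a captured request URL into {parameter: first value}."""
    query = urlsplit(url).query
    # parse_qs follows form-encoding rules, so a literal "+" is decoded as a space
    return {key: values[0] for key, values in parse_qs(query).items()}


# URL built by getHtml with pn=1 and one board filter as fs.
# Assumed meanings: pn = page number, pz = page size, fields = returned columns.
captured = ("http://56.push2.eastmoney.com/api/qt/clist/get"
            "?cb=jQuery112409968248217612661_1601548126340&pn=1&pz=20&po=1&np=1"
            "&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3"
            "&fs=m:1+t:2,m:1+t:23&fields=f12,f14,f2,f3,f4,f5,f6,f7")

for key, value in query_params(captured).items():
    print(f"{key:8} {value}")
```

Note the `+` decoding: `fs` comes back as `m:1 t:2,m:1 t:23`, which is worth remembering before rebuilding such a URL with a different encoder.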

Task 3

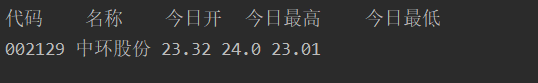

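(1) Choose a stock by combining a self-chosen 3-digit number with the last 3 digits of your student ID, then retrieve and print its information.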
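The selection rule above can be sketched as plain string concatenation. The request URL in the code prefixes the code with `secid=0.`. The `secid` helper below assumes that codes starting with `6` belong to the Shanghai market (`1.`) and all others to Shenzhen (`0.`); that mapping, both helper names and the student ID are hypothetical illustrations, not taken from the assignment.

```python
def stock_code(chosen3, student_id):
    """Hypothetical helper: self-chosen 3 digits + last 3 digits of the student ID."""
    tail = student_id[-3:]
    if len(chosen3) != 3 or not chosen3.isdigit() or len(tail) != 3 or not tail.isdigit():
        raise ValueError("expected a 3-digit number and a student ID ending in 3 digits")
    return chosen3 + tail


def secid(code):
    """Hypothetical helper: prefix the market number used in the secid parameter.

    Assumption: codes starting with 6 are Shanghai-listed (market 1) and all
    other codes are Shenzhen-listed (market 0), as with the "0." in the code.
    """
    return ("1." if code.startswith("6") else "0.") + code


code = stock_code("002", "031804129")  # hypothetical student ID ending in 129
print(code, secid(code))
```

Since not every 6-digit combination is a listed stock, the result still has to be checked against the API, as the code does.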

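```python
import re
import requests


def getHtml(sort):
    """Fetch today's open/high/low for the stock with code sort on market 0 (secid=0.)."""
    url = ("http://push2.eastmoney.com/api/qt/stock/get?ut=fa5fd1943c7b386f172d6893dbfba10b"
           "&invt=2&fltt=2&fields=f44,f45,f46,f57,f58&secid=0." + sort
           + "&cb=jQuery112409396991179940428_1601692476366")
    r = requests.get(url)
    # Capture the {"f44":...,"f58":...} object inside the JSONP response
    return re.findall(r'\{"f.*?\}', r.text)


def getOnePageStock(sort):
    data = getHtml(sort)
    datas = data[0].split("},{")  # IndexError if nothing matched
    for item in datas:
        # After removing quotes: {f44:high,f45:low,f46:open,f57:code,f58:name}
        line = item.replace('"', "").split(",")
        # code, name, open, high, low
        print(line[3][4:], line[4][4:].rstrip("}"), line[2][4:], line[0][5:], line[1][4:])


def main():
    print("Code\tName\tOpen\tHigh\tLow")
    sort = "002129"  # not every code exists, so pick one that does beforehand
    try:
        getOnePageStock(sort)
    except IndexError:
        print("This stock does not exist!")


main()
```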
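Instead of inferring a missing stock from an exception and slicing fixed offsets out of comma-separated text, the quote can be decoded as JSON and the missing case checked explicitly. A minimal sketch, assuming (not verified here) that a non-existent code yields a response whose `"data"` is `null`. The sample responses, the stock name and the helper name `parse_quote` are made up; the field labels follow the column header printed by the code.

```python
import json
import re

# Requested field codes, labelled as in the code's print header
FIELDS = {"f57": "code", "f58": "name", "f46": "open", "f44": "high", "f45": "low"}


def parse_quote(text):
    """Hypothetical helper: return {label: value}, or None if "data" is null."""
    match = re.search(r"\((.*)\)", text, re.S)  # drop the callback(...) wrapper
    if match is None:
        raise ValueError("not a JSONP response")
    data = json.loads(match.group(1)).get("data")
    if data is None:  # assumption: an unknown code comes back as "data": null
        return None
    return {label: data.get(key) for key, label in FIELDS.items()}


# Made-up responses for illustration (not real market data)
ok = ('jQuery1124_1({"rc":0,"data":{"f44":10.2,"f45":9.8,"f46":9.9,'
      '"f57":"002129","f58":"示例股份"}});')
missing = 'jQuery1124_1({"rc":0,"data":null});'

for response in (ok, missing):
    quote = parse_quote(response)
    print(quote if quote is not None else "This stock does not exist!")
```

Separating "no such stock" from other failures means network or decoding errors are no longer reported as a missing stock.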

Reflections: Building on the previous task, this one queries a single stock whose code is chosen by hand.