python_day9

小甲鱼 (FishC) Python study notes

Web crawlers

I: Getting started

1. How Python accesses the Internet


```python
>>> import urllib.request
>>> response = urllib.request.urlopen("http://www.fishc.com")
>>> html = response.read()
>>> html = html.decode("utf-8")  # decode the bytes into a str
>>> print(html)
```

The output is the HTML of this web page.
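`decode("utf-8")` above assumes the page is UTF-8, which is not true for every site (the netbian.com pages in section III are decoded with `decode('gbk')`). A minimal sketch, assuming the server declares a charset in its `Content-Type` header: read it with `get_content_charset()` and fall back to UTF-8 when none is given. `decode_body` is a hypothetical helper name, not part of urllib.

```python
import urllib.request


def decode_body(raw, headers, default='utf-8'):
    """Hypothetical helper: decode response bytes using the declared charset."""
    # get_content_charset() returns e.g. 'gbk' for "text/html; charset=GBK", or None
    charset = headers.get_content_charset() or default
    # errors='replace' substitutes U+FFFD for undecodable bytes instead of raising
    return raw.decode(charset, errors='replace')

# Usage (needs network access):
# with urllib.request.urlopen('http://www.fishc.com') as response:
#     print(decode_body(response.read(), response.headers))
```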

2. Downloading a cat picture from a website (http://placekitten.com)

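```python
import urllib.request

response = urllib.request.urlopen('http://placekitten.com/200/300')
cat_img = response.read()
with open('cat_200_300.jpg', 'wb') as f:  # 'wb': write the image as raw bytes
    f.write(cat_img)

print(response.geturl())   # the URL actually fetched (after any redirects)
print(response.info())     # the response headers
print(response.getcode())  # the HTTP status code, e.g. 200
```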
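The same download can be wrapped in a small reusable function. This is a sketch, not the course's code: `download` is a hypothetical name, and the `timeout` (in seconds) is an added assumption so a slow server cannot hang the script forever.

```python
import urllib.request


def download(url, filename, timeout=10):
    """Hypothetical helper: save the body of url to filename, return bytes written."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        data = response.read()
    with open(filename, 'wb') as f:  # binary mode: image data is bytes, not text
        f.write(data)
    return len(data)

# Usage (needs network access):
# download('http://placekitten.com/200/300', 'cat_200_300.jpg')
```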

3. Crawling Youdao Translate returns "errorCode":50

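Fix: in the URL taken from Inspect Element, delete the `_o` after `translate` (so `translate_o?` becomes `translate?`). The error then disappears and crawling works normally. I don't know why.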

I tried again: in `data`, only the `doctype` and `i` keys are required. All the other keys can be deleted and the translation still works.

```python
import urllib.request
import urllib.parse
import json

content = input("Enter the text to translate: ")
url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'
data = {}
data['i'] = content
# data['i'] = '你好'
data['from'] = 'AUTO'
data['to'] = 'AUTO'
data['smartresult'] = 'dict'
data['client'] = 'fanyideskweb'
data['salt'] = '15555085552935'
data['sign'] = 'db4c03a9216cfd2925f000aa371a5a61'
data['ts'] = '1555508555293'
data['bv'] = 'd6c3cd962e29b66abe48fcb8f4dd7f7d'
data['doctype'] = 'json'
data['version'] = '2.1'
data['keyfrom'] = 'fanyi.web'
data['action'] = 'FY_BY_CLICKBUTTION'
data = urllib.parse.urlencode(data).encode('utf-8')
response = urllib.request.urlopen(url, data)  # passing data makes this a POST request
html = response.read().decode('utf-8')
target = json.loads(html)
print("Translation result: %s" % (target['translateResult'][0][0]['tgt']))
```
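Based on the observation above that only `i` and `doctype` were required, the request can be trimmed and split into testable pieces. A sketch under assumptions: the function names are hypothetical, the reply shape is inferred only from the `target['translateResult'][0][0]['tgt']` access used above, and the Youdao endpoint may have changed since these notes were written.

```python
import json
import urllib.parse
import urllib.request

URL = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'


def build_body(text):
    """Hypothetical helper: encode only the two form fields that were required."""
    return urllib.parse.urlencode({'i': text, 'doctype': 'json'}).encode('utf-8')


def parse_result(html):
    """Hypothetical helper: pull the first translated segment out of the JSON reply."""
    target = json.loads(html)
    return target['translateResult'][0][0]['tgt']


def translate(text, timeout=10):
    # Supplying a body makes urlopen send a POST request
    with urllib.request.urlopen(URL, build_body(text), timeout=timeout) as response:
        return parse_result(response.read().decode('utf-8'))

# Usage (needs network access):
# print(translate('你好'))
```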

II: Hiding the crawler

1. User-Agent

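Some websites do not want to be crawled, so they check the User-Agent header. A crawler written with Python's urllib identifies itself with a User-Agent containing "Python" (`Python-urllib/<version>`), so it is easily detected. This header can, however, be set manually.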
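A quick offline check of what urllib sends when nothing is overridden: every opener keeps its default headers in its `addheaders` list. `default_user_agent` is a hypothetical helper name.

```python
import urllib.request


def default_user_agent():
    """Hypothetical helper: the User-Agent urllib sends when none is set."""
    opener = urllib.request.build_opener()
    # addheaders is a list of (name, value) pairs applied to every request
    return dict(opener.addheaders).get('User-agent')


print(default_user_agent())  # starts with 'Python-urllib/' followed by the Python version
```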

Method 1:

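Pass it through the `headers` parameter of `Request`: first look up your browser's User-Agent (for example in its developer tools), then set it by hand.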
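A minimal sketch of Method 1, reusing the Chrome 73 User-Agent string that appears elsewhere in these notes; no request is sent, it only shows where the header ends up. Note that urllib stores header names with `str.capitalize()`, so the lookup key is `'User-agent'`.

```python
import urllib.request

# Browser User-Agent (the same string used elsewhere in these notes)
UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')

head = {'User-Agent': UA}
req = urllib.request.Request('http://fanyi.youdao.com/', headers=head)

print(req.get_header('User-agent') == UA)  # True
# response = urllib.request.urlopen(req)  # the header is sent with this request
```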

Method 2:

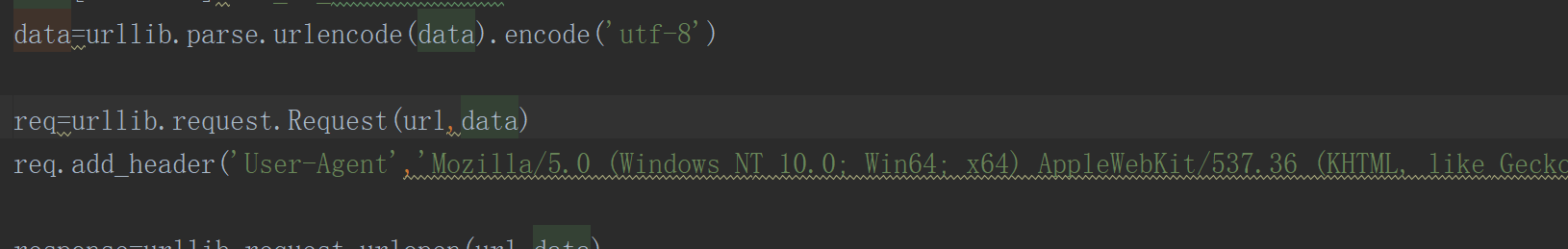

Set it with the `Request.add_header()` method after the `Request` object has been created.
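A minimal sketch of Method 2, built offline. The detail that matters is what gets passed to `urlopen`: the `Request` object, not the bare URL, otherwise the added header is never sent.

```python
import urllib.parse
import urllib.request

UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')
url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'
data = urllib.parse.urlencode({'i': 'hello', 'doctype': 'json'}).encode('utf-8')

req = urllib.request.Request(url, data)
req.add_header('User-Agent', UA)  # stored under the capitalized name 'User-agent'

print(req.get_method())                    # POST, because data is not None
print(req.get_header('User-agent') == UA)  # True
# response = urllib.request.urlopen(req)  # pass req, not url, or the header is lost
```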

2. Proxies

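For example, when crawling images, sending requests nonstop from one IP address (too fast) does not look like normal human behaviour, so the site will notice.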

Strategy 1: slow the crawler down (by changing the code to wait between requests)

```python
import urllib.request
import urllib.parse
import json
import time

while True:
    content = input("Enter the text to translate (q to quit): ")
    if content == 'q':
        break
    url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'

    # Method 1 (alternative): build a headers dict and pass it as Request(url, data, head)
    # head = {}
    # head['User-Agent'] = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36"

    data = {}
    data['i'] = content
    # data['i'] = '你好'
    data['from'] = 'AUTO'
    data['to'] = 'AUTO'
    data['smartresult'] = 'dict'
    data['client'] = 'fanyideskweb'
    data['salt'] = '15555085552935'
    data['sign'] = 'db4c03a9216cfd2925f000aa371a5a61'
    data['ts'] = '1555508555293'
    data['bv'] = 'd6c3cd962e29b66abe48fcb8f4dd7f7d'
    data['doctype'] = 'json'
    data['version'] = '2.1'
    data['keyfrom'] = 'fanyi.web'
    data['action'] = 'FY_BY_CLICKBUTTION'
    data = urllib.parse.urlencode(data).encode('utf-8')

    # Method 2: add the header to the Request object
    req = urllib.request.Request(url, data)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')
    response = urllib.request.urlopen(req)  # pass req, not url, otherwise the header is not sent
    html = response.read().decode('utf-8')
    target = json.loads(html)
    print("Translation result: %s" % (target['translateResult'][0][0]['tgt']))
    time.sleep(5)  # pause 5 seconds before the next request
```
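The loop above always sleeps a fixed 5 seconds after each request, even if the user took longer than that to type. A sketch of an alternative, assuming the goal is simply "at least N seconds between requests": a hypothetical `Throttle` class that waits only for the remaining time, with optional random jitter so the rhythm is less regular.

```python
import random
import time


class Throttle:
    """Hypothetical helper: keep at least min_interval seconds between requests."""

    def __init__(self, min_interval, jitter=0.0):
        self.min_interval = min_interval
        self.jitter = jitter  # extra random delay of 0..jitter seconds
        self._last = None     # time.monotonic() of the previous request

    def wait(self):
        if self._last is not None:
            target = self.min_interval + random.uniform(0, self.jitter)
            remaining = target - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()

# Usage: create once, then call throttle.wait() right before each urlopen()
# throttle = Throttle(5, jitter=2)
```

Using `time.monotonic()` rather than `time.time()` keeps the interval correct even if the system clock is adjusted.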

Strategy 2: proxies

Proxy servers with Chinese IP addresses: https://cn-proxy.com/

Steps for using a proxy:

1. The argument is a dict of the form {'type': 'proxy IP:port'}, e.g. {'http': '117.191.11.103:80'}

proxy_support=urllib.request.ProxyHandler({})

2. Customize and build an opener

opener=urllib.request.build_opener(proxy_support)

3. Install the opener

urllib.request.install_opener(opener)

4. Call the opener (once it is installed in step 3, plain `urllib.request.urlopen(url)` goes through it too)

opener.open(url)
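The four steps above can be combined into one function. A sketch under assumptions: `install_proxy` is a hypothetical name, `proxy_addr` is an 'IP:port' string, and only `http://` URLs are routed through the proxy (an `'https'` key would be needed for `https://` URLs, and the proxy itself must support that).

```python
import urllib.request


def install_proxy(proxy_addr, user_agent=None):
    """Hypothetical helper: steps 1-3 above; returns the opener for step 4."""
    # Step 1: map the URL scheme to the proxy address
    proxy_support = urllib.request.ProxyHandler({'http': proxy_addr})
    # Step 2: build an opener that sends http:// requests through the proxy
    opener = urllib.request.build_opener(proxy_support)
    if user_agent:
        # addheaders replaces the default Python-urllib User-Agent for this opener
        opener.addheaders = [('User-Agent', user_agent)]
    # Step 3: install it globally so urllib.request.urlopen() uses it as well
    urllib.request.install_opener(opener)
    return opener

# Step 4 (needs network access and a working proxy):
# html = install_proxy('117.191.11.103:80').open('http://www.runoob.com/').read()
```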

Example:


```python
import urllib.request
import random

url = 'http://www.runoob.com/html/html-tutorial.html'
iplist = ['117.191.11.103:80', '39.137.168.230:80', '117.191.11.102:8080']  # a few candidate proxy IPs

proxy_support = urllib.request.ProxyHandler({'http': random.choice(iplist)})
opener = urllib.request.build_opener(proxy_support)
# addheaders (not add_handler) sets the default headers for every request made by this opener
opener.addheaders = [('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')]
urllib.request.install_opener(opener)

response = urllib.request.urlopen(url)
html = response.read().decode('utf-8')
print(html)
```
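The example above picks one proxy at random, once; free proxies often go offline, and then the whole request fails. A sketch of a fallback, assuming any proxy in the list is acceptable: try them in random order and return the first body that arrives. `fetch_with_proxy_fallback` is a hypothetical name.

```python
import http.client
import random
import urllib.request


def fetch_with_proxy_fallback(url, iplist, timeout=5):
    """Hypothetical helper: try proxies in random order, return (proxy, body)."""
    errors = []
    # random.sample returns a shuffled copy, so iplist itself is not modified
    for proxy in random.sample(iplist, len(iplist)):
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({'http': proxy}))
        try:
            with opener.open(url, timeout=timeout) as response:
                return proxy, response.read()
        except (OSError, http.client.HTTPException) as exc:
            # URLError and socket timeouts are OSError subclasses: note it, try the next proxy
            errors.append((proxy, exc))
    raise RuntimeError('all proxies failed: %r' % errors)

# Usage (needs network access):
# proxy, html = fetch_with_proxy_fallback('http://www.runoob.com/html/html-tutorial.html',
#                                         ['117.191.11.103:80', '39.137.168.230:80'])
```

Each attempt builds its own opener instead of calling `install_opener`, so a failed proxy never stays installed globally.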

III: Batch-downloading images

```python
import urllib.request
import os


# Open a web page and return its raw bytes
def url_open(url):
    req = urllib.request.Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')
    response = urllib.request.urlopen(req)  # open req (not url) so the User-Agent is sent
    html = response.read()
    print(url)
    return html


# Find the number of the newest wallpaper page linked from the home page
def get_page(url):
    html = url_open(url).decode('gbk')  # the site's pages are GBK-encoded

    a = html.find('a href="/desk/') + 14  # 14 == len('a href="/desk/')
    b = html.find('.htm', a)
    print(html[a:b])
    return html[a:b]


# Collect the addresses of the .jpg images on a page
def find_imgs(url):
    html = url_open(url).decode('gbk')
    img_addrs = []

    a = html.find('img src=')
    while a != -1:
        b = html.find('.jpg', a, a + 255)  # only look within 255 characters of the tag
        if b != -1:
            img_addrs.append(html[a + 9:b + 4])  # skip 'img src="' (9 chars), keep '.jpg'
        else:
            b = a + 7
        a = html.find('img src=', b)  # was 'img sr=', which ended the loop after one match
    for each in img_addrs:
        print(each)
    return img_addrs


# Save every image into the current directory
def save_imgs(folder, img_addrs):
    for each in img_addrs:
        filename = each.split('/')[-1]
        with open(filename, 'wb') as f:
            img = url_open(each)
            f.write(img)


def download_mm(folder="小风景", pages=10):  # folder name means "small landscapes"
    os.makedirs(folder, exist_ok=True)  # do not fail if the folder already exists
    os.chdir(folder)

    url = "http://www.netbian.com/"
    page_num = int(get_page(url))

    for i in range(pages):
        # page_num - i walks back one page at a time (page_num -= i skipped pages)
        page_url = url + "desk/" + str(page_num - i) + ".htm"
        img_addrs = find_imgs(page_url)
        save_imgs(folder, img_addrs)


if __name__ == "__main__":
    download_mm()
```
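`find_imgs()` locates images with `str.find()`, which misses tags written as `src='...'`, with extra spaces, or in upper case. An alternative technique (swapped in here, not the approach used in these notes) is the standard library's `html.parser.HTMLParser`, which tokenizes the tags for you. `JpgCollector` and `find_jpgs` are hypothetical names; `urljoin` turns relative paths into absolute URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class JpgCollector(HTMLParser):
    """Hypothetical helper: collect the src of every <img> ending in .jpg."""

    def __init__(self, base_url=''):
        super().__init__()
        self.base_url = base_url
        self.img_addrs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so <IMG SRC=...> matches too
        if tag == 'img':
            src = dict(attrs).get('src')
            if src and src.lower().endswith('.jpg'):
                self.img_addrs.append(urljoin(self.base_url, src))


def find_jpgs(html, base_url=''):
    parser = JpgCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.img_addrs
```

In the script above, `find_jpgs(url_open(page_url).decode('gbk'), page_url)` could stand in for the body of `find_imgs()`.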