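Scrapy: A High-Performance Crawling Framework

Scrapy

Scrapy is an application framework written for crawling websites and extracting structured data. It can be used in a wide range of programs, including data mining, information processing, and archiving historical data.

It was originally designed for page scraping (more precisely, web scraping), but it can also be used to fetch data returned by APIs (for example, Amazon Associates Web Services) or as a general-purpose web crawler. Scrapy is versatile and can be used for data mining, monitoring, and automated testing.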

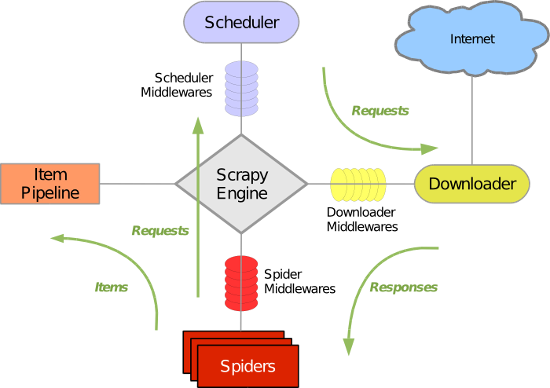

Scrapy 使用了 Twisted异步网络库来处理网络通讯。整体架构大致如下

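Scrapy consists mainly of the following components:

- Engine (Scrapy Engine)

Handles the data flow of the whole system and triggers events (the core of the framework).

- Scheduler

Accepts requests sent by the engine, pushes them onto a queue, and returns them when the engine asks for them again. It can be thought of as a priority queue of URLs (the addresses, or links, of the pages to crawl): it decides which URL to crawl next and removes duplicate URLs.

- Downloader

Downloads page content and returns it to the spiders (the Scrapy downloader is built on Twisted's efficient asynchronous model).

- Spiders

Spiders do the main work: they extract the information you need, the so-called entities (Items), from specific pages. You can also extract links from a page so that Scrapy goes on to crawl the next page.

- Item Pipeline

Processes the entities that spiders extract from pages. Its main jobs are persisting entities, validating them, and removing unneeded information. After a page has been parsed by a spider, its items are sent to the item pipeline and processed by several components in a defined order.

- Downloader Middlewares

A layer between the Scrapy engine and the downloader that mainly processes the requests and responses passing between them.

- Spider Middlewares

A layer between the Scrapy engine and the spiders whose main job is to process the spiders' response input and request output.

- Scheduler Middlewares

Middleware between the Scrapy engine and the scheduler that processes the requests and responses sent from the engine to the scheduler.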

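The Scrapy run flow is roughly as follows:

- The engine takes a link (URL) from the scheduler for the next fetch

- The engine wraps the URL in a request (Request) and passes it to the downloader

- The downloader downloads the resource and wraps it in a response (Response)

- The spider parses the Response

- If an entity (Item) is parsed out, it is handed to the item pipeline for further processing

- If a link (URL) is parsed out, the URL is handed to the scheduler to wait for fetching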
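The flow above can be sketched as a small simulation that needs nothing beyond the standard library. This is not how Scrapy is implemented: `FAKE_SITE`, `download`, `parse`, and `crawl` are hypothetical stand-ins for a website, the downloader, a spider callback, and the engine loop, and the whole thing runs synchronously.

```python
from collections import deque

# Hypothetical in-memory "website": URL -> (page title, outgoing links).
FAKE_SITE = {
    "http://example.com/": ("home", ["http://example.com/a", "http://example.com/b"]),
    "http://example.com/a": ("page a", ["http://example.com/b"]),
    "http://example.com/b": ("page b", ["http://example.com/"]),
}


def download(url):
    """Stand-in for the downloader: returns a response-like dict."""
    title, links = FAKE_SITE[url]
    return {"url": url, "title": title, "links": links}


def parse(response):
    """Stand-in for a spider callback: yields one item, then follow-up URLs."""
    yield {"title": response["title"], "href": response["url"]}
    for link in response["links"]:
        yield link


def crawl(start_urls):
    """Stand-in for the engine loop described above."""
    queue = deque(start_urls)  # scheduler queue
    seen = set(start_urls)     # scheduler-side de-duplication
    stored = []                # what the "pipeline" persisted
    while queue:
        url = queue.popleft()             # engine takes a URL from the scheduler
        response = download(url)          # downloader produces a response
        for result in parse(response):    # spider parses the response
            if isinstance(result, dict):  # an item goes to the pipeline
                stored.append(result)
            elif result not in seen:      # a new URL goes back to the scheduler
                seen.add(result)
                queue.append(result)
    return stored


items = crawl(["http://example.com/"])
print([item["title"] for item in items])
```

Each page is visited once even though the pages link to each other, because the `seen` set plays the scheduler's de-duplication role.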

Installation

Linux

pip3 install scrapy

Windows

a. pip3 install wheel

b. Download Twisted from http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

c. Change to the download directory and run pip3 install Twisted-17.1.0-cp35-cp35m-win_amd64.whl

d. pip3 install scrapy

e. Download and install pywin32: https://sourceforge.net/projects/pywin32/files/

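Basic usage

Basic commands

1. scrapy startproject <project name>

- Creates a project directory in the current directory (similar to Django)

2. scrapy genspider [-t template] <name> <domain>

- Creates a spider

For example:

scrapy genspider oldboy oldboy.com

scrapy genspider -t xmlfeed autohome autohome.com.cn

PS:

List the available templates: scrapy genspider -l

Show a template's contents: scrapy genspider -d <template name>

3. scrapy list

- Lists the spiders in the project

4. scrapy crawl <spider name>

- Runs a single spider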

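Project structure and a brief look at spiders

project_name/
    scrapy.cfg
    project_name/
        __init__.py
        items.py
        pipelines.py
        settings.py
        spiders/
            __init__.py
            spider1.py
            spider2.py
            spider3.py

File notes:

- scrapy.cfg: the project's main configuration. (The settings that actually govern crawling are in settings.py.)

- items.py: data storage templates used to structure data, similar to a Django Model

- pipelines.py: data-processing behavior, typically persisting the structured data

- settings.py: the configuration file, e.g. recursion depth, concurrency, download delay

- spiders: the spider directory, where you create files and write crawling rules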

spider1.py

import scrapy


class XiaoHuarSpider(scrapy.spiders.Spider):
    name = "xiaohuar"  # spider name (important: used by `scrapy crawl`)
    allowed_domains = ["xiaohuar.com"]  # allowed domains
    start_urls = [
        "http://www.xiaohuar.com/hua/",  # start URL
    ]

    def parse(self, response):
        # Callback invoked after a start URL has been fetched
        pass


# For console encoding problems on Windows, add the following lines at the top of the file
import sys, io
sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='gb18030')

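The built-in HTML parser

Parser usage

XPath lookups are called on an HtmlResponse or HtmlXPathSelector object; HtmlXPathSelector takes an HtmlResponse object as its argument. (HtmlXPathSelector is a legacy class; in current Scrapy, use Selector or response.xpath() instead.)

# Lookup rules
obj.xpath("lookup rule")
'//tag'  # find the given tag anywhere in the document
'//tag[index]'  # find the given tag and select by index (XPath indexes start at 1)
'//tag[@attr]'  # find the given tag where it has the given attribute
'//tag[@attr=value]'  # find the given tag where the attribute equals the given value
'//tag[@attr=value][@attr=value]'  # find the given tag where several attributes equal the given values
'//tag[contains(@attr, "link")]'  # find the given tag where the attribute contains the given value
'//tag[re:test(@id, "i\d+")]'  # find the given tag where the attribute matches the regular expression
'//tag/tag'  # find child tags of the matched tag
obj.xpath("lookup rule").xpath('./tag')  # find child tags of the matched tag

# Getting values
new_obj = obj.xpath("//tag/@attr")  # selector for the attribute value
new_obj = obj.xpath("//tag/text()")  # selector for the text content
new_obj.extract()  # returns a list
new_obj.extract_first()  # returns the first value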

A standalone example

from scrapy.selector import Selector
from scrapy.http import HtmlResponse

html = """<!DOCTYPE html>
<html>
<head lang="en">
<meta charset="UTF-8">
<title></title>
</head>
<body>
<ul>
<li class="item-"><a id='i1' href="link.html">first item</a></li>
<li class="item-0"><a id='i2' href="llink.html">first item</a></li>
<li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>
</ul>
<div><a href="llink2.html">second item</a></div>
</body>
</html>
"""

response = HtmlResponse(url='http://example.com', body=html, encoding='utf-8')
hxs = Selector(response=response)  # the selector takes the response object (used here in place of the legacy HtmlXPathSelector)
# print(hxs)
# hxs = Selector(response=response).xpath('//a')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[2]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@href="link.html"][@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[contains(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[starts-with(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]')
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]/text()').extract()
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('/html/body/ul/li/a/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('//body/ul/li/a/@href').extract_first()
# print(hxs)

# ul_list = Selector(response=response).xpath('//body/ul/li')
# for item in ul_list:
#     v = item.xpath('./a/span')
#     # or
#     # v = item.xpath('a/span')
#     # or
#     # v = item.xpath('*/a/span')
#     print(v)

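Storing data

Pipeline and Item

Items give the data a structure; the structured items are then handed to pipelines for processing.

items

import scrapy


class XianglongItem(scrapy.Item):
    title = scrapy.Field()
    href = scrapy.Field()

The pipelines file

class XianglongPipeline(object):

    def process_item(self, item, spider):
        self.f.write(item['href'] + '\n')
        self.f.flush()
        return item

    def open_spider(self, spider):
        """
        Called when the spider starts running
        :param spider:
        :return:
        """
        self.f = open('url.log', 'w')

    def close_spider(self, spider):
        """
        Called when the spider closes
        :param spider:
        :return:
        """
        self.f.close()

Configuration file

# After an Item has been collected in a Spider it is passed to the Item Pipeline, where components process it in a defined order.
ITEM_PIPELINES = {
    'xianglongpipeline.pipelines.XianglongPipeline': 100,
}
# The integer after each entry determines the order: items pass through the pipelines from the lowest number to the highest. By convention these numbers are kept in the 0-1000 range.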
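The ordering rule can be illustrated without Scrapy. The sketch below is an assumption-laden toy: `StripTitle`, `UpperTitle`, `ordered`, and `run_pipelines` are hypothetical names, and the dict is keyed by class rather than by import path as `ITEM_PIPELINES` is.

```python
# Hypothetical pipeline classes; only process_item matters for this sketch.
class StripTitle:
    def process_item(self, item, spider):
        item["title"] = item["title"].strip()
        return item


class UpperTitle:
    def process_item(self, item, spider):
        item["title"] = item["title"].upper()
        return item


# Same shape as ITEM_PIPELINES, but keyed by class instead of import path.
PIPELINES = {UpperTitle: 300, StripTitle: 100}


def ordered(pipelines):
    """Return the pipeline classes sorted by ascending order number."""
    return [cls for cls, _number in sorted(pipelines.items(), key=lambda pair: pair[1])]


def run_pipelines(item, pipelines, spider=None):
    """Pass the item through every pipeline, lowest number first."""
    for cls in ordered(pipelines):
        item = cls().process_item(item, spider)
    return item


order = [cls.__name__ for cls in ordered(PIPELINES)]
result = run_pipelines({"title": "  hello  "}, PIPELINES)
print(order, result)
```

Because each `process_item` returns the item, the next pipeline in the sequence receives whatever the previous one produced.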

All pipeline methods

class FilePipeline(object):

    def __init__(self, path):
        # Mainly used together with from_crawler
        self.path = path
        self.f = None

    @classmethod
    def from_crawler(cls, crawler):
        """
        If this method exists, Scrapy calls it at initialization to create the pipeline object.
        crawler gives access to the settings.
        """
        path = crawler.settings.get('XL_FILE_PATH')
        return cls(path)

    def process_item(self, item, spider):
        self.f.write(item['href'] + '\n')
        return item

    def open_spider(self, spider):
        """
        Called when the spider starts running
        """
        self.f = open(self.path, 'w')

    def close_spider(self, spider):
        """
        Called when the spider closes
        """
        self.f.close()

The spider class

import scrapy
from scrapy.selector import Selector
from scrapy.http import Request
from ..items import XianglongItem


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['http://dig.chouti.com/', ]

    def parse(self, response):
        """
        Called automatically once a start URL has been downloaded; response wraps everything about the response.
        :param response:
        :return:
        """
        hxs = Selector(response=response)

        # Find the news entries in the downloaded page
        rows = hxs.xpath("//div[@id='content-list']/div[@class='item']")
        for row in rows:
            href = row.xpath('.//div[@class="part1"]//a[1]/@href').extract_first()
            text = row.xpath('.//div[@class="part1"]//a[1]/text()').extract_first()
            item = XianglongItem(title=text, href=href)
            # What happens next depends on the type yielded: an Item is sent through the pipelines
            yield item

        pages = hxs.xpath('//div[@id="page-area"]//a[@class="ct_pagepa"]/@href').extract()
        for page_url in pages:
            page_url = "https://dig.chouti.com" + page_url
            # A yielded Request schedules a new crawl task; callback is the function called with its response
            yield Request(url=page_url, callback=self.parse)

How a spider starts

start_requests in the Spider class

class Spider(object_ref):

    def start_requests(self):
        cls = self.__class__
        if method_is_overridden(cls, Spider, 'make_requests_from_url'):
            warnings.warn(
                "Spider.make_requests_from_url method is deprecated; it "
                "won't be called in future Scrapy releases. Please "
                "override Spider.start_requests method instead (see %s.%s)." % (
                    cls.__module__, cls.__name__
                ),
            )
            for url in self.start_urls:
                yield self.make_requests_from_url(url)
        else:
            for url in self.start_urls:
                yield Request(url, dont_filter=True)

We can override this method in our own spider class to set the callback. The method may return either an iterator or any iterable; Scrapy converts the result into an iterator internally.

# Option 1: a generator
def start_requests(self):
    for url in self.start_urls:
        yield Request(url=url, callback=self.parse2)

# Option 2: a list
def start_requests(self):
    req_list = []
    for url in self.start_urls:
        req_list.append(Request(url=url, callback=self.parse2))
    return req_list
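Why both forms work can be shown with plain Python. In this sketch, tuples stand in for `Request` objects and `consume` is a hypothetical caller that, like the behavior described above, calls `iter()` on whatever it receives.

```python
def start_requests_as_generator(urls):
    """Generator form: requests are produced lazily, one at a time."""
    for url in urls:
        yield ("GET", url)


def start_requests_as_list(urls):
    """List form: every request is built before anything is returned."""
    return [("GET", url) for url in urls]


def consume(start_requests):
    """Accept any iterable by converting it to an iterator first."""
    iterator = iter(start_requests)
    return [request for request in iterator]


urls = ["http://example.com/1", "http://example.com/2"]
from_generator = consume(start_requests_as_generator(urls))
from_list = consume(start_requests_as_list(urls))
print(from_generator == from_list)
```

The generator form avoids building every request up front, which matters when the list of start URLs is long.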

The Request object

class Request(object_ref):

    def __init__(self, url, callback=None, method='GET', headers=None, body=None,
                 cookies=None, meta=None, encoding='utf-8', priority=0,
                 dont_filter=False, errback=None, flags=None):
        ...

Cookie handling

# Handling cookies manually

# Raw cookies from the response
response.headers.getlist('Set-Cookie')

# Parse them into a dict
from scrapy.http.cookies import CookieJar

cookie_dict = {}
cookie_jar = CookieJar()
cookie_jar.extract_cookies(response, response.request)
for k, v in cookie_jar._cookies.items():
    for i, j in v.items():
        for m, n in j.items():
            cookie_dict[m] = n.value

# Handling cookies automatically
# Scrapy supports tracking multiple cookie sessions per spider through the cookiejar Request meta key. By default it uses a single cookie jar (session), but you can pass an identifier to use several.
# Note that the cookiejar meta key is not "sticky": you have to keep passing it along in subsequent requests.
Request(
    url='http://dig.chouti.com/login',
    method='POST',
    headers={'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8'},
    body='phone=8613121758648&password=woshiniba&oneMonth=1',
    callback=self.parse_check_login,
    meta={'cookiejar': True}  # the request carries cookies automatically
)

# The settings file specifies whether cookie handling is allowed:
# Disable cookies (enabled by default)
# COOKIES_ENABLED = False  # the default is True, i.e. cookies are handled
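What "parsing the raw cookies into a dict" amounts to can be shown with the standard library alone. This sketch does not use Scrapy's `CookieJar`; the header strings are made-up examples of already-decoded `Set-Cookie` values, and `cookies_to_dict` is a hypothetical helper.

```python
from http.cookies import SimpleCookie

# Hypothetical, already-decoded Set-Cookie header values.
raw_headers = [
    "sessionid=abc123; Path=/; HttpOnly",
    "theme=dark; Path=/",
]


def cookies_to_dict(set_cookie_headers):
    """Collect cookie names and values, ignoring attributes such as Path."""
    cookie_dict = {}
    for header in set_cookie_headers:
        parsed = SimpleCookie()
        parsed.load(header)
        for name, morsel in parsed.items():
            cookie_dict[name] = morsel.value
    return cookie_dict


cookies = cookies_to_dict(raw_headers)
print(cookies)
```

A dict like this could then be passed as the `cookies` argument of a later `Request`, which the signature shown earlier accepts.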

A worked example

import scrapy
from scrapy.http import Request


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['http://dig.chouti.com/', ]

    def start_requests(self):
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse_index, meta={'cookiejar': True})

    def parse_index(self, response):
        # Log in, carrying the cookies received from the first request
        req = Request(
            url='http://dig.chouti.com/login',
            method='POST',
            headers={'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8'},
            body='phone=8613121758648&password=woshiniba&oneMonth=1',
            callback=self.parse_check_login,
            meta={'cookiejar': True}
        )
        yield req

    def parse_check_login(self, response):
        # print(response.text)
        # Vote on a link using the logged-in session
        yield Request(
            url='https://dig.chouti.com/link/vote?linksId=19440976',
            method='POST',
            callback=self.parse_show_result,
            meta={'cookiejar': True}
        )

    def parse_show_result(self, response):
        print(response.text)

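De-duplication

By default Scrapy uses scrapy.dupefilters.RFPDupeFilter for de-duplication. The related settings are:

DUPEFILTER_CLASS = 'scrapy.dupefilters.RFPDupeFilter'
DUPEFILTER_DEBUG = False
JOBDIR = "path where the record of visited requests is saved, e.g. /root/"  # the final path is /root/requests.seen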

A custom de-duplication class

from scrapy.dupefilters import BaseDupeFilter
from scrapy.utils.request import request_fingerprint

"""
1. Scrapy looks up DUPEFILTER_CLASS = 'xianglong.dupe.MyDupeFilter' in the settings
2. It checks whether from_settings exists
    If it does:
        obj = MyDupeFilter.from_settings()
    Otherwise:
        obj = MyDupeFilter()
"""


class MyDupeFilter(BaseDupeFilter):

    def __init__(self):
        self.record = set()

    @classmethod
    def from_settings(cls, settings):
        return cls()

    def request_seen(self, request):
        """
        Check whether the current request has already been visited
        :param request:
        :return: True if it has been visited; False if it has not
        """
        ident = request_fingerprint(request)
        if ident in self.record:
            print('already visited', request.url)
            return True
        self.record.add(ident)
        return False

    def open(self):
        """
        Called when crawling starts
        :return:
        """
        print('open replication')

    def close(self, reason):
        """
        Called when crawling ends
        :param reason:
        :return:
        """
        print('close replication')

    def log(self, request, spider):
        """
        Log a duplicate request
        :param request:
        :param spider:
        :return:
        """
        print('repeat', request.url)

Unique URLs: a URL has no fixed length and its parameters can appear in any order, which makes it awkward to compare and store URLs for de-duplication. Scrapy provides a helper that sorts the parameters and then applies an MD5-like hash, turning a request into a fixed-length string:

from scrapy.utils.request import request_fingerprint
from scrapy.http import Request

u1 = Request(url='http://www.oldboyedu.com?id=1&age=2')
u2 = Request(url='http://www.oldboyedu.com?age=2&id=1')

result1 = request_fingerprint(u1)
result2 = request_fingerprint(u2)
print(result1, result2)
# c49a0582ee359d61d0fe5f28084b2ea04106050d
# c49a0582ee359d61d0fe5f28084b2ea04106050d
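The idea behind such a fingerprint (sort the query parameters, then hash) can be sketched with the standard library. This is not Scrapy's `request_fingerprint` and will not reproduce its hash values; `canonical_url` and `simple_fingerprint` are hypothetical helpers, and SHA-1 is used only because it yields a 40-character hex string like the one shown above.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonical_url(url):
    """Rebuild the URL with its query parameters in sorted order."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc, parts.path or "/", query, ""))


def simple_fingerprint(method, url):
    """Hash the method and the canonical URL into a fixed-length hex string."""
    digest = hashlib.sha1()
    digest.update(method.upper().encode("utf-8"))
    digest.update(canonical_url(url).encode("utf-8"))
    return digest.hexdigest()


fp1 = simple_fingerprint("GET", "http://www.oldboyedu.com?id=1&age=2")
fp2 = simple_fingerprint("GET", "http://www.oldboyedu.com?age=2&id=1")
fp3 = simple_fingerprint("GET", "http://www.oldboyedu.com?id=2&age=2")
print(fp1 == fp2, fp1 == fp3, len(fp1))
```

Two URLs that differ only in parameter order collapse to the same fingerprint, while a changed parameter value produces a different one.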

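How the scheduler decides whether to download

from scrapy.core.scheduler import Scheduler

# Excerpt of its source:
class Scheduler(object):
    # ... (code omitted)

    def enqueue_request(self, request):
        """The decision is based on the request's dont_filter attribute and on the result of the de-duplication class.
        If dont_filter is True, no de-duplication is done and the request is always pushed with _dqpush.
        If it is False, the result of the de-duplication class decides whether the if branch runs.
        """
        if not request.dont_filter and self.df.request_seen(request):
            self.df.log(request, self.spider)
            return False
        dqok = self._dqpush(request)
        # ... (code omitted)
        return True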

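Downloader middleware

Configuration file

DOWNLOADER_MIDDLEWARES = {
    'XXX.middlewares.XXXDownloaderMiddleware': 543,
}

The middleware methods in detail

from scrapy import signals


class XXXDownloaderMiddleware(object):

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy uses this class method to instantiate the middleware
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        """
        Called for every request that needs to be downloaded, through the process_request of each downloader middleware
        :param request:
        :param spider:
        :return:
            None: continue with the following middlewares and download;
            Response object: stop running process_request and start running process_response;
            Request object: stop the middleware chain and hand the Request back to the scheduler;
            raise IgnoreRequest: stop running process_request and start running process_exception
        """
        return None

    def process_response(self, request, response, spider):
        """
        Called with the response on its way back from the downloader
        :param request:
        :param response:
        :param spider:
        :return:
            Response object: passed on to the process_response of the other middlewares;
            Request object: stop the middleware chain; the request is rescheduled for download;
            raise IgnoreRequest: Request.errback is called
        """
        return response

    def process_exception(self, request, exception, spider):
        """
        Called when a download handler or a process_request() (of a downloader middleware) raises an exception
        :param request:
        :param exception:
        :param spider:
        :return:
            None: pass the exception on to the following middlewares;
            Response object: stop the remaining process_exception methods;
            Request object: stop the middleware chain; the request is rescheduled for download
        """

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)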
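The first two return-value rules of `process_request` (None continues, a response short-circuits the download) can be sketched as a toy chain. Everything here is hypothetical and much simpler than Scrapy: requests are plain dicts, `FakeResponse`, `AddHeader`, `CacheHit`, and `fetch` are invented names, and returning a Request, raising exceptions, and `process_response` are left out.

```python
class FakeResponse:
    """Minimal stand-in for a response object."""
    def __init__(self, body):
        self.body = body


class AddHeader:
    def process_request(self, request, spider):
        request.setdefault("headers", {})["X-Demo"] = "1"
        return None  # None: continue with the next middleware


class CacheHit:
    def __init__(self, cache):
        self.cache = cache

    def process_request(self, request, spider):
        if request["url"] in self.cache:
            return FakeResponse(self.cache[request["url"]])  # skip the download
        return None


def fetch(request, middlewares, download, spider=None):
    """Run process_request on each middleware; download only if all return None."""
    for middleware in middlewares:
        result = middleware.process_request(request, spider)
        if result is not None:
            return result
    return download(request)


calls = []


def fake_download(request):
    calls.append(request["url"])
    return FakeResponse("downloaded " + request["url"])


chain = [AddHeader(), CacheHit({"http://example.com/cached": "from cache"})]
hit = fetch({"url": "http://example.com/cached"}, chain, fake_download)
miss = fetch({"url": "http://example.com/other"}, chain, fake_download)
print(hit.body, "|", miss.body, "|", calls)
```

Only the second request reaches `fake_download`, because `CacheHit` answered the first one itself.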

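Proxy middleware

# Location of all the built-in downloader middlewares
scrapy.downloadermiddlewares
# The proxy middleware
scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware

# How to use the proxy middleware
import os
import scrapy
from scrapy.http import Request


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['https://dig.chouti.com/']

    def start_requests(self):
        # Only the environment variable needs to be set; format: [user[:passwd]@]host[:port]
        # Note: the middleware reads the environment in __init__ (see the source below), so the
        # variable has to be set before the middleware is created; setting it in the shell
        # before running Scrapy is the safest option.
        os.environ['HTTP_PROXY'] = "http://192.168.11.11"
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)

    def parse(self, response):
        print(response)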

Source code

class HttpProxyMiddleware(object):

    def __init__(self, auth_encoding='latin-1'):
        self.auth_encoding = auth_encoding
        self.proxies = {}
        for type, url in getproxies().items():  # getproxies() returns the proxy dict
            # os.environ['HTTP_PROXY'] = "http://192.168.11.11"
            # gives {'http': "http://192.168.11.11"}
            self.proxies[type] = self._get_proxy(url, type)  # credentials, proxy host and port

    def process_request(self, request, spider):
        '''...'''
        parsed = urlparse_cached(request)
        scheme = parsed.scheme

        if scheme in ('http', 'https') and proxy_bypass(parsed.hostname):
            return

        if scheme in self.proxies:
            self._set_proxy(request, scheme)

    def _set_proxy(self, request, scheme):
        creds, proxy = self.proxies[scheme]
        request.meta['proxy'] = proxy  # set the proxy
        if creds:
            request.headers['Proxy-Authorization'] = b'Basic ' + creds  # set the credentials
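The proxy dictionary that the source above iterates over can be inspected directly. The sketch assumes `getproxies` is the standard-library `urllib.request.getproxies`, and it uses the lowercase variable name `http_proxy`, which the standard library also recognizes; the address and port are just example values.

```python
import os
from urllib.request import getproxies

# Set the proxy through the environment, as in the spider example above.
os.environ["http_proxy"] = "http://192.168.11.11:9999"

# getproxies() maps each scheme to the proxy URL configured for it.
proxies = getproxies()
print(proxies.get("http"))
```

Because the dictionary is built from the process environment, the variable has to be in place before the code that reads it runs.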

Custom proxy pool

import random
import base64


def to_bytes(text, encoding=None, errors='strict'):
    """Return the binary representation of `text`. If `text`
    is already a bytes object, return it as-is."""
    if isinstance(text, bytes):
        return text
    if not isinstance(text, str):
        raise TypeError('to_bytes must receive a str or bytes '
                        'object, got %s' % type(text).__name__)
    if encoding is None:
        encoding = 'utf-8'
    return text.encode(encoding, errors)


class MyProxyDownloaderMiddleware(object):

    def process_request(self, request, spider):
        proxy_list = [
            {'ip_port': '111.11.228.75:80', 'user_pass': 'xxx:123'},
            {'ip_port': '120.198.243.22:80', 'user_pass': ''},
            {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
            {'ip_port': '101.71.27.120:80', 'user_pass': ''},
            {'ip_port': '122.96.59.104:80', 'user_pass': ''},
            {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
        ]
        proxy = random.choice(proxy_list)
        # The proxy URL is set the same way with or without credentials
        request.meta['proxy'] = "http://%s" % proxy['ip_port']
        if proxy['user_pass']:
            # base64.encodestring no longer exists in recent Python 3; b64encode returns bytes
            encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))
            request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass
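The `Proxy-Authorization` value set above is the word `Basic` followed by the Base64 encoding of `user:password`. A minimal standard-library sketch of just that step follows; `basic_proxy_auth` is a hypothetical helper name, and `latin-1` is chosen to match the `auth_encoding` default in the `HttpProxyMiddleware` source shown earlier.

```python
import base64


def basic_proxy_auth(user_pass, encoding="latin-1"):
    """Build the bytes value for a Proxy-Authorization header."""
    token = base64.b64encode(user_pass.encode(encoding))
    return b"Basic " + token


header = basic_proxy_auth("xxx:123")
# Decoding the token again recovers the original credentials.
decoded = base64.b64decode(header[len(b"Basic "):])
print(header, decoded)
```

Base64 is an encoding, not encryption, so credentials sent this way are only as private as the connection to the proxy.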

HTTPS with a custom certificate

from scrapy.core.downloader.contextfactory import ScrapyClientContextFactory
from twisted.internet.ssl import (optionsForClientTLS, CertificateOptions, PrivateCertificate)


class MySSLFactory(ScrapyClientContextFactory):
    def getCertificateOptions(self):
        from OpenSSL import crypto
        v1 = crypto.load_privatekey(crypto.FILETYPE_PEM, open('path to the private key', mode='r').read())
        v2 = crypto.load_certificate(crypto.FILETYPE_PEM, open('path to the certificate file', mode='r').read())
        return CertificateOptions(
            privateKey=v1,  # PKey object
            certificate=v2,  # X509 object
            verify=False,
            method=getattr(self, 'method', getattr(self, '_ssl_method', None))
        )

# Settings:
DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
DOWNLOADER_CLIENTCONTEXTFACTORY = "import path of the custom certificate class"

Spider middleware

class SpiderMiddleware(object):

    def __init__(self):
        pass

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create the middleware instance.
        s = cls()
        return s

    def process_spider_input(self, response, spider):
        """
        Called once the download has finished, before the response is handed to parse
        :param response:
        :param spider:
        :return:
        """
        pass

    def process_spider_output(self, response, result, spider):
        """
        Called with what the spider returns after it has processed the response
        :param response:
        :param result:
        :param spider:
        :return: must return an iterable of Request or Item objects
        """
        return result

    def process_spider_exception(self, response, exception, spider):
        """
        Called when an exception is raised
        :param response:
        :param exception:
        :param spider:
        :return: None to pass the exception on to the following middlewares; or an iterable of Request or Item objects, which is handed to the scheduler or the pipeline
        """
        return None

    def process_start_requests(self, start_requests, spider):
        """
        Called when the spider starts
        :param start_requests:
        :param spider:
        :return: an iterable of Request objects
        """
        return start_requests

# Registration
SPIDER_MIDDLEWARES = {
    'import path of the class': 543,
}

Extensions and signals

# Extensions: the extension class is instantiated when the project starts, either directly or through from_crawler
class MyExtension(object):

    def __init__(self):
        pass

    @classmethod
    def from_crawler(cls, crawler):
        obj = cls()
        return obj

Settings:

EXTENSIONS = {
    'xiaohan.extends.MyExtension': 500,
}

# Signals: functions are registered on a signal, and at specific moments every function registered on that signal is triggered
# scrapy.signals
engine_started = object()
engine_stopped = object()
spider_opened = object()
spider_idle = object()
spider_closed = object()
spider_error = object()
request_scheduled = object()
request_dropped = object()
response_received = object()
response_downloaded = object()
item_scraped = object()
item_dropped = object()

# Extensions plus signals: register the signal handlers when the project starts
from scrapy import signals


class MyExtension(object):

    def __init__(self):
        pass

    @classmethod
    def from_crawler(cls, crawler):
        obj = cls()
        # When the spider opens, every function connected to spider_opened is triggered: xxxxxxxxxxx1
        crawler.signals.connect(obj.xxxxxxxxxxx1, signal=signals.spider_opened)
        # When the spider closes, every function connected to spider_closed is triggered: uuuuuuuuuu
        crawler.signals.connect(obj.uuuuuuuuuu, signal=signals.spider_closed)
        return obj

    def xxxxxxxxxxx1(self, spider):
        print('open')

    def uuuuuuuuuu(self, spider):
        print('close')

# Do not forget to register the extension in the settings
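The connect-then-trigger pattern used above can be sketched without Scrapy. `ToySignalManager` and its `send` method are hypothetical and far simpler than Scrapy's signal machinery; as in the listing above, the signals themselves are just unique `object()` instances.

```python
from collections import defaultdict

# Signals are plain unique objects, as in the scrapy.signals listing above.
spider_opened = object()
spider_closed = object()


class ToySignalManager:
    """Hypothetical minimal dispatcher: signal -> list of receivers."""

    def __init__(self):
        self._receivers = defaultdict(list)

    def connect(self, receiver, signal):
        self._receivers[signal].append(receiver)

    def send(self, signal, **kwargs):
        # Call every receiver registered for this signal, in registration order.
        return [receiver(**kwargs) for receiver in self._receivers[signal]]


log = []
signals = ToySignalManager()
signals.connect(lambda spider: log.append("open " + spider), signal=spider_opened)
signals.connect(lambda spider: log.append("close " + spider), signal=spider_closed)

signals.send(spider_opened, spider="chouti")
signals.send(spider_closed, spider="chouti")
print(log)
```

Receivers run only for the signal they were connected to, which is why the extension connects one method per signal.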

Configuration file

# -*- coding: utf-8 -*-

# Scrapy settings for step8_king project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
# http://doc.scrapy.org/en/latest/topics/settings.html
# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

# 1. Bot (project) name
BOT_NAME = 'step8_king'

# 2. Spider module paths
SPIDER_MODULES = ['step8_king.spiders']
NEWSPIDER_MODULE = 'step8_king.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# 3. Client User-Agent request header
# USER_AGENT = 'step8_king (+http://www.yourdomain.com)'

# Obey robots.txt rules
# 4. Whether to obey robots.txt (see scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware)
# ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# 5. Number of concurrent requests
# CONCURRENT_REQUESTS = 4

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# 6. Download delay in seconds
# DOWNLOAD_DELAY = 2

# The download delay setting will honor only one of:
# 7. Concurrent requests per domain; the download delay is also applied per domain
# CONCURRENT_REQUESTS_PER_DOMAIN = 2
# Concurrent requests per IP; if set, CONCURRENT_REQUESTS_PER_DOMAIN is ignored and the download delay is applied per IP
# CONCURRENT_REQUESTS_PER_IP = 3

# Disable cookies (enabled by default)
# 8. Whether cookies are enabled; cookies are handled through the cookiejar
# COOKIES_ENABLED = True
# COOKIES_DEBUG = True

# Disable Telnet Console (enabled by default)
# 9. Telnet is used to inspect and control the running crawler
# Connect with `telnet ip port`, then issue commands
# TELNETCONSOLE_ENABLED = True
# TELNETCONSOLE_HOST = '127.0.0.1'
# TELNETCONSOLE_PORT = [6023,]

# 10. Default request headers
# Override the default request headers:
# DEFAULT_REQUEST_HEADERS = {
#     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#     'Accept-Language': 'en',
# }

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
# 11. Pipelines that process the scraped items
# ITEM_PIPELINES = {
#     'step8_king.pipelines.JsonPipeline': 700,
#     'step8_king.pipelines.FilePipeline': 500,
# }

# 12. Custom extensions, invoked through signals
# Enable or disable extensions
# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
# EXTENSIONS = {
#     # 'step8_king.extensions.MyExtension': 500,
# }

# 13. Maximum crawl depth; the current depth can be read from meta; 0 means no depth limit
# DEPTH_LIMIT = 3

# 14. Crawl order: 0 means depth-first, LIFO (the default); 1 means breadth-first, FIFO

# Last in, first out: depth-first
# DEPTH_PRIORITY = 0
# SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleLifoDiskQueue'
# SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.LifoMemoryQueue'

# First in, first out: breadth-first
# DEPTH_PRIORITY = 1
# SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleFifoDiskQueue'
# SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.FifoMemoryQueue'

# 15. Scheduler class
# SCHEDULER = 'scrapy.core.scheduler.Scheduler'
# from scrapy.core.scheduler import Scheduler

# 16. De-duplication of visited URLs
# DUPEFILTER_CLASS = 'step8_king.duplication.RepeatUrl'

# Enable and configure the AutoThrottle extension (disabled by default)
# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

"""
17. The auto-throttle algorithm
    from scrapy.extensions.throttle import AutoThrottle

    Auto-throttle steps
    1. Read the minimum delay, DOWNLOAD_DELAY
    2. Read the maximum delay, AUTOTHROTTLE_MAX_DELAY
    3. Set the initial download delay, AUTOTHROTTLE_START_DELAY
    4. When a request finishes downloading, take its latency, i.e. the time between opening the connection and receiving the response headers
    5. Use AUTOTHROTTLE_TARGET_CONCURRENCY in the calculation:
        target_delay = latency / self.target_concurrency
        new_delay = (slot.delay + target_delay) / 2.0  # slot.delay is the previous delay
        new_delay = max(target_delay, new_delay)
        new_delay = min(max(self.mindelay, new_delay), self.maxdelay)
        slot.delay = new_delay
"""

# Enable auto-throttling
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 10
# The average number of requests Scrapy should be sending in parallel to each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = True

# Enable and configure HTTP caching (disabled by default)
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

"""
18. Enabling the cache
    Caches requests that have already been sent, together with their responses, for later reuse

    from scrapy.downloadermiddlewares.httpcache import HttpCacheMiddleware
    from scrapy.extensions.httpcache import DummyPolicy
    from scrapy.extensions.httpcache import FilesystemCacheStorage
"""
# Whether caching is enabled
# HTTPCACHE_ENABLED = True

# Cache policy: cache every request; later requests are served straight from the cache
# HTTPCACHE_POLICY = "scrapy.extensions.httpcache.DummyPolicy"
# Cache policy: cache according to HTTP response headers such as Cache-Control and Last-Modified
# HTTPCACHE_POLICY = "scrapy.extensions.httpcache.RFC2616Policy"

# Cache expiration time in seconds
# HTTPCACHE_EXPIRATION_SECS = 0

# Directory where the cache is stored
# HTTPCACHE_DIR = 'httpcache'

# HTTP status codes that are not cached
# HTTPCACHE_IGNORE_HTTP_CODES = []

# Cache storage backend
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

"""

19. Proxies, which have to be set through environment variables
    from scrapy.downloadermiddlewares.httpproxy import HttpProxyMiddleware

    Option 1: use the default middleware
        os.environ
        {
            http_proxy:http://root:woshiniba@192.168.11.11:9999/
            https_proxy:http://192.168.11.11:9999/
        }

    Option 2: use a custom downloader middleware

    import random
    import base64

    def to_bytes(text, encoding=None, errors='strict'):
        if isinstance(text, bytes):
            return text
        if not isinstance(text, str):
            raise TypeError('to_bytes must receive a str or bytes '
                            'object, got %s' % type(text).__name__)
        if encoding is None:
            encoding = 'utf-8'
        return text.encode(encoding, errors)

    class ProxyMiddleware(object):
        def process_request(self, request, spider):
            PROXIES = [
                {'ip_port': '111.11.228.75:80', 'user_pass': ''},
                {'ip_port': '120.198.243.22:80', 'user_pass': ''},
                {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
                {'ip_port': '101.71.27.120:80', 'user_pass': ''},
                {'ip_port': '122.96.59.104:80', 'user_pass': ''},
                {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
            ]
            proxy = random.choice(PROXIES)
            request.meta['proxy'] = "http://%s" % proxy['ip_port']
            if proxy['user_pass']:
                # b64encode returns bytes, so the prefix must be bytes as well
                encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))
                request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass
                print("**************ProxyMiddleware have pass************" + proxy['ip_port'])
            else:
                print("**************ProxyMiddleware no pass************" + proxy['ip_port'])

    DOWNLOADER_MIDDLEWARES = {
        'step8_king.middlewares.ProxyMiddleware': 500,
    }

"""

"""

20. HTTPS access
    There are two cases for HTTPS access:
    1. The target site uses a trusted certificate (supported by default)
        DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
        DOWNLOADER_CLIENTCONTEXTFACTORY = "scrapy.core.downloader.contextfactory.ScrapyClientContextFactory"

    2. The target site uses a custom certificate
        DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
        DOWNLOADER_CLIENTCONTEXTFACTORY = "step8_king.https.MySSLFactory"

        # https.py
        from scrapy.core.downloader.contextfactory import ScrapyClientContextFactory
        from twisted.internet.ssl import (optionsForClientTLS, CertificateOptions, PrivateCertificate)

        class MySSLFactory(ScrapyClientContextFactory):
            def getCertificateOptions(self):
                from OpenSSL import crypto
                v1 = crypto.load_privatekey(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.key.unsecure', mode='r').read())
                v2 = crypto.load_certificate(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.pem', mode='r').read())
                return CertificateOptions(
                    privateKey=v1,  # PKey object
                    certificate=v2,  # X509 object
                    verify=False,
                    method=getattr(self, 'method', getattr(self, '_ssl_method', None))
                )
    Other:
        Related classes
            scrapy.core.downloader.handlers.http.HttpDownloadHandler
            scrapy.core.downloader.webclient.ScrapyHTTPClientFactory
            scrapy.core.downloader.contextfactory.ScrapyClientContextFactory
        Related settings
            DOWNLOADER_HTTPCLIENTFACTORY
            DOWNLOADER_CLIENTCONTEXTFACTORY

"""

"""

21. Spider middleware
    class SpiderMiddleware(object):

        def process_spider_input(self, response, spider):
            '''
            Called once the download has finished, before the response is handed to parse
            :param response:
            :param spider:
            :return:
            '''
            pass

        def process_spider_output(self, response, result, spider):
            '''
            Called with what the spider returns after it has processed the response
            :param response:
            :param result:
            :param spider:
            :return: must return an iterable of Request or Item objects
            '''
            return result

        def process_spider_exception(self, response, exception, spider):
            '''
            Called when an exception is raised
            :param response:
            :param exception:
            :param spider:
            :return: None to pass the exception on to the following middlewares; or an iterable of Request or Item objects, which is handed to the scheduler or the pipeline
            '''
            return None

        def process_start_requests(self, start_requests, spider):
            '''
            Called when the spider starts
            :param start_requests:
            :param spider:
            :return: an iterable of Request objects
            '''
            return start_requests

    Built-in spider middlewares:
        'scrapy.spidermiddlewares.httperror.HttpErrorMiddleware': 50,
        'scrapy.spidermiddlewares.offsite.OffsiteMiddleware': 500,
        'scrapy.spidermiddlewares.referer.RefererMiddleware': 700,
        'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware': 800,
        'scrapy.spidermiddlewares.depth.DepthMiddleware': 900,

"""

# from scrapy.spidermiddlewares.referer import RefererMiddleware

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

SPIDER_MIDDLEWARES = {

# 'step8_king.middlewares.SpiderMiddleware': 543,

}

"""

22. Downloader middleware
    class DownMiddleware1(object):
        def process_request(self, request, spider):
            '''
            Called for every request that needs to be downloaded, through the process_request of each downloader middleware
            :param request:
            :param spider:
            :return:
                None: continue with the following middlewares and download;
                Response object: stop running process_request and start running process_response;
                Request object: stop the middleware chain and hand the Request back to the scheduler;
                raise IgnoreRequest: stop running process_request and start running process_exception
            '''
            pass

        def process_response(self, request, response, spider):
            '''
            Called with the response on its way back from the downloader
            :param request:
            :param response:
            :param spider:
            :return:
                Response object: passed on to the process_response of the other middlewares;
                Request object: stop the middleware chain; the request is rescheduled for download;
                raise IgnoreRequest: Request.errback is called
            '''
            print('response1')
            return response

        def process_exception(self, request, exception, spider):
            '''
            Called when a download handler or a process_request() (of a downloader middleware) raises an exception
            :param request:
            :param exception:
            :param spider:
            :return:
                None: pass the exception on to the following middlewares;
                Response object: stop the remaining process_exception methods;
                Request object: stop the middleware chain; the request is rescheduled for download
            '''
            return None

    Default downloader middlewares
    {
        'scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware': 100,
        'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware': 300,
        'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware': 350,
        'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 400,
        'scrapy.downloadermiddlewares.retry.RetryMiddleware': 500,
        'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': 550,
        'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware': 580,
        'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 590,
        'scrapy.downloadermiddlewares.redirect.RedirectMiddleware': 600,
        'scrapy.downloadermiddlewares.cookies.CookiesMiddleware': 700,
        'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
        'scrapy.downloadermiddlewares.chunked.ChunkedTransferMiddleware': 830,
        'scrapy.downloadermiddlewares.stats.DownloaderStats': 850,
        'scrapy.downloadermiddlewares.httpcache.HttpCacheMiddleware': 900,
    }

"""

# from scrapy.downloadermiddlewares.httpauth import HttpAuthMiddleware

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# DOWNLOADER_MIDDLEWARES = {

# 'step8_king.middlewares.DownMiddleware1': 100,

# 'step8_king.middlewares.DownMiddleware2': 500,

# }
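The auto-throttle update rule quoted in item 17 of the settings file above can be tried out in isolation. The sketch below transcribes those four lines into a standalone function; `next_delay` and its default arguments are hypothetical, with the defaults chosen to match the commented-out values in the settings (target concurrency 1.0, maximum delay 10).

```python
def next_delay(current_delay, latency, target_concurrency=1.0,
               min_delay=0.0, max_delay=10.0):
    """Apply one auto-throttle update, following the formula quoted in item 17."""
    target_delay = latency / target_concurrency
    # Move halfway from the previous delay towards the target delay.
    new_delay = (current_delay + target_delay) / 2.0
    # Never go below the target delay.
    new_delay = max(target_delay, new_delay)
    # Clamp to the configured minimum and maximum.
    return min(max(min_delay, new_delay), max_delay)


delay = 5.0  # plays the role of AUTOTHROTTLE_START_DELAY
history = []
for latency in (0.5, 0.5, 0.5):
    delay = next_delay(delay, latency)
    history.append(delay)
print(history)
```

With a steady latency the delay halves its distance to the target on every response, so a large start delay decays quickly, while a single slow response raises the delay to the target immediately.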

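Custom commands

- Create a directory of any name at the same level as spiders, e.g. commands

- In it, create a file named crawlall.py (the file name is the name of the custom command)

from scrapy.commands import ScrapyCommand
from scrapy.utils.project import get_project_settings


class Command(ScrapyCommand):
    requires_project = True

    def syntax(self):
        # Argument syntax shown in the help text
        return '[options]'

    def short_desc(self):
        # Short description shown in the help text
        return 'Runs all of the spiders'

    def run(self, args, opts):
        # crawler_process is a good starting point for studying how a crawl is launched
        # (spider_loader replaces the older crawler_process.spiders attribute)
        spider_list = self.crawler_process.spider_loader.list()
        for name in spider_list:
            self.crawler_process.crawl(name, **opts.__dict__)
        self.crawler_process.start()

- Add COMMANDS_MODULE = '<project name>.<directory name>' to settings.py

- Run the command from the project directory: scrapy crawlall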