keras_训练人脸识别模型心得

keras_cnn_实现人脸训练分类

废话不多扯,直接进入正题吧!今天在训练自己分割出来的图片,感觉效果挺不错的,所以在这分享一下心得,望入门的同孩采纳。

1、首先使用python OpenCV库里面的人脸检测分类器把你需要训练的测试人脸图片给提取出来,这一步很重要,因为deep learn他也不是万能的,很多原始人脸图片有很多干扰因素,直接拿去做模型训练效果是非常low的。所以必须得做这一步。而且还提醒一点就是你的人脸图片每个类别的人脸图片光线不要相差太大,虽然都是灰度图片,但是会影响你的结果,我测试过了好多次了,

2、把分割出来的人脸全部使用resize的方法变成[100x100]的图片,之前我也试过rgb的图片,但是效果不好,所以我建议都转成灰度图片,这样数据量小,计算速度也快,当然了keras的后端我建议使用TensorFlow-GPU版,这样计算过程明显比CPU快1万倍。

3、时间有限,我的数据集只有六张,前面三张是某某的人脸,后面三张又是另一个人脸,这样就只有两个类别,说到这里的时候很多人都觉得不可思议了吧,数据集这么小你怎么训练的?效果会好吗?那么你不要着急慢慢读下去吧!其次我把每个类别的前面两张图片自我复制了100次,这样我就有数据集了,类别的最后一张使用来做测试集,

自我复制50次。下面我来为大家揭晓答案吧,请详细参考如下代码:

(1)、导包

# coding:utf-8 import numpy as np import os import cv2 os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2' from keras.models import Sequential from keras.layers import Dense,Dropout,Flatten from keras.layers import Conv2D,MaxPooling2D from keras import optimizers import pandas as pd import matplotlib.pyplot as plt

(2)、读取我们的图片数据

filePath = os.listdir('img_test/') print(filePath) img_data = [] for i in filePath: img_data.append(cv2.resize(cv2.cvtColor(cv2.imread('img_test/%s'%i),cv2.COLOR_BGR2GRAY),(100,100),interpolation=cv2.INTER_AREA))

(3)、制作训练集合测试集

x_train = np.zeros([100,100,100,1]) y_train = [] x_test = np.zeros([50,100,100,1]) y_test = [] for i in range(100): if i<25: x_train[i,:,:,0] = img_data[2] y_train.append(1) elif 25<=i<50: x_train[i, :, :, 0] = img_data[4] y_train.append(2) elif 50<=i<75: x_train[i, :, :, 0] = img_data[1] y_train.append(1) else: x_train[i, :, :, 0] = img_data[5] y_train.append(2) for j in range(50): if j%2==0: x_test[j, :, :, 0] = img_data[0] y_test.append(1) else: x_test[j, :, :, 0] = img_data[3] # np.ones((100,100)) y_test.append(2) y_train = np.array(pd.get_dummies(y_train)) y_ts = np.array(y_test) y_test = np.array(pd.get_dummies(y_test))

(4)、建立keras_cnn模型

model = Sequential() # 第一层: model.add(Conv2D(32,(3,3),input_shape=(100,100,1),activation='relu')) model.add(MaxPooling2D(pool_size=(2,2))) model.add(Dropout(0.5)) # model.add(Conv2D(64,(3,3),activation='relu')) #第二层: # model.add(Conv2D(32,(3,3),activation='relu')) # model.add(Dropout(0.25)) # model.add(MaxPooling2D(pool_size=(2,2))) # model.add(Dropout(0.25)) # 2、全连接层和输出层: model.add(Flatten()) # model.add(Dense(500,activation='relu')) # model.add(Dropout(0.5)) model.add(Dense(20,activation='relu')) model.add(Dropout(0.5)) model.add(Dense(2,activation='softmax')) model.summary() model.compile(loss='categorical_crossentropy',#,'binary_crossentropy' optimizer=optimizers.Adadelta(lr=0.01, rho=0.95, epsilon=1e-06),#,'Adadelta' metrics=['accuracy'])

(5)、训练模型和得分输出

1 2 3 4 5 6 7 8 9 | # 模型训练model.fit(x_train,y_train,batch_size=30,epochs=100)y_predict = model.predict(x_test)score = model.evaluate(x_test, y_test)print(score)y_pred = np.argmax(y_predict,axis=1)plt.figure('keras')plt.scatter(list(range(len(y_pred))),y_pred ,c=y_pred)plt.show() |

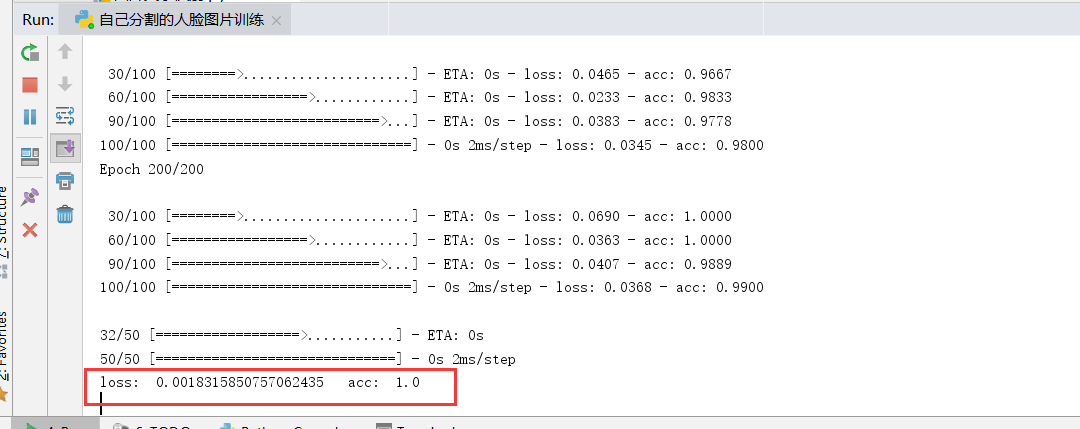

下面是结果输出,loss = 0.0018 acc = 1.0 效果很不错,主要在于你训练时候的深度。

完整代码如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 | 1 # coding:utf-8 2 import numpy as np 3 import os 4 import cv2 5 os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2' 6 from keras.models import Sequential 7 from keras.layers import Dense,Dropout,Flatten 8 from keras.layers import Conv2D,MaxPooling2D 9 from keras import optimizers 10 import pandas as pd 11 import matplotlib.pyplot as plt 12 13 filePath = os.listdir('img_test/') 14 print(filePath) 15 img_data = [] 16 for i in filePath: 17 img_data.append(cv2.resize(cv2.cvtColor(cv2.imread('img_test/%s'%i),cv2.COLOR_BGR2GRAY),(100,100),interpolation=cv2.INTER_AREA)) 18 19 20 x_train = np.zeros([100,100,100,1]) 21 y_train = [] 22 x_test = np.zeros([50,100,100,1]) 23 y_test = [] 24 25 for i in range(100): 26 if i<25: 27 x_train[i,:,:,0] = img_data[2] 28 y_train.append(1) 29 elif 25<=i<50: 30 x_train[i, :, :, 0] = img_data[4] 31 y_train.append(2) 32 elif 50<=i<75: 33 x_train[i, :, :, 0] = img_data[1] 34 y_train.append(1) 35 else: 36 x_train[i, :, :, 0] = img_data[5] 37 y_train.append(2) 38 39 for j in range(50): 40 if j%2==0: 41 x_test[j, :, :, 0] = img_data[0] 42 y_test.append(1) 43 else: 44 x_test[j, :, :, 0] = img_data[3] # np.ones((100,100)) 45 y_test.append(2) 46 47 y_train = np.array(pd.get_dummies(y_train)) 48 y_ts = np.array(y_test) 49 y_test = np.array(pd.get_dummies(y_test)) 50 ''' 51 from keras.models import load_model 52 from sklearn.metrics import accuracy_score 53 54 model = load_model('model/my_model.h5') 55 y_predict = model.predict(x_test) 56 y_p = np.argmax(y_predict,axis=1)+1 57 score = accuracy_score(y_ts,y_p) 58 # score = model.evaluate(x_train,y_train) 59 print(score) 60 ''' 61 62 model = Sequential() 63 # 第一层: 64 model.add(Conv2D(32,(3,3),input_shape=(100,100,1),activation='relu')) 65 model.add(MaxPooling2D(pool_size=(2,2))) 66 model.add(Dropout(0.5)) 67 # model.add(Conv2D(64,(3,3),activation='relu')) 68 #第二层: 69 # model.add(Conv2D(32,(3,3),activation='relu')) # model.add(Dropout(0.25)) 70 # model.add(MaxPooling2D(pool_size=(2,2))) 71 # model.add(Dropout(0.25)) 72 73 # 2、全连接层和输出层: 74 model.add(Flatten()) 75 # model.add(Dense(500,activation='relu')) 76 # model.add(Dropout(0.5)) 77 model.add(Dense(20,activation='relu')) 78 model.add(Dropout(0.5)) 79 model.add(Dense(2,activation='softmax')) 80 81 model.summary() 82 model.compile(loss='categorical_crossentropy',#,'binary_crossentropy' 83 optimizer=optimizers.Adadelta(lr=0.01, rho=0.95, epsilon=1e-06),#,'Adadelta' 84 metrics=['accuracy']) 85 86 # 模型训练 87 model.fit(x_train,y_train,batch_size=30,epochs=150) 88 y_predict = model.predict(x_test) 89 score = model.evaluate(x_test, y_test) 90 print('loss: ',score[0],' acc: ',score[1]) 91 y_pred = np.argmax(y_predict,axis=1) 92 plt.figure('keras',figsize=(12,6)) 93 plt.scatter(list(range(len(y_pred))),y_pred ,c=y_pred) 94 plt.show() 95 96 # 保存模型 97 # model.save('test/my_model.h5') 98 99 # import matplotlib.pyplot as plt100 # plt.imshow(x_train[30,:,:,0].reshape(100,100),cmap='gray')101 # plt.figure()102 # plt.imshow(x_test[3,:,:,0].reshape(100,100),cmap='gray')103 # plt.xticks([]);plt.yticks([])104 # plt.show() |

自动化学习。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· 三行代码完成国际化适配,妙~啊~

· .NET Core 中如何实现缓存的预热?

· 如何调用 DeepSeek 的自然语言处理 API 接口并集成到在线客服系统