Installing Filebeat on Windows 10

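In the previous post we installed ELK (see "Installing ELK on Windows 10"), where data flows L -> E -> K. Another pipe, B, can be attached in front of L. B stands for Beats. Once Beats joined, the three-way ELK lineup became the Elastic Stack. Several Beats can act as a data source for Logstash or Elasticsearch, including Filebeat, Packetbeat, and Metricbeat. For shipping log files, Filebeat is the first choice. Filebeat can send to Logstash, or directly to Elasticsearch.

First, download the archive from https://www.elastic.co/cn/downloads/beats/filebeat. As before, we use 7.9.0, the latest version at the time of writing: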

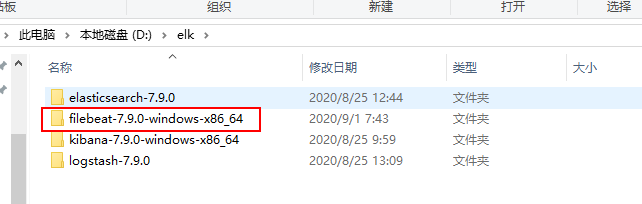

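We pick the 64-bit Windows zip and, after downloading, extract it into the elk directory on drive D:

Open the Start menu -> find the entries under W -> expand Windows PowerShell -> right-click Windows PowerShell (x86) -> choose "Run as administrator":

Change into the Filebeat directory and run the install script. As expected, it fails: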

    PS C:\Users\wulf> cd D:\elk\filebeat-7.9.0-windows-x86_64\
    PS D:\elk\filebeat-7.9.0-windows-x86_64> .\install-service-filebeat.ps1
    .\install-service-filebeat.ps1 : 无法加载文件 D:\elk\filebeat-7.9.0-windows-x86_64\install-service-filebeat.ps1,因为在
    此系统上禁止运行脚本。有关详细信息,请参阅 https:/go.microsoft.com/fwlink/?LinkID=135170 中的 about_Execution_Policies 。
    所在位置 行:1 字符: 1
    + .\install-service-filebeat.ps1
    + ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        + CategoryInfo          : SecurityError: (:) [],PSSecurityException
        + FullyQualifiedErrorId : UnauthorizedAccess
    PS D:\elk\filebeat-7.9.0-windows-x86_64>

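Why? The error says that running scripts is disabled on this system. Running PowerShell as administrator is not enough: the command itself is fine, but the current execution policy (Restricted) refuses to load any script. We need to check the policy, relax it, and run the script again: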

    PS D:\elk\filebeat-7.9.0-windows-x86_64> Get-ExecutionPolicy
    Restricted
    PS D:\elk\filebeat-7.9.0-windows-x86_64> Set-ExecutionPolicy UnRestricted
    执行策略更改
    执行策略可帮助你防止执行不信任的脚本。更改执行策略可能会产生安全风险,如 https:/go.microsoft.com/fwlink/?LinkID=135170
    中的 about_Execution_Policies 帮助主题所述。是否要更改执行策略?
    [Y] 是(Y)  [A] 全是(A)  [N] 否(N)  [L] 全否(L)  [S] 暂停(S)  [?] 帮助 (默认值为“N”): y
    PS D:\elk\filebeat-7.9.0-windows-x86_64> .\install-service-filebeat.ps1
    Status   Name               DisplayName
    ------   ----               -----------
    Stopped  filebeat           filebeat
    PS D:\elk\filebeat-7.9.0-windows-x86_64>

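As the output shows, it was the execution policy that blocked the Filebeat installation; once the policy is changed, the script registers the filebeat service (still in the Stopped state). Keep in mind that Set-ExecutionPolicy UnRestricted stays in effect after the install. Elastic's documentation instead suggests `PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1`, which relaxes the policy for that single invocation only. After the installation the PowerShell window can be closed.

Next, edit the configuration. Go to D:\elk\filebeat-7.9.0-windows-x86_64, copy filebeat.yml, rename the copy to filebeat-simple.yml, and change its content to: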

    filebeat.inputs:
    - type: log
      enabled: true
      paths:
        - D:\\wlf\\logs\\hello*.log
    output.logstash:
      hosts: ["localhost:5044"]

This tells Filebeat to read every file in D:\wlf\logs whose name starts with hello and ends with .log, and to send the log lines to Logstash on port 5044.
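To get a feel for which file names the `hello*.log` wildcard picks up, here is a minimal sketch using Java's own glob matcher. This is only an approximation: Filebeat resolves its paths with Go's glob implementation, and the class name `HelloLogGlob` and the sample file names other than `hello-2020-09-03.0.log` are made up for illustration.

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

final class HelloLogGlob {
    // Same wildcard as the last segment of the Filebeat path above.
    // Assumption: Java's glob and Go's glob agree for a plain '*' like this one.
    private static final PathMatcher MATCHER =
            FileSystems.getDefault().getPathMatcher("glob:hello*.log");

    /** Returns true if a bare file name (no directory part) matches hello*.log. */
    static boolean matches(String fileName) {
        return MATCHER.matches(Paths.get(fileName));
    }

    public static void main(String[] args) {
        String[] names = {"hello-2020-09-03.0.log", "hello.log", "world.log", "hello.txt"};
        for (String name : names) {
            System.out.println(name + " -> " + matches(name));
        }
    }
}
```

The first two names match and the last two do not, so a rolled file for a new day would be picked up without touching the Filebeat config.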

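Start Logstash first, pointing it at the config file logstash-simple.conf. In that file, change the input from stdin to the beats input. To keep things simple, print the events to the Logstash console instead of sending them to Elasticsearch: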

    input {
      beats {
        port => "5044"
      }
    }
    output {
      stdout { codec => rubydebug }
    }

Logstash startup log:

    C:\Users\wulf>D:
    D:\>cd elk\logstash-7.9.0\bin
    D:\elk\logstash-7.9.0\bin>.\logstash -f ..\config\logstash-simple.conf
    Sending Logstash logs to D:/elk/logstash-7.9.0/logs which is now configured via log4j2.properties
    [2020-09-03T21:57:41,911][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.9.0", "jruby.version"=>"jruby 9.2.12.0 (2.5.7) 2020-07-01 db01a49ba6 Java HotSpot(TM) 64-Bit Server VM 25.102-b14 on 1.8.0_102-b14 +indy +jit [mswin32-x86_64]"}
    [2020-09-03T21:57:42,259][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    [2020-09-03T21:57:44,742][INFO ][org.reflections.Reflections] Reflections took 46 ms to scan 1 urls, producing 22 keys and 45 values
    [2020-09-03T21:57:44,998][WARN ][org.logstash.netty.SslContextBuilder] JCE Unlimited Strength Jurisdiction Policy not installed - max key length is 128 bits
    [2020-09-03T21:57:47,363][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["D:/elk/logstash-7.9.0/config/logstash-simple.conf"], :thread=>"#<Thread:0x30aae30d run>"}
    [2020-09-03T21:57:48,518][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>1.14}
    [2020-09-03T21:57:48,547][INFO ][logstash.inputs.beats ][main] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}
    [2020-09-03T21:57:48,573][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}
    [2020-09-03T21:57:48,702][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
    [2020-09-03T21:57:48,820][INFO ][org.logstash.beats.Server][main][af3dcc0a25640c2afc7ea292b455b1260403e81008f9a1579f987486d2f7e56b] Starting server on port: 5044
    [2020-09-03T21:57:49,215][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
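Before starting Filebeat it can be handy to confirm that something really is listening on the Beats port. The following is a rough sketch of such a check in plain Java, not part of the original setup: the class name `PortCheck` and the 1-second timeout are arbitrary choices, and a successful TCP connect only shows that the port is open, not that Logstash is the process behind it.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host not resolvable.
            return false;
        }
    }

    public static void main(String[] args) {
        // 5044 is the Beats port configured in logstash-simple.conf.
        System.out.println("localhost:5044 listening: " + isListening("localhost", 5044, 1000));
    }
}
```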

A scheduled task writes a log line every 5 seconds to the file hello-2020-09-03.0.log under D:\wlf\logs:

1. Main class:

    package com.wlf.elasticsearchstatictis;

    import lombok.extern.slf4j.Slf4j;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.scheduling.annotation.EnableScheduling;
    import org.springframework.scheduling.annotation.Scheduled;

    @Slf4j
    @SpringBootApplication
    @EnableScheduling
    public class Begin {

        public static void main(String[] args) {
            SpringApplication.run(Begin.class, args);
        }

        // Runs every 5000 ms and writes one line through the "hello" appender.
        @Scheduled(fixedRate = 5000)
        public void logProduceTask() {
            log.info("hello, world.");
        }
    }

2. logback-spring.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
        <property name="BASE_DIR" value="D:\\wlf\\logs"/>

        <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
            <encoder>
                <pattern>%date{HH:mm:ss.SSS} [%thread] [%X{msgid}] [%X{appid}] %-5level %logger{36} - %msg%n</pattern>
            </encoder>
        </appender>

        <appender name="hello" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
                <fileNamePattern>${BASE_DIR}/hello-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
                <!-- number of days of log files to keep -->
                <maxHistory>5</maxHistory>
                <maxFileSize>10MB</maxFileSize>
                <totalSizeCap>100MB</totalSizeCap>
            </rollingPolicy>
            <encoder>
                <pattern>%date{HH:mm:ss.SSS} [%thread] [%X{msgid}] [%X{appid}] %-5level %logger{36} - %msg%n</pattern>
            </encoder>
        </appender>

        <logger name="com.wlf.elasticsearchstatictis.Begin" additivity="false">
            <level value="INFO"/>
            <appender-ref ref="hello"/>
            <appender-ref ref="console"/>
        </logger>
    </configuration>
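The `fileNamePattern` above is what produces names such as hello-2020-09-03.0.log: `%d{yyyy-MM-dd}` is the day and `%i` is a counter that starts at 0 and increases each time the file reaches the size limit on that day. As a small illustration (a sketch that only mimics the naming, not logback's own code; `RollingFileName` is a made-up helper), the name can be rebuilt like this:

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

final class RollingFileName {
    // Mirrors %d{yyyy-MM-dd} from the fileNamePattern.
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    /** Builds hello-<day>.<index>.log, where index plays the role of %i. */
    static String fileName(LocalDate date, int index) {
        return "hello-" + DAY.format(date) + "." + index + ".log";
    }

    public static void main(String[] args) {
        // prints hello-2020-09-03.0.log
        System.out.println(fileName(LocalDate.of(2020, 9, 3), 0));
    }
}
```

Every name generated this way starts with hello and ends with .log, which is why a single wildcard in the Filebeat config is enough to follow the file across rollovers.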

Run Begin so that it prints one log line every 5 seconds, then start Filebeat:

    C:\Users\wulf>d:
    D:\>cd elk\filebeat-7.9.0-windows-x86_64
    D:\elk\filebeat-7.9.0-windows-x86_64>.\filebeat -e -c filebeat-simple.yml
    2020-09-03T22:02:33.203+0800 INFO instance/beat.go:640 Home path: [D:\elk\filebeat-7.9.0-windows-x86_64] Config path: [D:\elk\filebeat-7.9.0-windows-x86_64] Data path: [D:\elk\filebeat-7.9.0-windows-x86_64\data] Logs path: [D:\elk\filebeat-7.9.0-windows-x86_64\logs]
    2020-09-03T22:02:33.206+0800 INFO instance/beat.go:648 Beat ID: ae375dc0-d6e2-488c-be87-2544c05b1242
    2020-09-03T22:02:33.209+0800 INFO [beat] instance/beat.go:976 Beat info {"system_info": {"beat": {"path": {"config": "D:\\elk\\filebeat-7.9.0-windows-x86_64", "data": "D:\\elk\\filebeat-7.9.0-windows-x86_64\\data", "home": "D:\\elk\\filebeat-7.9.0-windows-x86_64", "logs": "D:\\elk\\filebeat-7.9.0-windows-x86_64\\logs"}, "type": "filebeat", "uuid": "ae375dc0-d6e2-488c-be87-2544c05b1242"}}}
    2020-09-03T22:02:33.211+0800 INFO [beat] instance/beat.go:985 Build info {"system_info": {"build": {"commit": "b2ee705fc4a59c023136c046803b56bc82a16c8d", "libbeat": "7.9.0", "time": "2020-08-11T20:11:10.000Z", "version": "7.9.0"}}}
    2020-09-03T22:02:33.211+0800 INFO [beat] instance/beat.go:988 Go runtime info {"system_info": {"go": {"os":"windows","arch":"amd64","max_procs":4,"version":"go1.14.4"}}}
    2020-09-03T22:02:33.279+0800 INFO [beat] instance/beat.go:992 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2020-08-19T01:16:40.99+08:00","name":"wulf00","ip":["fe80::8d8e:da9f:cdde:a6b8/64","2.0.2.177/24","fe80::589d:d728:5523:99e5/64","10.73.166.158/24","fe80::759e:b0eb:609:cf8f/64","169.254.207.143/16","fe80::f58b:cdd3:6144:9492/64","169.254.148.146/16","fe80::b4c3:3952:c602:bbb6/64","10.129.217.84/21","fe80::cbd:73cc:2721:24a0/64","169.254.36.160/16","::1/128","127.0.0.1/8"],"kernel_version":"10.0.18362.1016 (WinBuild.160101.0800)","mac":["00:ff:ef:08:d8:e5","54:e1:ad:57:79:63","a0:af:bd:73:a2:09","a2:af:bd:73:a2:08","a0:af:bd:73:a2:08","00:ff:5e:c9:2d:c6"],"os":{"family":"windows","platform":"windows","name":"Windows 10 Pro","version":"10.0","major":10,"minor":0,"patch":0,"build":"18362.1016"},"timezone":"CST","timezone_offset_sec":28800,"id":"bd5672aa-84f8-4043-b25f-47453b5a9362"}}}
    2020-09-03T22:02:33.280+0800 INFO [beat] instance/beat.go:1021 Process info {"system_info": {"process": {"cwd": "D:\\elk\\filebeat-7.9.0-windows-x86_64", "exe": "D:\\elk\\filebeat-7.9.0-windows-x86_64\\filebeat.exe", "name": "filebeat.exe", "pid": 68892, "ppid": 68040, "start_time": "2020-09-03T22:02:30.172+0800"}}}
    2020-09-03T22:02:33.280+0800 INFO instance/beat.go:299 Setup Beat: filebeat; Version: 7.9.0
    2020-09-03T22:02:33.294+0800 INFO [publisher] pipeline/module.go:113 Beat name: wulf00
    2020-09-03T22:02:33.302+0800 WARN beater/filebeat.go:178 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.
    2020-09-03T22:02:33.303+0800 INFO instance/beat.go:450 filebeat start running.
    2020-09-03T22:02:33.303+0800 INFO [monitoring] log/log.go:118 Starting metrics logging every 30s
    2020-09-03T22:02:33.309+0800 INFO memlog/store.go:119 Loading data file of 'D:\elk\filebeat-7.9.0-windows-x86_64\data\registry\filebeat' succeeded. Active transaction id=0
    2020-09-03T22:02:33.327+0800 INFO memlog/store.go:124 Finished loading transaction log file for 'D:\elk\filebeat-7.9.0-windows-x86_64\data\registry\filebeat'. Active transaction id=427
    2020-09-03T22:02:33.327+0800 WARN beater/filebeat.go:381 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.
    2020-09-03T22:02:33.333+0800 INFO [registrar] registrar/registrar.go:108 States Loaded from registrar: 4
    2020-09-03T22:02:33.334+0800 INFO [crawler] beater/crawler.go:71 Loading Inputs: 1
    2020-09-03T22:02:33.337+0800 INFO log/input.go:157 Configured paths: [D:\wlf\logs\hello*.log]
    2020-09-03T22:02:33.337+0800 INFO [crawler] beater/crawler.go:141 Starting input (ID: 9386287014943630624)
    2020-09-03T22:02:33.339+0800 INFO [crawler] beater/crawler.go:108 Loading and starting Inputs completed. Enabled inputs: 1
    2020-09-03T22:03:03.319+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":281,"time":{"ms":281}},"total":{"ticks":437,"time":{"ms":437},"value":437},"user":{"ticks":156,"time":{"ms":156}}},"handles":{"open":213},"info":{"ephemeral_id":"09af0d14-6589-4eeb-8fd1-3315aba33f07","uptime":{"ms":32876}},"memstats":{"gc_next":16354592,"memory_alloc":8873952,"memory_total":41138008,"rss":48308224},"runtime":{"goroutines":23}},"filebeat":{"events":{"added":1,"done":1},"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0}},"output":{"type":"logstash"},"pipeline":{"clients":1,"events":{"active":0,"filtered":1,"total":1}}},"registrar":{"states":{"current":4,"update":1},"writes":{"success":1,"total":1}},"system":{"cpu":{"cores":4}}}}}
    2020-09-03T22:03:33.309+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":281},"total":{"ticks":437,"value":437},"user":{"ticks":156}},"handles":{"open":210},"info":{"ephemeral_id":"09af0d14-6589-4eeb-8fd1-3315aba33f07","uptime":{"ms":62867}},"memstats":{"gc_next":16354592,"memory_alloc":8953176,"memory_total":41217232,"rss":-24576},"runtime":{"goroutines":23}},"filebeat":{"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0}},"pipeline":{"clients":1,"events":{"active":0}}},"registrar":{"states":{"current":4}}}}}
    2020-09-03T22:03:33.395+0800 INFO log/harvester.go:297 Harvester started for file: D:\wlf\logs\hello-2020-09-03.0.log
    2020-09-03T22:03:34.405+0800 INFO [publisher_pipeline_output] pipeline/output.go:143 Connecting to backoff(async(tcp://localhost:5044))
    2020-09-03T22:03:34.405+0800 INFO [publisher] pipeline/retry.go:219 retryer: send unwait signal to consumer
    2020-09-03T22:03:34.417+0800 INFO [publisher] pipeline/retry.go:223 done
    2020-09-03T22:03:34.463+0800 INFO [publisher_pipeline_output] pipeline/output.go:151 Connection to backoff(async(tcp://localhost:5044)) established

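With the application running and the log file growing, Filebeat keeps shipping new lines to Logstash, and the Logstash console prints each one as it arrives: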

    {
        "agent" => {
            "id" => "ae375dc0-d6e2-488c-be87-2544c05b1242",
            "type" => "filebeat",
            "name" => "wulf00",
            "version" => "7.9.0",
            "ephemeral_id" => "09af0d14-6589-4eeb-8fd1-3315aba33f07",
            "hostname" => "wulf00"
        },
        "@version" => "1",
        "message" => "22:47:24.477 [scheduling-1] [] [] INFO com.wlf.elasticsearchstatictis.Begin - hello, world.",
        "log" => {
            "file" => {
                "path" => "D:\\wlf\\logs\\hello-2020-09-03.0.log"
            },
            "offset" => 71861
        },
        "input" => {
            "type" => "log"
        },
        "tags" => [
            [0] "beats_input_codec_plain_applied"
        ],
        "host" => {
            "name" => "wulf00"
        },
        "ecs" => {
            "version" => "1.5.0"
        },
        "@timestamp" => 2020-09-03T14:47:31.980Z
    }
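In the event above, the whole log line arrives as one string in the `message` field; Filebeat does not split it. In Logstash that splitting would normally be done with a filter, which this post does not cover. As a rough illustration of the structure hidden in `message`, here is a sketch in Java that takes the line apart with a regular expression written by hand against the logback pattern used earlier. The class name `HelloLogLine` and the field names are made up for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class HelloLogLine {
    // Follows: %date{HH:mm:ss.SSS} [%thread] [%X{msgid}] [%X{appid}] %-5level %logger{36} - %msg
    // \s+ after the level tolerates the padding added by %-5level.
    private static final Pattern LINE = Pattern.compile(
            "^(\\d{2}:\\d{2}:\\d{2}\\.\\d{3}) \\[([^\\]]*)\\] \\[([^\\]]*)\\] \\[([^\\]]*)\\] "
                    + "(\\w+)\\s+(\\S+) - (.*)$");

    /** Returns the parsed fields in order, or an empty map if the line does not match. */
    static Map<String, String> parse(String message) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = LINE.matcher(message);
        if (m.matches()) {
            fields.put("time", m.group(1));
            fields.put("thread", m.group(2));
            fields.put("msgid", m.group(3));
            fields.put("appid", m.group(4));
            fields.put("level", m.group(5));
            fields.put("logger", m.group(6));
            fields.put("message", m.group(7));
        }
        return fields;
    }

    public static void main(String[] args) {
        String sample = "22:47:24.477 [scheduling-1] [] [] INFO "
                + "com.wlf.elasticsearchstatictis.Begin - hello, world.";
        parse(sample).forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

The empty `msgid` and `appid` fields correspond to the two `[]` groups in the line: nothing was put into the MDC, so logback prints them empty.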