Web Crawler Programs, Part 1

The Requests Library

The Requests library sends HTTP requests to a website and retrieves the page data it returns. Let's start with a simple example that shows how to use it.

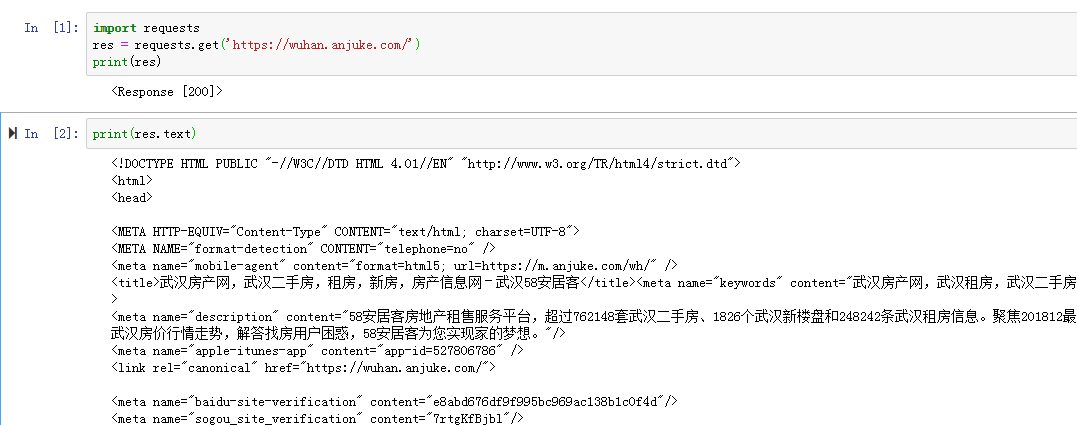

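```python
import requests

res = requests.get('https://wuhan.anjuke.com/')

print(res)
# Prints <Response [200]> when the request succeeds; an error status code
# such as 404 or 400 means the request failed.

print(res.text)
# Part of the output is shown in the figure above.
```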
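The status check described in the comment can also be made explicit, so that a failed request stops the script instead of handing an error page to the parser. This is a minimal sketch, not part of the original tutorial: the helper name `fetch_html` and the 10-second timeout are illustrative assumptions, while `timeout`, `raise_for_status()`, and `apparent_encoding` are standard Requests features.

```python
import requests

def fetch_html(url, headers=None, timeout=10):
    """Hypothetical helper: fetch a page and return its text, raising on HTTP errors."""
    res = requests.get(url, headers=headers, timeout=timeout)  # timeout in seconds
    # raise_for_status() raises requests.HTTPError for 4xx/5xx codes such as 400 or 404
    res.raise_for_status()
    # Re-detect the charset from the response body so Chinese pages decode correctly
    res.encoding = res.apparent_encoding
    return res.text
```

Usage would be `html = fetch_html('https://wuhan.anjuke.com/')`. Without a `timeout`, `requests.get` can wait indefinitely on a server that never answers.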

The BeautifulSoup Library

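BeautifulSoup is a very popular Python module. It makes it easy to parse the pages fetched with Requests: it turns the page source into a Soup document (a parse tree) from which data can be filtered and extracted.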

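```python
import requests
from bs4 import BeautifulSoup

# Send a browser-like User-Agent so the site treats the request as coming from a normal browser
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'
}

res = requests.get('https://wuhan.anjuke.com/', headers=headers)

soup = BeautifulSoup(res.text, 'html.parser')  # parse the returned HTML with Python's built-in parser

print(soup.prettify())  # print the parsed document with indentation
```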
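To see the filtering step without depending on a live site, here is a minimal sketch that parses a small hand-written HTML snippet (the markup, class names, and example.com URLs are hypothetical) and extracts data with `select()`, `find()`, `get_text()`, and `get()`:

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page
html = """
<ul class="houses">
  <li><a href="https://example.com/house/1">Lakeside flat</a> <span class="price">1200</span></li>
  <li><a href="https://example.com/house/2">City centre studio</a> <span class="price">950</span></li>
</ul>
"""

soup = BeautifulSoup(html, 'html.parser')

# CSS selector: every <a> that is a direct child of an <li> inside ul.houses
for a in soup.select('ul.houses > li > a'):
    print(a.get_text(), a.get('href'))

# find() returns the first matching tag (or None); class_ avoids the Python keyword "class"
first_price = soup.find('span', class_='price').get_text()
print(first_price)
```

`select()` always returns a list (possibly empty), while `find()` returns a single tag or `None`, so code that calls `.get_text()` on a `find()` result should be sure the element exists.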

The Lxml Library

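Lxml is a Python binding for libxml2, an XML parsing library written in C. Because the parsing is done in C, lxml is faster than BeautifulSoup with Python's built-in html.parser. BeautifulSoup can also use lxml as its parser, which is what the comprehensive example below does by passing 'lxml'.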
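lxml can also be used on its own, with XPath expressions instead of CSS selectors. A minimal sketch using `etree.HTML()` and `xpath()`; the HTML snippet, its ids and class names, and the example.com URLs are hypothetical:

```python
from lxml import etree

# Hypothetical markup standing in for a downloaded page
html = """
<div id="list">
  <ul>
    <li><a href="https://example.com/house/1">Lakeside flat</a><span class="price">1200</span></li>
    <li><a href="https://example.com/house/2">City centre studio</a><span class="price">950</span></li>
  </ul>
</div>
"""

tree = etree.HTML(html)  # parse with lxml's lenient HTML parser into an element tree

# XPath: text() selects text nodes, @href selects attribute values
titles = tree.xpath('//div[@id="list"]/ul/li/a/text()')
links = tree.xpath('//div[@id="list"]/ul/li/a/@href')
prices = tree.xpath('//span[@class="price"]/text()')

for title, link, price in zip(titles, links, prices):
    print(title, link, price)
```

Each `xpath()` call returns a list, so the three lists are paired up with `zip()`.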

Comprehensive Example (1): Scraping Short-Term Rental Listings in Beijing

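(1) Scrape 13 pages of Beijing short-term rental listings from Xiaozhu Duanzu (小猪短租). Browsing the site by hand shows that the first four pages have these URLs: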

http://bj.xiaozhu.com/

http://bj.xiaozhu.com/search-duanzufang-p2-0/

http://bj.xiaozhu.com/search-duanzufang-p3-0/

http://bj.xiaozhu.com/search-duanzufang-p4-0/

Only the number after "p" changes from page to page, so the URLs of all 13 pages can be built by substituting that number.
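The URL pattern can be captured in a small function. This is a sketch, and the helper name `page_url` is hypothetical. Following the URLs listed above, it returns the bare domain for page 1, whereas the tutorial's own code uses the `p1-0` form for page 1 as well.

```python
BASE = 'http://bj.xiaozhu.com/'

def page_url(page):
    """Hypothetical helper: build the URL of list page `page` (1-based)."""
    if page == 1:
        return BASE  # page 1 is the site root in the URLs listed above
    return f'{BASE}search-duanzufang-p{page}-0/'

urls = [page_url(n) for n in range(1, 14)]  # pages 1 to 13
print(urls[:4])
```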

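(2) The data is scraped from each listing's detail page, so the crawler first collects the links to the detail pages from every list page and then scrapes the data from each of those links.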
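The list-page stage boils down to collecting `href` values. A minimal sketch that reuses the tutorial's selector `#page_list > ul > li > a` on a hypothetical list-page snippet (the example.com URLs are made up). The function name `extract_detail_links` is hypothetical, and the `urljoin` call is an addition that turns relative links into absolute ones in case a page uses them:

```python
from urllib.parse import urljoin
from bs4 import BeautifulSoup

def extract_detail_links(list_html, page_url):
    """Return absolute detail-page URLs found on one list page."""
    soup = BeautifulSoup(list_html, 'html.parser')
    links = []
    for a in soup.select('#page_list > ul > li > a'):
        href = a.get('href')
        if href:  # skip <a> tags that have no href
            links.append(urljoin(page_url, href))
    return links

# Hypothetical list-page markup: one absolute link and one relative link
sample = """
<div id="page_list"><ul>
  <li><a href="https://example.com/room/1001.html"><img src="a.jpg"></a></li>
  <li><a href="/room/1002.html"><img src="b.jpg"></a></li>
</ul></div>
"""
print(extract_detail_links(sample, 'https://example.com/search-p2/'))
```

The second stage then requests each returned URL and parses the detail page.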

(3) The information to scrape is:

title, address, price, host name, host gender, and the link to the host's avatar image
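A detail page describes a single listing, so each of these fields can also be read with `select_one()`, which returns the first match or `None`. The sketch below assumes that. It reuses the tutorial's CSS selectors, but runs them on a hypothetical snippet built to match those selectors, and the real page markup may differ. The helper names `text_of` and `parse_detail` are hypothetical.

```python
from bs4 import BeautifulSoup

def text_of(soup, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    tag = soup.select_one(selector)
    return tag.get_text().strip() if tag else None

def parse_detail(html):
    soup = BeautifulSoup(html, 'html.parser')
    img = soup.select_one('#floatRightBox > div.js_box.clearfix > div.member_pic > a > img')
    sex_icon = soup.select_one('#floatRightBox > div.js_box.clearfix > div.member_pic > div')
    return {
        'title': text_of(soup, 'div.pho_info > h4'),
        'address': text_of(soup, 'span.pr5'),
        'price': text_of(soup, '#pricePart > div.day_l > span'),
        'name': text_of(soup, '#floatRightBox > div.js_box.clearfix > div.w_240 > h6 > a'),
        'img': img.get('src') if img else None,
        'sex_class': sex_icon.get('class') if sex_icon else None,  # class list of the gender icon
    }

# Hypothetical detail-page markup matching the selectors above
sample = """
<div class="pho_info"><h4> Quiet room near the subway </h4><span class="pr5"> Chaoyang, Beijing </span></div>
<div id="pricePart"><div class="day_l"><span>268</span></div></div>
<div id="floatRightBox"><div class="js_box clearfix">
  <div class="member_pic"><a href="#"><img src="https://example.com/avatar.jpg"></a><div class="member_ico1"></div></div>
  <div class="w_240"><h6><a href="#">Host Li</a></h6></div>
</div></div>
"""
print(parse_detail(sample))
```

The tutorial zips six `select()` lists together instead, and `zip()` stops at the shortest list. If any one selector matches nothing, no record is printed at all. Here the fields that were found are kept and the missing ones become `None`. The `sex_class` value can be passed to the tutorial's `judgment_sex()`.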

```python
from bs4 import BeautifulSoup  # the 'lxml' parser used below requires the lxml package
import requests
import time

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'
}

def judgment_sex(class_name):
    """Infer the host's gender from the class list of the gender icon."""
    # BeautifulSoup returns the class attribute as a list, e.g. ['member_ico1']
    if class_name == ['member_ico1']:
        return '女'  # female
    else:
        return '男'  # male

def get_links(url):
    """Collect the detail-page links on one list page and scrape each of them."""
    wb_data = requests.get(url, headers=headers)
    soup = BeautifulSoup(wb_data.text, 'lxml')
    links = soup.select('#page_list > ul > li > a')
    for link in links:
        href = link.get("href")
        get_info(href)

def get_info(url):
    """Scrape title, address, price, and host details from one detail page."""
    wb_data = requests.get(url, headers=headers)
    soup = BeautifulSoup(wb_data.text, 'lxml')
    # These CSS selectors match the page layout at the time of writing
    titles = soup.select('div.pho_info > h4')
    addresses = soup.select('span.pr5')
    prices = soup.select('#pricePart > div.day_l > span')
    imgs = soup.select('#floatRightBox > div.js_box.clearfix > div.member_pic > a > img')
    names = soup.select('#floatRightBox > div.js_box.clearfix > div.w_240 > h6 > a')
    sexs = soup.select('#floatRightBox > div.js_box.clearfix > div.member_pic > div')
    for title, address, price, img, name, sex in zip(titles, addresses, prices, imgs, names, sexs):
        data = {
            'title': title.get_text().strip(),
            'address': address.get_text().strip(),
            'price': price.get_text(),
            'img': img.get("src"),
            'name': name.get_text(),
            'sex': judgment_sex(sex.get("class"))
        }
        print(data)

if __name__ == '__main__':
    # The 13 list pages differ only in the number after "p"
    urls = ['http://bj.xiaozhu.com/search-duanzufang-p{}-0/'.format(number) for number in range(1, 14)]
    for single_url in urls:
        get_links(single_url)
        time.sleep(2)  # pause between list pages to avoid overloading the server
```
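`judgment_sex()` compares against `['member_ico1']` because BeautifulSoup treats `class` as a multi-valued attribute and returns it as a list of class names. The sketch below demonstrates this on a hypothetical snippet; the extra class name `highlight` is made up. It also shows a hypothetical variant, `judgment_sex_safe`, that tests membership instead of list equality. For the second tag, which carries an extra class, the original equality check would return '男' (male). The variant also returns `None` when no icon was found.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second icon carries an extra class name
html = '<div class="member_ico1"></div><div class="member_ico1 highlight"></div>'
soup = BeautifulSoup(html, 'html.parser')
first, second = soup.find_all('div')
print(first.get('class'))   # ['member_ico1']
print(second.get('class'))  # ['member_ico1', 'highlight']

def judgment_sex_safe(class_name):
    """Hypothetical variant of judgment_sex(): membership test instead of list equality."""
    if not class_name:  # no icon found, so the gender is unknown
        return None
    return '女' if 'member_ico1' in class_name else '男'  # female / male

print(judgment_sex_safe(second.get('class')))  # 女
```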