My First Web Crawler Test

Today I mainly cover web crawling, plus a test of the match-prediction program from last time.

1. First, testing last time's program

```python
# -*- coding: utf-8 -*-
"""
Created on Thu May 23 00:41:48 2019
@author: h2446
"""
from random import random


def printIntro():
    print("This program simulates volleyball matches between two teams, A and B")
    print("It needs the ability values of A and B (decimals between 0 and 1)")


def getInputs():
    # float()/int() instead of eval(): safer for user input
    a = float(input("Enter team A's ability (0~1): "))
    b = float(input("Enter team B's ability (0~1): "))
    n = int(input("Number of games to simulate: "))
    return a, b, n


def simNGames(n, probA, probB):
    # Simulate n independent sets and count who wins each one
    winsA, winsB = 0, 0
    for i in range(n):
        scoreA, scoreB = simOneGame(probA, probB)
        if scoreA > scoreB:
            winsA += 1
        else:
            winsB += 1
    return winsA, winsB


def gameOver(a, b):
    # A regular set ends once a team has 25 points and leads by at least 2
    return (a >= 25 or b >= 25) and abs(a - b) >= 2


def simOneGame(probA, probB):
    # Side-out scoring: only the server scores; losing a rally passes the serve
    scoreA, scoreB = 0, 0
    serving = "A"
    while not gameOver(scoreA, scoreB):
        if serving == "A":
            if random() < probA:
                scoreA += 1
            else:
                serving = "B"
        else:
            if random() < probB:
                scoreB += 1
            else:
                serving = "A"
    return scoreA, scoreB


def final(probA, probB):
    # Best-of-five match: up to four regular sets, then a deciding set at 2:2
    winsA, winsB = simNGames1(4, probA, probB)
    printSummary(winsA, winsB)
    if winsA == winsB == 2:
        scoreA, scoreB = simOneGame1(probA, probB)
        if scoreA > scoreB:
            winsA += 1
        else:
            winsB += 1
    finalprintSummary(winsA, winsB)


def simNGames1(n, probA, probB):
    # Play up to n regular sets, stopping as soon as one team has won three
    winsA, winsB = 0, 0
    for i in range(n):
        if winsA == 3 or winsB == 3:
            break
        scoreA, scoreB = simOneGame(probA, probB)
        if scoreA > scoreB:
            winsA += 1
        else:
            winsB += 1
    return winsA, winsB


def simOneGame1(probA, probB):
    # Deciding set: played to 15; the teams switch sides when one reaches 8
    scoreA, scoreB = 0, 0
    serving = "A"
    switched = False
    while not finalGameOver(scoreA, scoreB):
        if serving == "A":
            if random() < probA:
                scoreA += 1
            else:
                serving = "B"
        else:
            if random() < probB:
                scoreB += 1
            else:
                serving = "A"
        # Announce the side switch only once, the first time a team reaches 8
        if not switched and max(scoreA, scoreB) == 8:
            leader = "A" if scoreA > scoreB else "B"
            print("Team {} reached 8 points; the teams switch sides".format(leader))
            switched = True
    return scoreA, scoreB


def finalGameOver(a, b):
    # The deciding set ends once a team has 15 points and leads by at least 2
    return (a >= 15 or b >= 15) and abs(a - b) >= 2


def finalprintSummary(winsA, winsB):
    if winsA + winsB == 5:
        print("Playing the deciding set")
    if winsA > winsB:
        print("Team A wins the final")
    else:
        print("Team B wins the final")


def printSummary(winsA, winsB):
    n = winsA + winsB
    print("Analysis started: {} games simulated".format(n))
    print("Team A won {} games, {:0.1%} of the total".format(winsA, winsA / n))
    print("Team B won {} games, {:0.1%} of the total".format(winsB, winsB / n))


def main():
    printIntro()
    probA, probB, n = getInputs()
    winsA, winsB = simNGames(n, probA, probB)
    printSummary(winsA, winsB)
    final(probA, probB)


try:
    main()
except Exception as e:
    print("Error:", e)
```
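Since this part is about testing, the set-ending rules can also be checked with a few assertions instead of by eye. A minimal sketch, assuming the rules used in the code above (25 points for a regular set, 15 for the deciding set, win by 2); `set_over` is a hypothetical helper that merges `gameOver` and `finalGameOver` behind a `target` parameter:

```python
def set_over(a, b, target=25):
    """Return True once either side has reached `target` points with a lead of 2 or more."""
    return (a >= target or b >= target) and abs(a - b) >= 2


# Regular sets (target 25)
assert set_over(25, 23)        # reached 25 with a 2-point lead
assert not set_over(25, 24)    # only a 1-point lead: play continues
assert set_over(27, 25)        # extended set, still won by 2
assert not set_over(24, 10)    # nobody has reached 25 yet

# Deciding set (target 15)
assert set_over(15, 13, target=15)
assert not set_over(15, 14, target=15)
print("all set-ending checks passed")
```

Boundary cases like 25:24 are exactly where a `>` versus `>=` slip shows up, so they are worth keeping in any test list.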

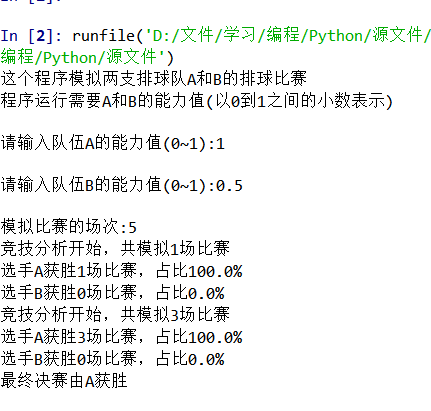

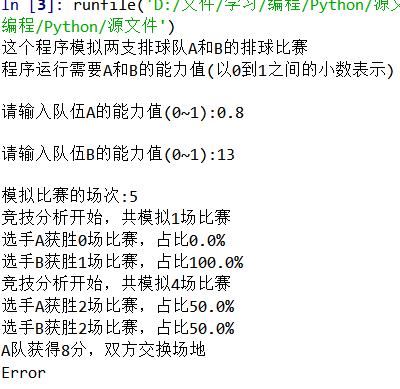

Above are the test runs and their results.
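Because the simulation draws from `random()`, two runs with the same inputs print different numbers, which makes test results hard to compare. A minimal sketch, assuming the same side-out scoring as `simOneGame`: passing a seeded `random.Random` instance makes a run repeatable. The names `sim_set` and `sim_sets` are hypothetical.

```python
import random


def sim_set(prob_a, prob_b, rng, target=25):
    """One set with side-out scoring: only the server scores; losing a rally passes the serve."""
    score_a, score_b, serving = 0, 0, "A"
    while not ((score_a >= target or score_b >= target) and abs(score_a - score_b) >= 2):
        if serving == "A":
            if rng.random() < prob_a:
                score_a += 1
            else:
                serving = "B"
        else:
            if rng.random() < prob_b:
                score_b += 1
            else:
                serving = "A"
    return score_a, score_b


def sim_sets(n, prob_a, prob_b, seed):
    """Simulate n sets from a fixed seed and return (wins_a, wins_b)."""
    rng = random.Random(seed)  # private generator, so the global random state is untouched
    wins_a = 0
    for _ in range(n):
        score_a, score_b = sim_set(prob_a, prob_b, rng)
        if score_a > score_b:
            wins_a += 1
    return wins_a, n - wins_a


# Same seed, same result: the run can be reproduced exactly
print(sim_sets(200, 0.6, 0.5, seed=1))
print(sim_sets(200, 0.6, 0.5, seed=1))
```

Using a separate `Random` object rather than `random.seed()` keeps the seeding local to the test and does not affect other code that uses the module-level `random()`.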

2. Next, putting a web crawler to use

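First, I scraped the Chinese university ranking. The code:

```python
# -*- coding: utf-8 -*-
"""
Created on Wed May 22 16:08:16 2019
@author: h2446
"""
import requests
from bs4 import BeautifulSoup

allUniv = []  # one list of cell strings per university


def getHTMLText(url):
    # Fetch the page; return an empty string if the request fails
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""


def fillUniVList(soup):
    # Every <tr> with <td> cells is one university; rows without <td> are skipped
    data = soup.find_all('tr')
    for tr in data:
        ltd = tr.find_all('td')
        if len(ltd) == 0:
            continue
        singleUniv = []
        for td in ltd:
            singleUniv.append(td.string)
        allUniv.append(singleUniv)


def printUnivList(num):
    # {0} is the fill character: chr(12288), the full-width space, keeps Chinese text aligned
    print("{1:^2}{2:{0}^10}{3:{0}^6}{4:{0}^4}{5:{0}^10}".format(
        chr(12288), "Rank", "University", "Province/City", "Total score", "Training scale"))
    # Slicing avoids an IndexError when fewer than num rows were scraped
    for u in allUniv[:num]:
        print("{:^4}{:^10}{:^5}{:^8}{:^10}".format(u[0], u[1], u[2], u[3], u[6]))


def main(num):
    url = "http://www.zuihaodaxue.cn/zuihaodaxuepaiming2017.html"
    html = getHTMLText(url)
    soup = BeautifulSoup(html, "html.parser")
    fillUniVList(soup)
    printUnivList(num)


main(10)
```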
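`fillUniVList` relies on BeautifulSoup to walk every `<tr>` and collect its `<td>` cells. To see that logic without network access or third-party packages, here is a minimal sketch that swaps in the standard library's `html.parser.HTMLParser` instead of BeautifulSoup; the `TableRows` class and the sample table are made up for illustration and are not taken from the ranking page. The print line pads with `chr(12288)`, the same full-width fill trick `printUnivList` uses for the header.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row; rows without <td> are dropped."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None    # cells of the row currently being parsed
        self._cell = None   # text pieces of the cell currently being parsed

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:  # ignore text outside <td>, e.g. <th> headers
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip rows that had no <td> cells
                self.rows.append(self._row)
            self._row = None


# Hypothetical sample table, not real ranking data
SAMPLE = """
<table>
  <tr><th>Rank</th><th>Name</th></tr>
  <tr><td>1</td><td>甲大学</td></tr>
  <tr><td>2</td><td>乙大学</td></tr>
</table>
"""

parser = TableRows()
parser.feed(SAMPLE)
for rank, name in parser.rows:
    # chr(12288) (full-width space) as the fill character keeps CJK names aligned
    print("{0:^4}{1:{2}^8}".format(rank, name, chr(12288)))
```

A regular ASCII space is half the display width of a Chinese character, which is why padding Chinese names with it tends to leave the columns ragged.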

The output was:

To my surprise, it was completely different from the 2016 ranking we extracted in class.

Unfortunately, my number is 16.

Later I opened that URL in a browser, and it was indeed the 2017 Chinese university ranking.

Then I tried again with the URL we used in class, and to my surprise it worked.
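The difference comes from the URL itself: the year is part of the page name. A minimal sketch, assuming other years follow the same `zuihaodaxuepaiming<year>.html` pattern as the 2017 address in the code (an assumption; the exact URL used in class is not shown here). `ranking_url` is a hypothetical helper.

```python
def ranking_url(year):
    """Build the ranking page URL for a given year (assumes the 2017 naming pattern holds)."""
    return "http://www.zuihaodaxue.cn/zuihaodaxuepaiming{}.html".format(year)


print(ranking_url(2017))  # http://www.zuihaodaxue.cn/zuihaodaxuepaiming2017.html
```

Passing `ranking_url(2016)` to `getHTMLText` would then target the 2016 page, provided it really exists under that name.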

3. Then, crawling the Google website

```python
# -*- coding: utf-8 -*-
"""
Created on Wed May 22 16:08:16 2019
@author: h2446
"""
import requests
from bs4 import BeautifulSoup

alluniv = []


def getHTMLText(url):
    # Fetch the page; return the placeholder "error" if the request fails
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return "error"


def xunhuang(url):
    # Request the page 20 more times (the responses are discarded)
    for i in range(20):
        getHTMLText(url)


def fillunivlist(soup):
    # Same table-row extraction as in the university ranking crawler
    data = soup.find_all('tr')
    for tr in data:
        ltd = tr.find_all('td')
        if len(ltd) == 0:
            continue
        singleuniv = []
        for td in ltd:
            singleuniv.append(td.string)
        alluniv.append(singleuniv)


def printf():
    # Print blank lines to separate the sections of output
    print("\n")
    print("\n")
    print("\n")


def main():
    url = "http://www.google.com"
    html = getHTMLText(url)
    xunhuang(url)
    print(html)
    soup = BeautifulSoup(html, "html.parser")
    fillunivlist(soup)
    print(html)
    printf()
    print(soup.title)
    printf()
    print(soup.head)
    printf()
    print(soup.body)


main()
```
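In the code above, `getHTMLText` returns the string `"error"` on failure, so an unreachable site and a page whose text happens to be "error" look the same, and `xunhuang` fetches the page 20 more times without checking any of the results. A minimal sketch of a clearer alternative, using the standard library's `urllib.request` in place of `requests` (a swapped-in choice so the sketch needs no third-party package). `fetch_text` and `fetch_with_retries` are hypothetical helpers: they retry a few times and hand back the failure reason instead of a placeholder string.

```python
import time
import urllib.error
import urllib.request


def fetch_text(url, timeout=10):
    """Download a page with the standard library and decode it as UTF-8."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def fetch_with_retries(url, attempts=3, delay=1.0, fetch=fetch_text):
    """Try fetch(url) up to `attempts` times.

    Returns (text, None) on success, or (None, reason) after the last failure,
    so a blocked site is reported instead of being mistaken for page content.
    """
    reason = None
    for i in range(attempts):
        try:
            return fetch(url), None
        except OSError as e:  # URLError, HTTPError and timeouts are all OSError subclasses
            reason = "{}: {}".format(type(e).__name__, e)
            if i < attempts - 1:
                time.sleep(delay)  # wait a little before the next attempt
    return None, reason
```

Usage: `text, err = fetch_with_retries("http://www.google.com")`. When the site cannot be reached, `text` is `None` and `err` names the exception, for example a timeout or a connection error. The `fetch` parameter also makes the helper easy to test with a fake function that needs no network.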

但由于谷歌网早些年在中国被封的原因

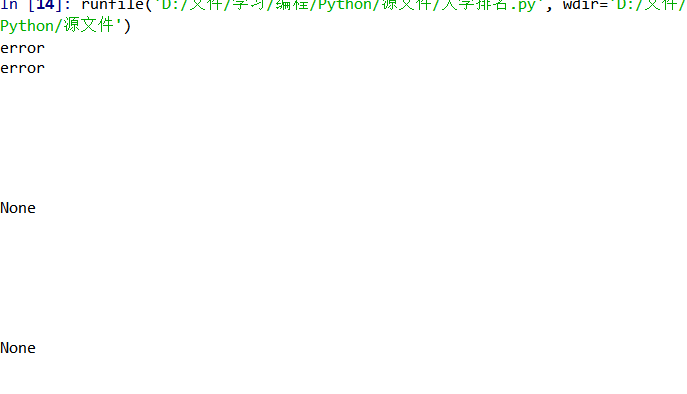

我得到的结果是这样的

Finally, here is a meme that sums up how I feel right now.

Thanks for reading.