centos7.2 部署k8s集群

一、背景

二、使用范围

♦ 测试环境及实验环境

三、安装前说明

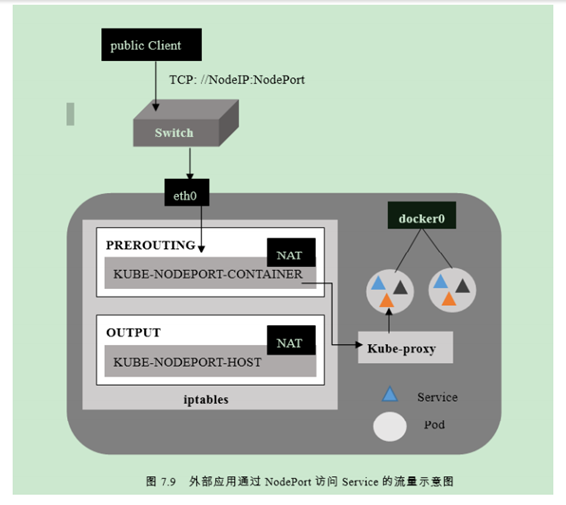

♦ k8s网络基本概念

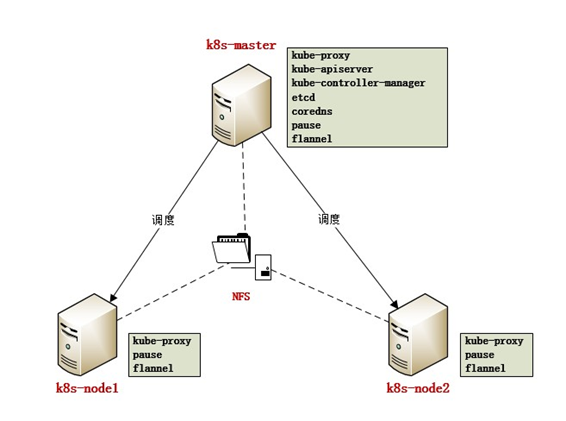

♦ 集群规划图

♦ 软件版本选取

|

Name |

Version |

Description |

|

docker-ce |

18.06.1 |

容器 |

|

kubelet |

1.12.3 |

k8s和docker的中间桥梁,保证容器被启动并持续运行 |

|

kubeadm |

1.12.3 |

集群安装工具 |

|

kubectl |

1.12.3 |

集群管理工具 |

|

kube-apiserver |

1.12.3 |

集群入口,对外提供接口以操作资源 |

|

kube-controller-manager |

1.12.3 |

所有资源的自动化控制中心 |

|

kube-scheduler |

1.12.3 |

负责资源调度 |

|

kube-proxy |

1.12.3 |

实现service通信与负载均衡 |

|

etcd |

3.2.24 |

保存集群网络配置和资源状态信息 |

|

coredns |

1.2.2 |

自动发现service name(相当于集群内部DNS) |

|

pause |

3.1 |

接管pod网络信息 |

|

flannel |

v0.10.0 |

网络插件,负责网络自动划分 |

四、集群安装实例:

1.基础服务:(请在所有节点执行)

♦ 关闭防火墙

[root@k8s-master ~]# systemctl stop firewalld.service

[root@k8s-master ~]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

♦ 关闭selinux

sed -i s'/enforcing/disabled/' /etc/selinux/config

♦ 添加hosts

[root@k8s-master ~]# echo “10.10.14.53 k8s-master

10.10.14.55 k8s-node1

10.10.14.57 k8s-node2” >> /etc/hosts

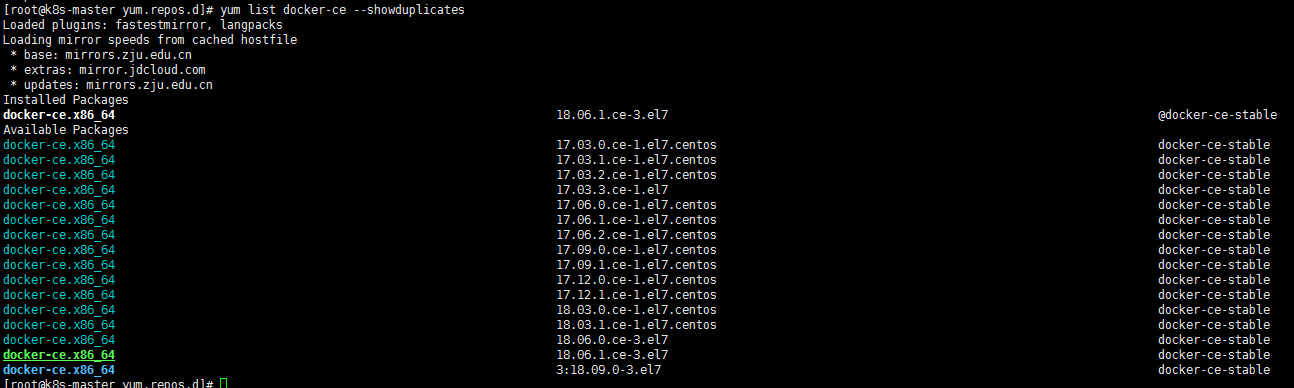

♦ yum 安装docker-ce

注:需先添加docker-ce.repo到/etc/yum.repos.d(见附件)

##查看docker-ce历史版本

yum list docker-ce --showduplicates

##选择需要的docker版本

yum install docker-ce-18.06.1.ce-3.el7 -y

注:kubernetes 1.12暂不支持docker-ce 18.06以上的版本

##添加开机启动并启动服务

[root@k8s-node-1 yum.repos.d]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@k8s-node-1 yum.repos.d]# systemctl start docker

[root@k8s-node-1 yum.repos.d]# docker -v

Docker version 18.06.1-ce, build e68fc7a

♦ 开启IPV4路由转发

[root@k8s-node-1 yum.repos.d]# echo "net.ipv4.ip_forward = 1">>/etc/sysctl.conf

[root@k8s-node-1 yum.repos.d]# sysctl -p

♦ Yum安装kubernetes相关管理工具

注:需先添加kubernetes.repo到/etc/yum.repos.d(见附件)

##查看各插件历史版本

[root@k8s-master yum.repos.d]# yum list kubelet --showduplicates

##选择需要的版本

[root@k8s-master yum.repos.d]# yum install -y kubelet-1.12.3-0 kubeadm-1.12.3-0 kubectl-1.12.3-0 --disableexcludes=kubernetes

##添加开机自启动

[root@k8s-master yum.repos.d]# systemctl enable kubelet && systemctl start kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

2. 初始化master节点:

♦ 拉取kubernetes模块镜像

注:kubernetes是谷歌的产品,因某些不可描述的因素国内无法直接从谷歌下载镜像,故我已将相关模块的镜像上传到私服,从私服下载再docker tag成需要的名字即可。首次登录私服需先上传证书ca.crt到/etc/docker/certs.d/harbor.linshimuye.com

需要将私服服务端的证书放在客服端

[root@k8s-node-1 yum.repos.d]# mkdir -p /etc/docker/certs.d/harbor.linshimuye.com

[root@k8s-node-1 harbor.linshimuye.com]# ll

total 4

-rw-r--r-- 1 root root 2000 Dec 12 20:39 ca.crt

♦ 添加私服的域名至hosts或者修改DNS

[root@k8s-node-1 harbor.linshimuye.com]# echo "10.10.14.56 harbor.linshimuye.com" >> /etc/hosts

登录私服: [root@k8s-node-1 harbor.linshimuye.com]# docker login -u xxxx -p xxxxx harbor.linshimuye.com

拉取K8s需要的镜像:(master节点需要的镜像)

docker pull harbor.linshimuye.com/kubernetes/kube-proxy:v1.12.3

docker pull harbor.linshimuye.com/kubernetes/pause:3.1

docker pull harbor.linshimuye.com/kubernetes/etcd:3.2.24

docker pull harbor.linshimuye.com/kubernetes/kube-apiserver:v1.12.3

docker pull harbor.linshimuye.com/kubernetes/flannel:v0.10.0-amd64

docker pull harbor.linshimuye.com/kubernetes/coredns:1.2.2

docker pull harbor.linshimuye.com/kubernetes/kube-controller-manager:v1.12.3

docker pull harbor.linshimuye.com/kubernetes/kube-scheduler:v1.12.3

修改镜像名称:

修改前:harbor.linshimuye.com/kubernetes/xxxx 修改后: k8s.gcr.io/xxxx

例如: harbor.linshimuye.com/kubernetes/kube-proxy:v1.12.3 k8s.gcr.io/kube-proxy:v1.12.3

harbor.linshimuye.com/kubernetes/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64 (只有这个名字不一样)

docker tag harbor.linshimuye.com/kubernetes/kube-proxy:v1.12.3 k8s.gcr.io/kube-proxy:v1.12.3

docker tag harbor.linshimuye.com/kubernetes/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker tag harbor.linshimuye.com/kubernetes/pause:3.1 k8s.gcr.io/pause:3.1

docker tag harbor.linshimuye.com/kubernetes/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag harbor.linshimuye.com/kubernetes/kube-apiserver:v1.12.3 k8s.gcr.io/kube-apiserver:v1.12.3

docker tag harbor.linshimuye.com/kubernetes/coredns:1.2.2 k8s.gcr.io/coredns:1.2.2

docker tag harbor.linshimuye.com/kubernetes/kube-controller-manager:v1.12.3 k8s.gcr.io/kube-controller-manager:v1.12.3

docker tag harbor.linshimuye.com/kubernetes/kube-scheduler:v1.12.3 k8s.gcr.io/kube-scheduler:v1.12.3

(node节点的镜像)

docker pull harbor.linshimuye.com/kubernetes/kube-proxy:v1.12.3

docker pull harbor.linshimuye.com/kubernetes/flannel:v0.10.0-amd64

docker pull harbor.linshimuye.com/kubernetes/pause:3.1

docker tag harbor.linshimuye.com/kubernetes/kube-proxy:v1.12.3 k8s.gcr.io/kube-proxy:v1.12.3

docker tag harbor.linshimuye.com/kubernetes/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker tag harbor.linshimuye.com/kubernetes/pause:3.1 k8s.gcr.io/pause:3.1

♦ 修改kubeadm配置(每个节点都需要添加)

## kubelet的文件修改后如下

[root@k8s-node-4 ~]# more /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS=--fail-swap-on=false

You have new mail in /var/spool/mail/root

注:此处设置kubernetes不使用swap

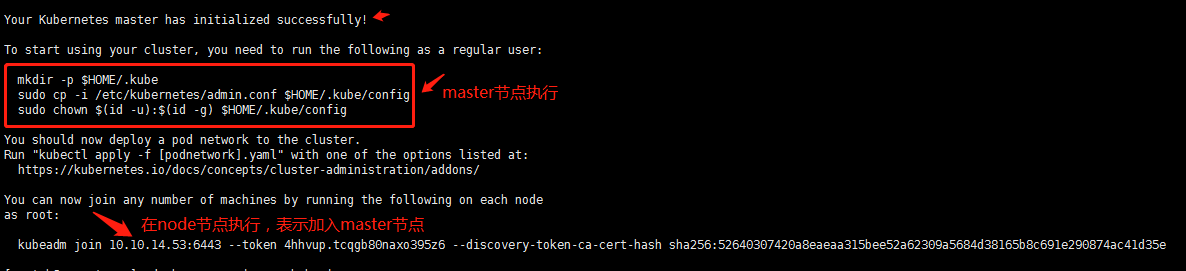

♦ 初始化master节点

[root@k8s-master ~]# kubeadm init --kubernetes-version=1.12.3 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.10.14.53 --ignore-preflight-errors=swap

##如果报错

[init] using Kubernetes version: v1.12.3

[preflight] running pre-flight checks

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

解决办法:[root@k8s-master ~]# echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

[root@k8s-master ~]# kubeadm init --kubernetes-version=1.12.3 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.10.14.53 --ignore-preflight-errors=swap

♦ 把kubeadmin配置文件复制到当前用户的家目录

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

♦ 安装 flannel(master节点)

wget -o https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

##--pod-network-cidr=10.244.0.0/16:划分pod的网段,--apiserver-advertise-address=10.10.14.53:这是master节点的IP

♦ 查看节点信息

[root@k8s-master kubernetes]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 15h v1.12.3

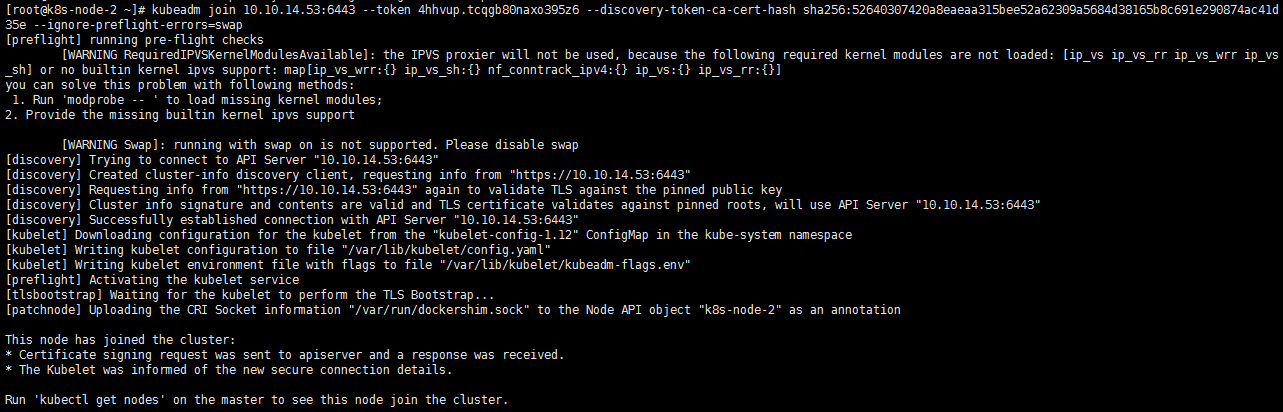

♦ node 节点加入集群

[root@k8s-node-2 ~]# echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

[root@k8s-node-2 ~]# kubeadm join 10.10.14.53:6443 --token 4hhvup.tcqgb80naxo395z6 --discovery-token-ca-cert-hash sha256:52640307420a8eaeaa315bee52a62309a5684d38165b8c691e290874ac41d35e --ignore-preflight-errors=swap

♦ 查看集群和各节点运行情况

[root@k8s-master ~]# kubectl get nodes

♦ 查看集群和各pod的运行情况

[root@k8s-master ~]# kubectl get pod --all-namespaces -o wide

♦ 清除警告和错误,需要清空节点配置

如在配置过程出现问题,可用以下方法清空配置

♦ node节点

## 在master节点清空node配置

[root@k8s-master ~]# kubectl drain k8s-node1 --delete-local-data --force --ignore-daemonsets

[root@k8s-master ~]# kubectl delete node k8s-node-1

## 在node节点清空配置

[root@k8s-node1 ~]# kubeadmin reset

##注意,master节点不要轻易reset,否则就需要重新配置

master节点创建永不过期token

♦ kubeadm token create --ttl 0 --print-join-command

xpt-sit token:

参考地址:https://blog.csdn.net/solaraceboy/article/details/83308339