Download Hadoop

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.3.6/hadoop-3.3.6.tar.gz
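The later steps refer to /opt/hadoop-3.3.6, so the archive presumably gets unpacked under /opt. Below is a minimal sketch of that step, assuming the tarball sits in the current directory. `extract_hadoop` is a hypothetical helper name, not something Hadoop ships.

```shell
# Hypothetical helper: unpack a Hadoop tarball into a destination directory.
# Assumption: the install prefix is /opt, which the later chown step implies.
extract_hadoop() {
  tarball="$1"
  dest="$2"
  if [ ! -f "$tarball" ]; then
    echo "tarball not found: $tarball" >&2
    return 1
  fi
  mkdir -p "$dest" && tar -xzf "$tarball" -C "$dest"
}

# Example (run as root, or as a user with write access to /opt):
# extract_hadoop hadoop-3.3.6.tar.gz /opt
```

The helper stops early when the download is missing, rather than leaving an empty directory behind.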

Create the hduser account:

sudo groupadd hadoop

sudo useradd -g hadoop hduser

sudo chown -R hduser:hadoop /opt/hadoop-3.3.6

sudo passwd hduser

su - hduser

ssh-keygen -t rsa -P ""

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

chmod 0700 ~/.ssh

ssh localhost
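If `ssh localhost` still asks for a password, file permissions are a common cause: with sshd's StrictModes setting enabled, keys are typically ignored when ~/.ssh or authorized_keys is too permissive. The sketch below checks for the two modes set above. It assumes GNU `stat` (as found on CentOS), and the function names are hypothetical.

```shell
# Hypothetical helper: print the octal mode of a path.
# Assumption: GNU coreutils stat (the -c flag is not available on BSD/macOS).
mode_of() {
  stat -c '%a' "$1"
}

# Succeeds only if the directory is 700 and its authorized_keys file is 600.
check_ssh_perms() {
  dir="$1"
  [ "$(mode_of "$dir")" = "700" ] && [ "$(mode_of "$dir/authorized_keys")" = "600" ]
}

# Example: check_ssh_perms ~/.ssh && echo "permissions OK"
```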

Passwordless login to the datanode

# Run on the namenode

scp ~/.ssh/id_rsa.pub hduser@10.111.8.231:~/namenode_id_rsa.pub

# Run on the datanode

cat ~/namenode_id_rsa.pub >> ~/.ssh/authorized_keys
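Running the append more than once leaves duplicate entries in authorized_keys. A small sketch of an idempotent variant follows; `append_key_once` is a hypothetical helper, not an OpenSSH command.

```shell
# Hypothetical helper: append each line of a public key file to an
# authorized_keys file, skipping lines that are already present verbatim.
append_key_once() {
  pubkey_file="$1"
  auth_file="$2"
  touch "$auth_file"
  # The "|| [ -n ... ]" part handles a key file without a trailing newline.
  while IFS= read -r line || [ -n "$line" ]; do
    [ -n "$line" ] || continue
    grep -qxF -- "$line" "$auth_file" || printf '%s\n' "$line" >> "$auth_file"
  done < "$pubkey_file"
}

# Example, on the datanode:
# append_key_once ~/namenode_id_rsa.pub ~/.ssh/authorized_keys
```

Where it is installed, `ssh-copy-id hduser@10.111.8.231` run on the namenode performs the copy and the append in one step.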

Install the JDK

sudo yum install java-1.8.0-openjdk-devel

Configure ~/.bashrc

export HADOOP_HOME=/opt/hadoop-3.3.6

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HDFS_NAMENODE_USER="hduser"

export HDFS_DATANODE_USER="hduser"

export HDFS_SECONDARYNAMENODE_USER="hduser"

export YARN_RESOURCEMANAGER_USER="hduser"

export YARN_NODEMANAGER_USER="hduser"

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.402.b06-1.el7_9.x86_64 # locate with: readlink -f $(which java), then drop the trailing /bin/java (or /jre/bin/java)
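The JAVA_HOME value depends on the exact OpenJDK build installed, so copying the path above verbatim may not work on another machine. The sketch below derives it from the resolved `java` binary path. `java_home_from` is a hypothetical helper, and the two suffixes it strips are an assumption about the usual OpenJDK 8 layout.

```shell
# Hypothetical helper: turn the resolved path of the java binary into a
# JAVA_HOME candidate by stripping a trailing /jre/bin/java or /bin/java.
java_home_from() {
  printf '%s\n' "$1" | sed -e 's:/jre/bin/java$::' -e 's:/bin/java$::'
}

# Example:
# export JAVA_HOME="$(java_home_from "$(readlink -f "$(which java)")")"
```

The start scripts launch daemons over SSH, where ~/.bashrc is not always read. If they report that JAVA_HOME is not set, exporting it in $HADOOP_HOME/etc/hadoop/hadoop-env.sh is the usual remedy.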

Configure the Hadoop files: core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://127.0.0.1:9000</value>

</property>

</configuration>
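With 127.0.0.1 as the address, only processes on the same machine can reach the NameNode. A datanode on a separate host, such as the 10.111.8.231 machine used earlier, would need an address it can actually connect to. The sketch below generates the same file for a chosen host. `write_core_site` is a hypothetical helper, and port 9000 is simply carried over from the configuration above.

```shell
# Hypothetical helper: write a core-site.xml whose fs.defaultFS points at
# the given NameNode host. Port 9000 is taken from the config shown above.
write_core_site() {
  host="$1"
  out="$2"
  cat > "$out" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
</configuration>
EOF
}

# Example (the hostname is a placeholder for the NameNode's reachable address):
# write_core_site namenode-host "$HADOOP_HOME/etc/hadoop/core-site.xml"
```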

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop-3.3.6/hadoop_data/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop-3.3.6/hadoop_data/hdfs/datanode</value>

</property>

</configuration>
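Both storage paths sit under the Hadoop install directory. Creating them up front as hduser is optional, but it surfaces permission problems before the first format or start. This is a minimal sketch, and `make_hdfs_dirs` is a hypothetical helper name.

```shell
# Hypothetical helper: create the NameNode and DataNode storage directories
# under a base directory, matching the layout in hdfs-site.xml above.
make_hdfs_dirs() {
  base="$1"
  mkdir -p "$base/hdfs/namenode" "$base/hdfs/datanode"
}

# Example, run as hduser:
# make_hdfs_dirs /opt/hadoop-3.3.6/hadoop_data
```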

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>VM-16-14-centos</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>0.0.0.0:8088</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>


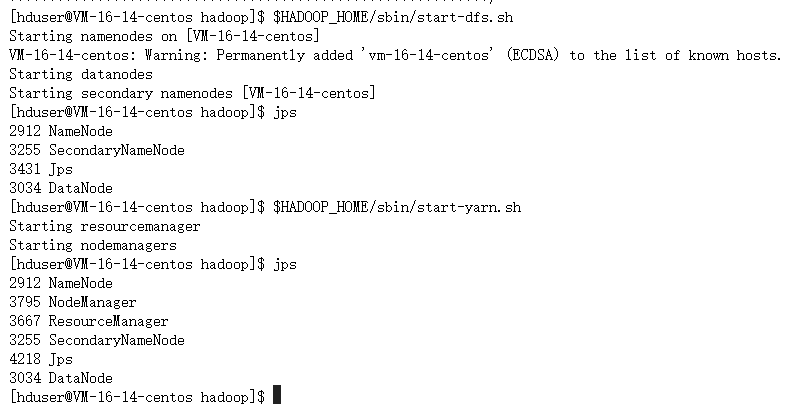

Start HDFS and YARN

$HADOOP_HOME/bin/hdfs namenode -format

$HADOOP_HOME/sbin/start-dfs.sh

$HADOOP_HOME/sbin/start-yarn.sh

Check the running daemons with jps
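On a single machine running everything, `jps` would be expected to list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The sketch below reads `jps` output on standard input and prints whichever of those names are absent. `missing_daemons` is a hypothetical helper, and the list should be adjusted for nodes that run only some of the daemons.

```shell
# Hypothetical helper: read `jps` output ("<pid> <name>" per line) on stdin
# and print each expected daemon that does not appear in it.
missing_daemons() {
  names="$(awk '{print $2}')"
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x matches whole lines, so "NameNode" is not satisfied by "SecondaryNameNode".
    printf '%s\n' "$names" | grep -qx "$d" || echo "$d"
  done
}

# Example: jps | missing_daemons
```

Empty output means all five daemons were found. For any name it prints, the log files under $HADOOP_HOME/logs are the first place to look.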
