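Using dotnet-dump to analyze memory leaks in a Docker container

Tutorial (official documentation): https://docs.microsoft.com/zh-cn/dotnet/core/diagnostics/debug-memory-leak

Environment: Linux, Docker, .NET Core 3.1 SDK or later

Sample code: https://github.com/dotnet/samples/tree/master/core/diagnostics/DiagnosticScenarios

I. Run the official sample

The sample exposes endpoints that leak memory, deadlock threads, and drive CPU usage up, which makes it convenient for learning.

1. Clone the code and build it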

[root@localhost ~]# cd Diagnostic_scenarios_sample_debug_target

[root@localhost Diagnostic_scenarios_sample_debug_target]# dotnet build -c Release

Microsoft (R) Build Engine version 16.7.0+7fb82e5b2 for .NET

...

Build succeeded.

0 Warning(s)

0 Error(s)

Time Elapsed 00:00:05.62

2. Create a Dockerfile and build the image

FROM mcr.microsoft.com/dotnet/core/aspnet:3.1-buster-slim AS base

WORKDIR /app

EXPOSE 80

COPY bin/Release/netcoreapp3.1 .

ENTRYPOINT ["dotnet", "DiagnosticScenarios.dll"]

[root@localhost Diagnostic_scenarios_sample_debug_target]# vim Dockerfile

[root@localhost Diagnostic_scenarios_sample_debug_target]# docker build -t dumptest .

Sending build context to Docker daemon 1.47MB

Step 1/5 : FROM mcr.microsoft.com/dotnet/core/aspnet:3.1-buster-slim AS base

...

Successfully built 928e512d9be4

Successfully tagged dumptest:latest

[root@localhost Diagnostic_scenarios_sample_debug_target]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dumptest latest 928e512d9be4 5 seconds ago 267MB

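3. Start the container

The --privileged=true flag gives the container extended privileges on the host. createdump, used later, needs them in order to attach to the process; granting only the SYS_PTRACE capability with --cap-add=SYS_PTRACE is a narrower alternative.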

[root@localhost Diagnostic_scenarios_sample_debug_target]# docker run --name diagnostic --privileged=true -p 888:80 -d dumptest

a68efbfbfb13117142bac9b0c6fb9f4c5124e4490eaf4850dd17fdc612e2cfda

[root@localhost Diagnostic_scenarios_sample_debug_target]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a68efbfbfb13 dumptest "dotnet DiagnosticSc…" 24 seconds ago Up 23 seconds 0.0.0.0:888->80/tcp diagnostic

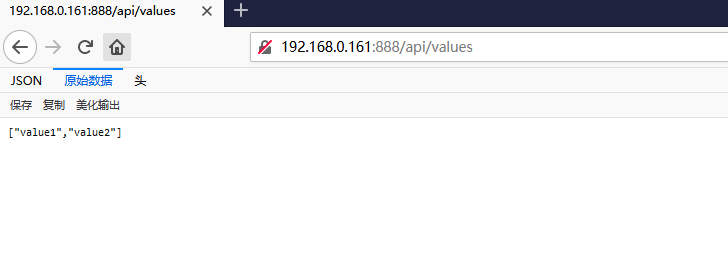

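Check that the service is reachable: http://192.168.0.161:888/api/values

II. Generate a dump file

Problems often show up in production and are hard to reproduce in a test environment. A reasonable approach is therefore to capture a dump file first when the problem occurs, then restore service (a restart fixes most problems, after all 😂), and only afterwards analyze the problem at leisure.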

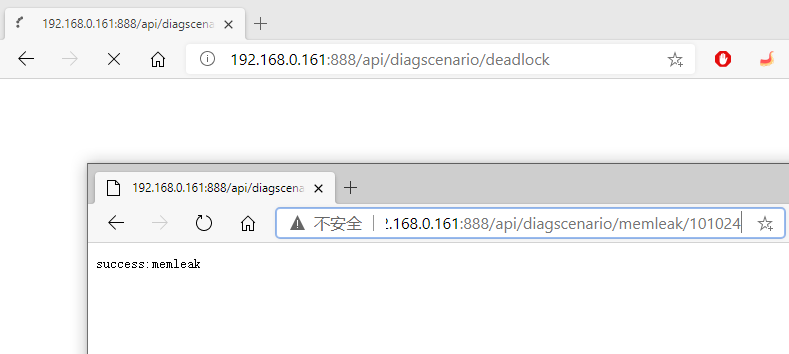

1. Create the problem

Use the endpoints provided by the sample to produce some deadlocked threads and a memory leak.

http://192.168.0.161:888/api/diagscenario/deadlock

http://192.168.0.161:888/api/diagscenario/memleak/101024

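2. Create the dump file

Enter the sample container and use find to locate the createdump tool that ships with the .NET Core runtime.

Run createdump with the process ID as its argument (createdump path, then PID) to create the dump file. If the application is the only process in the container, its PID is normally 1; you can also check the PID with top.

The more memory the container is using, the slower createdump runs. Once it finishes, exit the container and copy coredump.1 to the host, where it can be analyzed at leisure.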

[root@localhost dumpfile]# docker exec -it diagnostic bash

root@6b6f0a7ebe10:/app# find / -name createdump

/usr/share/dotnet/shared/Microsoft.NETCore.App/3.1.10/createdump

root@6b6f0a7ebe10:/app# /usr/share/dotnet/shared/Microsoft.NETCore.App/3.1.10/createdump 1

Writing minidump with heap to file /tmp/coredump.1

Written 2920263680 bytes (712955 pages) to core file

root@6b6f0a7ebe10:/app# exit

[root@localhost dumpfile]# docker cp 6b6f0a7ebe10:/tmp/coredump.1 /mnt/dumpfile/coredump.1

[root@localhost dumpfile]# ls

coredump.1
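The capture steps above can also be driven from the host. The following is a minimal Python sketch, not part of the original walkthrough. The helper names dump_commands and collect_dump are hypothetical; the container name, createdump path, and /mnt/dumpfile directory are taken from the transcript; and the /tmp/coredump.<PID> output name is an assumption based on the createdump message shown above. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess


def dump_commands(container, createdump, pid=1, host_dir="/mnt/dumpfile"):
    """Build the docker commands that create a dump and copy it to the host.

    Assumption: createdump writes to /tmp/coredump.<pid>, as in the transcript.
    """
    core_name = f"coredump.{pid}"
    return [
        # Run createdump inside the container against the target PID.
        ["docker", "exec", container, createdump, str(pid)],
        # Copy the resulting core file out of the container.
        ["docker", "cp", f"{container}:/tmp/{core_name}", f"{host_dir}/{core_name}"],
    ]


def collect_dump(container, createdump, pid=1, host_dir="/mnt/dumpfile", dry_run=True):
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    commands = dump_commands(container, createdump, pid, host_dir)
    for command in commands:
        if dry_run:
            print(shlex.join(command))
        else:
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    collect_dump(
        "diagnostic",
        "/usr/share/dotnet/shared/Microsoft.NETCore.App/3.1.10/createdump",
    )
```

Pass dry_run=False to actually run the commands. The createdump path depends on the runtime version installed in the image, so it is worth confirming it with find first.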

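III. Analyze the dump file

The dotnet-dump tool is installed through the .NET SDK, so if the host already has the SDK you can analyze the dump directly on the host.

If you would rather keep the host clean, pull an SDK image, mcr.microsoft.com/dotnet/core/sdk:3.1, and use it as a throwaway analysis environment.

1. Create a temporary container for the analysis

Mount the directory that holds coredump.1 into the container, or copy the file in yourself with docker cp.

Once the container is running, install the dotnet-dump tool.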

[root@iZwz9883hajros0tZ ~]# docker run --rm -it -v /mnt/dumpfile:/tmp/coredump mcr.microsoft.com/dotnet/core/sdk:3.1

root@6e560e9f5d55:/# cd /tmp/coredump

root@6e560e9f5d55:/tmp/coredump# ls

coredump.1

root@6e560e9f5d55:/tmp/coredump# dotnet tool install -g dotnet-dump

Tools directory '/root/.dotnet/tools' is not currently on the PATH environment variable.

...

export PATH="$PATH:/root/.dotnet/tools"

You can invoke the tool using the following command: dotnet-dump

Tool 'dotnet-dump' (version '5.0.152202') was successfully installed.

root@6e560e9f5d55:/tmp/coredump# export PATH="$PATH:/root/.dotnet/tools"

root@6e560e9f5d55:/tmp/coredump#

2. Analyze the deadlock

Use the clrstack command to print the call stacks.

root@6e560e9f5d55:/tmp/coredump# dotnet-dump analyze coredump.1

Loading core dump: coredump.1 ...

Ready to process analysis commands. Type 'help' to list available commands or 'help [command]' to get detailed help on a command.

Type 'quit' or 'exit' to exit the session.

> clrstack -all

OS Thread Id: 0x311

Child SP IP Call Site

00007FCDE06DF530 00007fd1521e600c [GCFrame: 00007fcde06df530]

00007FCDE06DF620 00007fd1521e600c [GCFrame: 00007fcde06df620]

00007FCDE06DF680 00007fd1521e600c [HelperMethodFrame_1OBJ: 00007fcde06df680] System.Threading.Monitor.ReliableEnter(System.Object, Boolean ByRef)

00007FCDE06DF7D0 00007FD0DB4F765A testwebapi.Controllers.DiagScenarioController.<deadlock>b__3_1()

00007FCDE06DF800 00007FD0D7A25862 System.Threading.ThreadHelper.ThreadStart_Context(System.Object) [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 44]

00007FCDE06DF820 00007FD0DB03686D System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object) [/_/src/System.Private.CoreLib/shared/System/Threading/ExecutionContext.cs @ 201]

00007FCDE06DF870 00007FD0D7A2597E System.Threading.ThreadHelper.ThreadStart() [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 93]

00007FCDE06DFBD0 00007fd1512f849f [GCFrame: 00007fcde06dfbd0]

00007FCDE06DFCA0 00007fd1512f849f [DebuggerU2MCatchHandlerFrame: 00007fcde06dfca0]

OS Thread Id: 0x312

Child SP IP Call Site

00007FCDDFEDE530 00007fd1521e600c [GCFrame: 00007fcddfede530]

00007FCDDFEDE620 00007fd1521e600c [GCFrame: 00007fcddfede620]

00007FCDDFEDE680 00007fd1521e600c [HelperMethodFrame_1OBJ: 00007fcddfede680] System.Threading.Monitor.ReliableEnter(System.Object, Boolean ByRef)

00007FCDDFEDE7D0 00007FD0DB4F765A testwebapi.Controllers.DiagScenarioController.<deadlock>b__3_1()

00007FCDDFEDE800 00007FD0D7A25862 System.Threading.ThreadHelper.ThreadStart_Context(System.Object) [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 44]

00007FCDDFEDE820 00007FD0DB03686D System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object) [/_/src/System.Private.CoreLib/shared/System/Threading/ExecutionContext.cs @ 201]

00007FCDDFEDE870 00007FD0D7A2597E System.Threading.ThreadHelper.ThreadStart() [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 93]

00007FCDDFEDEBD0 00007fd1512f849f [GCFrame: 00007fcddfedebd0]

00007FCDDFEDECA0 00007fd1512f849f [DebuggerU2MCatchHandlerFrame: 00007fcddfedeca0]

...

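Most of the 300-plus threads share one common call stack.

That stack shows the request entering the deadlock method, which in turn calls Monitor.ReliableEnter. In other words, these threads are trying to acquire a lock on an object whose exclusive lock is apparently already held by another thread.

The call stacks alone do not make the cause obvious. Read together with the source code, though, it is easy to see that DeadlockFunc has already put the two objects o1 and o2 into a cross-locked deadlock. The 300 threads started afterwards all try to acquire a lock as well, and end up waiting forever.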

00007FCDDEEDC530 00007fd1521e600c [GCFrame: 00007fcddeedc530]

00007FCDDEEDC620 00007fd1521e600c [GCFrame: 00007fcddeedc620]

00007FCDDEEDC680 00007fd1521e600c [HelperMethodFrame_1OBJ: 00007fcddeedc680] System.Threading.Monitor.ReliableEnter(System.Object, Boolean ByRef)

00007FCDDEEDC7D0 00007FD0DB4F765A testwebapi.Controllers.DiagScenarioController.<deadlock>b__3_1()

00007FCDDEEDC800 00007FD0D7A25862 System.Threading.ThreadHelper.ThreadStart_Context(System.Object) [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 44]

00007FCDDEEDC820 00007FD0DB03686D System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object) [/_/src/System.Private.CoreLib/shared/System/Threading/ExecutionContext.cs @ 201]

00007FCDDEEDC870 00007FD0D7A2597E System.Threading.ThreadHelper.ThreadStart() [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 93]

00007FCDDEEDCBD0 00007fd1512f849f [GCFrame: 00007fcddeedcbd0]

00007FCDDEEDCCA0 00007fd1512f849f [DebuggerU2MCatchHandlerFrame: 00007fcddeedcca0]
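The cross-lock pattern described above can be reproduced in a few lines. This is a Python analog for illustration only, not the sample's C# code: threading.Lock stands in for Monitor, the names cross_lock_demo, o1, and o2 are hypothetical, and a timeout is used so that the demo terminates instead of hanging the way the real threads do.

```python
import threading


def cross_lock_demo(timeout=0.2):
    """Two threads take two locks in opposite order; neither can get its second lock."""
    o1, o2 = threading.Lock(), threading.Lock()
    both_hold_first = threading.Barrier(2)    # both threads own their first lock
    both_tried_second = threading.Barrier(2)  # both threads finished their attempt
    results = {}

    def worker(name, first, second):
        with first:
            both_hold_first.wait()
            # The other thread owns `second`, so this times out.
            # Without the timeout it would block forever (a deadlock).
            acquired = second.acquire(timeout=timeout)
            results[name] = acquired
            both_tried_second.wait()
            if acquired:
                second.release()

    a = threading.Thread(target=worker, args=("A", o1, o2))
    b = threading.Thread(target=worker, args=("B", o2, o1))
    a.start()
    b.start()
    a.join()
    b.join()
    return results


if __name__ == "__main__":
    print(cross_lock_demo())
```

Both entries in the result are False: each thread holds one lock and waits for the other. Acquiring the locks in the same order in every thread is the usual way to avoid this.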

Use the syncblk command to find the threads that actually hold the exclusive locks.

> syncblk

Index SyncBlock MonitorHeld Recursion Owning Thread Info SyncBlock Owner

25 0000000000E59BB8 603 1 00007FCE8C00FB30 1e8 14 00007fcea81ffb98 System.Object

26 0000000000E59C00 3 1 00007FD0C8001FA0 1e9 15 00007fcea81ffbb0 System.Object

-----------------------------

Total 326

Free 307

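The Owning Thread Info column has three sub-columns: the first is the thread address, the second is the operating system thread ID, and the third is the thread index. The thread index can also be obtained with the threads command.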
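To make the columns easier to read, the syncblk rows can be decoded with a small script. This is a hedged Python sketch: parse_syncblk is a hypothetical helper, the sample rows are copied from the output above, and the interpretation of MonitorHeld assumes the usual SOS convention that the owner contributes 1 and each waiting thread contributes 2.

```python
import re

# One data row: index, sync block, MonitorHeld, recursion,
# then thread address, OS thread ID (hex), thread index, owner object, type.
SYNCBLK_ROW = re.compile(
    r"^\s*(?P<index>\d+)\s+(?P<syncblock>[0-9A-Fa-f]+)\s+(?P<held>\d+)\s+"
    r"(?P<recursion>\d+)\s+(?P<thread>[0-9A-Fa-f]+)\s+(?P<os_id>[0-9A-Fa-f]+)\s+"
    r"(?P<thread_index>\d+)\s+(?P<owner>[0-9A-Fa-f]+)\s+(?P<type>\S+)\s*$"
)

SAMPLE = """\
Index SyncBlock MonitorHeld Recursion Owning Thread Info SyncBlock Owner
25 0000000000E59BB8 603 1 00007FCE8C00FB30 1e8 14 00007fcea81ffb98 System.Object
26 0000000000E59C00 3 1 00007FD0C8001FA0 1e9 15 00007fcea81ffbb0 System.Object
"""


def parse_syncblk(text):
    """Return one dict per held sync block; header and summary lines are skipped."""
    rows = []
    for line in text.splitlines():
        match = SYNCBLK_ROW.match(line)
        if not match:
            continue
        held = int(match["held"])
        rows.append({
            "index": int(match["index"]),
            "os_thread_id": int(match["os_id"], 16),   # hex in the output
            "thread_index": int(match["thread_index"]),
            "owned": held % 2 == 1,                     # assumed: owner adds 1
            "waiters": held // 2,                       # assumed: each waiter adds 2
            "type": match["type"],
        })
    return rows


if __name__ == "__main__":
    for row in parse_syncblk(SAMPLE):
        print(row)
```

Under that assumption, the lock owned by OS thread 0x1e8 has 301 waiters and the lock owned by 0x1e9 has 1, which is consistent with 300 blocked request threads plus the two threads blocking each other.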

Use setthread to switch to thread 0x1e8 (thread index 14), then use clrstack to view its call stack.

> setthread 14

> clrstack

OS Thread Id: 0x1e8 (14)

Child SP IP Call Site

00007FCE77FFE500 00007fd1521e600c [GCFrame: 00007fce77ffe500]

00007FCE77FFE5F0 00007fd1521e600c [GCFrame: 00007fce77ffe5f0]

00007FCE77FFE650 00007fd1521e600c [HelperMethodFrame_1OBJ: 00007fce77ffe650] System.Threading.Monitor.Enter(System.Object)

00007FCE77FFE7A0 00007FD0DB4F5D0C testwebapi.Controllers.DiagScenarioController.DeadlockFunc()

00007FCE77FFE7E0 00007FD0DB4F5B27 testwebapi.Controllers.DiagScenarioController.<deadlock>b__3_0()

00007FCE77FFE800 00007FD0D7A25862 System.Threading.ThreadHelper.ThreadStart_Context(System.Object) [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 44]

00007FCE77FFE820 00007FD0DB03686D System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object) [/_/src/System.Private.CoreLib/shared/System/Threading/ExecutionContext.cs @ 201]

00007FCE77FFE870 00007FD0D7A2597E System.Threading.ThreadHelper.ThreadStart() [/_/src/System.Private.CoreLib/src/System/Threading/Thread.CoreCLR.cs @ 93]

00007FCE77FFEBD0 00007fd1512f849f [GCFrame: 00007fce77ffebd0]

00007FCE77FFECA0 00007fd1512f849f [DebuggerU2MCatchHandlerFrame: 00007fce77ffeca0]

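Compared with the 300-plus stacks above, this thread's stack carries extra information: it is inside DiagScenarioController.DeadlockFunc and blocked in Monitor.Enter on another object, while the syncblk output shows that it already owns the exclusive lock on one object.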

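3. Analyze the memory leak

SOS commands: https://docs.microsoft.com/zh-cn/dotnet/core/diagnostics/dotnet-dump#dotnet-dump-analyze

Use dumpheap -stat to view statistics for all managed types currently on the heap. The optional -min [bytes] argument limits the statistics to objects of at least that size.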

root@6e560e9f5d55:/tmp/coredump# dotnet-dump analyze coredump.1

Loading core dump: coredump.1 ...

Ready to process analysis commands. Type 'help' to list available commands or 'help [command]' to get detailed help on a command.

Type 'quit' or 'exit' to exit the session.

> dumpheap -stat

Statistics:

MT Count TotalSize Class Name

00007fd0dc2b3ab0 1 24 System.Collections.Generic.ObjectEqualityComparer`1[[System.Threading.ThreadPoolWorkQueue+WorkStealingQueue, System.Private.CoreLib]]

00007fd0dc29ca28 1 24 System.Collections.Generic.ObjectEqualityComparer`1[[Microsoft.AspNetCore.Mvc.ModelBinding.Metadata.DefaultModelMetadata, Microsoft.AspNetCore.Mvc.Core]]

...

00007fd0d7f85510 482 160864 System.Object[]

0000000000da9d80 19191 7512096 Free

00007fd0dc2b3718 2 8388656 testwebapi.Controllers.Customer[]

00007fd0dadbeb58 1010240 24245760 testwebapi.Controllers.Customer

00007fd0d7f90f90 1012406 95190854 System.String

Most of the objects here are String or Customer instances. The String objects alone take up about 95 MB (roughly 90.8 MiB).
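For a long dumpheap -stat listing, ranking the types by total size helps the large ones stand out. The following Python sketch is one way to do that; parse_dumpheap_stat is a hypothetical helper, and the sample rows are copied from the output above.

```python
SAMPLE = """\
Statistics:
MT Count TotalSize Class Name
00007fd0d7f85510 482 160864 System.Object[]
0000000000da9d80 19191 7512096 Free
00007fd0dc2b3718 2 8388656 testwebapi.Controllers.Customer[]
00007fd0dadbeb58 1010240 24245760 testwebapi.Controllers.Customer
00007fd0d7f90f90 1012406 95190854 System.String
"""


def parse_dumpheap_stat(text):
    """Return (class name, count, total bytes) tuples, largest total first."""
    rows = []
    for line in text.splitlines():
        # Split into at most 4 fields so class names containing spaces stay whole.
        parts = line.split(None, 3)
        if len(parts) != 4:
            continue
        _mt, count, total, name = parts
        if not (count.isdigit() and total.isdigit()):
            continue  # header or other non-data line
        rows.append((name.strip(), int(count), int(total)))
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__":
    for name, count, total in parse_dumpheap_stat(SAMPLE):
        mib = total / 2**20
        average = total / count
        print(f"{name}: {count} objects, {mib:.1f} MiB, {average:.1f} bytes each on average")
```

The average size per object is a quick hint about what the instances look like before drilling into individual addresses with dumpheap -mt.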

Use the method table (MT) address to list the instances of System.String, the type that uses the most memory.

> dumpheap -mt 00007fd0d7f90f90

00007fcfabc376f8 00007fd0d7f90f90 94

00007fcfabc37770 00007fd0d7f90f90 94

00007fcfabc377e8 00007fd0d7f90f90 94

00007fcfabc37860 00007fd0d7f90f90 94

00007fcfabc378d8 00007fd0d7f90f90 94

...

Statistics:

MT Count TotalSize Class Name

00007fd0d7f90f90 1012406 95190854 System.String

Total 1012406 objects

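That is about a million objects, and the ones listed are all 94 bytes. Use the gcroot command to inspect the roots of a few of them, picked at random.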

> gcroot -all 00007fcfabc378d8

HandleTable:

00007FD1508815F8 (pinned handle)

-> 00007FD0A7FFF038 System.Object[]

-> 00007FCEA830A230 testwebapi.Controllers.Processor

-> 00007FCEA830A248 testwebapi.Controllers.CustomerCache

-> 00007FCEA830A260 System.Collections.Generic.List`1[[testwebapi.Controllers.Customer, DiagnosticScenarios]]

-> 00007FD0B821F0A8 testwebapi.Controllers.Customer[]

-> 00007FCFABC378C0 testwebapi.Controllers.Customer

-> 00007FCFABC378D8 System.String

Found 1 roots.

>

In short, the high memory usage comes from Customer objects that are kept alive through CustomerCache and Processor.
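When checking several objects, it can help to reduce each gcroot result to its chain of types. The sketch below is a hypothetical Python helper (gcroot_chain is not part of dotnet-dump); the sample text is copied from the gcroot output above.

```python
SAMPLE = """\
HandleTable:
00007FD1508815F8 (pinned handle)
-> 00007FD0A7FFF038 System.Object[]
-> 00007FCEA830A230 testwebapi.Controllers.Processor
-> 00007FCEA830A248 testwebapi.Controllers.CustomerCache
-> 00007FCEA830A260 System.Collections.Generic.List`1[[testwebapi.Controllers.Customer, DiagnosticScenarios]]
-> 00007FD0B821F0A8 testwebapi.Controllers.Customer[]
-> 00007FCFABC378C0 testwebapi.Controllers.Customer
-> 00007FCFABC378D8 System.String
Found 1 roots.
"""


def gcroot_chain(text):
    """Return (address, type name) pairs for each '->' line, in root-to-object order."""
    chain = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("->"):
            continue
        # Split once so generic type names containing spaces stay whole.
        parts = line[2:].split(None, 1)
        if len(parts) == 2:
            chain.append((parts[0], parts[1]))
    return chain


if __name__ == "__main__":
    print(" -> ".join(type_name for _address, type_name in gcroot_chain(SAMPLE)))
```

If different strings all resolve to the same chain through Processor and CustomerCache, that supports the conclusion that the cache is what keeps them alive.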

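Next post: using perf to analyze CPU usage problems in containers.

References:

Debug a memory leak tutorial | Microsoft Docs https://docs.microsoft.com/zh-cn/dotnet/core/diagnostics/debug-memory-leak

Debugging dump files generated under Docker with dotnet core https://www.cnblogs.com/iamsach/p/10118628.html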