Setting up Hadoop with Docker

1. Build the Hadoop image

- Pull the CentOS image

docker pull centos:6.6

- Start a container from the image

docker run -it --name centos centos:6.6 /bin/bash

- Install the JDK

wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.tar.gz

mkdir /usr/java

tar -zxvf jdk-8u131-linux-x64.tar.gz -C /usr/java

- Set the environment variables

vim /etc/profile

# Append the Java settings at the bottom of the file

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

export JAVA_HOME=/usr/java/jdk1.8.0_131

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

# Reload the profile so the changes take effect

source /etc/profile
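Editing /etc/profile in vim is interactive, which makes the step awkward to repeat. As a minimal sketch, the same three exports could be appended from the shell instead. The `append_once` helper and the `PROFILE` variable are hypothetical names introduced for this example. `PROFILE` defaults to a scratch file so the snippet can be tried safely; inside the container it would be set to /etc/profile.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not there yet.
append_once() {
  line=$1
  file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Assumption: a scratch file by default; use PROFILE=/etc/profile inside the container.
PROFILE=${PROFILE:-$(mktemp)}

# Single quotes keep $JAVA_HOME literal so it is expanded when the profile is sourced.
append_once 'export JAVA_HOME=/usr/java/jdk1.8.0_131' "$PROFILE"
append_once 'export PATH=$JAVA_HOME/bin:$PATH' "$PROFILE"
append_once 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' "$PROFILE"

cat "$PROFILE"
```

Because each line is appended only when it is missing, running the snippet a second time leaves the file unchanged.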

- Install SSH

yum -y install openssh-server

yum -y install openssh-clients

- Configure passwordless SSH login

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
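With its default StrictModes setting, sshd ignores authorized_keys when the file or its directory is writable by other users, so key-based login can fail even though the key was added. The sketch below tightens the permissions. `SSH_DIR` is a hypothetical variable that defaults to a scratch directory; inside the container it would be ~/.ssh. sshd also has to be running in each container for the Hadoop start scripts to log in, for example via `service sshd start` on CentOS 6.

```shell
# SSH_DIR is an assumption for this sketch; point it at ~/.ssh inside the container.
SSH_DIR=${SSH_DIR:-$(mktemp -d)}

mkdir -p "$SSH_DIR"
touch "$SSH_DIR/authorized_keys"        # creates the file if it is missing

chmod 700 "$SSH_DIR"                    # only the owner may enter the directory
chmod 600 "$SSH_DIR/authorized_keys"    # only the owner may read or write the key list

ls -ld "$SSH_DIR" "$SSH_DIR/authorized_keys"
```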

- Disable SELinux

setenforce 0

- Download Hadoop

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.9.0/hadoop-2.9.0.tar.gz

mkdir /usr/local/hadoop

tar -zxvf hadoop-2.9.0.tar.gz -C /usr/local/hadoop

- Configure the environment variables

vim /etc/profile

# Append the Hadoop settings at the bottom of the file

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.9.0

export PATH=$PATH:$HADOOP_HOME/bin

- Edit the Hadoop configuration

cd /usr/local/hadoop/hadoop-2.9.0/etc/hadoop/

At the top of both hadoop-env.sh and yarn-env.sh, add the JAVA_HOME environment variable (export JAVA_HOME=/usr/java/jdk1.8.0_131).
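One possible way to script this step is to prepend the export line to both files. This is only a sketch: `CONF_DIR` and `JAVA_LINE` are hypothetical names, and `CONF_DIR` defaults to a scratch directory rather than the real /usr/local/hadoop/hadoop-2.9.0/etc/hadoop.

```shell
# Assumption: a scratch directory by default; set CONF_DIR to the Hadoop
# etc/hadoop directory inside the container.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}
JAVA_LINE='export JAVA_HOME=/usr/java/jdk1.8.0_131'

for f in hadoop-env.sh yarn-env.sh; do
  target="$CONF_DIR/$f"
  touch "$target"    # creates an empty file when the target does not exist yet
  # Skip files whose first line is already the JAVA_HOME export.
  if [ "$(head -n 1 "$target")" != "$JAVA_LINE" ]; then
    { printf '%s\n' "$JAVA_LINE"; cat "$target"; } > "$target.new"
    cat "$target.new" > "$target"    # overwrite in place to keep the file's permissions
    rm "$target.new"
  fi
done

head -n 1 "$CONF_DIR/hadoop-env.sh" "$CONF_DIR/yarn-env.sh"
```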

- Edit core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/tsk/hadoop-2.9.0/tmp</value>

</property>

</configuration>

- Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/tsk/hadoop-2.9.0/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/tsk/hadoop-2.9.0/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
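core-site.xml and hdfs-site.xml point at directories under /home/tsk/hadoop-2.9.0, which none of the earlier steps create. Hadoop may create some of them on its own, but creating them up front tends to surface permission problems early. A minimal sketch, where `DATA_ROOT` is a hypothetical variable that defaults to a scratch directory:

```shell
# Assumption: inside the container DATA_ROOT would be /home/tsk/hadoop-2.9.0,
# matching the paths in the XML above.
DATA_ROOT=${DATA_ROOT:-$(mktemp -d)}

# tmp      -> hadoop.tmp.dir
# dfs/name -> dfs.namenode.name.dir
# dfs/data -> dfs.datanode.data.dir
for d in tmp dfs/name dfs/data; do
  mkdir -p "$DATA_ROOT/$d"
done

find "$DATA_ROOT" -type d | sort
```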

- Edit mapred-site.xml (in Hadoop 2.9.0, copy it from mapred-site.xml.template first if it does not exist)

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

- Edit yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>1024</value>

</property>

</configuration>

- Configure the slaves file as needed

vim slaves
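The slaves file lists one worker hostname per line, and the start scripts log in to each listed host over SSH. As a sketch, it could be written without an editor. `slave1` matches the container hostname used later in this post; `slave2` is a hypothetical second worker, added here because dfs.replication is set to 2 and blocks can only be fully replicated with at least two DataNodes. `CONF_DIR` is a hypothetical variable that defaults to a scratch directory.

```shell
# Assumption: set CONF_DIR to /usr/local/hadoop/hadoop-2.9.0/etc/hadoop in the container.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

# One worker hostname per line; slave2 is hypothetical.
printf '%s\n' slave1 slave2 > "$CONF_DIR/slaves"

cat "$CONF_DIR/slaves"
```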

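- Run a quick test of the native library

ldd /usr/local/hadoop/hadoop-2.9.0/lib/native/libhadoop.so.1.0.0

- The output reports that GLIBC_2.14 is required. The CentOS 6 repositories only go up to glibc 2.12, so glibc 2.14 has to be built and installed manually with make.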

cd /usr/local/

wget http://ftp.gnu.org/gnu/glibc/glibc-2.14.tar.gz

tar zxvf glibc-2.14.tar.gz

cd glibc-2.14

mkdir build

cd build

../configure --prefix=/usr/local/glibc-2.14

make

make install

ln -sf /usr/local/glibc-2.14/lib/libc-2.14.so /lib64/libc.so.6
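To see which glibc version the system reports, before or after the build, a small check along these lines may help. It is a sketch that assumes GNU `sort` with the `-V` (version sort) option; `version_ge` is a hypothetical helper name.

```shell
# version_ge A B succeeds when version A is greater than or equal to version B.
# Plain string comparison would rank 2.9 above 2.14, so version sort is used.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}

required=2.14
# On glibc systems, getconf GNU_LIBC_VERSION prints a line such as "glibc 2.12".
installed=$(getconf GNU_LIBC_VERSION 2>/dev/null | awk '{print $2}' || true)

if [ -z "$installed" ]; then
  echo "could not determine the glibc version"
elif version_ge "$installed" "$required"; then
  echo "glibc $installed satisfies GLIBC_$required"
else
  echo "glibc $installed is older than $required"
fi
```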

- Exit the container and save it as an image

exit

docker commit centos centos/hadoop

2. Start the containers

- Look up the master's IP address (run on the Docker host while the master container is running)

docker inspect --format='{{.NetworkSettings.IPAddress}}' master

- Start the containers

docker stop master

docker rm master

docker run -it -p 50070:50070 -p 19888:19888 -p 8088:8088 -h master --name master centos/hadoop /bin/bash

vim /etc/hosts # add the slave's IP address

docker run -it -h slave1 --name slave1 centos/hadoop /bin/bash

vim /etc/hosts # add the master's IP address
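The hosts entries could also be added from the shell rather than in vim. In the sketch below, `add_host` and `HOSTS_FILE` are hypothetical names and both IP addresses are made up for illustration; the real ones come from `docker inspect` on each container. `HOSTS_FILE` defaults to a scratch file and would be /etc/hosts inside a container. Docker regenerates /etc/hosts when a container restarts, so the entries would need to be added again after a restart.

```shell
# Assumption: a scratch file by default; use HOSTS_FILE=/etc/hosts inside a container.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}

# Hypothetical helper: append "ip<TAB>name" unless the hostname already has an entry.
add_host() {
  ip=$1
  name=$2
  grep -qE "[[:space:]]$name\$" "$HOSTS_FILE" || printf '%s\t%s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# Hypothetical addresses, shown only to illustrate the file format.
add_host 172.17.0.2 master
add_host 172.17.0.3 slave1

cat "$HOSTS_FILE"
```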

- Run on the master

# Format HDFS before the first start

hadoop namenode -format

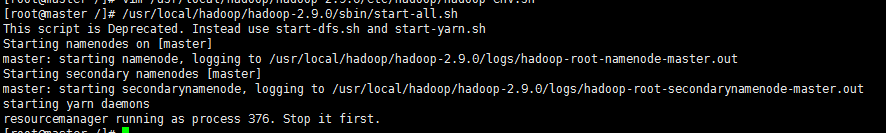

/usr/local/hadoop/hadoop-2.9.0/sbin/start-all.sh
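After start-all.sh returns, `jps` (shipped with the JDK) lists the running Java processes. With the configuration above, one would expect NameNode, SecondaryNameNode and ResourceManager on the master, and DataNode and NodeManager on each slave. The `check_daemons` function below is a hypothetical helper that reports which expected names are absent from a given `jps` output; it is a sketch, not part of Hadoop.

```shell
# check_daemons OUTPUT NAME...
# Prints "missing: NAME" for every expected daemon not found in OUTPUT
# and returns non-zero if any is missing.
check_daemons() {
  out=$1
  shift
  missing=0
  for d in "$@"; do
    # -w matches whole words, so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$out" | grep -qw -- "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Only runs where jps is available, i.e. inside the master container.
if command -v jps >/dev/null 2>&1; then
  check_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager \
    || echo "some master daemons are not running"
fi
```

On a slave, the same function would be called with DataNode and NodeManager instead.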

http://www.tianshangkun.com/2017/06/13/Centos下docker搭建Hadoop集群/
