MapReduce WordCount Hands-On

I. Prerequisites

1. Create a Maven project

2. Add the dependencies

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
            <version>2.8.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.7.7</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.7</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.7.7</version>
        </dependency>
    </dependencies>

3. Create log4j.properties in the src/main/resources directory

    log4j.rootLogger=INFO, stdout
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
    log4j.appender.logfile=org.apache.log4j.FileAppender
    log4j.appender.logfile.File=target/spring.log
    log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
    log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

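II. Mapper

1. Rules

a. Extend Mapper

b. Override the map() method; this is where the business logic goes

c. The Mapper's input is a key/value pair (here: the line's byte offset as LongWritable and the line's text as Text)

d. The Mapper's output is a key/value pair (here: the word as Text and the count 1 as IntWritable)

e. map() is called once per input key/value pair, that is, once per line of the input with the default text input format

2. Create the class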

    package com.wt;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        // map() runs once per input record, so the output key and value are
        // created once as fields and reused to reduce memory consumption.
        private final Text k = new Text();
        private final IntWritable v = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // 1. Get the current line
            String line = value.toString();

            // 2. Split the line on whitespace
            String[] words = line.split("\\s+");

            // 3. Emit (word, 1) for each word
            for (String word : words) {
                // split() yields an empty token for blank lines or leading whitespace
                if (word.isEmpty()) {
                    continue;
                }
                k.set(word);
                context.write(k, v);
            }
        }
    }
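To see what the map step produces without starting a cluster, the tokenizing logic can be tried in plain Java. The following is only a sketch: the class `MapSketch` and its static `map` method are hypothetical stand-ins that are not part of Hadoop, and the returned list stands in for the calls to `context.write`.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class MapSketch {
    // Hypothetical stand-in for map(): turns one input line into (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.split("\\s+")) {
            if (word.isEmpty()) {
                continue; // blank lines and leading whitespace produce an empty token
            }
            out.add(new SimpleEntry<>(word, 1));
        }
        return out;
    }

    public static void main(String[] args) {
        // Each input line corresponds to one call of map().
        for (String line : new String[] {"hello world", "hello hadoop"}) {
            System.out.println(line + " -> " + map(line));
        }
    }
}
```

Running `main` prints `hello world -> [hello=1, world=1]` and `hello hadoop -> [hello=1, hadoop=1]`. The word `hello` is emitted twice with the value 1; adding those values up is left to the reduce step.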

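III. Reducer

1. Rules

a. Extend Reducer

b. Override the reduce() method; this is where the business logic goes

c. The Reducer's input types are the Mapper's output types

d. reduce() is called once per distinct key and receives all values that belong to that key

2. Create the class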

    package com.wt;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        // Reused across reduce() calls to save memory
        private final IntWritable v = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // 1. Sum all counts for this key
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }

            // 2. Emit (word, total)
            v.set(sum);
            context.write(key, v);
        }
    }
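The grouping that happens before reduce() is called can be imitated in plain Java. This is a rough sketch under assumptions: `ReduceSketch`, `shuffle` and `reduce` are hypothetical names, not Hadoop APIs, and the `TreeMap` only approximates the fact that a reducer receives its keys in sorted order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ReduceSketch {
    // Hypothetical stand-in for the shuffle: collects one 1 per occurrence of each word.
    static Map<String, List<Integer>> shuffle(List<String> words) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String w : words) {
            grouped.computeIfAbsent(w, k -> new ArrayList<>()).add(1);
        }
        return grouped;
    }

    // Hypothetical stand-in for reduce(): called once per key with all of that key's values.
    static int reduce(String key, Iterable<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> grouped = shuffle(Arrays.asList("hello", "world", "hello"));
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            System.out.println(e.getKey() + "\t" + reduce(e.getKey(), e.getValue()));
        }
    }
}
```

With the three sample words, `reduce` runs twice (once for `hello`, once for `world`) and the program prints `hello` with 2 and `world` with 1, separated by a tab.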

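IV. Driver (rarely needs to change)

1. Rules

1) Get the configuration and create the job

2) Set the jar to load

3) Set the map and reduce classes

4) Set the map output key/value types

5) Set the final output key/value types

6) Set the input and output paths

7) Submit the job

2. Create the class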

    package com.wt;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class WordCountDriver {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            // 1. Get the configuration and create the job
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // 2. Set the jar to load
            job.setJarByClass(WordCountDriver.class);

            // 3. Set the map and reduce classes
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            // 4. Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // 5. Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // 6. Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7. Submit the job and wait for it to finish
            boolean wait = job.waitForCompletion(true);
            System.exit(wait ? 0 : 1);
        }
    }
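The driver reads args[0] and args[1] directly, so starting it with fewer than two arguments fails with an ArrayIndexOutOfBoundsException. One possible guard, shown only as a sketch, is a small check before the paths are used. The class `DriverArgs` and its `parse` method are hypothetical helpers and not part of the original driver or of Hadoop.

```java
class DriverArgs {
    // Hypothetical helper: returns {inputPath, outputPath} or fails with a usage message.
    static String[] parse(String[] args) {
        if (args == null || args.length != 2) {
            throw new IllegalArgumentException(
                    "Usage: WordCountDriver <input path> <output path>");
        }
        return new String[] {args[0], args[1]};
    }

    public static void main(String[] args) {
        String[] paths = parse(new String[] {"/usr/input", "/usr/output"});
        System.out.println("input=" + paths[0] + ", output=" + paths[1]);
    }
}
```

In the driver, the two returned strings would be passed to `new Path(...)` in place of args[0] and args[1].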

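V. Run on a Hadoop cluster

1. Export the jar

2. Upload the jar to a node (server)

3. Create /usr/input on the cluster's HDFS and upload the text file to that path

4. Run the command (the output directory /usr/output must not exist yet, otherwise the job fails)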

hadoop jar wc.jar com.wt.WordCountDriver /usr/input /usr/output

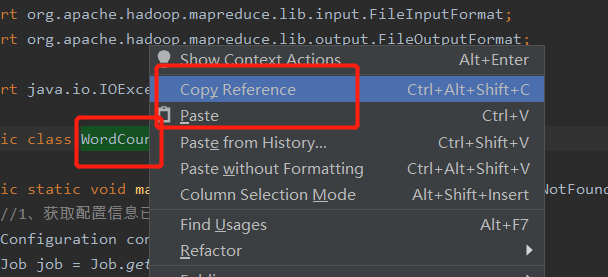

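com.wt.WordCountDriver is the fully qualified name of the class that contains the main method.

How to obtain it: join the package name declared at the top of the class (com.wt) and the class name (WordCountDriver) with a dot.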
