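OpenStack Queens in Practice

Introduction to OpenStack

OpenStack is a free, open-source software project released under the Apache License. It was jointly developed and launched by NASA (the US National Aeronautics and Space Administration) and Rackspace.

OpenStack is an open-source cloud computing management platform made up of several major components that work together. It supports almost every type of cloud environment, and the project aims to provide a cloud management platform that is simple to implement, massively scalable, feature-rich, and built on unified standards. OpenStack delivers an Infrastructure as a Service (IaaS) solution through a set of complementary services, each of which exposes an API for integration.

1. Environment preparation

Servers: two

OS: CentOS 7.6.1810

Disable the firewall and SELinux, and apply the usual baseline tuning

Enable hardware virtualization support

1.1 hosts entries and hostnames (both nodes)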

echo "172.16.1.205 openstack-node1" >>/etc/hosts

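echo "172.16.1.205 openstack-node2" >>/etc/hosts   # use openstack-node2's own address here; it must differ from openstack-node1's

Both hosts need Internet access.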
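Running the echo commands above twice leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent alternative follows; the function name add_host_entry and its optional third argument (a file path, useful for dry runs) are illustrative and not part of the original steps.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already mapped there.
# The third argument defaults to /etc/hosts; pass another path for a dry run.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole whitespace-delimited field on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 172.16.1.205 openstack-node1` can be run on both nodes any number of times without growing the file.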

1.2 Install the Queens repository, the OpenStack client, and openstack-selinux (both nodes)

yum install centos-release-openstack-queens -y

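yum install https://rdoproject.org/repos/rdo-release.rpm -y   # RHEL only; on CentOS the centos-release-openstack-queens package above already enables the repository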

yum install python-openstackclient openstack-selinux -y

1.3 Time service

On openstack-node1:

yum install chrony -y

vim /etc/chrony.conf

# networks allowed to synchronize from this server

allow 172.16.1.0/24

systemctl restart chronyd

systemctl enable chronyd

On openstack-node2:

yum install chrony -y

vim /etc/chrony.conf

# synchronize time from node1

server openstack-node1 iburst

systemctl restart chronyd

systemctl enable chronyd

1.4 Database

On openstack-node1:

yum install mariadb mariadb-server python2-PyMySQL -y

vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = openstack-node1

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

systemctl enable mariadb.service

systemctl start mariadb.service

mysql_secure_installation   # secure the MariaDB installation: set a root password, then answer y to every prompt

1.5 Message queue

On openstack-node1:

yum install rabbitmq-server -y

systemctl enable rabbitmq-server.service

systemctl start rabbitmq-server.service

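Add the openstack user and grant it permissions

rabbitmqctl add_user openstack openstack   # user name openstack, password openstack

rabbitmqctl set_permissions openstack ".*" ".*" ".*"   # grant configure, write, and read permissions on everything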

1.6 Cache

On openstack-node1:

yum install memcached python-memcached -y

vi /etc/sysconfig/memcached

OPTIONS="-l 127.0.0.1,::1,openstack-node1"

systemctl enable memcached.service

systemctl restart memcached.service

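1.7 etcd

OpenStack services can use etcd, a distributed and reliable key-value store, for distributed key locking, storing configuration, tracking service liveness, and other scenarios.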

yum install etcd -y

vim /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://openstack-node1:2380"

ETCD_LISTEN_CLIENT_URLS="http://openstack-node1:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://openstack-node1:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://openstack-node1:2379"

ETCD_INITIAL_CLUSTER="controller=http://openstack-node1:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl enable etcd

systemctl start etcd

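If etcd fails to start with these settings, use the node's IP address in place of the hostname; etcd typically expects IP addresses in its listen URLs

2. Keystone identity service

Install on openstack-node1

Terminology

Tenant: a collection or group of accessible resources across the services. It is a container used to organize and isolate resources, or to identify objects.

User: a digital representation of a person, system, or service that uses the OpenStack cloud. The Identity service validates that incoming requests are made by the user who claims to be making them.

A tenant can have multiple users

A user can belong to one or more tenants

A user's permissions on a tenant and its operations are determined by the role the user holds in that tenant

Project: the same thing as a tenant

Token: the credential Keystone issues after authentication, presented with each later API request

Role: a set of permissions. A role represents the resource permissions granted to a group of users, for example on virtual machines in Nova or images in Glance

Service: an OpenStack service. A service can verify whether the current user is allowed to access a resource, but when a user tries to reach a service within a tenant, the user must first know that the service exists and how to reach it

Endpoint: the access point of a service. To use a service you must know its endpoint; each endpoint URL corresponds to the access address of one service instance

2.1 Create the database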

2.1 创建数据库

mysql -u root -p

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'keystone';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'keystone';

2.2 Create the databases for the other services at the same time

glance

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'glance';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glance';

nova

CREATE DATABASE nova_api;CREATE DATABASE nova;CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova';

neutron

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron';

cinder

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'cinder';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder';
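The statements in 2.1 and 2.2 all follow one pattern: a database, plus two grants for a user at 'localhost' and at '%'. The sketch below generates them from a list instead of typing each by hand. The function names are illustrative, the passwords are the lab values used above (each equal to the user name), and IF NOT EXISTS is an addition so the output can be replayed.

```shell
# Print the CREATE DATABASE and GRANT statements for one service database.
# Arguments: database name, database user, password.
service_db_sql() {
    local db="$1" user="$2" pass="$3" host
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}

# Print the SQL for every database this guide uses.
all_service_db_sql() {
    service_db_sql keystone   keystone keystone
    service_db_sql glance     glance   glance
    service_db_sql nova_api   nova     nova
    service_db_sql nova       nova     nova
    service_db_sql nova_cell0 nova     nova
    service_db_sql neutron    neutron  neutron
    service_db_sql cinder     cinder   cinder
}
```

The output can be reviewed first and then piped into the client, for example `all_service_db_sql | mysql -u root -p`.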

2.3 Install and configure Keystone

yum install openstack-keystone httpd mod_wsgi -y

vim /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:keystone@openstack-node1/keystone

[token]

provider = fernet

2.4 Populate the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

2.5 Register the service

Keystone listens on port 5000

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# user admin, password admin

keystone-manage bootstrap --bootstrap-password admin \

--bootstrap-admin-url http://openstack-node1:5000/v3/ \

--bootstrap-internal-url http://openstack-node1:5000/v3/ \

--bootstrap-public-url http://openstack-node1:5000/v3/ \

--bootstrap-region-id RegionOne

2.6 Set the environment variables

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-node1:5000/v3

export OS_IDENTITY_API_VERSION=3

2.7 Create the projects, user, and role

openstack project create --domain default \

--description "Service Project" service

openstack project create --domain default \

--description "Demo Project" demo

openstack user create --domain default \

--password-prompt demo

openstack role create user

openstack role add --project demo --user demo user

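2.8 Configure httpd (Keystone is served by httpd, so finish this step before running the openstack commands in 2.7)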

vim /etc/httpd/conf/httpd.conf

ServerName openstack-node1

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl enable httpd.service

systemctl start httpd.service

2.9 Verify

unset OS_AUTH_URL OS_PASSWORD

openstack --os-auth-url http://openstack-node1:35357/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

Password: admin

openstack --os-auth-url http://openstack-node1:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name demo --os-username demo token issue

Password: demo

2.10 Script files

Script file admin-keystone.sh

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-node1:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Script file demo-keystone.sh

export OS_USERNAME=demo

export OS_PASSWORD=demo

export OS_PROJECT_NAME=demo

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-node1:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
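The two script files differ only in user name, password, and project. A small helper can write both, as sketched below; write_openrc is a hypothetical name, and the values are written unquoted, which is fine for the simple lab passwords used here but not for passwords containing shell metacharacters.

```shell
# Write a credentials file for one user/project pair.
# Arguments: output path, user name, password, project name.
write_openrc() {
    local path="$1" user="$2" pass="$3" project="$4"
    cat > "$path" <<EOF
export OS_USERNAME=$user
export OS_PASSWORD=$pass
export OS_PROJECT_NAME=$project
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://openstack-node1:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    # The file holds a plaintext password, so restrict it to the owner.
    chmod 600 "$path"
}
```

For example, `write_openrc admin-keystone.sh admin admin admin` and `write_openrc demo-keystone.sh demo demo demo` reproduce the two files above.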

3. Glance image service

3.1 Install and configure the service

yum install openstack-glance -y

vim /etc/glance/glance-api.conf

[database]

# ...

connection = mysql+pymysql://glance:glance@openstack-node1/glance

[keystone_authtoken]

# ...

auth_uri = http://openstack-node1:5000

auth_url = http://openstack-node1:5000

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

[paste_deploy]

# ...

flavor = keystone

[glance_store]

# ...

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

vim /etc/glance/glance-registry.conf

[database]

# ...

connection = mysql+pymysql://glance:glance@openstack-node1/glance

[keystone_authtoken]

# ...

auth_uri = http://openstack-node1:5000

auth_url = http://openstack-node1:5000

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

[paste_deploy]

# ...

flavor = keystone

su -s /bin/sh -c "glance-manage db_sync" glance

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

. admin-keystone.sh

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

openstack image create "cirros" \

--file cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

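3.2 Register the service (the glance user and endpoints must exist before the services in 3.1 can authenticate and before the image upload will work)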

openstack user create --domain default --password-prompt glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://openstack-node1:9292

openstack endpoint create --region RegionOne image internal http://openstack-node1:9292

openstack endpoint create --region RegionOne image admin http://openstack-node1:9292

3.3 Verify

openstack image list

4. Nova compute service - controller node

On openstack-node1:

4.1 Install and configure the service

yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler openstack-nova-placement-api -y

vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

[api_database]

connection = mysql+pymysql://nova:nova@openstack-node1/nova_api

[database]

connection = mysql+pymysql://nova:nova@openstack-node1/nova

[DEFAULT]

transport_url = rabbit://openstack:openstack@openstack-node1

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://openstack-node1:5000/v3

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = openstack-node1

[glance]

api_servers = http://openstack-node1:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack-node1:5000/v3

username = placement

password = placement

4.2 Append to the httpd configuration

Allow access to the Placement API

vim /etc/httpd/conf.d/00-nova-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

systemctl restart httpd

4.3 Register the services

openstack user create --domain default --password-prompt nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://openstack-node1:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://openstack-node1:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://openstack-node1:8774/v2.1

openstack user create --domain default --password-prompt placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://openstack-node1:8778

openstack endpoint create --region RegionOne placement internal http://openstack-node1:8778

openstack endpoint create --region RegionOne placement admin http://openstack-node1:8778

4.4 Populate the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

4.5 Start the services

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

5. Nova compute service - compute node

On openstack-node2:

5.1 Install and configure the service

yum install openstack-nova-compute -y

vim /etc/nova/nova.conf

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack@openstack-node1

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://openstack-node1:5000/v3

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = openstack-node2

novncproxy_base_url = http://openstack-node1:6080/vnc_auto.html

[glance]

api_servers = http://openstack-node1:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack-node1:5000/v3

username = placement

password = placement

[libvirt]

virt_type = kvm
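virt_type = kvm only works when the compute node's CPU exposes hardware virtualization (the vmx or svm flag), which is why section 1 asks for it to be enabled. The sketch below picks the value from the CPU flags and falls back to qemu otherwise. The function name and its optional file argument are illustrative; the argument exists only so the function can be tried against a sample file.

```shell
# Print "kvm" if the CPU flags advertise hardware virtualization, else "qemu".
# The cpuinfo path defaults to /proc/cpuinfo.
pick_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # vmx = Intel VT-x, svm = AMD-V; -w matches them as whole words only.
    if grep -Eqw 'vmx|svm' "$cpuinfo" 2>/dev/null; then
        echo kvm
    else
        echo qemu
    fi
}
```

Run `pick_virt_type` on openstack-node2 and use the printed value for virt_type in the [libvirt] section.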

5.2 Start the services

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl restart libvirtd.service openstack-nova-compute.service

5.3 Add the compute node to the cell database

Check the compute service first; run on openstack-node1

. admin-keystone.sh

openstack compute service list --service nova-compute

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

5.4 Verify

On openstack-node1:

openstack compute service list

+----+------------------+-----------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+-----------------+----------+---------+-------+----------------------------+

| 1 | nova-conductor | openstack-node1 | internal | enabled | up | 2018-12-24T05:43:56.000000 |

| 2 | nova-scheduler | openstack-node1 | internal | enabled | up | 2018-12-24T05:43:57.000000 |

| 3 | nova-consoleauth | openstack-node1 | internal | enabled | up | 2018-12-24T05:43:58.000000 |

| 6 | nova-compute | openstack-node2 | nova | enabled | up | 2018-12-24T05:44:06.000000 |

+----+------------------+-----------------+----------+---------+-------+----------------------------+

openstack catalog list

+-----------+-----------+----------------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+----------------------------------------------+

| glance | image | RegionOne |

| | | public: http://openstack-node1:9292 |

| | | RegionOne |

| | | internal: http://openstack-node1:9292 |

| | | RegionOne |

| | | admin: http://openstack-node1:9292 |

| | | |

| placement | placement | RegionOne |

| | | public: http://openstack-node1:8778 |

| | | RegionOne |

| | | admin: http://openstack-node1:8778 |

| | | RegionOne |

| | | internal: http://openstack-node1:8778 |

| | | |

| nova | compute | RegionOne |

| | | public: http://openstack-node1:8774/v2.1 |

| | | RegionOne |

| | | admin: http://openstack-node1:8774/v2.1 |

| | | RegionOne |

| | | internal: http://openstack-node1:8774/v2.1 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://openstack-node1:5000/v3/ |

| | | RegionOne |

| | | public: http://openstack-node1:5000/v3/ |

| | | RegionOne |

| | | admin: http://openstack-node1:5000/v3/ |

| | | |

+-----------+-----------+----------------------------------------------+

nova-status upgrade check

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: API Service Version |

| Result: Success |

| Details: None |

+--------------------------------+

6. Neutron networking service - controller node

This guide uses networking option 1, a single flat network

On openstack-node1:

6.1 Install and configure

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

vim /etc/neutron/neutron.conf

[database]

# ...

connection = mysql+pymysql://neutron:neutron@openstack-node1/neutron

[DEFAULT]

# ...

core_plugin = ml2

service_plugins =

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack@openstack-node1

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://openstack-node1:5000

auth_url = http://openstack-node1:35357

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

# ...

auth_url = http://openstack-node1:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp
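The listing above repeats [DEFAULT] because each group of options is shown separately; in the file itself it is tidier to keep every option under a single header per section. A quick way to spot headers that ended up in a file more than once is sketched below; duplicate_sections is an illustrative name, not an OpenStack tool.

```shell
# Print every [section] header that occurs more than once in an INI-style file.
duplicate_sections() {
    # -o keeps only the header itself, so trailing text does not hide a duplicate.
    grep -Eo '^\[[^]]+\]' "$1" | sort | uniq -d
}
```

For example, `duplicate_sections /etc/neutron/neutron.conf` prints nothing when each section appears once.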

-------------------------------------------------------

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

# ...

type_drivers = flat,vlan

[ml2]

# ...

tenant_network_types =

[ml2]

# ...

mechanism_drivers = linuxbridge

[ml2]

# ...

extension_drivers = port_security

[ml2_type_flat]

# ...

flat_networks = provider

[securitygroup]

# ...

enable_ipset = true

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = false

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

# ...

nova_metadata_host = openstack-node1

metadata_proxy_shared_secret = wangsiyu

vim /etc/nova/nova.conf

[neutron]

# ...

url = http://openstack-node1:9696

auth_url = http://openstack-node1:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = wangsiyu

6.2 Register the service

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://openstack-node1:9696

openstack endpoint create --region RegionOne network internal http://openstack-node1:9696

openstack endpoint create --region RegionOne network admin http://openstack-node1:9696

6.3 Populate the database

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

6.4 Restart nova-api and start Neutron

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

7. Neutron networking service - compute node

On openstack-node2:

7.1 Install and configure

yum install openstack-neutron-linuxbridge ebtables ipset -y

vim /etc/neutron/neutron.conf

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack@openstack-node1

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://openstack-node1:5000

auth_url = http://openstack-node1:35357

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Copy it straight over from node1. On openstack-node1, run:

scp /etc/neutron/plugins/ml2/linuxbridge_agent.ini openstack-node2:/etc/neutron/plugins/ml2/

vim /etc/nova/nova.conf

[neutron]

url = http://openstack-node1:9696

auth_url = http://openstack-node1:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

7.2 Restart nova-compute and start the Neutron agent

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

8. Creating an instance

On openstack-node1:

8.1 Verify each service

glance and keystone

openstack image list

+--------------------------------------+--------+------------+

| ID | Name | Status |

+--------------------------------------+--------+------------+

| 7e17b824-54f1-435b-85af-a9b6ec3a42b6 | cirros | active |

+--------------------------------------+--------+------------+

nova

nova service-list

+--------------------------------------+------------------+-----------------+----------+---------+-------+----------------------------+-----------------+-------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason | Forced down |

+--------------------------------------+------------------+-----------------+----------+---------+-------+----------------------------+-----------------+-------------+

| cf136374-554d-44ea-b996-86df0256852c | nova-conductor | openstack-node1 | internal | enabled | up | 2018-12-26T05:38:34.000000 | - | False |

| a489d766-3a03-48dd-99f4-b8a9b6ad811b | nova-scheduler | openstack-node1 | internal | enabled | up | 2018-12-26T05:38:37.000000 | - | False |

| c26ef7b6-a1df-49b8-9c3c-936d5a6fb2c6 | nova-consoleauth | openstack-node1 | internal | enabled | up | 2018-12-26T05:38:34.000000 | - | False |

| 2ddc04b6-a50b-40aa-9017-554a2d4ba844 | nova-compute | openstack-node2 | nova | enabled | up | 2018-12-25T04:07:18.000000 | - | False |

+--------------------------------------+------------------+-----------------+----------+---------+-------+----------------------------+-----------------+-------------+

neutron

neutron agent-list

+--------------------------------------+--------------------+-----------------+-------------------+-------+----------------+---------------------------+

| id | agent_type | host | availability_zone | alive | admin_state_up | binary |

+--------------------------------------+--------------------+-----------------+-------------------+-------+----------------+---------------------------+

| 0a1cd690-ff2e-49f9-a1cb-41adca653702 | Linux bridge agent | openstack-node2 | | :-) | True | neutron-linuxbridge-agent |

| 2d32eb51-b067-4710-b6ee-734e8de6be4c | DHCP agent | openstack-node1 | nova | :-) | True | neutron-dhcp-agent |

| 9656ef44-ccf7-4d01-bb19-8384d5edb9ce | Linux bridge agent | openstack-node1 | | :-) | True | neutron-linuxbridge-agent |

| e5d0f391-4825-42c5-a358-765aedb0ea8d | Metadata agent | openstack-node1 | | :-) | True | neutron-metadata-agent |

+--------------------------------------+--------------------+-----------------+-------------------+-------+----------------+---------------------------+

Four nova services in the up state and four smiley faces for neutron mean everything is working

8.2 Create the network

. admin-keystone.sh

openstack network create --share --external \

--provider-physical-network provider \

--provider-network-type flat provider

neutron net-list

+--------------------------------------+----------+----------------------------------+---------+

| id | name | tenant_id | subnets |

+--------------------------------------+----------+----------------------------------+---------+

| 37141da8-97cc-4cac-b592-7c194179331f | provider | 05e7ebc641f745c9a99cedc7d5fab789 | |

+--------------------------------------+----------+----------------------------------+---------+

Create the subnet

openstack subnet create --network provider \

--allocation-pool start=172.16.1.10,end=172.16.1.254 \

--dns-nameserver 114.114.114.114 --gateway 172.16.1.1 \

--subnet-range 172.16.1.0/24 provider-subnet
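On a flat provider network the allocation pool shares its address space with the physical hosts, so it is worth checking that no host address falls inside the pool. The pool above (172.16.1.10 to 172.16.1.254) includes 172.16.1.205, the address given to openstack-node1 in section 1.1, which Neutron could then hand to an instance. The helper names below are illustrative.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    local old_ifs="$IFS"
    IFS=.
    # Unquoted on purpose: split the address into its four octets.
    set -- $1
    IFS="$old_ifs"
    echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Succeed if address $1 lies within the inclusive range $2..$3.
ip_in_range() {
    local ip start end
    ip=$(ip_to_int "$1")
    start=$(ip_to_int "$2")
    end=$(ip_to_int "$3")
    [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}
```

For example, `ip_in_range 172.16.1.205 172.16.1.10 172.16.1.254 && echo "host address is inside the pool"` flags the overlap; narrowing the pool or moving the host address avoids it.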

8.3 Create a flavor, key pair, and security group rules

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

. demo-keystone.sh

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

+-------------+-------------------------------------------------+

| Field | Value |

+-------------+-------------------------------------------------+

| fingerprint | b8:92:c8:00:d9:39:f6:86:82:7f:7d:4e:5f:1b:f6:13 |

| name | mykey |

| user_id | aba5b2569a324ac99b1183353fa07b5b |

+-------------+-------------------------------------------------+

List the key pairs

openstack keypair list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | b8:92:c8:00:d9:39:f6:86:82:7f:7d:4e:5f:1b:f6:13 |

+-------+-------------------------------------------------+

Security group

Allow ping and SSH

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

8.4 Launch the instance

openstack server create --flavor m1.nano --image cirros --security-group default --key-name mykey provider-test

openstack server list

+--------------------------------------+---------------+---------+----------------------+--------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+---------------+---------+----------------------+--------+---------+

| af56f2b3-671f-4945-b772-450aa28841a4 | provider-test | running | provider=172.16.1.14 | cirros | m1.nano |

+--------------------------------------+---------------+---------+----------------------+--------+---------+

Get the console URL

openstack console url show provider-test

+-------+--------------------------------------------------------------------------------------+

| Field | Value |

+-------+--------------------------------------------------------------------------------------+

| type | novnc |

| url | http://openstack-node1:6080/vnc_auto.html?token=ee12db7a-8ac5-49cf-9100-5b0af30337b8 |

+-------+--------------------------------------------------------------------------------------+

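Open this URL in a browser; if there is no error and the instance console appears, the instance was created successfully

9. Horizon web dashboard

9.1 Install and configure the service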

yum install openstack-dashboard -y

vim /etc/openstack-dashboard/local_settings

ALLOWED_HOSTS = ['*',]

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"data-processing": 1.1,

"identity": 3,

"image": 2,

"volume": 2,

"compute": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'openstack-node1:11211',

}

}

OPENSTACK_HOST = "openstack-node1"

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

...

'enable_router': False,

'enable_quotas': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

TIME_ZONE = "Asia/Shanghai"

vim /etc/httpd/conf.d/openstack-dashboard.conf

WSGIApplicationGroup %{GLOBAL}

systemctl restart httpd.service memcached.service

systemctl enable httpd.service

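Access from a browser

http://openstack-node1/dashboard   # use the IP address if the name does not resolve on your machine

User admin, password admin, domain default

10. Cinder block storage service

cinder

Manages storage

cinder-api

Accepts API requests and routes them to cinder-volume for execution

cinder-volume

Interacts directly with the Block Storage service and with processes such as cinder-scheduler

cinder-scheduler daemon

Selects the optimal storage provider node on which to create a volume; similar to the nova-scheduler component

cinder-backup daemon

Backs up volumes of any type to a backup storage provider. Like cinder-volume, it can work with a variety of storage providers through a driver architecture

Message queue

Routes information between the Block Storage processes

10.1 Install and configure the service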

yum install openstack-cinder -y

vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:cinder@openstack-node1/cinder

[DEFAULT]

transport_url = rabbit://openstack:openstack@openstack-node1

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://openstack-node1:5000

auth_url = http://openstack-node1:5000

memcached_servers = openstack-node1:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = cinder

password = cinder

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

su -s /bin/sh -c "cinder-manage db sync" cinder   # populate the database

vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

systemctl restart openstack-nova-api.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl restart openstack-cinder-api.service openstack-cinder-scheduler.service

10.2 Register the service

. admin-keystone.sh

openstack user create --domain default --password-prompt cinder

openstack role add --project service --user cinder admin

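openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

Create the endpoints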

openstack endpoint create --region RegionOne volumev2 public http://openstack-node1:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://openstack-node1:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://openstack-node1:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 public http://openstack-node1:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://openstack-node1:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://openstack-node1:8776/v3/%\(project_id\)s

10.3 Cinder storage

The Cinder storage node is openstack-node1

Without Cinder, instance disks are stored locally under /var/lib/nova/instances/

yum install lvm2 device-mapper-persistent-data -y

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

yum install openstack-cinder targetcli python-keystone -y

Create the disk

Shut the machine down and attach a new disk

Create the physical volume

pvcreate /dev/sdb

Create the volume group for Cinder

vgcreate cinder-volumes /dev/sdb

vgdisplay

Output

File descriptor 6 (/dev/pts/0) leaked on vgdisplay invocation. Parent PID 1679: -bash

--- Volume group ---

VG Name cinder-volumes

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <8.00 GiB

PE Size 4.00 MiB

Total PE 2047

Alloc PE / Size 0 / 0

Free PE / Size 2047 / <8.00 GiB

VG UUID edVGVd-YrEV-7o8W-Eo78-3GZ3-a2uy-CtN8NW

10.4 Configure LVM

vim /etc/cinder/cinder.conf

[DEFAULT]

enabled_backends = lvm

glance_api_servers = http://openstack-node1:9292

target_ip_address = openstack-node1

# append at the end of the file

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

10.5 Start the services and mount the disk

systemctl enable openstack-cinder-volume.service target.service

systemctl restart openstack-cinder-volume.service target.service

Mount

mkfs.xfs /dev/vdc

mkdir /data

mount /dev/vdc /data

11. Using NFS storage with Cinder

On openstack-node2:

11.1 Install, configure, and start the services

yum install openstack-cinder python-keystone -y

yum install nfs-utils rpcbind -y

Create the export directory

mkdir /data/nfs -p

echo "/data/nfs *(rw,sync,no_root_squash)" >>/etc/exports

systemctl restart rpcbind nfs

systemctl enable rpcbind nfs

On node1, copy the configuration file to node2

scp /etc/cinder/cinder.conf openstack-node2:/etc/cinder

echo "openstack-node2:/data/nfs" >>/etc/cinder/nfs_shares   # the shared directory, in the form hostname-or-IP:/directory

vim /etc/cinder/cinder.conf

[DEFAULT]

enabled_backends = nfs

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

# state_path = /var/lib/cinder

nfs_mount_point_base = $state_path/mn

cd /etc/cinder/ && chown root:cinder nfs_shares

systemctl enable openstack-cinder-volume

systemctl start openstack-cinder-volume

Check from openstack-node1

cinder service-list

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

| cinder-scheduler | openstack-node1 | nova | enabled | up | 2018-12-26T06:16:43.000000 | - |

| cinder-volume | openstack-node2@nfs | nova | enabled | up | 2018-12-26T01:59:08.000000 | - |

| cinder-volume | openstack-node1@lvm | nova | enabled | up | 2018-12-26T06:16:39.000000 | - |

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

11.2 Create the NFS and ISCSI volume types

cinder type-create NFS

cinder type-create ISCSI

11.3 Configure volume_backend_name

openstack-node1:

vim /etc/cinder/cinder.conf

[lvm]

# the name is arbitrary

volume_backend_name = ISCSI-test

systemctl restart openstack-cinder-volume

openstack-node2:

echo "volume_backend_name = NFS-test" >>/etc/cinder/cinder.conf   # appended to the end of the file, so [nfs] must be the last section

systemctl restart openstack-cinder-volume

Associate the types with the backends (on openstack-node1)

cinder type-key NFS set volume_backend_name=NFS-test   # NFS is the volume type created above; NFS-test is the name set in the configuration file

cinder type-key ISCSI set volume_backend_name=ISCSI-test

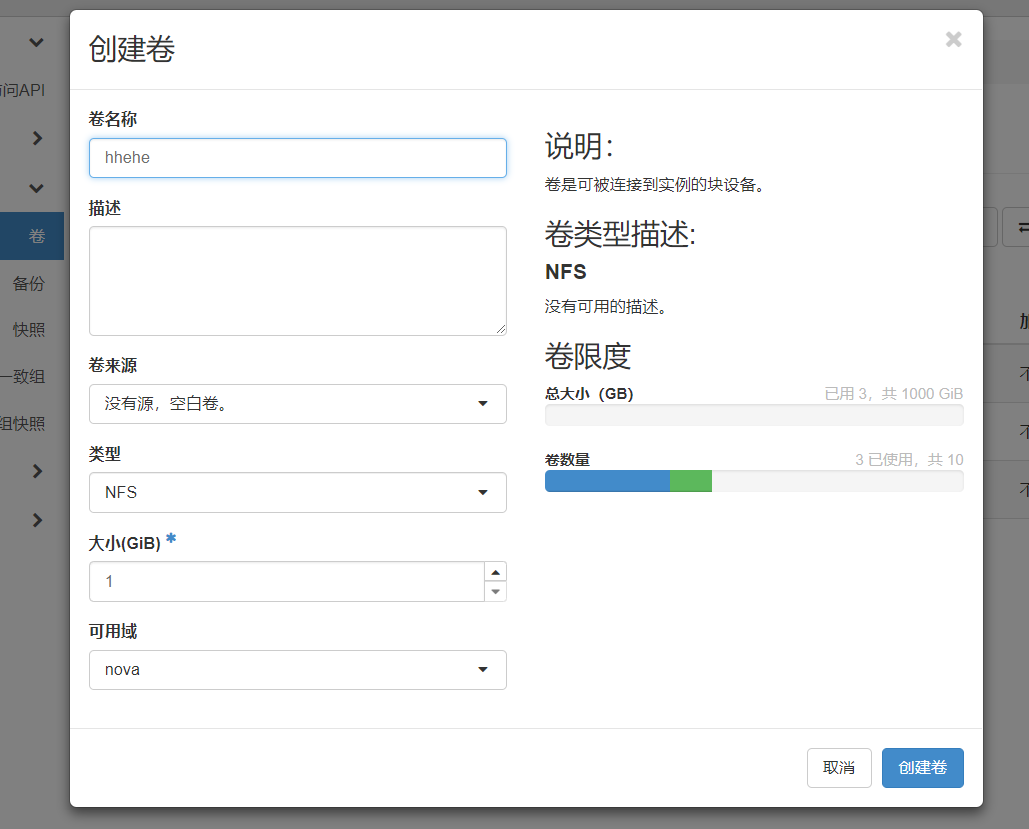

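Now create a volume

Choose NFS

Created successfully

General recipe for using Cinder with other storage backends (for example Ceph)

1. Prepare the storage

2. Install cinder-volume

3. Add the following to cinder.conf

[ceph]

volume_driver = the Ceph driver

...

volume_backend_name = ceph-test

4. Start cinder-volume

5. Create the type

cinder type-create ceph

6. Associate the type

cinder type-key ceph set volume_backend_name=ceph-test

This completes the basic OpenStack setup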

-------------------------------------------------------------------------------------------------------

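Study notes & interview questions

How an instance is created

There are three ways: through the dashboard, through the command line, or through the OpenStack API

1. Phase one, interaction with Keystone: when you log in to the dashboard, a request carrying the user name and password is sent to Keystone, which returns a token

The client then calls nova-api with that token to request a new instance, and nova-api asks Keystone to validate the token

2. Phase two, interaction among the Nova components, once validation succeeds

nova-api writes the request to the database and puts a message on the message queue

nova-scheduler reads the data from the database, runs scheduling, and picks a nova-compute node to build the instance

nova-compute takes the message off the queue and starts creating the instance

nova-compute asks nova-conductor for the instance information, and nova-conductor reads it from the database

3. Phase three, interaction between Nova and the other services: Nova fetches the image, network, and storage; Glance, Neutron, and Cinder each validate the token with Keystone

4. Phase four, building the instance: nova-compute calls the hypervisor (KVM) through libvirtd (the KVM management layer) to create the instance and writes the result to the database, while nova-api polls the database for the instance's current state

scheduler: filters out compute nodes that cannot satisfy the instance's requirements, then weighs the remaining hosts; by default it picks a host with more free resources

conductor: accesses the database on behalf of the other Nova services; it is middleware in front of the database, there to protect the database

keystone: two main functions, user authentication and service catalog management. Each service must register its endpoints, of which there are three kinds: admin, internal, and public. The admin endpoint uses port 35357 and the others use 5000

Message queue: the traffic hub; the Nova components talk to one another through it

Single flat network: instances and hosts sit on the same network, so no routing is needed

OpenStack vs. KVM: OpenStack is an IaaS platform and KVM is a virtualization technology; OpenStack uses KVM to create virtual machines

What to do when an instance cannot be created:

Read through all the logs, look for ERROR entries, and use them to locate the problem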
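Scanning the logs for errors can be scripted. The sketch below assumes the services log under /var/log/<service>/ with the level as a separate word on each line (as in the usual OpenStack log format); recent_errors and the RECENT_ERRORS_COUNT variable are illustrative names.

```shell
# Print the most recent ERROR or CRITICAL lines from each readable *.log file
# under the given directories, prefixed with the file name.
recent_errors() {
    local count="${RECENT_ERRORS_COUNT:-20}" dir file
    for dir in "$@"; do
        for file in "$dir"/*.log; do
            [ -r "$file" ] || continue
            grep -HE ' (ERROR|CRITICAL) ' "$file" | tail -n "$count"
        done
    done
}
```

For example, `recent_errors /var/log/nova /var/log/neutron /var/log/cinder` on the node where the failure occurred narrows the search to the services involved in building an instance.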

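What advantages does OpenStack offer a company that builds its private cloud on it:

Virtualization raises the utilization of physical machines. Running many processes directly on one machine instead brings problems: no resource isolation, difficult management, and port conflicts

1. The OpenStack community is active and many people know it, so problems are easy to get solved. 2. The architecture is clear. 3. It is written in Python, which many operations engineers know; CloudStack is written in Java

FAQ: small pitfalls

Unsynchronized clocks cause service problems, for example Nova failing to start after a reboot; check the Nova logs for details

If a volume cannot be created on NFS, check whether the services on the NFS node are running and whether the share is mounted; check the Cinder logs for details

Whatever the error, read the matching log; Python logs are easy to understand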