MapReduce Case Study 2

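Exercise: compute each person's average spending. If a record is malformed (the money field is missing or invalid), back-fill the money value with 10.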

Data (one record per line, in the form name,date,money):

张三,12月3号,20

张三,12月3号,10

李四,12月3号,12

王五,12月3号,10

王五,12月2号

王五,12月2号,30

王二麻,12月2号,0

王二麻,12月2号,,

王二麻,12月2号, ,

Code

    package com.shujia.avgmoney;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /*
    Sample input (name,date,money):
    李四,12月3号,12
    王五,12月3号,10
    王五,12月2号
    王五,12月2号,30
    王二麻,12月2号,0
    王二麻,12月2号,,
    王二麻,12月2号, ,
    */

    // Emits (name, money) per record; a missing or invalid money field becomes 10.
    class AvgMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        private static final long DEFAULT_MONEY = 10L;

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            // Turn every comma or space into a tab. Inside a character class
            // "|" is a literal, so no alternation is needed here.
            String[] split = line.replaceAll("[, ]", "\t").split("\t");
            // split() drops trailing empty fields, so blank lines yield no name.
            if (split.length == 0 || split[0].isEmpty()) {
                return;
            }
            long money = DEFAULT_MONEY;
            if (split.length == 3) {
                try {
                    money = Long.parseLong(split[2]);
                } catch (NumberFormatException e) {
                    // Non-numeric money: keep the default value.
                }
            }
            context.write(new Text(split[0]), new LongWritable(money));
        }
    }

    // Averages the money values collected for each name.
    class AvgReducer extends Reducer<Text, LongWritable, Text, LongWritable> {
        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            long sum = 0;
            long count = 0;
            for (LongWritable value : values) {
                sum += value.get();
                count++;
            }
            // Integer division: the fractional part of the average is truncated.
            long avg = sum / count;
            context.write(key, new LongWritable(avg));
        }
    }

    public class AvgDemo {
        public static void main(String[] args)
                throws IOException, InterruptedException, ClassNotFoundException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            job.setJarByClass(AvgDemo.class);

            job.setMapperClass(AvgMapper.class);
            job.setReducerClass(AvgReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // args[0]: input path, args[1]: output path (must not exist yet)
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // Exit with a non-zero status if the job fails.
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
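The reducer divides one long by another, so any fractional part of the average is lost. The sketch below mimics the group-by-name and averaging steps locally, without Hadoop, to make that visible. The class `LocalAverageSketch` and its method `averages` are hypothetical names introduced only for illustration, and the input rows are assumed to be already cleaned (malformed records back-filled with 10).

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical local sketch, not part of the original job: it only mimics
// the shuffle (group by name) and the averaging done in the reducer.
class LocalAverageSketch {

    // Each row is {name, money}; money is assumed to be cleaned already.
    static Map<String, Double> averages(String[][] records) {
        Map<String, long[]> acc = new LinkedHashMap<>(); // name -> {sum, count}
        for (String[] r : records) {
            long[] sumCount = acc.computeIfAbsent(r[0], k -> new long[2]);
            sumCount[0] += Long.parseLong(r[1]);
            sumCount[1]++;
        }
        Map<String, Double> result = new LinkedHashMap<>();
        for (Map.Entry<String, long[]> e : acc.entrySet()) {
            long[] sumCount = e.getValue();
            // Cast before dividing so the fraction is kept.
            result.put(e.getKey(), (double) sumCount[0] / sumCount[1]);
        }
        return result;
    }

    public static void main(String[] args) {
        // The sample data after back-filling malformed money values with 10.
        String[][] cleaned = {
            {"张三", "20"}, {"张三", "10"}, {"李四", "12"},
            {"王五", "10"}, {"王五", "10"}, {"王五", "30"},
            {"王二麻", "0"}, {"王二麻", "10"}, {"王二麻", "10"}
        };
        averages(cleaned).forEach((name, avg) ->
            System.out.printf("%s\t%.2f\t(long division: %d)%n",
                name, avg, avg.longValue()));
    }
}
```

For 王五 the cleaned values are 10, 10 and 30, so the exact average is 50/3 (about 16.67), while long division yields 16. If fractional averages are wanted in the real job, one option is to emit a `DoubleWritable` from the reducer and set the job's output value class to match.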

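Note: the input must be cleaned before averaging. Delimiter variants are normalized by string replacement, and records with a missing or invalid money field get the default value 10.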
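The cleaning rule can be tried in isolation. This is a minimal sketch in plain Java, assuming records have the form name,date,money. The class `MoneyCleaningSketch` and the helper `parseMoney` are hypothetical names that do not appear in the job itself.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, shown only to illustrate the back-fill rule.
class MoneyCleaningSketch {
    static final long DEFAULT_MONEY = 10L; // back-fill value from the exercise

    // Returns the money field of a "name,date,money" record, or DEFAULT_MONEY
    // when the field is missing, blank, or not a whole number.
    static long parseMoney(String line) {
        // String.split drops trailing empty fields, so "a,b,," has length 2.
        String[] fields = line.split(",");
        if (fields.length != 3) {
            return DEFAULT_MONEY;
        }
        try {
            return Long.parseLong(fields[2].trim());
        } catch (NumberFormatException e) {
            return DEFAULT_MONEY; // blank or non-numeric money
        }
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
            "王五,12月2号",
            "王五,12月2号,30",
            "王二麻,12月2号,0",
            "王二麻,12月2号,,",
            "王二麻,12月2号, ,");
        for (String line : lines) {
            System.out.println(line + " -> " + parseMoney(line));
        }
    }
}
```

A value of 0 is kept as a valid amount here. Only a missing, blank, or non-numeric field triggers the back-fill.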

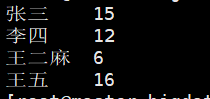

Result: