我用自己的话描述一遍DDPM加深理解,原文可参考苏剑林博客 https://spaces.ac.cn/archives/9119

加噪过程

设 \(\bf x_0\)表示一张图片, 逐步在当前图片上添加微小噪音,经过T步得到T张中间图片,依次为 \(\bf x_1, \bf x_2, \cdots, \bf x_T\)。添加噪声的计算公式如下:

\[\bf x_t = \alpha_t \bf x_{t-1} + \beta_t \bf \epsilon_t \tag{1}

\]

其中,

\[\bf \epsilon_t \sim \mathcal N(0,\mit I)

\]

\[\alpha_t^2 + \beta_t^2 = 1, \alpha_t,\beta_t > 0

\]

为了实现每次添加噪音都很小,因此从标准正态分布取样,然后乘以一个很小的系数就可以保证。DDPM中系数设计如下[注1]:

\[\alpha_t = \sqrt{1-\frac{0.02t}{T}}, \beta_t = \frac{0.02t}{T},T=1000

\]

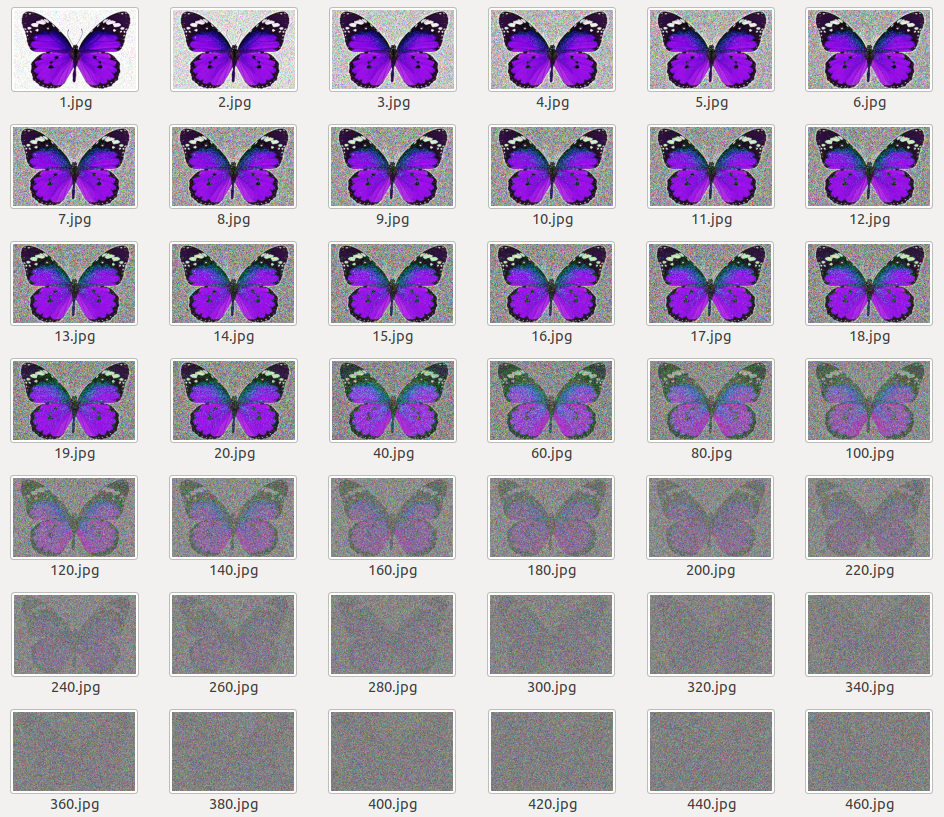

下图Fig. 1就是按照上面公式及系数设计,对一张蝴蝶图片迭代式添加微小噪音后得到的一系列图片[注2]

![image]()

反复执行公式(1)可以得到

\[\begin{aligned}

\bf x_t

& = \alpha_t \bf x_{t-1} + \beta_t \epsilon_t \\

& = \alpha_t (\alpha_{t-1} \bf x_{t-2} + \beta_{t-1}

\epsilon_{t-1})+ \beta_t \epsilon_t \\

& = \cdots \\

& = (\alpha_t\cdots\alpha_1)\bf x_0 + (\alpha_t\cdots\alpha_2)\beta_1\epsilon_1 + (\alpha_t\cdots\alpha_3)\beta_2\epsilon_2 + \cdots + \alpha_t\beta_{t-1}\epsilon_{t-1} + \beta_t \epsilon_t \\

\end{aligned}

\tag{2}

\]

公式(2)给出了任意迭代步数时,当前加噪图片与最原始的图片之间的计算公式。公式(2)除了 \(\bf x_0\)项之后,所有项可以看作是多个相互独立的正态噪音之和。也等价于从均值为0,方差为 \((\alpha_t\cdots\alpha_2)^2\beta_1^2 + (\alpha_t\cdots\alpha_3)^2\beta_2^2 + \cdots + \alpha_t^2\beta_{t-1})^2 + \beta_t^2\) 正态分布取样[注3]。而且公式(2)中各个系数之和等于1(在\(\alpha_t^2 + \beta_t^2 = 1\)条件下)

\[(\alpha_t\cdots\alpha_1)^2 +(\alpha_t\cdots\alpha_2)^2\beta_1^2 + (\alpha_t\cdots\alpha_3)^2\beta_2^2 + \cdots + \alpha_t^2\beta_{t-1})^2 + \beta_t^2 \tag{3}

\]

因此可以改写公式(2)得到

\[\bf x_t = \underbrace{(\alpha_t\cdots\alpha_1)}_{记为\bar{\alpha}_t} \bf x_0 + \underbrace{\sqrt{1-(\alpha_t\cdots\alpha_1)^2}}_{记为\bar{\beta}_t} \bf{\bar \epsilon_t}, \bar \epsilon_t \sim \mathcal N(0, I) \tag{4}

\]

去噪过程

加噪过程是 \(\bf x_{t-1} \rightarrow \bf x_t\)的过程,得到一系列的数据对 \((\bf x_{t-1} , \bf x_t)\)。每一张图片 \(\bf x_0\)就对应T组这样数据对,这些数据对是按照公式(2)计算得到的,算是已知。

现在想基于上述数据来建模 \(\bf x_{t} \rightarrow \bf x_{t-1}\) 过程,而公式(2)中不确定的是 \(\bf \epsilon_t\) , 因此去噪过程就是训练模型使得下述式子最小化

\[||\bf \epsilon_t - \epsilon_{\theta}(\bf x_t, t)||^2 \tag{5}

\]

基于公式(1)和(4)可以得到如下表达式

\[\begin{aligned}

\bf x_t

& = \alpha_t \bf x_{t-1} + \beta_t \epsilon_t \\

& = \alpha_t (\bar{\alpha}_{t-1} \bf x_0 + \bar{\beta}_{t-1} \bar{\epsilon}_{t-1}) + \beta_t \epsilon_t \\

& = \bar{\alpha}_{t} \bf x_0 + \alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t

\end{aligned}

\tag{6}

\]

得到损失函数形式为[注4]

\[||\bf \epsilon_t - \epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t, t)||^2 \tag{7}

\]

降低方差

公式涉及到4个需要采样的随机变量

- 从所有训练样本中采样 \(\bf x_0\)

- 从正态分布 \(\mathcal N(0,I)\) 中采样 \(\bf \bar{\epsilon}_{t-1}, \bf \epsilon_t\)

- 从\(1 \sim T\)中采样\(t\)

损失函数是由这4个随机变量组合而成,其值是受到这4个随机变量取值波动而波动,随机变量越多则损失函数值波动也就越大。即使不更新参数,这个损失函数值也随着随机变量不同取值而波动(可能变大可能变小)。而参数的优化则是使得损失值朝着变小的方向进行。这样造成参数更新“困惑”,导致收敛过慢问题。

可以通过一个积分技巧将 \(\bf \bar{\epsilon}_{t-1}, \bf \epsilon_t\) 合并成单个正态随机变量,从而缓解以上问题。

\(\alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t\) 相当于单个随机变量 \(\bar{\beta}_t \epsilon, \epsilon \sim \mathcal N(0,I)\) , 同理 \(\beta_t \bar{\epsilon}_{t-1} - \alpha_t \bar{\beta}_{t-1} \epsilon_t\) 相当于单个随机变量 \(\bar{\beta}_t \omega, \omega \sim \mathcal N(0,I)\) [注5]。并且可以验证 \(\mathbb{E} [\epsilon \omega^T] = 0\),所以这是两个相互独立的正态随机变量[注6]。

使用消元法,很容易用 \(\epsilon, \omega\) 来表示 \(\epsilon_t\),

\[\epsilon_t = \frac {(\beta_t\epsilon - \alpha_t\bar{\beta}_{t-1})\bar{\beta}_t}{\beta_t^2 + \alpha_t^2 \bar{\beta}_{t-1}^2} = \frac{\beta_t\epsilon - \alpha_t\bar{\beta}_{t-1}}{\bar{\beta}_t}

\]

代入上式子得到[注7]

\[\mathbb {E}_{\bar{\epsilon}_{t-1}, \epsilon_t \sim \mathcal N(0,I)} \{||\bf \epsilon_t - \epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t, t)||^2 \}

= \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{ || \frac{\beta_t\epsilon - \alpha_t\bar{\beta}_{t-1}}{\bar{\beta}_t} - \epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t) || \}

\]

[注1]

-

图片重建任务中,使用欧式距离来作为度量函数,往往得到模糊的结果,效果不佳。除非是当输入输出图片非常接近,才能得到比较清晰的结果。之所以选择尽可能大的T,就是将重建任务拆分为很多个步骤,每个步骤的重建输入输出都差异就很小,减小了重建难度也减弱欧式距离带来的模糊问题。

-

\(\alpha_t\)设计为递减函数,当t较小时,\(\bf x_t\)还比较接近真实图片,所以要设计\(\bf x_{t-1}\)与\(\bf x_t\)差异要小,就必须使得设计的\(\alpha_t\)较大;当t比较大时,\(\bf x_t\)已经接近纯噪声了,适当地增大\(\bf x_{t-1}\)与\(\bf x_t\)差异,用欧式距离来作为重建度量也没有什么不好的。此外因为降低重建难度消除欧式聚类度量的模糊影响,\(\alpha_t\)要很接近于1(不管t取何值),所以要迭代很多步才能将原始图片变成一个随机噪音,不然迭代几步就变成随机噪音。就背离设计初衷,也不可能达到好的效果。比如设计\(\alpha_t=0.9\),大概100步就将原始图片变成随机噪音;\(\alpha_t=0.8\),大概50步就将原始图片变成随机噪音。

[注2]

import numpy as np

import cv2

img = cv2.imread("~/Pictures/butterfly.jpg")

img = np.array(img, dtype=np.float32)

img = (img-127.5)/127.5

h,w,_ = img.shape

T = 1000

x = []

x.append(img)

for t in range(1,T+1,1):

alpha_t = np.sqrt(1-0.02*t/T)

beta_t = np.sqrt(0.02*t/T)

epsilon_t = np.random.normal(0,1,h*w*3)

epsilon_t = np.reshape(epsilon_t, (h, w, 3))

img_t = alpha_t * x[-1] + beta_t * epsilon_t

x.append(img_t)

if t < 20 or t % 20 == 0:

img_save = img_t * 127.5 + 127.5

img_save = np.array(img_save, dtype=np.uint8)

cv2.imwrite("show/{}.jpg".format(t), img_save)

[注3]

可以依据随机变量期望和方差的定义,及正态分布的性质推导处理。

[注4]

给定一张图片\(\bf x_0\) 按照文中公式(3),逐步从正态分布中进行采样得到\(\epsilon_t\),可以得到T组数据对 \((x_0,x_1),(x_1,x_2),\cdots,(x_{t−1},x_t),\cdots,(x_{T−1},x_T)\)。因此在建模去噪过程中t步骤时度量模型损失采用公式(1),而其中的\((\epsilon_t, \bf x_t)\)都是已知的,为什么还要那么麻烦使用公式(7)呢?

[注5]

使用期望和方差定义,很容易证明。由于 \(\bar{\epsilon}_{t-1}, \epsilon_t \sim \mathcal N(0,I)\),且相互独立,因此\(E[\alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t] = 0\), \(Var[\alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t] = \alpha_t^2 \bar{\beta}_{t-1}^2 + \beta_t^2 = \bar{\beta_t}^2\)

[注6]

\[\begin{aligned}

\mathbb E [\epsilon \omega^T]

& = \mathbb E [(\alpha_t \bar{\beta}_{t-1} \bar{\epsilon}_{t-1} + \beta_t \epsilon_t)(\beta_t \bar{\epsilon}_{t-1} - \alpha_t \bar{\beta}_{t-1} \epsilon_t)^T] \\

& = \mathbb E [\alpha_t \bar{\beta}_{t-1} \beta_t \bar{\epsilon}_{t-1} \bar{\epsilon}_{t-1}^T - \alpha_t^2 \bar{\beta}_{t-1}^2 \bar{\epsilon}_{t-1} \epsilon_t^T + \beta_t^2 \epsilon_t \bar{\epsilon}_{t-1}^T - \alpha_t \bar{\beta}_{t-1} \beta_t \epsilon_t \epsilon_t^T] \\

& = \alpha_t \bar{\beta}_{t-1} \beta_t \mathbb E[\bar{\epsilon}_{t-1} \bar{\epsilon}_{t-1}^T] - \alpha_t^2 \bar{\epsilon}_{t-1}^2 \mathbb E [\bar{\epsilon}_{t-1} \epsilon_t^T] + \beta_t^2 \mathbb E [\epsilon_t \bar{\epsilon}_{t-1}^T] - \alpha_t \bar{\beta}_{t-1} \beta_t \mathbb E [\epsilon_t \epsilon_t^T] \\

& = \alpha_t \bar{\beta}_{t-1} \beta_t \mathbb E[\bar{\epsilon}_{t-1} \bar{\epsilon}_{t-1}^T] - \alpha_t \bar{\beta}_{t-1} \beta_t \mathbb E [\epsilon_t \epsilon_t^T] \\

& = \bf 0

\end{aligned}

\]

[注7]

\[\begin{aligned}

&\ \ \ \ \ \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{ || \frac{\beta_t\epsilon - \alpha_t\bar{\beta}_{t-1}\omega}{\bar{\beta}_t} - \epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t) ||^2 \} \\

& = \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{ || \frac{\beta_t\epsilon}{\bar{\beta}_t} -\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t) - \frac{\alpha_t\bar{\beta}_{t-1}\omega}{\bar{\beta}_t} ||^2 \} \\

& = \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{|| \underbrace{\frac{\beta_t\epsilon}{\bar{\beta}_t} -\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)}_{\eta} - \underbrace{\frac{\alpha_t\bar{\beta}_{t-1}}{\bar{\beta}_t} }_{r} \omega ||^2\} \\

& = \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{|| \eta -r\omega ||^2\} \\

& = \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{(\eta^T\eta -2r\eta^T\omega +r^2\omega^T )\} \\

& = \mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\eta^T\eta\}

-2r\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\eta^T\omega\}

+r^2\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\omega^T\omega\} \\

\end{aligned}

\]

为简化公式,用向量\(\eta\),标量\(r\)代替比较长的符号。

(1)上述公式等号最后一行中 $\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} {\eta^T\eta} $ 就等于 \(\mathbb {E}_{\epsilon \sim \mathcal N(0,I)} \{||\frac{\beta_t\epsilon}{\bar{\beta}_t} -\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)||\}\)

(2)上述公式等号最后一行中 $\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} {\omega^T\omega} $ 就是仅关于\(\omega \sim \mathcal N(0,I)\) ,这个期望是可求出来的,是一个常数(但我不知道怎么求)。

(3)上述公式等号最后一行中 \(\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\eta^T\omega\}\) 中 \(\eta = \frac{\beta_t\epsilon}{\bar{\beta}_t} -\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)\) 是关于\(\epsilon\)的函数,由于 \(E[\epsilon^T\omega]=0\) ,因此 \(\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\eta^T\omega\}=-\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)^T\omega\}\) 。这里的 \(\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)\) 是关于 \(\epsilon\)的函数,我不明白的是 \(\mathbb {E}_{\omega, \epsilon \sim \mathcal N(0,I)} \{\epsilon_{\theta}(\bar{\alpha}_{t} \bf x_0 + \bar{\beta}_t \epsilon, t)^T\omega\}\) 是否等于0?

[注8]

关于https://kexue.fm/archives/9152 博客中公式(6),(7)推导过程中,对曾影响我理解地方进行注释.

\[\begin{aligned}

& \ \ \ \ - \int p(x_T|x_{T-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_T \\

& = - \int p(x_t|x_{t-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_t \\

& = - \int p(x_t|x_{t-1}) p(x_{t-1}|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_{t-1} dx_t \\

\end{aligned}

\tag{6}

\]

(1)关于上述公式第一等号,因为 \(q(x_{t-1}|x_t)\) 仅依赖\(x_t\), 因此\(x_{t+1},\cdots,x_T\)部分的积分可以分拆开来

\[\begin{aligned}

& \ \ \ \ - \int p(x_T|x_{T-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_T \\

& = - \int p(x_t|x_{t-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_t \times \int p(x_T|x_{T-1}) p(x_{T-1}|x_{T-2}) \cdots p(x_{t+1}|x_{t}) dx_{t+1} \cdots dx_T \\

\end{aligned}

\]

又由于

\[\begin{aligned}

& \ \ \ \ \int p(x_T|x_{T-1}) p(x_{T-1}|x_{T-2}) \cdots p(x_{t+1}|x_{t}) dx_{t+1} \cdots dx_T \\

& = \int p(x_T,x_{T-1},\cdots,x_{t+1}|x_{t}) dx_{t+1} \cdots dx_T \\

& = 1 \\

\end{aligned}

\]

因此

\[\begin{aligned}

& \ \ \ \ - \int p(x_T|x_{T-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_T \\

& = - \int p(x_t|x_{t-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_t \\

\end{aligned}

\]

(2)公式中第2个等号

\[\begin{aligned}

& \ \ \ \ - \int p(x_T|x_{T-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_T \\

& = - \int p(x_t|x_{t-1}) \cdots p(x_1|x_0)\bar{p}(x_0)log \ q(x_{t-1}|x_t)dx_0dx_1 \cdots dx_t \\

& = - \int p(x_t|x_{t-1})\bar{p}(x_0)log \ q(x_{t-1}|x_t) \{\int p(x_{t-1}|x_{t-2}) \cdots p(x_1|x_0)dx_1 \cdots dx_{t-2} \} \ dx_0dx_{t-1}dx_t\\

& = - \int p(x_t|x_{t-1})\bar{p}(x_0)log \ q(x_{t-1}|x_t) \{\int p(x_{t-1},x_{t-2},\cdots,x_1|x_0)dx_1 \cdots dx_{t-2} \} \ dx_0dx_{t-1}dx_t\\

& = - \int p(x_t|x_{t-1})\bar{p}(x_0)log \ q(x_{t-1}|x_t) p(x_{t-1}|x_0) \ dx_0dx_{t-1}dx_t\\

\end{aligned}

\]

(3)公式(6)推导到公式(7),注意将积分转换成期望即可得到。

参考资料

浙公网安备 33010602011771号

浙公网安备 33010602011771号