1.Reduce Join

1.1 工作原理

map端的主要工作:为来自不同表或文件的key/value对,打标签以区别不同的来源记录;然后用连接字段作为key,其余部分和新加的标志作为是value,最后进行输出;

reduce端的主要工作:在reduce端以连接字段作为key的分组已经完成,我们只主要在每一个分组当中将那些来源于不用文件的记录(在map阶段已经打标签)分开,最后进行合并就OK了;

1.2 需求

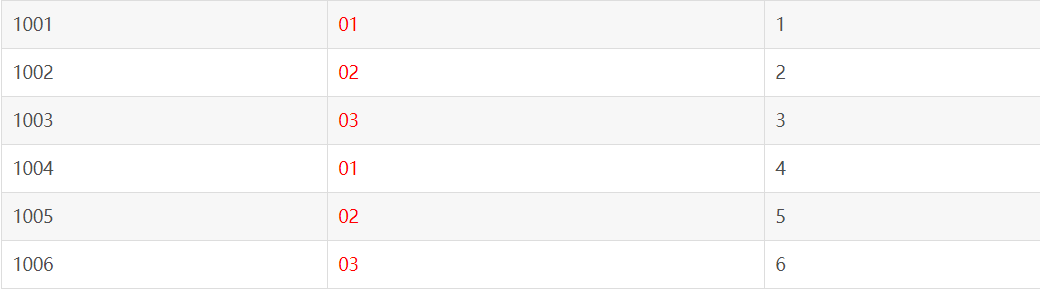

订单表数据

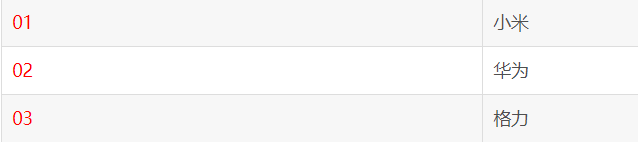

表商品信息

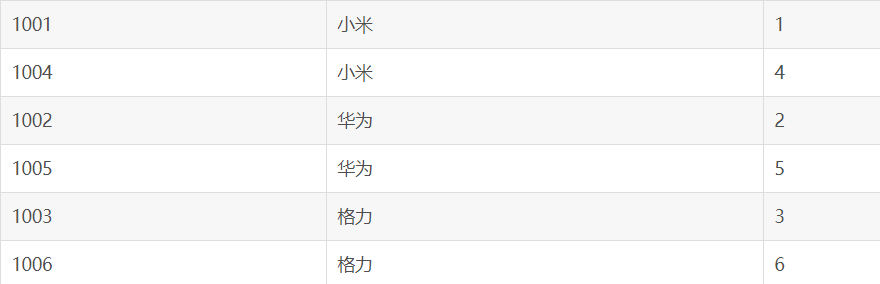

将最终数据形成

2.Reduce Join 案例实操

2.1 OrderBean编写

package com.wn.bean;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class OrderBean implements WritableComparable<OrderBean> {

private String id;

private String pid;

private int amount;

private String pname;

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getPid() {

return pid;

}

public void setPid(String pid) {

this.pid = pid;

}

public int getAmount() {

return amount;

}

public void setAmount(int amount) {

this.amount = amount;

}

public String getPname() {

return pname;

}

public void setPname(String pname) {

this.pname = pname;

}

@Override

public String toString() {

return "OrderBean{" +

"id='" + id + '\'' +

", pid='" + pid + '\'' +

", amount=" + amount +

", pname='" + pname + '\'' +

'}';

}

@Override

public int compareTo(OrderBean o) {

int compare = this.pid.compareTo(o.pid);

if (compare==0){

return o.pname.compareTo(this.pname);

}else{

return compare;

}

}

@Override

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeUTF(id);

dataOutput.writeUTF(pid);

dataOutput.writeInt(amount);

dataOutput.writeUTF(pname);

}

@Override

public void readFields(DataInput dataInput) throws IOException {

this.id=dataInput.readUTF();

this.pid=dataInput.readUTF();

this.amount=dataInput.readInt();

this.pname=dataInput.readUTF();

}

}

2.2 RJMapper编写

package com.wn.reducejoin;

import com.wn.bean.OrderBean;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.InputSplit;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

public class RJMapper extends Mapper<LongWritable, Text, OrderBean, NullWritable> {

private OrderBean orderBean=new OrderBean();

private String filename;

@Override

protected void setup(Context context) throws IOException, InterruptedException {

FileSplit fs = (FileSplit) context.getInputSplit();

filename = fs.getPath().getName();

}

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] fields = value.toString().split("\t");

if (filename.equals("order.txt")){

orderBean.setId(fields[0]);

orderBean.setPid(fields[1]);

orderBean.setAmount(Integer.parseInt(fields[2]));

orderBean.setPname("");

}else{

orderBean.setPid(fields[0]);

orderBean.setPname(fields[1]);

orderBean.setId("");

orderBean.setAmount(0);

}

context.write(orderBean,NullWritable.get());

}

}

2.3 RJComparator编写

package com.wn.reducejoin;

import com.wn.bean.OrderBean;

import org.apache.hadoop.io.WritableComparable;

import org.apache.hadoop.io.WritableComparator;

public class RJComparator extends WritableComparator {

protected RJComparator(){

super(OrderBean.class,true);

}

@Override

public int compare(WritableComparable a, WritableComparable b) {

OrderBean oa=(OrderBean) a;

OrderBean ob=(OrderBean) b;

return oa.getPid().compareTo(ob.getPid());

}

}

2.4 RJReducer编写

package com.wn.reducejoin;

import com.wn.bean.OrderBean;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

import java.util.Iterator;

public class RJReducer extends Reducer<OrderBean, NullWritable,OrderBean,NullWritable> {

private OrderBean orderBean=new OrderBean();

@Override

protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

Iterator<NullWritable> iterator = values.iterator();

iterator.next();

String pname = key.getPname();

while(iterator.hasNext()){

iterator.next();

key.setPname(pname);

context.write(key,NullWritable.get());

}

}

}

2.5 RJDriver编写

package com.wn.reducejoin;

import com.wn.bean.OrderBean;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class RJDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Job job = Job.getInstance(new Configuration());

job.setJarByClass(RJDriver.class);

job.setMapperClass(RJMapper.class);

job.setReducerClass(RJReducer.class);

job.setMapOutputKeyClass(OrderBean.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(OrderBean.class);

job.setOutputValueClass(NullWritable.class);

job.setGroupingComparatorClass(RJComparator.class);

FileInputFormat.setInputPaths(job,new Path("E:\\北大青鸟\\大数据04\\hadoop\\Join\\Reduce"));

FileOutputFormat.setOutputPath(job,new Path("E:\\北大青鸟\\大数据04\\hadoop\\Join\\OutReduce"));

boolean b = job.waitForCompletion(true);

System.exit(b ? 0: 1);

}

}

3.Map Join

3.1 使用场景

Map Join适用于一张表十分小,一张表很大的场景;

3.2 优点

在map端缓存多张表,提前处理业务逻辑,这样增加map端业务,减少reduce端数据的压力,尽可能的减少数据倾斜;

3.3 具体方法

3.3.1 在mapper的setup阶段,将文件读取到缓存集合中;

3.3.2 在驱动函数中加载缓存;

//缓存普通文件到Task运行节点;

job.addCacheFile(new URI("file://e:/cache/pd.txt"));

4.Map Join案例实操

4.1 Driver编写

package com.wn.mapjoin;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

import java.net.URI;

public class MJDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Job job = Job.getInstance(new Configuration());

job.setJarByClass(MJDriver.class);

job.setMapperClass(MJMapper.class);

job.setNumReduceTasks(0);

job.addCacheFile(URI.create("file:///E:/北大青鸟/大数据04/hadoop/Join/Reduce/pd.txt"));

FileInputFormat.setInputPaths(job,new Path("E:\\北大青鸟\\大数据04\\hadoop\\Join\\Reduce\\order.txt"));

FileOutputFormat.setOutputPath(job,new Path("E:\\北大青鸟\\大数据04\\hadoop\\Join\\OutReduce"));

boolean b = job.waitForCompletion(true);

System.exit(b ? 0 : 1);

}

}

4.2 mapper编写

package com.wn.mapjoin;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.*;

import java.net.URI;

import java.util.HashMap;

import java.util.Map;

public class MJMapper extends Mapper<LongWritable, Text,Text, NullWritable> {

private Map<String,String> pMap=new HashMap<>();

private Text k=new Text();

@Override

protected void setup(Context context) throws IOException, InterruptedException {

URI[] cacheFiles = context.getCacheFiles();

String path = cacheFiles[0].getPath().toString();

BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(new FileInputStream(path)));

String line;

while (StringUtils.isNotEmpty(line=bufferedReader.readLine())){

String[] split = line.split("\t");

pMap.put(split[0],split[1]);

}

IOUtils.closeStream(bufferedReader);

}

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] fieIds = value.toString().split("\t");

String pname = pMap.get(fieIds[1]);

k.set(fieIds[0]+"\t"+pname+"\t"+fieIds[2]);

context.write(k,NullWritable.get());

}

}

posted on

posted on