Logging Conventions

1. Configure the log output format

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration debug="true" scan="true">
        <!-- Note: Spring Boot documents its Logback extensions (such as springProperty) as
             incompatible with configuration scanning, so consider scan="false" here. -->

        <!-- Read the log level specified in the yml file -->
        <springProperty scope="context" name="LOG_LEVEL" source="logging.level.root"/>
        <!-- Read the file name specified in the yml file -->
        <springProperty scope="context" name="APPNAME" source="logging.name"/>
        <!-- Log file output directory -->
        <property name="LOG_HOME" value="/apps/log" />
        <jmxConfigurator />

        <!-- Colored logs: converter classes that the colored output depends on -->
        <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter" />
        <conversionRule conversionWord="wex" converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter" />
        <conversionRule conversionWord="wEx" converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter" />
        <!-- Colored log pattern -->
        <property name="CONSOLE_LOG_PATTERN" value="${CONSOLE_LOG_PATTERN:-%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${PID:- }){magenta} %X{reqIP} --- %clr([%X{requestId}]) [%thread] %clr([%level: %file:%line]) %clr(--) %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}" />

        <!-- ERROR-level log file -->
        <appender name="error_file" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <!-- Output file path -->
            <file>${LOG_HOME}/${APPNAME}/error.log</file>
            <!-- Rolling policy -->
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${APPNAME}/web_error_%d{yyyy-MM-dd}_%i.log.zip</fileNamePattern>
                <!-- Number of days to keep log files -->
                <maxHistory>3</maxHistory>
                <cleanHistoryOnStart>true</cleanHistoryOnStart>
                <!-- Maximum size of each file -->
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>100MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>ERROR</level>
            </filter>
            <encoder>
                <charset>UTF-8</charset>
                <!-- Log output pattern -->
                <!--<pattern>${CONSOLE_LOG_PATTERN}</pattern>-->
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} ${PID:- } %X{reqIP} --- [%X{requestId}] [%thread] [%level: %file:%line] -- %msg%n</pattern>
            </encoder>
        </appender>

        <!-- WARN-level log file (disabled)
        <appender name="warn_file" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${LOG_HOME}/${APPNAME}/warn.log</file>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${APPNAME}/web_warn_%d{yyyy-MM-dd}_%i.log.zip</fileNamePattern>
                <maxHistory>7</maxHistory>
                <cleanHistoryOnStart>true</cleanHistoryOnStart>
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>100MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>WARN</level>
            </filter>
            <encoder>
                <charset>UTF-8</charset>
                <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            </encoder>
        </appender>
        -->

        <!-- INFO-level log file -->
        <appender name="info_file" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${LOG_HOME}/${APPNAME}/info.log</file>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${APPNAME}/web_info_%d{yyyy-MM-dd}_%i.log.zip</fileNamePattern>
                <maxHistory>3</maxHistory>
                <cleanHistoryOnStart>false</cleanHistoryOnStart>
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <!-- Start a new file every 100 MB -->
                    <maxFileSize>100MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>INFO</level>
            </filter>
            <encoder>
                <charset>UTF-8</charset>
                <!--<pattern>${CONSOLE_LOG_PATTERN}</pattern>-->
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} ${PID:- } %X{reqIP} --- [%X{requestId}] [%thread] [%level: %file:%line] -- %msg%n</pattern>
            </encoder>
        </appender>

        <!-- Console output -->
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <encoder>
                <charset>UTF-8</charset>
                <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            </encoder>
        </appender>

        <!-- Asynchronous output -->
        <appender name="async_info_log" class="ch.qos.logback.classic.AsyncAppender">
            <discardingThreshold>0</discardingThreshold>
            <queueSize>512</queueSize>
            <includeCallerData>true</includeCallerData>
            <appender-ref ref="info_file"/>
        </appender>

        <root level="${LOG_LEVEL}">
            <appender-ref ref="async_info_log" />
            <!--<appender-ref ref="warn_file" />-->
            <appender-ref ref="error_file" />
            <appender-ref ref="STDOUT" />
        </root>

        <!-- Default level for the project package and its sub-packages -->
        <logger name="com.wulei" level="INFO"/>
        <!-- log4jdbc -->
        <logger name="jdbc.sqltiming" level="INFO"/>
        <logger name="org.springframework" level="INFO"/>
        <!-- MyBatis SQL statements -->
        <logger name="com.wulei.mapper" level="DEBUG"/>
        <!--<logger name="com.miniso.common.returnplan.mapper" level="DEBUG"/>
        <logger name="com.miniso.common.campaign.mapper.MpCampaignLevelCommonMapper" level="INFO"/>-->
        <!-- springside modules -->
        <logger name="org.springside.modules" level="INFO"/>
    </configuration>

2. Point the application at the logging configuration file

    logging:
      name: ${spring.application.name}
      config: classpath:logback-spring.xml
      level:
        root: info
    spring:
      application:
        name: dcloud-account

3. Customize the thread pool so that context values are passed to worker threads

    package com.wulei.config;

    import org.slf4j.MDC;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.scheduling.annotation.EnableAsync;
    import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

    import java.util.Map;
    import java.util.concurrent.Callable;
    import java.util.concurrent.Future;
    import java.util.concurrent.ThreadPoolExecutor;

    /**
     * Thread pool configuration.
     *
     * Four situations in which the Async annotation does not take effect:
     * 1. The annotated method is not public.
     * 2. The annotated method returns something other than void or Future.
     * 3. The annotated method is static.
     * 4. The caller and the callee are in the same class.
     *
     * Methods that should run asynchronously are usually extracted into a separate class,
     * because the annotation is implemented with Spring's dynamic proxies: when a method
     * carries it, Spring generates a proxy class. If the caller and the callee are in the
     * same class, the call does not go through the proxy but is made directly on the bean.
     *
     * Another way around these cases is to skip the annotation and hand the work to the
     * thread pool directly:
     *
     * <pre>{@code
     * @Autowired
     * private ThreadPoolTaskExecutor threadPoolTaskExecutor;
     *
     * threadPoolTaskExecutor.execute(() -> { testSend(); });
     * }</pre>
     */
    @Configuration
    // Enable asynchronous execution
    @EnableAsync
    public class ThreadPoolTaskConfig {

        @Bean("threadPoolTaskExecutor")
        public ThreadPoolTaskExecutor threadPoolTaskExecutor() {
            // ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();
            ThreadPoolTaskExecutor executor = new HydraThreadPoolTaskExecutor();

            // Core pool size: the minimum number of threads the pool maintains. They stay
            // alive even when there is no work. If allowCoreThreadTimeOut is true
            // (default false), core threads are also shut down after timing out.
            executor.setCorePoolSize(4);
            // executor.setAllowCoreThreadTimeOut(true);

            // Capacity of the waiting queue
            executor.setQueueCapacity(1000);

            // Maximum pool size: when the thread count is >= corePoolSize and the queue is
            // full, the pool creates new threads. When the queue is full and the thread count
            // equals maxPoolSize, the pool rejects the task and throws an exception.
            executor.setMaxPoolSize(8);

            // Threads idle for keepAliveSeconds exit until the thread count equals
            // corePoolSize. Here threads above the core size are destroyed after 30 idle
            // seconds. With allowCoreThreadTimeOut=true the count can drop to 0.
            executor.setKeepAliveSeconds(30);

            // Spring's ThreadPoolTaskExecutor provides setThreadNamePrefix();
            // the JDK's ThreadPoolExecutor does not.
            executor.setThreadNamePrefix("我的线程池-");

            /*
             * Rejection policies
             *
             * CallerRunsPolicy: the calling thread (for example main) runs the task itself
             *   instead of waiting for a pool thread.
             * AbortPolicy: the default. When the queue is full, the task is dropped and a
             *   RejectedExecutionException is thrown.
             * DiscardPolicy: when the queue is full, the task is dropped silently.
             * DiscardOldestPolicy: when the queue is full, the oldest queued task is removed
             *   to make room and the new task is submitted again.
             */
            executor.setRejectedExecutionHandler(new ThreadPoolExecutor.AbortPolicy());
            executor.initialize();
            return executor;
        }

        /**
         * Overrides methods of ThreadPoolTaskExecutor so that the submitting thread's MDC
         * values are passed to the worker thread.
         */
        static class HydraThreadPoolTaskExecutor extends ThreadPoolTaskExecutor {

            private static final long serialVersionUID = 1L;

            private boolean useFixedContext = false;
            private Map<String, String> fixedContext;

            public HydraThreadPoolTaskExecutor() {
                super();
            }

            public HydraThreadPoolTaskExecutor(Map<String, String> fixedContext) {
                super();
                this.fixedContext = fixedContext;
                useFixedContext = (fixedContext != null);
            }

            // Snapshot taken on the submitting thread, at submission time
            private Map<String, String> getContextForTask() {
                return useFixedContext ? fixedContext : MDC.getCopyOfContextMap();
            }

            @Override
            public void execute(Runnable command) {
                super.execute(wrapExecute(command, getContextForTask()));
            }

            @Override
            public <T> Future<T> submit(Callable<T> task) {
                return super.submit(wrapSubmit(task, getContextForTask()));
            }

            private <T> Callable<T> wrapSubmit(Callable<T> task, final Map<String, String> context) {
                return () -> {
                    // Remember the worker's own MDC so it can be restored afterwards
                    Map<String, String> previous = MDC.getCopyOfContextMap();
                    if (context == null) {
                        MDC.clear();
                    } else {
                        MDC.setContextMap(context);
                    }
                    try {
                        return task.call();
                    } finally {
                        if (previous == null) {
                            MDC.clear();
                        } else {
                            MDC.setContextMap(previous);
                        }
                    }
                };
            }

            private Runnable wrapExecute(final Runnable runnable, final Map<String, String> context) {
                return () -> {
                    Map<String, String> previous = MDC.getCopyOfContextMap();
                    if (context == null) {
                        MDC.clear();
                    } else {
                        MDC.setContextMap(context);
                    }
                    try {
                        runnable.run();
                    } finally {
                        if (previous == null) {
                            MDC.clear();
                        } else {
                            MDC.setContextMap(previous);
                        }
                    }
                };
            }
        }
    }
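
To see why the wrapping in the executor above is needed, here is a minimal sketch that uses only the JDK. It assumes a plain `ThreadLocal` map as a stand-in for `org.slf4j.MDC`; the class `ContextPropagationSketch` and its methods are hypothetical names chosen for illustration and are not part of the original code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class ContextPropagationSketch {

    // Hypothetical stand-in for MDC: every thread sees its own map.
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    static void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }

    static String get(String key) {
        return CONTEXT.get().get(key);
    }

    /** Captures the caller's context now and installs it around the task later. */
    static <T> Callable<T> wrap(Callable<T> task) {
        final Map<String, String> captured = new HashMap<>(CONTEXT.get());
        return () -> {
            Map<String, String> previous = CONTEXT.get();
            CONTEXT.set(new HashMap<>(captured));
            try {
                return task.call();
            } finally {
                // Restore, so that pooled threads do not leak values between tasks.
                CONTEXT.set(previous);
            }
        };
    }

    /** Reads "requestId" on a worker thread, with or without wrapping. */
    static String readOnWorker(boolean wrapped) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            put("requestId", "requestId-demo");
            Callable<String> lookup = () -> get("requestId");
            return pool.submit(wrapped ? wrap(lookup) : lookup).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("unwrapped: " + readOnWorker(false)); // prints "unwrapped: null"
        System.out.println("wrapped:   " + readOnWorker(true));  // prints "wrapped:   requestId-demo"
    }
}
```

Without wrapping, the worker thread has its own empty map, so the request ID is missing from anything it logs. Capturing at submission time and restoring in `finally` follows the same pattern as `wrapSubmit` and `wrapExecute`.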

4. Bind a unique identifier to each request

    import com.miniso.shelf.utils.IPHelper;
    import org.slf4j.MDC;
    import org.springframework.stereotype.Component;

    import javax.servlet.*;
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import java.io.IOException;
    import java.lang.management.ManagementFactory;
    import java.lang.management.RuntimeMXBean;
    import java.util.UUID;

    @Component
    public class LogMDCFilter implements Filter {

        private static final String REQUEST_ID = "requestId";
        private static final String REQ_IP = "reqIP";
        private static final String REQUEST_ID_PRE = "requestId-";

        @Override
        public void init(FilterConfig filterConfig) throws ServletException {
        }

        @Override
        public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
                throws IOException, ServletException {
            HttpServletRequest httpRequest = (HttpServletRequest) request;
            HttpServletResponse httpResponse = (HttpServletResponse) response;

            // Reuse the ID sent by an upstream service, otherwise generate a new one
            String requestId = httpRequest.getHeader(REQUEST_ID);
            if (requestId == null) {
                requestId = REQUEST_ID_PRE + UUID.randomUUID().toString().replace("-", "");
            }
            try {
                // These keys are read by %X{reqIP} and %X{requestId} in the log pattern
                MDC.put(REQ_IP, IPHelper.getClientIp());
                MDC.put(REQUEST_ID, requestId);
                httpResponse.setHeader(REQUEST_ID, requestId);
                chain.doFilter(request, response);
            } finally {
                // Container threads are pooled, so always clear the MDC
                MDC.clear();
            }
        }

        @Override
        public void destroy() {
        }

        // The runtime name usually has the form "pid@hostname"
        public static int getProcessID() {
            RuntimeMXBean runtimeMXBean = ManagementFactory.getRuntimeMXBean();
            return Integer.parseInt(runtimeMXBean.getName().split("@")[0]);
        }
    }
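
The ID-resolution step of the filter can be looked at in isolation. The following is a minimal JDK-only sketch; `RequestIdSketch` and `resolveRequestId` are hypothetical names, and the blank-header check is an assumption added here (the filter above only checks for `null`).

```java
import java.util.UUID;

final class RequestIdSketch {

    static final String REQUEST_ID_PRE = "requestId-";

    /** Reuses an upstream ID when one was sent; otherwise generates a new one. */
    static String resolveRequestId(String headerValue) {
        // Assumption: a blank header is treated like a missing one.
        if (headerValue != null && !headerValue.trim().isEmpty()) {
            return headerValue;
        }
        // A UUID string has 36 characters including 4 hyphens, so 32 remain.
        return REQUEST_ID_PRE + UUID.randomUUID().toString().replace("-", "");
    }

    public static void main(String[] args) {
        System.out.println(resolveRequestId("requestId-abc")); // prints "requestId-abc"
        System.out.println(resolveRequestId(null));            // a freshly generated ID
    }
}
```

Reusing the incoming value is what lets several services log the same ID for one logical request.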

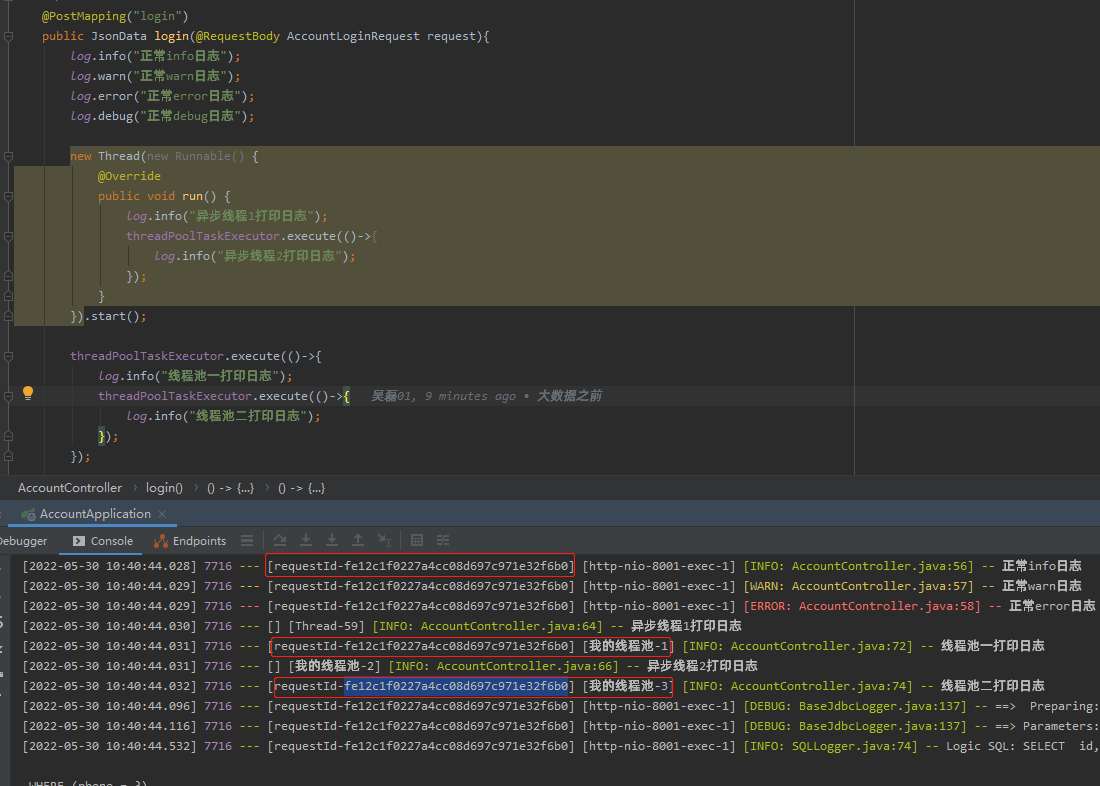

Test

Wrap outgoing HTTP requests so that values are passed along between services

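    import feign.RequestInterceptor;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.web.context.request.RequestContextHolder;
    import org.springframework.web.context.request.ServletRequestAttributes;

    import javax.servlet.http.HttpServletRequest;

    @Configuration
    public class AppConfig {

        /**
         * Fixes the token being lost on Feign calls by adding an interceptor.
         */
        @Bean
        public RequestInterceptor requestInterceptor() {
            return template -> {
                ServletRequestAttributes attributes =
                        (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();
                if (attributes != null) {
                    HttpServletRequest httpServletRequest = attributes.getRequest();
                    if (httpServletRequest == null) {
                        return;
                    }
                    String token = httpServletRequest.getHeader("token");
                    // Only forward the header when the incoming request carried one
                    if (token != null) {
                        template.header("token", token);
                    }
                    // The request ID can be bound here as well, to link requests across services
                }
            };
        }
    }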
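
The comment in the interceptor suggests forwarding the request ID as well. As a minimal JDK-only sketch of that selection logic, the hypothetical helper `OutboundHeadersSketch.build` below picks the keys to forward from a context map and skips missing values. In a real interceptor the map would presumably come from `MDC.getCopyOfContextMap()`, and each entry would be passed to `template.header(name, value)`; that wiring is an assumption and is not shown in the original.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class OutboundHeadersSketch {

    /** Copies the selected keys from the context into outbound headers, skipping missing values. */
    static Map<String, String> build(Map<String, String> context, String... keys) {
        Map<String, String> headers = new LinkedHashMap<>();
        if (context == null) {
            return headers; // nothing to forward, e.g. outside of a request
        }
        for (String key : keys) {
            String value = context.get(key);
            if (value != null) {
                headers.put(key, value);
            }
        }
        return headers;
    }

    public static void main(String[] args) {
        Map<String, String> context = new LinkedHashMap<>();
        context.put("requestId", "requestId-demo");
        context.put("reqIP", "10.0.0.1");
        // Only requestId is forwarded; "token" is absent from the context and is skipped.
        System.out.println(build(context, "requestId", "token")); // prints "{requestId=requestId-demo}"
    }
}
```

Because `LogMDCFilter` on the receiving side reads the `requestId` header before generating a new value, forwarding it keeps one ID across the whole call chain.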
