Flink

1.WordCount入门程序

创建一个maven项目,依赖如下

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>Flink</artifactId>

<groupId>com.oasisgames</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<encoding>UTF-8</encoding>

<java.version>1.8</java.version>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<flink.version>1.13.6</flink.version>

<scala.binary.version>2.11</scala.binary.version>

</properties>

<modelVersion>4.0.0</modelVersion>

<dependencies>

<!--Flink-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<!-- <version>1.10.1</version>-->

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!--Flink-clients-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!--filesystem-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-filesystem_${scala.binary.version}</artifactId>

<version>1.11.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/cn.hutool/hutool-all -->

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>5.0.5</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.parquet/parquet-avro -->

<dependency>

<groupId>org.apache.parquet</groupId>

<artifactId>parquet-avro</artifactId>

<version>1.11.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-parquet_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.alibaba/fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.78</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-hadoop-2-uber</artifactId>

<version>2.7.5-10.0</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.16</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.projectlombok/lombok -->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.16.6</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/com.drewnoakes/metadata-extractor -->

<dependency>

<groupId>com.drewnoakes</groupId>

<artifactId>metadata-extractor</artifactId>

<version>2.16.0</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-files -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-files</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Table API 和 Flink SQL -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.12</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_2.12</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-avro</artifactId>

<version>${flink.version}</version>

</dependency>

<!--动态代理 的包-->

<dependency>

<groupId>cglib</groupId>

<artifactId>cglib</artifactId>

<version>3.1</version>

</dependency>

<dependency>

<groupId>com.google.collections</groupId>

<artifactId>google-collections</artifactId>

<version>1.0</version>

</dependency>

<!--json扁平化-->

<dependency>

<groupId>com.github.wnameless.json</groupId>

<artifactId>json-flattener</artifactId>

<version>0.8.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-jdbc -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_2.11</artifactId>

<version>1.13.6</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.16</version>

</dependency>

</dependencies>

<artifactId>FlinkTutorial</artifactId>

</project>

批处理WordCount

package com.wl;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.java.DataSet;

import org.apache.flink.api.java.ExecutionEnvironment;

import org.apache.flink.api.java.operators.DataSource;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.util.Collector;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/25 下午5:14

* @qq 2315290571

* @Description 批处理统计单词出现的次数

*/

public class WordCount {

public static void main(String[] args) throws Exception {

//创建批处理执行环境

ExecutionEnvironment environment = ExecutionEnvironment.getExecutionEnvironment();

// 读取文件

DataSource<String> input = environment.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/word.txt");

// 分组并求和

DataSet<Tuple2<String, Integer>> result = input.flatMap(new MyFlatMapper()).groupBy(0).sum(1);

result.print();

}

public static class MyFlatMapper implements FlatMapFunction<String, Tuple2<String, Integer>> {

public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {

// 按空格分组

String[] words = value.split(" ");

for (String word : words) {

// 将数据进行整合

out.collect(new Tuple2<>(word, 1));

}

}

}

}

word.txt

hello kotlin

hello java

hello spark

hello hadoop

hello flink

hello python

who are you

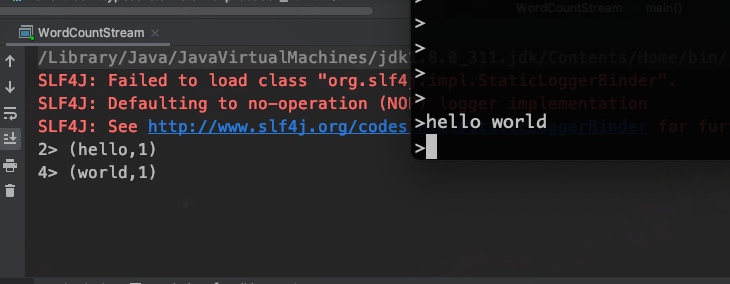

流式文件处理(配合kafka)

package com.wl;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/25 下午5:58

* @qq 2315290571

* @Description 流式处理文件

*/

public class WordCountStream {

public static void main(String[] args) throws Exception {

// 创建流处理运行环境

StreamExecutionEnvironment environment = StreamExecutionEnvironment.getExecutionEnvironment();

// 获取流

Properties properties = new Properties();

properties.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.2:9092");

properties.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "test-topic");

DataStream<String> dataStream = environment.addSource(new FlinkKafkaConsumer<String>("test-topic", new SimpleStringSchema(), properties));

// 打散成单词并分组求和

DataStream<Tuple2<String, Integer>> result = dataStream.flatMap(new WordCount.MyFlatMapper())

.keyBy(0)

.sum(1);

// 打印

result.print();

environment.execute("com.wl.WorldCount");

}

}

再启动kafka,发送消息

2.Flink安装和部署

StandaLone模式

安装启动

下载Flink

wget https://archive.apache.org/dist/flink/flink-1.10.1/flink-1.10.1-bin-scala_2.11.tgz

下载flink-hadoop插件

wget https://repo.maven.apache.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.7.5-10.0/flink-shaded-hadoop-2-uber-2.7.5-10.0.jar

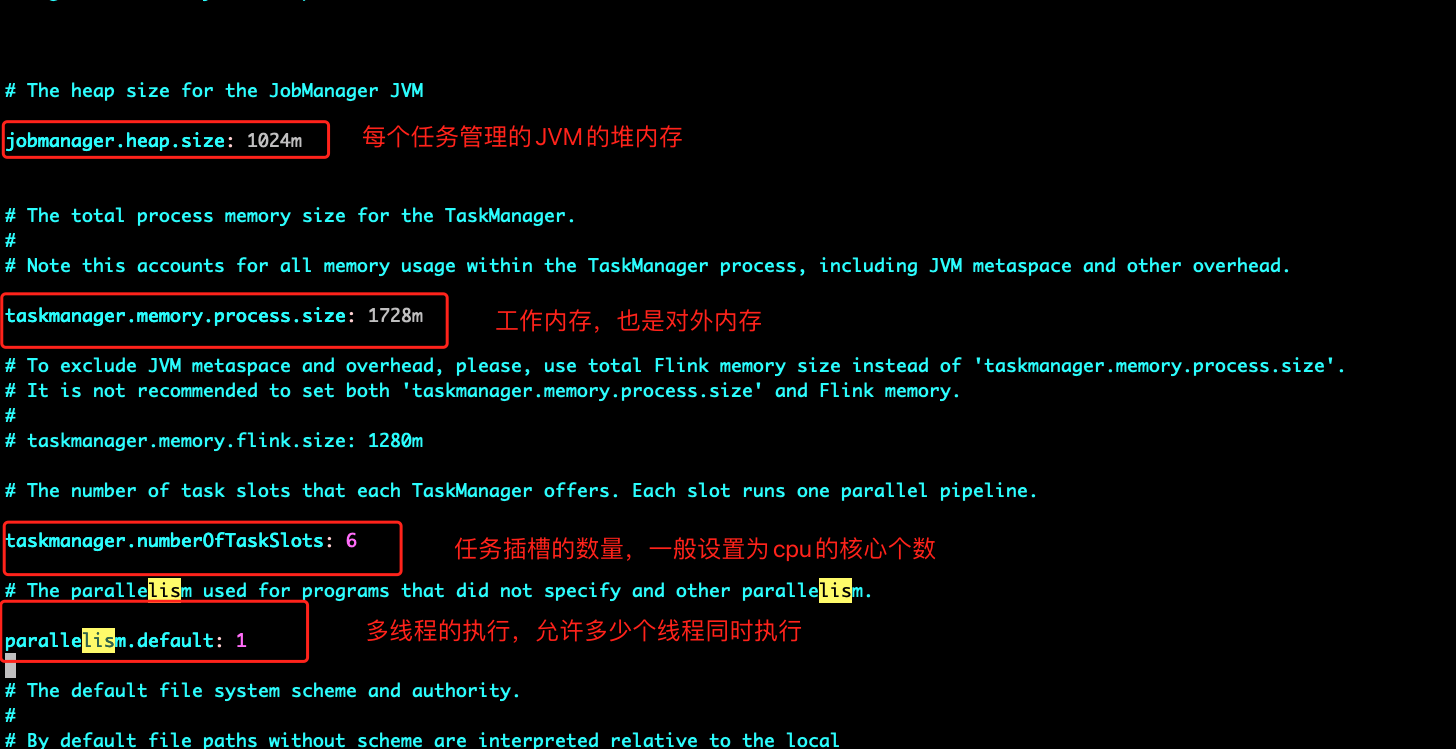

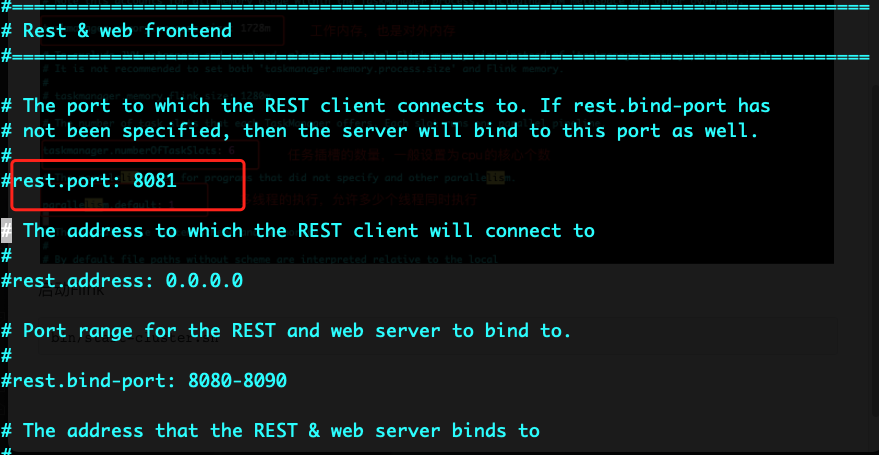

conf文件说明:

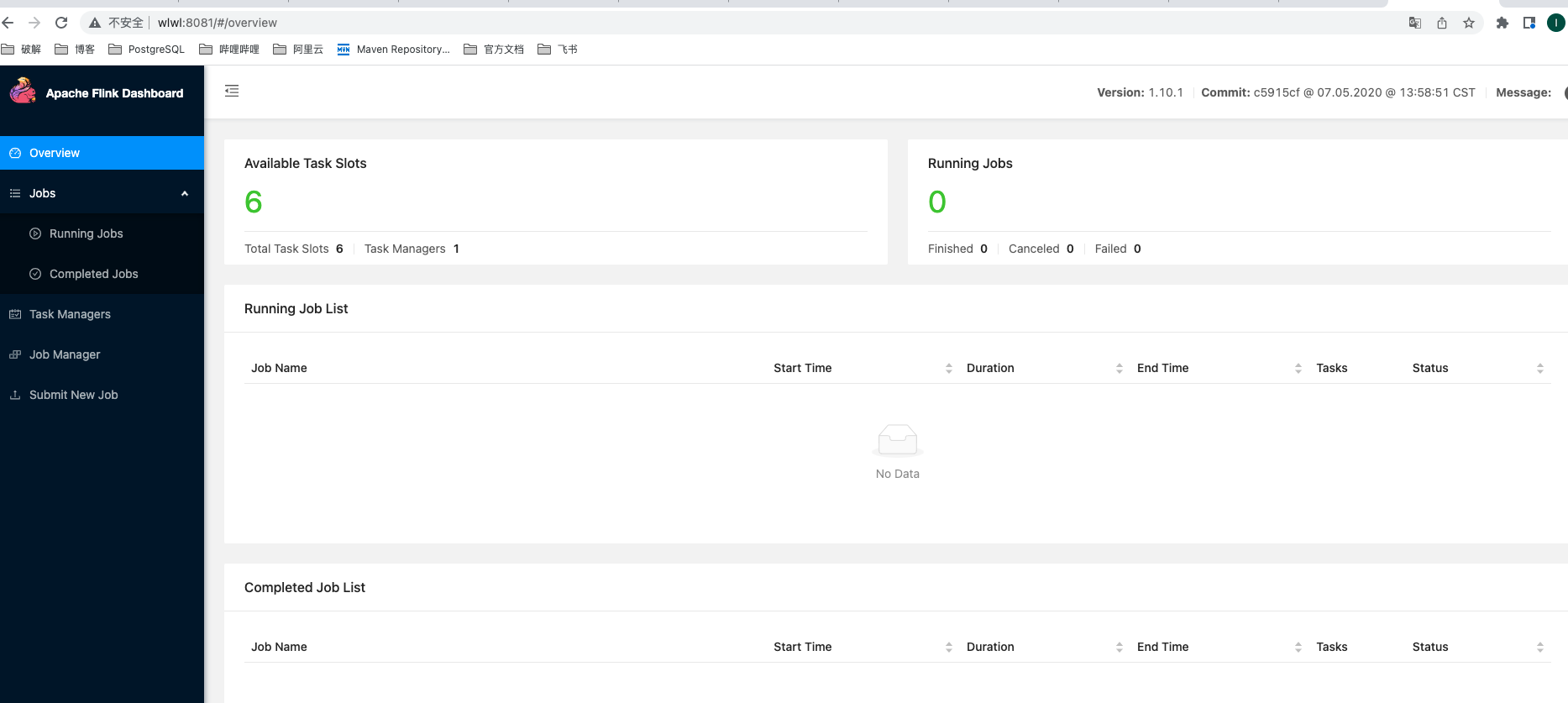

启动Flink

bin/start-cluster.sh

可以看到flink的默认web端口是8081

访问 8081端口得到web界面

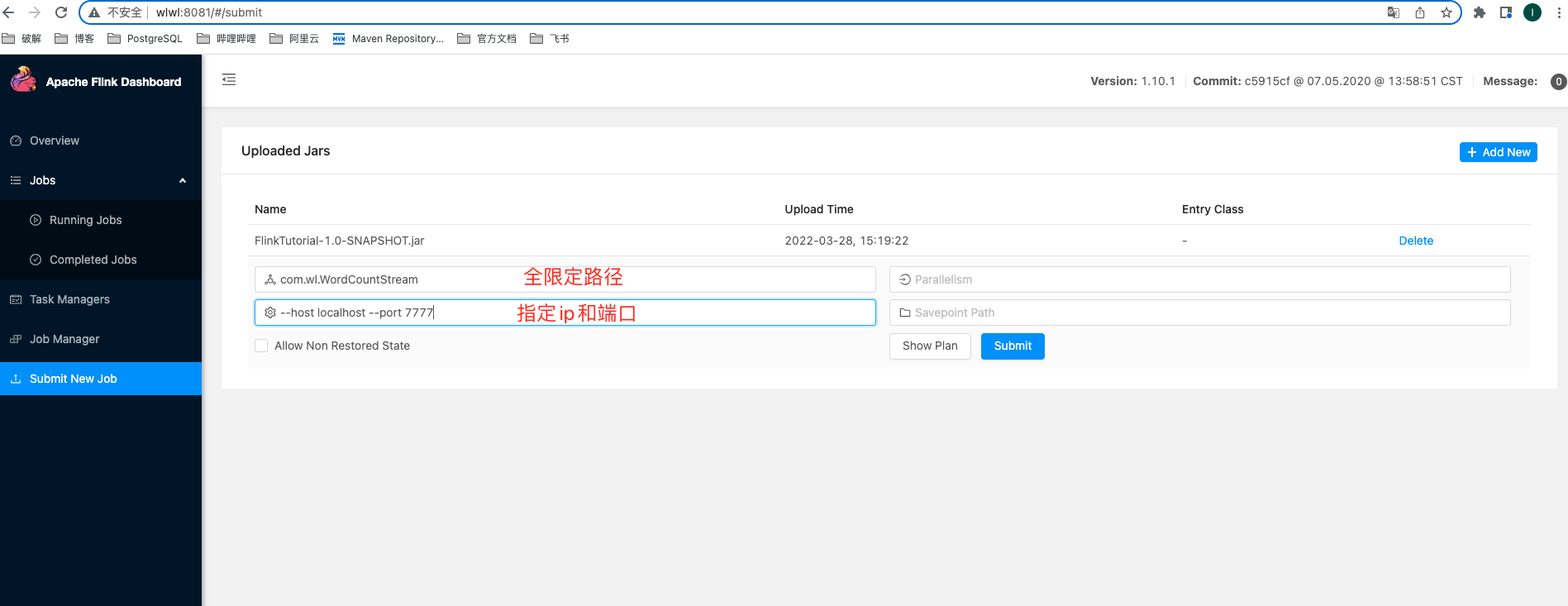

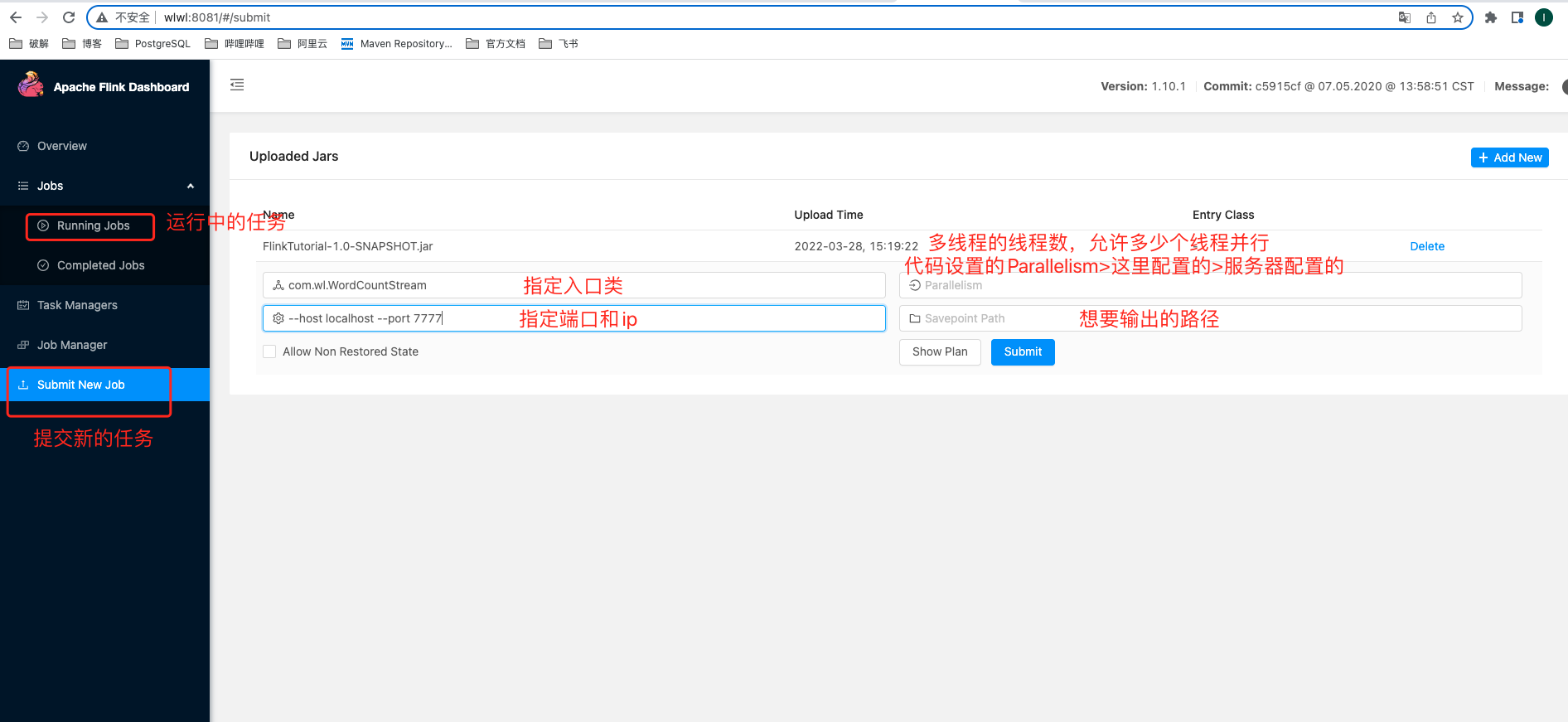

将写的java程序打成jar包在 Submit New Job 按钮中添加

然后点击jar包,输入以下内容

再点击Submit

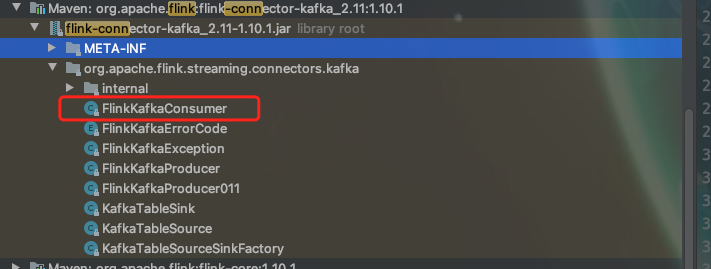

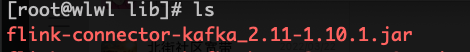

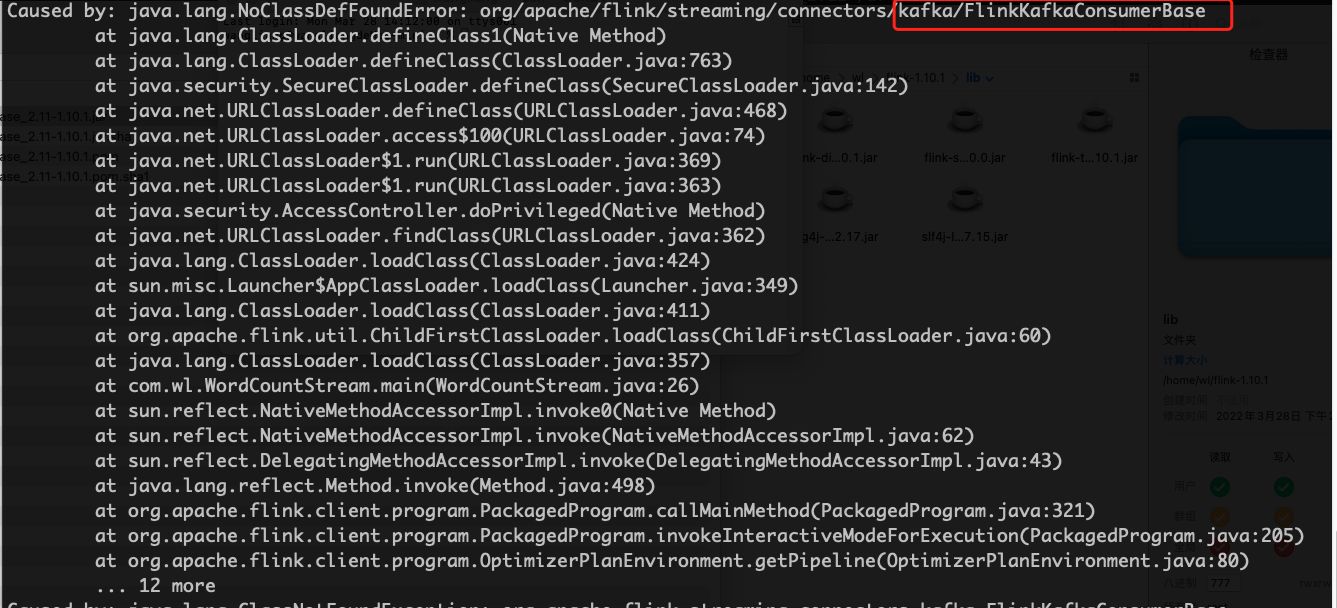

如果出现以下错误:

Caused by: java.lang.ClassNotFoundException:

org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

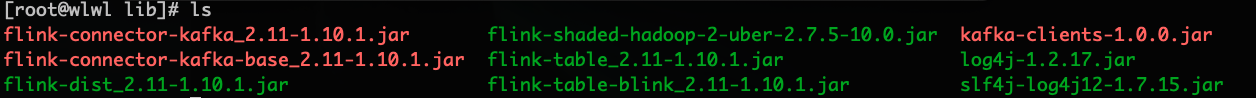

在 服务器上lib 目录下添加 FlinkKafkaConsumer所对应的jar包

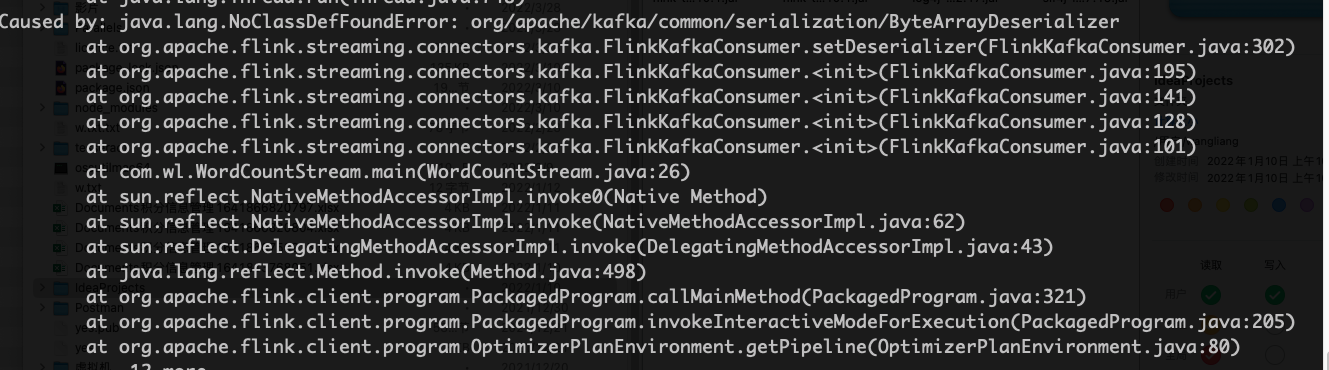

然后运行Flink集群,出现了新的错误

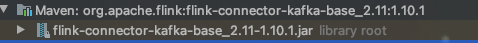

解决方法就是 将kafka-base的jar包上传到服务器上

再运行又报了个新错误

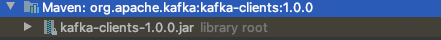

解决方法就是将 kafka-clients的jar加入到服务器的Flink的lib目录下

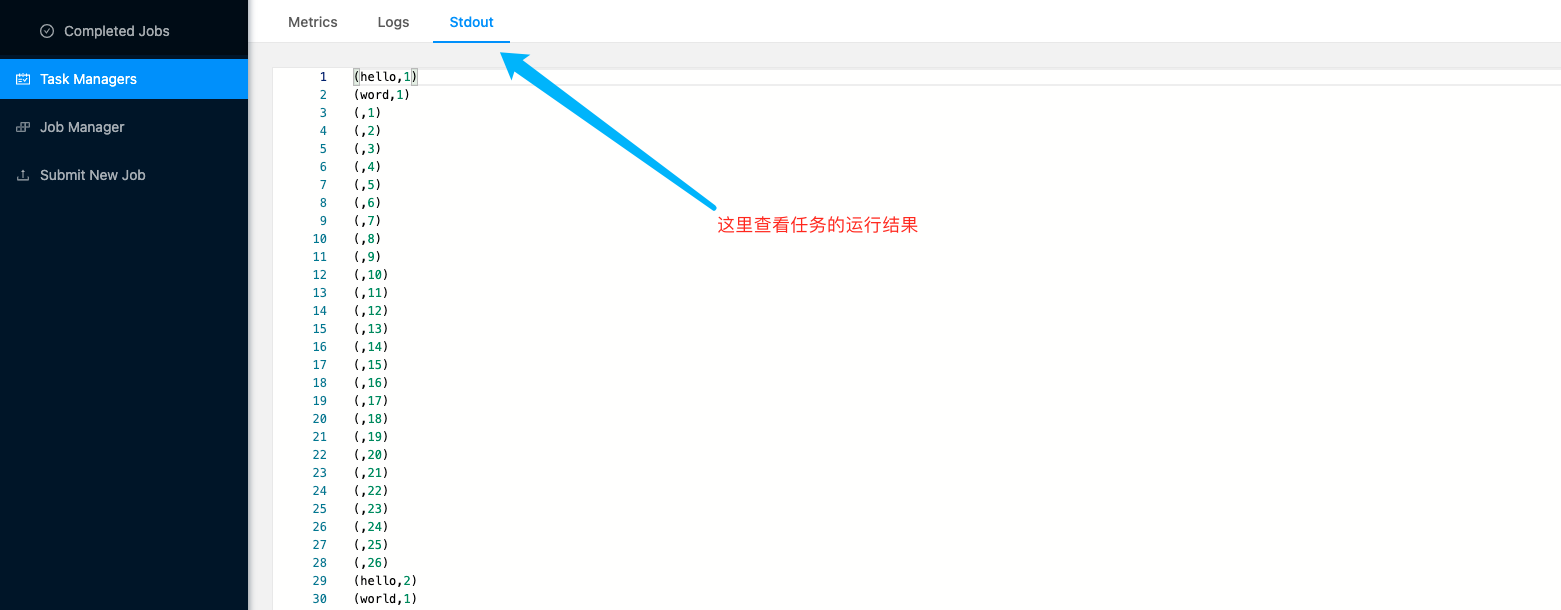

web页面提交任务

Submit New Job

Job Manager

Task Managers

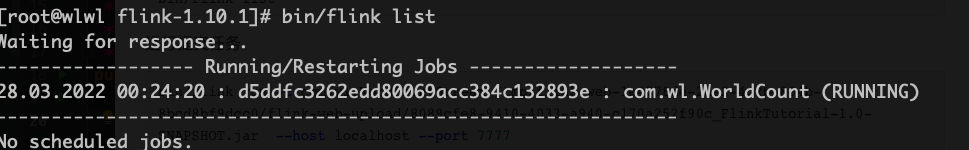

Shell命令提交任务

查看已经提交的任务

bin/flink list

运行提交任务

bin/flink run -c com.wl.WordCountStream /tmp/flink-web-1a4778de-2ae2-4c2b-884b-8bcd8bf9dcc0/flink-web-upload/8089cfe8-9410-4033-a940-c170a252f90c_FlinkTutorial-1.0-SNAPSHOT.jar --host localhost --port 7777

- -c 指定入口类

- -p 指定并行度 Parallelism

- jar包路径

- 启动参数

取消job

bin/flink cancel 4e0c7a93c3b4bec8ddc9d17451c6016f

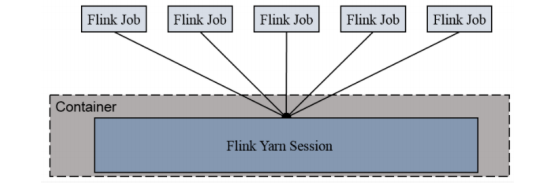

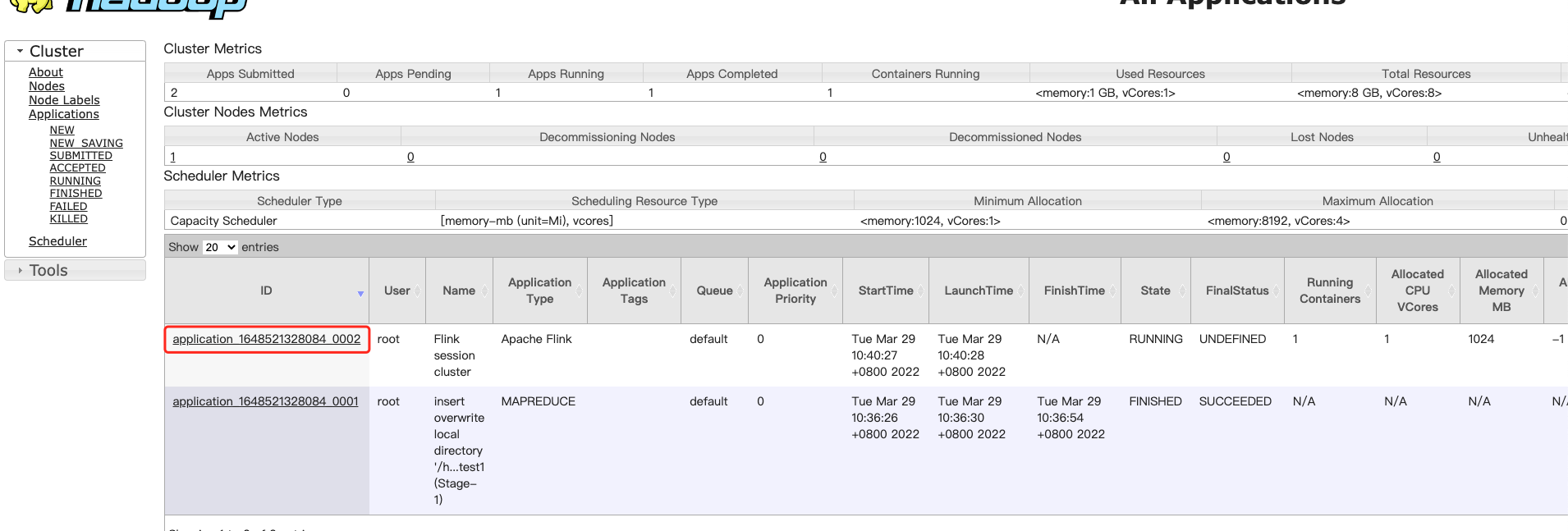

Yarn模式

Session Cluster模式

Session-Cluster 模式需要先启动集群,然后再提交作业,接着会向 yarn 申请一块空间后,资源永远保持不变。适合规模小执行时间短的作业

启动yarn-session

bin/yarn-session.sh -n 3 -s 3 -nm wl -d

-n(--container):TaskManager的数量-s(--slots):每个TaskManager的slot数量,默认一个slot一个core-jm:JobManager的内存(单位MB)。-tm:每个taskmanager的内存(单位MB)。-nm:yarn 的appName(现在yarn的webUi上的名字)。-d:后台执行。

关闭yarn-session

yarn application --kill application_1648521328084_0002

3.运行时架构

运行时的组件

JobManager(作业管理器)

主要的作用是控制一个应用程序的主进程,每个应用程序都会被一个不同的JobManager所控制执行。

应用程序会包括以下内容:

- JobGraph(作业图)

- logical dataflow graph(逻辑数据流图)

- jar包和类

JobManager会向ResourceManager(资源管理器)请求执行任务所必须的资源(插槽slot),一旦获取到了足够的资源,就会将JobGraph(作业图)转换为ExecutionGrap(执行图)分发给TaskManager去执行。

ResourceManager(资源管理器)

主要负责管理插槽,插槽是Flink中定义的处理资源单元。

JobManager申请插槽资源时,ResourceManager会将有空闲插槽的TaskManager分配给JobManager

TaskManger(任务管理器)

执行工作的进程。每个JobManager都包含一个或多个TaskManager运行,,每一个TaskManager都包含了一定数量的插槽(slots)。插槽的数量限制了TaskManager能够执行的任务数量。

TaskManager会向资源管理器注册自己的插槽,收到资源管理器的指令后,TaskManager就会将一个或者多个插槽提供给JobManager调用。JobManager就可以向插槽分配任务来执行了

在执行过程中,一个TaskManager可以跟其它运行同一应用程序的TaskManager交换数据

Dispatcher(分发器)

当一个应用被提交执行时,分发器就会启动并将应用移交给一个JobManager

Dispatcher也会启动一个Web UI,用来方便地展示和监控作业执行的信息。

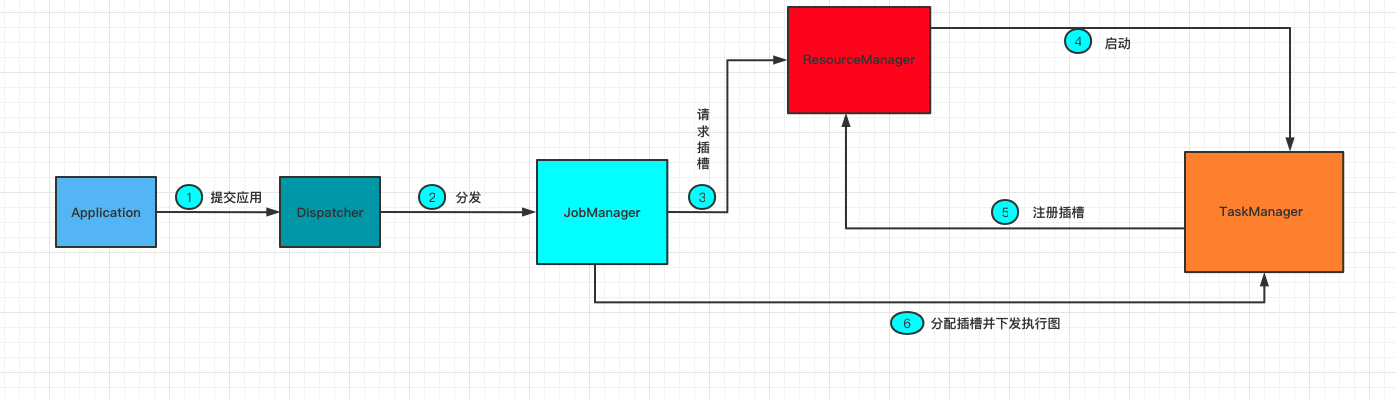

任务提交流程

当一个应用提交时,Flink的组件是如何交互协作的

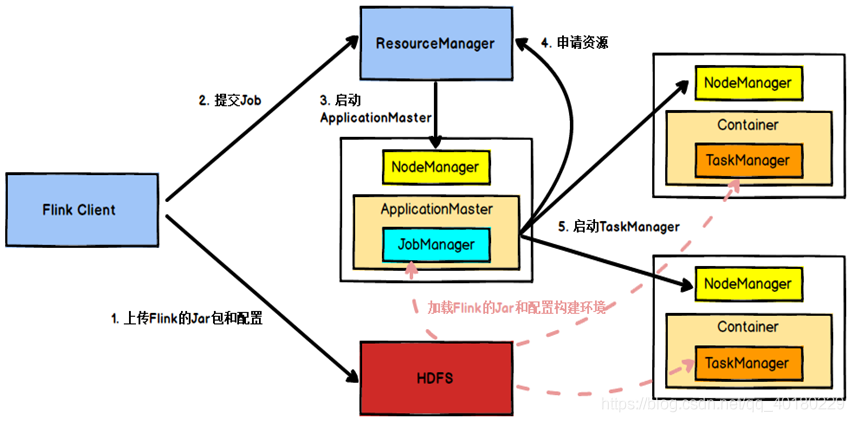

当部署在yarn上,流程如下

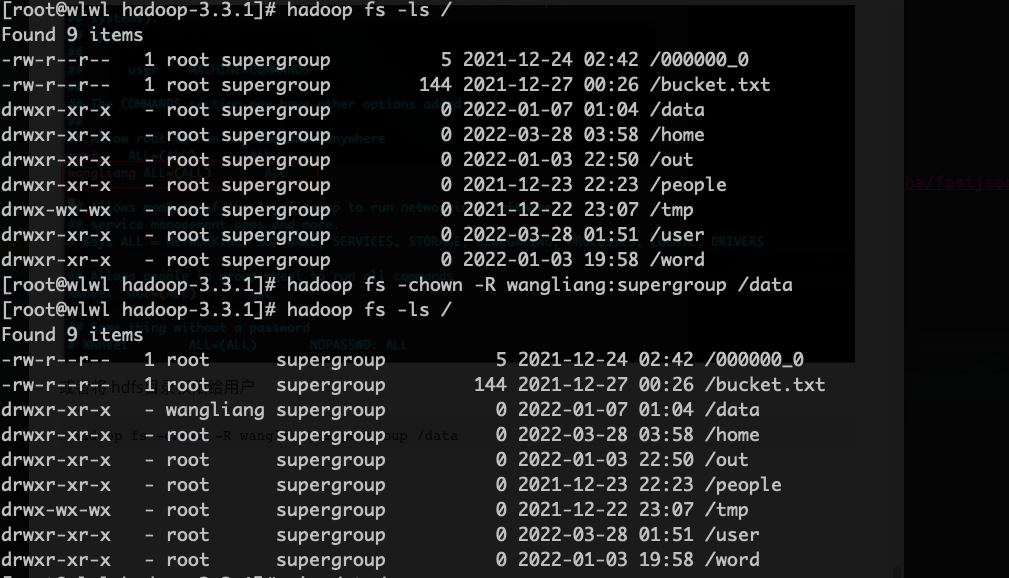

1.Flink任务提交后,Client向HDFS上传Flink的Jar包和配置

2.之后客户端向Yarn ResourceManager提交任务,ResourceManager分配Container资源并通知对应的NodeManager启动ApplicationMaster

3.ApplicationMaster启动后加载Flink的Jar包和配置构建环境,去启动JobManager,之后JobManager向Flink自身的RM进行申请资源,自身的RM向Yarn 的ResourceManager申请资源(因为是yarn模式,所有资源归yarn RM管理)启动TaskManager

4.Yarn ResourceManager分配Container资源后,由ApplicationMaster通知资源所在节点的NodeManager启动TaskManager

5.NodeManager加载Flink的Jar包和配置构建环境并启动TaskManager,TaskManager启动后向JobManager发送心跳包,并等待JobManager向其分配任务。

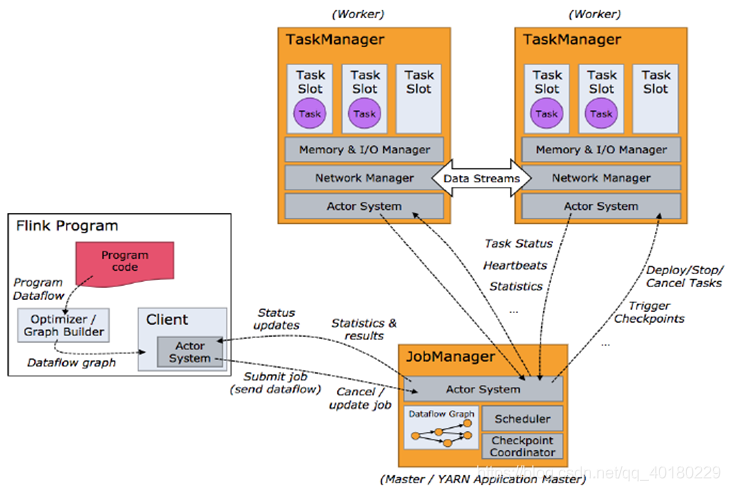

任务调度原理

1.客户端不是运行时和程序执行的一部分,但它用于准备并发送dataflow(JobGraph)给Master(JobManager),然后,客户端断开连接或者维持连接以等待接收计算结果。而Job Manager会产生一个执行图(Dataflow Graph)

2.当 Flink 集群启动后,首先会启动一个 JobManger 和一个或多个的 TaskManager。由 Client 提交任务给 JobManager,JobManager 再调度任务到各个 TaskManager 去执行,然后 TaskManager 将心跳和统计信息汇报给 JobManager。TaskManager 之间以流的形式进行数据的传输。上述三者均为独立的 JVM 进程。

3.Client 为提交 Job 的客户端,可以是运行在任何机器上(与 JobManager 环境连通即可)。提交 Job 后,Client 可以结束进程(Streaming的任务),也可以不结束并等待结果返回。

4.JobManager 主要负责调度 Job 并协调 Task 做 checkpoint,职责上很像 Storm 的 Nimbus。从 Client 处接收到 Job 和 JAR 包等资源后,会生成优化后的执行计划,并以 Task 的单元调度到各个 TaskManager 去执行。

5.TaskManager 在启动的时候就设置好了槽位数(Slot),每个 slot 能启动一个 Task,Task 为线程。从 JobManager 处接收需要部署的 Task,部署启动后,与自己的上游建立 Netty 连接,接收数据并处理。

5.Flink流处理API

从集合中读取数据

package com.wl;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/30 下午5:19

* @qq 2315290571

* @Description 从集合中读取数据

*/

public class ReadFromCollection {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStream<Student> dataStream= env.fromCollection(

Arrays.asList(

new Student("1001", "小明", 12),

new Student("1002", "小张", 13),

new Student("1003", "小李", 14),

new Student("1004", "小小", 15)

)

);

dataStream.print();

env.execute("collection job");

}

}

@AllArgsConstructor

@NoArgsConstructor

@Data

class Student{

private String stuNo;

private String name;

private Integer age;

}

从文件中获取数据

见1.WordCount实例

从Kafka中读取数据

见10.Flink读取Kafka投递文件到HDFS

基本转换算子(map/flatMap/filter)

java代码如下

package com.wl;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

import java.util.Arrays;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/30 下午5:58

* @qq 2315290571

* @Description 基础转换算子

*/

public class TransformDemo {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 从集合中读取数据

DataStream<Cat> dataStream = env.fromCollection(Arrays.asList(

new Cat(5, "cat1", "吃鱼"),

new Cat(4, "cat2", "抓老鼠"),

new Cat(3, "cat3", "睡觉"),

new Cat(2, "cat4", "睡觉")

));

// map映射 将对象转换为字符串输出

DataStream<String> mapStream = dataStream.map(cat -> {

return cat.toString();

});

// flatMap 拆分

DataStream<Character> flatMapStream = dataStream.flatMap(

new FlatMapFunction<Cat, Character>() {

@Override

public void flatMap(Cat value, Collector<Character> out) throws Exception {

char[] ch = value.toString().toCharArray();

for (char c : ch) {

out.collect(c);

}

}

}

);

// filter 过滤

DataStream<Cat> filterDataStream = dataStream.filter(cat -> {

return cat.getAge() > 3;

});

mapStream.print("map");

flatMapStream.print("flatMap");

filterDataStream.print("filter");

env.execute("Transform");

}

}

@AllArgsConstructor

@NoArgsConstructor

@Data

class Cat {

private Integer age;

private String name;

private String action;

}

聚合操作算子

DataStream里没有reduce和sum这类聚合操作的方法,因为Flink设计中,所有数据必须先分组才能做聚合操作。

KeyBy

这些算子可以针对KeyedStream的每一个支流做聚合。

-

sum()

-

min()

-

max()

-

minBy()

-

maxBy()

maxBy()

package com.wl;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/30 下午6:35

* @qq 2315290571

* @Description 分组聚合

*/

public class KeyByTransform {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 获取数据源

DataStream<String> dataStream = env.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/Cat.txt");

// 进行map整合操作

DataStream<Dog> stream = dataStream

.map(str -> {

String[] value = str.split(",");

return new Dog(Integer.parseInt(value[0]), value[1], value[2]);

})

// 按照 姓名分组

.keyBy(Dog::getName)

// 年龄最小的

.maxBy("age");

stream.print("result");

env.execute();

}

}

@AllArgsConstructor

@NoArgsConstructor

@Data

class Dog {

private Integer age;

private String name;

private String action;

}

启动

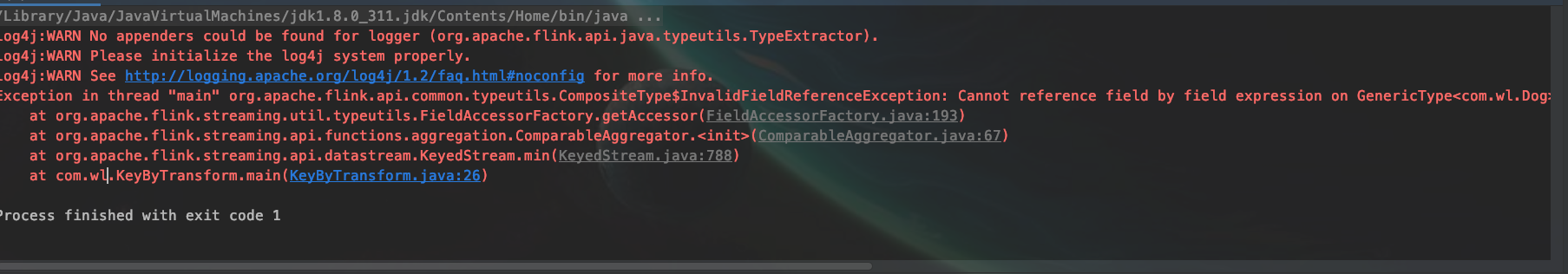

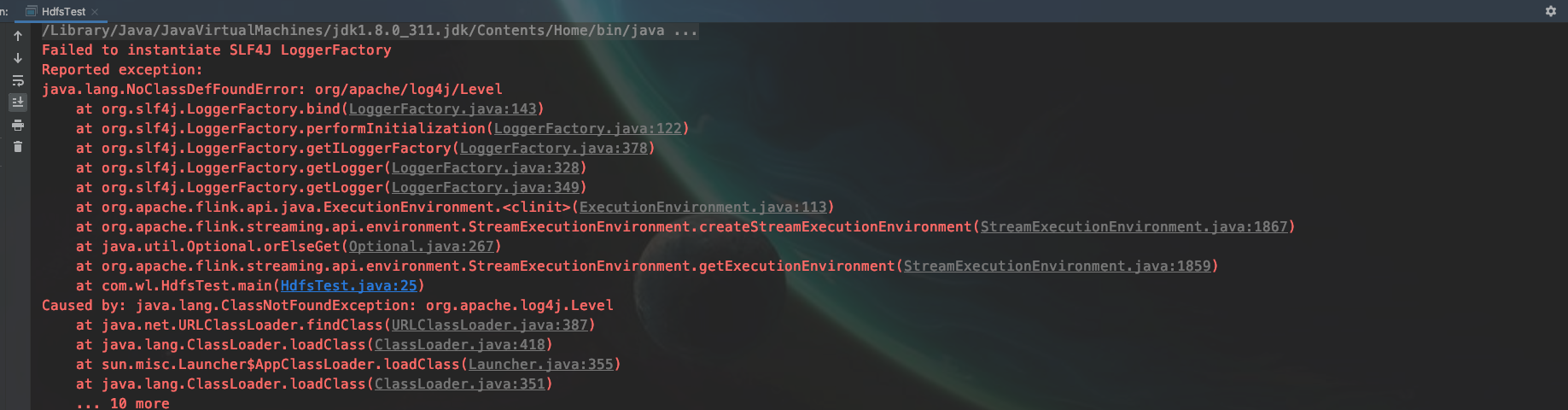

出现这个异常,我们在resources 目录下建一个文件 log4j.properties

可以更详细的看到报错信息

# 可以设置级别: debug>info>error

#debug :显示 debug 、 info 、 error

#info :显示 info 、 error

#error :只 error

# 也就是说只显示比大于等于当前级别的信息

log4j.rootLogger=info,appender1

#log4j.rootLogger=info,appender1

#log4j.rootLogger=error,appender1

# 输出到控制台

log4j.appender.appender1=org.apache.log4j.ConsoleAppender

# 样式为 TTCCLayout

log4j.appender.appender1.layout=org.apache.log4j.TTCCLayout

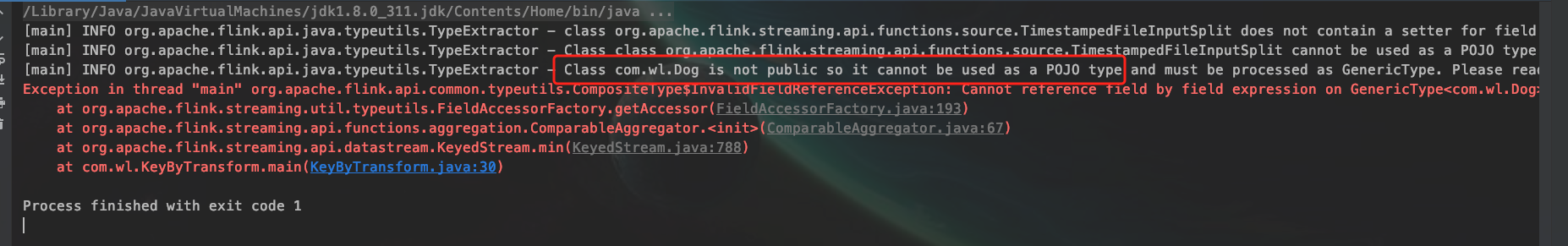

再次启动

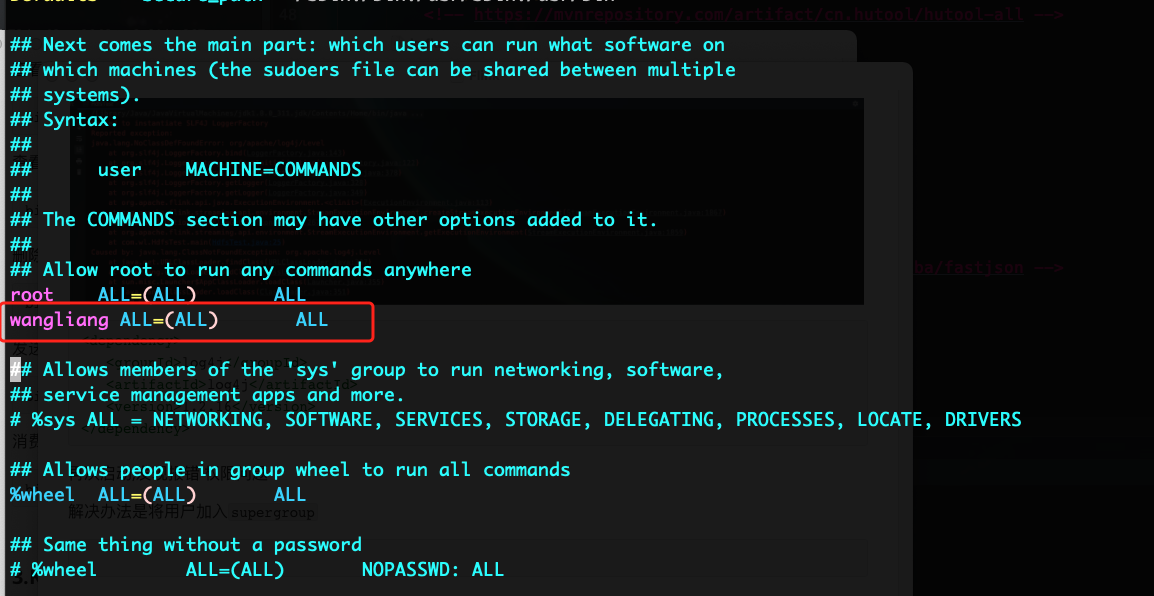

发现了一个重要的信息, Dog类不是一个public,flink不认为是一个标准的POJO类型

所以需要加上修饰符public,不放在一个类中了

package com.wl;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

@AllArgsConstructor

@NoArgsConstructor

@Data

public class Dog{

private Integer age;

private String name;

private String action;

}

Cat.txt

5,catA,eat mouse

10,catF,run

9,catA,sleep

7,catD,jump

8,catH,catch mouse

9,catF,sleep

6,catH,sleep

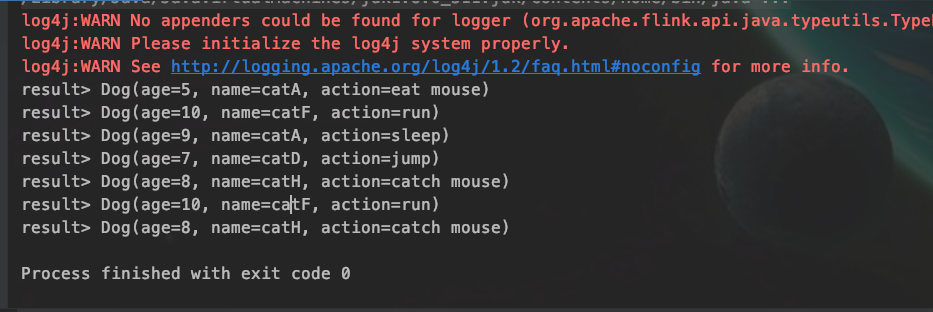

运行结果

结果的解释说明:

首先keyBy进行name分组,然后分别求每组的最大值,第一条数据进来,只有他自己,所以是最大的,然后第二条,接着继续滚动

多流转换算子

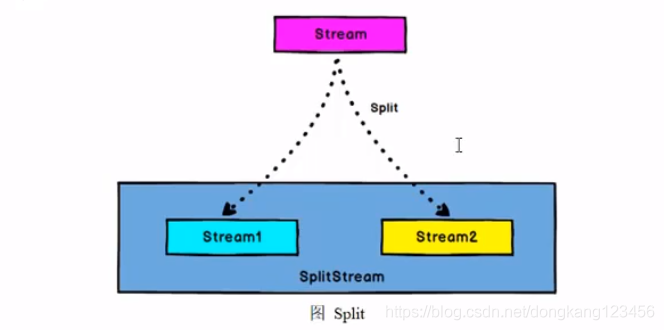

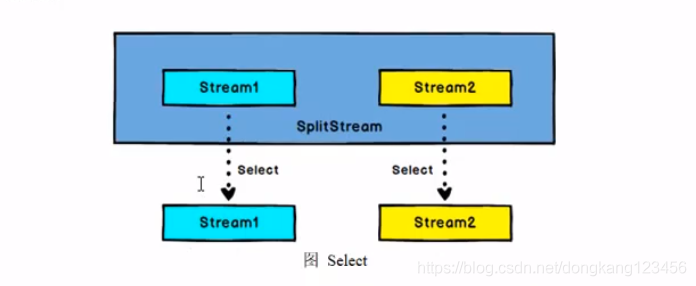

Split和Select

split将一个Stream 拆分成 一个SplitStream,他的里面包含多个stream

select是从SplitStream中获取stream

package com.wl;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Collections;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/31 上午11:19

* @qq 2315290571

* @Description Connect 和 map

*/

public class ConnectStreamDemo {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 转换成实体类

DataStream<Dog> mapStream = env.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/Cat.txt").map(value -> {

String[] str = value.split(",");

return new Dog(Integer.parseInt(str[0]), str[1], str[2]);

});

// 分流 按年龄分为两条流

SplitStream<Dog> splitStream = mapStream.split(value -> {

return (value.getAge() > 6) ? Collections.singletonList("old") : Collections.singletonList("young");

});

// 获取流

DataStream<Dog> oldStream = splitStream.select("old");

DataStream<Dog> youngStream = splitStream.select("young");

DataStream<Dog> allStream = splitStream.select("young", "old");

// 打印

oldStream.print("old");

youngStream.print("young");

// allStream.print("all");

// 执行

env.execute("split and select");

}

}

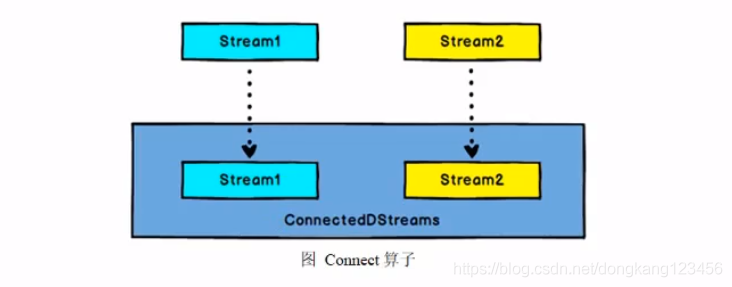

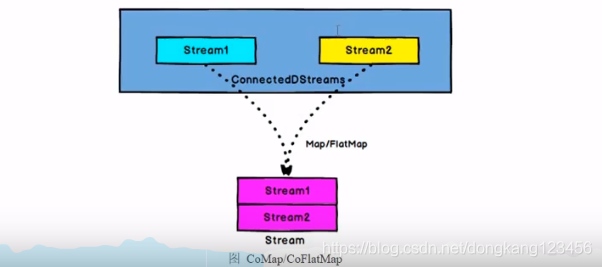

Connect和CoMap

Connect 连接两个保持他们类型的数据流,两个数据流被Connect 之后,只是被放在了一个流中,内部依然保持各自的数据和形式不发生任何变化,两个流相互独立

package com.wl;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.ConnectedStreams;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

import scala.Tuple2;

import scala.Tuple3;

import java.util.Collections;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/3/31 上午11:19

* @qq 2315290571

* @Description Connect 和 map

*/

public class ConnectStreamDemo {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 转换成实体类

DataStream<Dog> mapStream = env.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/Cat.txt").map(value -> {

String[] str = value.split(",");

return new Dog(Integer.parseInt(str[0]), str[1], str[2]);

});

// 分流 按年龄分为两条流

SplitStream<Dog> splitStream = mapStream.split(value -> {

return (value.getAge() > 6) ? Collections.singletonList("old") : Collections.singletonList("young");

});

// 获取流

DataStream<Dog> oldStream = splitStream.select("old");

DataStream<Dog> youngStream = splitStream.select("young");

DataStream<Dog> allStream = splitStream.select("young", "old");

// 将 oldStream 转换成二元组类型

DataStream<Tuple2<String,Integer>> tuple2DataStream= oldStream.map(new MapFunction<Dog, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(Dog value) throws Exception {

return new Tuple2<>(value.getName(),value.getAge());

}

});

// 使用Connect 保持他们类型的数据流

ConnectedStreams<Tuple2<String, Integer>, Dog> connectedStreams = tuple2DataStream.connect(youngStream);

// 使用CoMap进行分别操作

DataStream<Object> resultStream = connectedStreams.map(new CoMapFunction<Tuple2<String, Integer>, Dog, Object>() {

@Override

public Object map1(Tuple2<String, Integer> value) throws Exception {

return new Tuple3<>(value._1, value._2, "old");

}

@Override

public Object map2(Dog dog) throws Exception {

return new Tuple2(dog.getAge(), "normal");

}

});

// 打印

resultStream.print();

// 执行

env.execute("Connect CosMap");

}

}

Union

合并两个及两个以上的流,产生一个新的流

对比Connect

- Connect的数据类型可以不同,Connect只能合并两个流

- Union可以合并多条流,Union的数据结构必须是一样的

代码演示 省略

7.WindowApi

要统计在一段时间内的数据,或者计数达到某个值的时候需要用到窗口。窗口就是在将无限流转换为有限块进行处理

窗口类型:

-

时间窗口(Time Window)

- 滚动时间窗口(Tumbling window)

- 滑动时间窗口 (Sliding window)

- 会话窗口(Session window)

-

计数窗口(Count Window)

- 滚动计数窗口

- 滑动计数窗口

开窗代码的实现

package com.wl.window;

import com.wl.Student;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.EventTimeSessionWindows;

import org.apache.flink.streaming.api.windowing.assigners.SlidingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.assigners.WindowAssigner;

import org.apache.flink.streaming.api.windowing.time.Time;

import java.util.Arrays;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/5/16 下午2:52

* @qq 2315290571

* @Description 创建窗口

*/

public class CreateWindow {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStream<Student> dataStream = env.fromCollection(

Arrays.asList(

new Student("1001", "小明", 12),

new Student("1002", "小张", 13),

new Student("1003", "小李", 14),

new Student("1004", "小小", 15)

)

);

// 必须要在keyBy=以后才能开窗口 或者 创建全局窗口 windowAll(不推荐,因为会将并行度都为1)

/**

* 方法一:

* 开窗 window方法里面需要 传一个 {@link WindowAssigner} 类型的值 , WindowAssigner有一些实现类对应不同的窗口

* 例如 如果要创建 {@link TumblingProcessingTimeWindows} 滑动和滚动时间窗口 则需要调用它本身的 of方法,因为构造方法被 protected保护了 不能直接new

*方法二:

* 直接调用 timeWindow方法 和

*/

dataStream.keyBy("stuNo")

// 滚动时间窗口 和简写方式

// .window(TumblingProcessingTimeWindows.of(Time.seconds(15)))

// .timeWindow(Time.seconds(15))

// 滑动时间窗口 和简写方式

// .window(SlidingProcessingTimeWindows.of(Time.seconds(15),Time.seconds(20)))

// .timeWindow(Time.seconds(15),Time.seconds(20))

// 会话时间窗口 (无简写形式)

// .window(EventTimeSessionWindows.withGap(Time.minutes(1)))

// 滚动计数窗口

// .countWindow(5)

// 滑动计数窗口

.countWindow(5, 10);

dataStream.print();

env.execute("collection job");

}

}

窗口函数

开窗以后做的聚合运算 称之为窗口函数,分为两类:

- 增量聚合函数(来一条数据处理一条 ReduceFunction AggregateFunction)

- 全窗口聚合函数(批处理数据 ProcessWindowFunction WindowFunction)

增量聚合函数案例代码实现

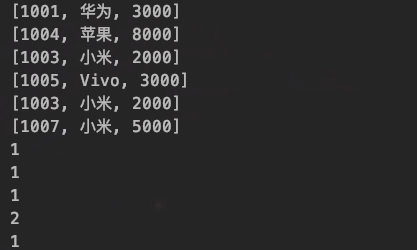

根据对应的id进行累加计算

package com.wl.window;

import com.wl.Order;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Arrays;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/5/16 下午4:06

* @qq 2315290571

* @Description 增量聚合函数

*/

public class AccumulatorFunction {

public static void main(String[] args) throws Exception {

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.enableCheckpointing(2000L);

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "192.168.160.2:9092");//kafka

properties.setProperty("group.id", "flink-test"); //group.id

DataStream<Order> dataStream = env.addSource(new FlinkKafkaConsumer<String>("flink-test", new SimpleStringSchema(), properties))

.map(value -> {

String[] values = value.split(",");

System.out.println(Arrays.toString(values));

return new Order(Integer.parseInt(values[0]), values[1], Double.parseDouble(values[2]));

});

// 开窗处理

DataStream<Integer> countStream = dataStream.keyBy("orderId")

.timeWindow(Time.seconds(15))

// 增量聚合函数

.aggregate(new MyAggregateFunction());

countStream.print();

env.execute();

}

}

class MyAggregateFunction implements AggregateFunction<Order, Integer, Integer> {

@Override

public Integer createAccumulator() {

// 返回值是累加器初始值

return 0;

}

@Override

public Integer add(Order order, Integer accumulator) {

// 具体怎么累加 这里是来一个累加一次计算

return accumulator + 1;

}

@Override

public Integer getResult(Integer accumulator) {

// 返回结果 累加器的值

return accumulator;

}

@Override

public Integer merge(Integer integer, Integer acc1) {

return null;

}

}

实体类

package com.wl;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/1 上午11:43

* @qq 2315290571

* @Description 学生实体类

*/

@Data

@AllArgsConstructor

@NoArgsConstructor

public class Order {

private Integer orderId;

private String orderName;

private Double orderPrice;

}

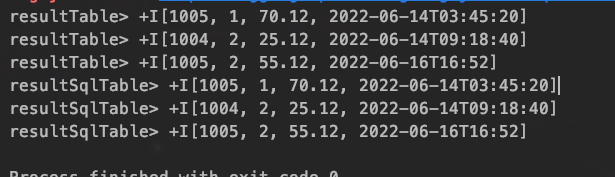

运行结果如下

全窗口聚合函数

根据不同的id输出对应的时间窗口

package com.wl.window;

import com.wl.Order;

import org.apache.commons.collections.IteratorUtils;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.util.Collector;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Arrays;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/5/17 上午11:08

* @qq 2315290571

* @Description 全窗口聚合函数

*/

public class WindowFunctionTest {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 配置kafka环境

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.2:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink-test");

// 读取kafka内容

DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink-test", new SimpleStringSchema(), pro))

.map(value->{

String[] values = value.split(",");

System.out.println(Arrays.toString(values));

return new Order(Integer.parseInt(values[0].trim()), values[1].trim(), Double.parseDouble(values[2].trim()));

// return new Order();

})

;

// 全窗口聚合函数 开窗操作 累加计数

DataStream<Tuple3<Integer, Long, Integer>> windowStream = sourceStream.keyBy("orderId")

// 窗口滚动15秒

.timeWindow(Time.seconds(15))

// 开窗函数

.apply(new WindowFunction<Order, Tuple3<Integer, Long, Integer>, Tuple, TimeWindow>() {

@Override

public void apply(Tuple tuple, TimeWindow window, Iterable<Order> input, Collector<Tuple3<Integer, Long, Integer>> out) throws Exception {

// 获取订单id

Integer orderId = tuple.getField(0);

// 迭代累加

Integer count = IteratorUtils.toList(input.iterator()).size();

// 获取窗口的时间毫秒值

long endTime = window.getEnd();

out.collect(new Tuple3(orderId,endTime,count));

}

});

windowStream.print();

env.execute();

}

}

class MyWindowFunction implements WindowFunction<Order, Tuple3<String, Long, Integer>, Tuple, TimeWindow> {

@Override

public void apply(Tuple tuple, TimeWindow window, Iterable<Order> input, Collector<Tuple3<String, Long, Integer>> out) {

// 获取订单id

String orderId = tuple.getField(0);

// 迭代累加

Integer count = IteratorUtils.toList(input.iterator()).size();

// 获取窗口的时间毫秒值

long endTime = window.getEnd();

out.collect(new Tuple3(orderId,endTime,count));

}

}

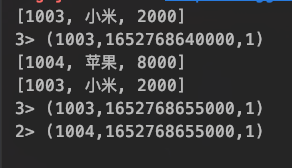

运行结果

因为设置的滚动窗口是15秒滚动,所以根据输入的内容时间来进行批处理

滑动计数增量窗口

求平均值

package com.wl.window;

import com.wl.Order;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Arrays;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/5/17 下午2:45

* @qq 2315290571

* @Description 计数滑动窗口

*/

public class SlidingCountWindow {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 配置kafka环境

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.2:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink-test");

// 读取kafka内容

DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink-test", new SimpleStringSchema(), pro))

.map(value->{

String[] values = value.split(",");

System.out.println(Arrays.toString(values));

return new Order(Integer.parseInt(values[0].trim()), values[1].trim(), Double.parseDouble(values[2].trim()));

});

// 计数滑动窗口 使用增量函数窗口计算平均值

DataStream<Double> avgStream = sourceStream.keyBy("orderId")

.countWindow(10, 2)

.aggregate(new AggregateCountWindow());

avgStream.print();

env.execute();

}

}

class AggregateCountWindow implements AggregateFunction<Order, Tuple2<Double,Integer>,Double>{

@Override

public Tuple2<Double, Integer> createAccumulator() {

return new Tuple2(0.0,0);

}

@Override

public Tuple2<Double, Integer> add(Order order, Tuple2<Double, Integer> tuple2) {

return new Tuple2(order.getOrderPrice()+tuple2.f0,tuple2.f1+1);

}

@Override

public Double getResult(Tuple2<Double, Integer> tuple2) {

return tuple2.f0/tuple2.f1;

}

@Override

public Tuple2<Double, Integer> merge(Tuple2<Double, Integer> t1, Tuple2<Double, Integer> t2 ) {

return new Tuple2(t1.f0+ t2.f0,t2.f1+t2.f0);

}

}

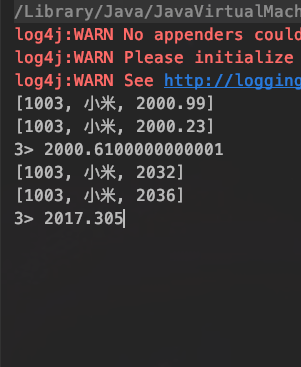

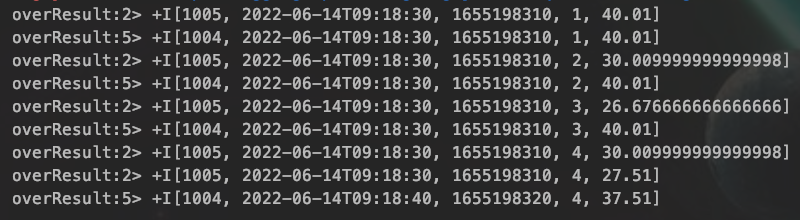

运行结果

运行结果中发现 ,当输入两个数的时候,就已经开始计算平均值了,但是代码中要求的是10个数开始计算平均值,跟我们预期的不一样。

那是因为我们设置的滑动窗口是达到十个数,就往后滑两步,能往后面滑动,那也可以往前面滑动,所以从后面计算十个数,就往前滑两步进行计算。当达到10时,就会开启新的窗口

其他函数

trigger:触发器,定义window关闭的时间,触发并计算输出结果

evictor:移除器,定义移除某些数据的逻辑

allowedlateness:允许迟到的数据

sideOutputLateData:将迟到的数据放入侧输出流

getSideOutput:获取侧输出流

测试代码:

package com.wl.window;

import com.wl.Order;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Arrays;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/5/17 下午3:45

* @qq 2315290571

* @Description 其他APi

*/

public class OtherFunction {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 配置kafka环境

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.2:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink-test");

// 读取kafka内容

DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink-test", new SimpleStringSchema(), pro))

.map(value->{

String[] values = value.split(",");

System.out.println(Arrays.toString(values));

return new Order(Integer.parseInt(values[0].trim()), values[1].trim(), Double.parseDouble(values[2].trim()));

});

// 开窗操作

SingleOutputStreamOperator<Order> priceSumStream = sourceStream.keyBy("orderId")

.timeWindow(Time.seconds(20))

// 允许等待迟到的数据的时长

.allowedLateness(Time.seconds(10))

// 聚合操作

.sum("orderPrice");

// priceSumStream.getSideOutput(outputTag).print("late");

priceSumStream.print("normal");

env.execute();

}

}

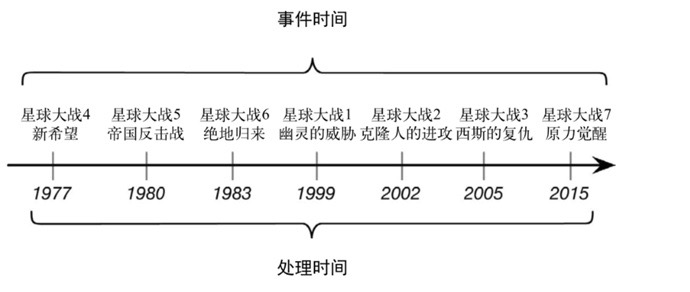

8. 时间语义和WaterMark

时间语义

在windowApi中提到了迟到的数据,数据是相对于什么时间而言算是迟到的呢,flink里面有三种时间语义:

-

EventTime:时间创建的时间

-

Ingestion Time:数据进入Flink的时间

-

Processing Time:执行操作算子的本地系统时间,与机器相关

一般情况下我们更关心EventTime,举如图所示的例子

如果我们想去看星球大战,应该是从第一部开始看,更关心的是事件故事发展的顺序,而不是什么时间上映的。

在代码中使用EventTime

系统默认使用的是ProcessingTime,所以需要手动修改时间语义。

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 从调用时刻开始给env创建的每一个stream追加时间特征

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

WaterMark

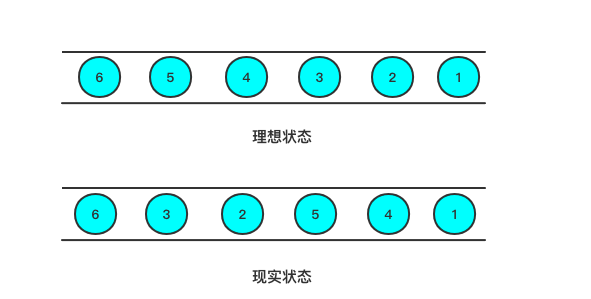

流处理从事件产生,到流经source,再到operator,中间是有一个过程和时间的,虽然大部分情况下,流到operator的数据都是按照事件产生的时间顺序来的,但是可能网络、分布式等原因导致Flink接收到的事件的先后顺序不是严格按照事件的Event Time顺序排列的。

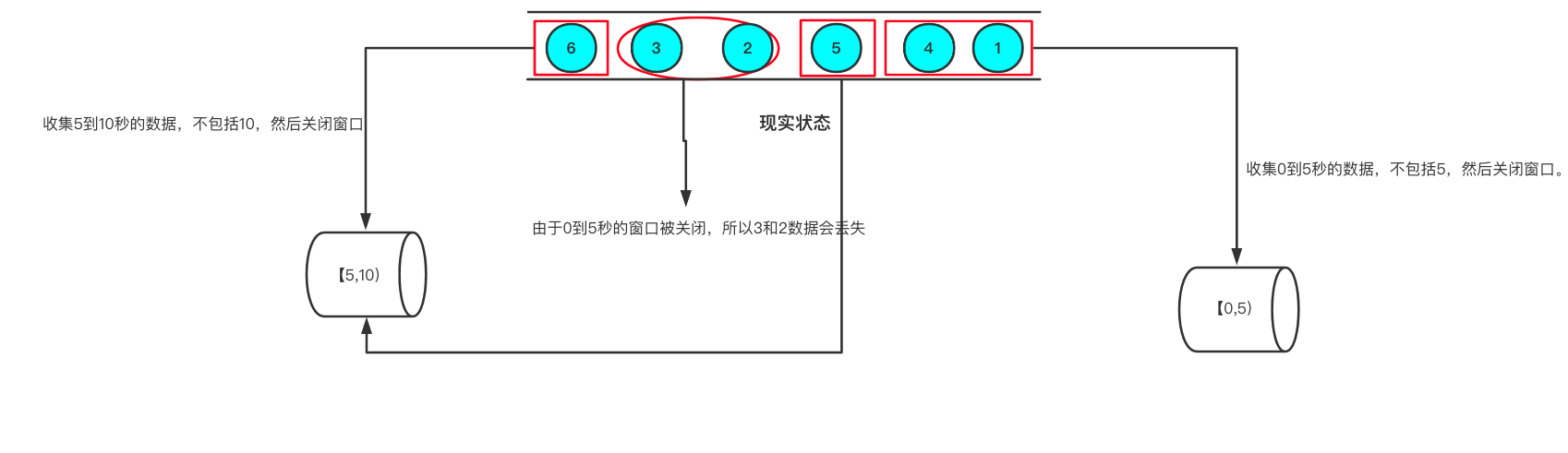

当事件的先后顺序不是严格按照事件的Event Time顺序排列的时候,则会出现以下的情况,flink在收到第三个数据时间戳为5s的时候,会认为已经到5s的时间戳了,于是关闭0到5的窗口开启5到10的窗口,导致 2和3数据丢失。

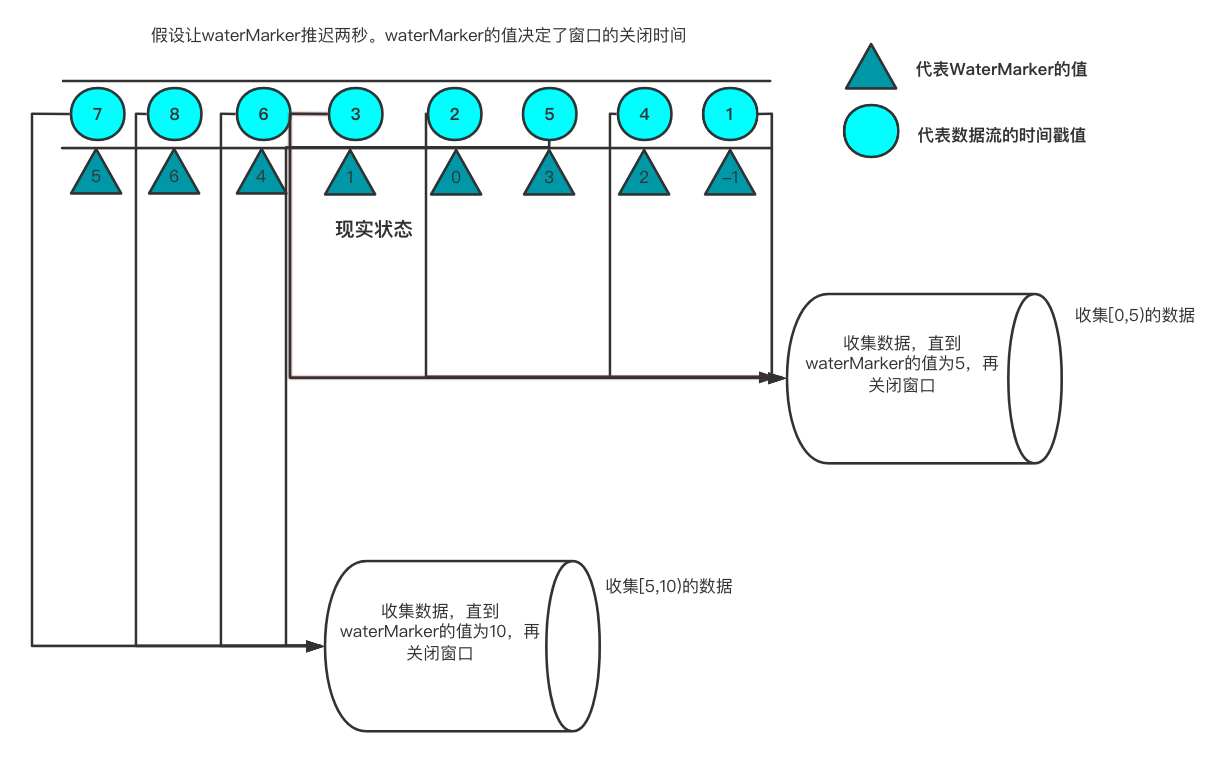

然后flink就提出了水位线(waterMark)的理念,水位线的值来决定窗口什么时间关闭。一般设置水位线的值是最大的迟到时间差值。通俗来说,水位线的概念就好像一个大巴车在8:00等学生上车,但是有些学生迟到了,需要8:02才能来,于是大巴车将钟表时间调成7:58,等到8点如果再不来就发车了。

9.状态后端

在实际开发中,数据一般都是以流的形式出现,当我们想对一个流式数据做一些标记性处理,例如累加计数,需要用到状态。Flink为我们提供了两种状态管理:

-

算子状态(Operator State) 作用范围是算子任务之内

-

键控状态(Keyed State) 按照key来操作,作为范围是key分组以后

算子状态

flink提供了三种数据结构来存储算子状态:

-

列表状态(List State) 状态用List集合来存储

-

联合列表状态(Union List State) 将数据用List集合存储

-

广播状态(Broadcast State) 算子有多项任务,状态相同,用广播

不常用不举例。。。

键控状态(Keyed State)

flink提供了四种数据结构来存储键控状态:

-

值状态(Value State) 存储单个状态

-

列表状态 (List State) 用list集合来存储状态

-

映射状态(Map State) 用key-value来存储状态

-

聚合状态(Reducing State&Aggergating State) 将状态表示为聚合操作的列表

Value State

package com.wl.state;

import com.wl.Order;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/1 上午11:32

* @qq 2315290571

* @Description 键控(Keyed State)状态管理

*/

public class StateManage {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.160.3:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG,"test-topic");

DataStream<Order> orderStream = env.addSource(new FlinkKafkaConsumer<String>("test-topic", new SimpleStringSchema(), pro))

.map(value -> {

String[] order = value.split(",");

return new Order(Integer.parseInt(order[0]),order[1],Double.parseDouble(order[2]));

})

;

// orderStream.print();

// 键控处理

DataStream<Integer> keyStream = orderStream.keyBy("orderId")

.map(new OrderCountMapFunction());

keyStream.print("keyState");

// 运行

env.execute("stateTest");

}

}

class OrderCountMapFunction extends RichMapFunction<Order, Integer> {

// 定义键的数据类型

private ValueState<Integer> countValue;

// 定义赋值初始值

@Override

public void open(Configuration parameters) throws Exception {

// 因为要获取上下文 所以不能直接在外面直接定义

countValue=getRuntimeContext().getState(new ValueStateDescriptor<Integer>("count",Integer.class,0));

}

@Override

public Integer map(Order value) throws Exception {

Integer count = countValue.value();

count++;

countValue.update(count);

return count;

}

}

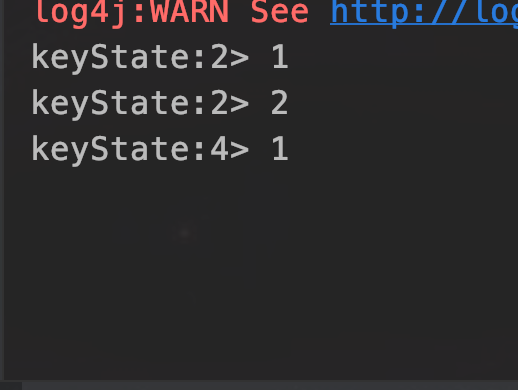

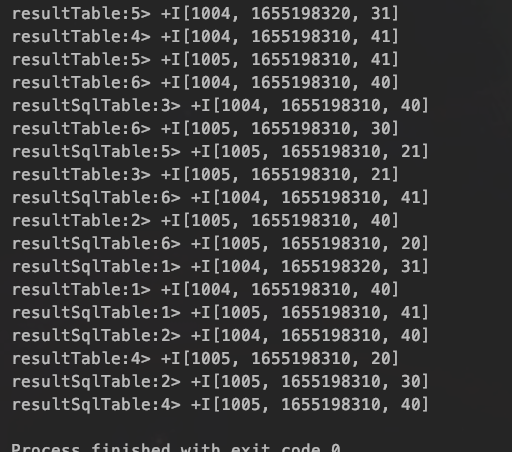

运行结果

List State

package com.wl.state;

import com.wl.Order;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.ArrayList;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/1 上午11:32

* @qq 2315290571

* @Description 键控(Keyed State)状态管理

*/

public class StateManage {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.3:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "test-topic");

DataStream<Order> orderStream = env.addSource(new FlinkKafkaConsumer<String>("test-topic", new SimpleStringSchema(), pro))

.map(value -> {

String[] order = value.split(",");

return new Order(Integer.parseInt(order[0]), order[1], Double.parseDouble(order[2]));

});

// orderStream.print();

// 键控处理

DataStream<Tuple2<Integer, Double>> keyStream = orderStream.keyBy("orderId")

.map(new OrderCountMapFunction());

keyStream.print("keyState");

// 运行

env.execute("stateTest");

}

}

class OrderCountMapFunction extends RichMapFunction<Order, Tuple2<Integer, Double>> {

// 定义键的数据类型

private ListState<Object> countValue;

// 定义赋值初始值

@Override

public void open(Configuration parameters) throws Exception {

// 因为要获取上下文 所以不能直接在外面直接定义

countValue = getRuntimeContext().getListState(new ListStateDescriptor<Object>("count", Object.class));

}

@Override

public Tuple2<Integer, Double> map(Order value) throws Exception {

Integer id=0;

Double price=0.00;

for (Object o : countValue.get()) {

if (o instanceof Integer){

id=(int)o;

}else if (o instanceof Double){

price=(double)o;

}

}

ArrayList<Object> list = new ArrayList<>();

list.add(id+value.getOrderId() + 1);

list.add(price+value.getOrderPrice() + 5);

countValue.update(list);

return new Tuple2<>(id+value.getOrderId() + 1,price+value.getOrderPrice() + 5);

}

}

MapState

package com.wl.state;

import com.wl.Order;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.MapState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/1 上午11:32

* @qq 2315290571

* @Description 键控(Keyed State)状态管理

*/

public class StateManage {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.160.3:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "test-topic");

DataStream<Order> orderStream = env.addSource(new FlinkKafkaConsumer<String>("test-topic", new SimpleStringSchema(), pro))

.map(value -> {

String[] order = value.split(",");

return new Order(Integer.parseInt(order[0]), order[1], Double.parseDouble(order[2]));

});

// orderStream.print();

// 键控处理

DataStream<Tuple2<String, Double>> keyStream = orderStream.keyBy("orderId")

.map(new OrderCountMapFunction());

keyStream.print("keyState");

// 运行

env.execute("stateTest");

}

}

class OrderCountMapFunction extends RichMapFunction<Order, Tuple2<String, Double>> {

// 定义键的数据类型

private MapState<String,Double> countValue;

// 定义赋值初始值

@Override

public void open(Configuration parameters) throws Exception {

// 因为要获取上下文 所以不能直接在外面直接定义

countValue = getRuntimeContext().getMapState(new MapStateDescriptor<String, Double>("count", String.class,Double.class));

}

@Override

public Tuple2<String, Double> map(Order value) throws Exception {

if (countValue.get(value.getOrderId().toString())==null){

countValue.put(value.getOrderId().toString(),value.getOrderPrice());

}else {

Double price=countValue.get(value.getOrderId().toString())+value.getOrderPrice();

countValue.put(value.getOrderId().toString(),price);

}

return new Tuple2<>(value.getOrderId().toString(),countValue.get(value.getOrderId().toString()));

}

}

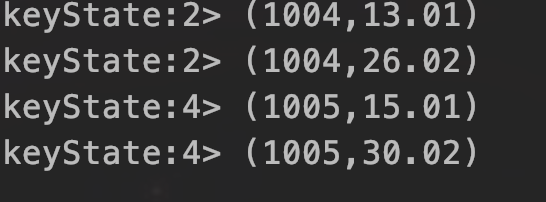

输入的值以及运行结果

1004,梨子,13.01

1004,梨子,13.01

1005,香蕉,15.01

1005,香蕉,15.01

前面几种状态数据结构不能访问事件的时间戳信息和watermark信息,在一些场景下需要访问 处理的时间戳信息时,就要使用到Flink提供的底层API ProcessFunction。

下面举例一个温度持续上升报警的案例

package com.wl.state;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.time.Time;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.util.Collector;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/8 上午10:31

* @qq 2315290571

* @Description 温度监控

*/

public class temperatureMonitor {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "wl:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink");

DataStream<Temperature> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), pro))

.map(value -> {

String[] tempStr = value.split(",");

return new Temperature(Integer.parseInt(tempStr[0]), Double.parseDouble(tempStr[1]));

});

sourceStream.print();

// 执行KeyedByProcession

sourceStream.keyBy(Temperature::getTemp_id)

.process(new TempWarning(Time.seconds(10).toMilliseconds())).print();

// 执行

env.execute("temperature monitor");

}

}

class TempWarning extends KeyedProcessFunction<Integer, Temperature, String> {

// 定义时间间隔

private Long interval;

// 定义上一个温度值

private ValueState<Double> lastTemperature;

// 最后一次定时器的触发时间

private ValueState<Long> recentTimerTimeStamp;

public TempWarning(Long interval) {

this.interval = interval;

}

// 初始化

@Override

public void open(Configuration parameters) throws Exception {

// 定义生命周期

lastTemperature = getRuntimeContext().getState(new ValueStateDescriptor<Double>("lastTemp", Double.class));

recentTimerTimeStamp = getRuntimeContext().getState(new ValueStateDescriptor<Long>("recentTemp", Long.class));

}

@Override

public void processElement(Temperature temperature, Context context, Collector<String> collector) throws Exception {

// 当前温度值

Double curTemp = temperature.getTemp_num();

// 上一次温度

Double lastTemp = lastTemperature.value() != null ? lastTemperature.value() : curTemp;

// 计时器状态的时间戳

Long timerStamp = recentTimerTimeStamp.value();

if (curTemp > lastTemp && timerStamp == null) {

long warningTimeStamp = context.timerService().currentProcessingTime() + interval;

// 触发定时器

context.timerService().registerProcessingTimeTimer(warningTimeStamp);

recentTimerTimeStamp.update(warningTimeStamp);

} else if (curTemp < lastTemp && timerStamp != null) {

context.timerService().deleteProcessingTimeTimer(timerStamp);

recentTimerTimeStamp.clear();

}

// 更新保存的温度值

lastTemperature.update(curTemp);

}

// 关闭

@Override

public void close() throws Exception {

lastTemperature.clear();

}

// 定时器

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

System.out.println("定时器触发 " + timestamp);

// 触发报警并且清除 定时器状态的值

out.collect("传感器id" + ctx.getCurrentKey() + "温度持续" + interval + "ms" + "上升");

recentTimerTimeStamp.clear();

}

}

@Data

@AllArgsConstructor

@NoArgsConstructor

class Temperature {

private Integer temp_id;

private Double temp_num;

}

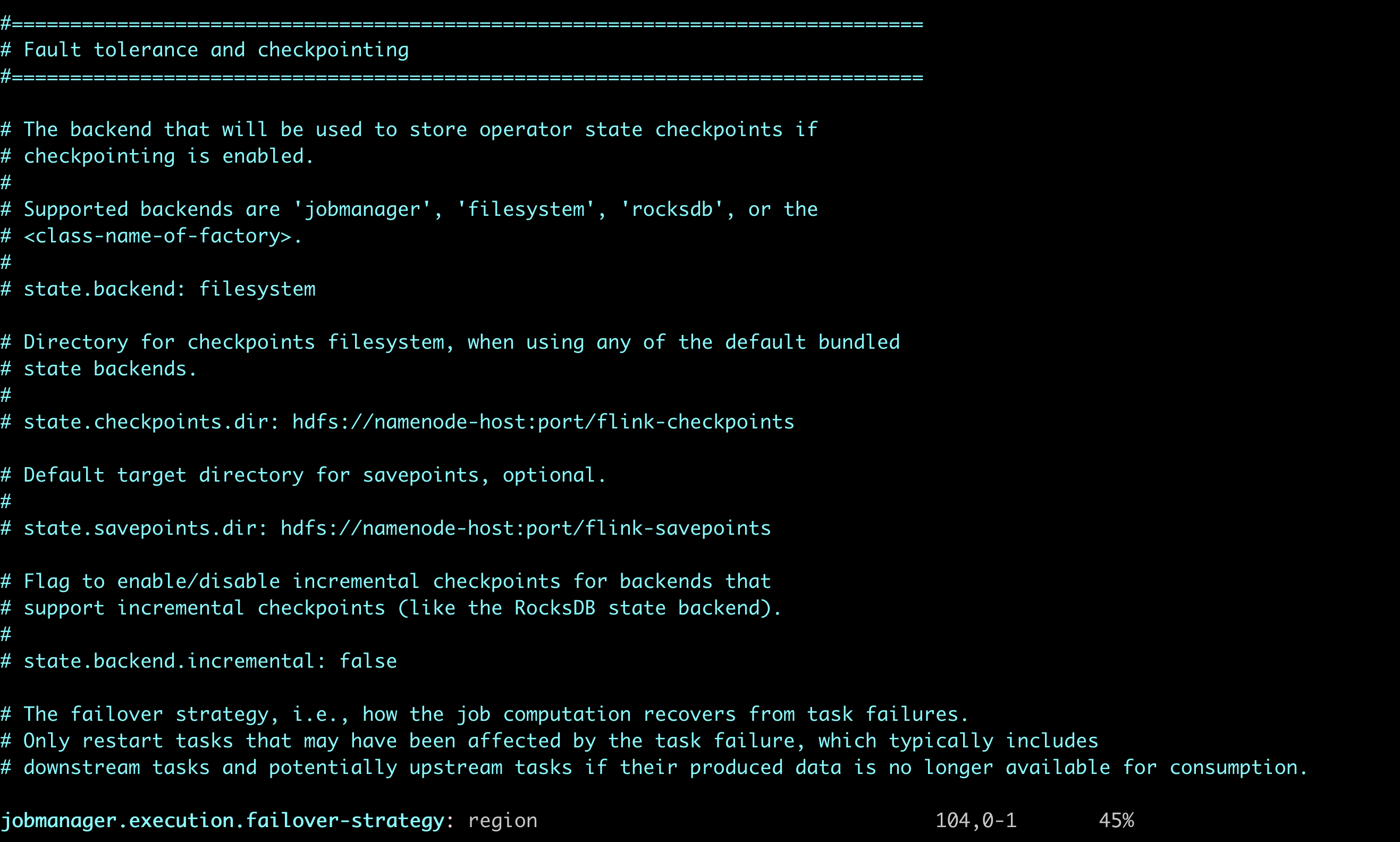

状态后端存储

为了确保flink 的检查点状态持久化存储,flink提供了三种状态后端:

-

MemoryStateBackend 将检查点状态存储在JVM的堆内存上(速度快,低延迟,但是不稳定,可能会丢失数据)

-

FsStateBackend 将检查点状态存储到文件系统(有本地文件系统和远程文件系统,容错率高)

-

RocksDBStateBackend 将所有状态序列化后,存入RocksDB中存储

状态后端可以有两种修改方式

配置文件修改-全局修改

代码修改-可以每个任务修改

// filesystem

env.setStateBackend(newFsStateBackend("hdfs://192.168.160.3:8020/data/stateBackend"));

10.容错机制

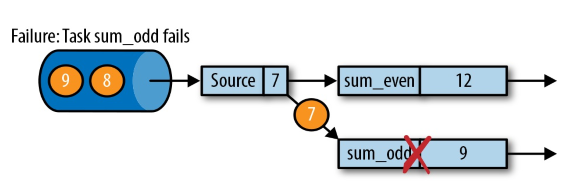

一致性检查点

flink故障恢复的核心就是应用状态的一致性检查点,在某个时间点,所有任务都进行一份自身快照。

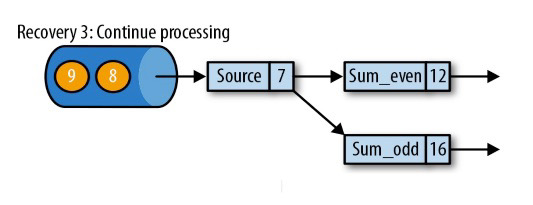

如图,假设从kafka中读取到源数据5,此时数据进入了奇数求和(1+3+5),那么做快照的时候,偶数流也要快照,因为同一个相同的数据 ,只是没有被处理。

从检查点恢复状态

如图在处理到奇数流的时候宕机了,数据传输发生中断。

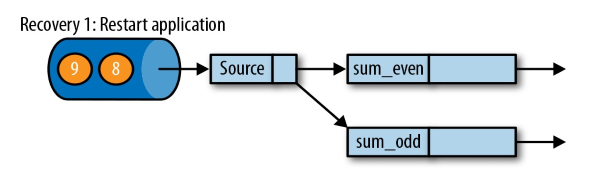

遇到故障之后,第一步就是重启应用

(重启后,起初流都是空的)

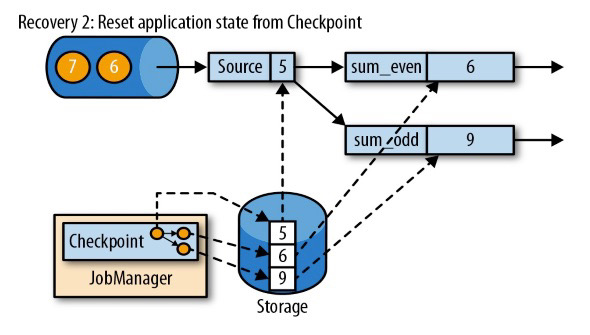

第二步是从 checkpoint 中读取状态,将状态重置

(读取在远程仓库(Storage,这里的仓库指状态后端保存数据指定的三种方式之一)保存的状态)

从检查点重新启动应用程序后,其内部状态与检查点完成时的状态完全相同

第三步:开始消费并处理检查点到发生故障之间的所有数据

这种检查点的保存和恢复机制可以为应用程序状态提供“精确一次”(exactly-once)的一致性,因为所有算子都会保存检查点并恢复其所有状态,这样一来所有的输入流就都会被重置到检查点完成时的位置

检查点和重启策略配置

重启策略配置 可以在配置文件也可以在代码中配置,为了方便一般都在代码中配置

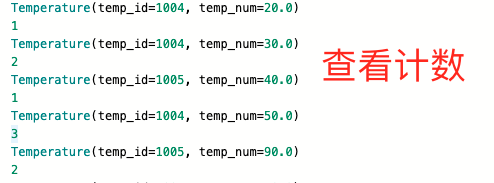

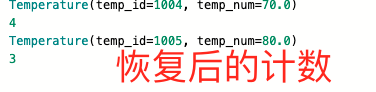

用一个计数的实例来说明程序中断,状态如何恢复:

已经配置了检查点策略的代码

package com.wl.state;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.time.Time;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/8 下午3:23

* @qq 2315290571

* @Description 配置检查点

*/

public class CheckPointConfig {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//设置并行度

env.setParallelism(1);

// 开启检查点

env.enableCheckpointing(1000);

// 设置语义 精确一次

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

// CheckPoint的处理超时时间

env.getCheckpointConfig().setCheckpointTimeout(60000);

// 最大允许同时处理几个CheckPoint

env.getCheckpointConfig().setMaxConcurrentCheckpoints(2);

// 重启策略配置

// 固定延迟重启(最多尝试3次,每次间隔10s)

env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 10000L));

// 失败率重启(在10分钟内最多尝试3次,每次至少间com.wl.state.CheckPointConfig隔1分钟)

env.setRestartStrategy(RestartStrategies.failureRateRestart(3, Time.minutes(10), Time.minutes(1)));

// 检查点存储

env.getCheckpointConfig().setCheckpointStorage("hdfs://192.168.160.2:8020/data/flink");

// 检查点默认不保留 当程序被取消时会被删除,使用以下配置可以在程序被取消或任务失败时,检查点不会被自动清理

env.getCheckpointConfig().setExternalizedCheckpointCleanup(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

// 读取消息队列

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "wlwl:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink");

DataStream<Temperature> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), pro))

.map(value -> {

String[] strValue = value.split(",");

return new Temperature(Integer.parseInt(strValue[0]), Double.parseDouble(strValue[1]));

});

sourceStream.print();

// 记录状态

DataStream<Integer> KeyCount = sourceStream.keyBy(Temperature::getTemp_id)

.map(new KeyCountMapper());

KeyCount.print();

// 执行

env.execute("execute CheckPoint");

}

private static class KeyCountMapper extends RichMapFunction<Temperature, Integer> {

private ValueState<Integer> countState;

@Override

public void open(Configuration parameters) throws Exception {

countState = getRuntimeContext().getState(new ValueStateDescriptor<Integer>("keyCount", Integer.class, 0));

}

@Override

public Integer map(Temperature temperature) throws Exception {

countState.update(countState.value() == null ? 0 : countState.value() + 1);

return countState.value();

}

}

}

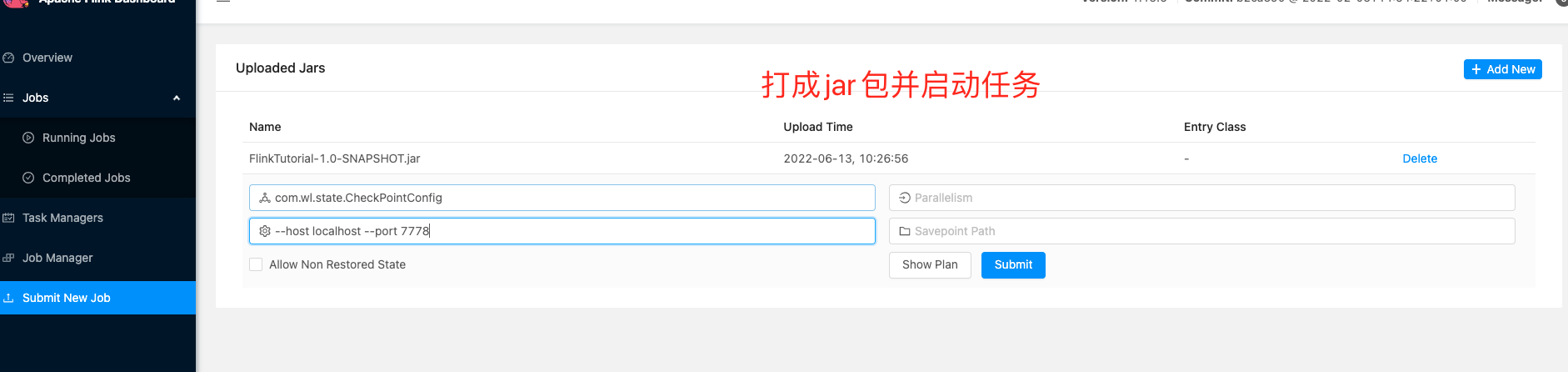

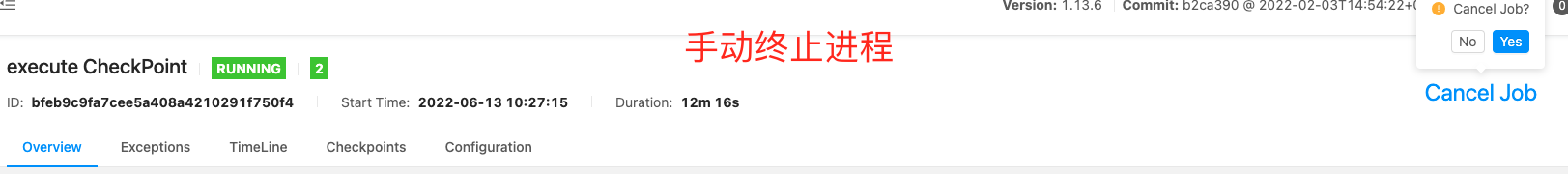

然后服务器上打开flink并启动集群,将任务打成jar包运行,在运行过程中中断进程。

或者命令行手动停止

#找到任务的id

../bin/flink list

#找到对应的 任务id取消

bin/flink cancel 4e0c7a93c3b4bec8ddc9d17451c6016f

然后命令行恢复

# -s 检查点保存路径 -c 指定启动类 指定jar包 --host 指定ip --port 指定端口

bin/flink run -s hdfs://192.168.160.2:8020/data/flink/bfeb9c9fa7cee5a408a4210291f750f4/chk-1408/_metadata -c com.wl.state.CheckPointConfig /tmp/flink-web-c06e4543-b3f8-4746-966c-36e2a303d6f4/flink-web-upload/74c2d0ad-c66c-4ac0-ae77-7c3ec568545f_FlinkTutorial-1.0-SNAPSHOT.jar --host localhost --port 7778

11.TableAPI的使用

加入依赖

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.12</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_2.12</artifactId>

<version>1.10.1</version>

</dependency>

dog.txt

12,dogA,sleep

23,dogB,watchDoor

14,dogC,eat

13,dogD,run

12,dogM,lie

13,dogW,shout

TableApi的简单使用

package com.wl;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/21 下午6:59

* @qq 2315290571

* @Description tableApi的使用

*/

public class TableDemoTest {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 转换成实体类

DataStream<Dog> mapStream = env.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/dog.txt").map(value -> {

String[] str = value.split(",");

return new Dog(Integer.parseInt(str[0]), str[1], str[2]);

});

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 使用tableApi创建虚拟表表并查询

Table dogTable = tableEnv.fromDataStream(mapStream);

Table tableApiResult = dogTable.select("name");

tableEnv.createTemporaryView("dog", dogTable);

// Using SQL DDL

Table ddlResult = tableEnv.sqlQuery("select name,action from dog");

tableEnv.toAppendStream(tableApiResult, Row.class).print("tableApiResult");

tableEnv.toAppendStream(ddlResult, Row.class).print("ddlResult");

env.execute("testTableApi");

}

}

TableApi批处理和流处理的创建

package com.wl.table;

import com.wl.Dog;

import org.apache.flink.api.java.ExecutionEnvironment;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.java.BatchTableEnvironment;

import org.apache.flink.table.api.java.StreamTableEnvironment;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/22 下午3:09

* @qq 2315290571

* @Description 基础程序结构

*/

public class BasicTable {

public static void main(String[] args) {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 清洗数据转换为POJO类

DataStream<Dog> dogStream = env.readTextFile("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/dog.txt").map(

value -> {

String[] catValue = value.split(",");

return new Dog(Integer.parseInt(catValue[0]), catValue[1], catValue[2]);

}

);

// 创建表读取数据

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// planner流处理

EnvironmentSettings OldPlannerSettings = EnvironmentSettings.newInstance()

.useOldPlanner()

.inStreamingMode()

.build();

StreamTableEnvironment plannerStreamTableEnv = StreamTableEnvironment.create(env, OldPlannerSettings);

// planner批处理

ExecutionEnvironment plannerBatchEnv = ExecutionEnvironment.getExecutionEnvironment();

BatchTableEnvironment plannerBatchTableEnv = BatchTableEnvironment.create(plannerBatchEnv);

// Blink批处理

EnvironmentSettings blinkStreamSettings = EnvironmentSettings.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamTableEnvironment blinkStreamTableEnv = StreamTableEnvironment.create(env, blinkStreamSettings);

// Blink的批处理

EnvironmentSettings blinkBatchSettings = EnvironmentSettings.newInstance()

.useBlinkPlanner()

.inBatchMode()

.build();

TableEnvironment blinkBatchTableEnv = TableEnvironment.create(blinkBatchSettings);

}

}

创建TableEnvironment 读取数据

package com.wl.table;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/22 下午3:43

* @qq 2315290571

* @Description 从文件中读取数据创建表

*/

public class CommonApi {

public static void main(String[] args) throws Exception {

// 创建运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 获取表的运行环境

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 读取文件

String filePath = "/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/dog.txt";

tableEnv.connect(new FileSystem().path(filePath))// 从文件中获取数据

.withFormat(new Csv())// 格式化为csv文件

.withSchema(new Schema().field("age", DataTypes.INT()).field("name", DataTypes.STRING()).field("action", DataTypes.STRING())) // 定义表的结构

.createTemporaryTable("dogTable"); // 创建临时表

// 从文件中拿取数据

Table table = tableEnv.from("dogTable");

// 打印表的结构

table.printSchema();

// 1.Table 查询

// 1.1过滤

Table filterAgeTable = table.select("age,name,action").filter("age>12");

// 1.2分组统计

Table groupByAgeTable = table.groupBy("age").select("age,count(name)");

// 2.Sql查询sqlQueryTable

Table sqlQueryTable = tableEnv.sqlQuery("select count(action) as totalAge,avg(age) as avgAge from dogTable group by age");

tableEnv.toAppendStream(table, Row.class).print("data");

tableEnv.toAppendStream(filterAgeTable, Row.class).print("filterAgeTable");

tableEnv.toRetractStream(groupByAgeTable, Row.class).print("groupByAgeTable");

tableEnv.toRetractStream(sqlQueryTable, Row.class).print("sqlQueryTable");

env.execute();

}

}

运行结果

root

|-- age: INT

|-- name: STRING

|-- action: STRING

data> 12,dogA,sleep

filterAgeTable> 23,dogB,watchDoor

data> 23,dogB,watchDoor

filterAgeTable> 14,dogC,eat

data> 14,dogC,eat

filterAgeTable> 13,dogD,run

data> 13,dogD,run

filterAgeTable> 13,dogW,shout

groupByAgeTable> (true,12,1)

data> 12,dogM,lie

groupByAgeTable> (true,23,1)

data> 13,dogW,shout

groupByAgeTable> (true,14,1)

groupByAgeTable> (true,13,1)

groupByAgeTable> (false,12,1)

groupByAgeTable> (true,12,2)

groupByAgeTable> (false,13,1)

sqlQueryTable> (true,1,12)

groupByAgeTable> (true,13,2)

sqlQueryTable> (true,1,23)

sqlQueryTable> (true,1,14)

sqlQueryTable> (true,1,13)

sqlQueryTable> (false,1,12)

sqlQueryTable> (true,2,12)

sqlQueryTable> (false,1,13)

sqlQueryTable> (true,2,13)

false表示上一条保存的记录被删除,true则是新加入的数据所以Flink的Table API在更新数据时,实际是先删除原本的数据,再添加新数据

创建TableEnvironment 写数据

package com.wl.table;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/24 上午11:49

* @qq 2315290571

* @Description 写出数据到文件

*/

public class FileOutPut {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 获取TableApi的运行环境

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

//根据文件创建表

String filePath = "/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/dog.txt";

tableEnv.connect(new FileSystem().path(filePath))

.withFormat(new Csv())

.withSchema(new Schema().field("age", DataTypes.INT()).field("name", DataTypes.STRING()).field("action", DataTypes.STRING()))

.createTemporaryTable("dog");

// 从文件中获取数据

Table table = tableEnv.from("dog");

// 分组统计

Table filterAgeTable = table.select("age,name,action").filter("age>12");

String outPath = "/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/out.txt";

tableEnv.connect(new FileSystem().path(outPath))

.withFormat(new Csv())

.withSchema(new Schema().field("age", DataTypes.INT()).field("name", DataTypes.STRING()).field("action", DataTypes.STRING()))

.createTemporaryTable("outTable");

filterAgeTable.insertInto("outTable");

tableEnv.execute("");

}

}

运行结果

23,dogB,watchDoor

14,dogC,eat

13,dogD,run

13,dogW,shout

这种方式写入文件有局限性,只能是批处理,再次运行代码会报错。

读写Kafka

新版Flink和新版kafka连接器,version指定"universal"

package com.wl.table;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Kafka;

import org.apache.flink.table.descriptors.Schema;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/4/24 下午2:36

* @qq 2315290571

* @Description 读写Kafka

*/

public class KafkaReadAndWrite {

public static void main(String[] args) throws Exception {

//获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 设置并行度

env.setParallelism(1);

// 获取TableApi的运行环境

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 读取kafka

tableEnv.connect(new Kafka()

.topic("test-topic")

.version("universal")

.property("bootstrap.servers", "192.168.160.2:9092")

)

.withFormat(new Csv())

.withSchema(new Schema().field("id", DataTypes.INT()).field("name", DataTypes.STRING()).field("address", DataTypes.STRING()))

.createTemporaryTable("student");

// 从student表中获取数据

Table table = tableEnv.from("student");

Table resultTable = table.select("id,name,address");

// 将kafka数据写入txt文件

tableEnv.connect(new FileSystem().path("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/kafkaOut.txt"))

.withFormat(new Csv())

.withSchema(new Schema().field("id", DataTypes.INT()).field("name", DataTypes.STRING()).field("address", DataTypes.STRING()))

.createTemporaryTable("outTable");

resultTable.insertInto("outTable");

//将kafka数据写入kafka

tableEnv.connect(new Kafka()

.topic("flink-test")

.version("universal")

.property("bootstrap.servers", "192.168.160.2:9092")

)

.withFormat(new Csv())

.withSchema(new Schema().field("id", DataTypes.INT()).field("name", DataTypes.STRING()).field("address", DataTypes.STRING()))

.createTemporaryTable("toKafka");

resultTable.insertInto("toKafka");

tableEnv.execute("");

}

}

写入到mysql

package com.wl.table;

import com.wl.Order;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.Kafka;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/13 下午2:32

* @qq 2315290571

* @Description table Api 写入到mysql

*/

public class FlinkMysql {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 设置并行度

env.setParallelism(1);

// 设置table api的运行环境

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 读取kafka

// Properties pro = new Properties();

// pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "wlwl:9092");

// pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink");

//

// DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), pro))

// .map(value -> {

// String[] orderInfo = value.split(",");

// return new Order(Integer.parseInt(orderInfo[0]), orderInfo[1], Double.parseDouble(orderInfo[2]));

// });

tableEnv.connect(new Kafka()

.topic("flink")

.version("universal")

.property("bootstrap.servers", "192.168.160.2:9092")

)

.withFormat(new Csv())

.withSchema(new Schema().field("orderId", DataTypes.INT()).field("orderName", DataTypes.STRING()).field("orderPrice", DataTypes.DOUBLE()))

.createTemporaryTable("order_test");

// 将流的数据转换为动态表

Table selectResult = tableEnv.sqlQuery("select * from order_test ");

tableEnv.toRetractStream(selectResult, Row.class).print("sqlQueryTable");

// 执行DDL

String sinkDDL = "create table flink_test (" +

" order_id INT," +

" order_name STRING," +

" order_price DOUBLE" +

") WITH (" +

" 'connector' = 'jdbc', " +

" 'url' = 'jdbc:mysql://localhost:3306/flink', " +

" 'table-name' = 'order_test', " +

" 'username' = 'root', " +

" 'password' = 'wl990922' " +

")";

// 输入输出

tableEnv.executeSql(sinkDDL);

selectResult.executeInsert("flink_test");

// tableEnv.execute("test");

env.execute();

}

}

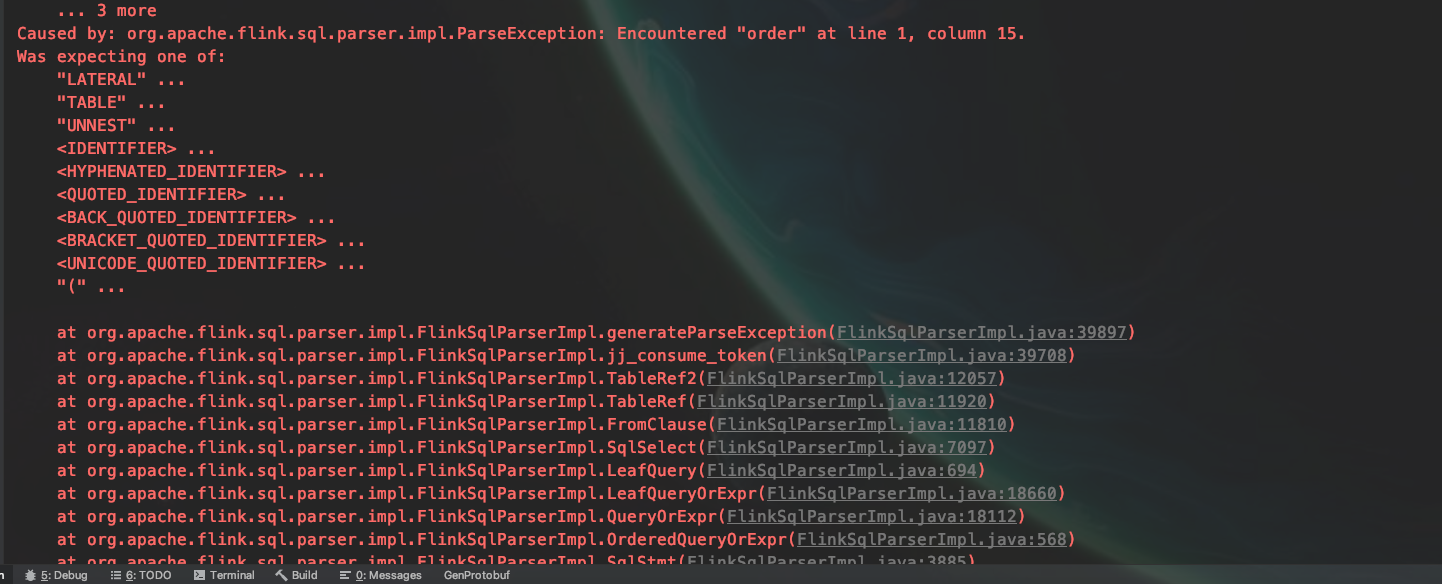

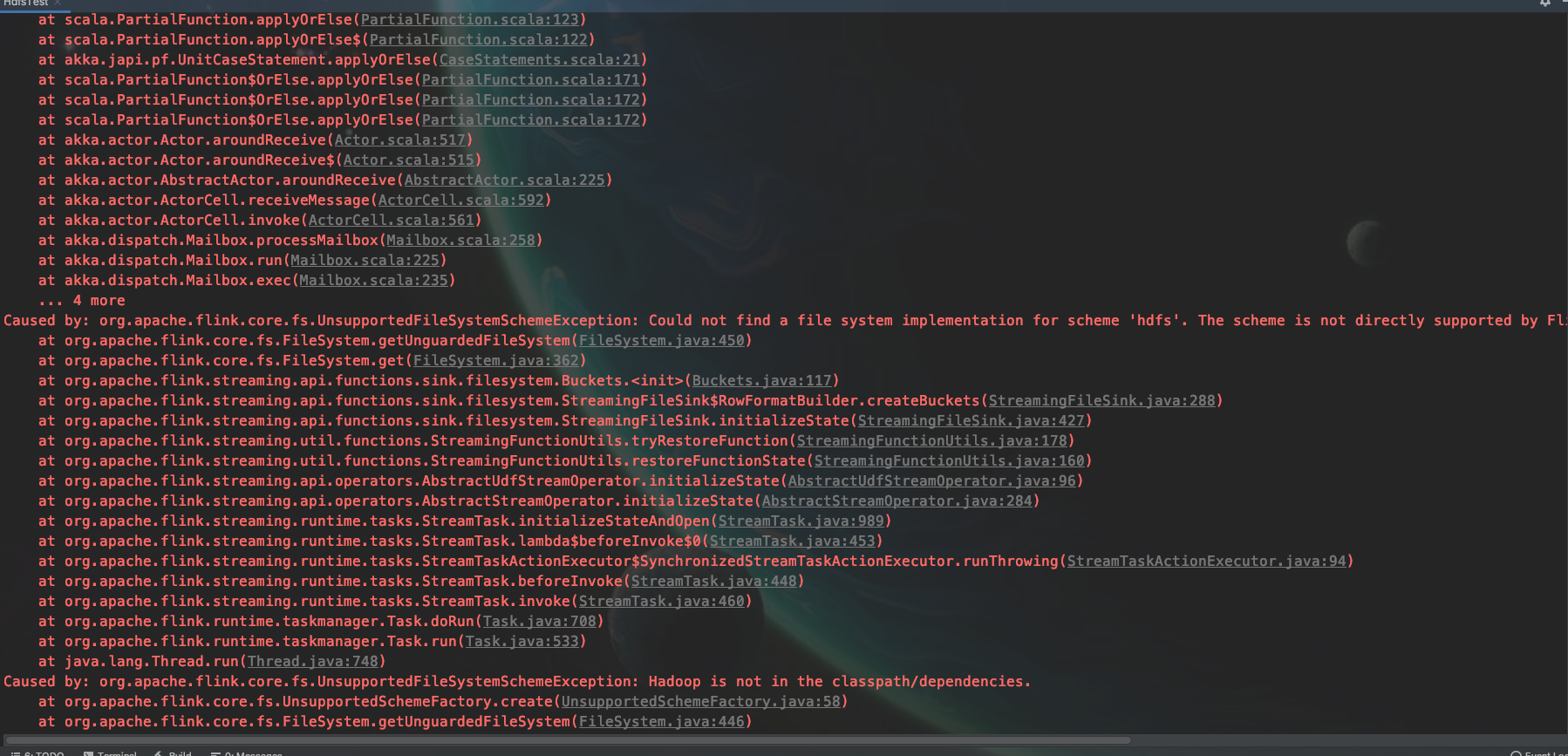

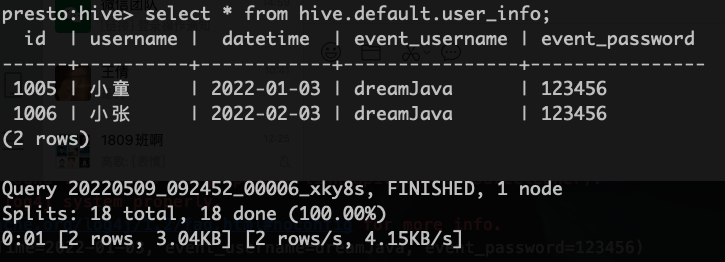

注意:表的名称不能包含关键字,否则会报以下异常

时间特性

Table 可以提供一个逻辑上的时间字段,用于在表处理程序中,指示时间和访问相应的时间戳

定义处理时间(Processing Time)

由DataStream转换成表时指定

package com.wl.window_sql;

import com.wl.Order;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.table.api.GroupWindow;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/14 下午3:03

* @qq 2315290571

* @Description 时间特性

*/

public class WindowTest {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "wlwl:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink");

DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), pro))

.map(value -> {

String[] orderInfo = value.split(",");

return new Order(Integer.parseInt(orderInfo[0]), orderInfo[1], Double.parseDouble(orderInfo[2]));

});

// 流转表

Table table = tableEnv.fromDataStream(sourceStream,"orderId as oId,orderName as oName,orderPrice as oPrice,pt.proctime");

table.printSchema();

tableEnv.toAppendStream(table, Row.class).print();

env.execute();

}

}

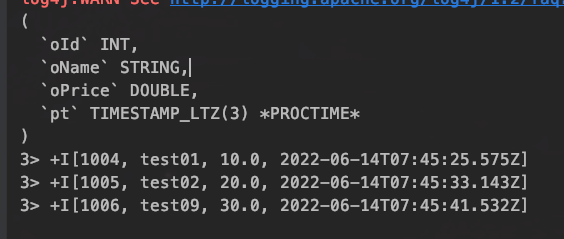

运行结果

定义TableSchema时指定

tableEnv.connect(new FileSystem().path("src/main/resources/order.txt"))

.withFormat(new Csv())

.withSchema(new Schema()

.field("orderId", DataTypes.INT())

.field("orderName",DataTypes.STRING())

.field("orderPrice",DataTypes.DOUBLE())

.field("pt",DataTypes.TIMESTAMP(3)).proctime()

)

.createTemporaryTable("order_test");

DDL定义

String sinkDDL = "create table flink_test (" +

" order_id INT," +

" order_name STRING," +

" order_price DOUBLE" +

"pt AS PROCTIME() "+

") WITH (" +

" 'connector' = 'jdbc', " +

" 'url' = 'jdbc:mysql://localhost:3306/flink', " +

" 'table-name' = 'order_test', " +

" 'username' = 'root', " +

" 'password' = '111111' " +

")";

tableEnv.executeSql(sinkDDL);

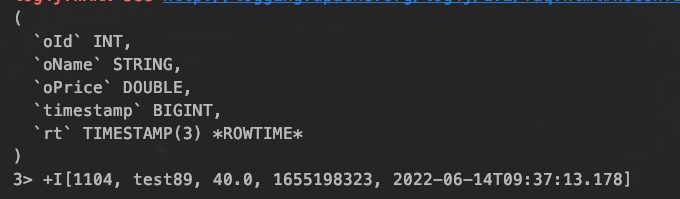

定义时间事件(Event Time)

从流中获取定义

package com.wl.window_sql;

import com.wl.Order;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.table.api.*;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Rowtime;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.table.types.DataType;

import org.apache.flink.types.Row;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/14 下午3:03

* @qq 2315290571

* @Description 时间特性

*/

public class WindowTest {

public static void main(String[] args) throws Exception {

// 获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 读取kafka

Properties pro = new Properties();

pro.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "wlwl:9092");

pro.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flink");

DataStream<Order> sourceStream = env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), pro))

.map(value -> {

String[] orderInfo = value.split(",");

return new Order(Integer.parseInt(orderInfo[0]), orderInfo[1], Double.parseDouble(orderInfo[2]),Long.parseLong(orderInfo[3]));

// return new Order();

});

// 流转表

Table table = tableEnv.fromDataStream(sourceStream,"orderId as oId,orderName as oName,orderPrice as oPrice,timestamp,rt.rowtime");

table.printSchema();

tableEnv.toAppendStream(table, Row.class).print();

env.execute();

}

}

Schema定义

tableEnv.connect(new FileSystem().path("/Users/wangliang/Documents/ideaProject/Flink/FlinkTutorial/src/main/resources/order.txt"))

.withFormat(new Csv())

.withSchema(new Schema()

.field("orderId", DataTypes.INT())

.field("orderName",DataTypes.STRING())

.field("orderPrice",DataTypes.DOUBLE())

.field("timestamp",DataTypes.BIGINT())

.rowtime(new Rowtime().timestampsFromField("timestamp") // 从字段中提取时间戳

.watermarksPeriodicBounded(1000) // watermark延迟一秒

)

)

.createTemporaryTable("order_test");

DDL语言定义

String sinkDDL = "create table flink_test (" +

" order_id INT," +

" order_name STRING," +

" order_price DOUBLE," +

" rt AS TO_TIMESTAMP( FROM_UNIXTIME(ts) ),"+

") WITH (" +

" 'connector' = 'jdbc', " +

" 'url' = 'jdbc:mysql://localhost:3306/flink', " +

" 'table-name' = 'order_test', " +

" 'username' = 'root', " +

" 'password' = '111111' " +

")";

tableEnv.executeSql(sinkDDL);

Group Windows

类似于sql中的 group by,处理时间要定义事件时间语义

package com.wl.window_sql;

import com.wl.Temperature;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.Tumble;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Arrays;

import java.util.Properties;

/**

* @author 没有梦想的java菜鸟

* @Date 创建时间:2022/6/15 上午11:14

* @qq 2315290571

* @Description GroupWindow开窗

*/