Scraping nationwide Java job listings from 51job with Python 2.7

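Each page holds 50 listings and there are 2000 pages in total. Pagination is done with plain GET requests: the page number is part of the URL.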

    #!/usr/bin/python
    # encoding: utf-8
    import random
    import sys
    import time

    import requests
    from lxml import etree

    # Python 2 only: make utf-8 the default codec for implicit str/unicode conversion.
    reload(sys)
    sys.setdefaultencoding('utf-8')


    # Append one line to the log file (the ./log directory must already exist).
    def log(context):
        txtName = "./log/log.txt"
        with open(txtName, "a+") as f:
            f.write(context + "\n")


    def xin():
        # Request headers
        header = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            # "br" dropped: requests only decodes Brotli when a brotli package is installed.
            'Accept-Encoding': 'gzip, deflate',
            'Accept-Language': 'zh-CN,zh;q=0.9'
        }
        count = 1
        # 2000 pages in total (<= so that the last page is included)
        while count <= 2000:
            url = ("https://search.51job.com/list/000000,000000,0000,00,9,99,java,2,"
                   + str(count)
                   + ".html?lang=c&stype=1&postchannel=0000&workyear=99&cotype=99"
                   "&degreefrom=99&jobterm=99&companysize=99&lonlat=0%2C0&radius=-1"
                   "&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line="
                   "&specialarea=00&from=&welfare=")
            response = requests.get(url, headers=header)
            html = response.content.decode("gbk")
            print(html)  # debug output: dumps the raw page
            selector = etree.HTML(html)
            contents = selector.xpath('//div[@class="dw_table"]/div[@class="el"]')
            log("page " + str(count) + ": " + str(len(contents)) + " records")
            for eachlink in contents:
                company = eachlink.xpath('span[@class="t2"]/a/text()')[0]
                url = eachlink.xpath('p/span/a/@href')[0]
                name = eachlink.xpath('p/span/a/text()')[0]
                city = eachlink.xpath('span[@class="t3"]/text()')[0]
                # Some listings have no salary; store "0" for those.
                salary = eachlink.xpath('span[@class="t4"]/text()')
                key = salary[0] if salary else "0"
                # Strip spaces
                company = company.replace(' ', '')
                name = name.replace(' ', '')
                city = city.replace(' ', '')
                zhi = (name + "=============" + company + "=============" + city
                       + "=============" + str(key) + "=============" + url)
                # The ./file directory must already exist.
                txtName = "./file/java.txt"
                with open(txtName, "a+") as f:
                    f.write(zhi + "\n")  # one record per line
            # Pause 1 to 5 seconds between pages.
            sui = random.randint(1, 5)
            log("sleeping " + str(sui) + "s")
            time.sleep(sui)
            count = count + 1


    if __name__ == "__main__":
        xin()

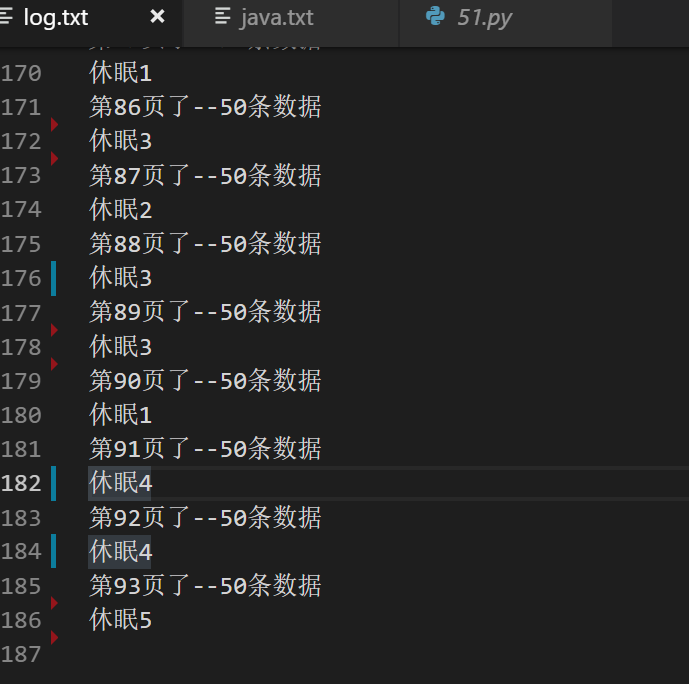

The log file

The scraped data
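To work with the scraped file afterwards, each record has to be split back into its fields. Below is a minimal sketch of that step, assuming every record sits on its own line and uses the `=============` delimiter in the order name, company, city, salary, URL, as the script writes them. The names `Job` and `parse_record` are made up for this illustration and are not part of the original script.

```python
from collections import namedtuple

SEPARATOR = "============="  # delimiter the scraper puts between fields

# Hypothetical record type, field order as written by the scraper.
Job = namedtuple("Job", ["name", "company", "city", "salary", "url"])


def parse_record(line):
    """Split one line of java.txt into a Job; return None if it is malformed."""
    parts = line.strip().split(SEPARATOR)
    if len(parts) != 5:
        return None
    name, company, city, salary, url = parts
    # The scraper stores "0" when a listing shows no salary.
    if salary == "0":
        salary = None
    return Job(name, company, city, salary, url)
```

Returning `None` for malformed lines lets the caller skip broken records instead of crashing halfway through a large file.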

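Scraping this way was rather slow, so I switched to multithreaded scraping, but 51job immediately blocked the whole IP range.

In the end I used 4 servers, each scraping 500 of the 2000 pages, and then merged the results and loaded them into a database.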
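The split-and-merge approach can be sketched roughly as follows, written for Python 3 with only the standard library. It assumes the 2000 pages are divided into contiguous ranges, one per server, and that the merged records go into SQLite. The original does not say which database or schema was used, so the `jobs` table, its columns, the de-duplication by URL, and the function names `page_ranges` and `load_records` are all assumptions made for illustration.

```python
import sqlite3

SEPARATOR = "============="  # field delimiter used in java.txt


def page_ranges(total_pages, servers):
    """Split pages 1..total_pages into contiguous (first, last) ranges."""
    size, extra = divmod(total_pages, servers)
    ranges = []
    first = 1
    for i in range(servers):
        # The first `extra` servers take one additional page each.
        last = first + size - 1 + (1 if i < extra else 0)
        ranges.append((first, last))
        first = last + 1
    return ranges


def load_records(conn, lines):
    """Insert scraped lines into a jobs table; return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS jobs ("
        "url TEXT PRIMARY KEY, name TEXT, company TEXT, city TEXT, salary TEXT)"
    )
    rows = []
    for line in lines:
        parts = line.strip().split(SEPARATOR)
        if len(parts) == 5:  # skip malformed lines
            name, company, city, salary, url = parts
            rows.append((url, name, company, city, salary))
    # OR IGNORE skips a row whose url is already in the table.
    conn.executemany("INSERT OR IGNORE INTO jobs VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
```

With these definitions, `page_ranges(2000, 4)` yields the ranges 1 to 500, 501 to 1000, 1001 to 1500, and 1501 to 2000, one per server. Calling `load_records` once for each server's output file on the same connection merges everything into a single table.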

Life is short, I use Python!!!
