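Data Collection: Assignment 5

1. Task ①:

Gitee repository: https://gitee.com/wjz51/wjz/blob/master/project_5/5_1.py

1.1 Requirements:

Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements. Use the Selenium framework to scrape the information and images of one category of products from the JD.com (京东商城) store.

1.2 Approach:

This task reproduces the scraper for mobile-phone product information and images on JD.com. The one change I made is in the database storage part, which now uses MySQL.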
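The storage pattern used in the code that follows (open a connection, clear the table, insert rows with a parameterized statement, commit once at the end) can be tried in isolation. The following is a minimal sketch, not the author's code: it uses the standard-library sqlite3 module as a stand-in so that it runs without a MySQL server, the table layout is assumed from the column names in insertDB, and the sample row is invented. Note that sqlite3 uses ? placeholders where pymysql uses %s.

```python
import sqlite3

def store_phones(rows):
    """Insert (mNo, mMark, mPrice, mNote, mFile) tuples and return what was stored."""
    con = sqlite3.connect(":memory:")  # stand-in for pymysql.connect(...)
    try:
        cur = con.cursor()
        # Assumed table layout; the real MySQL schema is not shown in the post.
        cur.execute(
            "create table phones (mNo text primary key, mMark text, "
            "mPrice text, mNote text, mFile text)"
        )
        cur.execute("delete from phones")  # start from an empty table
        cur.executemany(
            "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)",
            rows,
        )
        con.commit()  # one commit after all inserts
        cur.execute("select mNo, mMark, mPrice from phones order by mNo")
        return cur.fetchall()
    finally:
        con.close()

# Invented sample row, for illustration only
sample = [("000001", "ExampleBrand", "1999.00", "ExampleBrand phone 128GB", "000001.jpg")]
print(store_phones(sample))
```

Passing the values separately from the SQL text, as insertDB also does, leaves quoting to the driver instead of building the statement by string concatenation.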

    def startUp(self, url, key):
        # Initializing Chrome browser (headless)
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        chrome_options.add_argument('--disable-gpu')
        self.driver = webdriver.Chrome(chrome_options=chrome_options)
        # Initializing variables
        self.threads = []
        self.No = 0
        self.imgNo = 0
        self.page = 1  # page counter, limits how many pages are crawled
        # Initializing database
        print("opened")
        try:
            self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root",
                                       passwd="Wjz20010501", db="mydb", charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            self.cursor.execute("delete from phones")
            self.opened = True
        except Exception as err:
            print(err)
            self.opened = False
        # Initializing images folder: create it if missing, then empty it
        try:
            if not os.path.exists(MySpider.imagePath):
                os.mkdir(MySpider.imagePath)
            images = os.listdir(MySpider.imagePath)
            for img in images:
                s = os.path.join(MySpider.imagePath, img)
                os.remove(s)
        except Exception as err:
            print(err)
        # Open the site and submit the search keyword
        self.driver.get(url)
        keyInput = self.driver.find_element_by_id("key")
        keyInput.send_keys(key)
        keyInput.send_keys(Keys.ENTER)

    def closeUp(self):
        try:
            self.con.commit()
            self.con.close()
            self.driver.close()
        except Exception as err:
            print(err)

    def insertDB(self, mNo, mMark, mPrice, mNote, mFile):
        try:
            sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (%s,%s,%s,%s,%s)"
            self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile))
        except Exception as err:
            print(err)

The code that scrapes the JD product information is shown below; image downloads are handled in threads.
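The threading pattern (start one non-daemon thread per image, keep the thread objects, join them all at the end) can be shown on its own. This is a minimal sketch under assumptions: fetch is a hypothetical stand-in that fabricates bytes instead of performing an HTTP request, and the lock-protected dictionary exists only so the result can be inspected.

```python
import threading

def fetch(name, results, lock):
    """Stand-in for a download: records the size of some placeholder bytes."""
    data = ("bytes of " + name).encode("utf-8")  # placeholder for resp.read()
    with lock:
        results[name] = len(data)

def download_all(names):
    """Start one non-daemon thread per item, then wait for all of them."""
    results, lock, threads = {}, threading.Lock(), []
    for name in names:
        t = threading.Thread(target=fetch, args=(name, results, lock))
        t.daemon = False  # same effect as setDaemon(False)
        t.start()
        threads.append(t)
    for t in threads:
        t.join()  # block until every worker has finished
    return results

print(sorted(download_all(["000001.jpg", "000002.jpg"])))
```

Because the threads are joined before the function returns, the caller can rely on every file having been handled. The daemon attribute is used here because setDaemon has been deprecated since Python 3.10.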

    def download(self, src1, src2, mFile):
        data = None
        if src1:
            try:
                req = urllib.request.Request(src1, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except:
                pass
        if not data and src2:
            try:
                req = urllib.request.Request(src2, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except:
                pass
        if data:
            print("download begin", mFile)
            fobj = open(MySpider.imagePath + "\\" + mFile, "wb")
            fobj.write(data)
            fobj.close()
            print("download finish", mFile)

    def processSpider(self):
        try:
            time.sleep(1)
            print(self.driver.current_url)
            lis = self.driver.find_elements_by_xpath("//div[@id='J_goodsList']//li[@class='gl-item']")
            for li in lis:
                # We find that the image is either in src or in data-lazy-img attribute
                try:
                    src1 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("src")
                except:
                    src1 = ""
                try:
                    src2 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("data-lazy-img")
                except:
                    src2 = ""
                try:
                    price = li.find_element_by_xpath(".//div[@class='p-price']//i").text
                except:
                    price = "0"
                try:
                    note = li.find_element_by_xpath(".//div[@class='p-name p-name-type-2']//em").text
                    mark = note.split(" ")[0]
                    mark = mark.replace("爱心东东\n", "")
                    mark = mark.replace(",", "")
                    note = note.replace("爱心东东\n", "")
                    note = note.replace(",", "")
                except:
                    note = ""
                    mark = ""
                self.No = self.No + 1
                no = str(self.No)
                while len(no) < 6:
                    no = "0" + no
                print(no, mark, price)
                if src1:
                    src1 = urllib.request.urljoin(self.driver.current_url, src1)
                    p = src1.rfind(".")
                    mFile = no + src1[p:]
                elif src2:
                    src2 = urllib.request.urljoin(self.driver.current_url, src2)
                    p = src2.rfind(".")
                    mFile = no + src2[p:]
                if src1 or src2:
                    T = threading.Thread(target=self.download, args=(src1, src2, mFile))
                    T.setDaemon(False)
                    T.start()
                    self.threads.append(T)
                else:
                    mFile = ""
                self.insertDB(no, mark, price, note, mFile)
            if self.page < 2:
                nextPage = self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next']")
                time.sleep(10)
                self.page = self.page + 1
                nextPage.click()
                self.processSpider()
        except Exception as err:
            print(err)

    def executeSpider(self, url, key):
        starttime = datetime.datetime.now()
        print("Spider starting......")
        self.startUp(url, key)
        print("Spider processing......")
        self.processSpider()
        print("Spider closing......")
        self.closeUp()
        for t in self.threads:
            t.join()
        print("Spider completed......")
        endtime = datetime.datetime.now()
        elapsed = (endtime - starttime).seconds
        print("Total ", elapsed, " seconds elapsed")

1.3 Results:

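Console output

Downloaded images

MySQL results

1.4 Reflections

This task was a code reproduction. It made me more fluent in using Selenium and in storing data in MySQL.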

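2. Task ②:

Gitee repository: https://gitee.com/wjz51/wjz/blob/master/project_5/5_2.py

2.1 Requirements:

Become proficient with Selenium for locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.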
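The login code in 2.2 pauses with fixed time.sleep calls. Waiting for an element more generally means polling until a condition holds or a timeout expires, which is the idea behind Selenium's WebDriverWait. The following is a minimal, library-free sketch of that polling loop; wait_until and its parameters are hypothetical names and are not part of Selenium.

```python
import time

def wait_until(condition, timeout=10.0, poll=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within %.1f s" % timeout)
        time.sleep(poll)

# Example: the "element" only becomes available on the third check.
attempts = []

def element_ready():
    attempts.append(1)
    return "element" if len(attempts) >= 3 else None

print(wait_until(element_ready, timeout=2.0, poll=0.01))
```

With a real driver, the condition could be a function that returns the list from find_elements_by_xpath, since an empty list is falsy. Compared with a fixed sleep, the loop returns as soon as the page is ready and fails loudly when it never is.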

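Use the Selenium framework plus MySQL to scrape course resource information from China University MOOC (中国mooc网): course number, course name, teaching progress, course status, and course image URL. Also save the images to the imgs folder under the local project root, naming each image after its course.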
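Since each image is named after its course, a course name containing characters such as / or : cannot be used directly as a file name on Windows. The following is a small sketch of one way to handle this; image_path and the "unnamed" fallback are hypothetical and do not appear in the original script, which concatenates the name as-is.

```python
import os
import re

def image_path(folder, course, ext=".jpg"):
    """Build a file path for a course image.

    Characters that Windows forbids in file names are replaced with "_".
    """
    safe = re.sub(r'[\\/:*?"<>|]', "_", course).strip()
    if not safe:
        safe = "unnamed"  # hypothetical fallback for an empty course name
    return os.path.join(folder, safe + ext)

print(image_path("imgs", "C/C++ Programming: Basics"))
```

Using os.path.join instead of a hard-coded backslash also keeps the path valid on systems other than Windows.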

2.2 Approach:

Code for the simulated user login:

def root(self):

time.sleep(4)

self.driver.find_element_by_xpath('//div[@class="unlogin"]//a[@class="f-f0 navLoginBtn"]').click() # 登录或注册

time.sleep(4)

self.driver.find_element_by_class_name('ux-login-set-scan-code_ft_back').click() # 其他登录方式

time.sleep(2)

self.driver.find_element_by_xpath("//ul[@class='ux-tabs-underline_hd']//li[2]").click() # 手机号登录

time.sleep(2)

self.driver.switch_to.frame(self.driver.find_element_by_xpath("//div[@class='ux-login-set-container']//iframe"))

self.driver.find_element_by_xpath('//input[@id="phoneipt"]').send_keys("18256132051") # 输入账号

time.sleep(2)

self.driver.find_element_by_xpath('//input[@placeholder="请输入密码"]').send_keys("Wjz20010501") # 输入密码

time.sleep(2)

self.driver.find_element_by_xpath('//div[@class="f-cb loginbox"]//a[@id="submitBtn"]').click() # 点击登录

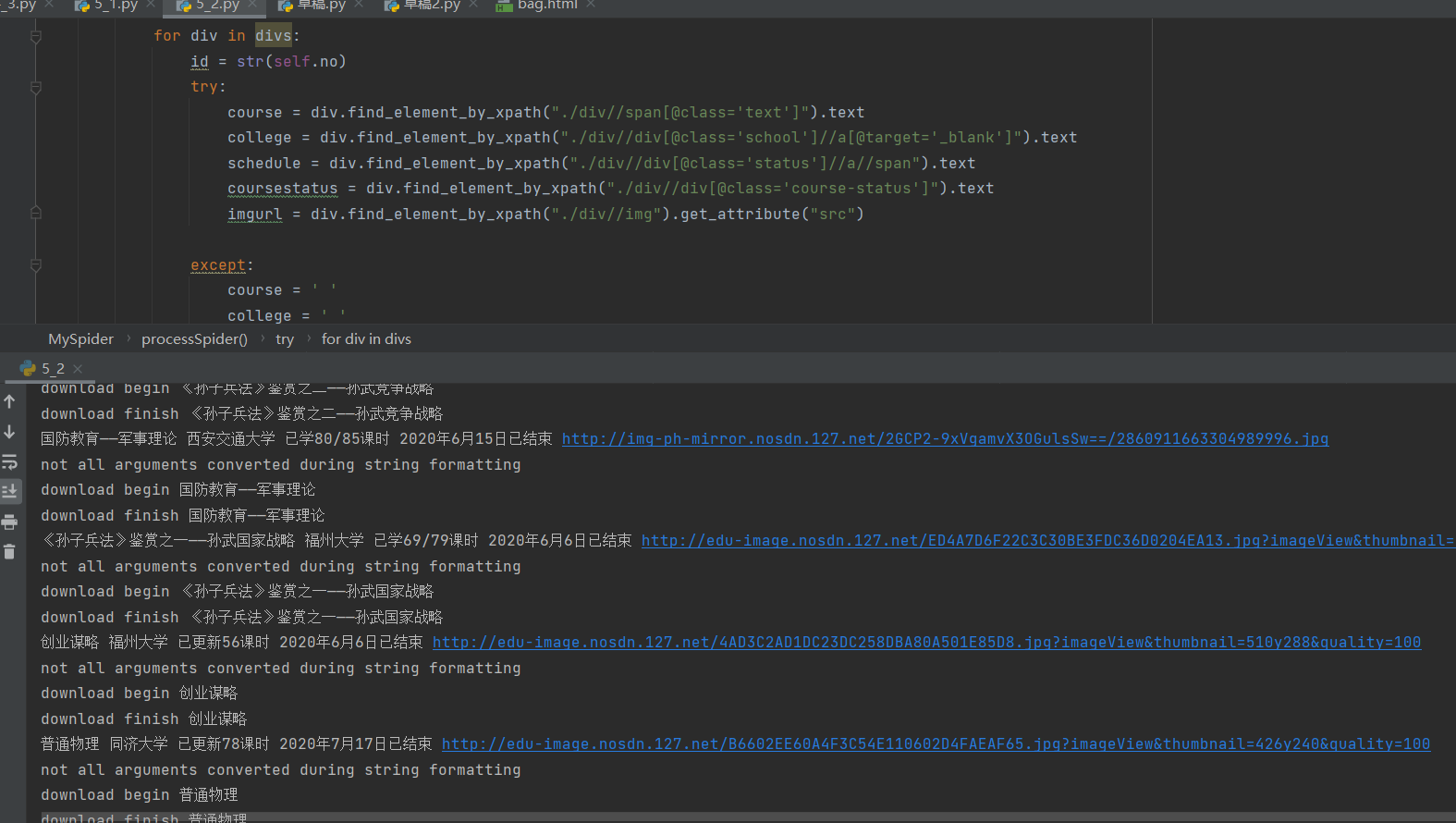

time.sleep(10)由图可知,所需要爬取的课程信息均在//div[@class='course-panel-body-wrapper']//div[@class='course-card-wrapper']
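To illustrate how that XPath narrows the page down to one element per course, here is a small sketch using the standard library's xml.etree.ElementTree, which supports a subset of XPath. The markup is a made-up, simplified stand-in for the real page; only the two wrapper class names and the inner span/img lookups are taken from the code in this post. ElementTree paths must be relative, hence the leading dot.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; the real page is far more complex.
PAGE = """
<html><body>
  <div class="course-panel-body-wrapper">
    <div class="course-card-wrapper">
      <div><span class="text">Course A</span><img src="/img/a.png"/></div>
    </div>
    <div class="course-card-wrapper">
      <div><span class="text">Course B</span><img src="/img/b.png"/></div>
    </div>
  </div>
  <div class="course-card-wrapper"><div><span class="text">Not in panel</span></div></div>
</body></html>
"""

def extract_courses(markup):
    """Return (course name, image src) for each card inside the course panel."""
    root = ET.fromstring(markup)
    cards = root.findall(
        ".//div[@class='course-panel-body-wrapper']//div[@class='course-card-wrapper']"
    )
    return [
        (card.find("./div//span[@class='text']").text,
         card.find("./div//img").get("src"))
        for card in cards
    ]

print(extract_courses(PAGE))
```

The card outside the panel is not selected, because the second step only looks at descendants of the panel element. Note that @class='...' is an exact string comparison, so an element carrying additional class names would not match.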

Scraping the course information

The code is as follows:

    def processSpider(self):  # extract information from the page
        try:
            self.driver.implicitly_wait(10)
            print(self.driver.current_url)
            divs = self.driver.find_elements_by_xpath("//div[@class='course-panel-body-wrapper']//div[@class='course-card-wrapper']")
            for div in divs:
                id = str(self.no)
                try:
                    course = div.find_element_by_xpath("./div//span[@class='text']").text
                    college = div.find_element_by_xpath("./div//div[@class='school']//a[@target='_blank']").text
                    schedule = div.find_element_by_xpath("./div//div[@class='status']//a//span").text
                    coursestatus = div.find_element_by_xpath("./div//div[@class='course-status']").text
                    imgurl = div.find_element_by_xpath("./div//img").get_attribute("src")
                except:
                    course = ' '
                    college = ' '
                    schedule = ' '
                    coursestatus = ' '
                    imgurl = ' '
                print(id, course, college, schedule, coursestatus, imgurl)
                self.insertDB(id, course, college, schedule, coursestatus, imgurl)
                self.no += 1
                # Download the course image in a separate thread
                src = urllib.request.urljoin(self.driver.current_url, imgurl)
                T = threading.Thread(target=self.download, args=(src, course))
                T.setDaemon(False)
                T.start()
                self.threads.append(T)
        except Exception as err:
            print(err)

Database part

    def startUp(self, url):  # set up the browser and the database
        # Initializing Chrome browser
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        chrome_options.add_argument('--disable-gpu')
        self.driver = webdriver.Chrome(chrome_options=chrome_options)
        self.no = 1  # running record number
        self.threads = []
        print("opened")
        try:
            self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root",
                                       passwd="Wjz20010501", db="mydb", charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            self.cursor.execute("delete from moocs")
            self.opened = True
        except Exception as err:
            print(err)
            self.opened = False
        # Create the images folder if missing, then empty it
        try:
            if not os.path.exists(MySpider.imagePath):
                os.mkdir(MySpider.imagePath)
            images = os.listdir(MySpider.imagePath)
            for img in images:
                s = os.path.join(MySpider.imagePath, img)
                os.remove(s)
        except Exception as err:
            print(err)
        self.driver.get(url)

    def closeUp(self):  # close the database
        if self.opened:
            self.con.commit()
            self.con.close()
            self.opened = False
            print("closed")

    def insertDB(self, Id, cCourse, cCollege, cSchedule, cCourseStatus, cImgUrl):  # insert a row into the database
        try:
            sql = "insert into moocs (Id,cCourse,cCollege,cSchedule,cCourseStatus,cImgUrl) values (%s,%s, %s, %s, %s,%s)"
            self.cursor.execute(sql, (Id, cCourse, cCollege, cSchedule, cCourseStatus, cImgUrl))
        except Exception as err:
            print(err)

Image download part

    def download(self, src1, name):
        data = None
        try:
            req = urllib.request.Request(src1, headers=MySpider.headers)
            resp = urllib.request.urlopen(req, timeout=10)
            data = resp.read()
        except:
            pass
        if data:
            print("download begin", name)
            fobj = open(MySpider.imagePath + "\\" + name + ".jpg", "wb")
            fobj.write(data)
            fobj.close()
            print("download finish", name)

2.3 Results:

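Console output

Downloaded images

MySQL results

2.4 Reflections

This task made me more familiar with the Selenium framework plus MySQL, and I implemented a simulated user login.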

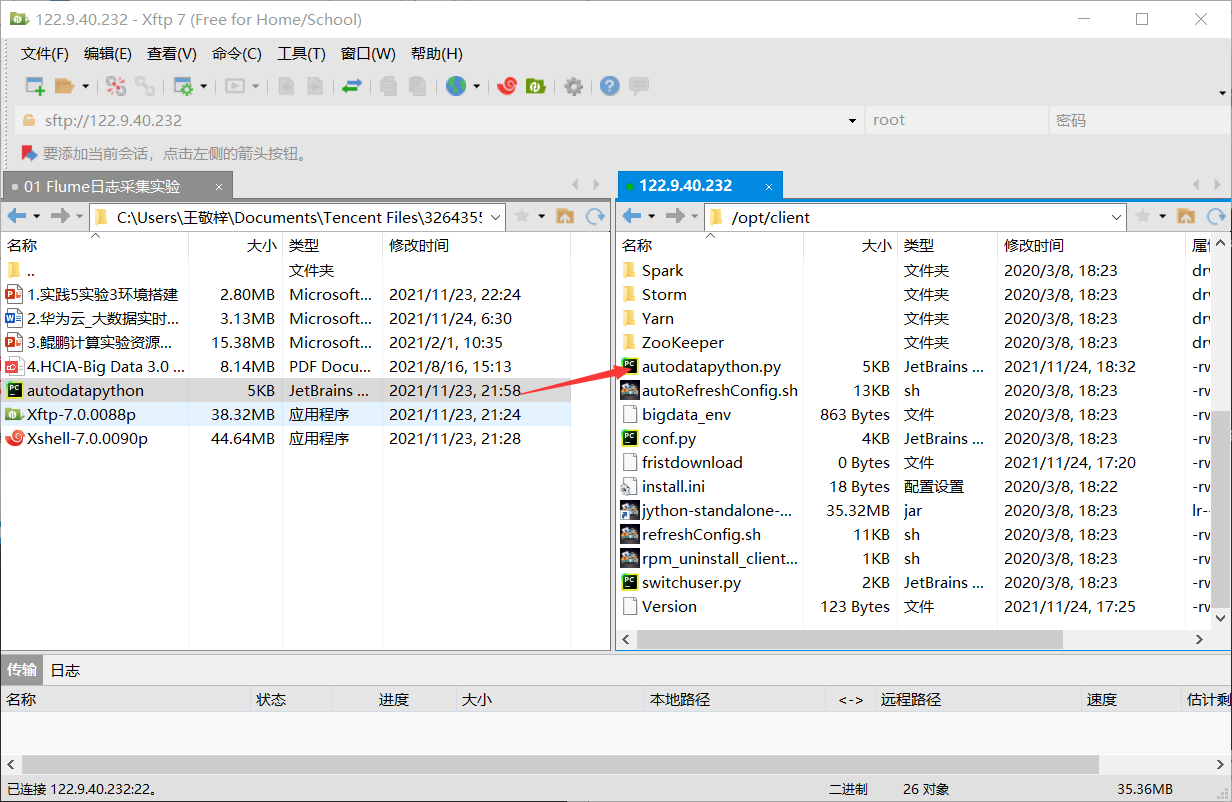

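3. Task ③:

3.1 Requirements:

Understand Flume's architecture and key features, and learn to use Flume to complete a log collection task.

Complete the Flume log collection experiment, which consists of the following steps:

3.2 Flume log collection experiment steps:

3.2.1 Task 1: Enable the MapReduce Service

3.2.2 Task 2: Generate test data with a Python script

After connecting to the server with Xshell 7, go to the /opt/client/ directory and move the autodatapython.py file into it.

Use the mkdir command to create the directory flume_spooldir under /tmp. The data simulated by the Python script is placed in this directory, and Flume later monitors this directory to read the data.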

Run the Python command to generate 100 test records, then view the data.

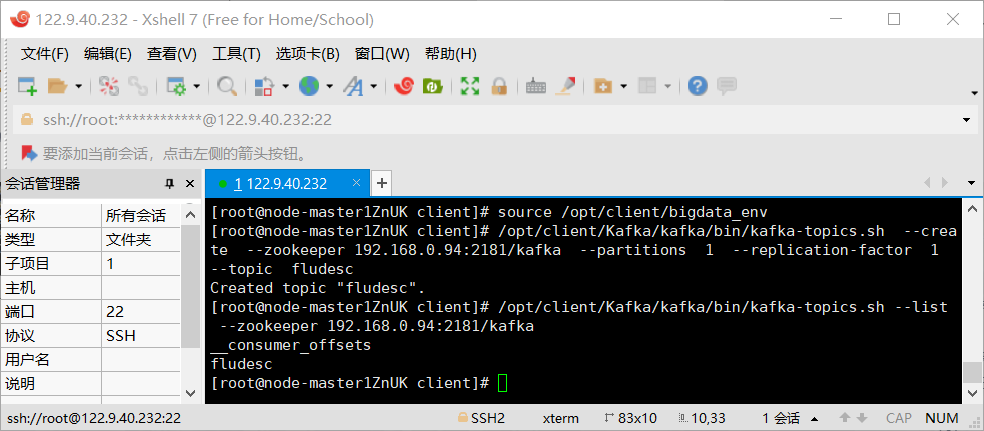
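The contents of autodatapython.py are not shown in this post. As an illustration only, a generator of this kind might look like the sketch below, which writes a given number of comma-separated records into a file inside the spool directory. The record fields, the file name, and the function name are all assumptions, and a temporary directory stands in for /tmp/flume_spooldir so that the sketch can run anywhere.

```python
import os
import random
import tempfile

def generate_records(spool_dir, count, filename="test.txt", seed=0):
    """Write `count` fake records to spool_dir/filename and return the path."""
    rng = random.Random(seed)  # fixed seed keeps the output reproducible
    os.makedirs(spool_dir, exist_ok=True)
    path = os.path.join(spool_dir, filename)
    with open(path, "w", encoding="utf-8") as f:
        for i in range(count):
            # Hypothetical record layout: id, user id, amount
            f.write("%d,user%04d,%.2f\n" % (i, rng.randint(1, 9999), rng.uniform(1, 500)))
    return path

# Temporary directory used in place of /tmp/flume_spooldir
spool = os.path.join(tempfile.mkdtemp(), "flume_spooldir")
out = generate_records(spool, 100)
with open(out, encoding="utf-8") as f:
    print(sum(1 for _ in f), "records written")
```

Flume's spooling directory source expects a file to be complete once it appears in the watched directory, so a real script would probably write the file elsewhere and then move it in.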

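3.2.3 Task 3: Configure Kafka

First set the environment variables and run the source command so that they take effect. Then create a topic in Kafka and view the topic information.

3.2.4 Task 4: Install the Flume client

Open the MRS Manager cluster management page, go to Service Management, click flume to enter the Flume service, and click Download Client.

When the download completes, a dialog indicates which server the client was downloaded to (this machine is the Master node); the path is /tmp/MRS-client:

Extract the downloaded Flume client file: log in to the elastic server from the previous step with Xshell 7, go to the /tmp/MRS-client directory, and extract the archive to obtain the checksum file and the client configuration package. Verify the package; the output OK indicates that verification succeeded. Then extract the "MRS_Flume_ClientConfig.tar" file.

Install the client runtime environment into the new directory "/opt/Flumeenv"; the directory is created automatically during installation.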

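Check the installation output. The following result indicates that the client runtime environment was installed successfully: Components client installation is complete

Configure the environment variables and extract the Flume client.

Install Flume into the new directory "/opt/FlumeClient"; the directory is created automatically during installation.

Restart the Flume service. The installation is now complete.

3.2.5 Task 5: Configure Flume to collect data

Go to the Flume installation directory and modify the configuration file: edit properties.properties in the conf directory.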
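The post does not show the edited file. For orientation, a configuration that reads from a spooling directory and writes to Kafka generally has the shape below. This is a sketch under assumptions, not the configuration used in the experiment: the agent name client, the component names s1, c1, and k1, the channel sizes, the topic, and the broker address are placeholders to be replaced with the values from your own cluster.

```properties
# Hypothetical agent "client" with one source, one channel, one sink
client.sources = s1
client.channels = c1
client.sinks = k1

# Source: watch the directory the Python script writes to
client.sources.s1.type = spooldir
client.sources.s1.spoolDir = /tmp/flume_spooldir
client.sources.s1.channels = c1

# Channel: buffer events in memory (sizes are illustrative)
client.channels.c1.type = memory
client.channels.c1.capacity = 10000
client.channels.c1.transactionCapacity = 1000

# Sink: publish events to a Kafka topic (placeholders below)
client.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
client.sinks.k1.kafka.topic = <your-topic>
client.sinks.k1.kafka.bootstrap.servers = <broker-host>:<port>
client.sinks.k1.channel = c1
```

The source and the sink are wired together only through the channel name, so the two channel references must match the name declared in client.channels.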

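Create a consumer to consume the data in Kafka, then generate another batch of data and check whether data appears in Kafka. As can be seen, the data has been consumed:

3.3 Reflections:

This experiment used Flume for front-end data collection in a real-time streaming pipeline. Through it I came to understand and became familiar with data processing and data visualization in real-time scenarios.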