Python机器学习笔记:使用Keras进行回归预测

Keras是一个深度学习库,包含高效的数字库Theano和TensorFlow。是一个高度模块化的神经网络库,支持CPU和GPU。

本文学习的目的是学习如何加载CSV文件并使其可供Keras使用,如何使用Keras创建一个回归问题的神经网络模型,如何使用scikit-learn和Keras一起使用交叉验证来评估模型,如何进行数据准备以提高Keras模型的技能,如何使用Keras调整模型的网络拓扑。

前期准备之Keras的scikit-learn接口包装器

Git地址:https://github.com/scikit-learn/scikit-learn

Scikit-learn 是基于Scipy为机器学习建造的的一个Python模块,他的特色就是多样化的分类,回归和聚类的算法包括支持向量机,逻辑回归,朴素贝叶斯分类器,随机森林,Gradient Boosting,聚类算法和DBSCAN。而且也设计出了Python numerical和scientific libraries Numpy and Scipy。

我们可以通过包装器将Sequential模块(仅有一个输入)作为Scikit-learn工作流的一部分,相关的包装器定义在keras.wrappers.scikit_learn.py中。

1:目前有两个包装器可用

其中实现了sklearn的分类器接口是下面包装器:

keras.wrappers.scikit_learn.KerasClassifier(build_fn=None, **sk_params)

实现了sklearn的回归器接口的是下面包装器:

keras.wrappers.scikit_learn.KerasRegressor(build_fn=None, **sk_params)

2,参数build_fn:可调用的函数或者类对象

build_fn应构造,编译并返回一个Keras模型,该模型将稍后用于训练/测试,build_fn值可能为以下三种之一:

- 1,一个函数

- 2,一个具有call方法的类对象

- 3,None,代表你的类继承自KerasClassifier或者KerasRegressor,其call方法为其父类call方法

3,参数sk_params:模型参数和训练参数

sk_params以模型参数和训练(超)参数作为参数,合法的模型参数为build_fn的参数,注意:‘build_fn’应提供其参数的默认值。所以我们不传递任何值给sk_params也可以创建一个分类器/回归器。

sk_params还接受用于调用fit,predict,predict_proba和score方法的参数,如nb_epoch,batch_size等。这些用于训练或预测的参数按如下顺序选择:

-

传递给

fit,predict,predict_proba和score的字典参数 -

传递个

sk_params的参数 -

keras.models.Sequential,fit,predict,predict_proba和score的默认值

当使用scikit-learn的grid_search接口时,合法的可转换参数是你可以传递给sk_params的参数,包括训练参数。即,你可以使用grid_search来搜索最佳的batch_size或nb_epoch以及其他模型参数。

一,问题描述

在本文学习中,我们将使用是波士顿房价数据集进行回归预测

您可以下载此数据集并将其直接保存到当前工作文件名housing.csv(更新:从此处下载数据)。

该数据集描述了波士顿郊区房屋的13个数字属性,并关注以数千美元(单位为k$)模拟这些郊区房屋的价格。目标值是一个位置的房屋的中值。因此,这是回归预测建模问题。输入属性包括犯罪率,非经营业务面积比例,化学品浓度等。

这是机器学习中经过深入研究的问题。使用起来很方便,因为所有输入和输出属性都是数字的,并且有506个实例可供使用。

使用均方误差(MSE)评估的模型的合理性能约为20平方每十万美元(也就是每平方米4500美元)。这个数字对于我们的神经网络来说是一个很好的训练目标。

housing.csv

0.00632 18.00 2.310 0 0.5380 6.5750 65.20 4.0900 1 296.0 15.30 396.90 4.98 24.00 0.02731 0.00 7.070 0 0.4690 6.4210 78.90 4.9671 2 242.0 17.80 396.90 9.14 21.60 0.02729 0.00 7.070 0 0.4690 7.1850 61.10 4.9671 2 242.0 17.80 392.83 4.03 34.70 0.03237 0.00 2.180 0 0.4580 6.9980 45.80 6.0622 3 222.0 18.70 394.63 2.94 33.40 0.06905 0.00 2.180 0 0.4580 7.1470 54.20 6.0622 3 222.0 18.70 396.90 5.33 36.20 0.02985 0.00 2.180 0 0.4580 6.4300 58.70 6.0622 3 222.0 18.70 394.12 5.21 28.70 0.08829 12.50 7.870 0 0.5240 6.0120 66.60 5.5605 5 311.0 15.20 395.60 12.43 22.90 0.14455 12.50 7.870 0 0.5240 6.1720 96.10 5.9505 5 311.0 15.20 396.90 19.15 27.10 0.21124 12.50 7.870 0 0.5240 5.6310 100.00 6.0821 5 311.0 15.20 386.63 29.93 16.50 0.17004 12.50 7.870 0 0.5240 6.0040 85.90 6.5921 5 311.0 15.20 386.71 17.10 18.90 0.22489 12.50 7.870 0 0.5240 6.3770 94.30 6.3467 5 311.0 15.20 392.52 20.45 15.00 0.11747 12.50 7.870 0 0.5240 6.0090 82.90 6.2267 5 311.0 15.20 396.90 13.27 18.90 0.09378 12.50 7.870 0 0.5240 5.8890 39.00 5.4509 5 311.0 15.20 390.50 15.71 21.70 0.62976 0.00 8.140 0 0.5380 5.9490 61.80 4.7075 4 307.0 21.00 396.90 8.26 20.40 0.63796 0.00 8.140 0 0.5380 6.0960 84.50 4.4619 4 307.0 21.00 380.02 10.26 18.20 0.62739 0.00 8.140 0 0.5380 5.8340 56.50 4.4986 4 307.0 21.00 395.62 8.47 19.90 1.05393 0.00 8.140 0 0.5380 5.9350 29.30 4.4986 4 307.0 21.00 386.85 6.58 23.10 0.78420 0.00 8.140 0 0.5380 5.9900 81.70 4.2579 4 307.0 21.00 386.75 14.67 17.50 0.80271 0.00 8.140 0 0.5380 5.4560 36.60 3.7965 4 307.0 21.00 288.99 11.69 20.20 0.72580 0.00 8.140 0 0.5380 5.7270 69.50 3.7965 4 307.0 21.00 390.95 11.28 18.20 1.25179 0.00 8.140 0 0.5380 5.5700 98.10 3.7979 4 307.0 21.00 376.57 21.02 13.60 0.85204 0.00 8.140 0 0.5380 5.9650 89.20 4.0123 4 307.0 21.00 392.53 13.83 19.60 1.23247 0.00 8.140 0 0.5380 6.1420 91.70 3.9769 4 307.0 21.00 396.90 18.72 15.20 0.98843 0.00 8.140 0 0.5380 5.8130 100.00 4.0952 4 307.0 21.00 394.54 19.88 14.50 0.75026 0.00 8.140 0 0.5380 5.9240 94.10 4.3996 4 307.0 21.00 394.33 16.30 15.60 0.84054 0.00 8.140 0 0.5380 5.5990 85.70 4.4546 4 307.0 21.00 303.42 16.51 13.90 0.67191 0.00 8.140 0 0.5380 5.8130 90.30 4.6820 4 307.0 21.00 376.88 14.81 16.60 0.95577 0.00 8.140 0 0.5380 6.0470 88.80 4.4534 4 307.0 21.00 306.38 17.28 14.80 0.77299 0.00 8.140 0 0.5380 6.4950 94.40 4.4547 4 307.0 21.00 387.94 12.80 18.40 1.00245 0.00 8.140 0 0.5380 6.6740 87.30 4.2390 4 307.0 21.00 380.23 11.98 21.00 1.13081 0.00 8.140 0 0.5380 5.7130 94.10 4.2330 4 307.0 21.00 360.17 22.60 12.70 1.35472 0.00 8.140 0 0.5380 6.0720 100.00 4.1750 4 307.0 21.00 376.73 13.04 14.50 1.38799 0.00 8.140 0 0.5380 5.9500 82.00 3.9900 4 307.0 21.00 232.60 27.71 13.20 1.15172 0.00 8.140 0 0.5380 5.7010 95.00 3.7872 4 307.0 21.00 358.77 18.35 13.10 1.61282 0.00 8.140 0 0.5380 6.0960 96.90 3.7598 4 307.0 21.00 248.31 20.34 13.50 0.06417 0.00 5.960 0 0.4990 5.9330 68.20 3.3603 5 279.0 19.20 396.90 9.68 18.90 0.09744 0.00 5.960 0 0.4990 5.8410 61.40 3.3779 5 279.0 19.20 377.56 11.41 20.00 0.08014 0.00 5.960 0 0.4990 5.8500 41.50 3.9342 5 279.0 19.20 396.90 8.77 21.00 0.17505 0.00 5.960 0 0.4990 5.9660 30.20 3.8473 5 279.0 19.20 393.43 10.13 24.70 0.02763 75.00 2.950 0 0.4280 6.5950 21.80 5.4011 3 252.0 18.30 395.63 4.32 30.80 0.03359 75.00 2.950 0 0.4280 7.0240 15.80 5.4011 3 252.0 18.30 395.62 1.98 34.90 0.12744 0.00 6.910 0 0.4480 6.7700 2.90 5.7209 3 233.0 17.90 385.41 4.84 26.60 0.14150 0.00 6.910 0 0.4480 6.1690 6.60 5.7209 3 233.0 17.90 383.37 5.81 25.30 0.15936 0.00 6.910 0 0.4480 6.2110 6.50 5.7209 3 233.0 17.90 394.46 7.44 24.70 0.12269 0.00 6.910 0 0.4480 6.0690 40.00 5.7209 3 233.0 17.90 389.39 9.55 21.20 0.17142 0.00 6.910 0 0.4480 5.6820 33.80 5.1004 3 233.0 17.90 396.90 10.21 19.30 0.18836 0.00 6.910 0 0.4480 5.7860 33.30 5.1004 3 233.0 17.90 396.90 14.15 20.00 0.22927 0.00 6.910 0 0.4480 6.0300 85.50 5.6894 3 233.0 17.90 392.74 18.80 16.60 0.25387 0.00 6.910 0 0.4480 5.3990 95.30 5.8700 3 233.0 17.90 396.90 30.81 14.40 0.21977 0.00 6.910 0 0.4480 5.6020 62.00 6.0877 3 233.0 17.90 396.90 16.20 19.40 0.08873 21.00 5.640 0 0.4390 5.9630 45.70 6.8147 4 243.0 16.80 395.56 13.45 19.70 0.04337 21.00 5.640 0 0.4390 6.1150 63.00 6.8147 4 243.0 16.80 393.97 9.43 20.50 0.05360 21.00 5.640 0 0.4390 6.5110 21.10 6.8147 4 243.0 16.80 396.90 5.28 25.00 0.04981 21.00 5.640 0 0.4390 5.9980 21.40 6.8147 4 243.0 16.80 396.90 8.43 23.40 0.01360 75.00 4.000 0 0.4100 5.8880 47.60 7.3197 3 469.0 21.10 396.90 14.80 18.90 0.01311 90.00 1.220 0 0.4030 7.2490 21.90 8.6966 5 226.0 17.90 395.93 4.81 35.40 0.02055 85.00 0.740 0 0.4100 6.3830 35.70 9.1876 2 313.0 17.30 396.90 5.77 24.70 0.01432 100.00 1.320 0 0.4110 6.8160 40.50 8.3248 5 256.0 15.10 392.90 3.95 31.60 0.15445 25.00 5.130 0 0.4530 6.1450 29.20 7.8148 8 284.0 19.70 390.68 6.86 23.30 0.10328 25.00 5.130 0 0.4530 5.9270 47.20 6.9320 8 284.0 19.70 396.90 9.22 19.60 0.14932 25.00 5.130 0 0.4530 5.7410 66.20 7.2254 8 284.0 19.70 395.11 13.15 18.70 0.17171 25.00 5.130 0 0.4530 5.9660 93.40 6.8185 8 284.0 19.70 378.08 14.44 16.00 0.11027 25.00 5.130 0 0.4530 6.4560 67.80 7.2255 8 284.0 19.70 396.90 6.73 22.20 0.12650 25.00 5.130 0 0.4530 6.7620 43.40 7.9809 8 284.0 19.70 395.58 9.50 25.00 0.01951 17.50 1.380 0 0.4161 7.1040 59.50 9.2229 3 216.0 18.60 393.24 8.05 33.00 0.03584 80.00 3.370 0 0.3980 6.2900 17.80 6.6115 4 337.0 16.10 396.90 4.67 23.50 0.04379 80.00 3.370 0 0.3980 5.7870 31.10 6.6115 4 337.0 16.10 396.90 10.24 19.40 0.05789 12.50 6.070 0 0.4090 5.8780 21.40 6.4980 4 345.0 18.90 396.21 8.10 22.00 0.13554 12.50 6.070 0 0.4090 5.5940 36.80 6.4980 4 345.0 18.90 396.90 13.09 17.40 0.12816 12.50 6.070 0 0.4090 5.8850 33.00 6.4980 4 345.0 18.90 396.90 8.79 20.90 0.08826 0.00 10.810 0 0.4130 6.4170 6.60 5.2873 4 305.0 19.20 383.73 6.72 24.20 0.15876 0.00 10.810 0 0.4130 5.9610 17.50 5.2873 4 305.0 19.20 376.94 9.88 21.70 0.09164 0.00 10.810 0 0.4130 6.0650 7.80 5.2873 4 305.0 19.20 390.91 5.52 22.80 0.19539 0.00 10.810 0 0.4130 6.2450 6.20 5.2873 4 305.0 19.20 377.17 7.54 23.40 0.07896 0.00 12.830 0 0.4370 6.2730 6.00 4.2515 5 398.0 18.70 394.92 6.78 24.10 0.09512 0.00 12.830 0 0.4370 6.2860 45.00 4.5026 5 398.0 18.70 383.23 8.94 21.40 0.10153 0.00 12.830 0 0.4370 6.2790 74.50 4.0522 5 398.0 18.70 373.66 11.97 20.00 0.08707 0.00 12.830 0 0.4370 6.1400 45.80 4.0905 5 398.0 18.70 386.96 10.27 20.80 0.05646 0.00 12.830 0 0.4370 6.2320 53.70 5.0141 5 398.0 18.70 386.40 12.34 21.20 0.08387 0.00 12.830 0 0.4370 5.8740 36.60 4.5026 5 398.0 18.70 396.06 9.10 20.30 0.04113 25.00 4.860 0 0.4260 6.7270 33.50 5.4007 4 281.0 19.00 396.90 5.29 28.00 0.04462 25.00 4.860 0 0.4260 6.6190 70.40 5.4007 4 281.0 19.00 395.63 7.22 23.90 0.03659 25.00 4.860 0 0.4260 6.3020 32.20 5.4007 4 281.0 19.00 396.90 6.72 24.80 0.03551 25.00 4.860 0 0.4260 6.1670 46.70 5.4007 4 281.0 19.00 390.64 7.51 22.90 0.05059 0.00 4.490 0 0.4490 6.3890 48.00 4.7794 3 247.0 18.50 396.90 9.62 23.90 0.05735 0.00 4.490 0 0.4490 6.6300 56.10 4.4377 3 247.0 18.50 392.30 6.53 26.60 0.05188 0.00 4.490 0 0.4490 6.0150 45.10 4.4272 3 247.0 18.50 395.99 12.86 22.50 0.07151 0.00 4.490 0 0.4490 6.1210 56.80 3.7476 3 247.0 18.50 395.15 8.44 22.20 0.05660 0.00 3.410 0 0.4890 7.0070 86.30 3.4217 2 270.0 17.80 396.90 5.50 23.60 0.05302 0.00 3.410 0 0.4890 7.0790 63.10 3.4145 2 270.0 17.80 396.06 5.70 28.70 0.04684 0.00 3.410 0 0.4890 6.4170 66.10 3.0923 2 270.0 17.80 392.18 8.81 22.60 0.03932 0.00 3.410 0 0.4890 6.4050 73.90 3.0921 2 270.0 17.80 393.55 8.20 22.00 0.04203 28.00 15.040 0 0.4640 6.4420 53.60 3.6659 4 270.0 18.20 395.01 8.16 22.90 0.02875 28.00 15.040 0 0.4640 6.2110 28.90 3.6659 4 270.0 18.20 396.33 6.21 25.00 0.04294 28.00 15.040 0 0.4640 6.2490 77.30 3.6150 4 270.0 18.20 396.90 10.59 20.60 0.12204 0.00 2.890 0 0.4450 6.6250 57.80 3.4952 2 276.0 18.00 357.98 6.65 28.40 0.11504 0.00 2.890 0 0.4450 6.1630 69.60 3.4952 2 276.0 18.00 391.83 11.34 21.40 0.12083 0.00 2.890 0 0.4450 8.0690 76.00 3.4952 2 276.0 18.00 396.90 4.21 38.70 0.08187 0.00 2.890 0 0.4450 7.8200 36.90 3.4952 2 276.0 18.00 393.53 3.57 43.80 0.06860 0.00 2.890 0 0.4450 7.4160 62.50 3.4952 2 276.0 18.00 396.90 6.19 33.20 0.14866 0.00 8.560 0 0.5200 6.7270 79.90 2.7778 5 384.0 20.90 394.76 9.42 27.50 0.11432 0.00 8.560 0 0.5200 6.7810 71.30 2.8561 5 384.0 20.90 395.58 7.67 26.50 0.22876 0.00 8.560 0 0.5200 6.4050 85.40 2.7147 5 384.0 20.90 70.80 10.63 18.60 0.21161 0.00 8.560 0 0.5200 6.1370 87.40 2.7147 5 384.0 20.90 394.47 13.44 19.30 0.13960 0.00 8.560 0 0.5200 6.1670 90.00 2.4210 5 384.0 20.90 392.69 12.33 20.10 0.13262 0.00 8.560 0 0.5200 5.8510 96.70 2.1069 5 384.0 20.90 394.05 16.47 19.50 0.17120 0.00 8.560 0 0.5200 5.8360 91.90 2.2110 5 384.0 20.90 395.67 18.66 19.50 0.13117 0.00 8.560 0 0.5200 6.1270 85.20 2.1224 5 384.0 20.90 387.69 14.09 20.40 0.12802 0.00 8.560 0 0.5200 6.4740 97.10 2.4329 5 384.0 20.90 395.24 12.27 19.80 0.26363 0.00 8.560 0 0.5200 6.2290 91.20 2.5451 5 384.0 20.90 391.23 15.55 19.40 0.10793 0.00 8.560 0 0.5200 6.1950 54.40 2.7778 5 384.0 20.90 393.49 13.00 21.70 0.10084 0.00 10.010 0 0.5470 6.7150 81.60 2.6775 6 432.0 17.80 395.59 10.16 22.80 0.12329 0.00 10.010 0 0.5470 5.9130 92.90 2.3534 6 432.0 17.80 394.95 16.21 18.80 0.22212 0.00 10.010 0 0.5470 6.0920 95.40 2.5480 6 432.0 17.80 396.90 17.09 18.70 0.14231 0.00 10.010 0 0.5470 6.2540 84.20 2.2565 6 432.0 17.80 388.74 10.45 18.50 0.17134 0.00 10.010 0 0.5470 5.9280 88.20 2.4631 6 432.0 17.80 344.91 15.76 18.30 0.13158 0.00 10.010 0 0.5470 6.1760 72.50 2.7301 6 432.0 17.80 393.30 12.04 21.20 0.15098 0.00 10.010 0 0.5470 6.0210 82.60 2.7474 6 432.0 17.80 394.51 10.30 19.20 0.13058 0.00 10.010 0 0.5470 5.8720 73.10 2.4775 6 432.0 17.80 338.63 15.37 20.40 0.14476 0.00 10.010 0 0.5470 5.7310 65.20 2.7592 6 432.0 17.80 391.50 13.61 19.30 0.06899 0.00 25.650 0 0.5810 5.8700 69.70 2.2577 2 188.0 19.10 389.15 14.37 22.00 0.07165 0.00 25.650 0 0.5810 6.0040 84.10 2.1974 2 188.0 19.10 377.67 14.27 20.30 0.09299 0.00 25.650 0 0.5810 5.9610 92.90 2.0869 2 188.0 19.10 378.09 17.93 20.50 0.15038 0.00 25.650 0 0.5810 5.8560 97.00 1.9444 2 188.0 19.10 370.31 25.41 17.30 0.09849 0.00 25.650 0 0.5810 5.8790 95.80 2.0063 2 188.0 19.10 379.38 17.58 18.80 0.16902 0.00 25.650 0 0.5810 5.9860 88.40 1.9929 2 188.0 19.10 385.02 14.81 21.40 0.38735 0.00 25.650 0 0.5810 5.6130 95.60 1.7572 2 188.0 19.10 359.29 27.26 15.70 0.25915 0.00 21.890 0 0.6240 5.6930 96.00 1.7883 4 437.0 21.20 392.11 17.19 16.20 0.32543 0.00 21.890 0 0.6240 6.4310 98.80 1.8125 4 437.0 21.20 396.90 15.39 18.00 0.88125 0.00 21.890 0 0.6240 5.6370 94.70 1.9799 4 437.0 21.20 396.90 18.34 14.30 0.34006 0.00 21.890 0 0.6240 6.4580 98.90 2.1185 4 437.0 21.20 395.04 12.60 19.20 1.19294 0.00 21.890 0 0.6240 6.3260 97.70 2.2710 4 437.0 21.20 396.90 12.26 19.60 0.59005 0.00 21.890 0 0.6240 6.3720 97.90 2.3274 4 437.0 21.20 385.76 11.12 23.00 0.32982 0.00 21.890 0 0.6240 5.8220 95.40 2.4699 4 437.0 21.20 388.69 15.03 18.40 0.97617 0.00 21.890 0 0.6240 5.7570 98.40 2.3460 4 437.0 21.20 262.76 17.31 15.60 0.55778 0.00 21.890 0 0.6240 6.3350 98.20 2.1107 4 437.0 21.20 394.67 16.96 18.10 0.32264 0.00 21.890 0 0.6240 5.9420 93.50 1.9669 4 437.0 21.20 378.25 16.90 17.40 0.35233 0.00 21.890 0 0.6240 6.4540 98.40 1.8498 4 437.0 21.20 394.08 14.59 17.10 0.24980 0.00 21.890 0 0.6240 5.8570 98.20 1.6686 4 437.0 21.20 392.04 21.32 13.30 0.54452 0.00 21.890 0 0.6240 6.1510 97.90 1.6687 4 437.0 21.20 396.90 18.46 17.80 0.29090 0.00 21.890 0 0.6240 6.1740 93.60 1.6119 4 437.0 21.20 388.08 24.16 14.00 1.62864 0.00 21.890 0 0.6240 5.0190 100.00 1.4394 4 437.0 21.20 396.90 34.41 14.40 3.32105 0.00 19.580 1 0.8710 5.4030 100.00 1.3216 5 403.0 14.70 396.90 26.82 13.40 4.09740 0.00 19.580 0 0.8710 5.4680 100.00 1.4118 5 403.0 14.70 396.90 26.42 15.60 2.77974 0.00 19.580 0 0.8710 4.9030 97.80 1.3459 5 403.0 14.70 396.90 29.29 11.80 2.37934 0.00 19.580 0 0.8710 6.1300 100.00 1.4191 5 403.0 14.70 172.91 27.80 13.80 2.15505 0.00 19.580 0 0.8710 5.6280 100.00 1.5166 5 403.0 14.70 169.27 16.65 15.60 2.36862 0.00 19.580 0 0.8710 4.9260 95.70 1.4608 5 403.0 14.70 391.71 29.53 14.60 2.33099 0.00 19.580 0 0.8710 5.1860 93.80 1.5296 5 403.0 14.70 356.99 28.32 17.80 2.73397 0.00 19.580 0 0.8710 5.5970 94.90 1.5257 5 403.0 14.70 351.85 21.45 15.40 1.65660 0.00 19.580 0 0.8710 6.1220 97.30 1.6180 5 403.0 14.70 372.80 14.10 21.50 1.49632 0.00 19.580 0 0.8710 5.4040 100.00 1.5916 5 403.0 14.70 341.60 13.28 19.60 1.12658 0.00 19.580 1 0.8710 5.0120 88.00 1.6102 5 403.0 14.70 343.28 12.12 15.30 2.14918 0.00 19.580 0 0.8710 5.7090 98.50 1.6232 5 403.0 14.70 261.95 15.79 19.40 1.41385 0.00 19.580 1 0.8710 6.1290 96.00 1.7494 5 403.0 14.70 321.02 15.12 17.00 3.53501 0.00 19.580 1 0.8710 6.1520 82.60 1.7455 5 403.0 14.70 88.01 15.02 15.60 2.44668 0.00 19.580 0 0.8710 5.2720 94.00 1.7364 5 403.0 14.70 88.63 16.14 13.10 1.22358 0.00 19.580 0 0.6050 6.9430 97.40 1.8773 5 403.0 14.70 363.43 4.59 41.30 1.34284 0.00 19.580 0 0.6050 6.0660 100.00 1.7573 5 403.0 14.70 353.89 6.43 24.30 1.42502 0.00 19.580 0 0.8710 6.5100 100.00 1.7659 5 403.0 14.70 364.31 7.39 23.30 1.27346 0.00 19.580 1 0.6050 6.2500 92.60 1.7984 5 403.0 14.70 338.92 5.50 27.00 1.46336 0.00 19.580 0 0.6050 7.4890 90.80 1.9709 5 403.0 14.70 374.43 1.73 50.00 1.83377 0.00 19.580 1 0.6050 7.8020 98.20 2.0407 5 403.0 14.70 389.61 1.92 50.00 1.51902 0.00 19.580 1 0.6050 8.3750 93.90 2.1620 5 403.0 14.70 388.45 3.32 50.00 2.24236 0.00 19.580 0 0.6050 5.8540 91.80 2.4220 5 403.0 14.70 395.11 11.64 22.70 2.92400 0.00 19.580 0 0.6050 6.1010 93.00 2.2834 5 403.0 14.70 240.16 9.81 25.00 2.01019 0.00 19.580 0 0.6050 7.9290 96.20 2.0459 5 403.0 14.70 369.30 3.70 50.00 1.80028 0.00 19.580 0 0.6050 5.8770 79.20 2.4259 5 403.0 14.70 227.61 12.14 23.80 2.30040 0.00 19.580 0 0.6050 6.3190 96.10 2.1000 5 403.0 14.70 297.09 11.10 23.80 2.44953 0.00 19.580 0 0.6050 6.4020 95.20 2.2625 5 403.0 14.70 330.04 11.32 22.30 1.20742 0.00 19.580 0 0.6050 5.8750 94.60 2.4259 5 403.0 14.70 292.29 14.43 17.40 2.31390 0.00 19.580 0 0.6050 5.8800 97.30 2.3887 5 403.0 14.70 348.13 12.03 19.10 0.13914 0.00 4.050 0 0.5100 5.5720 88.50 2.5961 5 296.0 16.60 396.90 14.69 23.10 0.09178 0.00 4.050 0 0.5100 6.4160 84.10 2.6463 5 296.0 16.60 395.50 9.04 23.60 0.08447 0.00 4.050 0 0.5100 5.8590 68.70 2.7019 5 296.0 16.60 393.23 9.64 22.60 0.06664 0.00 4.050 0 0.5100 6.5460 33.10 3.1323 5 296.0 16.60 390.96 5.33 29.40 0.07022 0.00 4.050 0 0.5100 6.0200 47.20 3.5549 5 296.0 16.60 393.23 10.11 23.20 0.05425 0.00 4.050 0 0.5100 6.3150 73.40 3.3175 5 296.0 16.60 395.60 6.29 24.60 0.06642 0.00 4.050 0 0.5100 6.8600 74.40 2.9153 5 296.0 16.60 391.27 6.92 29.90 0.05780 0.00 2.460 0 0.4880 6.9800 58.40 2.8290 3 193.0 17.80 396.90 5.04 37.20 0.06588 0.00 2.460 0 0.4880 7.7650 83.30 2.7410 3 193.0 17.80 395.56 7.56 39.80 0.06888 0.00 2.460 0 0.4880 6.1440 62.20 2.5979 3 193.0 17.80 396.90 9.45 36.20 0.09103 0.00 2.460 0 0.4880 7.1550 92.20 2.7006 3 193.0 17.80 394.12 4.82 37.90 0.10008 0.00 2.460 0 0.4880 6.5630 95.60 2.8470 3 193.0 17.80 396.90 5.68 32.50 0.08308 0.00 2.460 0 0.4880 5.6040 89.80 2.9879 3 193.0 17.80 391.00 13.98 26.40 0.06047 0.00 2.460 0 0.4880 6.1530 68.80 3.2797 3 193.0 17.80 387.11 13.15 29.60 0.05602 0.00 2.460 0 0.4880 7.8310 53.60 3.1992 3 193.0 17.80 392.63 4.45 50.00 0.07875 45.00 3.440 0 0.4370 6.7820 41.10 3.7886 5 398.0 15.20 393.87 6.68 32.00 0.12579 45.00 3.440 0 0.4370 6.5560 29.10 4.5667 5 398.0 15.20 382.84 4.56 29.80 0.08370 45.00 3.440 0 0.4370 7.1850 38.90 4.5667 5 398.0 15.20 396.90 5.39 34.90 0.09068 45.00 3.440 0 0.4370 6.9510 21.50 6.4798 5 398.0 15.20 377.68 5.10 37.00 0.06911 45.00 3.440 0 0.4370 6.7390 30.80 6.4798 5 398.0 15.20 389.71 4.69 30.50 0.08664 45.00 3.440 0 0.4370 7.1780 26.30 6.4798 5 398.0 15.20 390.49 2.87 36.40 0.02187 60.00 2.930 0 0.4010 6.8000 9.90 6.2196 1 265.0 15.60 393.37 5.03 31.10 0.01439 60.00 2.930 0 0.4010 6.6040 18.80 6.2196 1 265.0 15.60 376.70 4.38 29.10 0.01381 80.00 0.460 0 0.4220 7.8750 32.00 5.6484 4 255.0 14.40 394.23 2.97 50.00 0.04011 80.00 1.520 0 0.4040 7.2870 34.10 7.3090 2 329.0 12.60 396.90 4.08 33.30 0.04666 80.00 1.520 0 0.4040 7.1070 36.60 7.3090 2 329.0 12.60 354.31 8.61 30.30 0.03768 80.00 1.520 0 0.4040 7.2740 38.30 7.3090 2 329.0 12.60 392.20 6.62 34.60 0.03150 95.00 1.470 0 0.4030 6.9750 15.30 7.6534 3 402.0 17.00 396.90 4.56 34.90 0.01778 95.00 1.470 0 0.4030 7.1350 13.90 7.6534 3 402.0 17.00 384.30 4.45 32.90 0.03445 82.50 2.030 0 0.4150 6.1620 38.40 6.2700 2 348.0 14.70 393.77 7.43 24.10 0.02177 82.50 2.030 0 0.4150 7.6100 15.70 6.2700 2 348.0 14.70 395.38 3.11 42.30 0.03510 95.00 2.680 0 0.4161 7.8530 33.20 5.1180 4 224.0 14.70 392.78 3.81 48.50 0.02009 95.00 2.680 0 0.4161 8.0340 31.90 5.1180 4 224.0 14.70 390.55 2.88 50.00 0.13642 0.00 10.590 0 0.4890 5.8910 22.30 3.9454 4 277.0 18.60 396.90 10.87 22.60 0.22969 0.00 10.590 0 0.4890 6.3260 52.50 4.3549 4 277.0 18.60 394.87 10.97 24.40 0.25199 0.00 10.590 0 0.4890 5.7830 72.70 4.3549 4 277.0 18.60 389.43 18.06 22.50 0.13587 0.00 10.590 1 0.4890 6.0640 59.10 4.2392 4 277.0 18.60 381.32 14.66 24.40 0.43571 0.00 10.590 1 0.4890 5.3440 100.00 3.8750 4 277.0 18.60 396.90 23.09 20.00 0.17446 0.00 10.590 1 0.4890 5.9600 92.10 3.8771 4 277.0 18.60 393.25 17.27 21.70 0.37578 0.00 10.590 1 0.4890 5.4040 88.60 3.6650 4 277.0 18.60 395.24 23.98 19.30 0.21719 0.00 10.590 1 0.4890 5.8070 53.80 3.6526 4 277.0 18.60 390.94 16.03 22.40 0.14052 0.00 10.590 0 0.4890 6.3750 32.30 3.9454 4 277.0 18.60 385.81 9.38 28.10 0.28955 0.00 10.590 0 0.4890 5.4120 9.80 3.5875 4 277.0 18.60 348.93 29.55 23.70 0.19802 0.00 10.590 0 0.4890 6.1820 42.40 3.9454 4 277.0 18.60 393.63 9.47 25.00 0.04560 0.00 13.890 1 0.5500 5.8880 56.00 3.1121 5 276.0 16.40 392.80 13.51 23.30 0.07013 0.00 13.890 0 0.5500 6.6420 85.10 3.4211 5 276.0 16.40 392.78 9.69 28.70 0.11069 0.00 13.890 1 0.5500 5.9510 93.80 2.8893 5 276.0 16.40 396.90 17.92 21.50 0.11425 0.00 13.890 1 0.5500 6.3730 92.40 3.3633 5 276.0 16.40 393.74 10.50 23.00 0.35809 0.00 6.200 1 0.5070 6.9510 88.50 2.8617 8 307.0 17.40 391.70 9.71 26.70 0.40771 0.00 6.200 1 0.5070 6.1640 91.30 3.0480 8 307.0 17.40 395.24 21.46 21.70 0.62356 0.00 6.200 1 0.5070 6.8790 77.70 3.2721 8 307.0 17.40 390.39 9.93 27.50 0.61470 0.00 6.200 0 0.5070 6.6180 80.80 3.2721 8 307.0 17.40 396.90 7.60 30.10 0.31533 0.00 6.200 0 0.5040 8.2660 78.30 2.8944 8 307.0 17.40 385.05 4.14 44.80 0.52693 0.00 6.200 0 0.5040 8.7250 83.00 2.8944 8 307.0 17.40 382.00 4.63 50.00 0.38214 0.00 6.200 0 0.5040 8.0400 86.50 3.2157 8 307.0 17.40 387.38 3.13 37.60 0.41238 0.00 6.200 0 0.5040 7.1630 79.90 3.2157 8 307.0 17.40 372.08 6.36 31.60 0.29819 0.00 6.200 0 0.5040 7.6860 17.00 3.3751 8 307.0 17.40 377.51 3.92 46.70 0.44178 0.00 6.200 0 0.5040 6.5520 21.40 3.3751 8 307.0 17.40 380.34 3.76 31.50 0.53700 0.00 6.200 0 0.5040 5.9810 68.10 3.6715 8 307.0 17.40 378.35 11.65 24.30 0.46296 0.00 6.200 0 0.5040 7.4120 76.90 3.6715 8 307.0 17.40 376.14 5.25 31.70 0.57529 0.00 6.200 0 0.5070 8.3370 73.30 3.8384 8 307.0 17.40 385.91 2.47 41.70 0.33147 0.00 6.200 0 0.5070 8.2470 70.40 3.6519 8 307.0 17.40 378.95 3.95 48.30 0.44791 0.00 6.200 1 0.5070 6.7260 66.50 3.6519 8 307.0 17.40 360.20 8.05 29.00 0.33045 0.00 6.200 0 0.5070 6.0860 61.50 3.6519 8 307.0 17.40 376.75 10.88 24.00 0.52058 0.00 6.200 1 0.5070 6.6310 76.50 4.1480 8 307.0 17.40 388.45 9.54 25.10 0.51183 0.00 6.200 0 0.5070 7.3580 71.60 4.1480 8 307.0 17.40 390.07 4.73 31.50 0.08244 30.00 4.930 0 0.4280 6.4810 18.50 6.1899 6 300.0 16.60 379.41 6.36 23.70 0.09252 30.00 4.930 0 0.4280 6.6060 42.20 6.1899 6 300.0 16.60 383.78 7.37 23.30 0.11329 30.00 4.930 0 0.4280 6.8970 54.30 6.3361 6 300.0 16.60 391.25 11.38 22.00 0.10612 30.00 4.930 0 0.4280 6.0950 65.10 6.3361 6 300.0 16.60 394.62 12.40 20.10 0.10290 30.00 4.930 0 0.4280 6.3580 52.90 7.0355 6 300.0 16.60 372.75 11.22 22.20 0.12757 30.00 4.930 0 0.4280 6.3930 7.80 7.0355 6 300.0 16.60 374.71 5.19 23.70 0.20608 22.00 5.860 0 0.4310 5.5930 76.50 7.9549 7 330.0 19.10 372.49 12.50 17.60 0.19133 22.00 5.860 0 0.4310 5.6050 70.20 7.9549 7 330.0 19.10 389.13 18.46 18.50 0.33983 22.00 5.860 0 0.4310 6.1080 34.90 8.0555 7 330.0 19.10 390.18 9.16 24.30 0.19657 22.00 5.860 0 0.4310 6.2260 79.20 8.0555 7 330.0 19.10 376.14 10.15 20.50 0.16439 22.00 5.860 0 0.4310 6.4330 49.10 7.8265 7 330.0 19.10 374.71 9.52 24.50 0.19073 22.00 5.860 0 0.4310 6.7180 17.50 7.8265 7 330.0 19.10 393.74 6.56 26.20 0.14030 22.00 5.860 0 0.4310 6.4870 13.00 7.3967 7 330.0 19.10 396.28 5.90 24.40 0.21409 22.00 5.860 0 0.4310 6.4380 8.90 7.3967 7 330.0 19.10 377.07 3.59 24.80 0.08221 22.00 5.860 0 0.4310 6.9570 6.80 8.9067 7 330.0 19.10 386.09 3.53 29.60 0.36894 22.00 5.860 0 0.4310 8.2590 8.40 8.9067 7 330.0 19.10 396.90 3.54 42.80 0.04819 80.00 3.640 0 0.3920 6.1080 32.00 9.2203 1 315.0 16.40 392.89 6.57 21.90 0.03548 80.00 3.640 0 0.3920 5.8760 19.10 9.2203 1 315.0 16.40 395.18 9.25 20.90 0.01538 90.00 3.750 0 0.3940 7.4540 34.20 6.3361 3 244.0 15.90 386.34 3.11 44.00 0.61154 20.00 3.970 0 0.6470 8.7040 86.90 1.8010 5 264.0 13.00 389.70 5.12 50.00 0.66351 20.00 3.970 0 0.6470 7.3330 100.00 1.8946 5 264.0 13.00 383.29 7.79 36.00 0.65665 20.00 3.970 0 0.6470 6.8420 100.00 2.0107 5 264.0 13.00 391.93 6.90 30.10 0.54011 20.00 3.970 0 0.6470 7.2030 81.80 2.1121 5 264.0 13.00 392.80 9.59 33.80 0.53412 20.00 3.970 0 0.6470 7.5200 89.40 2.1398 5 264.0 13.00 388.37 7.26 43.10 0.52014 20.00 3.970 0 0.6470 8.3980 91.50 2.2885 5 264.0 13.00 386.86 5.91 48.80 0.82526 20.00 3.970 0 0.6470 7.3270 94.50 2.0788 5 264.0 13.00 393.42 11.25 31.00 0.55007 20.00 3.970 0 0.6470 7.2060 91.60 1.9301 5 264.0 13.00 387.89 8.10 36.50 0.76162 20.00 3.970 0 0.6470 5.5600 62.80 1.9865 5 264.0 13.00 392.40 10.45 22.80 0.78570 20.00 3.970 0 0.6470 7.0140 84.60 2.1329 5 264.0 13.00 384.07 14.79 30.70 0.57834 20.00 3.970 0 0.5750 8.2970 67.00 2.4216 5 264.0 13.00 384.54 7.44 50.00 0.54050 20.00 3.970 0 0.5750 7.4700 52.60 2.8720 5 264.0 13.00 390.30 3.16 43.50 0.09065 20.00 6.960 1 0.4640 5.9200 61.50 3.9175 3 223.0 18.60 391.34 13.65 20.70 0.29916 20.00 6.960 0 0.4640 5.8560 42.10 4.4290 3 223.0 18.60 388.65 13.00 21.10 0.16211 20.00 6.960 0 0.4640 6.2400 16.30 4.4290 3 223.0 18.60 396.90 6.59 25.20 0.11460 20.00 6.960 0 0.4640 6.5380 58.70 3.9175 3 223.0 18.60 394.96 7.73 24.40 0.22188 20.00 6.960 1 0.4640 7.6910 51.80 4.3665 3 223.0 18.60 390.77 6.58 35.20 0.05644 40.00 6.410 1 0.4470 6.7580 32.90 4.0776 4 254.0 17.60 396.90 3.53 32.40 0.09604 40.00 6.410 0 0.4470 6.8540 42.80 4.2673 4 254.0 17.60 396.90 2.98 32.00 0.10469 40.00 6.410 1 0.4470 7.2670 49.00 4.7872 4 254.0 17.60 389.25 6.05 33.20 0.06127 40.00 6.410 1 0.4470 6.8260 27.60 4.8628 4 254.0 17.60 393.45 4.16 33.10 0.07978 40.00 6.410 0 0.4470 6.4820 32.10 4.1403 4 254.0 17.60 396.90 7.19 29.10 0.21038 20.00 3.330 0 0.4429 6.8120 32.20 4.1007 5 216.0 14.90 396.90 4.85 35.10 0.03578 20.00 3.330 0 0.4429 7.8200 64.50 4.6947 5 216.0 14.90 387.31 3.76 45.40 0.03705 20.00 3.330 0 0.4429 6.9680 37.20 5.2447 5 216.0 14.90 392.23 4.59 35.40 0.06129 20.00 3.330 1 0.4429 7.6450 49.70 5.2119 5 216.0 14.90 377.07 3.01 46.00 0.01501 90.00 1.210 1 0.4010 7.9230 24.80 5.8850 1 198.0 13.60 395.52 3.16 50.00 0.00906 90.00 2.970 0 0.4000 7.0880 20.80 7.3073 1 285.0 15.30 394.72 7.85 32.20 0.01096 55.00 2.250 0 0.3890 6.4530 31.90 7.3073 1 300.0 15.30 394.72 8.23 22.00 0.01965 80.00 1.760 0 0.3850 6.2300 31.50 9.0892 1 241.0 18.20 341.60 12.93 20.10 0.03871 52.50 5.320 0 0.4050 6.2090 31.30 7.3172 6 293.0 16.60 396.90 7.14 23.20 0.04590 52.50 5.320 0 0.4050 6.3150 45.60 7.3172 6 293.0 16.60 396.90 7.60 22.30 0.04297 52.50 5.320 0 0.4050 6.5650 22.90 7.3172 6 293.0 16.60 371.72 9.51 24.80 0.03502 80.00 4.950 0 0.4110 6.8610 27.90 5.1167 4 245.0 19.20 396.90 3.33 28.50 0.07886 80.00 4.950 0 0.4110 7.1480 27.70 5.1167 4 245.0 19.20 396.90 3.56 37.30 0.03615 80.00 4.950 0 0.4110 6.6300 23.40 5.1167 4 245.0 19.20 396.90 4.70 27.90 0.08265 0.00 13.920 0 0.4370 6.1270 18.40 5.5027 4 289.0 16.00 396.90 8.58 23.90 0.08199 0.00 13.920 0 0.4370 6.0090 42.30 5.5027 4 289.0 16.00 396.90 10.40 21.70 0.12932 0.00 13.920 0 0.4370 6.6780 31.10 5.9604 4 289.0 16.00 396.90 6.27 28.60 0.05372 0.00 13.920 0 0.4370 6.5490 51.00 5.9604 4 289.0 16.00 392.85 7.39 27.10 0.14103 0.00 13.920 0 0.4370 5.7900 58.00 6.3200 4 289.0 16.00 396.90 15.84 20.30 0.06466 70.00 2.240 0 0.4000 6.3450 20.10 7.8278 5 358.0 14.80 368.24 4.97 22.50 0.05561 70.00 2.240 0 0.4000 7.0410 10.00 7.8278 5 358.0 14.80 371.58 4.74 29.00 0.04417 70.00 2.240 0 0.4000 6.8710 47.40 7.8278 5 358.0 14.80 390.86 6.07 24.80 0.03537 34.00 6.090 0 0.4330 6.5900 40.40 5.4917 7 329.0 16.10 395.75 9.50 22.00 0.09266 34.00 6.090 0 0.4330 6.4950 18.40 5.4917 7 329.0 16.10 383.61 8.67 26.40 0.10000 34.00 6.090 0 0.4330 6.9820 17.70 5.4917 7 329.0 16.10 390.43 4.86 33.10 0.05515 33.00 2.180 0 0.4720 7.2360 41.10 4.0220 7 222.0 18.40 393.68 6.93 36.10 0.05479 33.00 2.180 0 0.4720 6.6160 58.10 3.3700 7 222.0 18.40 393.36 8.93 28.40 0.07503 33.00 2.180 0 0.4720 7.4200 71.90 3.0992 7 222.0 18.40 396.90 6.47 33.40 0.04932 33.00 2.180 0 0.4720 6.8490 70.30 3.1827 7 222.0 18.40 396.90 7.53 28.20 0.49298 0.00 9.900 0 0.5440 6.6350 82.50 3.3175 4 304.0 18.40 396.90 4.54 22.80 0.34940 0.00 9.900 0 0.5440 5.9720 76.70 3.1025 4 304.0 18.40 396.24 9.97 20.30 2.63548 0.00 9.900 0 0.5440 4.9730 37.80 2.5194 4 304.0 18.40 350.45 12.64 16.10 0.79041 0.00 9.900 0 0.5440 6.1220 52.80 2.6403 4 304.0 18.40 396.90 5.98 22.10 0.26169 0.00 9.900 0 0.5440 6.0230 90.40 2.8340 4 304.0 18.40 396.30 11.72 19.40 0.26938 0.00 9.900 0 0.5440 6.2660 82.80 3.2628 4 304.0 18.40 393.39 7.90 21.60 0.36920 0.00 9.900 0 0.5440 6.5670 87.30 3.6023 4 304.0 18.40 395.69 9.28 23.80 0.25356 0.00 9.900 0 0.5440 5.7050 77.70 3.9450 4 304.0 18.40 396.42 11.50 16.20 0.31827 0.00 9.900 0 0.5440 5.9140 83.20 3.9986 4 304.0 18.40 390.70 18.33 17.80 0.24522 0.00 9.900 0 0.5440 5.7820 71.70 4.0317 4 304.0 18.40 396.90 15.94 19.80 0.40202 0.00 9.900 0 0.5440 6.3820 67.20 3.5325 4 304.0 18.40 395.21 10.36 23.10 0.47547 0.00 9.900 0 0.5440 6.1130 58.80 4.0019 4 304.0 18.40 396.23 12.73 21.00 0.16760 0.00 7.380 0 0.4930 6.4260 52.30 4.5404 5 287.0 19.60 396.90 7.20 23.80 0.18159 0.00 7.380 0 0.4930 6.3760 54.30 4.5404 5 287.0 19.60 396.90 6.87 23.10 0.35114 0.00 7.380 0 0.4930 6.0410 49.90 4.7211 5 287.0 19.60 396.90 7.70 20.40 0.28392 0.00 7.380 0 0.4930 5.7080 74.30 4.7211 5 287.0 19.60 391.13 11.74 18.50 0.34109 0.00 7.380 0 0.4930 6.4150 40.10 4.7211 5 287.0 19.60 396.90 6.12 25.00 0.19186 0.00 7.380 0 0.4930 6.4310 14.70 5.4159 5 287.0 19.60 393.68 5.08 24.60 0.30347 0.00 7.380 0 0.4930 6.3120 28.90 5.4159 5 287.0 19.60 396.90 6.15 23.00 0.24103 0.00 7.380 0 0.4930 6.0830 43.70 5.4159 5 287.0 19.60 396.90 12.79 22.20 0.06617 0.00 3.240 0 0.4600 5.8680 25.80 5.2146 4 430.0 16.90 382.44 9.97 19.30 0.06724 0.00 3.240 0 0.4600 6.3330 17.20 5.2146 4 430.0 16.90 375.21 7.34 22.60 0.04544 0.00 3.240 0 0.4600 6.1440 32.20 5.8736 4 430.0 16.90 368.57 9.09 19.80 0.05023 35.00 6.060 0 0.4379 5.7060 28.40 6.6407 1 304.0 16.90 394.02 12.43 17.10 0.03466 35.00 6.060 0 0.4379 6.0310 23.30 6.6407 1 304.0 16.90 362.25 7.83 19.40 0.05083 0.00 5.190 0 0.5150 6.3160 38.10 6.4584 5 224.0 20.20 389.71 5.68 22.20 0.03738 0.00 5.190 0 0.5150 6.3100 38.50 6.4584 5 224.0 20.20 389.40 6.75 20.70 0.03961 0.00 5.190 0 0.5150 6.0370 34.50 5.9853 5 224.0 20.20 396.90 8.01 21.10 0.03427 0.00 5.190 0 0.5150 5.8690 46.30 5.2311 5 224.0 20.20 396.90 9.80 19.50 0.03041 0.00 5.190 0 0.5150 5.8950 59.60 5.6150 5 224.0 20.20 394.81 10.56 18.50 0.03306 0.00 5.190 0 0.5150 6.0590 37.30 4.8122 5 224.0 20.20 396.14 8.51 20.60 0.05497 0.00 5.190 0 0.5150 5.9850 45.40 4.8122 5 224.0 20.20 396.90 9.74 19.00 0.06151 0.00 5.190 0 0.5150 5.9680 58.50 4.8122 5 224.0 20.20 396.90 9.29 18.70 0.01301 35.00 1.520 0 0.4420 7.2410 49.30 7.0379 1 284.0 15.50 394.74 5.49 32.70 0.02498 0.00 1.890 0 0.5180 6.5400 59.70 6.2669 1 422.0 15.90 389.96 8.65 16.50 0.02543 55.00 3.780 0 0.4840 6.6960 56.40 5.7321 5 370.0 17.60 396.90 7.18 23.90 0.03049 55.00 3.780 0 0.4840 6.8740 28.10 6.4654 5 370.0 17.60 387.97 4.61 31.20 0.03113 0.00 4.390 0 0.4420 6.0140 48.50 8.0136 3 352.0 18.80 385.64 10.53 17.50 0.06162 0.00 4.390 0 0.4420 5.8980 52.30 8.0136 3 352.0 18.80 364.61 12.67 17.20 0.01870 85.00 4.150 0 0.4290 6.5160 27.70 8.5353 4 351.0 17.90 392.43 6.36 23.10 0.01501 80.00 2.010 0 0.4350 6.6350 29.70 8.3440 4 280.0 17.00 390.94 5.99 24.50 0.02899 40.00 1.250 0 0.4290 6.9390 34.50 8.7921 1 335.0 19.70 389.85 5.89 26.60 0.06211 40.00 1.250 0 0.4290 6.4900 44.40 8.7921 1 335.0 19.70 396.90 5.98 22.90 0.07950 60.00 1.690 0 0.4110 6.5790 35.90 10.7103 4 411.0 18.30 370.78 5.49 24.10 0.07244 60.00 1.690 0 0.4110 5.8840 18.50 10.7103 4 411.0 18.30 392.33 7.79 18.60 0.01709 90.00 2.020 0 0.4100 6.7280 36.10 12.1265 5 187.0 17.00 384.46 4.50 30.10 0.04301 80.00 1.910 0 0.4130 5.6630 21.90 10.5857 4 334.0 22.00 382.80 8.05 18.20 0.10659 80.00 1.910 0 0.4130 5.9360 19.50 10.5857 4 334.0 22.00 376.04 5.57 20.60 8.98296 0.00 18.100 1 0.7700 6.2120 97.40 2.1222 24 666.0 20.20 377.73 17.60 17.80 3.84970 0.00 18.100 1 0.7700 6.3950 91.00 2.5052 24 666.0 20.20 391.34 13.27 21.70 5.20177 0.00 18.100 1 0.7700 6.1270 83.40 2.7227 24 666.0 20.20 395.43 11.48 22.70 4.26131 0.00 18.100 0 0.7700 6.1120 81.30 2.5091 24 666.0 20.20 390.74 12.67 22.60 4.54192 0.00 18.100 0 0.7700 6.3980 88.00 2.5182 24 666.0 20.20 374.56 7.79 25.00 3.83684 0.00 18.100 0 0.7700 6.2510 91.10 2.2955 24 666.0 20.20 350.65 14.19 19.90 3.67822 0.00 18.100 0 0.7700 5.3620 96.20 2.1036 24 666.0 20.20 380.79 10.19 20.80 4.22239 0.00 18.100 1 0.7700 5.8030 89.00 1.9047 24 666.0 20.20 353.04 14.64 16.80 3.47428 0.00 18.100 1 0.7180 8.7800 82.90 1.9047 24 666.0 20.20 354.55 5.29 21.90 4.55587 0.00 18.100 0 0.7180 3.5610 87.90 1.6132 24 666.0 20.20 354.70 7.12 27.50 3.69695 0.00 18.100 0 0.7180 4.9630 91.40 1.7523 24 666.0 20.20 316.03 14.00 21.90 13.52220 0.00 18.100 0 0.6310 3.8630 100.00 1.5106 24 666.0 20.20 131.42 13.33 23.10 4.89822 0.00 18.100 0 0.6310 4.9700 100.00 1.3325 24 666.0 20.20 375.52 3.26 50.00 5.66998 0.00 18.100 1 0.6310 6.6830 96.80 1.3567 24 666.0 20.20 375.33 3.73 50.00 6.53876 0.00 18.100 1 0.6310 7.0160 97.50 1.2024 24 666.0 20.20 392.05 2.96 50.00 9.23230 0.00 18.100 0 0.6310 6.2160 100.00 1.1691 24 666.0 20.20 366.15 9.53 50.00 8.26725 0.00 18.100 1 0.6680 5.8750 89.60 1.1296 24 666.0 20.20 347.88 8.88 50.00 11.10810 0.00 18.100 0 0.6680 4.9060 100.00 1.1742 24 666.0 20.20 396.90 34.77 13.80 18.49820 0.00 18.100 0 0.6680 4.1380 100.00 1.1370 24 666.0 20.20 396.90 37.97 13.80 19.60910 0.00 18.100 0 0.6710 7.3130 97.90 1.3163 24 666.0 20.20 396.90 13.44 15.00 15.28800 0.00 18.100 0 0.6710 6.6490 93.30 1.3449 24 666.0 20.20 363.02 23.24 13.90 9.82349 0.00 18.100 0 0.6710 6.7940 98.80 1.3580 24 666.0 20.20 396.90 21.24 13.30 23.64820 0.00 18.100 0 0.6710 6.3800 96.20 1.3861 24 666.0 20.20 396.90 23.69 13.10 17.86670 0.00 18.100 0 0.6710 6.2230 100.00 1.3861 24 666.0 20.20 393.74 21.78 10.20 88.97620 0.00 18.100 0 0.6710 6.9680 91.90 1.4165 24 666.0 20.20 396.90 17.21 10.40 15.87440 0.00 18.100 0 0.6710 6.5450 99.10 1.5192 24 666.0 20.20 396.90 21.08 10.90 9.18702 0.00 18.100 0 0.7000 5.5360 100.00 1.5804 24 666.0 20.20 396.90 23.60 11.30 7.99248 0.00 18.100 0 0.7000 5.5200 100.00 1.5331 24 666.0 20.20 396.90 24.56 12.30 20.08490 0.00 18.100 0 0.7000 4.3680 91.20 1.4395 24 666.0 20.20 285.83 30.63 8.80 16.81180 0.00 18.100 0 0.7000 5.2770 98.10 1.4261 24 666.0 20.20 396.90 30.81 7.20 24.39380 0.00 18.100 0 0.7000 4.6520 100.00 1.4672 24 666.0 20.20 396.90 28.28 10.50 22.59710 0.00 18.100 0 0.7000 5.0000 89.50 1.5184 24 666.0 20.20 396.90 31.99 7.40 14.33370 0.00 18.100 0 0.7000 4.8800 100.00 1.5895 24 666.0 20.20 372.92 30.62 10.20 8.15174 0.00 18.100 0 0.7000 5.3900 98.90 1.7281 24 666.0 20.20 396.90 20.85 11.50 6.96215 0.00 18.100 0 0.7000 5.7130 97.00 1.9265 24 666.0 20.20 394.43 17.11 15.10 5.29305 0.00 18.100 0 0.7000 6.0510 82.50 2.1678 24 666.0 20.20 378.38 18.76 23.20 11.57790 0.00 18.100 0 0.7000 5.0360 97.00 1.7700 24 666.0 20.20 396.90 25.68 9.70 8.64476 0.00 18.100 0 0.6930 6.1930 92.60 1.7912 24 666.0 20.20 396.90 15.17 13.80 13.35980 0.00 18.100 0 0.6930 5.8870 94.70 1.7821 24 666.0 20.20 396.90 16.35 12.70 8.71675 0.00 18.100 0 0.6930 6.4710 98.80 1.7257 24 666.0 20.20 391.98 17.12 13.10 5.87205 0.00 18.100 0 0.6930 6.4050 96.00 1.6768 24 666.0 20.20 396.90 19.37 12.50 7.67202 0.00 18.100 0 0.6930 5.7470 98.90 1.6334 24 666.0 20.20 393.10 19.92 8.50 38.35180 0.00 18.100 0 0.6930 5.4530 100.00 1.4896 24 666.0 20.20 396.90 30.59 5.00 9.91655 0.00 18.100 0 0.6930 5.8520 77.80 1.5004 24 666.0 20.20 338.16 29.97 6.30 25.04610 0.00 18.100 0 0.6930 5.9870 100.00 1.5888 24 666.0 20.20 396.90 26.77 5.60 14.23620 0.00 18.100 0 0.6930 6.3430 100.00 1.5741 24 666.0 20.20 396.90 20.32 7.20 9.59571 0.00 18.100 0 0.6930 6.4040 100.00 1.6390 24 666.0 20.20 376.11 20.31 12.10 24.80170 0.00 18.100 0 0.6930 5.3490 96.00 1.7028 24 666.0 20.20 396.90 19.77 8.30 41.52920 0.00 18.100 0 0.6930 5.5310 85.40 1.6074 24 666.0 20.20 329.46 27.38 8.50 67.92080 0.00 18.100 0 0.6930 5.6830 100.00 1.4254 24 666.0 20.20 384.97 22.98 5.00 20.71620 0.00 18.100 0 0.6590 4.1380 100.00 1.1781 24 666.0 20.20 370.22 23.34 11.90 11.95110 0.00 18.100 0 0.6590 5.6080 100.00 1.2852 24 666.0 20.20 332.09 12.13 27.90 7.40389 0.00 18.100 0 0.5970 5.6170 97.90 1.4547 24 666.0 20.20 314.64 26.40 17.20 14.43830 0.00 18.100 0 0.5970 6.8520 100.00 1.4655 24 666.0 20.20 179.36 19.78 27.50 51.13580 0.00 18.100 0 0.5970 5.7570 100.00 1.4130 24 666.0 20.20 2.60 10.11 15.00 14.05070 0.00 18.100 0 0.5970 6.6570 100.00 1.5275 24 666.0 20.20 35.05 21.22 17.20 18.81100 0.00 18.100 0 0.5970 4.6280 100.00 1.5539 24 666.0 20.20 28.79 34.37 17.90 28.65580 0.00 18.100 0 0.5970 5.1550 100.00 1.5894 24 666.0 20.20 210.97 20.08 16.30 45.74610 0.00 18.100 0 0.6930 4.5190 100.00 1.6582 24 666.0 20.20 88.27 36.98 7.00 18.08460 0.00 18.100 0 0.6790 6.4340 100.00 1.8347 24 666.0 20.20 27.25 29.05 7.20 10.83420 0.00 18.100 0 0.6790 6.7820 90.80 1.8195 24 666.0 20.20 21.57 25.79 7.50 25.94060 0.00 18.100 0 0.6790 5.3040 89.10 1.6475 24 666.0 20.20 127.36 26.64 10.40 73.53410 0.00 18.100 0 0.6790 5.9570 100.00 1.8026 24 666.0 20.20 16.45 20.62 8.80 11.81230 0.00 18.100 0 0.7180 6.8240 76.50 1.7940 24 666.0 20.20 48.45 22.74 8.40 11.08740 0.00 18.100 0 0.7180 6.4110 100.00 1.8589 24 666.0 20.20 318.75 15.02 16.70 7.02259 0.00 18.100 0 0.7180 6.0060 95.30 1.8746 24 666.0 20.20 319.98 15.70 14.20 12.04820 0.00 18.100 0 0.6140 5.6480 87.60 1.9512 24 666.0 20.20 291.55 14.10 20.80 7.05042 0.00 18.100 0 0.6140 6.1030 85.10 2.0218 24 666.0 20.20 2.52 23.29 13.40 8.79212 0.00 18.100 0 0.5840 5.5650 70.60 2.0635 24 666.0 20.20 3.65 17.16 11.70 15.86030 0.00 18.100 0 0.6790 5.8960 95.40 1.9096 24 666.0 20.20 7.68 24.39 8.30 12.24720 0.00 18.100 0 0.5840 5.8370 59.70 1.9976 24 666.0 20.20 24.65 15.69 10.20 37.66190 0.00 18.100 0 0.6790 6.2020 78.70 1.8629 24 666.0 20.20 18.82 14.52 10.90 7.36711 0.00 18.100 0 0.6790 6.1930 78.10 1.9356 24 666.0 20.20 96.73 21.52 11.00 9.33889 0.00 18.100 0 0.6790 6.3800 95.60 1.9682 24 666.0 20.20 60.72 24.08 9.50 8.49213 0.00 18.100 0 0.5840 6.3480 86.10 2.0527 24 666.0 20.20 83.45 17.64 14.50 10.06230 0.00 18.100 0 0.5840 6.8330 94.30 2.0882 24 666.0 20.20 81.33 19.69 14.10 6.44405 0.00 18.100 0 0.5840 6.4250 74.80 2.2004 24 666.0 20.20 97.95 12.03 16.10 5.58107 0.00 18.100 0 0.7130 6.4360 87.90 2.3158 24 666.0 20.20 100.19 16.22 14.30 13.91340 0.00 18.100 0 0.7130 6.2080 95.00 2.2222 24 666.0 20.20 100.63 15.17 11.70 11.16040 0.00 18.100 0 0.7400 6.6290 94.60 2.1247 24 666.0 20.20 109.85 23.27 13.40 14.42080 0.00 18.100 0 0.7400 6.4610 93.30 2.0026 24 666.0 20.20 27.49 18.05 9.60 15.17720 0.00 18.100 0 0.7400 6.1520 100.00 1.9142 24 666.0 20.20 9.32 26.45 8.70 13.67810 0.00 18.100 0 0.7400 5.9350 87.90 1.8206 24 666.0 20.20 68.95 34.02 8.40 9.39063 0.00 18.100 0 0.7400 5.6270 93.90 1.8172 24 666.0 20.20 396.90 22.88 12.80 22.05110 0.00 18.100 0 0.7400 5.8180 92.40 1.8662 24 666.0 20.20 391.45 22.11 10.50 9.72418 0.00 18.100 0 0.7400 6.4060 97.20 2.0651 24 666.0 20.20 385.96 19.52 17.10 5.66637 0.00 18.100 0 0.7400 6.2190 100.00 2.0048 24 666.0 20.20 395.69 16.59 18.40 9.96654 0.00 18.100 0 0.7400 6.4850 100.00 1.9784 24 666.0 20.20 386.73 18.85 15.40 12.80230 0.00 18.100 0 0.7400 5.8540 96.60 1.8956 24 666.0 20.20 240.52 23.79 10.80 10.67180 0.00 18.100 0 0.7400 6.4590 94.80 1.9879 24 666.0 20.20 43.06 23.98 11.80 6.28807 0.00 18.100 0 0.7400 6.3410 96.40 2.0720 24 666.0 20.20 318.01 17.79 14.90 9.92485 0.00 18.100 0 0.7400 6.2510 96.60 2.1980 24 666.0 20.20 388.52 16.44 12.60 9.32909 0.00 18.100 0 0.7130 6.1850 98.70 2.2616 24 666.0 20.20 396.90 18.13 14.10 7.52601 0.00 18.100 0 0.7130 6.4170 98.30 2.1850 24 666.0 20.20 304.21 19.31 13.00 6.71772 0.00 18.100 0 0.7130 6.7490 92.60 2.3236 24 666.0 20.20 0.32 17.44 13.40 5.44114 0.00 18.100 0 0.7130 6.6550 98.20 2.3552 24 666.0 20.20 355.29 17.73 15.20 5.09017 0.00 18.100 0 0.7130 6.2970 91.80 2.3682 24 666.0 20.20 385.09 17.27 16.10 8.24809 0.00 18.100 0 0.7130 7.3930 99.30 2.4527 24 666.0 20.20 375.87 16.74 17.80 9.51363 0.00 18.100 0 0.7130 6.7280 94.10 2.4961 24 666.0 20.20 6.68 18.71 14.90 4.75237 0.00 18.100 0 0.7130 6.5250 86.50 2.4358 24 666.0 20.20 50.92 18.13 14.10 4.66883 0.00 18.100 0 0.7130 5.9760 87.90 2.5806 24 666.0 20.20 10.48 19.01 12.70 8.20058 0.00 18.100 0 0.7130 5.9360 80.30 2.7792 24 666.0 20.20 3.50 16.94 13.50 7.75223 0.00 18.100 0 0.7130 6.3010 83.70 2.7831 24 666.0 20.20 272.21 16.23 14.90 6.80117 0.00 18.100 0 0.7130 6.0810 84.40 2.7175 24 666.0 20.20 396.90 14.70 20.00 4.81213 0.00 18.100 0 0.7130 6.7010 90.00 2.5975 24 666.0 20.20 255.23 16.42 16.40 3.69311 0.00 18.100 0 0.7130 6.3760 88.40 2.5671 24 666.0 20.20 391.43 14.65 17.70 6.65492 0.00 18.100 0 0.7130 6.3170 83.00 2.7344 24 666.0 20.20 396.90 13.99 19.50 5.82115 0.00 18.100 0 0.7130 6.5130 89.90 2.8016 24 666.0 20.20 393.82 10.29 20.20 7.83932 0.00 18.100 0 0.6550 6.2090 65.40 2.9634 24 666.0 20.20 396.90 13.22 21.40 3.16360 0.00 18.100 0 0.6550 5.7590 48.20 3.0665 24 666.0 20.20 334.40 14.13 19.90 3.77498 0.00 18.100 0 0.6550 5.9520 84.70 2.8715 24 666.0 20.20 22.01 17.15 19.00 4.42228 0.00 18.100 0 0.5840 6.0030 94.50 2.5403 24 666.0 20.20 331.29 21.32 19.10 15.57570 0.00 18.100 0 0.5800 5.9260 71.00 2.9084 24 666.0 20.20 368.74 18.13 19.10 13.07510 0.00 18.100 0 0.5800 5.7130 56.70 2.8237 24 666.0 20.20 396.90 14.76 20.10 4.34879 0.00 18.100 0 0.5800 6.1670 84.00 3.0334 24 666.0 20.20 396.90 16.29 19.90 4.03841 0.00 18.100 0 0.5320 6.2290 90.70 3.0993 24 666.0 20.20 395.33 12.87 19.60 3.56868 0.00 18.100 0 0.5800 6.4370 75.00 2.8965 24 666.0 20.20 393.37 14.36 23.20 4.64689 0.00 18.100 0 0.6140 6.9800 67.60 2.5329 24 666.0 20.20 374.68 11.66 29.80 8.05579 0.00 18.100 0 0.5840 5.4270 95.40 2.4298 24 666.0 20.20 352.58 18.14 13.80 6.39312 0.00 18.100 0 0.5840 6.1620 97.40 2.2060 24 666.0 20.20 302.76 24.10 13.30 4.87141 0.00 18.100 0 0.6140 6.4840 93.60 2.3053 24 666.0 20.20 396.21 18.68 16.70 15.02340 0.00 18.100 0 0.6140 5.3040 97.30 2.1007 24 666.0 20.20 349.48 24.91 12.00 10.23300 0.00 18.100 0 0.6140 6.1850 96.70 2.1705 24 666.0 20.20 379.70 18.03 14.60 14.33370 0.00 18.100 0 0.6140 6.2290 88.00 1.9512 24 666.0 20.20 383.32 13.11 21.40 5.82401 0.00 18.100 0 0.5320 6.2420 64.70 3.4242 24 666.0 20.20 396.90 10.74 23.00 5.70818 0.00 18.100 0 0.5320 6.7500 74.90 3.3317 24 666.0 20.20 393.07 7.74 23.70 5.73116 0.00 18.100 0 0.5320 7.0610 77.00 3.4106 24 666.0 20.20 395.28 7.01 25.00 2.81838 0.00 18.100 0 0.5320 5.7620 40.30 4.0983 24 666.0 20.20 392.92 10.42 21.80 2.37857 0.00 18.100 0 0.5830 5.8710 41.90 3.7240 24 666.0 20.20 370.73 13.34 20.60 3.67367 0.00 18.100 0 0.5830 6.3120 51.90 3.9917 24 666.0 20.20 388.62 10.58 21.20 5.69175 0.00 18.100 0 0.5830 6.1140 79.80 3.5459 24 666.0 20.20 392.68 14.98 19.10 4.83567 0.00 18.100 0 0.5830 5.9050 53.20 3.1523 24 666.0 20.20 388.22 11.45 20.60 0.15086 0.00 27.740 0 0.6090 5.4540 92.70 1.8209 4 711.0 20.10 395.09 18.06 15.20 0.18337 0.00 27.740 0 0.6090 5.4140 98.30 1.7554 4 711.0 20.10 344.05 23.97 7.00 0.20746 0.00 27.740 0 0.6090 5.0930 98.00 1.8226 4 711.0 20.10 318.43 29.68 8.10 0.10574 0.00 27.740 0 0.6090 5.9830 98.80 1.8681 4 711.0 20.10 390.11 18.07 13.60 0.11132 0.00 27.740 0 0.6090 5.9830 83.50 2.1099 4 711.0 20.10 396.90 13.35 20.10 0.17331 0.00 9.690 0 0.5850 5.7070 54.00 2.3817 6 391.0 19.20 396.90 12.01 21.80 0.27957 0.00 9.690 0 0.5850 5.9260 42.60 2.3817 6 391.0 19.20 396.90 13.59 24.50 0.17899 0.00 9.690 0 0.5850 5.6700 28.80 2.7986 6 391.0 19.20 393.29 17.60 23.10 0.28960 0.00 9.690 0 0.5850 5.3900 72.90 2.7986 6 391.0 19.20 396.90 21.14 19.70 0.26838 0.00 9.690 0 0.5850 5.7940 70.60 2.8927 6 391.0 19.20 396.90 14.10 18.30 0.23912 0.00 9.690 0 0.5850 6.0190 65.30 2.4091 6 391.0 19.20 396.90 12.92 21.20 0.17783 0.00 9.690 0 0.5850 5.5690 73.50 2.3999 6 391.0 19.20 395.77 15.10 17.50 0.22438 0.00 9.690 0 0.5850 6.0270 79.70 2.4982 6 391.0 19.20 396.90 14.33 16.80 0.06263 0.00 11.930 0 0.5730 6.5930 69.10 2.4786 1 273.0 21.00 391.99 9.67 22.40 0.04527 0.00 11.930 0 0.5730 6.1200 76.70 2.2875 1 273.0 21.00 396.90 9.08 20.60 0.06076 0.00 11.930 0 0.5730 6.9760 91.00 2.1675 1 273.0 21.00 396.90 5.64 23.90 0.10959 0.00 11.930 0 0.5730 6.7940 89.30 2.3889 1 273.0 21.00 393.45 6.48 22.00 0.04741 0.00 11.930 0 0.5730 6.0300 80.80 2.5050 1 273.0 21.00 396.90 7.88 11.90

二,开发基线神经网络模型

在本节中,我们将为回归问题创建基线神经网络模型。

1,导入所需的库

让我们从包含本文所需的所有函数和对象开始。

import numpy import pandas from keras.models import Sequential from keras.layers import Dense from keras.wrappers.scikit_learn import KerasRegressor from sklearn.model_selection import cross_val_score from sklearn.model_selection import KFold from sklearn.preprocessing import StandardScaler from sklearn.pipeline import Pipeline

2,加载数据集

我们现在可以从本地目录中的文件加载数据集。

事实上,数据集在UCI机器学习库中不是CSV格式,而是用空格分隔属性。我们可以使用pandas库轻松加载它。然后我们可以分割输入(X)和输出(Y)属性,以便使用Keras和scikit-learn更容易建模。

# load dataset

dataframe = pandas.read_csv("housing.csv", delim_whitespace=True, header=None)

dataset = dataframe.values

# split into input (X) and output (Y) variables

X = dataset[:,0:13]

Y = dataset[:,13]

当然了,我们可以直接导入sklearn中的Boston数据集

boston = datasets.load_Boston() # 导入数据集 X = boston.data # 获得其特征向量 y = boston.target # 获得样本label

3,创建评估的神经网络模型

我们可以使用Keras库提供的方便的包装器对象创建Keras模型并使用scikit-learn来评估它们。这是可取的,因为scikit-learn在评估模型方面表现出色,并且允许我们使用强大的数据准备和模型评估方案,只需很少的代码。

Keras包装器需要一个函数作为参数。我们必须定义的这个函数负责创建要评估的神经网络模型。

下面我们定义用于创建要评估的基线模型的函数。这是一个简单的模型,它有一个完全连接的隐藏层,与输入属性具有相同数量的神经元(13)。网络使用诸如隐藏层的整流器激活功能之类的良好实践。没有激活函数用于输出层,因为它是一个回归问题,我们有兴趣直接预测数值而不进行转换。

使用有效的ADAM优化算法并且优化均方误差损失函数。这将与我们用于评估模型性能的指标相同。这是一个理想的指标,因为通过取平方根给出了我们可以在问题的上下文中直接理解的错误值(数千美元)。

# define base model def baseline_model(): # create model model = Sequential() model.add(Dense(13, input_dim=13, kernel_initializer='normal', activation='relu')) model.add(Dense(1, kernel_initializer='normal')) # Compile model model.compile(loss='mean_squared_error', optimizer='adam') return model

在 scikit-learn 库中用作回归计算估计器的 Keras 封装对象名为 KerasRegressor。我们创建一个 KerasRegressor对象实例,并将创建神经网络模型的函数名称,以及一些稍后传递给模型 fit( ) 函数的参数,比如最大训练次数,每批数据的大小等。两者都被设置为合理的默认值。

对于sklearn不了解的可以参考小编的博客:Python机器学习笔记:sklearn库的学习

我们还使用常量随机种子初始化随机数生成器,我们将为本教程中评估的每个模型重复该过程(相同的随机数)。这是为了确保我们始终如一的比较模型。

# fix random seed for reproducibility seed = 7 numpy.random.seed(seed) # evaluate model with standardized dataset estimator = KerasRegressor(build_fn=baseline_model, epochs=100, batch_size=5, verbose=0)

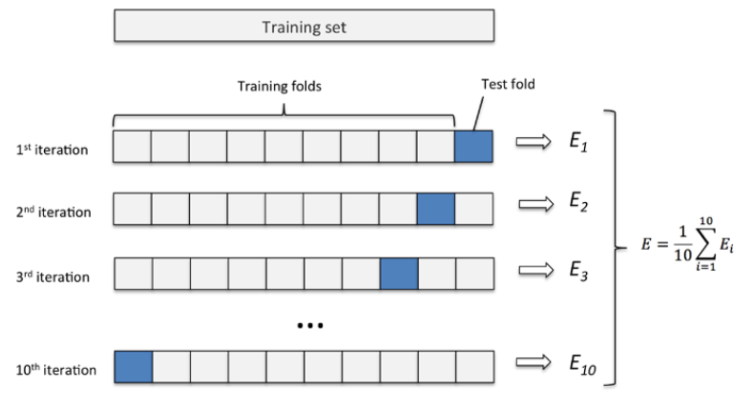

最后一步是评估此基线模型。我们将使用10倍交叉验证来评估模型。

4,评估所创建的神经网络模型

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(estimator, X, Y, cv=kfold)

print("Results: %.2f (%.2f) MSE" % (results.mean(), results.std()))

运行此代码可以估算出模型在看不见的数据问题上的表现。结果报告均方误差,包括交叉验证评估的所有10倍的平均值和标准偏差(平均方差)

Baseline: 31.64 (26.82) MSE

5(补充):交叉验证的学习

1,导入k折交叉验证模块

from sklearn.cross_validation import cross_val_score

2,交叉验证的思想

把某种意义下将原始数据(dataset)进行分组,一部分作为训练集(train set),另一部分作为验证集(validation set or test set),首先用训练集对分类器进行训练,再利用验证集来测试训练得到的模型(model),以此来作为评价分类器的性能指标。

3,为什么使用交叉验证法

- 交叉验证用于评估模型的预测性能,尤其是训练好的模型在新数据上的表现,可以在一定程序熵减少过拟合。

- 交叉验证还可以从有限的数据中获取尽可能多的有效信息

4,主要有哪些方法

1,留出法(holdout cross validation)

在机器学习任务中,拿到数据后,我们首先会将原始数据集分为三部分:训练集,验证集和测试集。

训练集用于训练模型,验证集用于模型的参数选择配置,测试集对于模型来说是未知数据,用于评估模型的泛化能力。

这个方法操作简单,只需要随机将原始数据分为三组即可。

不过如果只做一次分割,它对训练集,验证集和测试机的样本比例,还有分割后数据的分布是否和原始数据集的分布相同等因素比较敏感,不同的划分会得到不同的最优模型,,而且分成三个集合后,用于训练的数据更少了。于是又了2.k折交叉验证(k-fold cross validation).

下面例子,一共有150条数据:

>>> import numpy as np >>> from sklearn.model_selection import train_test_split >>> from sklearn import datasets >>> from sklearn import svm >>> iris = datasets.load_iris() >>> iris.data.shape, iris.target.shape ((150, 4), (150,))

用train_test_split来随机划分数据集,其中40%用于测试集,有60条数据,60%为训练集,有90条数据:

>>> X_train, X_test, y_train, y_test = train_test_split( ... iris.data, iris.target, test_size=0.4, random_state=0) >>> X_train.shape, y_train.shape ((90, 4), (90,)) >>> X_test.shape, y_test.shape ((60, 4), (60,))

用train来训练,用test来评价模型的分数。

>>> clf = svm.SVC(kernel='linear', C=1).fit(X_train, y_train) >>> clf.score(X_test, y_test) 0.96...

2,2. k 折交叉验证(k-fold cross validation)

K折交叉验证通过对k个不同分组训练的结果进行平均来减少方差,因此模型的性能对数据的划分就不那么敏感。

- 第一步,不重复抽样将原始数据随机分为 k 份。

- 第二步,每一次挑选其中 1 份作为测试集,剩余 k-1 份作为训练集用于模型训练。

- 第三步,重复第二步 k 次,这样每个子集都有一次机会作为测试集,其余机会作为训练集。

- 在每个训练集上训练后得到一个模型,

- 用这个模型在相应的测试集上测试,计算并保存模型的评估指标,

- 第四步,计算 k 组测试结果的平均值作为模型精度的估计,并作为当前 k 折交叉验证下模型的性能指标。

K一般取10,数据量小的是,k可以设大一点,这样训练集占整体比例就比较大,不过同时训练的模型个数也增多。数据量大的时候,k可以设置小一点。当k=m的时候,即样本总数,出现了留一法。

举例,这里直接调用了cross_val_score,这里用了5折交叉验证

>>> from sklearn.model_selection import cross_val_score >>> clf = svm.SVC(kernel='linear', C=1) >>> scores = cross_val_score(clf, iris.data, iris.target, cv=5) >>> scores array([ 0.96..., 1. ..., 0.96..., 0.96..., 1. ])

得到最后平均分数为0.98,以及它的95%置信区间:

>>> print("Accuracy: %0.2f (+/- %0.2f)" % (scores.mean(), scores.std() * 2))

Accuracy: 0.98 (+/- 0.03)

我们可以直接看一下K-Fold是怎么样划分数据的:X有四个数据,把它分成2折,结构中最后一个集合是测试集,前面的是训练集,每一行为1折:

>>> import numpy as np

>>> from sklearn.model_selection import KFold

>>> X = ["a", "b", "c", "d"]

>>> kf = KFold(n_splits=2)

>>> for train, test in kf.split(X):

... print("%s %s" % (train, test))

[2 3] [0 1]

[0 1] [2 3]

同样的数据X,我们来看LeaveOneOut后是什么样子,那就是把它分成4折,结果中最后一个集合是测试集,只有一个元素,前面的是训练集,每一行为1折:

>>> from sklearn.model_selection import LeaveOneOut

>>> X = [1, 2, 3, 4]

>>> loo = LeaveOneOut()

>>> for train, test in loo.split(X):

... print("%s %s" % (train, test))

[1 2 3] [0]

[0 2 3] [1]

[0 1 3] [2]

[0 1 2] [3]

3,留一法(Leave one out cross validation)

每次的测试集都只有一个样本,要进行m次训练和预测,这个方法用于训练的数据只比整体数据集少一个样本,因此最接近原始样本的分布。但是训练复杂度增加了,因为模型的数量与原始数据样本数量相同。一般在数据缺少时使用。

此外:

- 多次 k 折交叉验证再求均值,例如:10 次 10 折交叉验证,以求更精确一点。

- 划分时有多种方法,例如对非平衡数据可以用分层采样,就是在每一份子集中都保持和原始数据集相同的类别比例。

- 模型训练过程的所有步骤,包括模型选择,特征选择等都是在单个折叠 fold 中独立执行的。

4,Bootstrapping

通过自助采样法,即在含有 m 个样本的数据集中,每次随机挑选一个样本,再放回到数据集中,再随机挑选一个样本,这样有放回地进行抽样 m 次,组成了新的数据集作为训练集。

这里会有重复多次的样本,也会有一次都没有出现的样本,原数据集中大概有 36.8% 的样本不会出现在新组数据集中。

优点是训练集的样本总数和原数据集一样都是 m,并且仍有约 1/3 的数据不被训练而可以作为测试集。

缺点是这样产生的训练集的数据分布和原数据集的不一样了,会引入估计偏差。

(此种方法不是很常用,除非数据量真的很少)

三,建模标准化数据集

波士顿房价数据集的一个重要问题是输入的特征对于房价的影响各不相同。

在使用神经网络模型对数据进行建模之前,准备好所要使用数据总是一种好的做法。

继续上述基线模型,我们可以使用输入数据集的标准化版本重新评估相同的模型。

我们可以使用scikit-learn的Pipeline框架在模型评估过程中,在交叉验证的每个折叠内执行标准化。这确保了每个测试集交叉验证折叠中没有数据泄漏到训练数据中。

下面的代码创建了一个scikit-learn Pipeline,它首先标准化数据集,然后创建和评估基线神经网络模型。

# evaluate model with standardized dataset

numpy.random.seed(seed)

estimators = []

estimators.append(('standardize', StandardScaler()))

estimators.append(('mlp', KerasRegressor(build_fn=baseline_model, epochs=50, batch_size=5, verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(pipeline, X, Y, cv=kfold)

print("Standardized: %.2f (%.2f) MSE" % (results.mean(), results.std()))

运行该示例提供了比没有标准化数据的基线模型更好的性能,从而减少了错误。

Standardized: 29.54 (27.87) MSE

此部分的进一步扩展将类似地对输出变量应用重新缩放,例如将其归一化到0-1的范围,并在输出层上使用Sigmoid或类似的激活函数,以将输出预测缩小到相同的范围。

四,调整神经网络拓扑

对于神经网络模型而言,可以优化的方面有很多。

也许效果最明显的优化之处是网络本身的结构,包括层数和每层神经元的数量。

在本节中,我们将评估另外两种网络拓扑,以进一步提高模型的性能。这两个结构分别是层数更深和层度更宽的网络拓扑结构。

4.1 评估层数更深的网络拓扑

提高神经网络性能的一种方法是添加更多层。这可能允许模型提取并重新组合数据中嵌入的高阶特征。

在本节中,我们将评估向模型添加一个隐藏层的效果。这就像定义一个新函数一样简单,这个函数将创建从上面的基线模型复制的更深层次的模型。然后我们可以在第一个隐藏层之后插入一个新行。在这种情况下,神经元的数量约为一半(6个)。

# define the model def larger_model(): # create model model = Sequential() model.add(Dense(13, input_dim=13, kernel_initializer='normal', activation='relu')) model.add(Dense(6, kernel_initializer='normal', activation='relu')) model.add(Dense(1, kernel_initializer='normal')) # Compile model model.compile(loss='mean_squared_error', optimizer='adam') return model

我们的网络拓扑现在看起来像:

13 inputs -> [13 -> 6] -> 1 output

我们可以采用与上述相同的方式评估此网络拓扑,同时还使用上面显示的数据集的标准化来提高性能。

numpy.random.seed(seed)

estimators = []

estimators.append(('standardize', StandardScaler()))

estimators.append(('mlp', KerasRegressor(build_fn=larger_model, epochs=50, batch_size=5, verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(pipeline, X, Y, cv=kfold)

print("Larger: %.2f (%.2f) MSE" % (results.mean(), results.std()))

运行此模型确定表明性能从28降到24,000平方美元的进一步改善。

Larger: 22.83 (25.33) MSE

4.2 评估层宽更宽的网络拓扑

增加模型的表示能力的另一种方法是创建更广泛的网络。

在本节中,我们将评估保持浅层网络架构的效果,并使一个隐藏层中的神经元数量几乎翻倍。

同样,我们需要做的就是定义一个创建神经网络模型的新函数。在这里,与13到20的基线模型相比,我们增加了隐藏层中神经元的数量。

# define wider model def wider_model(): # create model model = Sequential() model.add(Dense(20, input_dim=13, kernel_initializer='normal', activation='relu')) model.add(Dense(1, kernel_initializer='normal')) # Compile model model.compile(loss='mean_squared_error', optimizer='adam') return model

我们可以使用与上面相同的方案评估更广泛的网络拓扑:

numpy.random.seed(seed)

estimators = []

estimators.append(('standardize', StandardScaler()))

estimators.append(('mlp', KerasRegressor(build_fn=wider_model, epochs=100, batch_size=5, verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(pipeline, X, Y, cv=kfold)

print("Wider: %.2f (%.2f) MSE" % (results.mean(), results.std()))

建立模型的确看到误差进一步下降到大约2.1万平方美元,对于这个问题,这个不是一个糟糕的结果。

Wider: 21.64 (23.75) MSE

很难想象更广泛的网络在这个问题上会胜过更深层次的网络。结果证明了在开发神经网络模型时经验测试的重要性。

五,完整代码的总结

1,代码

import numpy

import pandas

from keras.models import Sequential

from keras.layers import Dense

from keras.wrappers.scikit_learn import KerasRegressor

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import KFold

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import Pipeline

# 导入数据

filename = 'housing.csv'

dataframe = pandas.read_csv(filename,delim_whitespace=True,header=None)

dataset = dataframe.values

# print(dataset)

# 把数据分为输入和输出两个变量

X = dataset[:,0:13]

Y = dataset[:,13]

# print(len(Y))

# 定义个基类模型

def baseline_model():

# 创建模型,与输入属性具有相同的神经元13

model = Sequential()

model.add(Dense(13,input_dim=13,kernel_initializer='normal',activation='relu'))

model.add(Dense(1,kernel_initializer='normal'))

# Compile model

model.compile(loss='mean_squared_error',optimizer='adam')

return model

# 固定随机种子的重现性

seed = 7

numpy.random.seed(seed)

# 使用标准化数据集评估模型

estimator = KerasRegressor(build_fn=baseline_model,epochs=100,batch_size=5,verbose=0)

# 评估此基类模型,我们使用10倍交叉验证来评估模型

kfold = KFold(n_splits=10,random_state=seed)

results = cross_val_score(estimator,X,Y,cv=kfold)

print("Results:%.2f(%.2f)MSE"%(results.mean(),results.std()))

# 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

numpy.random.seed(seed)

estimators = []

estimators.append(('standaedize',StandardScaler()))

estimators.append(('mlp',KerasRegressor(build_fn=baseline_model,epochs=50,batch_size=5,verbose=0)))

pipeline = Pipeline(estimators)

# 评估所创建的神经网络模型

kfold = KFold(n_splits=10,random_state=seed)

results = cross_val_score(pipeline,X,Y,cv=kfold)

print("Standardized:%.2f(%.2f)MSE"%(results.mean(),results.std()))

# 针对神经网络模型进行优化

# 提高神经网络性能的一种方法是添加更多层,这可能允许模型提取并重新组合数据中嵌入的高阶特征

def larger_model():

# 创建模型

model = Sequential()

model.add(Dense(13,input_dim=13,kernel_initializer='normal',activation='relu'))

model.add(Dense(6,kernel_initializer='normal',activation='relu'))

model.add(Dense(1,kernel_initializer='normal'))

# 编译模型

model.compile(loss='mean_squared_error',optimizer='adam')

return model

# 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

numpy.random.seed(seed)

estimators = []

estimators.append(('standaedize',StandardScaler()))

estimators.append(('mlp',KerasRegressor(build_fn=larger_model,epochs=50,batch_size=5,verbose=0)))

pipeline = Pipeline(estimators)

# 评估所创建的神经网络模型

kfold = KFold(n_splits=10,random_state=seed)

results = cross_val_score(pipeline,X,Y,cv=kfold)

print("Larger:%.2f(%.2f)MSE"%(results.mean(),results.std()))

# 针对神经网络模型进行优化,评估更广泛的模型

# 提高神经网络性能的一种方法是使其更广泛,这可能允许模型提取并重新组合数据中嵌入的高阶特征

def wider_model():

# 创建模型

model = Sequential()

model.add(Dense(20, input_dim=13, kernel_initializer='normal', activation='relu'))

model.add(Dense(1, kernel_initializer='normal'))

# 编译模型

model.compile(loss='mean_squared_error', optimizer='adam')

# predict model

model.fit(X,Y,epochs=50,batch_size=5)

predict = model.predict(X)

# print(predict)

return model

# 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

numpy.random.seed(seed)

estimators = []

estimators.append(('standaedize',StandardScaler()))

estimators.append(('mlp',KerasRegressor(build_fn=wider_model,epochs=100,batch_size=5,verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10,random_state=seed)

results = cross_val_score(pipeline,X,Y,cv=kfold)

print("Wider:%.2f(%.2f)MSE"%(results.mean(),results.std()))

代码2:

import numpy

import pandas

from keras.models import Sequential

from keras.layers import Dense

from keras.wrappers.scikit_learn import KerasRegressor

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import KFold

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import Pipeline

import pandas as pd

# 导入数据

filename = 'housing.csv'

dataframe = pandas.read_csv(filename,delim_whitespace=True,header=None)

dataset = dataframe.values

# print(dataset)

# 把数据分为输入和输出两个变量

X = dataset[:,0:13]

Y = dataset[:,13]

# print(len(Y))

seed = 7

# # 定义基类模型

# def baseline_model():

# # 创建模型,与输入属性具有相同的神经元13

# model = Sequential()

# model.add(Dense(13,input_dim=13,kernel_initializer='normal',activation='relu'))

# model.add(Dense(1,kernel_initializer='normal'))

# # Compile model,使用高效的ADAM优化算法以及优化的最小均方误差损失函数

# model.compile(loss='mean_squared_error',optimizer='adam')

# return model

#

# # 固定随机种子的重现性

# seed = 7

# numpy.random.seed(seed)

# # 使用标准化数据集评估模型

# estimator = KerasRegressor(build_fn=baseline_model,epochs=100,batch_size=5,verbose=0)

# # 评估此基类模型,我们使用10倍交叉验证来评估模型

# kfold = KFold(n_splits=10,random_state=seed)

# results = cross_val_score(estimator,X,Y,cv=kfold)

# print("Results:%.2f(%.2f)MSE"%(results.mean(),results.std()))

#

# # 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

# numpy.random.seed(seed)

# estimators = []

# estimators.append(('standaedize',StandardScaler()))

# estimators.append(('mlp',KerasRegressor(build_fn=baseline_model,epochs=50,batch_size=5,verbose=0)))

# pipeline = Pipeline(estimators)

# # 评估所创建的神经网络模型

# kfold = KFold(n_splits=10,random_state=seed)

# results = cross_val_score(pipeline,X,Y,cv=kfold)

# print("Standardized:%.2f(%.2f)MSE"%(results.mean(),results.std()))

#

#

# # 针对神经网络模型进行优化

# # 提高神经网络性能的一种方法是添加更多层,这可能允许模型提取并重新组合数据中嵌入的高阶特征

# def larger_model():

# # 创建模型

# model = Sequential()

# model.add(Dense(13,input_dim=13,kernel_initializer='normal',activation='relu'))

# model.add(Dense(6,kernel_initializer='normal',activation='relu'))

# model.add(Dense(1,kernel_initializer='normal'))

# # 编译模型

# model.compile(loss='mean_squared_error',optimizer='adam')

# return model

#

# # 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

# numpy.random.seed(seed)

# estimators = []

# estimators.append(('standaedize',StandardScaler()))

# estimators.append(('mlp',KerasRegressor(build_fn=larger_model,epochs=50,batch_size=5,verbose=0)))

# pipeline = Pipeline(estimators)

# # 评估所创建的神经网络模型

# kfold = KFold(n_splits=10,random_state=seed)

# results = cross_val_score(pipeline,X,Y,cv=kfold)

# print("Larger:%.2f(%.2f)MSE"%(results.mean(),results.std()))

#

# 针对神经网络模型进行优化,评估更广泛的模型

# 提高神经网络性能的一种方法是使其更广泛,这可能允许模型提取并重新组合数据中嵌入的高阶特征

def wider_model():

# 创建模型

model = Sequential()

model.add(Dense(20, input_dim=13, kernel_initializer='normal', activation='relu'))

model.add(Dense(1, kernel_initializer='normal'))

# 编译模型

model.compile(loss='mean_squared_error', optimizer='adam')

# predict model

model.fit(X,Y,epochs=50,batch_size=5)

predict = model.predict(X)

# print(predict)

submit_txt = pd.DataFrame(predict)

print(submit_txt)

submit_txt.to_csv("predict_housing1.csv", sep=',', header=False, index=False)

return model

# 使用scikit-learn Pipeline 首先标准化数据集,然后创建和评估基线神经网络模型

numpy.random.seed(seed)

estimators = []

estimators.append(('standaedize',StandardScaler()))

estimators.append(('mlp',KerasRegressor(build_fn=wider_model,epochs=100,batch_size=5,verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10,random_state=seed)

results = cross_val_score(pipeline,X,Y,cv=kfold)

print("Wider:%.2f(%.2f)MSE"%(results.mean(),results.std()))

2,结果

Results:-32.93(23.37)MSE Standardized:-29.54(27.53)MSE Larger:-23.31(27.07)MSE Wider:-21.76(26.31)MSE Process finished with exit code 0

结果2:

Using TensorFlow backend. Epoch 1/50 5/506 [..............................] - ETA: 15s - loss: 805.4844 435/506 [========================>.....] - ETA: 0s - loss: 250.8078 506/506 [==============================] - 0s 432us/step - loss: 228.3296 Epoch 2/50 5/506 [..............................] - ETA: 0s - loss: 61.6121 455/506 [=========================>....] - ETA: 0s - loss: 80.5485 506/506 [==============================] - 0s 127us/step - loss: 79.0767 Epoch 3/50 5/506 [..............................] - ETA: 0s - loss: 164.0555 485/506 [===========================>..] - ETA: 0s - loss: 69.5207 506/506 [==============================] - 0s 127us/step - loss: 68.9874 Epoch 4/50 5/506 [..............................] - ETA: 0s - loss: 45.3651 490/506 [============================>.] - ETA: 0s - loss: 63.1090 506/506 [==============================] - 0s 123us/step - loss: 63.5065 Epoch 5/50 5/506 [..............................] - ETA: 0s - loss: 31.9817 495/506 [============================>.] - ETA: 0s - loss: 60.3168 506/506 [==============================] - 0s 123us/step - loss: 59.6500 Epoch 6/50 5/506 [..............................] - ETA: 0s - loss: 75.1090 500/506 [============================>.] - ETA: 0s - loss: 57.5022 506/506 [==============================] - 0s 123us/step - loss: 57.5369 Epoch 7/50 5/506 [..............................] - ETA: 0s - loss: 20.0596 506/506 [==============================] - 0s 123us/step - loss: 55.5891 Epoch 8/50 5/506 [..............................] - ETA: 0s - loss: 67.5474 480/506 [===========================>..] - ETA: 0s - loss: 53.3222 506/506 [==============================] - 0s 125us/step - loss: 54.4315 Epoch 9/50 5/506 [..............................] - ETA: 0s - loss: 12.8274 485/506 [===========================>..] - ETA: 0s - loss: 52.5087 506/506 [==============================] - 0s 123us/step - loss: 51.9669 Epoch 10/50 5/506 [..............................] - ETA: 0s - loss: 44.8263 495/506 [============================>.] - ETA: 0s - loss: 50.9085 506/506 [==============================] - 0s 123us/step - loss: 50.2104 Epoch 11/50 5/506 [..............................] - ETA: 0s - loss: 10.7946 505/506 [============================>.] - ETA: 0s - loss: 48.8362 506/506 [==============================] - 0s 123us/step - loss: 48.7627 Epoch 12/50 5/506 [..............................] - ETA: 0s - loss: 29.8260 505/506 [============================>.] - ETA: 0s - loss: 47.2329 506/506 [==============================] - 0s 123us/step - loss: 47.1404 Epoch 13/50 5/506 [..............................] - ETA: 0s - loss: 15.9536 506/506 [==============================] - 0s 92us/step - loss: 43.1233 Epoch 14/50 5/506 [..............................] - ETA: 1s - loss: 36.2984 506/506 [==============================] - 0s 125us/step - loss: 43.6554 Epoch 15/50 5/506 [..............................] - ETA: 0s - loss: 11.6092 415/506 [=======================>......] - ETA: 0s - loss: 40.4716 506/506 [==============================] - 0s 123us/step - loss: 42.6683 Epoch 16/50 5/506 [..............................] - ETA: 0s - loss: 2.9016 425/506 [========================>.....] - ETA: 0s - loss: 38.2600 506/506 [==============================] - 0s 123us/step - loss: 39.1469 Epoch 17/50 5/506 [..............................] - ETA: 0s - loss: 26.3887 400/506 [======================>.......] - ETA: 0s - loss: 39.2786 506/506 [==============================] - 0s 123us/step - loss: 38.7250 Epoch 18/50 5/506 [..............................] - ETA: 1s - loss: 10.9436 490/506 [============================>.] - ETA: 0s - loss: 35.2829 506/506 [==============================] - 0s 154us/step - loss: 36.2559 Epoch 19/50 5/506 [..............................] - ETA: 0s - loss: 110.4335 420/506 [=======================>......] - ETA: 0s - loss: 38.3718 506/506 [==============================] - 0s 123us/step - loss: 37.2191 Epoch 20/50 5/506 [..............................] - ETA: 0s - loss: 4.4877 340/506 [===================>..........] - ETA: 0s - loss: 36.5170 506/506 [==============================] - 0s 154us/step - loss: 34.2345 Epoch 21/50 5/506 [..............................] - ETA: 0s - loss: 3.0911 335/506 [==================>...........] - ETA: 0s - loss: 27.8048 506/506 [==============================] - 0s 154us/step - loss: 34.6101 Epoch 22/50 5/506 [..............................] - ETA: 0s - loss: 15.4901 400/506 [======================>.......] - ETA: 0s - loss: 35.1135 506/506 [==============================] - 0s 154us/step - loss: 36.9043 Epoch 23/50 5/506 [..............................] - ETA: 0s - loss: 21.1735 455/506 [=========================>....] - ETA: 0s - loss: 33.6868 506/506 [==============================] - 0s 123us/step - loss: 34.8258 Epoch 24/50 5/506 [..............................] - ETA: 0s - loss: 85.7751 415/506 [=======================>......] - ETA: 0s - loss: 35.5314 506/506 [==============================] - 0s 123us/step - loss: 34.5349 Epoch 25/50 5/506 [..............................] - ETA: 0s - loss: 17.5053 385/506 [=====================>........] - ETA: 0s - loss: 32.1707 506/506 [==============================] - 0s 154us/step - loss: 33.2426 Epoch 26/50 5/506 [..............................] - ETA: 0s - loss: 15.1037 505/506 [============================>.] - ETA: 0s - loss: 32.9444 506/506 [==============================] - 0s 123us/step - loss: 32.9438 Epoch 27/50 5/506 [..............................] - ETA: 0s - loss: 9.0323 506/506 [==============================] - 0s 123us/step - loss: 33.0248 Epoch 28/50 5/506 [..............................] - ETA: 0s - loss: 72.4469 506/506 [==============================] - 0s 92us/step - loss: 31.2194 Epoch 29/50 5/506 [..............................] - ETA: 0s - loss: 19.6237 395/506 [======================>.......] - ETA: 0s - loss: 31.5439 506/506 [==============================] - 0s 123us/step - loss: 30.4992 Epoch 30/50 5/506 [..............................] - ETA: 0s - loss: 65.1235 400/506 [======================>.......] - ETA: 0s - loss: 31.3186 506/506 [==============================] - 0s 123us/step - loss: 30.5432 Epoch 31/50 5/506 [..............................] - ETA: 0s - loss: 8.7552 395/506 [======================>.......] - ETA: 0s - loss: 27.7944 506/506 [==============================] - 0s 154us/step - loss: 29.7216 Epoch 32/50 5/506 [..............................] - ETA: 0s - loss: 22.3568 505/506 [============================>.] - ETA: 0s - loss: 29.3601 506/506 [==============================] - 0s 123us/step - loss: 29.3569 Epoch 33/50 5/506 [..............................] - ETA: 0s - loss: 13.9683 506/506 [==============================] - 0s 123us/step - loss: 29.7533 Epoch 34/50 5/506 [..............................] - ETA: 0s - loss: 11.6751 506/506 [==============================] - 0s 123us/step - loss: 29.6476 Epoch 35/50 5/506 [..............................] - ETA: 0s - loss: 2.7265 506/506 [==============================] - 0s 92us/step - loss: 27.9348 Epoch 36/50 5/506 [..............................] - ETA: 1s - loss: 39.1157 506/506 [==============================] - 0s 123us/step - loss: 27.7667 Epoch 37/50 5/506 [..............................] - ETA: 0s - loss: 11.3104 395/506 [======================>.......] - ETA: 0s - loss: 28.7139 506/506 [==============================] - 0s 123us/step - loss: 26.7338 Epoch 38/50 5/506 [..............................] - ETA: 0s - loss: 33.0695 390/506 [======================>.......] - ETA: 0s - loss: 29.3709 506/506 [==============================] - 0s 123us/step - loss: 26.6316 Epoch 39/50 5/506 [..............................] - ETA: 1s - loss: 8.1361 506/506 [==============================] - 0s 123us/step - loss: 26.4067 Epoch 40/50 5/506 [..............................] - ETA: 1s - loss: 13.7220 506/506 [==============================] - 0s 123us/step - loss: 25.9756 Epoch 41/50 5/506 [..............................] - ETA: 0s - loss: 36.6162 405/506 [=======================>......] - ETA: 0s - loss: 27.9074 506/506 [==============================] - 0s 123us/step - loss: 27.2547 Epoch 42/50 5/506 [..............................] - ETA: 0s - loss: 44.2660 415/506 [=======================>......] - ETA: 0s - loss: 24.8610 506/506 [==============================] - 0s 123us/step - loss: 25.4775 Epoch 43/50 5/506 [..............................] - ETA: 0s - loss: 30.5703 425/506 [========================>.....] - ETA: 0s - loss: 25.0591 506/506 [==============================] - 0s 123us/step - loss: 26.3528 Epoch 44/50 5/506 [..............................] - ETA: 0s - loss: 15.4020 435/506 [========================>.....] - ETA: 0s - loss: 26.9322 506/506 [==============================] - 0s 123us/step - loss: 25.1340 Epoch 45/50 5/506 [..............................] - ETA: 0s - loss: 11.2487 440/506 [=========================>....] - ETA: 0s - loss: 24.6880 506/506 [==============================] - 0s 123us/step - loss: 24.4546 Epoch 46/50 5/506 [..............................] - ETA: 0s - loss: 3.0398 440/506 [=========================>....] - ETA: 0s - loss: 23.9014 506/506 [==============================] - 0s 123us/step - loss: 24.2430 Epoch 47/50 5/506 [..............................] - ETA: 0s - loss: 14.0619 450/506 [=========================>....] - ETA: 0s - loss: 24.5512 506/506 [==============================] - 0s 123us/step - loss: 23.2035 Epoch 48/50 5/506 [..............................] - ETA: 0s - loss: 20.2924 455/506 [=========================>....] - ETA: 0s - loss: 25.8009 506/506 [==============================] - 0s 123us/step - loss: 25.0679 Epoch 49/50 5/506 [..............................] - ETA: 0s - loss: 5.2805 445/506 [=========================>....] - ETA: 0s - loss: 23.9418 506/506 [==============================] - 0s 123us/step - loss: 23.4596 Epoch 50/50 5/506 [..............................] - ETA: 0s - loss: 194.0802 455/506 [=========================>....] - ETA: 0s - loss: 24.0001 506/506 [==============================] - 0s 123us/step - loss: 22.7273

六,基于Keras的神经网络回归模型

下面我们自己建立一个神经网络模型来看看。

1,代码:

import matplotlib.pyplot as plt

from math import sqrt

from matplotlib import pyplot

import pandas as pd

from numpy import concatenate

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_squared_error

from keras.models import Sequential

from keras.layers.core import Dense ,Dropout,Activation

from keras.optimizers import Adam

'''Keras实现神经网络回归模型'''

# 读取数据

path = 'housing.csv'

train_df = pd.read_csv(path)

# 删除不用字符串字段

# dataset = train_df.drop('jh',axis=1)

# df转换成array

values =train_df.values

# 原始数据标准化,为了加速收敛

scaler = MinMaxScaler(feature_range=(0,1))

scaled = scaler.fit_transform(values)

y = scaled[:,-1]

X = scaled[:,0:-1]

# 随机拆分训练集与测试集

from sklearn.model_selection import train_test_split

train_X,test_X,train_y,test_y = train_test_split(X,y,test_size=0.25)

# 全连接神经网络

model = Sequential()

input = X.shape[1]

# 隐藏层128

model.add(Dense(128,input_shape=(input,)))

model.add(Activation('rule'))

# Dropout层用于防止过拟合

# model.add(Dropout(0.2))

# 隐藏层128

model.add(Dense(128))

model.add(Activation('relu'))

# model.add(Dropout(0.2))

# 没有激活函数用于输出层,因为这是一个回归问题,

# 我们希望直接预测数值,而不需要采用激活函数进行变换

model.add(Dense(1))

# 使用高效的ADAM优化算法以及优化的最小均方误差损失函数

model.compile(loss='mean_squared_error',optimizer=Adam())

# early stopping

from keras.callbacks import EarlyStopping

early_stopping = EarlyStopping(monitor='val_loss',patience=50,verbose=2)

# 训练

history = model.fit(train_X,train_y,epochs=300,batch_size=20,

validation_data=(test_X,test_y),verbose=2,

shuffle=False,callbacks=[early_stopping])

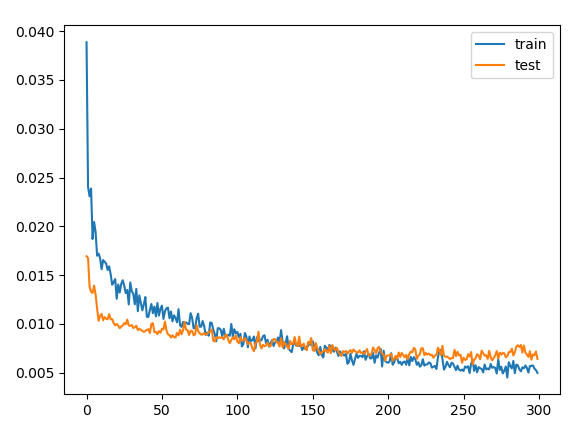

# loss曲线

pyplot.plot(history.history['loss'],label='train')

pyplot.plot(history.history['val_loss'],label='test')

pyplot.legend()

pyplot.show()

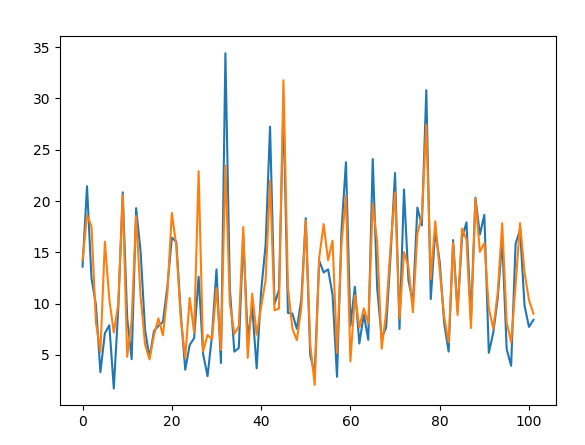

# 预测

yhat = model.predict(test_X)

# 预测y 逆标准化

inv_yhat0 = concatenate((test_X,yhat),axis=1)

inv_yhat1 = scaler.inverse_transform(inv_yhat0)

inv_yhat = inv_yhat1[:,-1]

# 原始y逆标准化

test_y = test_y.reshape(len(test_y),1)

inv_y0 = concatenate((test_X,test_y),axis=1)

inv_y1 = scaler.inverse_transform(inv_y0)

inv_y = inv_y1[:,-1]

# 计算RMSE

rmse = sqrt(mean_squared_error(inv_y,inv_yhat))

print('Test RMSE: %.3f' % rmse)

plt.plot(inv_y)

plt.plot(inv_yhat)

plt.show()

如果Boston数据报错,那么可以直接导入Boston数据(在深度学习中这算是小数据集,我们可以直接导入sklearn中Boston数据集)。

代码如下:

# 读取数据 boston = datasets.load_boston() df_values =boston.data

Using TensorFlow backend. _________________________________________________________________ Layer (type) Output Shape Param # ================================================================= dense_1 (Dense) (None, 128) 1664 _________________________________________________________________ activation_1 (Activation) (None, 128) 0 _________________________________________________________________ dropout_1 (Dropout) (None, 128) 0 _________________________________________________________________ dense_2 (Dense) (None, 128) 16512 _________________________________________________________________ activation_2 (Activation) (None, 128) 0 _________________________________________________________________ dropout_2 (Dropout) (None, 128) 0 _________________________________________________________________ dense_3 (Dense) (None, 1) 129 ================================================================= Total params: 18,305 Trainable params: 18,305 Non-trainable params: 0 _________________________________________________________________ Train on 404 samples, validate on 102 samples

此处对结果做以解释:由于神经网络回归,我们是将506个数据按照4:1划分成测试集和训练集,所以得到的结果,也就是我预测的结果是101个数据,。也就是随机取到的数据,并且此神经网络模型建立之前,做了数据预处理,利用fit_transform()函数将数据转化成标准正态分布,并将其归到(0,1)之间,所以得到的结果是零点几也不足为奇,如果要得到原始数据,我们可以对数据不做归一化处理,这样得到的结果也就是原始值。

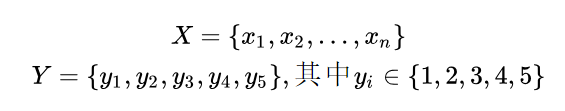

Keras解决多标签分类问题

multi-class classification problem :多分类问题是相对于二分类问题(典型的0-1分类)来说的,意思是类别总数超过两个的分类问题,比如手写数字识别mnist的label总数有10个,每一样本的标签在这10个中取一个。

multi-class classification problem:多标签分类(或者叫做多标记分类),是指一个样本的标签数量不止一个,即一个样本对应多个标签。

1,一般问题定义

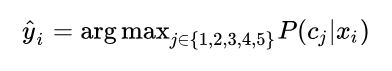

一般情况下,假设我们的分类问题有5个标签,样本数量为n ,数学表示为:

我们用神经网络模型对样本建模,计算:样本的标签概率。模型的输出为:

现在我们用keras的Sequential模型建立一个简单的模型:

from keras.layers import Input,Dense from keras.models import Sequential model = Sequential() model.add(Dense(10, activation="relu", input_shape=(10,))) model.add(Dense(5))

2,multi-class classification

对于多分类问题,接下来要做的是输出层的设计。在多分类中,最常用的就是softmax层。

softmax层中的softmax函数是logistic函数在多分类问题上的推广,它将一个N维的实数向量压缩成一个满足特定条件的N维实数向量。压缩后的向量满足两个条件:

- 像两种的每个元素的大小都在[0,1]

- 所有向量元素的和为1

因此,softmax适用于多分类问题中对每个类别的概率判断,softmax计算公式如下:

python代码示例:

import numpy as np

def Softmax_sim(x):

y = np.exp(x)

return y/np.sum(y)

x = np.array([1.0,2.0,3.0,4.0,1.0])

print(Softmax_sim(x))

#输出:[ 0.03106277 0.08443737 0.22952458 0.6239125 0.03106277]

假设隐藏层的输出为[1.0,2.0,3.0,4.0,1.0],我们可以根据softmax函数判断属于标签4

所以,利用keras的函数式定义多分类的模型:

from keras.layers import Input,Dense from keras.models import Model inputs = Input(shape=(10,)) hidden = Dense(units=10,activation='relu')(inputs) output = Dense(units=5,activation='softmax')(hidden)

3,multi-label classification

在预测多标签分类问题时,假设隐藏层的输出是[-1.0, 5.0, -0.5, 5.0, -0.5 ],如果用softmax函数的话,那么输出为:

z = np.array([-1.0, 5.0, -0.5, 5.0, -0.5 ]) print(Softmax_sim(z)) # 输出为[ 0.00123281 0.49735104 0.00203256 0.49735104 0.00203256]

通过使用softmax,我们可以清楚的选择标签2和标签4,但是我们必须知道每个样本需要多少个标签,或者为概率选择一个阈值。这显然不是我们想要的,因为样本属于每个标签的概率应该是独立的。

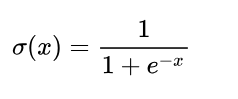

对于一个二分类问题,常用的激活函数是sigmoid函数:

PS:Sigmoid函数之所以在之前很长一段时间作为神经网络激活函数(现在大家都基本使用Relu函数了),一个很重要的原因是Sigmoid函数的倒数很容易计算,可以用自身表示:

python代码为:

import numpy as np

def Sigmoid_sim(x):

return 1 /(1+np.exp(-x))

a = np.array([-1.0, 5.0, -0.5, 5.0, -0.5])

print(Sigmoid_sim(a))

#输出为: [ 0.26894142 0.99330715 0.37754067 0.99330715 0.37754067]

此时,每个标签的概率即是独立的,完整整个模型构建之后,最后一步中最重要的是为模型的编译选择损失函数。在多标签分类中,大多使用binary_crossentropy损失而不是通常在多分类中使用的categorical_crossentropy损失函数,这可能看起来不合理,但是因为每个输出节点都是独立的,选择二元损失,并将网络输出建模为每个标签独立的bernoulli分布。整个多标签分类的模型为:

from keras.models import Model from keras.layers import Input,Dense inputs = Input(shape=(10,)) hidden = Dense(units=10,activation='relu')(inputs) output = Dense(units=5,activation='sigmoid')(hidden) model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

4,multi-class 和 multi-label的区别

multi-class 是相对于binary二分类来说的,指需要分类的东西不止有两个类别,可能是三个类别取一个(如iris分类),或者是10个类别取一个(如手写数字识别mnist)

而multi-label 是更加general的一种情况了,他说为什么一个sample的标签只能有一个呢。为什么一张图不是猫就是狗呢?难道我不能训练一个人工智能,它能告诉我这张图片既有猫又有狗呢?

话不多说,下面直接看Keras的multi-label代码:

def __create_model(self):

from keras.models import Sequential

from keras.layers import Dense

model = Sequential()

print("create model. feature_dim = %s, label_dim = %s" % (self.feature_dim, self.label_dim))

model.add(Dense(500, activation='relu', input_dim=self.feature_dim))

model.add(Dense(100, activation='relu'))

model.add(Dense(self.label_dim, activation='sigmoid'))

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

return model

稍微解说一下:

- * 整个网络是fully connected全连接网络。

- * 网络结构是输入层=你的特征的维度

- * 隐藏层是500*100,激励函数都是relu。隐藏层的节点数量和深度请根据自己的数量来自行调整,这里只是举例。

- * 输出层是你的label的维度。使用sigmoid作为激励,使输出值介于0-1之间。

- * 训练数据的label请用0和1的向量来表示。0代表这条数据没有这个位的label,1代表这条数据有这个位的label。假设3个label的向量[天空,人,大海]的向量值是[1,1,0]的编码的意思是这张图片有天空,有人,但是没有大海。

- * 使用binary_crossentropy来进行损失函数的评价,从而在训练过程中不断降低交叉商。实际变相的使1的label的节点的输出值更靠近1,0的label的节点的输出值更靠近0。

https://blog.csdn.net/tMb8Z9Vdm66wH68VX1/article/details/81090757

参考文献:(本文是学习此文献的知识,做笔记,仅此而已)

https://machinelearningmastery.com/regression-tutorial-keras-deep-learning-library-python/

https://blog.csdn.net/aliceyangxi1987/article/details/73532651

https://zhuanlan.zhihu.com/p/34712246