Pitfall of the week: nginx logs not making it into the ELK index

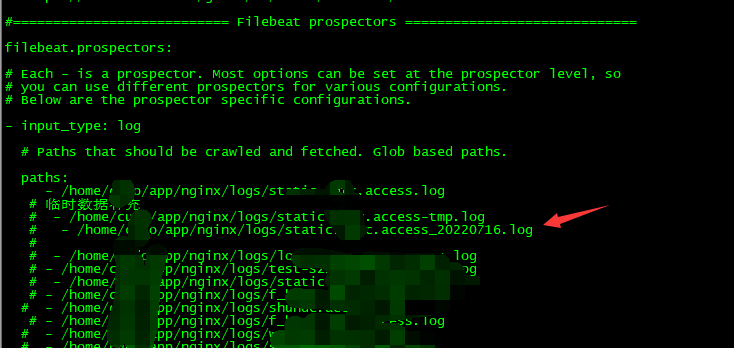

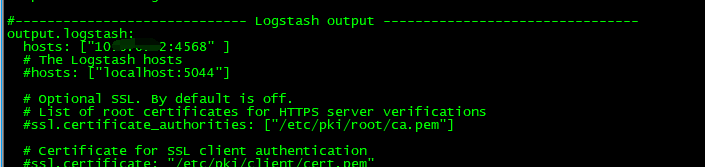

Here, the Logstash output setting points to the Logstash server's IP and port.

b) Run the command:

nohup /usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml >> /tmp/startfilebeat.log 2>/tmp/startServerError.log & echo "filebeat success!!"

(2) On the ES server, run:

/home/elk/xx/logstash-xx/bin/logstash -f /home/elk/xx/logstash-xx/config/logstash-xxx-weekly.conf

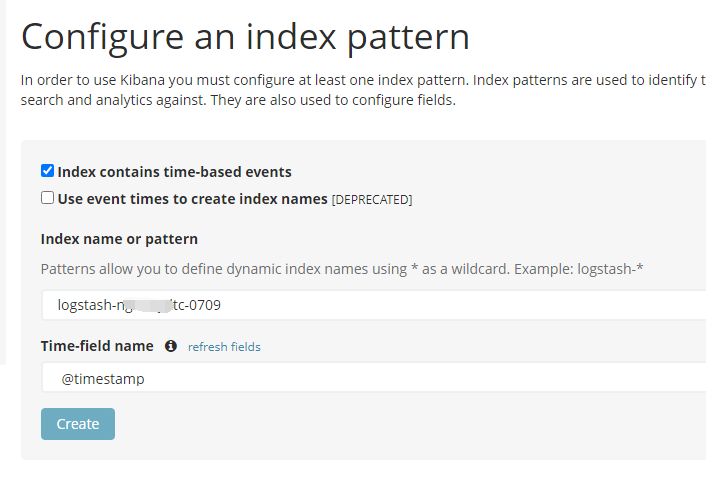

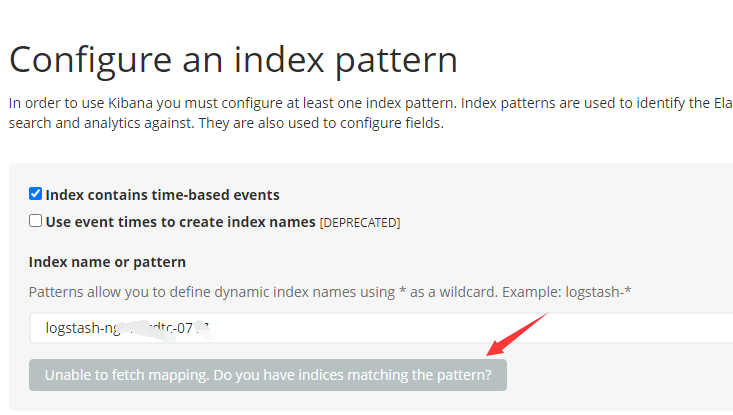

(3) If everything works, you can now add the index in Kibana.

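However, over the past couple of days, when I tried to import nginx logs into ES with the steps above, nothing came through: Logstash started without any errors, yet the new weekly index never showed up in Kibana, so I could not add it.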

After running this command, the console printed no errors at all:

/home/elk/xx/logstash-xx/bin/logstash -f /home/elk/xx/logstash-xx/config/logstash-xxx-weekly.conf

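The cause turned out to be a mismatch between the log format (log_format) defined in the nginx main config and what the Logstash config expects: the current format is elk_logs, but the logs were being written in the old one. I think this goes back to last week's emergency drill (a power-cut test), which involved swapping in replacement virtual host config files, and the replacement file happened to reference the old log format.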
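Since Logstash reported nothing, a cheap safeguard is to check a few lines of the access log against the fields the Logstash config expects before starting it. Below is a minimal Python sketch of such a check. It is my own helper, not part of ELK; the name `check_line` is hypothetical, and the key list is copied from the elk_logs format in section II.

```python
import json
import sys

# Keys written by the elk_logs log_format (copied from section II).
EXPECTED_KEYS = {
    "@timestamp", "host", "server_ip", "client_ip", "xff", "real_remote_addr",
    "domain", "url", "referer", "upstreamtime", "responsetime", "status",
    "size", "protocol", "upstreamhost", "file_dir", "http_user_agent",
}


def check_line(line: str) -> list[str]:
    """Return a list of problems; an empty list means the line looks usable."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError as exc:
        # An old, non-JSON log format ends up here.
        return [f"not JSON: {exc.msg}"]
    if not isinstance(event, dict):
        return ["JSON but not an object"]
    missing = sorted(EXPECTED_KEYS - set(event))
    return [f"missing key: {k}" for k in missing]


if __name__ == "__main__":
    # Usage (file name is a placeholder): python3 check_log_format.py xx.access.log
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for n, line in enumerate(fh, start=1):
                for problem in check_line(line):
                    print(f"{path}:{n}: {problem}")
```

A line in an old plain-text format fails at the JSON step, while a JSON line written by a different format shows up as missing keys.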

---------------------------------------------- Summary

The summary below comes from stepping into quite a few pitfalls: two nginx log formats, each with its matching Logstash config.

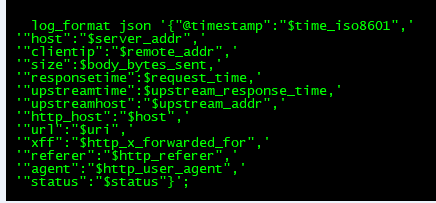

I. The log format named json (note: "json" is just a name; call it whatever you like)

(1) Define a log format named json in the nginx main config:

(2) Use that log format in a virtual host config:

    server {
        ...
        location / {
            ...
            access_log /home/{regular_user}/app/nginx/logs/json.access.log json;
        }
    }

(3) The matching Logstash config:

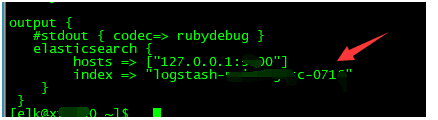

    input {
        file {
            path => "/home/{regular_user}/app/nginx/logs/json.access.log"
            start_position => "beginning"
        }
    }
    filter {
        # Rewrite the data so that Chinese (non-ASCII) paths are handled
        mutate {
            gsub => ["message", "\\x", "\\\x"]
            gsub => ["message", ":-,", ":0,"]
        }
        json {
            # parse the JSON carried in message
            source => "message"
            # drop fields we don't need
            remove_field => "message"
            remove_field => "[beat][hostname]"
            remove_field => "[beat][name]"
            remove_field => "[beat][version]"
            remove_field => "@version"
            remove_field => "offset"
            remove_field => "input_type"
            remove_field => "tags"
            remove_field => "type"
            remove_field => "host"
        }
        mutate {
            convert => ["status", "integer"]
            convert => ["size","integer"]
            convert => ["upstreamtime", "float"]
            convert => ["responsetime", "float"]
        }
        geoip {
            source => "clientip"
            database => "/home/elk/elk5.2/logstash-5.2.1/config/GeoLite2-City.mmdb"
            fields => ["city_name", "country_code2", "country_name", "latitude", "longitude", "region_name"]  # keep only the fields you need
            add_field => [ "[geoip][location]", "%{[geoip][longitude]}" ]
            add_field => [ "[geoip][location]", "%{[geoip][latitude]}" ]
            target => "geoip"
        }
        mutate {
            convert => [ "[geoip][location]", "float" ]
        }
        if "_geoip_lookup_failure" in [tags] {
            drop { }
        }
    }
    output {
        # stdout {
        #     codec => rubydebug
        # }
        elasticsearch {
            hosts => ["127.0.0.1:{es_listen_port}"]
            index => "logstash_20220721"
        }
    }
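Why the first gsub helps: with default escaping, nginx writes non-ASCII bytes (such as a Chinese path) as `\xHH`, and `\x` is not a valid JSON escape, so the json filter cannot parse the line. As I read the config, the gsub doubles that backslash so the text becomes a valid escaped backslash. The Python sketch below is only a rough emulation of the gsub, json and convert steps, not Logstash itself; the sample line and the name `emulate_filters` are made up for illustration, and the second gsub (presumably for unquoted `-` values) is left out.

```python
import json

# Hypothetical log line: nginx escaped the UTF-8 bytes of a Chinese path as \xHH.
RAW = '{"url":"/\\xE4\\xB8\\xAD.html","status":"200","size":"512","responsetime":"0.012"}'


def emulate_filters(line: str) -> dict:
    """Rough emulation of mutate/gsub -> json -> mutate/convert."""
    # gsub => ["message", "\\x", "\\\x"]: turn \x into \\x so it parses as JSON
    fixed = line.replace("\\x", "\\\\x")
    event = json.loads(fixed)
    # convert => status/size to integer, responsetime to float
    event["status"] = int(event["status"])
    event["size"] = int(event["size"])
    event["responsetime"] = float(event["responsetime"])
    return event


if __name__ == "__main__":
    try:
        json.loads(RAW)
    except json.JSONDecodeError as exc:
        print("raw line is not valid JSON:", exc.msg)
    print(emulate_filters(RAW))
```

The URL survives as the literal text `\xE4\xB8\xAD`; decoding it back to readable Chinese would be a separate step.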

II. The log format named elk_logs

I later evolved the json format above into this one because it can be fed into Grafana for analysis (https://grafana.com/grafana/dashboards/11190), and it looks impressively slick~~~

I think this one matters more, and its log fields are more complete, so here is the full config.

(1) Define a log format named elk_logs in the nginx main config:

(Optimized to capture the real client IP; see real_remote_addr below.)

vim nginx.conf

    http {
        ...
        map $http_x_forwarded_for $real_remote_addr {
            "" $remote_addr;
            ~^(?P<firstAddr>[0-9\.]+),?.*$ $firstAddr;
        }

        # New log format (2022-05-18): imported into ELK for analysis
        log_format elk_logs '{"@timestamp":"$time_iso8601",'
                            '"host":"$hostname",'
                            '"server_ip":"$server_addr",'
                            '"client_ip":"$remote_addr",'
                            '"xff":"$http_x_forwarded_for",'
                            '"real_remote_addr":"$real_remote_addr",'
                            '"domain":"$host",'
                            '"url":"$uri",'
                            '"referer":"$http_referer",'
                            '"upstreamtime":"$upstream_response_time",'
                            '"responsetime":"$request_time",'
                            '"status":"$status",'
                            '"size":"$body_bytes_sent",'
                            '"protocol":"$server_protocol",'
                            '"upstreamhost":"$upstream_addr",'
                            '"file_dir":"$request_filename",'
                            '"http_user_agent":"$http_user_agent"'
                            '}';
        ...
    }
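To see what the map block produces for different X-Forwarded-For values, here is a small Python emulation. It reuses the exact regex from the map (Python's `re` accepts the same `(?P<name>...)` syntax); the helper name `real_remote_addr` is hypothetical, and this sketches the logic rather than nginx itself. Since the map has no `default` entry, nginx yields an empty string when nothing matches, and the sketch mirrors that.

```python
import re

# Same pattern as the regex entry in the nginx map block.
FIRST_ADDR = re.compile(r"^(?P<firstAddr>[0-9\.]+),?.*$")


def real_remote_addr(http_x_forwarded_for: str, remote_addr: str) -> str:
    """Emulate: map $http_x_forwarded_for $real_remote_addr { ... }."""
    # "" $remote_addr;  -> no X-Forwarded-For header, use the peer address
    if http_x_forwarded_for == "":
        return remote_addr
    # ~^(?P<firstAddr>...) $firstAddr;  -> leftmost address in the chain
    m = FIRST_ADDR.match(http_x_forwarded_for)
    # no `default` in the map, so a non-matching value becomes ""
    return m.group("firstAddr") if m else ""


if __name__ == "__main__":
    for xff in ["", "203.0.113.7, 10.0.0.254", "unknown", "2001:db8::1"]:
        print(repr(xff), "->", repr(real_remote_addr(xff, "10.0.0.254")))
```

One thing this makes visible: because `.*` follows the optional comma, an IPv6 value such as `2001:db8::1` still matches and yields just `2001`. If IPv6 clients are possible, the pattern would need adjusting.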

(2) nginx virtual host config:

access_log /home/{regular_user}/app/nginx/logs/xx.access.log elk_logs;

(3) The matching Logstash config:

    input {
        beats {
            port => 4568
            client_inactivity_timeout => 600
        }
    }
    filter {
        # Rewrite the data so that Chinese (non-ASCII) paths are handled
        mutate {
            gsub => ["message", "\\x", "\\\x"]
            gsub => ["message", ":-,", ":0,"]
        }
        json {
            # parse the JSON carried in message
            source => "message"
            # drop fields we don't need
            remove_field => "message"
            remove_field => "[beat][hostname]"
            remove_field => "[beat][name]"
            remove_field => "[beat][version]"
            remove_field => "@version"
            remove_field => "offset"
            remove_field => "input_type"
            remove_field => "tags"
            remove_field => "type"
            remove_field => "host"
        }
        mutate {
            convert => ["status", "integer"]
            convert => ["size","integer"]
            convert => ["upstreamtime", "float"]
            convert => ["responsetime", "float"]
        }
        geoip {
            target => "geoip"
            source => "real_remote_addr"
            database => "/home/elk/elk5.2/logstash-5.2.1/config/GeoLite2-City.mmdb"
            add_field => [ "[geoip][location]", "%{[geoip][longitude]}" ]
            add_field => [ "[geoip][location]", "%{[geoip][latitude]}" ]
            # drop the extra geoip fields we don't want to display
            remove_field => ["[geoip][latitude]", "[geoip][longitude]", "[geoip][country_code]", "[geoip][country_code2]", "[geoip][country_code3]", "[geoip][timezone]", "[geoip][continent_code]", "[geoip][region_code]"]
        }
        mutate {
            convert => [ "size", "integer" ]
            convert => [ "status", "integer" ]
            convert => [ "responsetime", "float" ]
            convert => [ "upstreamtime", "float" ]
            convert => [ "[geoip][location]", "float" ]
            # drop useless filebeat fields; think carefully about which fields must reach ES,
            # because once a field is removed here you can no longer use it in conditions
            remove_field => [ "ecs","agent","host","cloud","@version","input","logs_type" ]
        }
        if "_geoip_lookup_failure" in [tags] {
            drop { }
        }
        # parse http_user_agent to tell apart the client OS and version
        useragent {
            source => "http_user_agent"
            target => "ua"
            # drop useragent fields we don't need
            remove_field => [ "[ua][minor]","[ua][major]","[ua][build]","[ua][patch]","[ua][os_minor]","[ua][os_major]" ]
        }
    }
    output {
        #stdout { codec => rubydebug }
        elasticsearch {
            hosts => ["127.0.0.1:{es_listen_port}"]
            index => "logstash_20220721"
        }
    }
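A detail worth knowing in the geoip block: calling `add_field` twice on the same field makes `[geoip][location]` an array, in the order longitude then latitude, and the later `mutate` converts both entries to float. That order matches the `[lon, lat]` array form Elasticsearch accepts for a `geo_point` (the field still has to be mapped as `geo_point` to be used on a map). The Python sketch below just emulates those two steps; `geoip_location` is a hypothetical name and the coordinates are made up.

```python
def geoip_location(geoip: dict) -> list[float]:
    """Emulate the two add_field calls plus mutate convert on [geoip][location]."""
    # add_field "%{[geoip][longitude]}" then "%{[geoip][latitude]}":
    # sprintf yields strings, and the second add turns the field into an array
    location = [str(geoip["longitude"]), str(geoip["latitude"])]
    # mutate { convert => [ "[geoip][location]", "float" ] }
    return [float(v) for v in location]


if __name__ == "__main__":
    print(geoip_location({"longitude": 121.47, "latitude": 31.23}))
```

Swapping the two `add_field` lines would silently put the points in the wrong place on the map, so the order matters.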

---------------------------------------------- Rant: goaccess

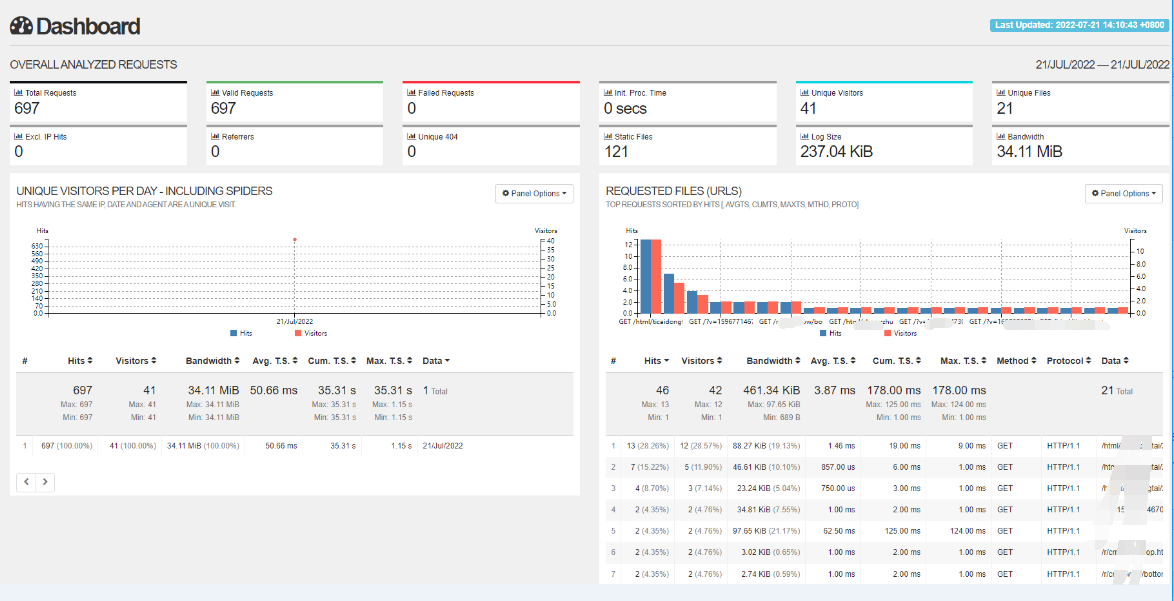

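Finally, a word about something a bit annoying: goaccess, a small tool for analyzing nginx logs. It is genuinely convenient, though I haven't tested how efficiently it handles large files.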

(1) nginx log format:

    log_format test '$server_name $remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for" '
                    '$upstream_addr $request_time $upstream_response_time';

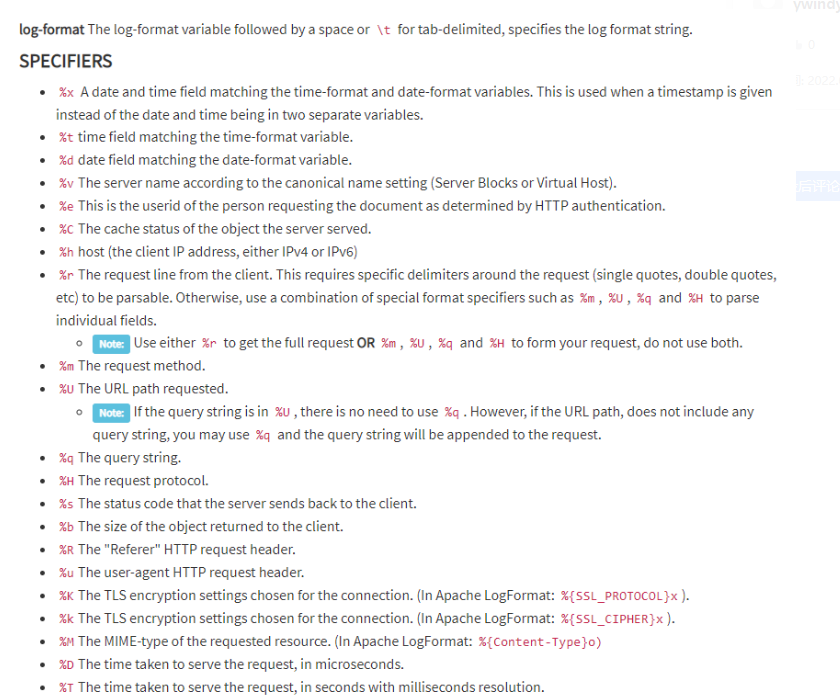

(2) goaccess configuration:

log-format %^ %h %^ %^ [%d:%t %^] "%r" %s %b "%R" "%u" "%^" %^ %T %^

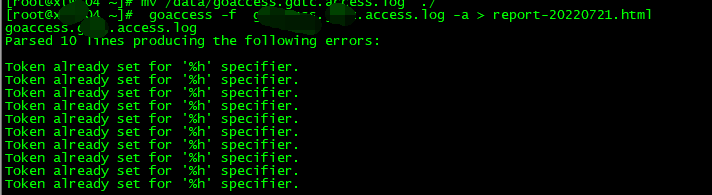
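To double-check how the goaccess log-format line lines up with `log_format test`, here is a Python sketch that splits a line in that format with a regex and labels each field with the goaccess specifier that consumes it. The regex is my own reconstruction of the nginx format, the sample line is made up, and it assumes `$upstream_addr` holds a single address (with several upstreams nginx writes a comma-separated list, which this regex would reject).

```python
import re

# Regex mirroring `log_format test`; group names are the nginx variables.
TEST_FORMAT = re.compile(
    r'^(?P<server_name>\S+) (?P<remote_addr>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<time_local>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<body_bytes_sent>\d+) "(?P<http_referer>[^"]*)" '
    r'"(?P<http_user_agent>[^"]*)" "(?P<http_x_forwarded_for>[^"]*)" '
    r'(?P<upstream_addr>\S+) (?P<request_time>\S+) (?P<upstream_response_time>\S+)$'
)

# goaccess specifier that consumes each field in:
# log-format %^ %h %^ %^ [%d:%t %^] "%r" %s %b "%R" "%u" "%^" %^ %T %^
GOACCESS_SPEC = {
    "server_name": "%^", "remote_addr": "%h", "remote_user": "%^",
    "time_local": "%d:%t %^", "request": "%r", "status": "%s",
    "body_bytes_sent": "%b", "http_referer": "%R", "http_user_agent": "%u",
    "http_x_forwarded_for": "%^", "upstream_addr": "%^",
    "request_time": "%T", "upstream_response_time": "%^",
}

# Hypothetical sample line in the `test` format.
SAMPLE = ('example.com 10.0.0.254 - - [21/Jul/2022:10:00:00 +0800] '
          '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.79.1" '
          '"203.0.113.7" 127.0.0.1:8080 0.012 0.010')


def explain(line: str) -> dict:
    """Map each nginx variable to (goaccess specifier, value in this line)."""
    m = TEST_FORMAT.match(line)
    if m is None:
        raise ValueError("line does not match log_format test")
    return {name: (GOACCESS_SPEC[name], value) for name, value in m.groupdict().items()}


if __name__ == "__main__":
    for name, (spec, value) in explain(SAMPLE).items():
        print(f"{spec:10} {name:24} {value}")
```

Laid out this way, `%h` lands on `$remote_addr`. Behind a firewall that is the firewall's address, while `$http_x_forwarded_for` is skipped with `%^`.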

(3) Run:

goaccess -f access.log -a > report-20220721.html

This produces a nice HTML report:

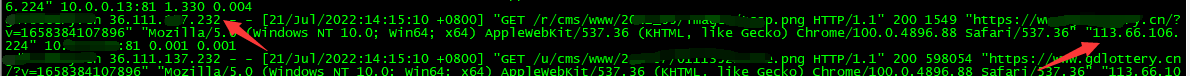

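You'll notice that the "client IPs" in the report are actually all the firewall's address. What I want is the real client IP, which I normally get from the built-in nginx variable $http_x_forwarded_for, or from $real_remote_addr (defined by the map block in section II). However, goaccess doesn't seem to let me define the field I want.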

Following references like the table below, I tried various matches, but kept getting errors:

Honestly, as far as the log output goes, $remote_addr and $http_x_forwarded_for both print as IP addresses, yet matching $http_x_forwarded_for with %h just doesn't work.

As for why, I have no idea = =. It's a mystery; time to wash up and go to bed.
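One untested guess, in case it helps: goaccess documents `%h` as the client IP address (IPv4 or IPv6), so it presumably expects exactly one address in that position. $http_x_forwarded_for is not always that. nginx writes `-` when the header is missing, and after several proxies the header holds a comma-separated chain. The Python sketch below uses a hypothetical helper `is_single_ip` built on the standard `ipaddress` module to show which typical values would fail such a check. It does not reproduce goaccess's actual parser.

```python
import ipaddress


def is_single_ip(value: str) -> bool:
    """True if value is exactly one valid IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


if __name__ == "__main__":
    # Typical $http_x_forwarded_for values as they appear in an nginx log
    for xff in ["203.0.113.7", "-", "203.0.113.7, 10.0.0.254", "2001:db8::1"]:
        print(repr(xff), "->", is_single_ip(xff))
```

If that guess is right, the `-` lines and multi-hop chains would make `%h` on the XFF column fail, even though most lines look like plain IPs.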