Pitfalls encountered when building wenet on the RK3588 arm64 development board

Step 1: Build pytorch manually

wenet uses libtorch 1.10.0, so pytorch 1.10.0 has to be downloaded and built from source. The build followed https://lijingle.com/thread-33-1-1.html and https://icode.best/i/22625544885021

    git clone -b v1.10.0 https://github.com/pytorch/pytorch.git
    # Install this beforehand if the build complains that the package is missing
    pip install typing-extensions
    # Do not build CUDA
    export USE_CUDA=False
    # Saves build time
    export BUILD_TEST=False
    # MKLDNN is an acceleration library for Intel CPUs, so it can be skipped here
    export USE_MKLDNN=False
    # NNPACK and QNNPACK are used for quantized inference. Some tutorials set
    # USE_NNPACK and USE_QNNPACK to False, which makes wenet fail when loading
    # a model, especially a quantized model.
    export USE_NNPACK=True
    export USE_QNNPACK=True
    # Number of parallel build jobs; can be increased as appropriate. If it is
    # unset or set too high, the build fails with errors such as
    # "Waiting for unfinished jobs" and "Killed signal terminated program cc1plus".
    export MAX_JOBS=1
    cd pytorch
    sudo -E python setup.py install

The following error appears when memory runs out or MAX_JOBS is set too high. See https://github.com/pytorch/pytorch/issues/49078

    [ 73%] Building CXX object caffe2/CMakeFiles/torch_cpu.dir/__/aten/src/ATen/CPUType.cpp.o
    c++: fatal error: Killed signal terminated program cc1plus
    compilation terminated.
    make[2]: *** [caffe2/CMakeFiles/torch_cpu.dir/build.make:3717: caffe2/CMakeFiles/torch_cpu.dir/__/aten/src/ATen/CPUType.cpp.o] Error 1
    make[1]: *** [CMakeFiles/Makefile2:2646: caffe2/CMakeFiles/torch_cpu.dir/all] Error 2
    make: *** [Makefile:141: all] Error 2
    Traceback (most recent call last):
      File "setup.py", line 724, in <module>
        build_deps()
      File "setup.py", line 312, in build_deps
        build_caffe2(version=version,
      File "/home/kuma/build/pytorch/tools/build_pytorch_libs.py", line 62, in build_caffe2
        cmake.build(my_env)
      File "/home/kuma/build/pytorch/tools/setup_helpers/cmake.py", line 346, in build
        self.run(build_args, my_env)
      File "/home/kuma/build/pytorch/tools/setup_helpers/cmake.py", line 141, in run
        check_call(command, cwd=self.build_dir, env=env)
      File "/usr/lib/python3.8/subprocess.py", line 364, in check_call
        raise CalledProcessError(retcode, cmd)
    subprocess.CalledProcessError: Command '['cmake', '--build', '.', '--target', 'install', '--config', 'Release', '--', '-j', '1']' returned non-zero exit status 2.
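Since the failure above comes from running out of memory, one way to pick a MAX_JOBS value is to derive it from the memory currently available. The following is only a rough sketch: the function names are made up for illustration, and the budget of 2 GiB per compile job is an assumption rather than a measured figure for the RK3588, so adjust it to what the board actually tolerates.

```python
import os
import re


def mem_available_kib(meminfo_text):
    """Return the MemAvailable value (in KiB) from /proc/meminfo text, or None."""
    match = re.search(r"^MemAvailable:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def suggest_max_jobs(meminfo_text, cpu_count, gib_per_job=2.0):
    """Suggest a MAX_JOBS value bounded by both CPU count and available memory.

    gib_per_job is an ASSUMED memory budget per compiler process, not a
    figure taken from the pytorch build system.
    """
    available = mem_available_kib(meminfo_text)
    if available is None:
        return 1  # unknown memory: fall back to the safest setting
    by_memory = int(available / (gib_per_job * 1024 * 1024))
    return max(1, min(cpu_count, by_memory))


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        jobs = suggest_max_jobs(f.read(), os.cpu_count() or 1)
    print(f"export MAX_JOBS={jobs}")
```

On the board, the printed line can be pasted into the shell before running setup.py. If the build is still killed, lower the value or add swap.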

- Test whether libtorch was built successfully.

Create example-app.cpp and CMakeLists.txt in the same folder, then run the following command in a shell:

mkdir build && cd build && cmake .. && make

If it compiles without errors, the build succeeded.

example-app.cpp is as follows:

    #include <torch/script.h> // One-stop header.

    #include <iostream>
    #include <memory>
    #include <vector>

    int main(int argc, const char* argv[]) {
      if (argc != 2) {
        std::cerr << "usage: example-app <path-to-exported-script-module>\n";
        return -1;
      }

      torch::jit::script::Module module;
      try {
        // Deserialize the ScriptModule from a file using torch::jit::load().
        module = torch::jit::load(argv[1]);
      } catch (const c10::Error& e) {
        std::cerr << "error loading the model\n";
        return -1;
      }
      std::cout << "Load model ok\n";

      // Test the model with an all-ones input of shape 1x1x30x30.
      std::vector<torch::jit::IValue> inputs;
      inputs.push_back(torch::ones({1, 1, 30, 30}));
      at::Tensor output = module.forward(inputs).toTensor();
      // Print the first five entries along dimension 1.
      std::cout << output.slice(/*dim=*/1, /*start=*/0, /*end=*/5) << '\n';
    }

CMakeLists.txt is as follows:

    cmake_minimum_required(VERSION 3.0 FATAL_ERROR)
    project(custom_ops)

    # Directory where torch has been installed
    set(Torch_DIR "/home/firefly/pytorch/torch")
    find_package(Torch REQUIRED PATHS ${Torch_DIR} NO_DEFAULT_PATH)

    add_executable(example-app example-app.cpp)
    target_link_libraries(example-app "${TORCH_LIBRARIES}")
    set_property(TARGET example-app PROPERTY CXX_STANDARD 14)

- Test pytorch. Run the following in a shell; if no error is reported, the build succeeded.

    python
    import torch
    torch.__version__

Step 2: Build wenet

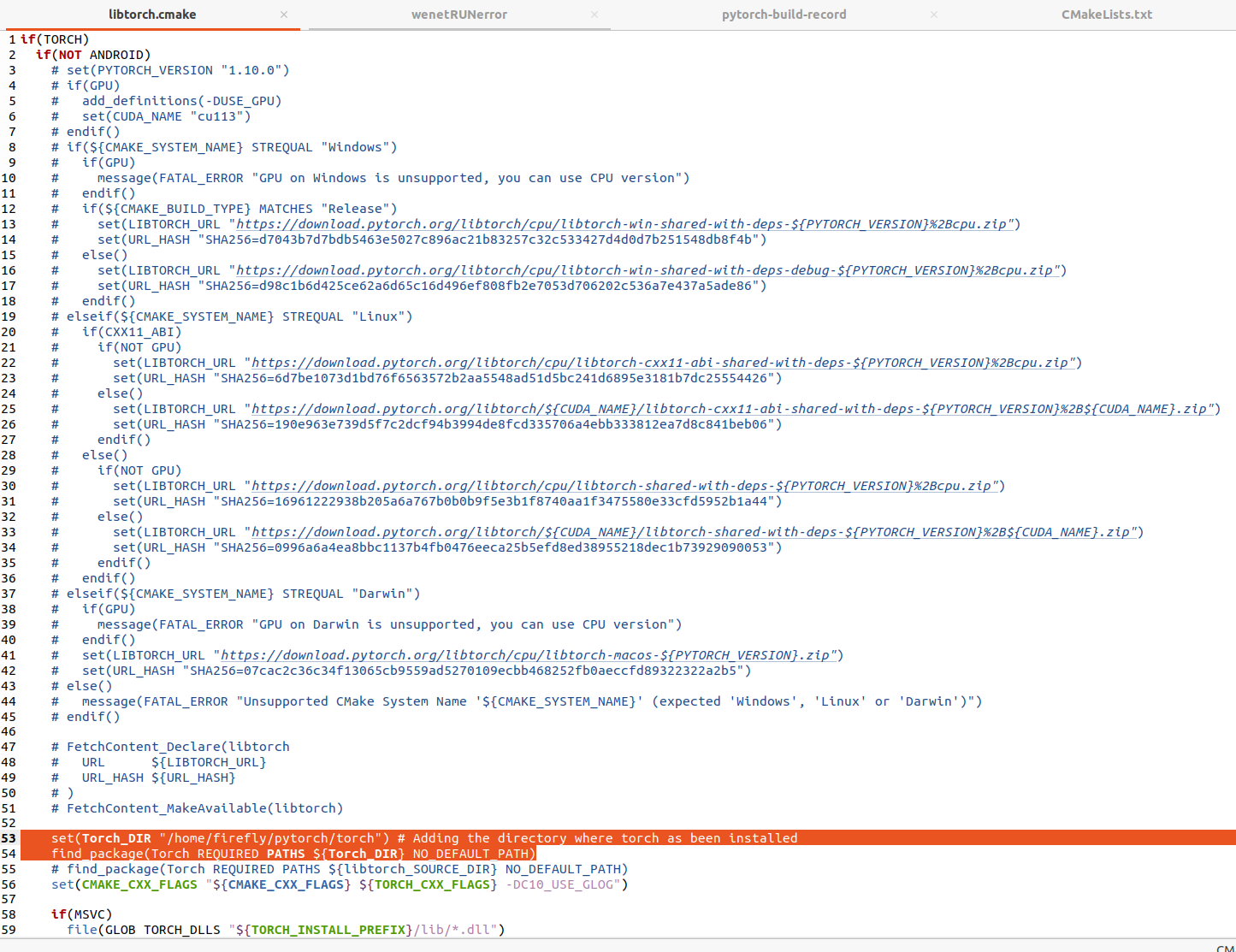

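1) Modify libtorch.cmake in the cmake folder

The original wenet build downloads libtorch; here it is changed to use the pytorch built in Step 1.

Comment out the libtorch download section and add the following code:

    # Change this to your pytorch installation path
    set(Torch_DIR "your_path/pytorch/torch")
    find_package(Torch REQUIRED PATHS ${Torch_DIR} NO_DEFAULT_PATH)

As shown in the figure below: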

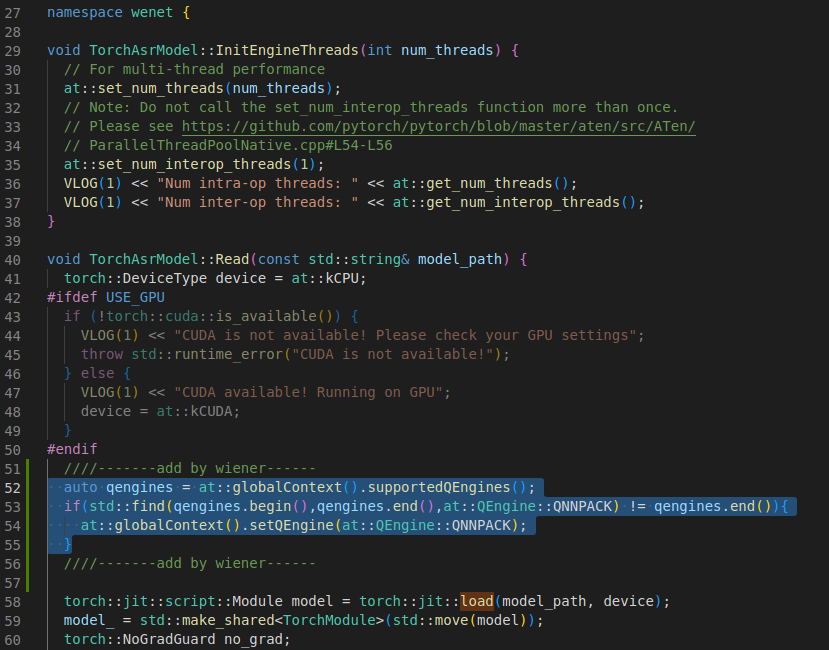

2) Modify torch_asr_model.cc (in the runtime/core/ folder)

Before the model is loaded with torch::jit::load(model_path, device), add the following code:

    // Select QNNPACK as the quantized engine if this build supports it.
    auto qengines = at::globalContext().supportedQEngines();
    if (std::find(qengines.begin(), qengines.end(), at::QEngine::QNNPACK) !=
        qengines.end()) {
      at::globalContext().setQEngine(at::QEngine::QNNPACK);
    }

As shown in the figure below:

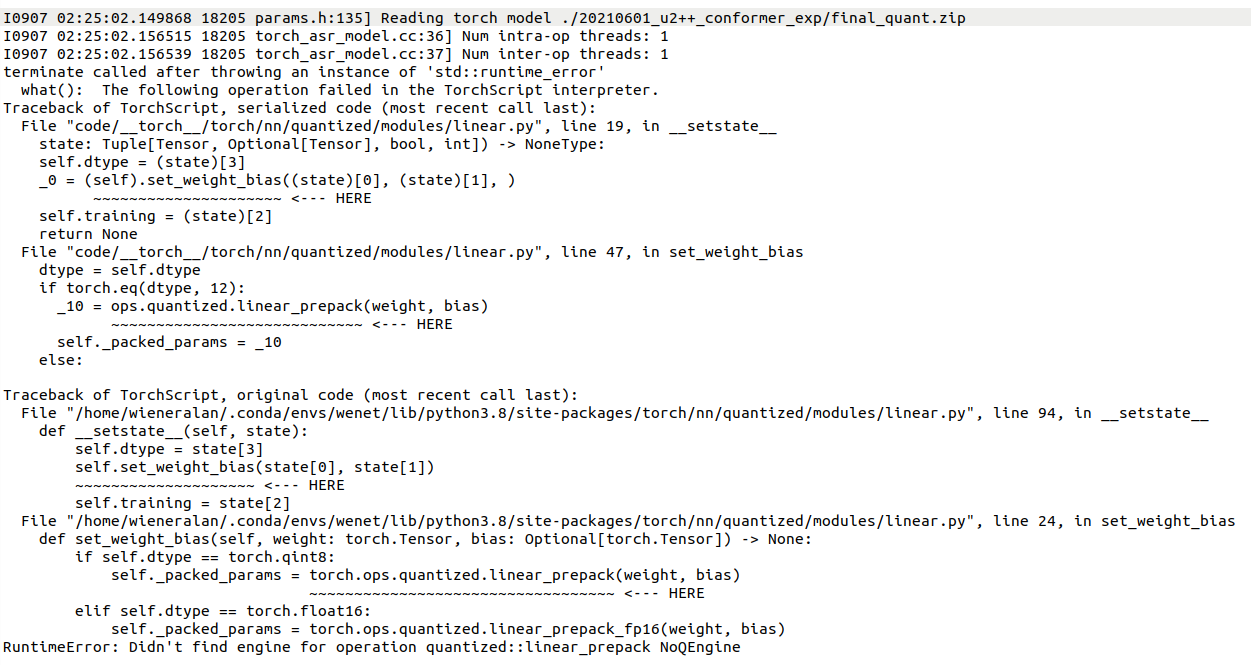

Otherwise the following error occurs: RuntimeError: Didn't find engine for operation quantized::linear_prepack NoQEngine
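Before rebuilding wenet, it can be useful to confirm from Python that the pytorch build from Step 1 actually includes QNNPACK. The sketch below mirrors the selection logic of the C++ snippet above; `choose_qengine` is a hypothetical helper written for this note, while `torch.backends.quantized.supported_engines` and `torch.backends.quantized.engine` are the Python-side counterparts of the C++ calls. The torch import is guarded so the helper can be read and tested without torch installed.

```python
def choose_qengine(supported, preferred="qnnpack"):
    """Return the preferred quantized engine if the build supports it, else None."""
    return preferred if preferred in supported else None


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed in this Python environment")
    else:
        supported = torch.backends.quantized.supported_engines
        print("supported quantized engines:", supported)
        engine = choose_qengine(supported)
        if engine is None:
            # Likely built with USE_QNNPACK=False; quantized models will not load.
            print("QNNPACK is missing from this build")
        else:
            torch.backends.quantized.engine = engine
            print("quantized engine set to", torch.backends.quantized.engine)
```

If the list printed on the board does not contain qnnpack, the build flags from Step 1 are the first thing to recheck.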

3) Build in the wenet/runtime/libtorch folder

mkdir build && cd build && cmake .. && cmake --build .

Some other notes

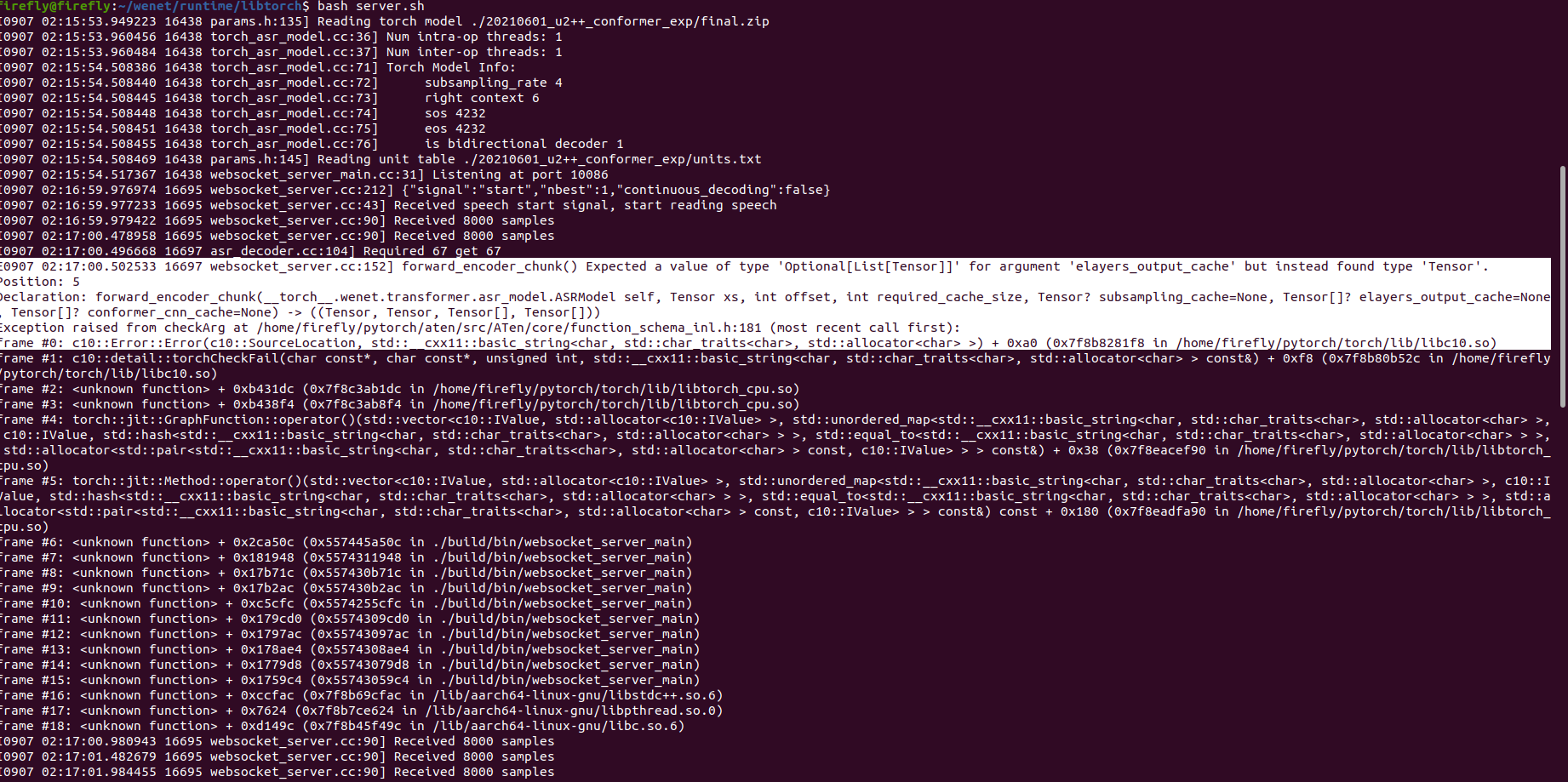

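Note: download the latest model here. Models from older releases, such as those published for wenet-1.0.1, fail to be recognized, with an error similar to the one below.

- The following errors were encountered while building pytorch 1.12.0 and are unrelated to the pytorch 1.10.0 build above.

Fix for the following error when building pytorch 1.12.0: at each source location where the error is reported, change `nullptr /* tp_print */` to `0 /* tp_print */`. See https://github.com/pytorch/pytorch/issues/28060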

    /home/xxx/driver/pytorch/torch/csrc/Layout.cpp:69:1: error: cannot convert ‘std::nullptr_t’ to ‘Py_ssize_t {aka long int}’ in initialization
     };
     ^
    caffe2/torch/CMakeFiles/torch_python.dir/build.make:350: recipe for target 'caffe2/torch/CMakeFiles/torch_python.dir/csrc/Layout.cpp.o' failed
    make[2]: *** [caffe2/torch/CMakeFiles/torch_python.dir/csrc/Layout.cpp.o] Error 1
    make[2]: *** Waiting for unfinished jobs....
    /home/xxx/driver/pytorch/torch/csrc/Dtype.cpp:98:1: error: cannot convert ‘std::nullptr_t’ to ‘Py_ssize_t {aka long int}’ in initialization
     };
     ^
    [ 96%] Linking CXX executable ../../../bin/TCPStoreTest
    /home/xxx/driver/pytorch/torch/csrc/Device.cpp:218:1: error: cannot convert ‘std::nullptr_t’ to ‘Py_ssize_t {aka long int}’ in initialization
     };
     ^
    /home/xxx/driver/pytorch/torch/csrc/TypeInfo.cpp:229:1: error: cannot convert ‘std::nullptr_t’ to ‘Py_ssize_t {aka long int}’ in initialization
     };
     ^
    /home/xxx/driver/pytorch/torch/csrc/TypeInfo.cpp:279:1: error: cannot convert ‘std::nullptr_t’ to ‘Py_ssize_t {aka long int}’ in initialization
     };
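Because the same tp_print change has to be made in several files (Layout.cpp, Dtype.cpp, Device.cpp and TypeInfo.cpp in the log above), it can be scripted. This is a minimal sketch, not part of the pytorch tooling: `patch_tp_print` is a hypothetical helper, and the regular expression assumes the initializer is written as `nullptr`, optionally followed by a comma, and then a `/* tp_print */` comment. Review the resulting diff before rebuilding.

```python
import re
import sys

# Matches `nullptr` followed (optionally via a comma) by a /* tp_print */ comment.
TP_PRINT = re.compile(r"\bnullptr(\s*,?\s*/\*\s*tp_print\s*\*/)")


def patch_tp_print(source):
    """Replace `nullptr` with `0` in tp_print slots.

    Returns a tuple (patched_text, number_of_replacements).
    """
    return TP_PRINT.subn(r"0\g<1>", source)


if __name__ == "__main__":
    # Usage: pass the affected .cpp files as command-line arguments.
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as f:
            original = f.read()
        patched, count = patch_tp_print(original)
        if count:
            with open(path, "w", encoding="utf-8") as f:
                f.write(patched)
        print(f"{path}: {count} replacement(s)")
```

Other `nullptr` slots such as tp_dealloc are left untouched, since only the entry tagged tp_print matches the pattern.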

For an error that libtorch 1.12.0 may report, see https://blog.csdn.net/weixin_44966641/article/details/122115544

    terminate called after throwing an instance of 'c10::Error'
      what():  isTensor() INTERNAL ASSERT FAILED at "/home/pisong/miniconda3/lib/python3.7/site-packages/torch/include/ATen/core/ivalue_inl.h":133, please report a bug to PyTorch. Expected Tensor but got Tuple
    Exception raised from toTensor at /home/pisong/miniconda3/lib/python3.7/site-packages/torch/include/ATen/core/ivalue_inl.h:133 (most recent call first):
    frame #0: c10::Error::Error(c10::SourceLocation, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 0x8c (0xffffbd05c83c in /home/pisong/miniconda3/lib/python3.7/site-packages/torch/lib/libc10.so)
    frame #1: c10::IValue::toTensor() && + 0xfc (0xaaaaaf102548 in ./build/resnet50)
    frame #2: main + 0x324 (0xaaaaaf0fe178 in ./build/resnet50)
    frame #3: __libc_start_main + 0xe8 (0xffffb90e6090 in /lib/aarch64-linux-gnu/libc.so.6)
    frame #4: <unknown function> + 0x22c34 (0xaaaaaf0fdc34 in ./build/resnet50)
    Aborted (core dumped)