2024 Data Collection and Fusion Technology Practice - Assignment 2

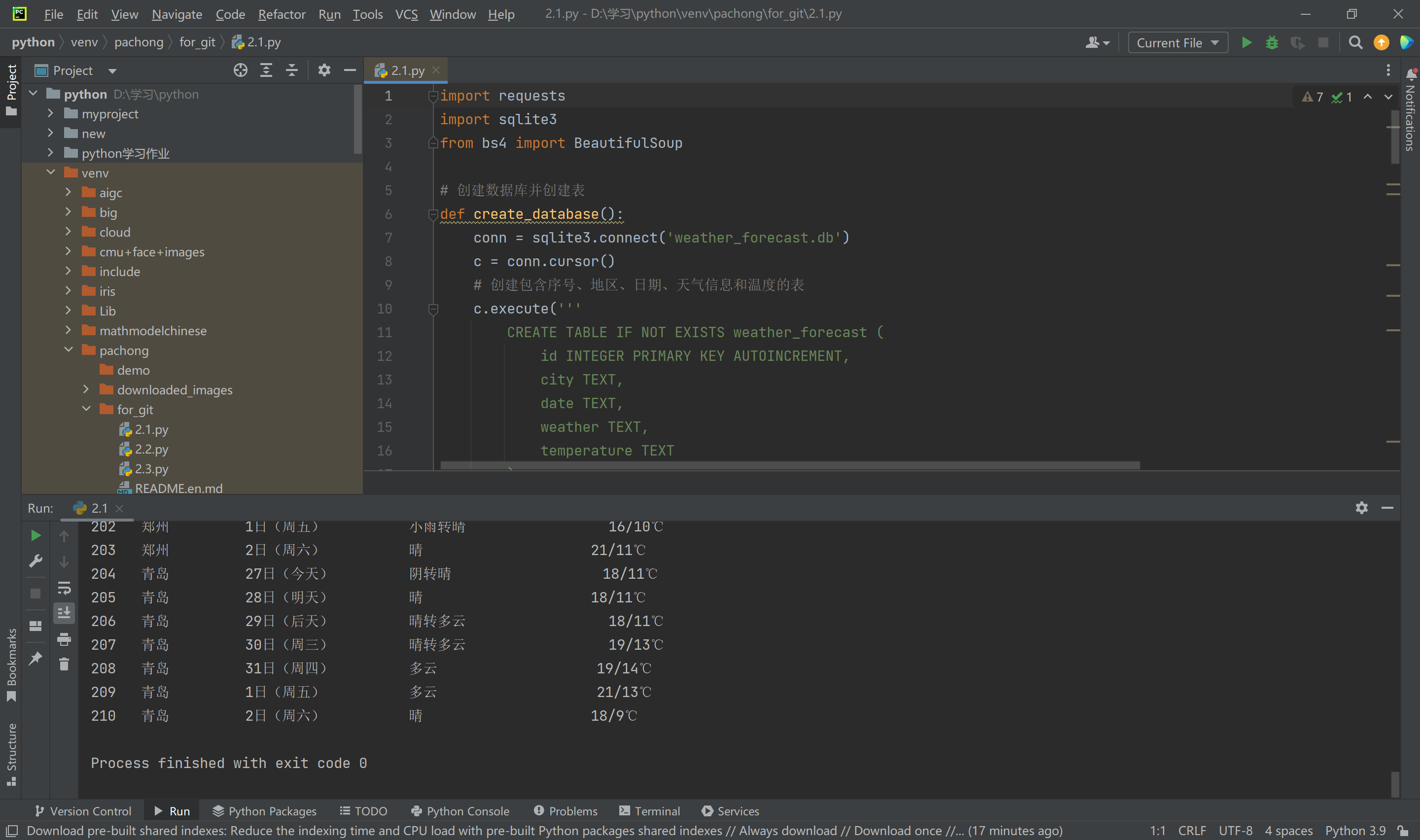

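Assignment ①

Task description

- Scrape the 7-day weather forecast for a given set of cities from the China Weather Network (weather.com.cn) and save the results to a database.

Code: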

import sqlite3

import requests
from bs4 import BeautifulSoup


# Create the database and the forecast table
def create_database():
    conn = sqlite3.connect('weather_forecast.db')
    c = conn.cursor()
    # Columns: row id, city, date, weather description, temperature
    c.execute('''
        CREATE TABLE IF NOT EXISTS weather_forecast (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            city TEXT,
            date TEXT,
            weather TEXT,
            temperature TEXT
        )
    ''')
    conn.commit()
    return conn


# Fetch the 7-day forecast for one city
def get_weather_data(city_code):
    url = f'http://www.weather.com.cn/weather/{city_code}.shtml'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36 Edg/129.0.0.0'
    }
    response = requests.get(url, headers=headers, timeout=30)
    response.encoding = 'utf-8'
    soup = BeautifulSoup(response.text, 'html.parser')

    weather_data = []
    forecast = soup.find('ul', class_='t clearfix')
    if forecast is None:  # forecast list not found, nothing to parse
        return weather_data

    for day in forecast.find_all('li'):
        date = day.find('h1').text  # date
        weather = day.find('p', class_='wea').text  # weather description
        # Either temperature tag may be missing, so fall back to ''
        high = day.find('span')
        low = day.find('i')
        temperature_high = high.text if high else ''  # daily high
        temperature_low = low.text if low else ''  # daily low
        temperature = f"{temperature_high}/{temperature_low}"  # "high/low"
        weather_data.append((date, weather, temperature))
    return weather_data


# Save one city's forecast to the database
def save_to_database(city, weather_data, conn):
    c = conn.cursor()
    for date, weather, temperature in weather_data:
        # Insert one row per forecast day
        c.execute("INSERT INTO weather_forecast (city, date, weather, temperature) VALUES (?, ?, ?, ?)",
                  (city, date, weather, temperature))
    conn.commit()


# Print the stored data as a table
def display_table(conn):
    c = conn.cursor()
    c.execute("SELECT * FROM weather_forecast")
    rows = c.fetchall()
    print(f"{'No.':<5} {'City':<10} {'Date':<15} {'Weather':<20} {'Temperature':<15}")
    for row in rows:
        print(f"{row[0]:<5} {row[1]:<10} {row[2]:<15} {row[3]:<20} {row[4]:<15}")


# Entry point
def main():
    # The given set of cities (city codes used by weather.com.cn)
    cities = {
        '北京': '101010100',
        '上海': '101020100',
        '广州': '101280101',
        '深圳': '101280601',
        '杭州': '101210101',
        '成都': '101270101',
        '武汉': '101200101',
        '南京': '101190101',
        '重庆': '101040100',
        '天津': '101030100',
        '西安': '101110101',
        '沈阳': '101070101',
        '长沙': '101250101',
        '郑州': '101180101',
        '青岛': '101120201',
    }

    # Open the database connection
    conn = create_database()

    # Scrape and save the forecast for every city
    for city, city_code in cities.items():
        weather_data = get_weather_data(city_code)
        save_to_database(city, weather_data, conn)

    # Print the table
    display_table(conn)
    conn.close()


if __name__ == '__main__':
    main()
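Because `save_to_database` issues a plain `INSERT`, running the script a second time stores every forecast row again. One possible way to avoid that, sketched here under the assumption that a (city, date) pair identifies a forecast row, is a `UNIQUE` constraint combined with `INSERT OR REPLACE`. The table name `forecast_dedup`, the helpers `create_dedup_table`, `save_forecast` and `count_rows`, and the sample rows are all made up for illustration, and the database is in-memory so the sketch does not touch `weather_forecast.db`.

```python
import sqlite3


def create_dedup_table(conn):
    # Hypothetical variant of the weather_forecast table with UNIQUE(city, date)
    conn.execute('''
        CREATE TABLE IF NOT EXISTS forecast_dedup (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            city TEXT,
            date TEXT,
            weather TEXT,
            temperature TEXT,
            UNIQUE (city, date)
        )
    ''')


def save_forecast(conn, city, weather_data):
    # On a (city, date) conflict, INSERT OR REPLACE removes the old row
    # and inserts the new one instead of adding a duplicate
    conn.executemany(
        'INSERT OR REPLACE INTO forecast_dedup (city, date, weather, temperature) '
        'VALUES (?, ?, ?, ?)',
        [(city, date, weather, temp) for date, weather, temp in weather_data],
    )
    conn.commit()


def count_rows(conn):
    return conn.execute('SELECT COUNT(*) FROM forecast_dedup').fetchone()[0]


conn = sqlite3.connect(':memory:')
create_dedup_table(conn)
# Made-up sample rows in the (date, weather, temperature) shape used above
sample = [('8日(今天)', '晴', '25℃/14℃'), ('9日(明天)', '多云', '24℃/15℃')]
save_forecast(conn, '北京', sample)
save_forecast(conn, '北京', sample)  # simulate a second run with the same data
row_count = count_rows(conn)
```

Note that `INSERT OR REPLACE` assigns a new `id` to a replaced row, so this only suits cases where the `id` column is not referenced elsewhere.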

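Output

Gitee folder link

https://gitee.com/wang-hengjie-100/crawl_project/blob/master/2.1.py

Reflections

This task let me review database operations, and I became more fluent with them while completing it. I also reviewed how to write and use classes. Most importantly, I can now write a crawler in a disciplined way that scrapes the data I want and stores it, which I found very rewarding.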

Assignment ②

Task description

- Use the requests and BeautifulSoup libraries to scrape stock information from a target site and store it in a database.

Code:

import json
import sqlite3

import requests


# Create the database and the stock table
def create_database():
    conn = sqlite3.connect('eastmoney_stock.db')
    c = conn.cursor()
    # Table that stores the stock information
    c.execute('''
        CREATE TABLE IF NOT EXISTS stock_info (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            stock_code TEXT,
            stock_name TEXT,
            latest_price REAL,
            change_percent REAL,
            change_amount REAL,
            volume TEXT,
            turnover TEXT,
            amplitude REAL,
            high REAL,
            low REAL,
            open_price REAL,
            yesterday_close REAL
        )
    ''')
    conn.commit()
    return conn


# Fetch the stock data
def get_stock_data():
    url = 'https://push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409840494931556277_1633338445629&pn=1&pz=10&po=1&np=1&fltt=2&invt=2&fid=f3&fs=b:MK0021&fields=f12,f14,f2,f3,f4,f5,f6,f7,f8,f9,f10,f11,f18,f15,f16,f17,f23'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36 Edg/129.0.0.0'
    }
    response = requests.get(url, headers=headers, timeout=30)
    # Strip the JSONP wrapper "callback( ... )" and keep the JSON payload
    response_text = response.text.split('(', 1)[1].rsplit(')', 1)[0]
    stock_data = json.loads(response_text)['data']['diff']  # list of per-stock records
    return stock_data


# Save the data to the database
def save_to_database(stock_data, conn):
    c = conn.cursor()
    for stock in stock_data:
        stock_code = stock['f12']  # stock code
        stock_name = stock['f14']  # stock name
        latest_price = stock['f2']  # latest price
        change_percent = stock['f3']  # change (%)
        change_amount = stock['f4']  # change (amount)
        volume = stock['f5']  # volume
        turnover = stock['f6']  # turnover
        amplitude = stock['f7']  # amplitude
        high = stock['f15']  # day high
        low = stock['f16']  # day low
        open_price = stock['f17']  # open
        yesterday_close = stock['f18']  # previous close
        # Insert one row per stock
        c.execute('''
            INSERT INTO stock_info
            (stock_code, stock_name, latest_price, change_percent, change_amount, volume, turnover, amplitude, high, low, open_price, yesterday_close)
            VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)
        ''', (
            stock_code, stock_name, latest_price, change_percent, change_amount, volume, turnover, amplitude, high, low,
            open_price, yesterday_close))
    conn.commit()


# Convert a stored field to float; '-' and None are shown as 0.0
def to_float(value):
    return float(value) if value not in ('-', None) else 0.0


# Print the stored data as a table
def display_table(conn):
    c = conn.cursor()
    c.execute("SELECT * FROM stock_info")
    rows = c.fetchall()
    # Header row
    print(
        f"{'No.':<5} {'Code':<10} {'Name':<10} {'Latest':<10} {'Change %':<10} {'Change':<10} {'Volume':<10} {'Turnover':<15} {'Amplitude':<10} {'High':<10} {'Low':<10} {'Open':<10} {'Prev close':<10}")
    # Data rows
    for row in rows:
        latest_price = to_float(row[3])
        change_percent = to_float(row[4])
        change_amount = to_float(row[5])
        amplitude = to_float(row[8])
        high = to_float(row[9])
        low = to_float(row[10])
        open_price = to_float(row[11])
        yesterday_close = to_float(row[12])
        print(f"{row[0]:<5} {row[1]:<10} {row[2]:<10} "
              f"{latest_price:<10.2f} {change_percent:<10.2f} {change_amount:<10.2f} "
              f"{row[6]:<10} {row[7]:<15} {amplitude:<10.2f} {high:<10.2f} "
              f"{low:<10.2f} {open_price:<10.2f} {yesterday_close:<10.2f}")


# Entry point
def main():
    # Open the database connection
    conn = create_database()
    # Fetch the stock data
    stock_data = get_stock_data()
    # Save it to the database
    save_to_database(stock_data, conn)
    # Print the table
    display_table(conn)
    conn.close()


if __name__ == '__main__':
    main()
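The `split('(', 1)` / `rsplit(')', 1)` step in `get_stock_data` works for the response shape seen here, but it silently produces garbage if the server ever returns something that is not wrapped in a callback. A slightly more defensive version might look like the sketch below. The helpers `unwrap_jsonp` and `to_number` and the sample string are hypothetical; only the field names `f12`, `f2` and `f3` and the `'-'` placeholder come from the code above.

```python
import json
import re


def unwrap_jsonp(text):
    # Expect: callback_name( <json> ) with an optional trailing semicolon
    match = re.match(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', text, re.DOTALL)
    if match is None:
        raise ValueError('response is not in JSONP form')
    return json.loads(match.group(1))


def to_number(value):
    # '-' and None mean "no value"; keep them as None instead of 0.0
    if value in ('-', None):
        return None
    return float(value)


# Made-up sample in the same shape as the API response used above
sample = 'jQuery123_456({"data": {"diff": [{"f12": "000001", "f2": 10.5, "f3": "-"}]}});'
payload = unwrap_jsonp(sample)
first = payload['data']['diff'][0]
latest_price = to_number(first['f2'])
change_percent = to_number(first['f3'])
```

Returning `None` rather than `0.0` for a missing value keeps "no quote" distinguishable from a real price of zero; SQLite stores `None` as `NULL`.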

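Output

Gitee folder link

https://gitee.com/wang-hengjie-100/crawl_project/blob/master/2.2.py

Reflections

Following the stock-scraping tutorial provided by the instructor, I learned how to capture a page's network requests and find the fields I need. While doing the assignment I gradually became able to extract data from a page precisely, and to fetch the required data accurately and quickly even from sites with large amounts of data.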
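The captured request in `get_stock_data` only asks for one page of ten rows. Reading the query string, `pn` and `pz` look like the page number and page size; that interpretation is an assumption based on the parameter names, not something confirmed in this write-up. Under that assumption, URLs for further pages could be generated as in this sketch, where `build_page_url` is a hypothetical helper and every other parameter is copied from the URL above.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE_URL = 'https://push2.eastmoney.com/api/qt/clist/get'


def build_page_url(page, page_size=10):
    params = {
        'cb': 'jQuery112409840494931556277_1633338445629',
        'pn': page,       # assumed meaning: page number
        'pz': page_size,  # assumed meaning: rows per page
        'po': 1,
        'np': 1,
        'fltt': 2,
        'invt': 2,
        'fid': 'f3',
        'fs': 'b:MK0021',
        'fields': 'f12,f14,f2,f3,f4,f5,f6,f7,f8,f9,f10,f11,f18,f15,f16,f17,f23',
    }
    # Keep ':' and ',' unescaped so the URL matches the captured one
    return BASE_URL + '?' + urlencode(params, safe=':,')


urls = [build_page_url(page) for page in range(1, 4)]
second_page_query = parse_qs(urlsplit(urls[1]).query)
```

Each generated URL can then be fetched and unwrapped in the same way as the single request in `get_stock_data`.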

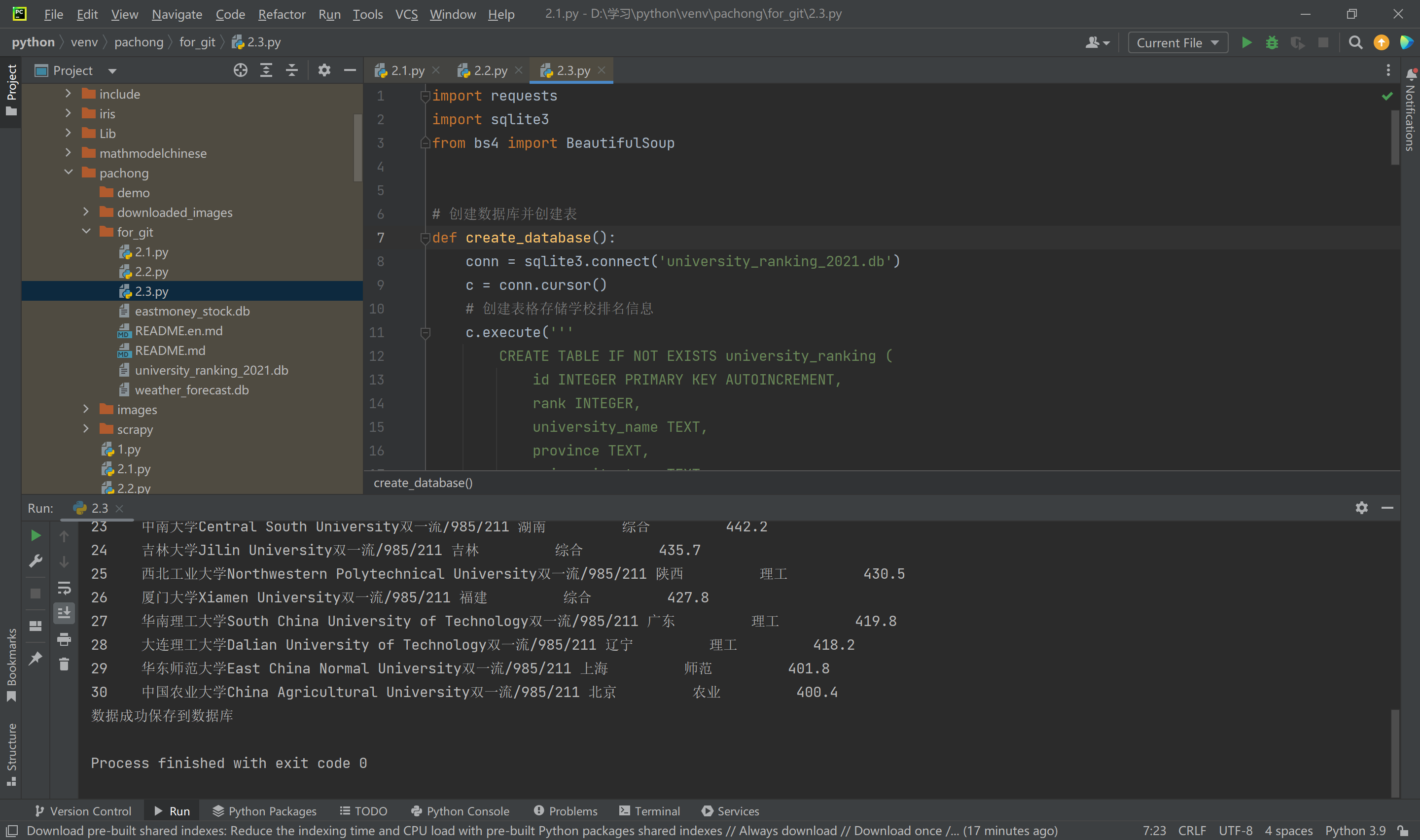

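Assignment ③

Task description

- Scrape the information for all institutions in the 2021 main ranking of Chinese universities (https://www.shanghairanking.cn/rankings/bcur/2021) and store it in a database. Also record the browser F12 debugging analysis as a GIF and add it to the blog post.

Code: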

import sqlite3

import requests
from bs4 import BeautifulSoup


# Create the database and the ranking table
def create_database():
    conn = sqlite3.connect('university_ranking_2021.db')
    c = conn.cursor()
    # Table that stores the university ranking
    c.execute('''
        CREATE TABLE IF NOT EXISTS university_ranking (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            rank INTEGER,
            university_name TEXT,
            province TEXT,
            university_type TEXT,
            total_score REAL
        )
    ''')
    conn.commit()
    return conn


# Download the page
def get_html(url):
    try:
        response = requests.get(url, timeout=30)
        response.raise_for_status()
        response.encoding = 'utf-8'
        return response.text
    except requests.RequestException as e:
        print(f"Request error: {e}")
        return None


# Parse the page and extract the ranking rows
def parse_ranking_table(html):
    soup = BeautifulSoup(html, 'html.parser')
    ranking_table = soup.find('table', class_='rk-table')
    if not ranking_table:
        print("Ranking table not found")
        return []

    rows = ranking_table.find_all('tr')[1:]  # skip the header row
    university_data = []

    # Header row
    print(f"{'Rank':<5} {'University':<20} {'Province':<10} {'Type':<10} {'Score':<10}")

    for row in rows:
        columns = row.find_all('td')
        if len(columns) >= 5:
            rank = int(columns[0].get_text(strip=True))
            university_name = columns[1].get_text(strip=True)
            province = columns[2].get_text(strip=True)
            university_type = columns[3].get_text(strip=True)
            total_score = float(columns[4].get_text(strip=True))

            # Collect one tuple per university
            university_data.append((rank, university_name, province, university_type, total_score))

            # Print each university to the console
            print(f"{rank:<5} {university_name:<20} {province:<10} {university_type:<10} {total_score:<10.1f}")

    return university_data


# Save the data to the database
def save_to_database(university_data, conn):
    c = conn.cursor()
    for university in university_data:
        c.execute('''
            INSERT INTO university_ranking
            (rank, university_name, province, university_type, total_score)
            VALUES (?, ?, ?, ?, ?)
        ''', university)
    conn.commit()


# Entry point
def main():
    url = 'https://www.shanghairanking.cn/rankings/bcur/2021'
    html_content = get_html(url)
    if html_content:
        university_data = parse_ranking_table(html_content)
        if university_data:
            conn = create_database()
            save_to_database(university_data, conn)
            conn.close()
            print("Data saved to the database")


if __name__ == '__main__':
    main()
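The table printed by `parse_ranking_table` pads columns with format specs such as `:<20`, which count characters rather than terminal columns. Chinese university names and provinces are usually rendered two columns wide per character, so the printed columns drift out of alignment. A width-aware padding helper is one way around this; the sketch below uses only the standard library, `display_width` and `pad` are hypothetical names, and the sample row is made up for illustration.

```python
import unicodedata


def display_width(text):
    # Wide (W) and fullwidth (F) characters occupy two terminal columns
    return sum(2 if unicodedata.east_asian_width(ch) in ('W', 'F') else 1
               for ch in text)


def pad(value, width):
    # Left-align to a target display width instead of a character count
    text = str(value)
    return text + ' ' * max(0, width - display_width(text))


# Made-up sample row in the (rank, name, province, type, score) shape used above
line = pad(1, 5) + pad('示例大学', 20) + pad('北京', 10) + pad('综合', 10) + '900.0'
name_cell = pad('示例大学', 20)
```

The same helper would apply to the weather and stock tables, whose city names, weather descriptions and stock names are also Chinese text.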

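Output

Gitee folder link

https://gitee.com/wang-hengjie-100/crawl_project/blob/master/2.3.py

Reflections

I had already completed this task before. As with the other tasks, it let me review database operations: I modified my earlier code and added a step that stores the results in a database, so the data is now saved locally.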
