Introduction to Web Crawlers

The following are my reading notes on 《用python写网络爬虫》 (Web Scraping with Python):

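I. Background Research

1. Check the robots.txt file. Appending /robots.txt to the root URL of the site you want to crawl shows which restrictions the site places on crawlers.

Below is a typical robots.txt file, taken from the site http://example.webscraping.com/: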

    # section 1
    User-agent: BadCrawler
    Disallow: /

    # section 2
    User-agent: *
    Crawl-delay: 5
    Disallow: /trap

    # section 3
    Sitemap: http://example.webscraping.com/sitemap.xml

The Sitemap entry points to the site map, which we can open in a browser to inspect. The site map provides links to all of the site's pages.

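2. Identify the technology the site uses

If we know which technologies a site is built with before we crawl it, we can scrape its data more effectively and more quickly.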

(1) Open cmd and change to the directory where Python is installed

(2) Run pip install builtwith

(3) Test

    import builtwith

    # test builtwith
    builtwith.parse("http://www.zhipin.com")

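3. Find the site's owner

We can use the whois module to look up who registered a domain.

(1) Open cmd and change to the directory where Python is installed

(2) Run pip install python-whois

(3) Test

    import whois

    # test the whois module
    whois.whois("www.zhipin.com")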

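4. Write the first web crawler

(1) Three common ways to crawl a site:

a. Crawl the site map

b. Iterate over the database ID of each page

c. Follow page links

(2) Retrying downloads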

    import urllib2


    def download(url, times=2):
        '''
        download the html page from the url, and then return the html
        :param url: the url to download
        :param times: how many times to retry after a 5xx error
        :return: html, or None if the download failed
        '''
        print "download : ", url
        try:
            html = urllib2.urlopen(url).read()
        except urllib2.URLError as e:
            print "download error : ", e.reason
            html = None
            if times > 0:
                if hasattr(e, "code") and 500 <= e.code < 600:
                    # if the error is a server error, try it again
                    return download(url, times - 1)
        return html
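The retry rule is easier to see when the network call is replaced by a stand-in. The sketch below is a Python 3 adaptation (urllib.request and urllib.error take the place of urllib2). The `fetch` parameter and the `make_flaky_fetch` helper are assumptions added here so the logic can be exercised offline; they are not part of the function in the notes.

```python
import urllib.error
import urllib.request


def fetch_url(url):
    """Default fetcher: perform a real HTTP request and return the body."""
    with urllib.request.urlopen(url) as response:
        return response.read()


def download(url, times=2, fetch=fetch_url):
    """Return the page body, retrying up to `times` times on 5xx errors."""
    try:
        return fetch(url)
    except urllib.error.URLError as e:
        code = getattr(e, "code", None)  # only HTTPError carries a status code
        if times > 0 and code is not None and 500 <= code < 600:
            return download(url, times - 1, fetch)
        return None


def make_flaky_fetch(failures, code):
    """Hypothetical helper: fail `failures` times with `code`, then succeed."""
    calls = []

    def fetch(url):
        calls.append(url)
        if len(calls) <= failures:
            raise urllib.error.HTTPError(url, code, "error", None, None)
        return b"<html>ok</html>"

    return fetch, calls
```

With a fetcher that fails twice with 503 and then succeeds, `download(url, 2, fetch)` makes three calls and returns the body. A 404 is not retried, because only 5xx errors suggest a temporary server-side problem.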

(2) Setting the user agent

By default, urllib2 uses Python-urllib/2.7 as its user agent. To reduce the risk of being blocked, we should set our own user agent.

    import urllib2
    import re


    def download(url, user_agent="wuyanjing", times=2):
        '''
        download the html page from the url, and then return the html
        it catches the download error, sets the user-agent and tries to
        download again when a 5xx error occurs
        :param url: the url which you want to download
        :param user_agent: set the user_agent
        :param times: set the repeat times when a 5xx error occurs
        :return html: the html of the url page
        '''
        print "download : ", url
        headers = {"User-agent": user_agent}
        request = urllib2.Request(url, headers=headers)
        try:
            html = urllib2.urlopen(request).read()
        except urllib2.URLError as e:
            print "download error : ", e.reason
            html = None
            if times > 0:
                if hasattr(e, "code") and 500 <= e.code < 600:
                    # if the error is a server error, try it again;
                    # user_agent is passed along so times lands in the right argument
                    return download(url, user_agent, times - 1)
        return html
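To confirm that the header is attached before any request is sent, a Request object can be built and inspected offline. This is a small Python 3 sketch (urllib.request stands in for urllib2); `build_request` is a hypothetical helper name, not something from the notes.

```python
import urllib.request


def build_request(url, user_agent="wuyanjing"):
    """Create a Request that carries a custom User-agent header."""
    return urllib.request.Request(url, headers={"User-agent": user_agent})


request = build_request("http://example.webscraping.com")
# Request stores header names in capitalized form, e.g. "User-agent"
print(request.get_header("User-agent"))
```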

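(3) Crawling with the site map

The loc tags in the site map record the URLs of the site's crawlable pages. We therefore only need to read the sitemap and pass each URL to the download function written earlier to get the HTML of the pages we want.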

    def crawl_sitemap(url):
        '''
        download all html pages listed in the sitemap loc tags
        :param url: the url of the sitemap
        :return: None
        '''
        # download the sitemap
        sitemap = download(url)
        if sitemap is None:
            # the sitemap itself could not be downloaded
            return
        # find all page links in the sitemap
        links = re.findall("<loc>(.*?)</loc>", sitemap)
        for link in links:
            html = download(link)

I tested this against Meituan's site map.
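The extraction step can be tried without downloading anything. The sketch below (Python 3) applies the same regular expression to a small in-memory sitemap; the sample document and its two page URLs are made up for illustration.

```python
import re

# Assumed sample: a tiny sitemap in the usual sitemaps.org layout
SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://example.webscraping.com/view/1</loc></url>
  <url><loc>http://example.webscraping.com/view/2</loc></url>
</urlset>"""


def extract_links(sitemap):
    """Return the URL inside every <loc>...</loc> element."""
    return re.findall(r"<loc>(.*?)</loc>", sitemap)


links = extract_links(SITEMAP)
for link in links:
    print(link)
```

A regular expression is enough for this simple layout; for sitemaps with unusual formatting an XML parser would likely be more robust.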

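(4) Crawling by iterating over IDs

The loc tags in the site map may not include every URL we need, so to get better coverage of the pages we are looking for we can crawl by iterating over IDs. This approach relies on the fact that some sites store pages under a database ID and accept that ID in the URL in place of the page alias, which makes crawling simpler.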

    import itertools


    def crawl_dataid(url, max_error=5):
        '''
        download the pages whose links look like url/-1, url/-2, ...
        stop once max_error page links in succession are not in the database
        :param url: the general url
        :param max_error: the max number of consecutive download errors
        :return htmls: the html of every page that was downloaded
        '''
        numerror = 0
        htmls = []
        for page in itertools.count(1):
            currentpage = url + "/-%d" % page
            html = download(currentpage)
            if html is None:
                numerror += 1
                if numerror == max_error:
                    break
            else:
                # a successful download resets the error counter
                numerror = 0
                htmls.append(html)
        return htmls

My test URL was the one given in the book.
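The role of the consecutive-error counter shows up clearly on a pretend site with a gap in its IDs. This Python 3 sketch takes the download function as a parameter so it can run offline; `crawl_ids`, `fake_download` and the set of existing IDs are illustrative assumptions.

```python
import itertools


def crawl_ids(url, download, max_error=5):
    """Fetch url/-1, url/-2, ... until max_error consecutive misses."""
    num_errors = 0
    pages = []
    for page_id in itertools.count(1):
        html = download("%s/-%d" % (url, page_id))
        if html is None:
            num_errors += 1
            if num_errors == max_error:
                break
        else:
            num_errors = 0  # a hit resets the run of misses
            pages.append(html)
    return pages


# Hypothetical site: IDs 1, 2 and 4 exist, ID 3 was deleted
EXISTING = {1, 2, 4}


def fake_download(page_url):
    """Stand-in for download(): return a body only for existing IDs."""
    page_id = int(page_url.rsplit("-", 1)[1])
    return "page %d" % page_id if page_id in EXISTING else None


print(crawl_ids("http://example.webscraping.com/view", fake_download, max_error=2))
```

With `max_error=2` the single missing ID 3 is skipped and page 4 is still found; with `max_error=1` the crawl would stop at the first gap.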

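(5) Crawling by following links

Whichever of these approaches we use, we must pause for a few seconds between requests. Otherwise the crawler may get blocked, or the server may answer with a too-many-requests error and refuse to serve more data. I ran into exactly this problem when crawling the book's test site: it would not let me fetch more than ten pages in one go.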

    import re
    import time
    import urlparse


    def crawl_link(url, link_regx):
        '''
        find all unique links in the url and its child pages
        :param url: the url which you want to search
        :param link_regx: re match pattern
        :return: None
        '''
        links = [url]
        linksset = set(links)
        while links:
            currentpage = links.pop()
            html = download(currentpage)
            # pay attention that it should sleep one second before continuing
            time.sleep(1)
            if html is None:
                # the download failed, so there is nothing to parse
                continue
            for linkson in find_html(html):
                if re.match(link_regx, linkson):
                    linkson = urlparse.urljoin(url, linkson)
                    # make sure the same link is not queued twice
                    if linkson not in linksset:
                        linksset.add(linkson)
                        links.append(linkson)


    def find_html(html):
        '''
        find all links in the html
        :param html: the html of a page
        :return: all the href values
        '''
        pattern = re.compile('<a[^>]+href=["\'](.*?)["\']', re.IGNORECASE)
        return pattern.findall(html)


    # the test code
    crawl_link("http://example.webscraping.com", '.*/(view|index)/')
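The queue-and-seen-set logic can be followed on a pretend site held in a dictionary. The Python 3 sketch below (urllib.parse replaces urlparse) uses a made-up three-page site and passes the download function in as a parameter; the pause between requests is left out only because no real requests are made.

```python
import re
from urllib.parse import urljoin

LINK_PATTERN = re.compile(r'<a[^>]+href=["\'](.*?)["\']', re.IGNORECASE)

# Hypothetical three-page site used instead of real HTTP requests
PAGES = {
    "http://example.webscraping.com/":
        '<a href="/index/1">next</a> <a href="/about">about</a>',
    "http://example.webscraping.com/index/1":
        '<a href="/view/1">one</a> <a href="/index/1">self</a>',
    "http://example.webscraping.com/view/1":
        '<a href="/index/1">back</a>',
}


def crawl_links(seed, link_regex, download):
    """Return every URL reached from seed whose href matches link_regex."""
    queue = [seed]
    seen = {seed}
    while queue:
        html = download(queue.pop())
        if html is None:
            continue
        for href in LINK_PATTERN.findall(html):
            if re.match(link_regex, href):
                link = urljoin(seed, href)  # make relative links absolute
                if link not in seen:
                    seen.add(link)
                    queue.append(link)
    return seen


found = crawl_links("http://example.webscraping.com/", r".*/(view|index)/", PAGES.get)
print(sorted(found))
```

The `/about` link is ignored because it does not match the pattern, and the self-link and back-link are dropped by the `seen` set, which is what lets the loop terminate.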

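(6) Advanced features

1. Parsing robots.txt

robots.txt names the user agents that are not allowed to access the site, so we can use robotparser to read the file and then check whether our user agent is allowed to fetch a given URL.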

    import re
    import time
    import urlparse
    import robotparser


    def crawl_link(url, link_regx, url_robots, user_agent="wuyanjing"):
        '''
        find all unique links in the url and its child pages
        :param url: the url which you want to search
        :param link_regx: re match pattern
        :param url_robots: the url of robots.txt
        :param user_agent: user-agent
        :return: None
        '''
        links = [url]
        linksset = set(links)
        # read robots.txt once, not on every iteration
        rp = robot_parse(url_robots)
        while links:
            currentpage = links.pop()
            if rp.can_fetch(user_agent, currentpage):
                html = download(currentpage)
                # pay attention that it should sleep one second before continuing
                time.sleep(1)
                if html is None:
                    continue
                for linkson in find_html(html):
                    if re.match(link_regx, linkson):
                        linkson = urlparse.urljoin(url, linkson)
                        # make sure the same link is not queued twice
                        if linkson not in linksset:
                            linksset.add(linkson)
                            links.append(linkson)
            else:
                print 'Blocked by robots.txt', currentpage


    def find_html(html):
        '''
        find all links in the html
        :param html: the html of a page
        :return: all the href values
        '''
        pattern = re.compile('<a[^>]+href=["\'](.*?)["\']', re.IGNORECASE)
        return pattern.findall(html)


    def robot_parse(url):
        '''
        parse robots.txt and read its rules
        :param url: the url of robots.txt
        :return rp: robotparser
        '''
        rp = robotparser.RobotFileParser()
        rp.set_url(url)
        rp.read()
        return rp
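What can_fetch returns for the example robots.txt from the background section can be checked without any network access. This Python 3 sketch uses urllib.robotparser (the Python 3 name of the robotparser module) and feeds it the file contents directly through parse(); the user agent name "GoodCrawler" and the /index path are assumptions chosen for the demonstration.

```python
import urllib.robotparser

# The example robots.txt shown in the background section of these notes
ROBOTS_TXT = """\
# section 1
User-agent: BadCrawler
Disallow: /

# section 2
User-agent: *
Crawl-delay: 5
Disallow: /trap

# section 3
Sitemap: http://example.webscraping.com/sitemap.xml
"""

rp = urllib.robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())  # parse from memory instead of rp.read()

base = "http://example.webscraping.com"
bad_allowed = rp.can_fetch("BadCrawler", base + "/index")
good_allowed = rp.can_fetch("GoodCrawler", base + "/index")
trap_allowed = rp.can_fetch("GoodCrawler", base + "/trap")
delay = rp.crawl_delay("GoodCrawler")
print(bad_allowed, good_allowed, trap_allowed, delay)
```

BadCrawler is refused everywhere by section 1, while any other agent falls under section 2: it may fetch ordinary pages, must stay out of /trap, and is asked to wait 5 seconds between requests.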

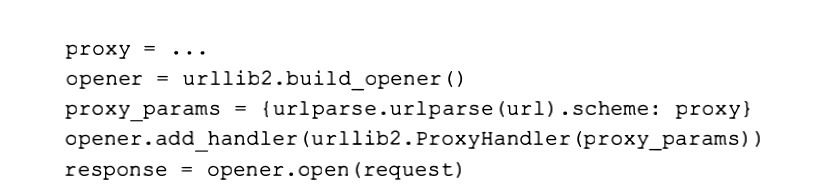

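2. Supporting proxies

Sometimes our own IP address gets blocked, and then we need to go through a proxy. From the server's point of view this gives our machine a different IP address. For details, see a general reference on proxies.

The general way to set up a proxy with urllib2 is as follows: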
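As a rough sketch of that pattern in Python 3, where urllib.request plays the role of urllib2: a ProxyHandler is created for the URL's scheme and handed to an opener. The helper name `build_proxy_opener` and the proxy address 127.0.0.1:8080 are placeholders, not values from the notes, and no request is actually sent here.

```python
import urllib.request
from urllib.parse import urlparse


def build_proxy_opener(url, proxy):
    """Return an opener that routes requests for url's scheme through proxy."""
    scheme = urlparse(url).scheme  # "http" or "https"
    handler = urllib.request.ProxyHandler({scheme: proxy})
    return urllib.request.build_opener(handler)


# Placeholder proxy address; replace it with a proxy you are allowed to use
opener = build_proxy_opener("http://example.webscraping.com", "127.0.0.1:8080")
proxy_handlers = [h for h in opener.handlers
                  if isinstance(h, urllib.request.ProxyHandler)]
print(proxy_handlers[0].proxies)
# A real request would then be sent with opener.open(request)
```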

We can fold proxy support into the download function, which gives the following:

    import urllib2
    import urlparse


    def download(url, user_agent="wuyanjing", proxy=None, num_retrie=2):
        '''
        use a proxy to visit the server
        :param url: the url which you want to download
        :param user_agent: user-agent name
        :param proxy: string, ip:port
        :param num_retrie: how many times to retry after a 5xx error
        :return: the html of the url
        '''
        print "download: ", url
        headers = {"User-agent": user_agent}
        request = urllib2.Request(url, headers=headers)
        opener = urllib2.build_opener()
        if proxy:
            proxy_params = {urlparse.urlparse(url).scheme: proxy}
            opener.add_handler(urllib2.ProxyHandler(proxy_params))
        try:
            html = opener.open(request).read()
        except urllib2.URLError as e:
            print "download error: ", e.reason
            html = None
            if num_retrie > 0:
                if hasattr(e, "code") and 500 <= e.code < 600:
                    # decrement the retry counter exactly once per attempt
                    return download(url, user_agent, proxy, num_retrie - 1)
        return html

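3. Throttling downloads

First we need to understand what the urlparse module is for. urlparse.urlparse(url).netloc returns the network location of the URL (the host name, plus the port if one is given), which identifies the server.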
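A quick look at the pieces urlparse returns, written for Python 3 where the function lives in urllib.parse; the page path /view/1 is just an illustrative value.

```python
from urllib.parse import urlparse

parts = urlparse("http://example.webscraping.com/view/1")
print(parts.scheme)  # http
print(parts.netloc)  # example.webscraping.com
print(parts.path)    # /view/1
```

Two pages on the same site share the same netloc, which is why it works as the key for per-server bookkeeping.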

We first create a class that records the delay and the time at which each server was last requested:

    import urlparse
    import datetime
    import time


    class Throttle:
        '''
        the break between two downloads from the same domain should be more than delay
        :param self.delay: the delay time
        :param self.domains: the most recent time we requested each server
        '''
        def __init__(self, delay):
            self.delay = delay
            self.domains = {}

        def wait(self, url):
            '''
            make sure that the break between two downloads from the same
            domain is at least the delay time
            :param url: the url about to be downloaded
            :return: None
            '''
            domain = urlparse.urlparse(url).netloc
            last_accessed = self.domains.get(domain)
            if self.delay > 0 and last_accessed is not None:
                # total_seconds() keeps the fractional part and spans whole days,
                # whereas .seconds only holds the integer seconds component
                elapsed = (datetime.datetime.now() - last_accessed).total_seconds()
                sleep_seconds = self.delay - elapsed
                if sleep_seconds > 0:
                    time.sleep(sleep_seconds)
            self.domains[domain] = datetime.datetime.now()

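When modifying crawl_link, take particular care to create the Throttle instance only once, that is, outside the loop. Otherwise its domains dictionary is always empty and no delay is ever applied.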

    def crawl_link(url, link_regx, url_robots, user_agent="wuyanjing"):
        '''
        find all unique links in the url and its child pages
        :param url: the url which you want to search
        :param link_regx: re match pattern
        :param url_robots: the url of robots.txt
        :param user_agent: user-agent
        :return: None
        '''
        links = [url]
        linksset = set(links)
        # create the throttle once, outside the loop, so it remembers each domain
        # (Throttle.Throttle assumes the class is saved in a module named Throttle)
        throttle = Throttle.Throttle(5)
        # read robots.txt once, not on every iteration
        rp = robot_parse(url_robots)
        while links:
            currentpage = links.pop()
            if rp.can_fetch(user_agent, currentpage):
                # wait until at least 5 seconds have passed for this domain
                throttle.wait(currentpage)
                html = download(currentpage)
                if html is None:
                    continue
                for linkson in find_html(html):
                    if re.match(link_regx, linkson):
                        linkson = urlparse.urljoin(url, linkson)
                        # make sure the same link is not queued twice
                        if linkson not in linksset:
                            linksset.add(linkson)
                            links.append(linkson)
            else:
                print 'Blocked by robots.txt', currentpage
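Why the instance has to be shared can be shown without really waiting. The Python 3 sketch below is a variant of the class, not the version from the notes: it swaps datetime.now() for an injectable clock and sleep function (an assumption made here for testability), and `FakeClock` is a hypothetical stand-in whose sleep simply advances the time.

```python
import time
from urllib.parse import urlparse


class Throttle:
    """Enforce a minimum delay between requests to the same domain."""

    def __init__(self, delay, clock=time.monotonic, sleep=time.sleep):
        self.delay = delay
        self.clock = clock    # injectable so the sketch can run instantly
        self.sleep = sleep
        self.domains = {}     # domain -> time of the last request

    def wait(self, url):
        domain = urlparse(url).netloc
        last = self.domains.get(domain)
        if self.delay > 0 and last is not None:
            remaining = self.delay - (self.clock() - last)
            if remaining > 0:
                self.sleep(remaining)
        self.domains[domain] = self.clock()


class FakeClock:
    """Hypothetical stand-in for the real clock: sleeping just advances it."""

    def __init__(self):
        self.now = 0.0
        self.slept = []

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.slept.append(seconds)
        self.now += seconds


fake = FakeClock()
throttle = Throttle(5, clock=fake.time, sleep=fake.sleep)  # created once
for page in ("/view/1", "/view/2", "/view/3"):
    throttle.wait("http://example.webscraping.com" + page)
print(fake.slept)
```

The first request goes out immediately and each later request to the same domain waits the full delay. If a new Throttle were created inside the loop, every call would see an empty `domains` dictionary and never sleep.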

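4. Avoiding crawler traps

A crawler trap arises when pages are generated dynamically. Our crawler so far records every link it has not seen before and keeps going, so on such a site it can meet an endless supply of new links and never finish. The way out is to record how many links were followed to reach the current page, which we call the depth, and to stop adding links once a page has reached the maximum depth.

To solve this, we only need to change the linksset variable in crawl_link into a dictionary that records the depth of each url.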

    def crawl_link(url, link_regx, url_robots, user_agent="wuyanjing", max_depth=2):
        '''
        find all unique links in the url and its child pages
        :param url: the url which you want to search
        :param link_regx: re match pattern
        :param url_robots: the url of robots.txt
        :param user_agent: user-agent
        :param max_depth: the max depth of every url
        :return: None
        '''
        links = [url]
        # maps every url seen so far to its depth
        linkset = {url: 0}
        # (Throttle.Throttle assumes the class is saved in a module named Throttle)
        throttle = Throttle.Throttle(5)
        # read robots.txt once, not on every iteration
        rp = robot_parse(url_robots)
        while links:
            currentpage = links.pop()
            if rp.can_fetch(user_agent, currentpage):
                throttle.wait(currentpage)
                html = download(currentpage)
                if html is None:
                    continue
                # use the depth of the page being processed, not of the seed url
                depth = linkset[currentpage]
                for linkson in find_html(html):
                    if re.match(link_regx, linkson):
                        linkson = urlparse.urljoin(url, linkson)
                        if depth < max_depth:
                            # make sure the same link is not queued twice
                            if linkson not in linkset:
                                linkset[linkson] = depth + 1
                                links.append(linkson)
            else:
                print 'Blocked by robots.txt', currentpage
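The effect of the depth limit is easiest to see on a deliberately endless site. In this Python 3 sketch, `fake_download` is a made-up trap in which every page links to a page that has never been seen before; to keep it short it returns the list of hrefs directly instead of HTML, and robots.txt handling and throttling are left out.

```python
from urllib.parse import urljoin


def fake_download(url):
    """Hypothetical trap: every page /n links to a brand-new page /n+1."""
    n = int(url.rsplit("/", 1)[1])
    return ["/%d" % (n + 1)]  # the links found on the page


def crawl(seed, max_depth=2, download=fake_download):
    """Follow links from seed, but never deeper than max_depth."""
    queue = [seed]
    depths = {seed: 0}  # url -> number of links followed to reach it
    while queue:
        current = queue.pop()
        hrefs = download(current)
        depth = depths[current]
        if depth >= max_depth:
            continue  # do not queue links found beyond the depth limit
        for href in hrefs:
            link = urljoin(seed, href)
            if link not in depths:
                depths[link] = depth + 1
                queue.append(link)
    return depths


depths = crawl("http://example.webscraping.com/0", max_depth=2)
print(sorted(depths.items()))
```

Without the depth check this loop would never end, because each page yields an unseen link. With `max_depth=2` it visits pages 0, 1 and 2 and then stops.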