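1. Linux Kernel Basics

1.1 Linux Interrupt Handling

1.1.1 Definition of an Interrupt

An interrupt occurs when, during normal CPU operation, an internal or external event, or an event scheduled in advance by a program, causes the CPU to suspend the program it is running and switch to the routine that services that event. Once the service routine finishes, the CPU returns to the interrupted program and resumes it.

1.1.2 Purpose of Interrupts

Interrupts provide a mechanism that lets the CPU run code outside the normal program flow, so that a fast CPU does not have to wait on slow peripherals.

1.1.3 Classification of Interrupts

There are asynchronous interrupts and synchronous exceptions. Interrupts are either maskable or non-maskable. Exceptions are either processor-detected exceptions or programmed exceptions, and processor-detected exceptions are further divided into faults, traps, and aborts.

1.1.4 Interrupt Vectors

An interrupt vector is a number between 0 and 255. When an interrupt occurs, the CPU senses the electrical signal for the corresponding vector and uses the idtr register to find the base address of the Interrupt Descriptor Table (IDT). With the vector number and the IDT base address, the CPU locates the IDT entry, follows the segment selector in that entry to the matching segment descriptor, and from there obtains the start address of the interrupt handler, performing privilege checks along the way.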

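1.1.5 Interrupt Handling Procedure

- Determine the vector i (0 to 255) associated with the interrupt or exception.

- Read entry i of the IDT that the idtr register points to.

- Get the GDT base address from the gdtr register and look up in the GDT the segment descriptor identified by the segment selector in the IDT entry.

- Make sure the interrupt was issued by an authorized source.

- Check whether the privilege level changes, for example when trapping from user mode into kernel mode.

- If a fault occurred, adjust the cs and eip registers so that the faulting instruction is executed again after the handler finishes.

- Save the contents of the eflags, cs, and eip registers on the kernel stack.

- If the exception produces a hardware error code, save it on the kernel stack as well.

- Load the cs and eip registers, so that the CPU jumps to the interrupt handler.

- The interrupt handler runs.

- When the handler finishes, restore the context from the cs, eip, and eflags values saved on the kernel stack.

- If the privilege level changed, switch from the kernel stack back to the user stack, which means loading the ss and esp registers.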
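The lookup and authorization steps above can be sketched as a small user-space model. This is only an illustration under simplifying assumptions: `struct toy_gate`, `toy_idt`, `toy_set_gate`, and `toy_lookup` are hypothetical names, the gate holds a plain function pointer instead of a segment selector and offset, and the only privilege rule modeled is that a software-raised interrupt is refused when the caller's privilege level is numerically greater than the gate's DPL.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_IDT_ENTRIES 256

typedef void (*toy_handler_fn)(void);

/* Hypothetical, simplified gate: a real x86 gate stores a segment
 * selector and an offset rather than a C function pointer. */
struct toy_gate {
    toy_handler_fn handler; /* stands in for selector:offset */
    uint8_t dpl;            /* descriptor privilege level, 0..3 */
    uint8_t present;        /* 1 if the entry is valid */
};

static struct toy_gate toy_idt[TOY_IDT_ENTRIES];

/* Sample handler used only to have something to install. */
static int toy_hits;
static void toy_handler(void) { toy_hits++; }

/* Install a handler for a vector (ignored if the vector is out of range). */
static void toy_set_gate(unsigned vec, toy_handler_fn fn, uint8_t dpl)
{
    if (vec >= TOY_IDT_ENTRIES)
        return;
    toy_idt[vec].handler = fn;
    toy_idt[vec].dpl = dpl;
    toy_idt[vec].present = 1;
}

/* Return the handler for vec, or NULL if the lookup is refused.
 * cpl:      current privilege level of the interrupted code (0..3)
 * software: nonzero if raised by an instruction rather than by hardware */
static toy_handler_fn toy_lookup(unsigned vec, uint8_t cpl, int software)
{
    const struct toy_gate *g;

    if (vec >= TOY_IDT_ENTRIES)
        return NULL;
    g = &toy_idt[vec];
    if (!g->present)
        return NULL;
    /* Authorization: software interrupts need CPL <= gate DPL. */
    if (software && cpl > g->dpl)
        return NULL;
    return g->handler;
}
```

With a gate at vector 0x80 installed at DPL 3 and a gate at vector 14 installed at DPL 0, user-mode code (CPL 3) can reach the first through a software interrupt but not the second, while a hardware-raised event on vector 14 is still delivered.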

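1.2 softirq, tasklet, and work queue

1.2.1 Deferrable Interrupt Work

Linux divides the work done while servicing an interrupt into three classes: critical, non-critical, and non-critical deferrable. The interrupt handler itself does only the part that cannot be deferred, so that it can respond quickly. The deferrable work is pushed to the kernel's bottom half, where a function is called at a more suitable time to complete it. The Linux kernel implements this with softirqs, tasklets, and work queues.

1.2.2 The Four Operations on Softirqs and Tasklets

Softirqs and tasklets support four operations: initialization, activation, masking, and execution. Softirqs are defined in the kernel's softirq_vec array, initialized with open_softirq, and activated with raise_softirq. The kernel periodically reaches checkpoints at which it tests whether any softirq is pending, and if so it calls do_softirq to handle it.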
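The initialize, activate, and execute cycle can be modeled in user space with a handler table and a pending bitmask. The sketch below is not kernel code: every `toy_` name is hypothetical, the two softirq numbers are chosen only for illustration, and masking, per-CPU state, and re-entrancy are left out.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_NR_SOFTIRQS    10
#define TOY_HI_SOFTIRQ     0   /* illustrative softirq numbers */
#define TOY_NET_TX_SOFTIRQ 2

typedef void (*toy_action_fn)(void);

static toy_action_fn toy_softirq_vec[TOY_NR_SOFTIRQS]; /* handler table */
static uint32_t toy_pending;                           /* one bit per softirq */

/* Execution log so the order of handlers can be inspected. */
static int toy_order[8];
static int toy_order_len;

static void toy_record(int nr)
{
    if (toy_order_len < 8)
        toy_order[toy_order_len++] = nr;
}
static void toy_hi_action(void)     { toy_record(TOY_HI_SOFTIRQ); }
static void toy_net_tx_action(void) { toy_record(TOY_NET_TX_SOFTIRQ); }

/* Initialization: register the handler for one softirq number. */
static void toy_open_softirq(unsigned nr, toy_action_fn action)
{
    if (nr < TOY_NR_SOFTIRQS)
        toy_softirq_vec[nr] = action;
}

/* Activation: mark the softirq as pending; nothing runs yet. */
static void toy_raise_softirq(unsigned nr)
{
    if (nr < TOY_NR_SOFTIRQS)
        toy_pending |= (uint32_t)1 << nr;
}

/* Execution: at a checkpoint, run every pending handler, lowest number
 * first, and return how many handlers ran. */
static int toy_do_softirq(void)
{
    uint32_t pending = toy_pending;
    int handled = 0;
    unsigned nr;

    toy_pending = 0; /* clear first so a handler could raise again */
    for (nr = 0; pending != 0 && nr < TOY_NR_SOFTIRQS; nr++, pending >>= 1) {
        if ((pending & 1u) && toy_softirq_vec[nr]) {
            toy_softirq_vec[nr]();
            handled++;
        }
    }
    return handled;
}
```

Raising softirq 2 and then softirq 0 before a checkpoint still runs handler 0 first, because the pending mask is scanned from the lowest bit; a second checkpoint with nothing raised runs nothing.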

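1.2.3 tasklet

Tasklets are the preferred way for I/O drivers to implement deferrable functions. They are built on top of the HI_SOFTIRQ and TASKLET_SOFTIRQ softirqs. Normal and high-priority tasklets are stored in the tasklet_vec and tasklet_hi_vec arrays and are processed by tasklet_action and tasklet_hi_action respectively.

1.2.4 work queue

A work queue defers work by handing it to a kernel thread. Because the work runs in process context, it may be rescheduled and may even sleep. The corresponding kernel data structures are workqueue_struct and cpu_workqueue_struct.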

2. TCP/IP协议栈

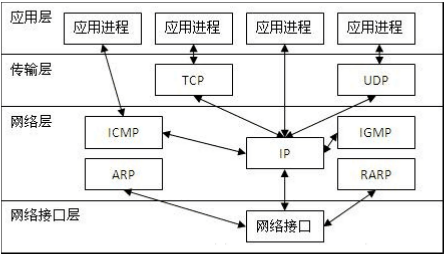

2.1 TCP/IP协议概述

TCP/IP协议分为4个层次,自上而下依次为应用层、传输层、网络层、网络接口层。分层如下图所示:

各层的功能如下:

1、应用层的功能为对客户发出的一个请求,服务器作出响应并提供相应的服务。

2、传输层的功能为通信双方的主机提供端到端的服务,传输层对信息流具有调节作用,提供可靠性传输,确保数据到达无误。

3、网络层功能为进行网络互连,根据网间报文IP地址,从一个网络通过路由器传到另一网络。

4、网络接口层负责接收IP数据报,并负责把这些数据报发送到指定网络上。

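2.2 Main Features of TCP/IP

(1) TCP/IP does not depend on any particular computer hardware or operating system and provides open protocol standards. Even apart from the Internet, TCP/IP is widely supported, which makes it a practical system for tying together all kinds of hardware and software.

(2) Standardized high-level protocols provide a variety of reliable user services.

(3) A unified network addressing scheme gives every TCP/IP device a unique address on the network.

(4) TCP/IP does not depend on any particular network transmission hardware, so it can integrate many kinds of networks. Users can run it over Ethernet, Token Ring networks, dial-up lines, X.25 networks, and virtually any other network transmission hardware.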

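3. Overview of Socket Programming

3.1 Introduction to Sockets

Almost all network applications communicate with the kernel-space network stack through the Linux Socket programming interface. Linux Socket evolved from BSD Socket. It is one of the key components of the Linux operating system and the foundation of network applications. In terms of layering, it sits at the application layer: it is the API the operating system offers to application programmers, and through it applications access the transport-layer protocols.

Sockets have the following characteristics:

- A network programming interface that is independent of any specific protocol.

- In the OSI model, it sits mainly between the session layer and the transport layer.

- BSD Socket (Berkeley sockets) is a way of communicating with other programs through standard UNIX file descriptors, and it has been ported to a wide range of platforms.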

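3.2 Socket API Functions

• socket: create a socket

• connect: establish a connection

• bind: bind a local port

• listen: listen on a port

• accept: accept a connection

• recv, recvfrom: receive data

• send, sendto: send data

• close, shutdown: close the socket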
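To show how these calls fit together, here is a minimal single-process sketch that plays both roles over the loopback interface. The function name `loopback_roundtrip` is hypothetical, error handling is reduced to returning -1, and the code assumes a small message that arrives in a single recv. It also uses getsockname, which is not in the list above, only to learn the port the kernel picked.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Send msg from a client socket to a server socket in the same process.
 * Returns the number of bytes received into out, or -1 on error. */
static ssize_t loopback_roundtrip(const char *msg, char *out, size_t outlen)
{
    struct sockaddr_in addr;
    socklen_t alen = sizeof(addr);
    int lfd = -1, cfd = -1, sfd = -1;
    ssize_t n = -1;

    /* Server side: socket, bind, listen. */
    lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0)
        return -1;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0; /* let the kernel choose a free port */
    if (bind(lfd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(lfd, 1) < 0 ||
        getsockname(lfd, (struct sockaddr *)&addr, &alen) < 0)
        goto out;

    /* Client side: socket, connect (returns once the handshake is done). */
    cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0 ||
        connect(cfd, (struct sockaddr *)&addr, sizeof(addr)) < 0)
        goto out;

    /* Server side: accept the queued connection. */
    sfd = accept(lfd, NULL, NULL);
    if (sfd < 0)
        goto out;

    /* Client sends, then shuts down its write side; server receives. */
    if (send(cfd, msg, strlen(msg), 0) != (ssize_t)strlen(msg))
        goto out;
    shutdown(cfd, SHUT_WR);
    n = recv(sfd, out, outlen, 0);

out:
    if (sfd >= 0)
        close(sfd);
    if (cfd >= 0)
        close(cfd);
    close(lfd);
    return n;
}
```

Calling `loopback_roundtrip("hello", buf, sizeof(buf))` with a 16-byte buffer is expected to return 5 and leave the five bytes of the message in `buf`. A real client and server would of course live in separate processes and loop on recv.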

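3.3 Socket Data Structures

When an application uses the socket system calls, it refers to a socket by an integer, and every operation is performed on that integer, which is called the socket descriptor. Inside the system call implementation, the integer is mapped to a structure that represents the socket and holds all of its attributes and data.

```c
struct socket {
    socket_state            state;        /* state of the socket */
    unsigned long           flags;        /* set of socket flags */
    const struct proto_ops  *ops;         /* maps socket-layer calls to the transport protocol */
    struct fasync_struct    *fasync_list; /* asynchronous notification queue */
    struct file             *file;        /* file pointer associated with the socket */
    struct sock             *sk;          /* transport control block associated with the socket */
    wait_queue_head_t       wait;         /* list of processes waiting on the socket */
    short                   type;         /* socket type */
};
```

state records the state the socket is in. It is an enumeration, defined as follows:

```c
typedef enum {
    SS_FREE = 0,        /* the socket is not allocated yet */
    SS_UNCONNECTED,     /* not connected to any socket */
    SS_CONNECTING,      /* in the process of connecting */
    SS_CONNECTED,       /* connected to a socket */
    SS_DISCONNECTING    /* in the process of disconnecting */
} socket_state;
```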

3.4 The socketcall System Call

socketcall() is the common entry point for all socket system calls on Linux (on architectures that multiplex them this way, such as 32-bit x86). The first argument of sys_socketcall() is the operation code. Each number stands for one operation, 17 in total, and the function uses the code to jump to the real system call function. The operation codes are:

```c
#define SYS_SOCKET      1   /* sys_socket(2) */
#define SYS_BIND        2   /* sys_bind(2) */
#define SYS_CONNECT     3   /* sys_connect(2) */
#define SYS_LISTEN      4   /* sys_listen(2) */
#define SYS_ACCEPT      5   /* sys_accept(2) */
#define SYS_GETSOCKNAME 6   /* sys_getsockname(2) */
#define SYS_GETPEERNAME 7   /* sys_getpeername(2) */
#define SYS_SOCKETPAIR  8   /* sys_socketpair(2) */
#define SYS_SEND        9   /* sys_send(2) */
#define SYS_RECV        10  /* sys_recv(2) */
#define SYS_SENDTO      11  /* sys_sendto(2) */
#define SYS_RECVFROM    12  /* sys_recvfrom(2) */
#define SYS_SHUTDOWN    13  /* sys_shutdown(2) */
#define SYS_SETSOCKOPT  14  /* sys_setsockopt(2) */
#define SYS_GETSOCKOPT  15  /* sys_getsockopt(2) */
#define SYS_SENDMSG     16  /* sys_sendmsg(2) */
#define SYS_RECVMSG     17  /* sys_recvmsg(2) */
```

The source code of sys_socketcall() is in net/socket.c:

```c
asmlinkage long sys_socketcall(int call, unsigned long __user *args)
{
    unsigned long a[6];
    unsigned long a0, a1;
    int err;
    /* .......................................... */

    a0 = a[0];
    a1 = a[1];

    switch (call) {
    case SYS_SOCKET:
        err = sys_socket(a0, a1, a[2]);
        break;
    case SYS_BIND:
        err = sys_bind(a0, (struct sockaddr __user *)a1, a[2]);
        break;
    case SYS_CONNECT:
        err = sys_connect(a0, (struct sockaddr __user *)a1, a[2]);
        break;
    case SYS_LISTEN:
        err = sys_listen(a0, a1);
        break;
    case SYS_ACCEPT:
        err =
            do_accept(a0, (struct sockaddr __user *)a1,
                      (int __user *)a[2], 0);
        break;
    case SYS_GETSOCKNAME:
        err =
            sys_getsockname(a0, (struct sockaddr __user *)a1,
                            (int __user *)a[2]);
        break;
    /* ..................................... */
    return err;
}
```

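As the code shows, sys_socketcall() simply uses the call argument to invoke the corresponding socket function. The familiar write, read, aio, and poll system calls do not appear here, because there is a VFS layer above the socket layer: the kernel treats sockets as a file system and implements the corresponding VFS methods.

The figure below shows the order in which these system calls are used. The I/O system calls in the large blue box can be called at any time. Note that the figure is not a complete state diagram; it shows only some common system calls: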

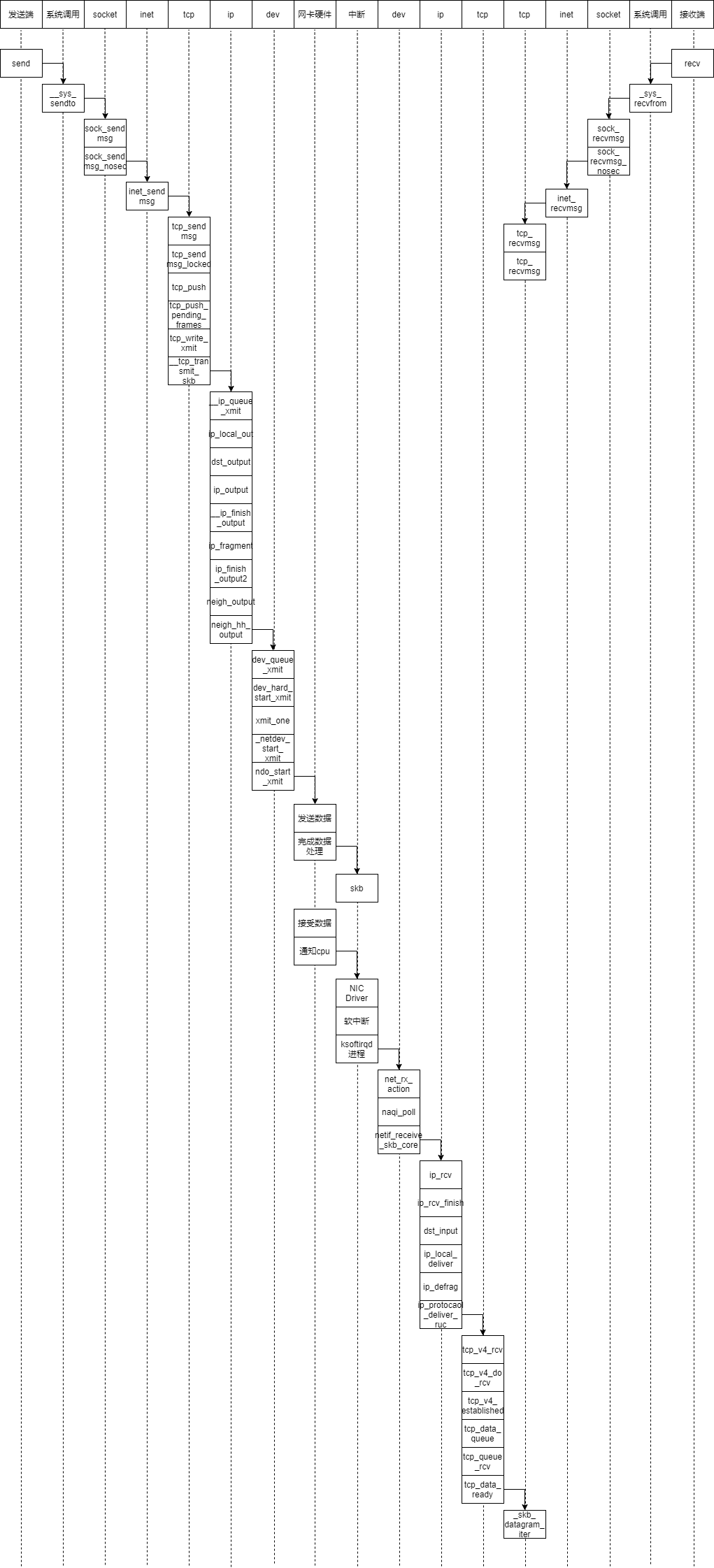

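4. Analysis of the send Path

4.1 Application Layer

Flow:

1. The network application calls the Socket API socket(int family, int type, int protocol) to create a socket. This ends up in the Linux system call socket(), which eventually calls the kernel's sock_create(). The call returns the file descriptor of the newly created socket. For every socket created by a user-space network application, the kernel holds a corresponding struct socket and struct sock. struct sock has three queues, rx, tx, and err, which are initialized together with the sock structure. During transmission and reception, each queue holds the skb, an instance of the network stack's sk_buff data structure, for every packet to be sent or received.

2. For a TCP socket, the application calls the connect() API so that client and server establish a virtual connection through the socket. During this step the TCP stack establishes the connection with a three-way handshake. By default the API returns only after the handshake has completed and the connection is established. An important part of connection setup is determining the Maximum Segment Size (MSS) used by both sides. UDP is a connectionless protocol, so it does not need this step.

3. The application calls the Linux Socket send or write API to send a message to the receiver.

4. sock_sendmsg is called. It uses the socket descriptor to obtain the sock struct and creates the message header and the socket control message.

5. __sock_sendmsg is called. Depending on the socket's protocol type, it calls that protocol's send function.

6. For TCP, tcp_sendmsg is called.

7. For UDP, the user-space application can use any of the three system calls send(), sendto(), or sendmsg() to send a UDP message. They all end up in the kernel function udp_sendmsg().

Source analysis:

net/socket.c defines sys_sendto. When send() is called, the kernel handles it as sendto() with no destination address and then carries out the system call. This is easy to understand: send() is just a special case of sendto(), and the kernel's service routine for sendto() is sys_sendto.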

```c
/*
 *  Send a datagram to a given address. We move the address into kernel
 *  space and check the user space data area is readable before invoking
 *  the protocol.
 */
int __sys_sendto(int fd, void __user *buff, size_t len, unsigned int flags,
                 struct sockaddr __user *addr, int addr_len)
{
    struct socket *sock;
    struct sockaddr_storage address;
    int err;
    struct msghdr msg;
    struct iovec iov;
    int fput_needed;

    err = import_single_range(WRITE, buff, len, &iov, &msg.msg_iter);
    if (unlikely(err))
        return err;
    sock = sockfd_lookup_light(fd, &err, &fput_needed);
    if (!sock)
        goto out;

    msg.msg_name = NULL;
    msg.msg_control = NULL;
    msg.msg_controllen = 0;
    msg.msg_namelen = 0;
    if (addr) {
        err = move_addr_to_kernel(addr, addr_len, &address);
        if (err < 0)
            goto out_put;
        msg.msg_name = (struct sockaddr *)&address;
        msg.msg_namelen = addr_len;
    }
    if (sock->file->f_flags & O_NONBLOCK)
        flags |= MSG_DONTWAIT;
    msg.msg_flags = flags;
    err = sock_sendmsg(sock, &msg);

out_put:
    fput_light(sock->file, fput_needed);
out:
    return err;
}
```

socket.h defines struct msghdr, which describes the properties of the data to be sent. The definition below is the user-space one. The kernel's own struct msghdr carries the data in an iterator field, msg_iter, instead of msg_iov and msg_iovlen, which is why __sys_sendto fills in msg.msg_iter.

```c
/* Structure describing messages sent by
   `sendmsg' and received by `recvmsg'.  */
struct msghdr
{
    void *msg_name;         /* Address to send to/receive from.  */
    socklen_t msg_namelen;  /* Length of address data.  */

    struct iovec *msg_iov;  /* Vector of data to send/receive into.  */
    size_t msg_iovlen;      /* Number of elements in the vector.  */

    void *msg_control;      /* Ancillary data (eg BSD filedesc passing). */
    size_t msg_controllen;  /* Ancillary data buffer length.
                               !! The type should be socklen_t but the
                               definition of the kernel is incompatible
                               with this.  */

    int msg_flags;          /* Flags on received message.  */
};
```

__sys_sendto does three things:

1. It looks up the struct socket that corresponds to fd.

2. It builds the struct msghdr that describes the data to be sent.

3. It calls sock_sendmsg to perform the actual send.
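From user space, the same message description can be built by hand and passed to sendmsg(). The sketch below is an illustration, not the path the kernel code above takes for send(): the helper name `gather_send` is hypothetical, and it uses an AF_UNIX datagram socketpair instead of a TCP connection so that it runs in one process and keeps message boundaries.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

/* Send two separate buffers as one message and read it back on the peer.
 * Returns the number of bytes received into out, or -1 on error. */
static ssize_t gather_send(const char *a, const char *b,
                           char *out, size_t outlen)
{
    int sv[2];
    struct iovec iov[2];
    struct msghdr msg;
    ssize_t n;

    if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sv) < 0)
        return -1;

    /* Each iovec element points at one piece of the message. */
    iov[0].iov_base = (void *)a;
    iov[0].iov_len = strlen(a);
    iov[1].iov_base = (void *)b;
    iov[1].iov_len = strlen(b);

    /* msg_name stays NULL because the socket pair is already connected;
     * msg_control stays NULL because no ancillary data is sent. */
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = iov;
    msg.msg_iovlen = 2;

    n = sendmsg(sv[0], &msg, 0);
    if (n >= 0)
        n = recv(sv[1], out, outlen, 0);

    close(sv[0]);
    close(sv[1]);
    return n;
}
```

Sending "hello " and "world" this way delivers the single 11-byte message "hello world" to the peer. A plain send() corresponds to the same structure with one iovec element and no address.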

Once __sys_sendto has built these, it calls sock_sendmsg to continue the send path, passing in the struct msghdr; the length of the data is obtained from the message with msg_data_left(). Skipping some unimportant details, sock_sendmsg calls sock_sendmsg_nosec(), which finally calls sock->ops->sendmsg, the sendmsg() function for the socket's type. For INET sockets this function is inet_sendmsg. It first checks whether a local port is bound, binds one automatically if not, and then calls the protocol-specific sendmsg function.

```c
/**
 *  sock_sendmsg - send a message through @sock
 *  @sock: socket
 *  @msg: message to send
 *
 *  Sends @msg through @sock, passing through LSM.
 *  Returns the number of bytes sent, or an error code.
 */
int sock_sendmsg(struct socket *sock, struct msghdr *msg)
{
    int err = security_socket_sendmsg(sock, msg,
                                      msg_data_left(msg));

    return err ?: sock_sendmsg_nosec(sock, msg);
}
EXPORT_SYMBOL(sock_sendmsg);
```

```c
static inline int sock_sendmsg_nosec(struct socket *sock, struct msghdr *msg)
{
    int ret = INDIRECT_CALL_INET(sock->ops->sendmsg, inet6_sendmsg,
                                 inet_sendmsg, sock, msg,
                                 msg_data_left(msg));
    BUG_ON(ret == -EIOCBQUEUED);
    return ret;
}
```

Tracing this function further shows that the call ends up in inet_sendmsg:

```c
int inet_sendmsg(struct socket *sock, struct msghdr *msg, size_t size)
{
    struct sock *sk = sock->sk;

    if (unlikely(inet_send_prepare(sk)))
        return -EAGAIN;

    return INDIRECT_CALL_2(sk->sk_prot->sendmsg, tcp_sendmsg, udp_sendmsg,
                           sk, msg, size);
}
EXPORT_SYMBOL(inet_sendmsg);
```

Here tcp_sendmsg is called indirectly, which hands the data over to the transport layer.

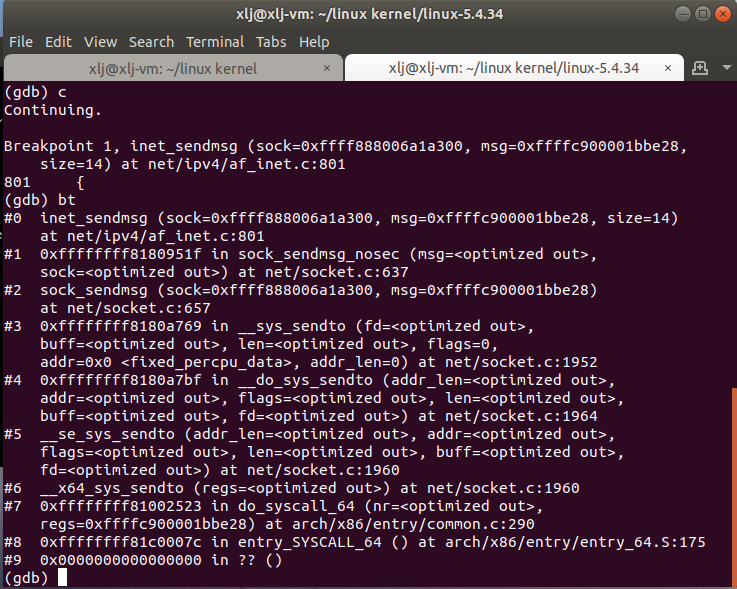

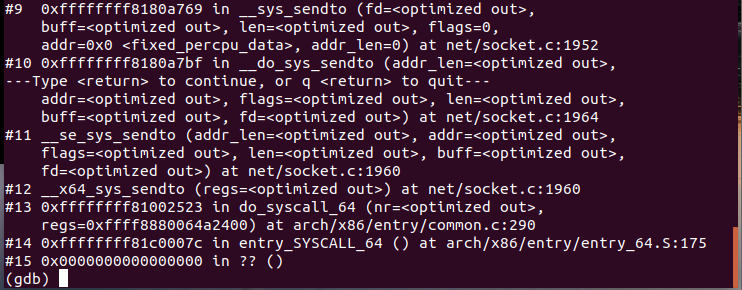

gdb验证:

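4.2 Transport Layer

Flow:

1. tcp_sendmsg first checks the state of the established TCP connection, then obtains the connection's MSS and starts the segment transmission process.

2. Build the payload of the TCP segment: the function creates an skb in kernel space, an instance of the sk_buff data structure for the packet, and copies the packet data from the user-space buffer into the skb's buffer.

3. Build the TCP header.

4. Compute the TCP checksum and the sequence number. The TCP checksum is an end-to-end checksum, computed by the sender and verified by the receiver. Its purpose is to detect any change to the TCP header or data between sender and receiver. If the receiver detects a checksum error, the TCP segment is dropped. The checksum covers the TCP header and the TCP data, and it is mandatory.

5. Hand the segment to the IP layer: call the IP handler ip_queue_xmit, which passes the skb into IP processing.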
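The arithmetic behind step 4 is the 16-bit one's-complement Internet checksum. The sketch below shows only that arithmetic over a byte buffer. The function name `csum16` is hypothetical, and this is not how the kernel computes it: a real TCP checksum also includes a pseudo-header with the IP addresses, protocol, and TCP length, and the kernel often leaves the final computation to the network card.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One's-complement sum of 16-bit big-endian words, then inverted.
 * Intended for buffers up to 64 KB, so the 32-bit accumulator
 * cannot overflow before the carries are folded back in. */
static uint16_t csum16(const uint8_t *data, size_t len)
{
    uint32_t sum = 0;

    while (len > 1) {
        sum += ((uint32_t)data[0] << 8) | data[1];
        data += 2;
        len -= 2;
    }
    if (len == 1)                    /* odd trailing byte, padded with zero */
        sum += (uint32_t)data[0] << 8;

    while (sum >> 16)                /* fold carries into the low 16 bits */
        sum = (sum & 0xFFFF) + (sum >> 16);

    return (uint16_t)~sum;
}
```

For the bytes 00 01 f2 03 f4 f5 f6 f7 the word sum is 0x2ddf0, which folds to 0xddf2, so the checksum is 0x220d. A receiver that runs the same function over the data with the checksum appended gets 0, which is how verification works.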

Source analysis:

The entry function is tcp_sendmsg:

```c
int tcp_sendmsg(struct sock *sk, struct msghdr *msg, size_t size)
{
    int ret;

    lock_sock(sk);
    ret = tcp_sendmsg_locked(sk, msg, size);
    release_sock(sk);

    return ret;
}
EXPORT_SYMBOL(tcp_sendmsg);
```

tcp_sendmsg takes the socket lock and does the real work in tcp_sendmsg_locked:

```c
int tcp_sendmsg_locked(struct sock *sk, struct msghdr *msg, size_t size)
{
    struct tcp_sock *tp = tcp_sk(sk);
    struct ubuf_info *uarg = NULL;
    struct sk_buff *skb;
    struct sockcm_cookie sockc;
    int flags, err, copied = 0;
    int mss_now = 0, size_goal, copied_syn = 0;
    int process_backlog = 0;
    bool zc = false;
    long timeo;

    flags = msg->msg_flags;

    if (flags & MSG_ZEROCOPY && size && sock_flag(sk, SOCK_ZEROCOPY)) {
        skb = tcp_write_queue_tail(sk);
        uarg = sock_zerocopy_realloc(sk, size, skb_zcopy(skb));
        if (!uarg) {
            err = -ENOBUFS;
            goto out_err;
        }

        zc = sk->sk_route_caps & NETIF_F_SG;
        if (!zc)
            uarg->zerocopy = 0;
    }

    if (unlikely(flags & MSG_FASTOPEN || inet_sk(sk)->defer_connect) &&
        !tp->repair) {
        err = tcp_sendmsg_fastopen(sk, msg, &copied_syn, size, uarg);
        if (err == -EINPROGRESS && copied_syn > 0)
            goto out;
        else if (err)
            goto out_err;
    }

    timeo = sock_sndtimeo(sk, flags & MSG_DONTWAIT);

    tcp_rate_check_app_limited(sk);  /* is sending application-limited? */

    /* Wait for a connection to finish. One exception is TCP Fast Open
     * (passive side) where data is allowed to be sent before a connection
     * is fully established.
     */
    if (((1 << sk->sk_state) & ~(TCPF_ESTABLISHED | TCPF_CLOSE_WAIT)) &&
        !tcp_passive_fastopen(sk)) {
        err = sk_stream_wait_connect(sk, &timeo);
        if (err != 0)
            goto do_error;
    }

    if (unlikely(tp->repair)) {
        if (tp->repair_queue == TCP_RECV_QUEUE) {
            copied = tcp_send_rcvq(sk, msg, size);
            goto out_nopush;
        }

        err = -EINVAL;
        if (tp->repair_queue == TCP_NO_QUEUE)
            goto out_err;

        /* 'common' sending to sendq */
    }

    sockcm_init(&sockc, sk);
    if (msg->msg_controllen) {
        err = sock_cmsg_send(sk, msg, &sockc);
        if (unlikely(err)) {
            err = -EINVAL;
            goto out_err;
        }
    }

    /* This should be in poll */
    sk_clear_bit(SOCKWQ_ASYNC_NOSPACE, sk);

    /* Ok commence sending. */
    copied = 0;

restart:
    mss_now = tcp_send_mss(sk, &size_goal, flags);

    err = -EPIPE;
    if (sk->sk_err || (sk->sk_shutdown & SEND_SHUTDOWN))
        goto do_error;

    while (msg_data_left(msg)) {
        int copy = 0;

        skb = tcp_write_queue_tail(sk);
        if (skb)
            copy = size_goal - skb->len;

        if (copy <= 0 || !tcp_skb_can_collapse_to(skb)) {
            bool first_skb;

new_segment:
            if (!sk_stream_memory_free(sk))
                goto wait_for_sndbuf;

            if (unlikely(process_backlog >= 16)) {
                process_backlog = 0;
                if (sk_flush_backlog(sk))
                    goto restart;
            }
            first_skb = tcp_rtx_and_write_queues_empty(sk);
            skb = sk_stream_alloc_skb(sk, 0, sk->sk_allocation,
                                      first_skb);
            if (!skb)
                goto wait_for_memory;

            process_backlog++;
            skb->ip_summed = CHECKSUM_PARTIAL;

            skb_entail(sk, skb);
            copy = size_goal;

            /* All packets are restored as if they have
             * already been sent. skb_mstamp_ns isn't set to
             * avoid wrong rtt estimation.
             */
            if (tp->repair)
                TCP_SKB_CB(skb)->sacked |= TCPCB_REPAIRED;
        }

        /* Try to append data to the end of skb. */
        if (copy > msg_data_left(msg))
            copy = msg_data_left(msg);

        /* Where to copy to? */
        if (skb_availroom(skb) > 0 && !zc) {
            /* We have some space in skb head. Superb! */
            copy = min_t(int, copy, skb_availroom(skb));
            err = skb_add_data_nocache(sk, skb, &msg->msg_iter, copy);
            if (err)
                goto do_fault;
        } else if (!zc) {
            bool merge = true;
            int i = skb_shinfo(skb)->nr_frags;
            struct page_frag *pfrag = sk_page_frag(sk);

            if (!sk_page_frag_refill(sk, pfrag))
                goto wait_for_memory;

            if (!skb_can_coalesce(skb, i, pfrag->page,
                                  pfrag->offset)) {
                if (i >= sysctl_max_skb_frags) {
                    tcp_mark_push(tp, skb);
                    goto new_segment;
                }
                merge = false;
            }

            copy = min_t(int, copy, pfrag->size - pfrag->offset);

            if (!sk_wmem_schedule(sk, copy))
                goto wait_for_memory;

            err = skb_copy_to_page_nocache(sk, &msg->msg_iter, skb,
                                           pfrag->page,
                                           pfrag->offset,
                                           copy);
            if (err)
                goto do_error;

            /* Update the skb. */
            if (merge) {
                skb_frag_size_add(&skb_shinfo(skb)->frags[i - 1], copy);
            } else {
                skb_fill_page_desc(skb, i, pfrag->page,
                                   pfrag->offset, copy);
                page_ref_inc(pfrag->page);
            }
            pfrag->offset += copy;
        } else {
            err = skb_zerocopy_iter_stream(sk, skb, msg, copy, uarg);
            if (err == -EMSGSIZE || err == -EEXIST) {
                tcp_mark_push(tp, skb);
                goto new_segment;
            }
            if (err < 0)
                goto do_error;
            copy = err;
        }

        if (!copied)
            TCP_SKB_CB(skb)->tcp_flags &= ~TCPHDR_PSH;

        WRITE_ONCE(tp->write_seq, tp->write_seq + copy);
        TCP_SKB_CB(skb)->end_seq += copy;
        tcp_skb_pcount_set(skb, 0);

        copied += copy;
        if (!msg_data_left(msg)) {
            if (unlikely(flags & MSG_EOR))
                TCP_SKB_CB(skb)->eor = 1;
            goto out;
        }

        if (skb->len < size_goal || (flags & MSG_OOB) || unlikely(tp->repair))
            continue;

        if (forced_push(tp)) {
            tcp_mark_push(tp, skb);
            __tcp_push_pending_frames(sk, mss_now, TCP_NAGLE_PUSH);
        } else if (skb == tcp_send_head(sk))
            tcp_push_one(sk, mss_now);
        continue;

wait_for_sndbuf:
        set_bit(SOCK_NOSPACE, &sk->sk_socket->flags);
wait_for_memory:
        if (copied)
            tcp_push(sk, flags & ~MSG_MORE, mss_now,
                     TCP_NAGLE_PUSH, size_goal);

        err = sk_stream_wait_memory(sk, &timeo);
        if (err != 0)
            goto do_error;

        mss_now = tcp_send_mss(sk, &size_goal, flags);
    }

out:
    if (copied) {
        tcp_tx_timestamp(sk, sockc.tsflags);
        tcp_push(sk, flags, mss_now, tp->nonagle, size_goal);
    }
out_nopush:
    sock_zerocopy_put(uarg);
    return copied + copied_syn;

do_error:
    skb = tcp_write_queue_tail(sk);
do_fault:
    tcp_remove_empty_skb(sk, skb);

    if (copied + copied_syn)
        goto out;
out_err:
    sock_zerocopy_put_abort(uarg, true);
    err = sk_stream_error(sk, flags, err);
    /* make sure we wake any epoll edge trigger waiter */
    if (unlikely(tcp_rtx_and_write_queues_empty(sk) && err == -EAGAIN)) {
        sk->sk_write_space(sk);
        tcp_chrono_stop(sk, TCP_CHRONO_SNDBUF_LIMITED);
    }
    return err;
}
EXPORT_SYMBOL_GPL(tcp_sendmsg_locked);
```

tcp_sendmsg_locked organizes all the data into the send queue. This queue is the sk_write_queue field of struct sock, and each of its elements is an skb that holds data waiting to be sent. The function then calls tcp_push().

The TCP header has several flag bits: URG, ACK, PSH, RST, SYN, and FIN. tcp_push decides whether the skb needs to be pushed and, if so, sets the PSH flag in the TCP header. The flag is set as follows:

```c
static void tcp_push(struct sock *sk, int flags, int mss_now,
                     int nonagle, int size_goal)
{
    struct tcp_sock *tp = tcp_sk(sk);
    struct sk_buff *skb;

    skb = tcp_write_queue_tail(sk);
    if (!skb)
        return;
    if (!(flags & MSG_MORE) || forced_push(tp))
        tcp_mark_push(tp, skb);

    tcp_mark_urg(tp, flags);

    if (tcp_should_autocork(sk, skb, size_goal)) {

        /* avoid atomic op if TSQ_THROTTLED bit is already set */
        if (!test_bit(TSQ_THROTTLED, &sk->sk_tsq_flags)) {
            NET_INC_STATS(sock_net(sk), LINUX_MIB_TCPAUTOCORKING);
            set_bit(TSQ_THROTTLED, &sk->sk_tsq_flags);
        }
        /* It is possible TX completion already happened
         * before we set TSQ_THROTTLED.
         */
        if (refcount_read(&sk->sk_wmem_alloc) > skb->truesize)
            return;
    }

    if (flags & MSG_MORE)
        nonagle = TCP_NAGLE_CORK;

    __tcp_push_pending_frames(sk, mss_now, nonagle);
}
```

tcp_push then calls __tcp_push_pending_frames(sk, mss_now, nonagle) to send the data:

```c
/* Push out any pending frames which were held back due to
 * TCP_CORK or attempt at coalescing tiny packets.
 * The socket must be locked by the caller.
 */
void __tcp_push_pending_frames(struct sock *sk, unsigned int cur_mss,
                               int nonagle)
{
    /* If we are closed, the bytes will have to remain here.
     * In time closedown will finish, we empty the write queue and
     * all will be happy.
     */
    if (unlikely(sk->sk_state == TCP_CLOSE))
        return;

    if (tcp_write_xmit(sk, cur_mss, nonagle, 0,
                       sk_gfp_mask(sk, GFP_ATOMIC)))
        tcp_check_probe_timer(sk);
}
```

That function in turn calls tcp_write_xmit to send the data:

```c
static bool tcp_write_xmit(struct sock *sk, unsigned int mss_now, int nonagle,
                           int push_one, gfp_t gfp)
{
    struct tcp_sock *tp = tcp_sk(sk);
    struct sk_buff *skb;
    unsigned int tso_segs, sent_pkts;
    int cwnd_quota;
    int result;
    bool is_cwnd_limited = false, is_rwnd_limited = false;
    u32 max_segs;

    sent_pkts = 0;

    tcp_mstamp_refresh(tp);
    if (!push_one) {
        /* Do MTU probing. */
        result = tcp_mtu_probe(sk);
        if (!result) {
            return false;
        } else if (result > 0) {
            sent_pkts = 1;
        }
    }

    max_segs = tcp_tso_segs(sk, mss_now);
    while ((skb = tcp_send_head(sk))) {
        unsigned int limit;

        if (unlikely(tp->repair) && tp->repair_queue == TCP_SEND_QUEUE) {
            /* "skb_mstamp_ns" is used as a start point for the retransmit timer */
            skb->skb_mstamp_ns = tp->tcp_wstamp_ns = tp->tcp_clock_cache;
            list_move_tail(&skb->tcp_tsorted_anchor, &tp->tsorted_sent_queue);
            tcp_init_tso_segs(skb, mss_now);
            goto repair; /* Skip network transmission */
        }

        if (tcp_pacing_check(sk))
            break;

        tso_segs = tcp_init_tso_segs(skb, mss_now);
        BUG_ON(!tso_segs);

        cwnd_quota = tcp_cwnd_test(tp, skb);
        if (!cwnd_quota) {
            if (push_one == 2)
                /* Force out a loss probe pkt. */
                cwnd_quota = 1;
            else
                break;
        }

        if (unlikely(!tcp_snd_wnd_test(tp, skb, mss_now))) {
            is_rwnd_limited = true;
            break;
        }

        if (tso_segs == 1) {
            if (unlikely(!tcp_nagle_test(tp, skb, mss_now,
                                         (tcp_skb_is_last(sk, skb) ?
                                          nonagle : TCP_NAGLE_PUSH))))
                break;
        } else {
            if (!push_one &&
                tcp_tso_should_defer(sk, skb, &is_cwnd_limited,
                                     &is_rwnd_limited, max_segs))
                break;
        }

        limit = mss_now;
        if (tso_segs > 1 && !tcp_urg_mode(tp))
            limit = tcp_mss_split_point(sk, skb, mss_now,
                                        min_t(unsigned int,
                                              cwnd_quota,
                                              max_segs),
                                        nonagle);

        if (skb->len > limit &&
            unlikely(tso_fragment(sk, skb, limit, mss_now, gfp)))
            break;

        if (tcp_small_queue_check(sk, skb, 0))
            break;

        /* Argh, we hit an empty skb(), presumably a thread
         * is sleeping in sendmsg()/sk_stream_wait_memory().
         * We do not want to send a pure-ack packet and have
         * a strange looking rtx queue with empty packet(s).
         */
        if (TCP_SKB_CB(skb)->end_seq == TCP_SKB_CB(skb)->seq)
            break;

        if (unlikely(tcp_transmit_skb(sk, skb, 1, gfp)))
            break;

repair:
        /* Advance the send_head.  This one is sent out.
         * This call will increment packets_out.
         */
        tcp_event_new_data_sent(sk, skb);

        tcp_minshall_update(tp, mss_now, skb);
        sent_pkts += tcp_skb_pcount(skb);

        if (push_one)
            break;
    }

    if (is_rwnd_limited)
        tcp_chrono_start(sk, TCP_CHRONO_RWND_LIMITED);
    else
        tcp_chrono_stop(sk, TCP_CHRONO_RWND_LIMITED);

    if (likely(sent_pkts)) {
        if (tcp_in_cwnd_reduction(sk))
            tp->prr_out += sent_pkts;

        /* Send one loss probe per tail loss episode. */
        if (push_one != 2)
            tcp_schedule_loss_probe(sk, false);
        is_cwnd_limited |= (tcp_packets_in_flight(tp) >= tp->snd_cwnd);
        tcp_cwnd_validate(sk, is_cwnd_limited);
        return false;
    }
    return !tp->packets_out && !tcp_write_queue_empty(sk);
}
```

tcp_write_xmit is located in net/ipv4/tcp_output.c. It applies TCP congestion control, checking the congestion window and the send window before each segment goes out, and then calls tcp_transmit_skb(sk, skb, 1, gfp) to transmit the data. That call is a thin wrapper around __tcp_transmit_skb:

```c
static int __tcp_transmit_skb(struct sock *sk, struct sk_buff *skb,
                              int clone_it, gfp_t gfp_mask, u32 rcv_nxt)
{
    const struct inet_connection_sock *icsk = inet_csk(sk);
    struct inet_sock *inet;
    struct tcp_sock *tp;
    struct tcp_skb_cb *tcb;
    struct tcp_out_options opts;
    unsigned int tcp_options_size, tcp_header_size;
    struct sk_buff *oskb = NULL;
    struct tcp_md5sig_key *md5;
    struct tcphdr *th;
    u64 prior_wstamp;
    int err;

    BUG_ON(!skb || !tcp_skb_pcount(skb));
    tp = tcp_sk(sk);
    prior_wstamp = tp->tcp_wstamp_ns;
    tp->tcp_wstamp_ns = max(tp->tcp_wstamp_ns, tp->tcp_clock_cache);
    skb->skb_mstamp_ns = tp->tcp_wstamp_ns;
    if (clone_it) {
        TCP_SKB_CB(skb)->tx.in_flight = TCP_SKB_CB(skb)->end_seq
            - tp->snd_una;
        oskb = skb;

        tcp_skb_tsorted_save(oskb) {
            if (unlikely(skb_cloned(oskb)))
                skb = pskb_copy(oskb, gfp_mask);
            else
                skb = skb_clone(oskb, gfp_mask);
        } tcp_skb_tsorted_restore(oskb);

        if (unlikely(!skb))
            return -ENOBUFS;
        /* retransmit skbs might have a non zero value in skb->dev
         * because skb->dev is aliased with skb->rbnode.rb_left
         */
        skb->dev = NULL;
    }

    inet = inet_sk(sk);
    tcb = TCP_SKB_CB(skb);
    memset(&opts, 0, sizeof(opts));

    if (unlikely(tcb->tcp_flags & TCPHDR_SYN)) {
        tcp_options_size = tcp_syn_options(sk, skb, &opts, &md5);
    } else {
        tcp_options_size = tcp_established_options(sk, skb, &opts,
                                                   &md5);
        /* Force a PSH flag on all (GSO) packets to expedite GRO flush
         * at receiver : This slightly improve GRO performance.
         * Note that we do not force the PSH flag for non GSO packets,
         * because they might be sent under high congestion events,
         * and in this case it is better to delay the delivery of 1-MSS
         * packets and thus the corresponding ACK packet that would
         * release the following packet.
         */
        if (tcp_skb_pcount(skb) > 1)
            tcb->tcp_flags |= TCPHDR_PSH;
    }
    tcp_header_size = tcp_options_size + sizeof(struct tcphdr);

    /* if no packet is in qdisc/device queue, then allow XPS to select
     * another queue. We can be called from tcp_tsq_handler()
     * which holds one reference to sk.
     *
     * TODO: Ideally, in-flight pure ACK packets should not matter here.
     * One way to get this would be to set skb->truesize = 2 on them.
     */
    skb->ooo_okay = sk_wmem_alloc_get(sk) < SKB_TRUESIZE(1);

    /* If we had to use memory reserve to allocate this skb,
     * this might cause drops if packet is looped back :
     * Other socket might not have SOCK_MEMALLOC.
     * Packets not looped back do not care about pfmemalloc.
     */
    skb->pfmemalloc = 0;

    skb_push(skb, tcp_header_size);
    skb_reset_transport_header(skb);

    skb_orphan(skb);
    skb->sk = sk;
    skb->destructor = skb_is_tcp_pure_ack(skb) ? __sock_wfree : tcp_wfree;
    skb_set_hash_from_sk(skb, sk);
    refcount_add(skb->truesize, &sk->sk_wmem_alloc);

    skb_set_dst_pending_confirm(skb, sk->sk_dst_pending_confirm);

    /* Build TCP header and checksum it. */
    th = (struct tcphdr *)skb->data;
    th->source = inet->inet_sport;
    th->dest = inet->inet_dport;
    th->seq = htonl(tcb->seq);
    th->ack_seq = htonl(rcv_nxt);
    *(((__be16 *)th) + 6) = htons(((tcp_header_size >> 2) << 12) |
                                  tcb->tcp_flags);

    th->check = 0;
    th->urg_ptr = 0;

    /* The urg_mode check is necessary during a below snd_una win probe */
    if (unlikely(tcp_urg_mode(tp) && before(tcb->seq, tp->snd_up))) {
        if (before(tp->snd_up, tcb->seq + 0x10000)) {
            th->urg_ptr = htons(tp->snd_up - tcb->seq);
            th->urg = 1;
        } else if (after(tcb->seq + 0xFFFF, tp->snd_nxt)) {
            th->urg_ptr = htons(0xFFFF);
            th->urg = 1;
        }
    }

    tcp_options_write((__be32 *)(th + 1), tp, &opts);
    skb_shinfo(skb)->gso_type = sk->sk_gso_type;
    if (likely(!(tcb->tcp_flags & TCPHDR_SYN))) {
        th->window = htons(tcp_select_window(sk));
        tcp_ecn_send(sk, skb, th, tcp_header_size);
    } else {
        /* RFC1323: The window in SYN & SYN/ACK segments
         * is never scaled.
         */
        th->window = htons(min(tp->rcv_wnd, 65535U));
    }
#ifdef CONFIG_TCP_MD5SIG
    /* Calculate the MD5 hash, as we have all we need now */
    if (md5) {
        sk_nocaps_add(sk, NETIF_F_GSO_MASK);
        tp->af_specific->calc_md5_hash(opts.hash_location,
                                       md5, sk, skb);
    }
#endif

    icsk->icsk_af_ops->send_check(sk, skb);

    if (likely(tcb->tcp_flags & TCPHDR_ACK))
        tcp_event_ack_sent(sk, tcp_skb_pcount(skb), rcv_nxt);

    if (skb->len != tcp_header_size) {
        tcp_event_data_sent(tp, sk);
        tp->data_segs_out += tcp_skb_pcount(skb);
        tp->bytes_sent += skb->len - tcp_header_size;
    }

    if (after(tcb->end_seq, tp->snd_nxt) || tcb->seq == tcb->end_seq)
        TCP_ADD_STATS(sock_net(sk), TCP_MIB_OUTSEGS,
                      tcp_skb_pcount(skb));

    tp->segs_out += tcp_skb_pcount(skb);
    /* OK, its time to fill skb_shinfo(skb)->gso_{segs|size} */
    skb_shinfo(skb)->gso_segs = tcp_skb_pcount(skb);
    skb_shinfo(skb)->gso_size = tcp_skb_mss(skb);

    /* Leave earliest departure time in skb->tstamp (skb->skb_mstamp_ns) */

    /* Cleanup our debris for IP stacks */
    memset(skb->cb, 0, max(sizeof(struct inet_skb_parm),
                           sizeof(struct inet6_skb_parm)));

    tcp_add_tx_delay(skb, tp);

    err = icsk->icsk_af_ops->queue_xmit(sk, skb, &inet->cork.fl);

    if (unlikely(err > 0)) {
        tcp_enter_cwr(sk);
        err = net_xmit_eval(err);
    }
    if (!err && oskb) {
        tcp_update_skb_after_send(sk, oskb, prior_wstamp);
        tcp_rate_skb_sent(sk, oskb);
    }
    return err;
}
```

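tcp_transmit_skb is the last transport-layer step on the TCP send path. It first fills in the header of the TCP segment and then calls the send interface provided by the network layer, icsk->icsk_af_ops->queue_xmit(sk, skb, &inet->cork.fl), to send the data. At this point the data leaves the transport layer and the transport layer's job is done.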
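One line of the header-building code is worth unpacking: the 16-bit word that combines the header length with the flag bits, written in the kernel as ((tcp_header_size >> 2) << 12) | tcp_flags. The sketch below reproduces that packing in host byte order so the values can be inspected; the kernel additionally applies htons() before storing the word. The `TOY_` constants and helper names are hypothetical, although the flag values match the bit positions in the TCP header.

```c
#include <assert.h>
#include <stdint.h>

/* Flag bits in the low byte of the 16-bit word. */
#define TOY_TCP_FIN 0x01
#define TOY_TCP_SYN 0x02
#define TOY_TCP_RST 0x04
#define TOY_TCP_PSH 0x08
#define TOY_TCP_ACK 0x10
#define TOY_TCP_URG 0x20

/* Pack the header length (in bytes, a multiple of 4) and the flags.
 * The top 4 bits hold the length in 32-bit words (the data offset). */
static uint16_t toy_pack_off_flags(unsigned header_bytes, uint8_t flags)
{
    return (uint16_t)(((header_bytes >> 2) << 12) | flags);
}

/* Recover the header length in bytes from the packed word. */
static unsigned toy_header_bytes(uint16_t word)
{
    return (unsigned)(word >> 12) << 2;
}

/* Recover the flag byte from the packed word. */
static uint8_t toy_flags(uint16_t word)
{
    return (uint8_t)(word & 0xFF);
}
```

A segment with a 20-byte header and PSH and ACK set packs to 0x5018, and a SYN with 12 bytes of options (a 32-byte header) packs to 0x8002.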

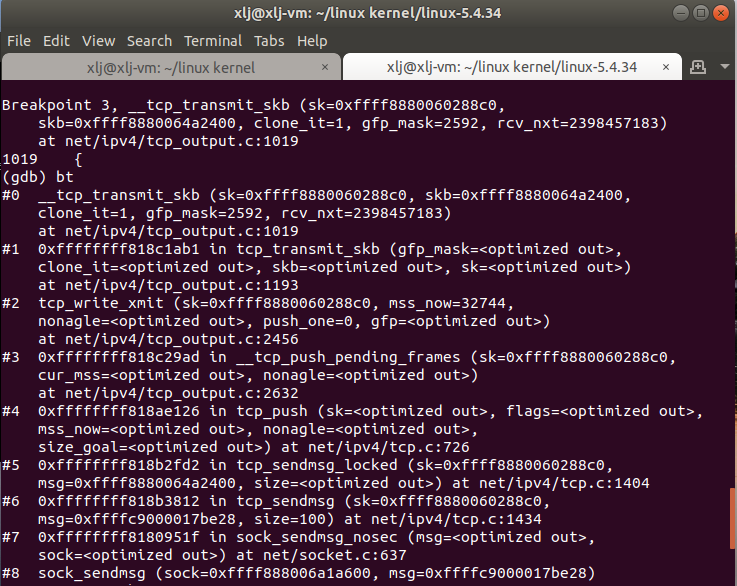

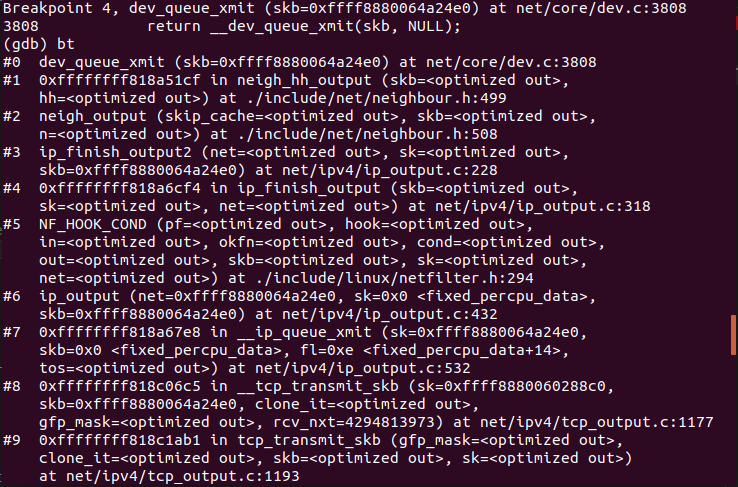

gdb调试验证:

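4.3 Network Layer

Flow:

1. First, ip_queue_xmit(skb) checks the routing information in skb->dst. If there is none, for example for the first packet on a socket, it uses ip_route_output() to select a route.

2. Next, it fills in the fields of the IP header, such as version, header length, and TOS.

3. For fragmentation and related steps, refer to the relevant documentation. The basic idea is this: a GSO packet is handed to ip_finish_output_gso; otherwise, if the packet is longer than the MTU, ip_fragment is called to fragment it; otherwise ip_finish_output2 is called to send the data. ip_fragment checks the IP_DF flag. If fragmentation is forbidden for the packet, it calls icmp_send() to send the sender a destination-unreachable ICMP message whose reason is "fragmentation needed but DF set", and it drops the packet: it records a fragmentation failure, frees the skb, and returns the message-too-long error code.

4. Next, ip_finish_output2 sets up the link-layer header. If a cached link-layer header exists (hh is not empty), it is copied into the skb. If not, neigh_resolve_output is called and ARP is used to obtain it.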
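The decision in step 3 and the effect of fragmentation can be sketched with two small helpers. These are hypothetical user-space functions, not kernel code: they ignore GSO, the ignore_df and frag_max_size details visible in ip_fragment, and IP options. The only rule taken from the IP protocol itself is that every fragment except the last carries a payload that is a multiple of 8 bytes.

```c
#include <assert.h>
#include <stddef.h>

enum toy_verdict {
    TOY_SEND,      /* fits in the MTU: send as is */
    TOY_FRAGMENT,  /* too long and fragmentation is allowed */
    TOY_TOO_BIG    /* too long and DF is set: drop, report an error */
};

/* Decide what to do with a non-GSO packet of total length len. */
static enum toy_verdict toy_output_verdict(size_t len, size_t mtu, int df)
{
    if (len <= mtu)
        return TOY_SEND;
    return df ? TOY_TOO_BIG : TOY_FRAGMENT;
}

/* Number of fragments needed for payload bytes of data when every
 * fragment carries its own hdr-byte IP header. Returns 0 if the MTU
 * leaves no room for data. */
static size_t toy_fragment_count(size_t payload, size_t mtu, size_t hdr)
{
    size_t per_frag;

    if (mtu <= hdr)
        return 0;
    per_frag = (mtu - hdr) & ~(size_t)7; /* offsets count 8-byte units */
    if (per_frag == 0)
        return 0;
    return (payload + per_frag - 1) / per_frag;
}
```

With a 1500-byte MTU and a 20-byte header, each fragment carries up to 1480 bytes of data, so 4000 bytes of payload need three fragments (1480, 1480, and 1040 bytes).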

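Source analysis:

The entry function is ip_queue_xmit, the send callback that the IP layer provides to the TCP layer. ip_queue_xmit() handles packet output for connection-oriented sockets. While a socket is connected, every packet sent from it has a fixed route, so there is no need to look up the destination entry for each outgoing packet. The socket can be bound directly to the route entry, which is done through the socket's destination cache pointer (dst_cache). ip_queue_xmit() first builds the IP header for the packet and, once the packet has passed the local packet filter, outputs it, fragmenting it if needed (ip_fragment).

```c
static inline int ip_queue_xmit(struct sock *sk, struct sk_buff *skb,
                                struct flowi *fl)
{
    return __ip_queue_xmit(sk, skb, fl, inet_sk(sk)->tos);
}
```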

ip_queue_xmit is a wrapper that calls __ip_queue_xmit:

```c
int __ip_queue_xmit(struct sock *sk, struct sk_buff *skb, struct flowi *fl,
                    __u8 tos)
{
    struct inet_sock *inet = inet_sk(sk);
    struct net *net = sock_net(sk);
    struct ip_options_rcu *inet_opt;
    struct flowi4 *fl4;
    struct rtable *rt;
    struct iphdr *iph;
    int res;

    /* Skip all of this if the packet is already routed,
     * f.e. by something like SCTP.
     */
    rcu_read_lock();
    inet_opt = rcu_dereference(inet->inet_opt);
    fl4 = &fl->u.ip4;
    rt = skb_rtable(skb);
    if (rt)
        goto packet_routed;

    /* Make sure we can route this packet. */
    rt = (struct rtable *)__sk_dst_check(sk, 0);
    if (!rt) {
        __be32 daddr;

        /* Use correct destination address if we have options. */
        daddr = inet->inet_daddr;
        if (inet_opt && inet_opt->opt.srr)
            daddr = inet_opt->opt.faddr;

        /* If this fails, retransmit mechanism of transport layer will
         * keep trying until route appears or the connection times
         * itself out.
         */
        rt = ip_route_output_ports(net, fl4, sk,
                                   daddr, inet->inet_saddr,
                                   inet->inet_dport,
                                   inet->inet_sport,
                                   sk->sk_protocol,
                                   RT_CONN_FLAGS_TOS(sk, tos),
                                   sk->sk_bound_dev_if);
        if (IS_ERR(rt))
            goto no_route;
        sk_setup_caps(sk, &rt->dst);
    }
    skb_dst_set_noref(skb, &rt->dst);

packet_routed:
    if (inet_opt && inet_opt->opt.is_strictroute && rt->rt_uses_gateway)
        goto no_route;

    /* OK, we know where to send it, allocate and build IP header. */
    skb_push(skb, sizeof(struct iphdr) + (inet_opt ? inet_opt->opt.optlen : 0));
    skb_reset_network_header(skb);
    iph = ip_hdr(skb);
    *((__be16 *)iph) = htons((4 << 12) | (5 << 8) | (tos & 0xff));
    if (ip_dont_fragment(sk, &rt->dst) && !skb->ignore_df)
        iph->frag_off = htons(IP_DF);
    else
        iph->frag_off = 0;
    iph->ttl      = ip_select_ttl(inet, &rt->dst);
    iph->protocol = sk->sk_protocol;
    ip_copy_addrs(iph, fl4);

    /* Transport layer set skb->h.foo itself. */

    if (inet_opt && inet_opt->opt.optlen) {
        iph->ihl += inet_opt->opt.optlen >> 2;
        ip_options_build(skb, &inet_opt->opt, inet->inet_daddr, rt, 0);
    }

    ip_select_ident_segs(net, skb, sk,
                         skb_shinfo(skb)->gso_segs ?: 1);

    /* TODO : should we use skb->sk here instead of sk ? */
    skb->priority = sk->sk_priority;
    skb->mark = sk->sk_mark;

    res = ip_local_out(net, sk, skb);
    rcu_read_unlock();
    return res;

no_route:
    rcu_read_unlock();
    IP_INC_STATS(net, IPSTATS_MIB_OUTNOROUTES);
    kfree_skb(skb);
    return -EHOSTUNREACH;
}
EXPORT_SYMBOL(__ip_queue_xmit);
```

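Here skb_rtable(skb) fetches the route cached in the skb. If a route is cached, execution jumps straight to the packet_routed label. Otherwise the function checks the route cached on the socket with __sk_dst_check and, if that is missing too, calls ip_route_output_ports to look up a route. Finally it calls ip_local_out to send the packet.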
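The first 16 bits of the IP header are written in __ip_queue_xmit with a single expression, (4 << 12) | (5 << 8) | (tos & 0xff). The sketch below shows the same packing in host byte order, generalized slightly so the header length can vary; the helper names are hypothetical, and the kernel applies htons() to the result before storing it.

```c
#include <assert.h>
#include <stdint.h>

/* Pack version (4 bits), header length in 32-bit words (4 bits),
 * and type of service (8 bits) into one 16-bit word. */
static uint16_t toy_pack_ver_ihl_tos(uint8_t version, uint8_t ihl_words,
                                     uint8_t tos)
{
    return (uint16_t)(((version & 0xF) << 12) |
                      ((ihl_words & 0xF) << 8) |
                      tos);
}

/* Header length in bytes encoded in the packed word. */
static unsigned toy_ip_header_bytes(uint16_t word)
{
    return ((word >> 8) & 0xF) * 4u;
}
```

An IPv4 header without options has a length of 5 words, so with TOS 0 the word is 0x4500, the familiar first two bytes of most IPv4 packets in a capture. With 8 bytes of options the length becomes 7 words and the word is 0x4700.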

```c
int ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)
{
    int err;

    err = __ip_local_out(net, sk, skb);
    if (likely(err == 1))
        err = dst_output(net, sk, skb);

    return err;
}
EXPORT_SYMBOL_GPL(ip_local_out);
```

Like ip_queue_xmit, ip_local_out calls a double-underscore helper internally, __ip_local_out:

```c
int __ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)
{
    struct iphdr *iph = ip_hdr(skb);

    iph->tot_len = htons(skb->len);
    ip_send_check(iph);

    /* if egress device is enslaved to an L3 master device pass the
     * skb to its handler for processing
     */
    skb = l3mdev_ip_out(sk, skb);
    if (unlikely(!skb))
        return 0;

    skb->protocol = htons(ETH_P_IP);

    return nf_hook(NFPROTO_IPV4, NF_INET_LOCAL_OUT,
                   net, sk, skb, NULL, skb_dst(skb)->dev,
                   dst_output);
}
```

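__ip_local_out returns the result of nf_hook, which runs the NF_INET_LOCAL_OUT netfilter hook with dst_output as the continuation. dst_output invokes the output callback of the packet's route entry; for locally generated IPv4 unicast traffic this leads to ip_finish_output, which in turn calls __ip_finish_output.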

    static int __ip_finish_output(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        unsigned int mtu;

    #if defined(CONFIG_NETFILTER) && defined(CONFIG_XFRM)
        /* Policy lookup after SNAT yielded a new policy */
        if (skb_dst(skb)->xfrm) {
            IPCB(skb)->flags |= IPSKB_REROUTED;
            return dst_output(net, sk, skb);
        }
    #endif
        mtu = ip_skb_dst_mtu(sk, skb);
        if (skb_is_gso(skb))
            return ip_finish_output_gso(net, sk, skb, mtu);

        if (skb->len > mtu || (IPCB(skb)->flags & IPSKB_FRAG_PMTU))
            return ip_fragment(net, sk, skb, mtu, ip_finish_output2);

        return ip_finish_output2(net, sk, skb);
    }

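If the packet needs fragmentation (its length exceeds the MTU, or the IPSKB_FRAG_PMTU flag is set), ip_fragment is called; otherwise ip_finish_output2 is called directly. GSO packets take the separate ip_finish_output_gso path.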

    static int ip_fragment(struct net *net, struct sock *sk, struct sk_buff *skb,
                           unsigned int mtu,
                           int (*output)(struct net *, struct sock *, struct sk_buff *))
    {
        struct iphdr *iph = ip_hdr(skb);

        if ((iph->frag_off & htons(IP_DF)) == 0)
            return ip_do_fragment(net, sk, skb, output);

        if (unlikely(!skb->ignore_df ||
                     (IPCB(skb)->frag_max_size &&
                      IPCB(skb)->frag_max_size > mtu))) {
            IP_INC_STATS(net, IPSTATS_MIB_FRAGFAILS);
            icmp_send(skb, ICMP_DEST_UNREACH, ICMP_FRAG_NEEDED,
                      htonl(mtu));
            kfree_skb(skb);
            return -EMSGSIZE;
        }

        return ip_do_fragment(net, sk, skb, output);
    }
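The length-versus-MTU test that leads into ip_fragment can be made concrete with a little arithmetic. The sketch below assumes a 20-byte header without options and uses hypothetical helper names; it is a model of the sizing rules, not the logic of ip_do_fragment itself.

```c
#include <assert.h>

#define IPV4_HDR_LEN 20u   /* assumption: header without IP options */

/* Largest payload one fragment can carry: what fits in the MTU after the
 * header, rounded down to a multiple of 8, because the fragment offset
 * field counts 8-byte units. */
unsigned frag_payload_max(unsigned mtu)
{
    assert(mtu >= IPV4_HDR_LEN + 8);
    return (mtu - IPV4_HDR_LEN) & ~7u;
}

/* Number of packets needed for a datagram of total_len bytes (header
 * included). A result of 1 means no fragmentation is required. */
unsigned frag_count(unsigned total_len, unsigned mtu)
{
    unsigned payload, per_frag;

    assert(total_len >= IPV4_HDR_LEN);
    if (total_len <= mtu)
        return 1;
    payload = total_len - IPV4_HDR_LEN;
    per_frag = frag_payload_max(mtu);
    return (payload + per_frag - 1) / per_frag;   /* round up */
}

/* Value of the 13-bit fragment offset field for fragment number i. */
unsigned frag_offset_field(unsigned i, unsigned mtu)
{
    return i * frag_payload_max(mtu) / 8;
}
```

For a 1500-byte MTU each fragment carries at most 1480 payload bytes, so a 4020-byte datagram is split into three fragments with offset fields 0, 185 and 370.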

    static int ip_finish_output2(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        struct dst_entry *dst = skb_dst(skb);
        struct rtable *rt = (struct rtable *)dst;
        struct net_device *dev = dst->dev;
        unsigned int hh_len = LL_RESERVED_SPACE(dev);
        struct neighbour *neigh;
        bool is_v6gw = false;

        if (rt->rt_type == RTN_MULTICAST) {
            IP_UPD_PO_STATS(net, IPSTATS_MIB_OUTMCAST, skb->len);
        } else if (rt->rt_type == RTN_BROADCAST)
            IP_UPD_PO_STATS(net, IPSTATS_MIB_OUTBCAST, skb->len);

        /* Be paranoid, rather than too clever. */
        if (unlikely(skb_headroom(skb) < hh_len && dev->header_ops)) {
            struct sk_buff *skb2;

            skb2 = skb_realloc_headroom(skb, LL_RESERVED_SPACE(dev));
            if (!skb2) {
                kfree_skb(skb);
                return -ENOMEM;
            }
            if (skb->sk)
                skb_set_owner_w(skb2, skb->sk);
            consume_skb(skb);
            skb = skb2;
        }

        if (lwtunnel_xmit_redirect(dst->lwtstate)) {
            int res = lwtunnel_xmit(skb);

            if (res < 0 || res == LWTUNNEL_XMIT_DONE)
                return res;
        }

        rcu_read_lock_bh();
        neigh = ip_neigh_for_gw(rt, skb, &is_v6gw);
        if (!IS_ERR(neigh)) {
            int res;

            sock_confirm_neigh(skb, neigh);
            /* if crossing protocols, can not use the cached header */
            res = neigh_output(neigh, skb, is_v6gw);
            rcu_read_unlock_bh();
            return res;
        }
        rcu_read_unlock_bh();

        net_dbg_ratelimited("%s: No header cache and no neighbour!\n",
                            __func__);
        kfree_skb(skb);
        return -EINVAL;
    }

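Once the IP header has been built and the fragmentation check is done, ip_finish_output2 calls neigh_output, the output function of the neighbour subsystem.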

    static inline int neigh_output(struct neighbour *n, struct sk_buff *skb,
                                   bool skip_cache)
    {
        const struct hh_cache *hh = &n->hh;

        if ((n->nud_state & NUD_CONNECTED) && hh->hh_len && !skip_cache)
            return neigh_hh_output(hh, skb);
        else
            return n->output(n, skb);
    }

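Output takes one of two paths depending on whether a cached layer-2 header is available. With a cached header (and a neighbour in a connected state), neigh_hh_output provides the fast path; without one, the neighbour's output callback n->output handles the slow path.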

    static inline int neigh_hh_output(const struct hh_cache *hh, struct sk_buff *skb)
    {
        unsigned int hh_alen = 0;
        unsigned int seq;
        unsigned int hh_len;

        do {
            seq = read_seqbegin(&hh->hh_lock);
            hh_len = READ_ONCE(hh->hh_len);
            if (likely(hh_len <= HH_DATA_MOD)) {
                hh_alen = HH_DATA_MOD;

                /* skb_push() would proceed silently if we have room for
                 * the unaligned size but not for the aligned size:
                 * check headroom explicitly.
                 */
                if (likely(skb_headroom(skb) >= HH_DATA_MOD)) {
                    /* this is inlined by gcc */
                    memcpy(skb->data - HH_DATA_MOD, hh->hh_data,
                           HH_DATA_MOD);
                }
            } else {
                hh_alen = HH_DATA_ALIGN(hh_len);

                if (likely(skb_headroom(skb) >= hh_alen)) {
                    memcpy(skb->data - hh_alen, hh->hh_data,
                           hh_alen);
                }
            }
        } while (read_seqretry(&hh->hh_lock, seq));

        if (WARN_ON_ONCE(skb_headroom(skb) < hh_alen)) {
            kfree_skb(skb);
            return NET_XMIT_DROP;
        }

        __skb_push(skb, hh_len);
        return dev_queue_xmit(skb);
    }
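neigh_hh_output copies the cached header in aligned chunks (hh_alen bytes) but then exposes only hh_len bytes with __skb_push. The sketch below models that idea in user space. It assumes a 16-byte alignment unit and a cache buffer in which the real header is right-aligned behind padding; HH_MOD, hh_align and push_cached_header are hypothetical names for illustration.

```c
#include <assert.h>
#include <string.h>

#define HH_MOD 16u   /* assumed alignment unit, modelled on HH_DATA_MOD */

/* Round a header length up to the next multiple of HH_MOD. */
unsigned hh_align(unsigned hh_len)
{
    return (hh_len + HH_MOD - 1) & ~(HH_MOD - 1);
}

/* Prepend a cached header in front of `data`. `cache` holds
 * hh_align(hh_len) bytes with the real header right-aligned (padding
 * first). Returns the new start of the packet, or NULL when the
 * headroom between buf_start and data is too small. */
unsigned char *push_cached_header(unsigned char *buf_start,
                                  unsigned char *data,
                                  const unsigned char *cache,
                                  unsigned hh_len)
{
    unsigned alen = hh_align(hh_len);

    assert(data >= buf_start);
    if ((size_t)(data - buf_start) < alen)
        return NULL;                   /* no room for the aligned copy */
    memcpy(data - alen, cache, alen);  /* copy padding and header at once */
    return data - hh_len;              /* expose only the real header */
}
```

For a 14-byte Ethernet header, 16 bytes are copied and the packet start moves back by 14, so the two padding bytes land in unused headroom.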

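Finally, neigh_hh_output calls dev_queue_xmit to hand the packet to the link layer.

gdb debugging verification:

4.4 Link layer and physical layer

Flow:

1. The data link layer provides reliable transfer over an unreliable physical medium. Its duties include physical addressing, framing, flow control, error detection and retransmission. The unit of data at this layer is the frame. Processing starts at dev_queue_xmit in net/core/dev.c: the upper layer calls dev_queue_xmit, which calls __dev_queue_xmit, which in turn calls dev_hard_start_xmit to transmit the skb.

2. dev_hard_start_xmit calls xmit_one for each skb. xmit_one calls netdev_start_xmit, which is a thin wrapper around __netdev_start_xmit.

3. __netdev_start_xmit invokes the ndo_start_xmit callback implemented by the network device driver, which hands the data to the NIC. On receiving the transmit request, the hardware copies the data from main memory into its internal RAM (buffer) by DMA. During the copy it adds the Ethernet framing: header, inter-frame gap (IFG), preamble and CRC. On Ethernet, the physical layer sends using CSMA/CD on shared (half-duplex) media, that is, it listens for collisions on the link while transmitting. Once the NIC has finished sending the frame it raises an interrupt to notify the CPU, and the driver's interrupt handler can then free the saved skb.

Source code analysis:

The upper layer enters link-layer processing by calling dev_queue_xmit, which in practice calls __dev_queue_xmit.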

    /**
     *  __dev_queue_xmit - transmit a buffer
     *  @skb: buffer to transmit
     *  @sb_dev: suboordinate device used for L2 forwarding offload
     *
     *  Queue a buffer for transmission to a network device. The caller must
     *  have set the device and priority and built the buffer before calling
     *  this function. The function can be called from an interrupt.
     *
     *  A negative errno code is returned on a failure. A success does not
     *  guarantee the frame will be transmitted as it may be dropped due
     *  to congestion or traffic shaping.
     *
     * -----------------------------------------------------------------------------------
     *      I notice this method can also return errors from the queue disciplines,
     *      including NET_XMIT_DROP, which is a positive value.  So, errors can also
     *      be positive.
     *
     *      Regardless of the return value, the skb is consumed, so it is currently
     *      difficult to retry a send to this method.  (You can bump the ref count
     *      before sending to hold a reference for retry if you are careful.)
     *
     *      When calling this method, interrupts MUST be enabled.  This is because
     *      the BH enable code must have IRQs enabled so that it will not deadlock.
     *          --BLG
     */
    static int __dev_queue_xmit(struct sk_buff *skb, struct net_device *sb_dev)
    {
        struct net_device *dev = skb->dev;
        struct netdev_queue *txq;
        struct Qdisc *q;
        int rc = -ENOMEM;
        bool again = false;

        skb_reset_mac_header(skb);

        if (unlikely(skb_shinfo(skb)->tx_flags & SKBTX_SCHED_TSTAMP))
            __skb_tstamp_tx(skb, NULL, skb->sk, SCM_TSTAMP_SCHED);

        /* Disable soft irqs for various locks below. Also
         * stops preemption for RCU.
         */
        rcu_read_lock_bh();

        skb_update_prio(skb);

        qdisc_pkt_len_init(skb);
    #ifdef CONFIG_NET_CLS_ACT
        skb->tc_at_ingress = 0;
    # ifdef CONFIG_NET_EGRESS
        if (static_branch_unlikely(&egress_needed_key)) {
            skb = sch_handle_egress(skb, &rc, dev);
            if (!skb)
                goto out;
        }
    # endif
    #endif
        /* If device/qdisc don't need skb->dst, release it right now while
         * its hot in this cpu cache.
         */
        if (dev->priv_flags & IFF_XMIT_DST_RELEASE)
            skb_dst_drop(skb);
        else
            skb_dst_force(skb);

        txq = netdev_core_pick_tx(dev, skb, sb_dev);
        q = rcu_dereference_bh(txq->qdisc);

        trace_net_dev_queue(skb);
        if (q->enqueue) {
            rc = __dev_xmit_skb(skb, q, dev, txq);
            goto out;
        }

        /* The device has no queue. Common case for software devices:
         * loopback, all the sorts of tunnels...

         * Really, it is unlikely that netif_tx_lock protection is necessary
         * here.  (f.e. loopback and IP tunnels are clean ignoring statistics
         * counters.)
         * However, it is possible, that they rely on protection
         * made by us here.

         * Check this and shot the lock. It is not prone from deadlocks.
         *Either shot noqueue qdisc, it is even simpler 8)
         */
        if (dev->flags & IFF_UP) {
            int cpu = smp_processor_id(); /* ok because BHs are off */

            if (txq->xmit_lock_owner != cpu) {
                if (dev_xmit_recursion())
                    goto recursion_alert;

                skb = validate_xmit_skb(skb, dev, &again);
                if (!skb)
                    goto out;

                HARD_TX_LOCK(dev, txq, cpu);

                if (!netif_xmit_stopped(txq)) {
                    dev_xmit_recursion_inc();
                    skb = dev_hard_start_xmit(skb, dev, txq, &rc);
                    dev_xmit_recursion_dec();
                    if (dev_xmit_complete(rc)) {
                        HARD_TX_UNLOCK(dev, txq);
                        goto out;
                    }
                }
                HARD_TX_UNLOCK(dev, txq);
                net_crit_ratelimited("Virtual device %s asks to queue packet!\n",
                                     dev->name);
            } else {
                /* Recursion is detected! It is possible,
                 * unfortunately
                 */
    recursion_alert:
                net_crit_ratelimited("Dead loop on virtual device %s, fix it urgently!\n",
                                     dev->name);
            }
        }

        rc = -ENETDOWN;
        rcu_read_unlock_bh();

        atomic_long_inc(&dev->tx_dropped);
        kfree_skb_list(skb);
        return rc;
    out:
        rcu_read_unlock_bh();
        return rc;
    }

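__dev_queue_xmit passes the skb on for transmission: through the queueing discipline (__dev_xmit_skb) when the device has a queue, or by calling dev_hard_start_xmit directly for queueless devices such as loopback and tunnels.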

    struct sk_buff *dev_hard_start_xmit(struct sk_buff *first, struct net_device *dev,
                                        struct netdev_queue *txq, int *ret)
    {
        struct sk_buff *skb = first;
        int rc = NETDEV_TX_OK;

        while (skb) {
            struct sk_buff *next = skb->next;

            skb_mark_not_on_list(skb);
            rc = xmit_one(skb, dev, txq, next != NULL);
            if (unlikely(!dev_xmit_complete(rc))) {
                skb->next = next;
                goto out;
            }

            skb = next;
            if (netif_tx_queue_stopped(txq) && skb) {
                rc = NETDEV_TX_BUSY;
                break;
            }
        }

    out:
        *ret = rc;
        return skb;
    }

dev_hard_start_xmit walks the skb list and calls xmit_one for each buffer; xmit_one then calls netdev_start_xmit.

    static int xmit_one(struct sk_buff *skb, struct net_device *dev,
                        struct netdev_queue *txq, bool more)
    {
        unsigned int len;
        int rc;

        if (dev_nit_active(dev))
            dev_queue_xmit_nit(skb, dev);

        len = skb->len;
        trace_net_dev_start_xmit(skb, dev);
        rc = netdev_start_xmit(skb, dev, txq, more);
        trace_net_dev_xmit(skb, rc, dev, len);

        return rc;
    }

netdev_start_xmit in turn does its work by calling __netdev_start_xmit.

    static inline netdev_tx_t netdev_start_xmit(struct sk_buff *skb, struct net_device *dev,
                                                struct netdev_queue *txq, bool more)
    {
        const struct net_device_ops *ops = dev->netdev_ops;
        netdev_tx_t rc;

        rc = __netdev_start_xmit(ops, skb, dev, more);
        if (rc == NETDEV_TX_OK)
            txq_trans_update(txq);

        return rc;
    }

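__netdev_start_xmit calls the ndo_start_xmit callback, a function pointer in struct net_device_ops (reached through dev->netdev_ops) that each network device driver implements. This hands the data to the NIC. On receiving the transmit request, the hardware copies the data from main memory into its internal RAM (buffer) by DMA. During the copy it adds the Ethernet framing: header, inter-frame gap (IFG), preamble and CRC. On Ethernet, the physical layer sends using CSMA/CD on shared (half-duplex) media, that is, it listens for collisions on the link while transmitting.

Once the NIC has finished sending the frame it raises an interrupt to notify the CPU, and the driver's interrupt handler can then free the saved skb.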
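The callback dispatch described above follows a common C pattern: the core code only knows a table of function pointers, and each driver supplies its own implementation. A minimal user-space sketch, with every type and function name (toy_dev, toy_dev_ops, toy_start_xmit and so on) invented for illustration:

```c
#include <assert.h>
#include <stddef.h>

enum tx_status { TX_OK = 0, TX_BUSY = 1 };   /* stand-ins for NETDEV_TX_* */

struct toy_dev;

/* Per-driver operations table, in the spirit of struct net_device_ops. */
struct toy_dev_ops {
    enum tx_status (*start_xmit)(struct toy_dev *dev,
                                 const void *frame, size_t len);
};

struct toy_dev {
    const struct toy_dev_ops *ops;
    unsigned ring_free;      /* free transmit descriptors */
    unsigned tx_packets;     /* frames accepted so far */
    unsigned long last_tx;   /* time of the last successful transmit */
};

/* A "driver" implementation: accept a frame if the ring has room. */
enum tx_status toy_nic_start_xmit(struct toy_dev *dev,
                                  const void *frame, size_t len)
{
    (void)frame;
    (void)len;
    if (dev->ring_free == 0)
        return TX_BUSY;
    dev->ring_free--;
    dev->tx_packets++;
    return TX_OK;
}

const struct toy_dev_ops toy_nic_ops = { .start_xmit = toy_nic_start_xmit };

/* Core-side wrapper: dispatch through the ops table and record the
 * transmit time only on success. */
enum tx_status toy_start_xmit(struct toy_dev *dev, const void *frame,
                              size_t len, unsigned long now)
{
    enum tx_status rc;

    assert(dev && dev->ops && dev->ops->start_xmit);
    rc = dev->ops->start_xmit(dev, frame, len);
    if (rc == TX_OK)
        dev->last_tx = now;
    return rc;
}
```

The wrapper mirrors the shape of netdev_start_xmit shown earlier: call the driver, then update the queue's transmit timestamp only when the driver reports success.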

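gdb verification:

5. Analysis of the recv path

5.1 Link layer and physical layer

Flow:

1. When a packet arrives at the machine's physical NIC, an interrupt is raised and the packet is transferred by DMA into the rx_ring in Linux kernel memory. The interrupt handler allocates an sk_buff structure, copies the received frame from the network adapter's I/O port into the sk_buff buffer, and sets the sk_buff fields that the upper-layer protocols will use, for example skb->protocol.

2. A softirq is then raised (NET_RX_SOFTIRQ, a constant defined in include/linux/interrupt.h) to tell the kernel that a new frame has arrived. Softirq processing calls net_rx_action. The packet is removed from the rx_ring and enters netif_receive_skb processing.

3. Based on the network-layer packet types registered in the global ptype_all and ptype_base structures, netif_receive_skb hands the packet to the receive function of the matching network-layer protocol (in the INET domain mainly ip_rcv and arp_rcv).

Source code analysis:

The entry point for receiving data is net_rx_action.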

    static __latent_entropy void net_rx_action(struct softirq_action *h)
    {
        struct softnet_data *sd = this_cpu_ptr(&softnet_data);
        unsigned long time_limit = jiffies +
            usecs_to_jiffies(netdev_budget_usecs);
        int budget = netdev_budget;
        LIST_HEAD(list);
        LIST_HEAD(repoll);

        local_irq_disable();
        list_splice_init(&sd->poll_list, &list);
        local_irq_enable();

        for (;;) {
            struct napi_struct *n;

            if (list_empty(&list)) {
                if (!sd_has_rps_ipi_waiting(sd) && list_empty(&repoll))
                    goto out;
                break;
            }

            n = list_first_entry(&list, struct napi_struct, poll_list);
            budget -= napi_poll(n, &repoll);

            /* If softirq window is exhausted then punt.
             * Allow this to run for 2 jiffies since which will allow
             * an average latency of 1.5/HZ.
             */
            if (unlikely(budget <= 0 ||
                         time_after_eq(jiffies, time_limit))) {
                sd->time_squeeze++;
                break;
            }
        }

        local_irq_disable();

        list_splice_tail_init(&sd->poll_list, &list);
        list_splice_tail(&repoll, &list);
        list_splice(&list, &sd->poll_list);
        if (!list_empty(&sd->poll_list))
            __raise_softirq_irqoff(NET_RX_SOFTIRQ);

        net_rps_action_and_irq_enable(sd);
    out:
        __kfree_skb_flush();
    }
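The budget accounting in net_rx_action above (subtract what each poll processed, stop once the budget is used up) can be modelled in a few lines. This is a simplified sketch: it ignores the time limit and list handling, and toy_napi, toy_poll and toy_rx_action are hypothetical names.

```c
#include <assert.h>

struct toy_napi {
    unsigned pending;   /* packets waiting in this device's receive ring */
    unsigned weight;    /* most packets a single poll call may process */
};

/* One poll call: process at most `weight` packets and report how many. */
unsigned toy_poll(struct toy_napi *n)
{
    unsigned done;

    assert(n->weight > 0);
    done = n->pending < n->weight ? n->pending : n->weight;
    n->pending -= done;
    return done;
}

/* Poll the devices round-robin until every ring is empty or the budget
 * is spent. Returns the number of packets processed; *squeezed is set
 * to 1 when work was left behind for a later softirq run. */
unsigned toy_rx_action(struct toy_napi *devs, unsigned ndev, int budget,
                       int *squeezed)
{
    unsigned total = 0, i;
    int progress = 1;

    assert(budget > 0);
    *squeezed = 0;
    while (progress && budget > 0) {
        progress = 0;
        for (i = 0; i < ndev && budget > 0; i++) {
            unsigned done = toy_poll(&devs[i]);

            total += done;
            budget -= (int)done;   /* checked only after the poll returns */
            if (done)
                progress = 1;
        }
    }
    for (i = 0; i < ndev; i++)
        if (devs[i].pending)
            *squeezed = 1;
    return total;
}
```

Because the budget is checked only after a poll returns, the total can overshoot it by up to one weight, as in the kernel loop.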

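net_rx_action calls napi_poll, which invokes the poll callback registered by the NIC driver to process packets one by one. In its poll function the driver reads, one after another, the packets the NIC has written to memory; only the driver knows the in-memory format of those packets. The driver converts each one into the skb format understood by the kernel networking code and then calls napi_gro_receive.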

    gro_result_t napi_gro_receive(struct napi_struct *napi, struct sk_buff *skb)
    {
        gro_result_t ret;

        skb_mark_napi_id(skb, napi);
        trace_napi_gro_receive_entry(skb);

        skb_gro_reset_offset(skb);

        ret = napi_skb_finish(dev_gro_receive(napi, skb), skb);
        trace_napi_gro_receive_exit(ret);

        return ret;
    }
    EXPORT_SYMBOL(napi_gro_receive);

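After GRO processing, the packet is handed on to the netif_receive_skb family of functions (the exact intermediate calls depend on the kernel version). The netif_receive_skb_core variant looks like this: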

    int netif_receive_skb_core(struct sk_buff *skb)
    {
        int ret;

        rcu_read_lock();
        ret = __netif_receive_skb_one_core(skb, false);
        rcu_read_unlock();

        return ret;
    }
    EXPORT_SYMBOL(netif_receive_skb_core);

netif_receive_skb_core calls __netif_receive_skb_one_core, which finds the matching packet-type handler and passes the packet up to it; for IPv4 that handler is ip_rcv.

    static int __netif_receive_skb_one_core(struct sk_buff *skb, bool pfmemalloc)
    {
        struct net_device *orig_dev = skb->dev;
        struct packet_type *pt_prev = NULL;
        int ret;

        ret = __netif_receive_skb_core(skb, pfmemalloc, &pt_prev);
        if (pt_prev)
            ret = INDIRECT_CALL_INET(pt_prev->func, ipv6_rcv, ip_rcv, skb,
                                     skb->dev, pt_prev, orig_dev);
        return ret;
    }

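Once all packets in memory have been processed (that is, the poll function has finished), the NIC's hardware interrupt is re-enabled so that the NIC notifies the CPU the next time data arrives.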

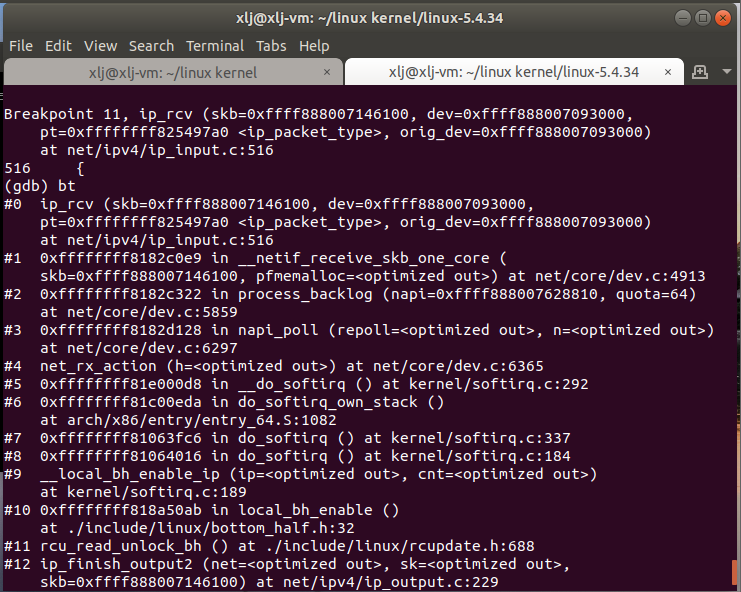

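gdb debugging verification:

5.2 Network layer

Flow:

1. The entry point of the IP layer is ip_rcv. It first performs a series of checks, including the packet checksum. The packet then passes through the registered pre-routing netfilter hooks (where IP defragmentation may take place if needed) and finally reaches ip_rcv_finish.

2. ip_rcv_finish enters the routing stage. Through ip_rcv_finish_core it looks up the route (the ip_route_input family of functions) and decides whether the packet is delivered locally, forwarded or dropped:
(1) If the packet is addressed to the local host, ip_local_deliver is called. It reassembles fragments if necessary (merging several IP packets) and then calls ip_local_deliver_finish, which uses the protocol number of the next layer to invoke the matching handler, such as tcp_v4_rcv (TCP), udp_rcv (UDP), icmp_rcv (ICMP) or igmp_rcv (IGMP). For TCP, tcp_v4_rcv is called and processing enters the TCP stack.
(2) If the packet has to be forwarded, it enters the forwarding path. That path handles the TTL and then calls dst_output, which <1> runs the netfilter hooks, <2> performs IP fragmentation and <3> calls dev_queue_xmit to enter link-layer processing.

Source code analysis:

The entry point of the IP layer is ip_rcv.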

    /*
     * IP receive entry point
     */
    int ip_rcv(struct sk_buff *skb, struct net_device *dev, struct packet_type *pt,
               struct net_device *orig_dev)
    {
        struct net *net = dev_net(dev);

        skb = ip_rcv_core(skb, net);
        if (skb == NULL)
            return NET_RX_DROP;

        return NF_HOOK(NFPROTO_IPV4, NF_INET_PRE_ROUTING,
                       net, NULL, skb, dev, NULL,
                       ip_rcv_finish);
    }

After the NF_INET_PRE_ROUTING hook, ip_rcv continues in ip_rcv_finish.

    static int ip_rcv_finish(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        struct net_device *dev = skb->dev;
        int ret;

        /* if ingress device is enslaved to an L3 master device pass the
         * skb to its handler for processing
         */
        skb = l3mdev_ip_rcv(skb);
        if (!skb)
            return NET_RX_SUCCESS;

        ret = ip_rcv_finish_core(net, sk, skb, dev);
        if (ret != NET_RX_DROP)
            ret = dst_input(skb);
        return ret;
    }

ip_rcv_finish then calls dst_input. For packets addressed to the local host, dst_input dispatches to ip_local_deliver.

    /*
     *  Deliver IP Packets to the higher protocol layers.
     */
    int ip_local_deliver(struct sk_buff *skb)
    {
        /*
         *  Reassemble IP fragments.
         */
        struct net *net = dev_net(skb->dev);

        if (ip_is_fragment(ip_hdr(skb))) {
            if (ip_defrag(net, skb, IP_DEFRAG_LOCAL_DELIVER))
                return 0;
        }

        return NF_HOOK(NFPROTO_IPV4, NF_INET_LOCAL_IN,
                       net, NULL, skb, skb->dev, NULL,
                       ip_local_deliver_finish);
    }

ip_local_deliver checks whether the packet is a fragment. If it is, ip_defrag() is called to reassemble the fragments into one datagram.

    /* Process an incoming IP datagram fragment. */
    int ip_defrag(struct net *net, struct sk_buff *skb, u32 user)
    {
        struct net_device *dev = skb->dev ? : skb_dst(skb)->dev;
        int vif = l3mdev_master_ifindex_rcu(dev);
        struct ipq *qp;

        __IP_INC_STATS(net, IPSTATS_MIB_REASMREQDS);
        skb_orphan(skb);

        /* Lookup (or create) queue header */
        qp = ip_find(net, ip_hdr(skb), user, vif);
        if (qp) {
            int ret;

            spin_lock(&qp->q.lock);

            ret = ip_frag_queue(qp, skb);

            spin_unlock(&qp->q.lock);
            ipq_put(qp);
            return ret;
        }

        __IP_INC_STATS(net, IPSTATS_MIB_REASMFAILS);
        kfree_skb(skb);
        return -ENOMEM;
    }
    EXPORT_SYMBOL(ip_defrag);
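The fragment test that ip_local_deliver performs before calling ip_defrag comes down to two parts of the 16-bit flags/offset field: the "more fragments" flag and the fragment offset. A packet is a fragment if either is non-zero. The sketch below works on the field in host byte order; the macro and function names are illustrative, though the bit values follow the IPv4 header layout.

```c
#include <assert.h>
#include <stdint.h>

#define IP_FLAG_DF  0x4000u   /* don't fragment */
#define IP_FLAG_MF  0x2000u   /* more fragments follow */
#define IP_OFF_MASK 0x1fffu   /* fragment offset, in 8-byte units */

/* A packet is a fragment when MF is set or the offset is non-zero. */
int is_fragment(uint16_t frag_off)
{
    return (frag_off & (IP_FLAG_MF | IP_OFF_MASK)) != 0;
}

/* The last fragment has a non-zero offset and MF cleared. */
int is_last_fragment(uint16_t frag_off)
{
    return is_fragment(frag_off) && !(frag_off & IP_FLAG_MF);
}

/* Byte position of this fragment's payload in the original datagram. */
unsigned frag_byte_offset(uint16_t frag_off)
{
    return (unsigned)(frag_off & IP_OFF_MASK) * 8;
}
```

The DF flag alone does not make a packet a fragment, which is why it is excluded from the mask.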

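If the packet is not a fragment, or reassembly has produced the complete datagram, it passes the NF_INET_LOCAL_IN hook and reaches ip_local_deliver_finish().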

    static int ip_local_deliver_finish(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        __skb_pull(skb, skb_network_header_len(skb));

        rcu_read_lock();
        ip_protocol_deliver_rcu(net, skb, ip_hdr(skb)->protocol);
        rcu_read_unlock();

        return 0;
    }

ip_local_deliver_finish then calls ip_protocol_deliver_rcu, which uses the packet's protocol number to invoke the handler of the next layer, such as tcp_v4_rcv (TCP) or udp_rcv (UDP). For TCP, tcp_v4_rcv is called and processing enters the TCP stack.

    void ip_protocol_deliver_rcu(struct net *net, struct sk_buff *skb, int protocol)
    {
        const struct net_protocol *ipprot;
        int raw, ret;

    resubmit:
        raw = raw_local_deliver(skb, protocol);

        ipprot = rcu_dereference(inet_protos[protocol]);
        if (ipprot) {
            if (!ipprot->no_policy) {
                if (!xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb)) {
                    kfree_skb(skb);
                    return;
                }
                nf_reset_ct(skb);
            }
            ret = INDIRECT_CALL_2(ipprot->handler, tcp_v4_rcv, udp_rcv,
                                  skb);
            if (ret < 0) {
                protocol = -ret;
                goto resubmit;
            }
            __IP_INC_STATS(net, IPSTATS_MIB_INDELIVERS);
        } else {
            if (!raw) {
                if (xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb)) {
                    __IP_INC_STATS(net, IPSTATS_MIB_INUNKNOWNPROTOS);
                    icmp_send(skb, ICMP_DEST_UNREACH,
                              ICMP_PROT_UNREACH, 0);
                }
                kfree_skb(skb);
            } else {
                __IP_INC_STATS(net, IPSTATS_MIB_INDELIVERS);
                consume_skb(skb);
            }
        }
    }
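The dispatch in ip_protocol_deliver_rcu is a table lookup by protocol number, with one twist: a handler that returns a negative value asks for the packet to be resubmitted under protocol number -ret. A minimal user-space model of that pattern follows. The table, the handlers and all function names are hypothetical, and policy checks, raw sockets and statistics are left out.

```c
#include <assert.h>
#include <stddef.h>

#define NPROTO 256

typedef int (*proto_handler)(const unsigned char *payload, size_t len);

static proto_handler proto_table[NPROTO];   /* plays the role of inet_protos */

int tcp_count;   /* how many packets the toy TCP handler has seen */

/* Register a handler for a protocol number; fails if the slot is taken. */
int register_proto(unsigned num, proto_handler h)
{
    if (num >= NPROTO || proto_table[num])
        return -1;
    proto_table[num] = h;
    return 0;
}

/* Deliver a payload. Returns 0 when a handler accepted it and -1 when no
 * handler is registered. A handler returning -N requests redelivery to
 * protocol N; handlers must not resubmit to themselves forever. */
int deliver(int protocol, const unsigned char *payload, size_t len)
{
    for (;;) {
        proto_handler h;
        int ret;

        if (protocol < 0 || protocol >= NPROTO)
            return -1;
        h = proto_table[protocol];
        if (!h)
            return -1;   /* the kernel would answer with an ICMP error */
        ret = h(payload, len);
        if (ret >= 0)
            return 0;
        protocol = -ret; /* resubmit under the new protocol number */
    }
}

/* Toy handlers: protocol 6 consumes the packet, protocol 50 pretends to
 * strip an outer layer and hands the rest to protocol 6. */
int toy_tcp_rcv(const unsigned char *payload, size_t len)
{
    (void)payload;
    (void)len;
    tcp_count++;
    return 0;
}

int toy_encap_rcv(const unsigned char *payload, size_t len)
{
    (void)payload;
    (void)len;
    return -6;
}
```

Registering toy_tcp_rcv under 6 and toy_encap_rcv under 50 makes a delivery to protocol 50 end up in the TCP handler after one resubmit.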

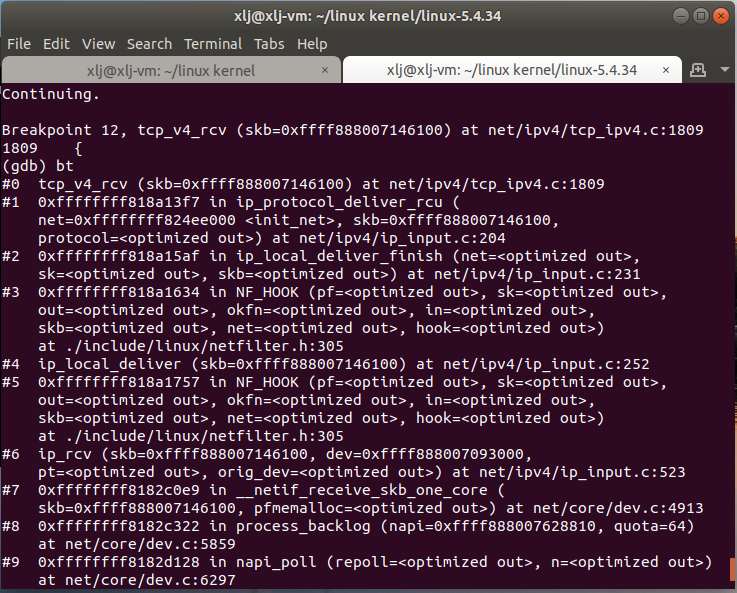

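gdb debugging verification:

5.3 Transport layer

Flow:

1. The TCP entry point in the transport layer is tcp_v4_rcv (in net/ipv4/tcp_ipv4.c), which performs TCP header checks and related processing.

2. It then looks up the open socket for the packet (__tcp_v4_lookup in older kernels; recent kernels use __inet_lookup_skb). If no socket is found, the packet is dropped. Next, the state of the socket and the connection is checked.

3. If the socket and the connection are in order, the segment is queued for the receiving process (older kernels do this through tcp_prequeue) and ends up on the socket's receive queue. The socket is then woken up, and the process, through its system call, eventually calls tcp_recvmsg to fetch segments from the socket receive queue.

Source code analysis:

tcp_v4_rcv is the main entry point of TCP: packets handed up from the IP layer enter this function. The protocol operations structure is shown below; its handler member is the callback through which the IP layer passes packets to TCP, and it is set to tcp_v4_rcv.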

    static struct net_protocol tcp_protocol = {
        .early_demux = tcp_v4_early_demux,
        .early_demux_handler = tcp_v4_early_demux,
        .handler = tcp_v4_rcv,
        .err_handler = tcp_v4_err,
        .no_policy = 1,
        .netns_ok = 1,
        .icmp_strict_tag_validation = 1,
    };

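When the IP layer processes a locally destined packet, it fetches the instance of this structure and calls its handler callback, that is, tcp_v4_rcv.

tcp_v4_rcv mainly does the following: (1) sets up TCP_CB; (2) looks up the control block; (3) handles the packet according to the control block's state, including the TCP_TIME_WAIT, TCP_NEW_SYN_RECV and TCP_LISTEN cases; (4) receives the TCP segment.

On the reading side, the function called is __sys_recvfrom, and the call path is very similar to that of send. The call that is actually made is sock->ops->recvmsg(sock, msg, msg_data_left(msg), flags); as on the send side, the initialisation of the tcp_prot structure means that this ends up in tcp_recvmsg. The receive function is far more complex than the send function, because receiving involves more than taking in data: the TCP three-way handshake is also implemented on the receive side, so on each arrival the code must check the current state (for example, whether a connection is still being established) and decide from the incoming information whether the state has to change. Here we only consider receiving data after the connection has been established.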

    int tcp_recvmsg(struct sock *sk, struct msghdr *msg, size_t len, int nonblock,
                    int flags, int *addr_len)
    {
        struct tcp_sock *tp = tcp_sk(sk);
        int copied = 0;
        u32 peek_seq;
        u32 *seq;
        unsigned long used;
        int err, inq;
        int target;     /* Read at least this many bytes */
        long timeo;
        struct sk_buff *skb, *last;
        u32 urg_hole = 0;
        struct scm_timestamping_internal tss;
        int cmsg_flags;

        if (unlikely(flags & MSG_ERRQUEUE))
            return inet_recv_error(sk, msg, len, addr_len);

        if (sk_can_busy_loop(sk) && skb_queue_empty_lockless(&sk->sk_receive_queue) &&
            (sk->sk_state == TCP_ESTABLISHED))
            sk_busy_loop(sk, nonblock);

        lock_sock(sk);

        err = -ENOTCONN;
        if (sk->sk_state == TCP_LISTEN)
            goto out;

        cmsg_flags = tp->recvmsg_inq ? 1 : 0;
        timeo = sock_rcvtimeo(sk, nonblock);

        /* Urgent data needs to be handled specially. */
        if (flags & MSG_OOB)
            goto recv_urg;

        if (unlikely(tp->repair)) {
            err = -EPERM;
            if (!(flags & MSG_PEEK))
                goto out;

            if (tp->repair_queue == TCP_SEND_QUEUE)
                goto recv_sndq;

            err = -EINVAL;
            if (tp->repair_queue == TCP_NO_QUEUE)
                goto out;

            /* 'common' recv queue MSG_PEEK-ing */
        }

        seq = &tp->copied_seq;
        if (flags & MSG_PEEK) {
            peek_seq = tp->copied_seq;
            seq = &peek_seq;
        }

        target = sock_rcvlowat(sk, flags & MSG_WAITALL, len);

        do {
            u32 offset;

            /* Are we at urgent data? Stop if we have read anything or have SIGURG pending. */
            if (tp->urg_data && tp->urg_seq == *seq) {
                if (copied)
                    break;
                if (signal_pending(current)) {
                    copied = timeo ? sock_intr_errno(timeo) : -EAGAIN;
                    break;
                }
            }

            /* Next get a buffer. */

            last = skb_peek_tail(&sk->sk_receive_queue);
            skb_queue_walk(&sk->sk_receive_queue, skb) {
                last = skb;
                /* Now that we have two receive queues this
                 * shouldn't happen.
                 */
                if (WARN(before(*seq, TCP_SKB_CB(skb)->seq),
                         "TCP recvmsg seq # bug: copied %X, seq %X, rcvnxt %X, fl %X\n",
                         *seq, TCP_SKB_CB(skb)->seq, tp->rcv_nxt,
                         flags))
                    break;

                offset = *seq - TCP_SKB_CB(skb)->seq;
                if (unlikely(TCP_SKB_CB(skb)->tcp_flags & TCPHDR_SYN)) {
                    pr_err_once("%s: found a SYN, please report !\n", __func__);
                    offset--;
                }
                if (offset < skb->len)
                    goto found_ok_skb;
                if (TCP_SKB_CB(skb)->tcp_flags & TCPHDR_FIN)
                    goto found_fin_ok;
                WARN(!(flags & MSG_PEEK),
                     "TCP recvmsg seq # bug 2: copied %X, seq %X, rcvnxt %X, fl %X\n",
                     *seq, TCP_SKB_CB(skb)->seq, tp->rcv_nxt, flags);
            }

            /* Well, if we have backlog, try to process it now yet. */

            if (copied >= target && !sk->sk_backlog.tail)
                break;

            if (copied) {
                if (sk->sk_err ||
                    sk->sk_state == TCP_CLOSE ||
                    (sk->sk_shutdown & RCV_SHUTDOWN) ||
                    !timeo ||
                    signal_pending(current))
                    break;
            } else {
                if (sock_flag(sk, SOCK_DONE))
                    break;

                if (sk->sk_err) {
                    copied = sock_error(sk);
                    break;
                }

                if (sk->sk_shutdown & RCV_SHUTDOWN)
                    break;

                if (sk->sk_state == TCP_CLOSE) {
                    /* This occurs when user tries to read
                     * from never connected socket.
                     */
                    copied = -ENOTCONN;
                    break;
                }

                if (!timeo) {
                    copied = -EAGAIN;
                    break;
                }

                if (signal_pending(current)) {
                    copied = sock_intr_errno(timeo);
                    break;
                }
            }

            tcp_cleanup_rbuf(sk, copied);

            if (copied >= target) {
                /* Do not sleep, just process backlog. */
                release_sock(sk);
                lock_sock(sk);
            } else {
                sk_wait_data(sk, &timeo, last);
            }

            if ((flags & MSG_PEEK) &&
                (peek_seq - copied - urg_hole != tp->copied_seq)) {
                net_dbg_ratelimited("TCP(%s:%d): Application bug, race in MSG_PEEK\n",
                                    current->comm,
                                    task_pid_nr(current));
                peek_seq = tp->copied_seq;
            }
            continue;

    found_ok_skb:
            /* Ok so how much can we use? */
            used = skb->len - offset;
            if (len < used)
                used = len;

            /* Do we have urgent data here? */
            if (tp->urg_data) {
                u32 urg_offset = tp->urg_seq - *seq;
                if (urg_offset < used) {
                    if (!urg_offset) {
                        if (!sock_flag(sk, SOCK_URGINLINE)) {
                            WRITE_ONCE(*seq, *seq + 1);
                            urg_hole++;
                            offset++;
                            used--;
                            if (!used)
                                goto skip_copy;
                        }
                    } else
                        used = urg_offset;
                }
            }

            if (!(flags & MSG_TRUNC)) {
                err = skb_copy_datagram_msg(skb, offset, msg, used);
                if (err) {
                    /* Exception. Bailout! */
                    if (!copied)
                        copied = -EFAULT;
                    break;
                }
            }

            WRITE_ONCE(*seq, *seq + used);
            copied += used;
            len -= used;

            tcp_rcv_space_adjust(sk);

    skip_copy:
            if (tp->urg_data && after(tp->copied_seq, tp->urg_seq)) {
                tp->urg_data = 0;
                tcp_fast_path_check(sk);
            }
            if (used + offset < skb->len)
                continue;

            if (TCP_SKB_CB(skb)->has_rxtstamp) {
                tcp_update_recv_tstamps(skb, &tss);
                cmsg_flags |= 2;
            }
            if (TCP_SKB_CB(skb)->tcp_flags & TCPHDR_FIN)
                goto found_fin_ok;
            if (!(flags & MSG_PEEK))
                sk_eat_skb(sk, skb);
            continue;

    found_fin_ok:
            /* Process the FIN. */
            WRITE_ONCE(*seq, *seq + 1);
            if (!(flags & MSG_PEEK))
                sk_eat_skb(sk, skb);
            break;
        } while (len > 0);

        /* According to UNIX98, msg_name/msg_namelen are ignored
         * on connected socket. I was just happy when found this 8) --ANK
         */

        /* Clean up data we have read: This will do ACK frames. */
        tcp_cleanup_rbuf(sk, copied);

        release_sock(sk);

        if (cmsg_flags) {
            if (cmsg_flags & 2)
                tcp_recv_timestamp(msg, sk, &tss);
            if (cmsg_flags & 1) {
                inq = tcp_inq_hint(sk);
                put_cmsg(msg, SOL_TCP, TCP_CM_INQ, sizeof(inq), &inq);
            }
        }

        return copied;

    out:
        release_sock(sk);
        return err;

    recv_urg:
        err = tcp_recv_urg(sk, msg, len, flags);
        goto out;

    recv_sndq:
        err = tcp_peek_sndq(sk, msg, len);
        goto out;
    }
    EXPORT_SYMBOL(tcp_recvmsg);
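The heart of the loop in tcp_recvmsg is sequence-number arithmetic: offset = *seq - TCP_SKB_CB(skb)->seq gives the position inside the current segment, and used is capped by both the segment's remaining bytes and the caller's buffer. The sketch below models only that part, over an array of in-order segments. Urgent data, FIN, peeking and blocking are all omitted, and every name in it is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Wraparound-safe "seq1 comes before seq2", the same idea as the
 * kernel's before() helper. */
int seq_before(uint32_t seq1, uint32_t seq2)
{
    return (int32_t)(seq1 - seq2) < 0;
}

struct toy_seg {
    uint32_t seq;               /* sequence number of the first byte */
    uint32_t len;               /* payload length in bytes */
    const unsigned char *data;
};

/* Copy up to `len` bytes, starting at *copied_seq, from an in-order array
 * of segments into `out`. Advances *copied_seq and returns the number of
 * bytes copied. */
size_t toy_recv(const struct toy_seg *q, size_t nseg, uint32_t *copied_seq,
                unsigned char *out, size_t len)
{
    size_t copied = 0, i;

    assert(q && copied_seq && out);
    for (i = 0; i < nseg && len > 0; i++) {
        /* Unsigned subtraction keeps working across the 2^32 wrap. */
        uint32_t offset = *copied_seq - q[i].seq;
        uint32_t used;

        if (offset >= q[i].len)
            continue;           /* nothing left to read in this segment */
        used = q[i].len - offset;
        if (used > len)
            used = (uint32_t)len;
        memcpy(out + copied, q[i].data + offset, used);
        *copied_seq += used;
        copied += used;
        len -= used;
    }
    return copied;
}
```

Reading 3 bytes and then the rest from two segments that straddle the sequence-number wrap returns the bytes in order, which is why the kernel can use plain u32 subtraction for offset.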

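The TCP receive path has traditionally maintained three queues: prequeue, backlog and receive_queue, that is, the pre-processing queue, the backlog queue and the receive queue. After the connection is established, if no data has arrived the receive queue is empty; the process may busy-poll in sk_busy_loop (when busy polling is enabled) or sleep in sk_wait_data until the receive queue is no longer empty. It then calls skb_copy_datagram_msg to copy the received data to user space. The function that does the actual work is __skb_datagram_iter, and struct msghdr *msg is again used to describe the destination.

That is: skb_copy_datagram_msg → skb_copy_datagram_iter → __skb_datagram_iter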

    static inline int skb_copy_datagram_msg(const struct sk_buff *from, int offset,
                                            struct msghdr *msg, int size)
    {
        return skb_copy_datagram_iter(from, offset, &msg->msg_iter, size);
    }

    /**
     *  skb_copy_datagram_iter - Copy a datagram to an iovec iterator.
     *  @skb: buffer to copy
     *  @offset: offset in the buffer to start copying from
     *  @to: iovec iterator to copy to
     *  @len: amount of data to copy from buffer to iovec
     */
    int skb_copy_datagram_iter(const struct sk_buff *skb, int offset,
                               struct iov_iter *to, int len)
    {
        trace_skb_copy_datagram_iovec(skb, len);
        return __skb_datagram_iter(skb, offset, to, len, false,
                                   simple_copy_to_iter, NULL);
    }
    EXPORT_SYMBOL(skb_copy_datagram_iter);

    static int __skb_datagram_iter(const struct sk_buff *skb, int offset,
                                   struct iov_iter *to, int len, bool fault_short,
                                   size_t (*cb)(const void *, size_t, void *,
                                                struct iov_iter *), void *data)
    {
        int start = skb_headlen(skb);
        int i, copy = start - offset, start_off = offset, n;
        struct sk_buff *frag_iter;

        /* Copy header. */
        if (copy > 0) {
            if (copy > len)
                copy = len;
            n = cb(skb->data + offset, copy, data, to);
            offset += n;
            if (n != copy)
                goto short_copy;
            if ((len -= copy) == 0)
                return 0;
        }

        /* Copy paged appendix. Hmm... why does this look so complicated? */
        for (i = 0; i < skb_shinfo(skb)->nr_frags; i++) {
            int end;
            const skb_frag_t *frag = &skb_shinfo(skb)->frags[i];

            WARN_ON(start > offset + len);

            end = start + skb_frag_size(frag);
            if ((copy = end - offset) > 0) {
                struct page *page = skb_frag_page(frag);
                u8 *vaddr = kmap(page);

                if (copy > len)
                    copy = len;
                n = cb(vaddr + skb_frag_off(frag) + offset - start,
                       copy, data, to);
                kunmap(page);
                offset += n;
                if (n != copy)
                    goto short_copy;
                if (!(len -= copy))
                    return 0;
            }
            start = end;
        }

        skb_walk_frags(skb, frag_iter) {
            int end;

            WARN_ON(start > offset + len);

            end = start + frag_iter->len;
            if ((copy = end - offset) > 0) {
                if (copy > len)
                    copy = len;
                if (__skb_datagram_iter(frag_iter, offset - start,
                                        to, copy, fault_short, cb, data))
                    goto fault;
                if ((len -= copy) == 0)
                    return 0;
                offset += copy;
            }
            start = end;
        }
        if (!len)
            return 0;

        /* This is not really a user copy fault, but rather someone
         * gave us a bogus length on the skb.  We should probably
         * print a warning here as it may indicate a kernel bug.
         */

    fault:
        iov_iter_revert(to, offset - start_off);
        return -EFAULT;

    short_copy:
        if (fault_short || iov_iter_count(to))
            goto fault;

        return 0;
    }

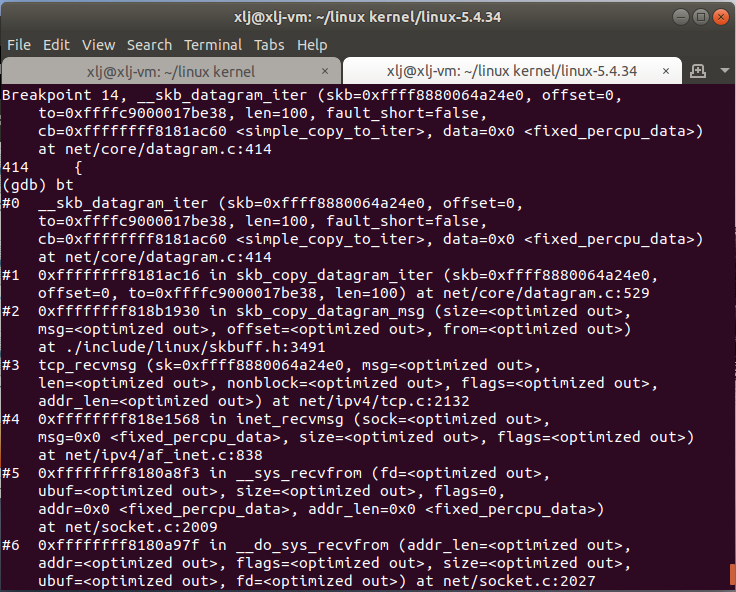

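gdb debugging verification:

5.4 Application layer

Flow:

1. Whenever a user application calls read or recvfrom, the call is mapped to the sys_recv system call in net/socket.c, converted into a sys_recvfrom call, and then sock_recvmsg is called.

2. For sockets of the INET family, the inet_recvmsg method in net/ipv4/af_inet.c is called, and it calls the receive method of the relevant protocol.

3. For TCP, tcp_recvmsg is called. It copies data from the socket buffer into the user buffer.

4. For UDP, user space can call any of the three system calls recv()/recvfrom()/recvmsg() to receive a UDP packet; all of them eventually call the kernel's udp_recvmsg method.

Source code analysis:

The recv function is a special case of recvfrom, so it too ends up in __sys_recvfrom. The call path closely resembles that of send at the application layer: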

    int __sys_recvfrom(int fd, void __user *ubuf, size_t size, unsigned int flags,
                       struct sockaddr __user *addr, int __user *addr_len)
    {
        struct socket *sock;
        struct iovec iov;
        struct msghdr msg;
        struct sockaddr_storage address;
        int err, err2;
        int fput_needed;

        err = import_single_range(READ, ubuf, size, &iov, &msg.msg_iter);
        if (unlikely(err))
            return err;
        sock = sockfd_lookup_light(fd, &err, &fput_needed);
        if (!sock)
            goto out;

        msg.msg_control = NULL;
        msg.msg_controllen = 0;
        /* Save some cycles and don't copy the address if not needed */
        msg.msg_name = addr ? (struct sockaddr *)&address : NULL;
        /* We assume all kernel code knows the size of sockaddr_storage */
        msg.msg_namelen = 0;
        msg.msg_iocb = NULL;
        msg.msg_flags = 0;
        if (sock->file->f_flags & O_NONBLOCK)
            flags |= MSG_DONTWAIT;
        err = sock_recvmsg(sock, &msg, flags);

        if (err >= 0 && addr != NULL) {
            err2 = move_addr_to_user(&address,
                                     msg.msg_namelen, addr, addr_len);
            if (err2 < 0)
                err = err2;
        }

        fput_light(sock->file, fput_needed);
    out:
        return err;
    }

    /**
     *  sock_recvmsg - receive a message from @sock
     *  @sock: socket
     *  @msg: message to receive
     *  @flags: message flags
     *
     *  Receives @msg from @sock, passing through LSM. Returns the total number
     *  of bytes received, or an error.
     */
    int sock_recvmsg(struct socket *sock, struct msghdr *msg, int flags)
    {
        int err = security_socket_recvmsg(sock, msg, msg_data_left(msg), flags);

        return err ?: sock_recvmsg_nosec(sock, msg, flags);
    }
    EXPORT_SYMBOL(sock_recvmsg);

    static inline int sock_recvmsg_nosec(struct socket *sock, struct msghdr *msg,
                                         int flags)
    {
        return INDIRECT_CALL_INET(sock->ops->recvmsg, inet6_recvmsg,
                                  inet_recvmsg, sock, msg, msg_data_left(msg),
                                  flags);
    }

For an IPv4 socket, sock->ops->recvmsg is inet_recvmsg, and for TCP inet_recvmsg finally calls tcp_recvmsg.
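From user space, the statement that recv is a special case of recvfrom can be checked directly: recv(fd, buf, len, flags) behaves like recvfrom(fd, buf, len, flags, NULL, NULL). The sketch below sends a message over a local socket pair and reads it back through either call. Note the assumption: it uses an AF_UNIX socket pair for simplicity, so it exercises the system-call layer only and not inet_recvmsg or tcp_recvmsg. The function name roundtrip is hypothetical.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Send `msg` over a connected local socket pair and read it back.
 * use_recvfrom selects recvfrom(..., NULL, NULL) instead of recv().
 * Returns the number of bytes received, or -1 on error. */
ssize_t roundtrip(const char *msg, char *out, size_t outlen, int use_recvfrom)
{
    int sv[2];
    ssize_t n;

    assert(msg && out);
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0)
        return -1;
    if (send(sv[0], msg, strlen(msg), 0) < 0) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }
    if (use_recvfrom)
        n = recvfrom(sv[1], out, outlen, 0, NULL, NULL);
    else
        n = recv(sv[1], out, outlen, 0);
    close(sv[0]);
    close(sv[1]);
    return n;
}
```

Both variants return the same byte count and data. To follow the TCP path described in this section, the socket pair would have to be replaced by a connected AF_INET stream socket.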

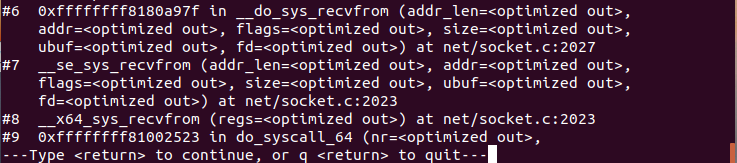

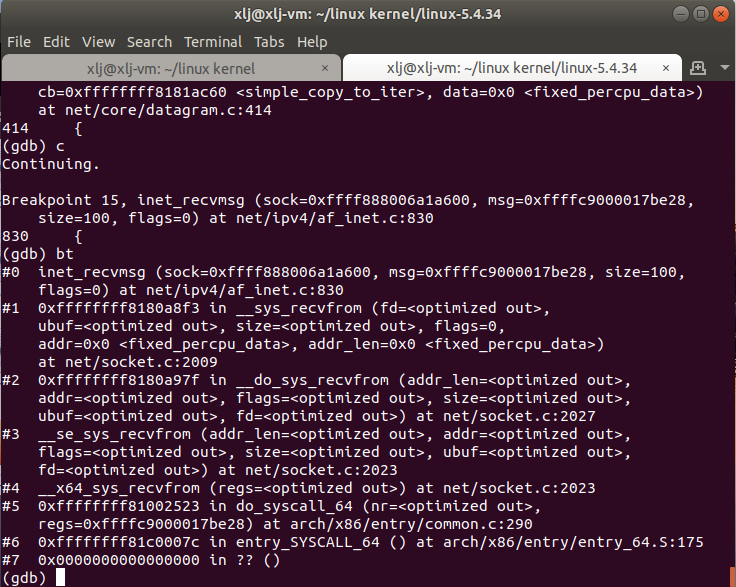

gdb debugging verification:

6. Sequence diagram