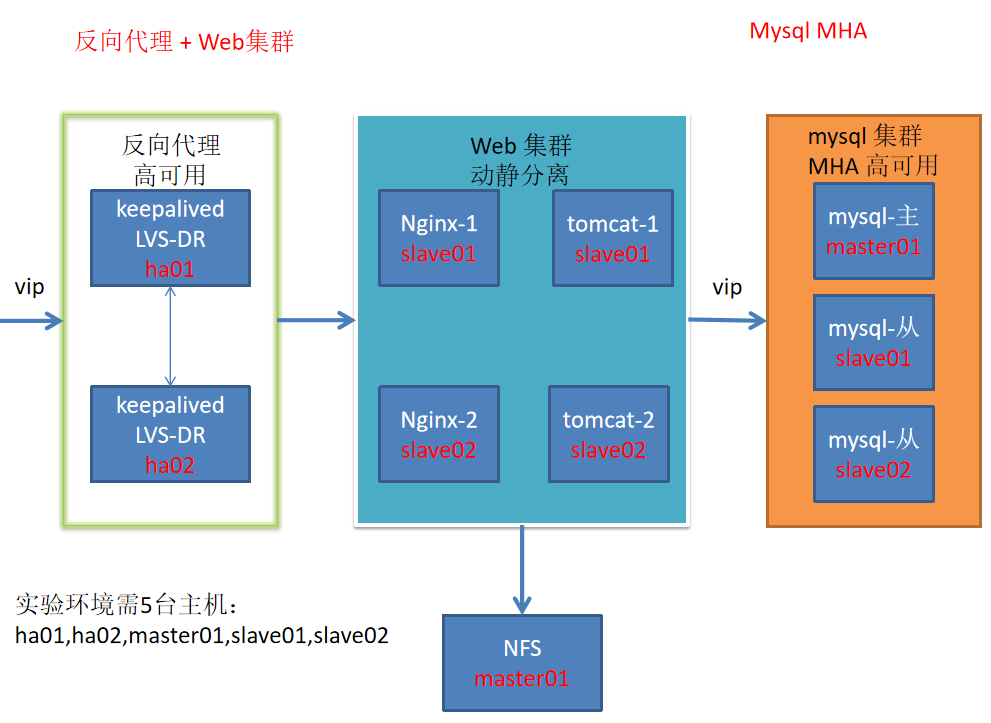

LVS + keepalived + Nginx/Tomcat static/dynamic separation + MySQL MHA: combined lab

I. Lab architecture and requirements

Lab architecture diagram

Requirements:

Both static and dynamic pages must be reachable through the keepalived VIP, and the MySQL master node must fail over automatically.

II. Lab steps

1. Environment preparation

| Server | IP address | Software to install |
| --- | --- | --- |
| ha01 | 192.168.229.60 | ipvsadm, keepalived |
| ha02 | 192.168.229.50 | ipvsadm, keepalived |
| master01 | 192.168.229.90 | MySQL, NFS, MHA node |
| slave01 | 192.168.229.80 | MySQL, Nginx, Tomcat, MHA manager, MHA node |
| slave02 | 192.168.229.70 | MySQL, Nginx, Tomcat, MHA node |
| VIP | 192.168.229.188 | |

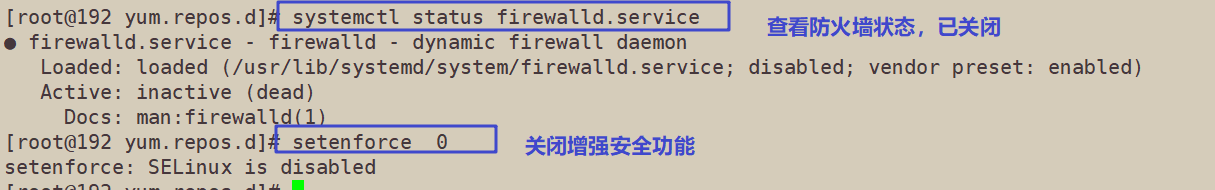

2. Disable the firewall on all servers

systemctl stop firewalld.service

setenforce 0

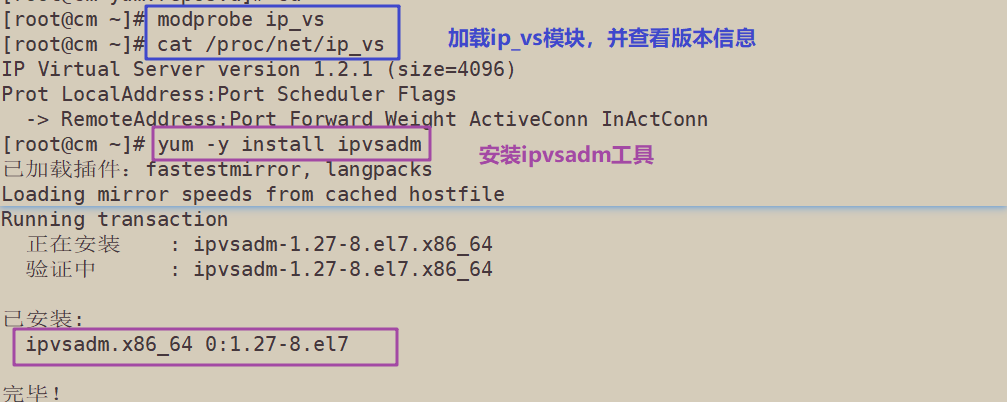
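Both commands above only affect the running system and are undone by a reboot. A minimal sketch for making them persistent, assuming the CentOS 7 default of configuring SELinux in /etc/selinux/config; `selinux_disabled` is a hypothetical helper name, not part of any package:

```shell
# Runtime only (what the lab runs above):
#   systemctl stop firewalld.service   # stop the firewall now
#   setenforce 0                       # SELinux -> permissive until the next reboot
# Persistent variant (assumption: the lab hosts should stay this way after a reboot):
#   systemctl disable firewalld.service
#   selinux_disabled < /etc/selinux/config > /tmp/selinux.config &&
#       cp /tmp/selinux.config /etc/selinux/config

# Hypothetical helper: copy an SELinux config from stdin to stdout with the
# SELINUX= line set to "disabled"; SELINUXTYPE= and comments pass through unchanged.
selinux_disabled() {
    sed 's/^SELINUX=.*/SELINUX=disabled/'
}
```

The helper writes to stdout instead of editing in place, so the result can be reviewed before it replaces the real file.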

3. Build LVS-DR

3.1 Configure the load balancer ha01 (192.168.229.60)

modprobe ip_vs

cat /proc/net/ip_vs

yum -y install ipvsadm

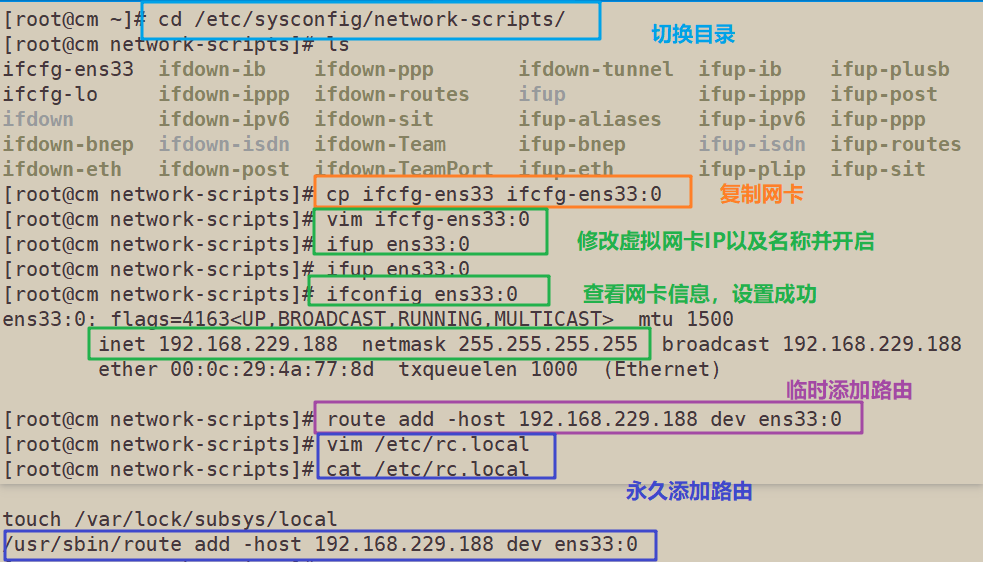

3.2 Configure the virtual IP address (VIP: 192.168.229.188)

[root@cm ~]# cd /etc/sysconfig/network-scripts/

[root@cm network-scripts]# ls

ifcfg-ens33 ifdown-ib ifdown-ppp ifdown-tunnel ifup-ib ifup-plusb ifup-Team network-functions

ifcfg-lo ifdown-ippp ifdown-routes ifup ifup-ippp ifup-post ifup-TeamPort network-functions-ipv6

ifdown ifdown-ipv6 ifdown-sit ifup-aliases ifup-ipv6 ifup-ppp ifup-tunnel

ifdown-bnep ifdown-isdn ifdown-Team ifup-bnep ifup-isdn ifup-routes ifup-wireless

ifdown-eth ifdown-post ifdown-TeamPort ifup-eth ifup-plip ifup-sit init.ipv6-global

[root@cm network-scripts]# cp ifcfg-ens33 ifcfg-ens33:0

[root@cm network-scripts]# vim ifcfg-ens33:0

[root@cm network-scripts]# ifup ens33:0


[root@cm network-scripts]# ifconfig ens33:0

ens33:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.229.188 netmask 255.255.255.255 broadcast 192.168.229.188

ether 00:0c:29:4a:77:8d txqueuelen 1000 (Ethernet)

[root@cm network-scripts]# route add -host 192.168.229.188 dev ens33:0

[root@cm network-scripts]# vim /etc/rc.local

[root@cm network-scripts]# cat /etc/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

/usr/sbin/route add -host 192.168.229.188 dev ens33:0

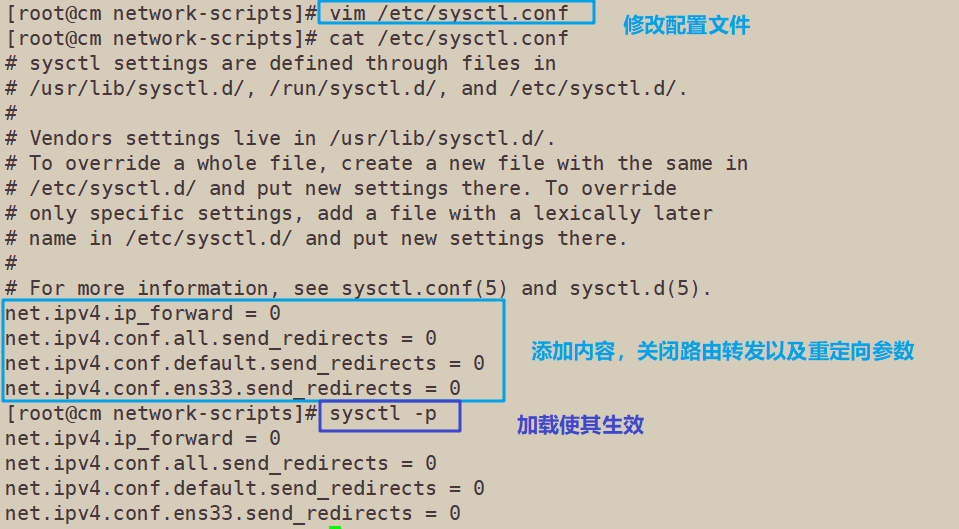
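The contents of ifcfg-ens33:0 are edited in vim and not shown in the text. A minimal sketch of the file, assuming only the four keys below are needed (the values match the ifconfig output above); `render_vip_ifcfg` is a hypothetical helper:

```shell
# Hypothetical helper: print a minimal alias-interface file for the director VIP.
# Assumption: DEVICE/ONBOOT/IPADDR/NETMASK are all the alias needs; keys copied over
# from ifcfg-ens33 (UUID, BOOTPROTO, GATEWAY, ...) are dropped rather than edited.
# $1 = alias device (e.g. ens33:0), $2 = VIP
render_vip_ifcfg() {
    dev=$1
    vip=$2
    printf 'DEVICE=%s\nONBOOT=yes\nIPADDR=%s\nNETMASK=255.255.255.255\n' "$dev" "$vip"
}
```

Usage: `render_vip_ifcfg ens33:0 192.168.229.188 > /etc/sysconfig/network-scripts/ifcfg-ens33:0`. As the comments in /etc/rc.local point out, that file must also be executable (`chmod +x /etc/rc.d/rc.local`) for the route line to run at boot.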

3.3 Adjust the director's kernel parameters: disable IP forwarding and ICMP redirects

[root@cm network-scripts]# vim /etc/sysctl.conf

[root@cm network-scripts]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

[root@cm network-scripts]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

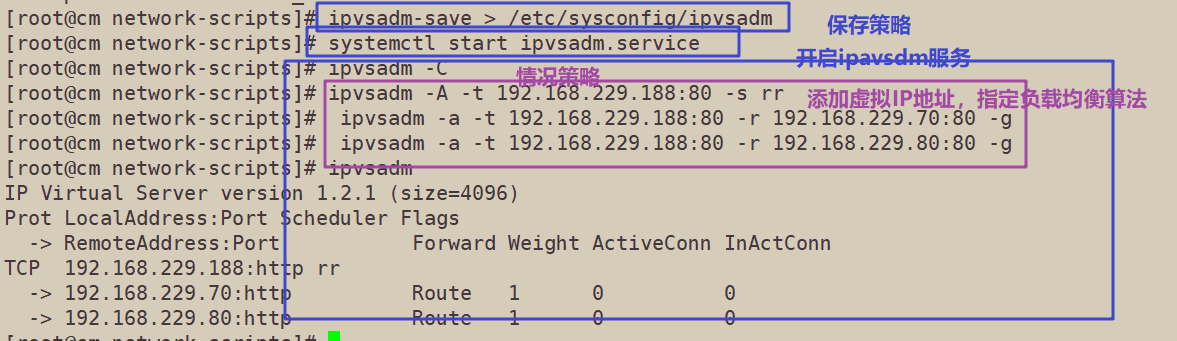

3.4 Configure the load-balancing policy

[root@cm network-scripts]# ipvsadm-save > /etc/sysconfig/ipvsadm

[root@cm network-scripts]# systemctl start ipvsadm.service

[root@cm network-scripts]# ipvsadm -C

[root@cm network-scripts]# ipvsadm -A -t 192.168.229.188:80 -s rr

[root@cm network-scripts]# ipvsadm -a -t 192.168.229.188:80 -r 192.168.229.70:80 -g

[root@cm network-scripts]# ipvsadm -a -t 192.168.229.188:80 -r 192.168.229.80:80 -g

[root@cm network-scripts]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.229.188:http rr

-> 192.168.229.70:http Route 1 0 0

-> 192.168.229.80:http Route 1 0 0

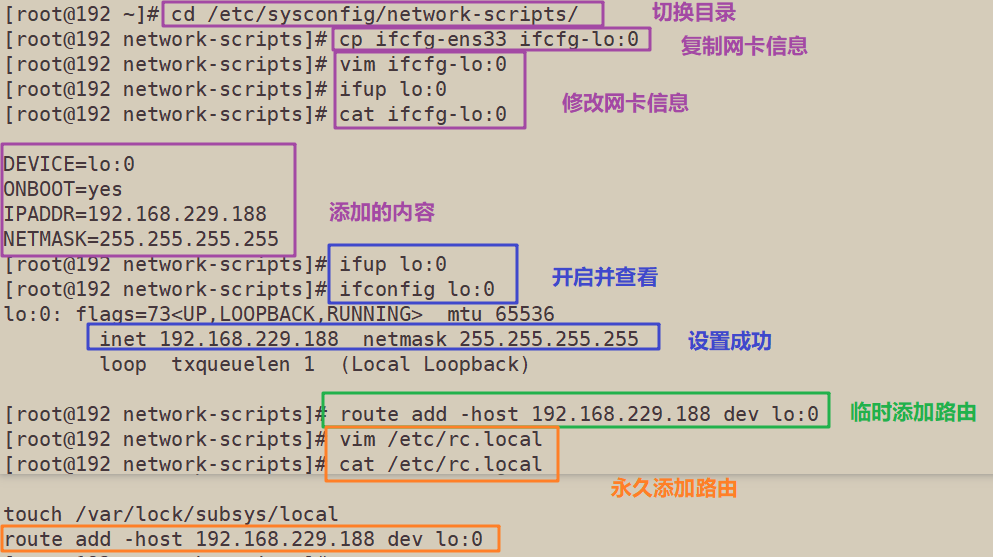
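In the transcript above, ipvsadm-save runs before any rules are added, so at that moment /etc/sysconfig/ipvsadm (the file ipvsadm.service restores from) holds an empty table. A dry-run sketch that prints the same commands and saves again once the rules exist; `lvs_dr_commands` is a hypothetical helper, and once keepalived manages the rules (section 5) the saved file may no longer matter:

```shell
# Hypothetical dry-run generator: print (do not run) the ipvsadm commands for an
# LVS-DR service. -s rr = round-robin scheduler, -g = gatewaying (direct routing).
# $1 = VIP, $2 = port, remaining args = real server IPs
lvs_dr_commands() {
    vip=$1
    port=$2
    shift 2
    echo "ipvsadm -C"
    echo "ipvsadm -A -t $vip:$port -s rr"
    for rs in "$@"; do
        echo "ipvsadm -a -t $vip:$port -r $rs:$port -g"
    done
    # Save after the rules exist so the saved file matches the live table.
    echo "ipvsadm-save -n > /etc/sysconfig/ipvsadm"
}
```

Usage: review `lvs_dr_commands 192.168.229.188 80 192.168.229.70 192.168.229.80`, then pipe the same call to `sh` on the director to apply it.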

3.5 Configure the real servers (192.168.229.80, 192.168.229.70)

3.5.1 Configure the virtual IP address (VIP: 192.168.229.188)

[root@192 ~]# cd /etc/sysconfig/network-scripts/

[root@192 network-scripts]# cp ifcfg-ens33 ifcfg-lo:0

[root@192 network-scripts]# vim ifcfg-lo:0

[root@192 network-scripts]# ifup lo:0

[root@192 network-scripts]# cat ifcfg-lo:0

DEVICE=lo:0

ONBOOT=yes

IPADDR=192.168.229.188

NETMASK=255.255.255.255

[root@192 network-scripts]# ifup lo:0

[root@192 network-scripts]# ifconfig lo:0

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.229.188 netmask 255.255.255.255

loop txqueuelen 1 (Local Loopback)

[root@192 network-scripts]# route add -host 192.168.229.188 dev lo:0

[root@192 network-scripts]# vim /etc/rc.local

[root@192 network-scripts]# cat /etc/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

route add -host 192.168.229.188 dev lo:0

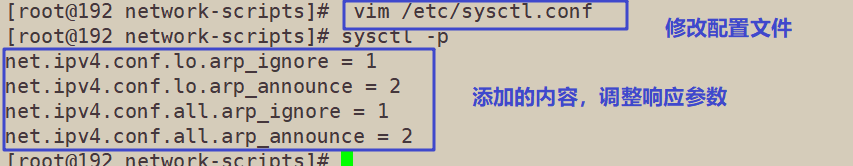

3.5.2 Adjust the kernel ARP parameters so the real servers do not advertise the VIP's MAC address, avoiding conflicts

[root@192 network-scripts]# vim /etc/sysctl.conf

[root@192 network-scripts]# sysctl -p

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

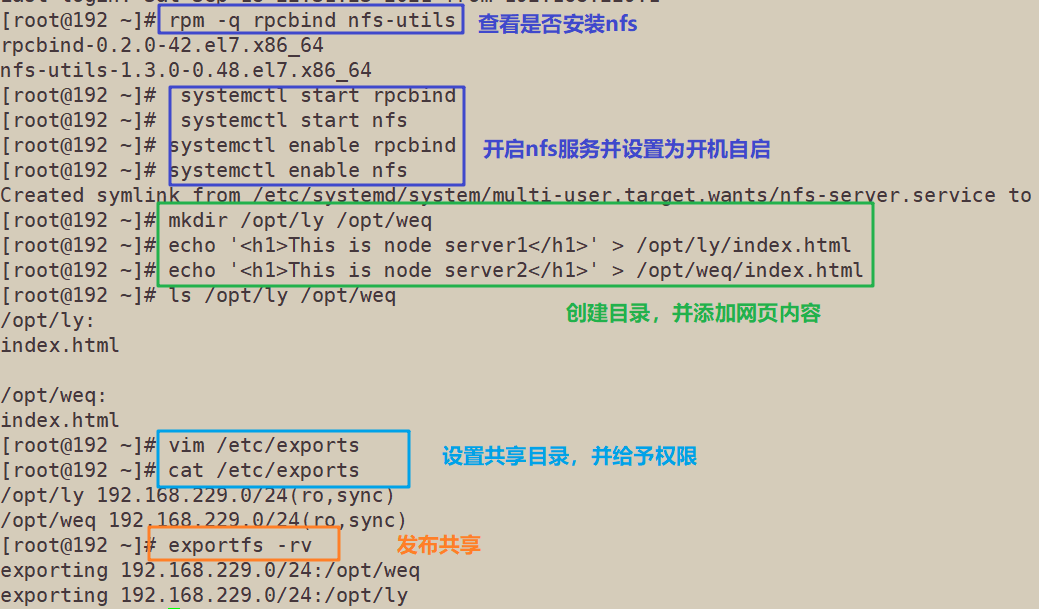
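To confirm on each real server that the four parameters took effect, a small sketch that checks sysctl output read on stdin (`check_rs_arp` is a hypothetical helper). arp_ignore=1 makes the kernel answer ARP only for addresses configured on the interface that received the request, so the VIP on lo:0 is never answered on ens33; arp_announce=2 makes it always use the best local address as the ARP source:

```shell
# Hypothetical checker: exit 0 only if the "key = value" lines on stdin set
# arp_ignore=1 and arp_announce=2 for both "all" and "lo".
check_rs_arp() {
    awk -F' *= *' '
        { v[$1] = $2 + 0 }    # remember the last value seen for each key
        END {
            ok = (v["net.ipv4.conf.all.arp_ignore"] == 1 &&
                  v["net.ipv4.conf.all.arp_announce"] == 2 &&
                  v["net.ipv4.conf.lo.arp_ignore"] == 1 &&
                  v["net.ipv4.conf.lo.arp_announce"] == 2)
            exit (ok ? 0 : 1)
        }'
}
```

Usage: `sysctl net.ipv4.conf.all.arp_ignore net.ipv4.conf.all.arp_announce net.ipv4.conf.lo.arp_ignore net.ipv4.conf.lo.arp_announce | check_rs_arp && echo OK`.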

3.6 Deploy shared storage (NFS server: 192.168.229.90)
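No commands are given for this step in the text; the showmount output in 3.7 shows that 192.168.229.90 exports /opt/ly and /opt/weq to 192.168.229.0/24. A sketch of a matching /etc/exports, assuming rw,sync,no_root_squash as export options (these are not taken from the original); `render_exports` is a hypothetical helper:

```shell
# Hypothetical helper: print one /etc/exports line per shared directory.
# $1 = client network, remaining args = directories to export
# Assumption: rw,sync,no_root_squash is a typical lab choice of options.
render_exports() {
    net=$1
    shift
    for dir in "$@"; do
        printf '%s %s(rw,sync,no_root_squash)\n' "$dir" "$net"
    done
}
```

Usage on 192.168.229.90: `mkdir -p /opt/ly /opt/weq`, then `render_exports 192.168.229.0/24 /opt/ly /opt/weq > /etc/exports`, `systemctl start rpcbind nfs` and `exportfs -rv`.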

3.7 Install the web service (Nginx) on the real servers and mount the shared directories

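For the Nginx installation, see the blog post "Nginx website service: compiling and installing, authorization-based and client access control"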

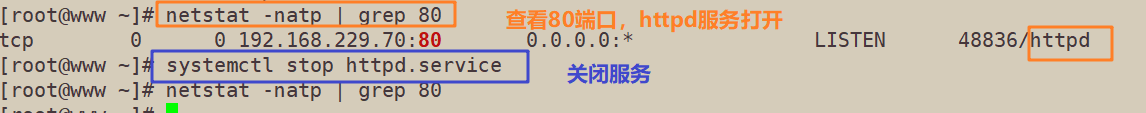

If httpd is already installed on a real server, stop it before installing Nginx to avoid a conflict on port 80

[root@www ~]# netstat -natp | grep 80

tcp 0 0 192.168.229.70:80 0.0.0.0:* LISTEN 48836/httpd

[root@www ~]# systemctl stop httpd.service

[root@www ~]# netstat -natp | grep 80

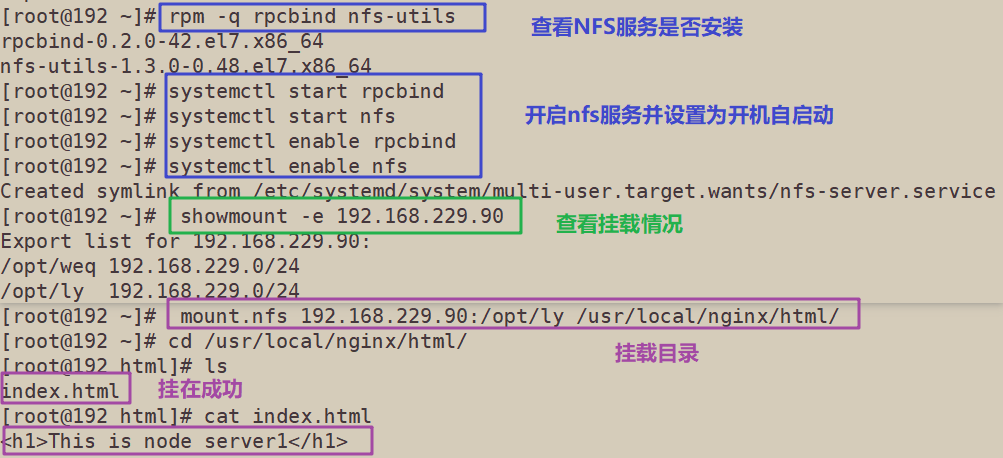

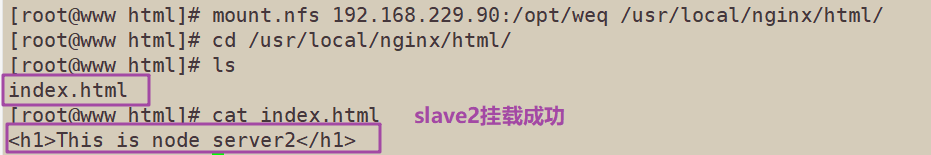

Mount the shared directories on the real servers (slave01 mounts /opt/ly, slave02 mounts /opt/weq)

[root@192 ~]# rpm -q rpcbind nfs-utils

rpcbind-0.2.0-42.el7.x86_64

nfs-utils-1.3.0-0.48.el7.x86_64

[root@192 ~]# systemctl start rpcbind

[root@192 ~]# systemctl start nfs

[root@192 ~]# systemctl enable rpcbind

[root@192 ~]# systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

[root@192 ~]# showmount -e 192.168.229.90

Export list for 192.168.229.90:

/opt/weq 192.168.229.0/24

/opt/ly 192.168.229.0/24

[root@192 ~]# mount.nfs 192.168.229.90:/opt/ly /usr/local/nginx/html/

[root@192 ~]# cd /usr/local/nginx/html/

[root@192 html]# ls

index.html

[root@192 html]# cat index.html

<h1>This is node server1</h1>

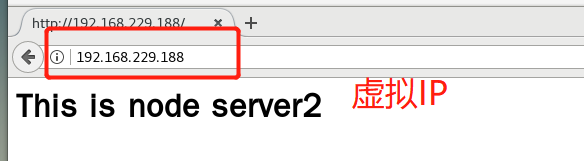
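A mount made with mount.nfs does not survive a reboot. A sketch of a matching /etc/fstab entry, assuming `defaults,_netdev` as the mount options (`_netdev` delays the mount until the network is up); `render_nfs_fstab` is a hypothetical helper:

```shell
# Hypothetical helper: print an /etc/fstab line for an NFS share.
# $1 = NFS server, $2 = exported directory, $3 = local mount point
render_nfs_fstab() {
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}
```

Usage: on slave01 `render_nfs_fstab 192.168.229.90 /opt/ly /usr/local/nginx/html >> /etc/fstab`, on slave02 the same with /opt/weq, then `mount -a` to check the entry.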

Test LVS-DR mode by opening the VIP in a browser on 192.168.229.90

This is not the final goal: on top of LVS-DR we still need Nginx/Tomcat static/dynamic separation, where Nginx serves static pages and Tomcat handles dynamic pages.
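A browser often reuses one keep-alive connection, and LVS schedules per connection, so refreshing may keep landing on the same node. A sketch that tallies responses from fresh requests instead (`rr_summary` is a hypothetical helper; run the requests from a client, not from a director or real server):

```shell
# Hypothetical helper: count identical response lines read on stdin, most
# frequent first; blank lines are ignored. With rr scheduling and two healthy
# real servers, both node pages should appear with similar counts.
rr_summary() {
    grep -v '^$' | sort | uniq -c | sort -rn
}
```

Usage: `for i in 1 2 3 4 5 6; do curl -s http://192.168.229.188/; done | rr_summary`.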

4. Build Nginx + Tomcat static/dynamic separation

4.1 Install Nginx and Tomcat

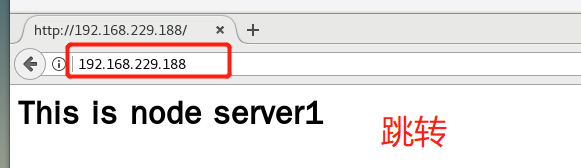

Nginx is already installed on the real servers; now install Tomcat on them as the backend (192.168.229.80, 192.168.229.70)

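For the Tomcat installation, see the blog post "Tomcat deployment and optimization"; alternatively, deploy it with a shell script as described in "One-click shell script deployment: Tomcat installation (with copyable code)"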

4.2 Tomcat server configuration for static/dynamic separation (192.168.229.80, 192.168.229.70)

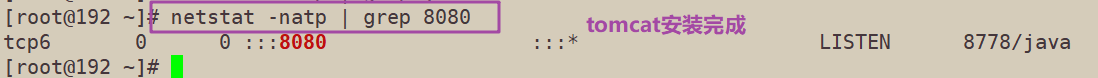

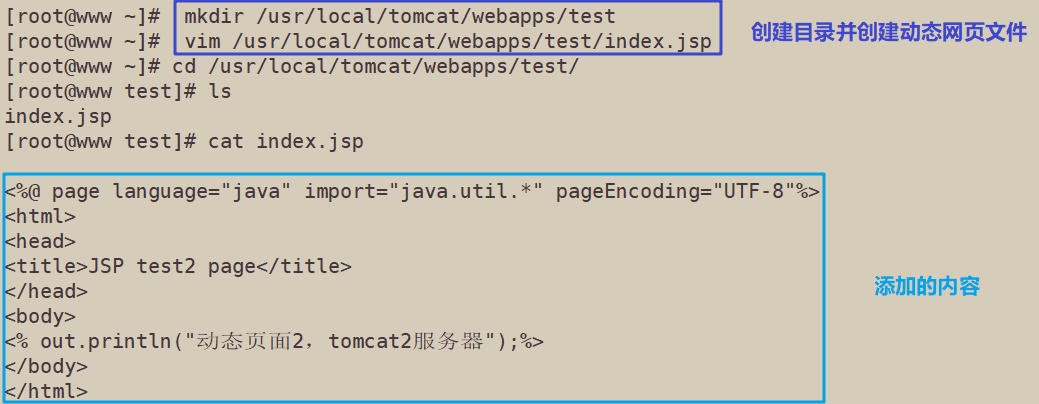

4.2.1 Configure the dynamic page content in Tomcat

slave01 192.168.229.80

slave02 192.168.229.70

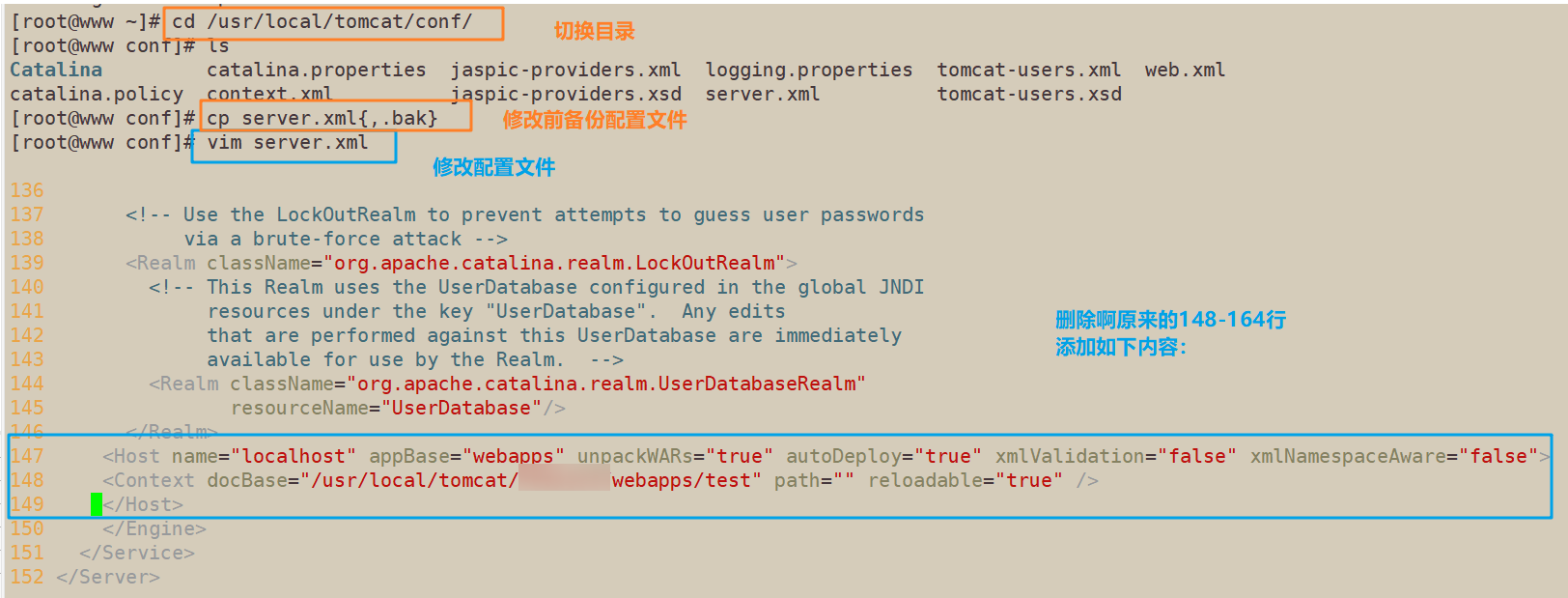

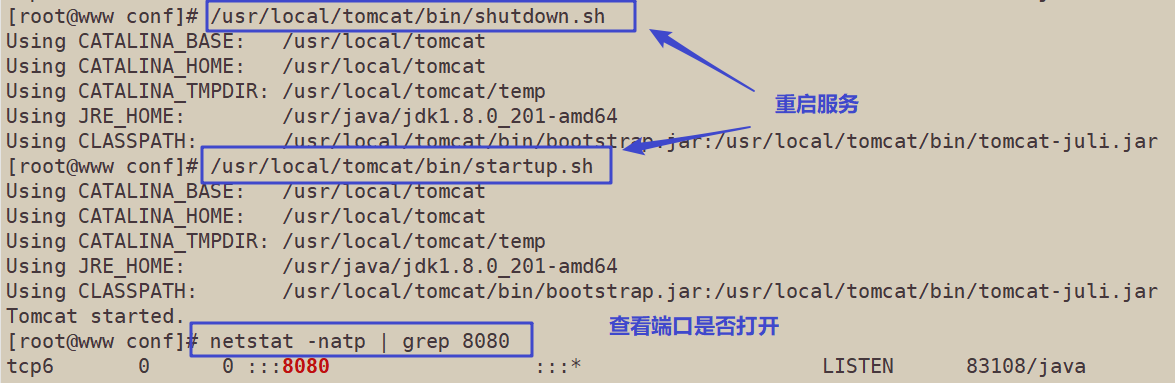
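The page contents exist only in the screenshots. A hypothetical minimal index.jsp that labels which node answered, assuming it lives in /usr/local/tomcat/webapps/test (the directory and the page text are illustrative, not the author's); `render_test_jsp` is a hypothetical helper:

```shell
# Hypothetical helper: print a minimal JSP page naming the node ($1) and the
# server-side time, so repeated requests show which Tomcat served them.
render_test_jsp() {
    node=$1
    cat <<EOF
<%@ page language="java" import="java.util.*" pageEncoding="UTF-8" %>
<html>
<head><title>JSP test page</title></head>
<body>
<% out.println("Dynamic page from $node, " + new Date()); %>
</body>
</html>
EOF
}
```

Usage: `mkdir -p /usr/local/tomcat/webapps/test && render_test_jsp slave01 > /usr/local/tomcat/webapps/test/index.jsp` (use slave02 on the other node).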

4.2.2 In Tomcat's main configuration (server.xml), delete the existing Host configuration and add a new one

Both real servers use the same configuration

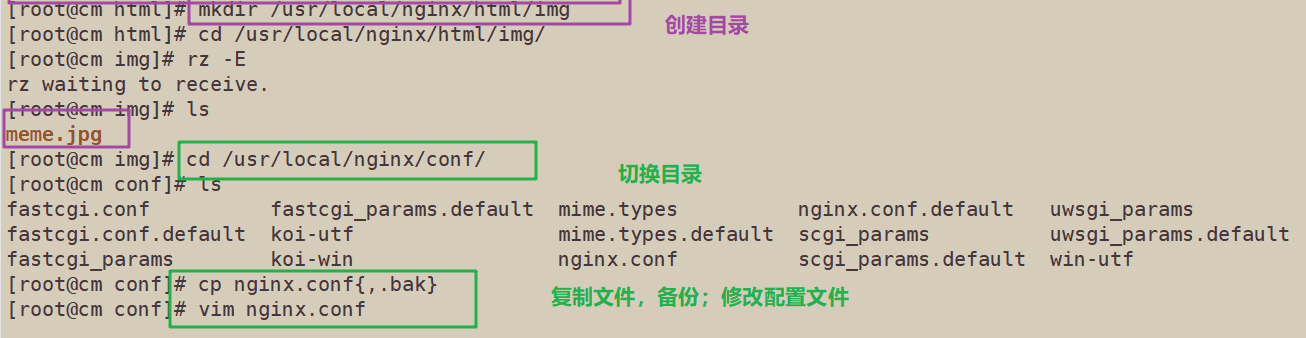

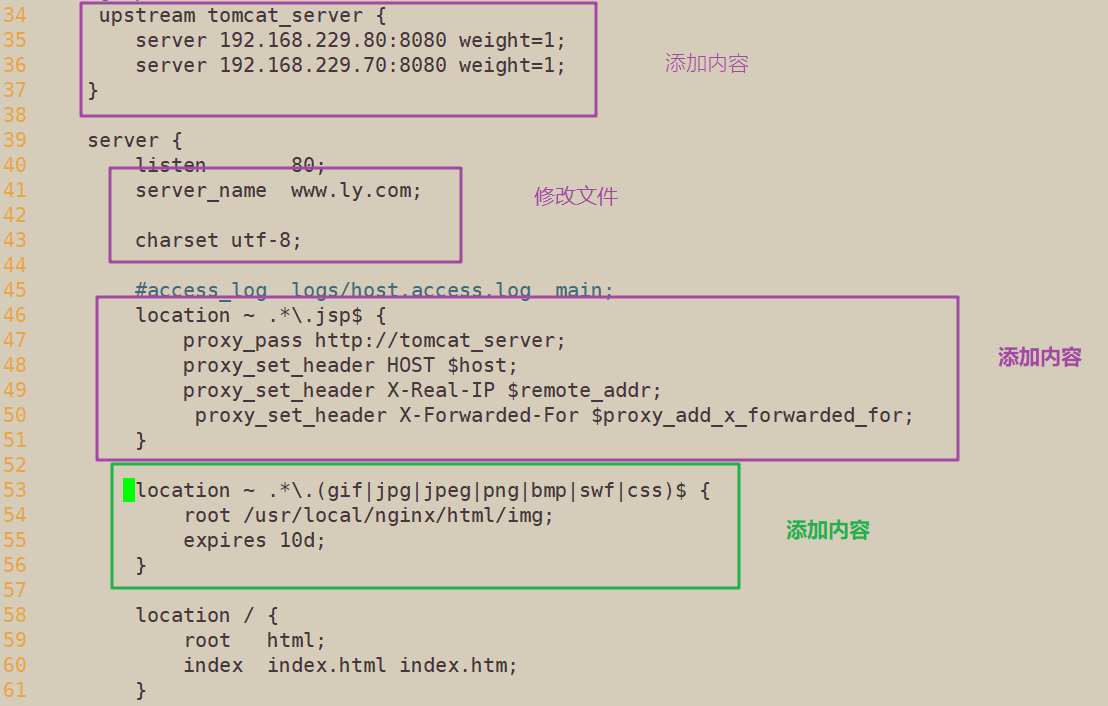
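The new Host element is also only in a screenshot. A hypothetical version that maps the site root to the test directory, assuming the /usr/local/tomcat/webapps/test path; `render_tomcat_host` is a hypothetical helper and the attribute choice is illustrative:

```shell
# Hypothetical helper: print a <Host> element for conf/server.xml whose root
# context (path="") uses docBase $1, so http://<node>:8080/index.jsp serves
# $1/index.jsp. reloadable="true" lets Tomcat pick up changed classes.
render_tomcat_host() {
    docbase=$1
    cat <<EOF
<Host name="localhost" appBase="webapps" unpackWARs="true" autoDeploy="true">
    <Context docBase="$docbase" path="" reloadable="true" />
</Host>
EOF
}
```

Usage: paste the output of `render_tomcat_host /usr/local/tomcat/webapps/test` in place of the old `<Host ...>...</Host>` block in /usr/local/tomcat/conf/server.xml, then restart Tomcat.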

4.3 Nginx server configuration (192.168.229.80, 192.168.229.70); both use the same configuration

[root@cm html]# mkdir /usr/local/nginx/html/img

[root@cm html]# cd /usr/local/nginx/html/img/

[root@cm img]# rz -E

rz waiting to receive.

[root@cm img]# ls

meme.jpg

[root@cm img]# cd /usr/local/nginx/conf/

[root@cm conf]# ls

fastcgi.conf fastcgi_params.default mime.types nginx.conf.default uwsgi_params

fastcgi.conf.default koi-utf mime.types.default scgi_params uwsgi_params.default

fastcgi_params koi-win nginx.conf scgi_params.default win-utf

[root@cm conf]# cp nginx.conf{,.bak}

[root@cm conf]# vim nginx.conf

upstream tomcat_server {

server 192.168.229.80:8080 weight=1;

server 192.168.229.70:8080 weight=1;

}

server {

listen 80;

server_name www.ly.com;

charset utf-8;

#access_log logs/host.access.log main;

location ~ .*\.jsp$ {

proxy_pass http://tomcat_server;

proxy_set_header HOST $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

location ~ .*\.(gif|jpg|jpeg|png|bmp|swf|css)$ {

root /usr/local/nginx/html/img;

expires 10d;

}

location / {

root html;

index index.html index.htm;

}

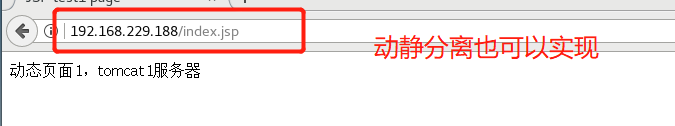

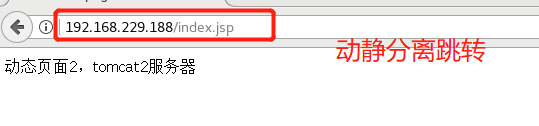
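How the server block above picks a location can be sketched in shell. nginx first finds the longest matching prefix location (here only `/`), then checks the regex locations in the order they appear and uses the first match; the prefix location is used only when no regex matches. `nginx_route` is a hypothetical helper, and shell case globs stand in for the two suffix regexes (both are case-sensitive, like `~`):

```shell
# Hypothetical helper: given a request path (no query string), print which
# location of the server block above would handle it.
nginx_route() {
    case $1 in
        *.jsp)
            echo "proxy -> tomcat_server" ;;
        *.gif|*.jpg|*.jpeg|*.png|*.bmp|*.swf|*.css)
            # root appends the full request URI to the root path
            echo "static -> /usr/local/nginx/html/img$1" ;;
        *)
            echo "static -> html (location /)" ;;
    esac
}
```

Because `root` appends the whole URI, the uploaded meme.jpg is served at http://192.168.229.188/meme.jpg; a request for /img/meme.jpg would look for html/img/img/meme.jpg instead.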

4.4 Test the result

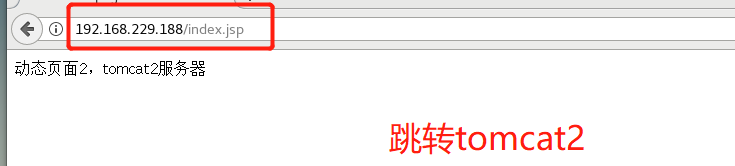

From the browser on 192.168.229.90, test static/dynamic separation together with LVS-DR (tested earlier), refreshing repeatedly:

Visit http://192.168.229.188/index.jsp from the browser on 192.168.229.90

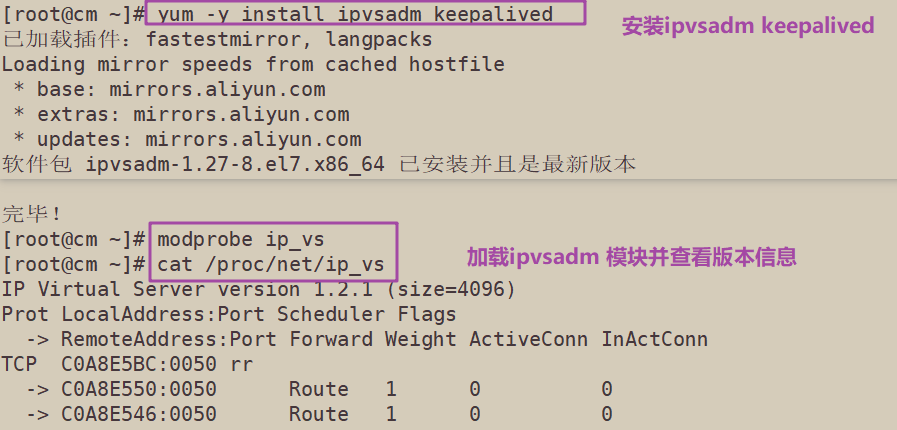

5. Set up keepalived

5.1 Configure the load balancers (same steps on both)

ha01 (192.168.229.60) and ha02 (192.168.229.50)

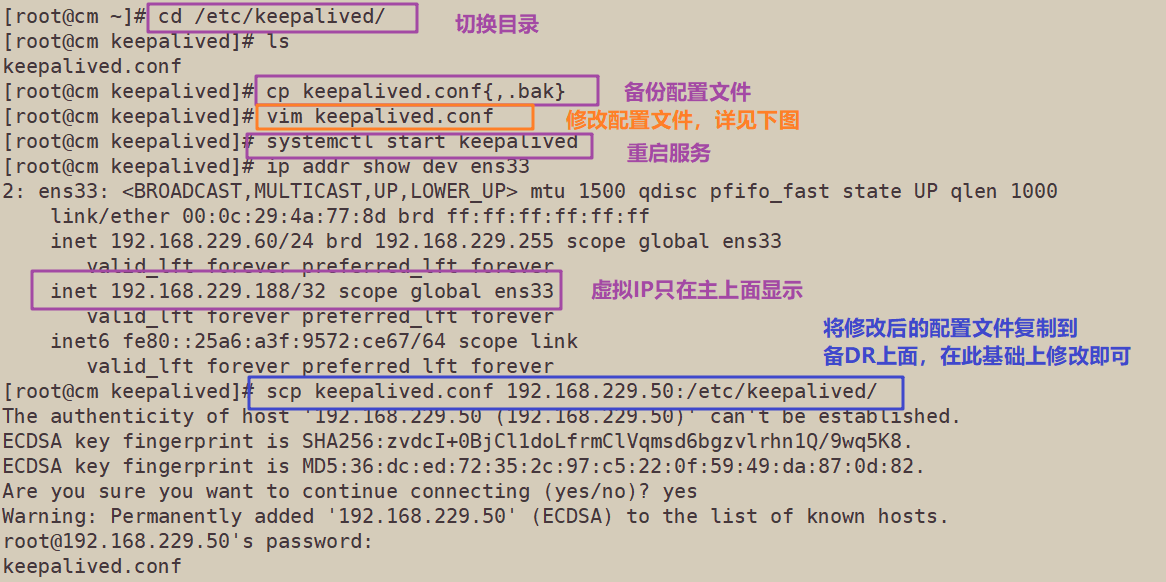

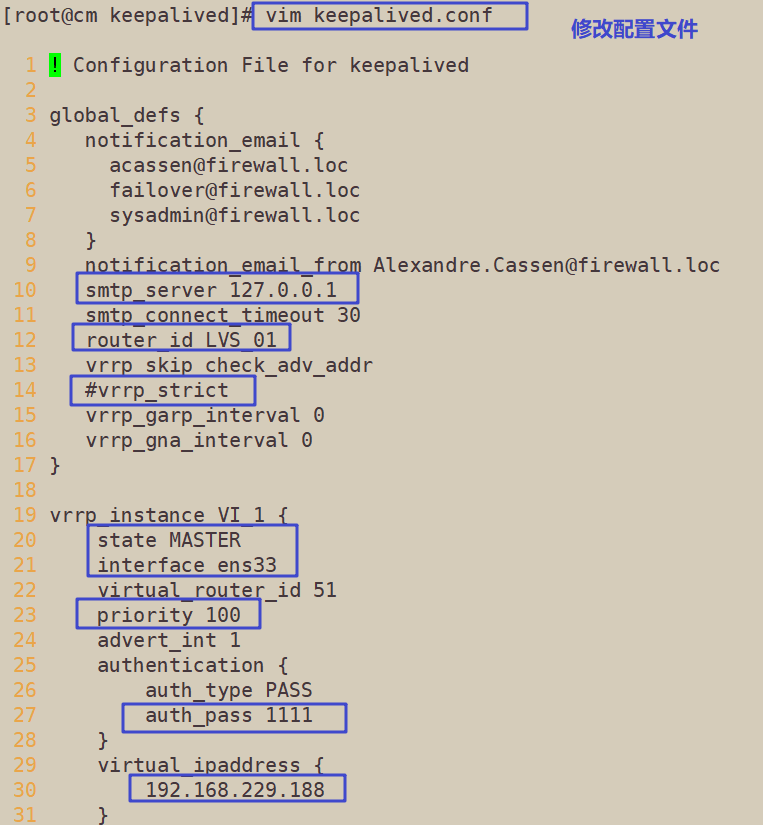

5.2 Configure keepalived (on both the master DR server ha01 and the backup DR server ha02)

Master DR server: 192.168.229.60

[root@cm ~]# cd /etc/keepalived/

[root@cm keepalived]# ls

keepalived.conf

[root@cm keepalived]# cp keepalived.conf{,.bak}

[root@cm keepalived]# vim keepalived.conf

[root@cm keepalived]# systemctl start keepalived

[root@cm keepalived]# ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:4a:77:8d brd ff:ff:ff:ff:ff:ff

inet 192.168.229.60/24 brd 192.168.229.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.229.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::25a6:a3f:9572:ce67/64 scope link

valid_lft forever preferred_lft forever

[root@cm keepalived]# scp keepalived.conf 192.168.229.50:/etc/keepalived/

The authenticity of host '192.168.229.50 (192.168.229.50)' can't be established.

ECDSA key fingerprint is SHA256:zvdcI+0BjCl1doLfrmClVqmsd6bgzvlrhn1Q/9wq5K8.

ECDSA key fingerprint is MD5:36:dc:ed:72:35:2c:97:c5:22:0f:59:49:da:87:0d:82.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.229.50' (ECDSA) to the list of known hosts.

root@192.168.229.50's password:

keepalived.conf 100% 1177 2.3MB/s 00:00

Restart the service

[root@cm keepalived]# systemctl restart keepalived

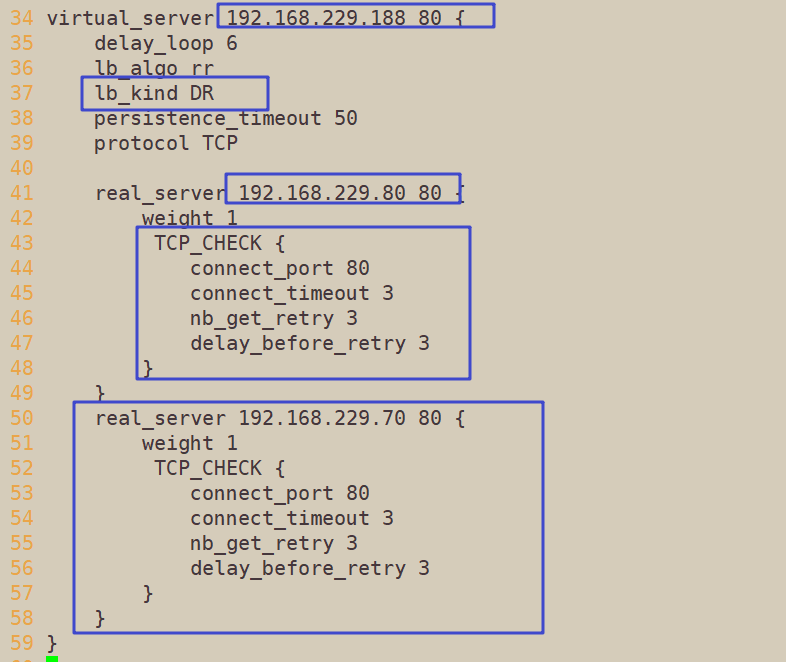

Backup DR server: 192.168.229.50

Restart the service

[root@192 keepalived]# systemctl restart keepalived.service

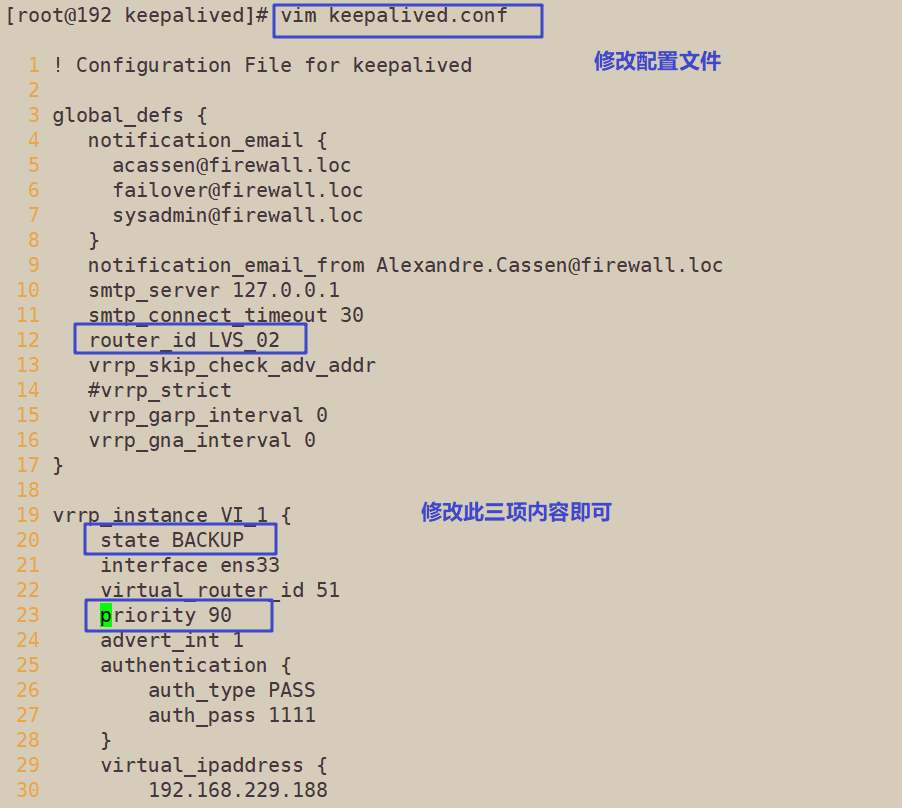

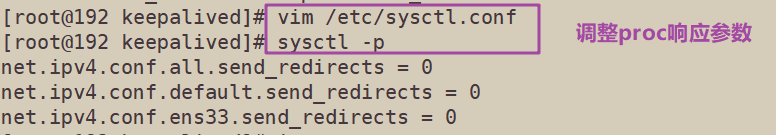
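The keepalived.conf edited in vim is not shown in the text. A hypothetical LVS-DR version, assuming interface ens33, virtual_router_id 10, an illustrative auth_pass and a plain TCP health check on port 80; both directors must share virtual_router_id and auth_pass, and only state, priority and router_id differ. Note that keepalived now binds the VIP itself (the `inet 192.168.229.188/32 scope global ens33` line above); if the static ens33:0 alias and rc.local route from 3.2 are still active on ha01, keepalived does not control them and the VIP will not leave ha01 when keepalived stops, so you may want to remove them. `render_keepalived` is a hypothetical helper:

```shell
# Hypothetical helper: print keepalived.conf for one director.
# $1 = MASTER|BACKUP, $2 = priority, $3 = router_id
render_keepalived() {
    state=$1
    prio=$2
    rid=$3
    vip=192.168.229.188
    cat <<EOF
global_defs {
    router_id $rid
}
vrrp_instance VI_1 {
    state $state
    interface ens33
    virtual_router_id 10
    priority $prio
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass abc123
    }
    virtual_ipaddress {
        $vip
    }
}
virtual_server $vip 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP
EOF
    # One real_server block per web node, each health-checked by a TCP connect.
    for rs in 192.168.229.80 192.168.229.70; do
        cat <<EOF
    real_server $rs 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
        }
    }
EOF
    done
    echo "}"
}
```

Usage: `render_keepalived MASTER 100 LVS_01 > /etc/keepalived/keepalived.conf` on ha01 and `render_keepalived BACKUP 90 LVS_02 > /etc/keepalived/keepalived.conf` on ha02, then restart keepalived on each.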

5.3 Adjust the kernel /proc parameters: disable ICMP redirects

The master DR already received these parameters in the LVS-DR step, so only the backup DR server needs changing

[root@192 keepalived]# vim /etc/sysctl.conf

[root@192 keepalived]# sysctl -p

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

5.4 Test

Test from the browser on 192.168.229.90

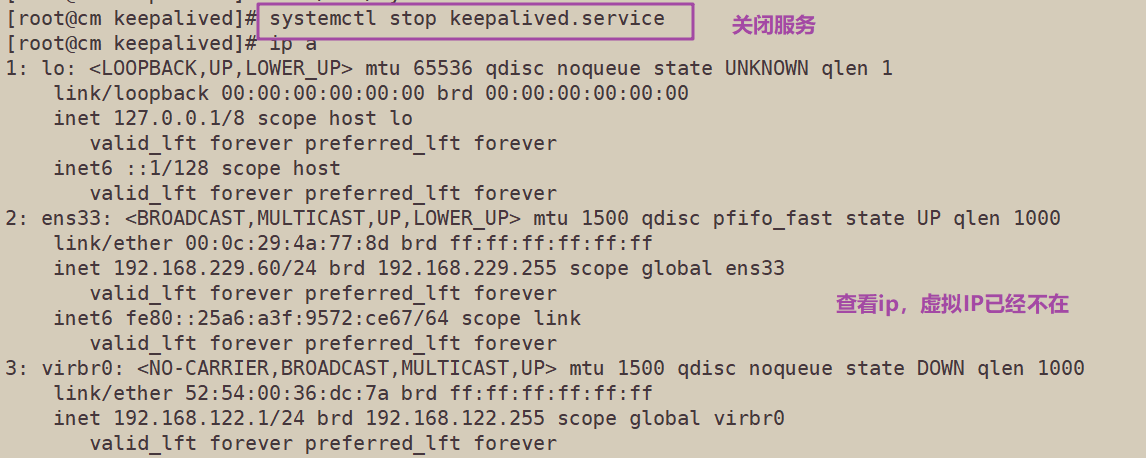

Stop the keepalived service on the master DR (ha01)

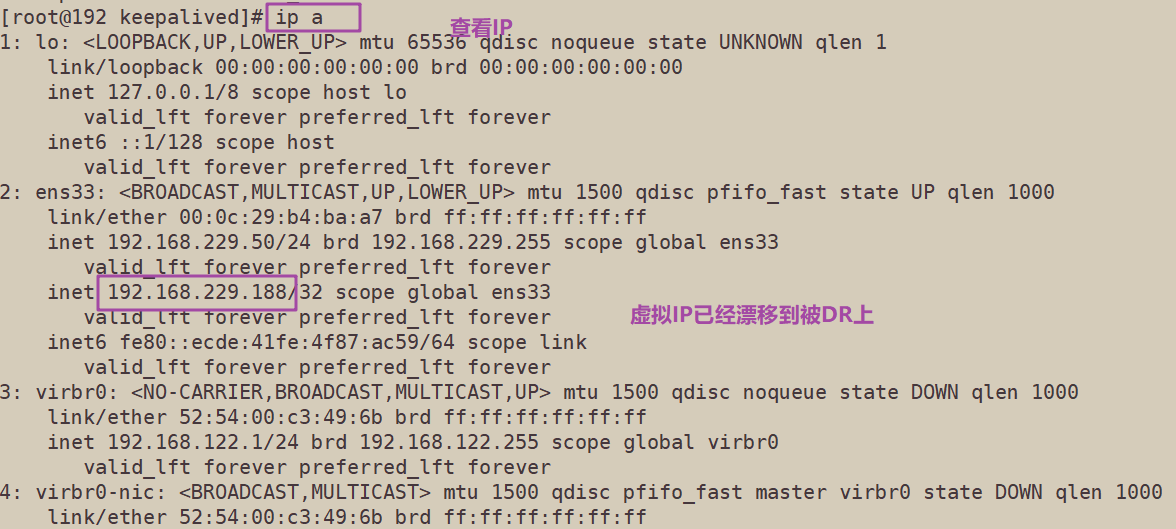

Check the IP addresses on the backup DR (ha02)

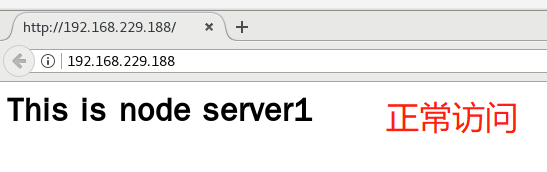

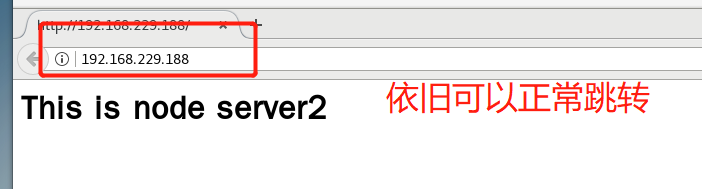

Test again from the browser on 192.168.229.90
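To check from the shell which director holds the VIP, a small sketch that reads `ip addr` output on stdin (`has_vip` is a hypothetical helper). Run it on ha01 and ha02 before and after stopping keepalived on ha01; the VIP should be present on exactly one director at a time:

```shell
# Hypothetical helper: exit 0 if the address $1 appears as "inet <addr>/" in the
# ip addr output on stdin. -F matches literally; the trailing "/" keeps
# 192.168.229.18 from matching 192.168.229.188.
has_vip() {
    grep -qF "inet $1/"
}
```

Usage: `ip addr show dev ens33 | has_vip 192.168.229.188 && echo "VIP is on this host"`.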

6. Set up MHA

6.1 Set up master-slave replication (one master, two slaves)

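Install MySQL on master01 (192.168.229.90), slave01 (192.168.229.80) and slave02 (192.168.229.70); for details see the blog post "LAMP source installation (part 2): compiling and installing the mysqld service and the PHP environment, and installing a forum (with detailed steps)"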

Install MySQL 5.7 on Master01, Slave01 and Slave02

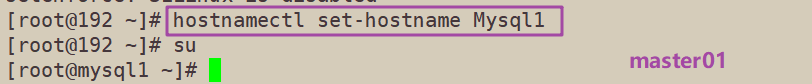

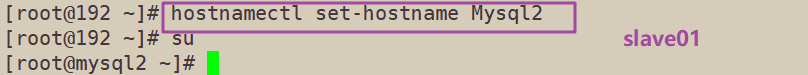

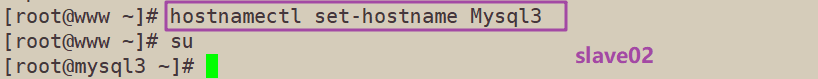

Change the hostnames of Master01, Slave01 and Slave02

On Master01: hostnamectl set-hostname Mysql1

On Slave01: hostnamectl set-hostname Mysql2

On Slave02: hostnamectl set-hostname Mysql3

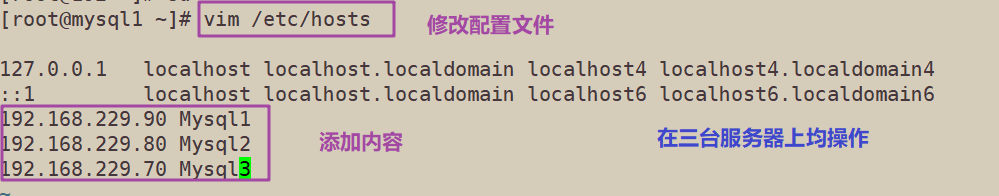

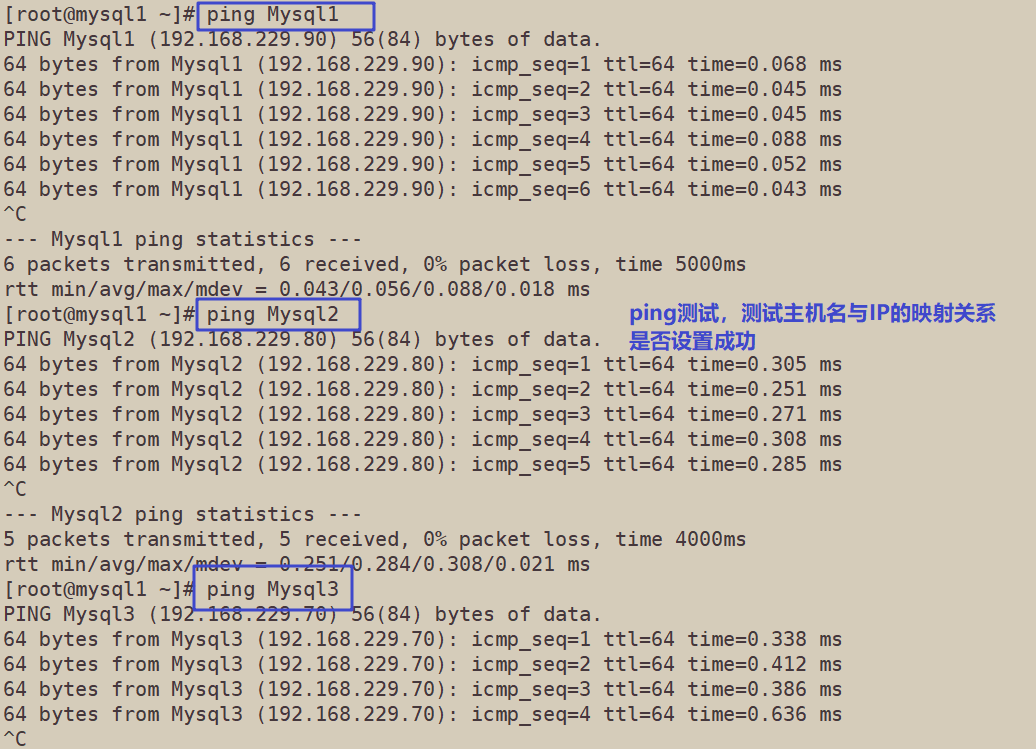

On all servers, add the IP-to-hostname mappings to /etc/hosts and check them with ping

[root@mysql1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.229.90 Mysql1

192.168.229.80 Mysql2

192.168.229.70 Mysql3

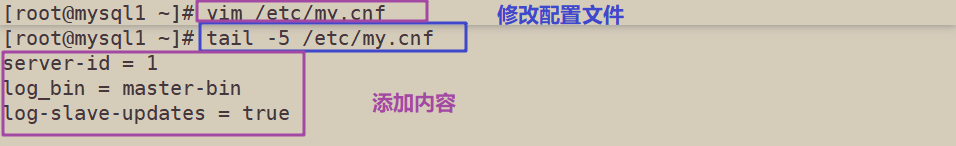

Edit the MySQL main configuration file /etc/my.cnf on Master01, Slave01 and Slave02

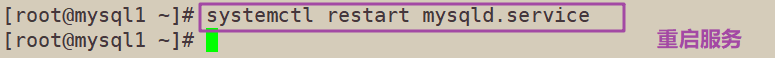

##Master01 node##

vim /etc/my.cnf

[mysqld]

#add the following lines

server-id = 1

log_bin = master-bin

log-slave-updates = true

systemctl restart mysqld

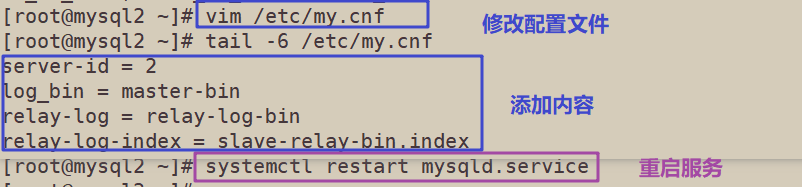

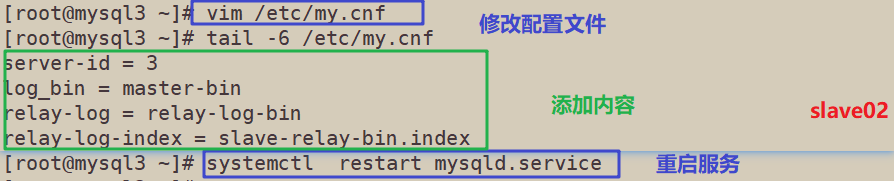

##Slave01 and Slave02 nodes##

vim /etc/my.cnf

#server-id must differ on all three servers: 2 on Slave01, 3 on Slave02

server-id = 2

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

systemctl restart mysqld

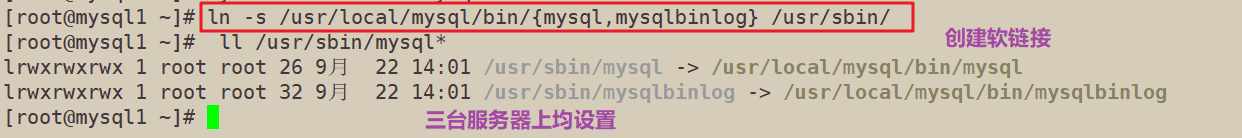
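The three nodes differ only in server-id and the role-specific lines listed above. A sketch that prints those lines for one node (`render_repl_cnf` is a hypothetical helper; the values are the ones from this section):

```shell
# Hypothetical helper: print the replication lines for the [mysqld] section.
# $1 = unique server-id, $2 = master|slave
render_repl_cnf() {
    id=$1
    role=$2
    echo "server-id = $id"
    echo "log_bin = master-bin"
    if [ "$role" = master ]; then
        echo "log-slave-updates = true"
    else
        echo "relay-log = relay-log-bin"
        echo "relay-log-index = slave-relay-bin.index"
    fi
}
```

Usage: `render_repl_cnf 1 master` on Mysql1, `render_repl_cnf 2 slave` on Mysql2 and `render_repl_cnf 3 slave` on Mysql3; add the output under [mysqld] in /etc/my.cnf, then `systemctl restart mysqld`.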

Create two symbolic links on each of Master01, Slave01 and Slave02

[root@mysql1 ~]# systemctl restart mysqld.service

[root@mysql1 ~]# ln -s /usr/local/mysql/bin/{mysql,mysqlbinlog} /usr/sbin/

[root@mysql1 ~]# ll /usr/sbin/mysql*

lrwxrwxrwx 1 root root 26 Sep 22 14:01 /usr/sbin/mysql -> /usr/local/mysql/bin/mysql

lrwxrwxrwx 1 root root 32 Sep 22 14:01 /usr/sbin/mysqlbinlog -> /usr/local/mysql/bin/mysqlbinlog

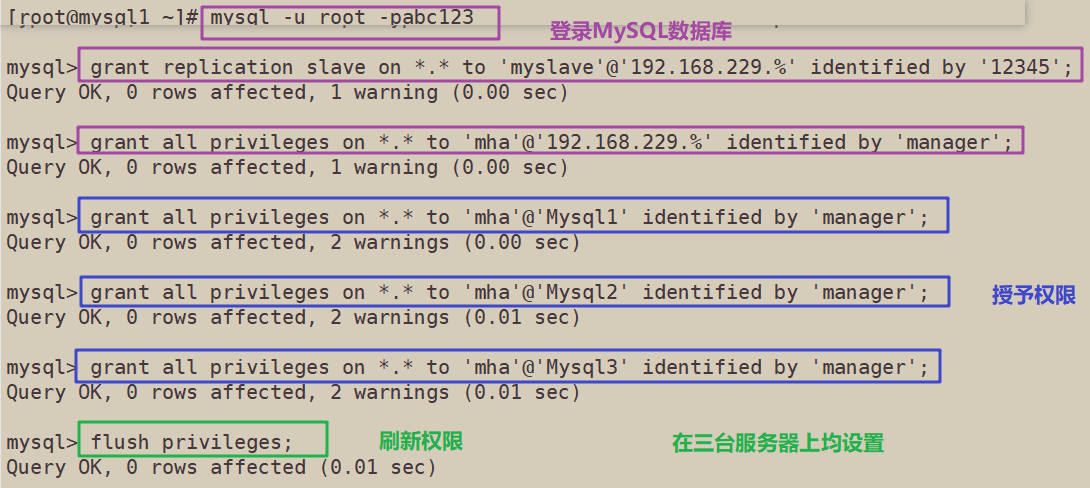

Grant MySQL privileges on all database nodes (one master, two slaves)

mysql -uroot -pabc123

grant replication slave on *.* to 'myslave'@'192.168.229.%' identified by '12345'; #used by the slaves for replication

grant all privileges on *.* to 'mha'@'192.168.229.%' identified by 'manager'; #used by the MHA manager

grant all privileges on *.* to 'mha'@'Mysql1' identified by 'manager'; #so the slaves can still reach the master when connecting by hostname

grant all privileges on *.* to 'mha'@'Mysql2' identified by 'manager';

grant all privileges on *.* to 'mha'@'Mysql3' identified by 'manager';

flush privileges;

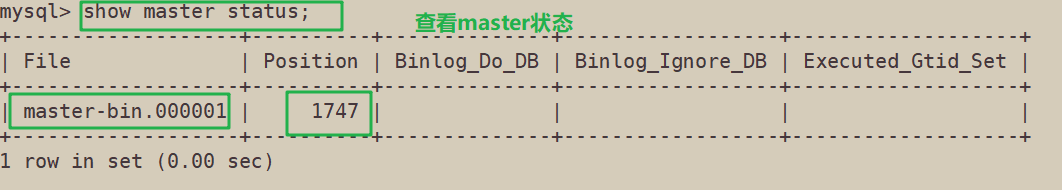

On Master01, check the current binary log file and position

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 1747 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

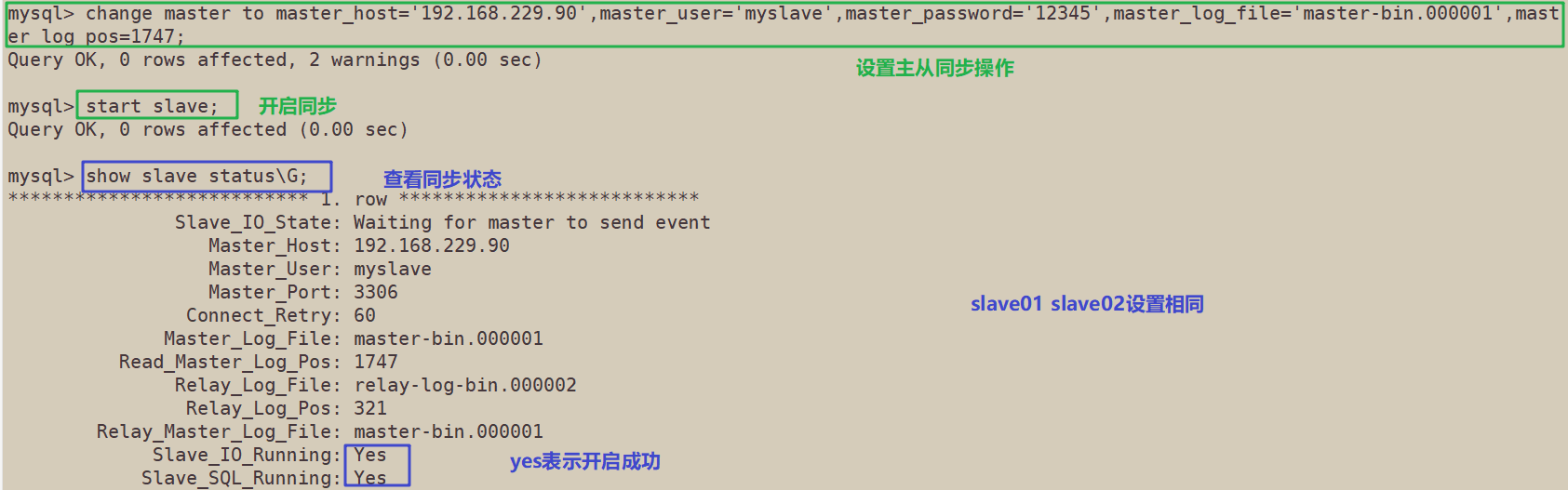

On Slave01 and Slave02, start replication and check its status

mysql> change master to master_host='192.168.229.90',master_user='myslave',master_password='12345',master_log_file='master-bin.000001',master_log_pos=1747;

Query OK, 0 rows affected, 2 warnings (0.04 sec)

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.229.90

Master_User: myslave

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000001

Read_Master_Log_Pos: 1747

Relay_Log_File: relay-log-bin.000002

Relay_Log_Pos: 321

Relay_Master_Log_File: master-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

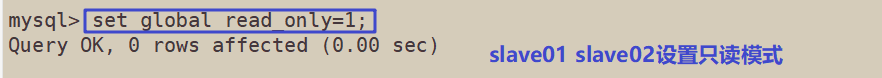
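Replication is healthy only when both Slave_IO_Running and Slave_SQL_Running are Yes. A small sketch that checks this from the `\G` output read on stdin (`slave_ok` is a hypothetical helper):

```shell
# Hypothetical checker: exit 0 only if both replication threads report "Yes".
# Splitting on ": *" separates "<spaces>Field" from its value; the "$" anchor
# keeps Slave_SQL_Running_State from matching Slave_SQL_Running.
slave_ok() {
    awk -F': *' '
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { exit (io == "Yes" && sql == "Yes" ? 0 : 1) }'
}
```

Usage on each slave: `mysql -uroot -pabc123 -e 'show slave status\G' | slave_ok && echo "replication OK"`.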

Both slaves must be set to read-only mode:

set global read_only=1;

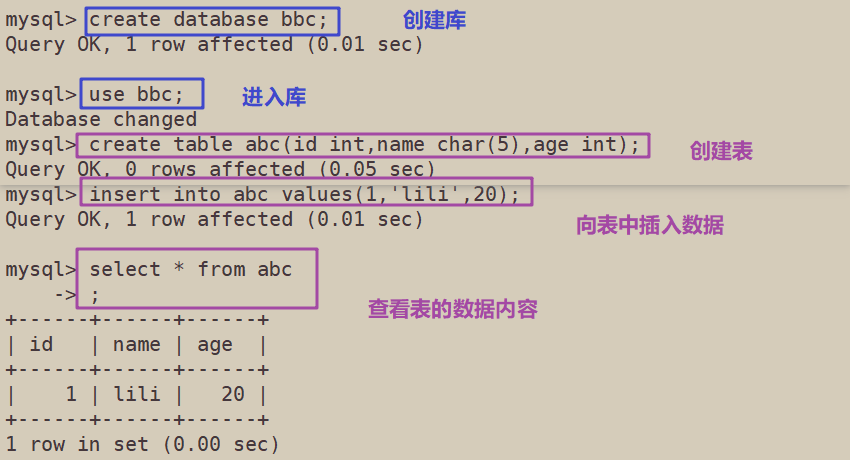

Insert data to test replication

##Insert a row on the master and check that it replicates##

mysql> create database bbc;

Query OK, 1 row affected (0.01 sec)

mysql> use bbc;

Database changed

mysql> create table abc(id int,name char(5),age int);

Query OK, 0 rows affected (0.05 sec)

mysql> insert into abc values(1,'lili',20);

Query OK, 1 row affected (0.01 sec)

mysql> select * from abc

-> ;

+------+------+------+

| id | name | age |

+------+------+------+

| 1 | lili | 20 |

+------+------+------+

1 row in set (0.00 sec)

##On slave01 and slave02, check that the data has replicated##

6.2 Set up MHA

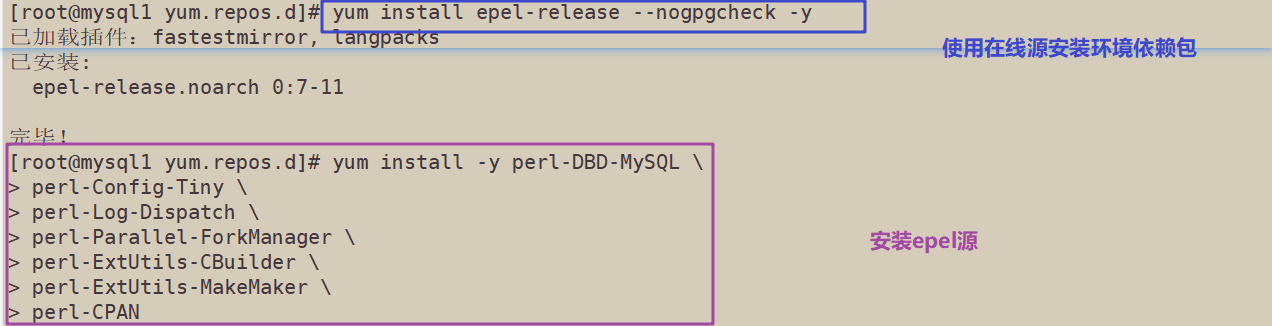

Install MHA's dependencies on all servers (Master01, Slave01, Slave02), starting with the EPEL repository

yum install epel-release --nogpgcheck -y

yum install -y perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker perl-CPAN

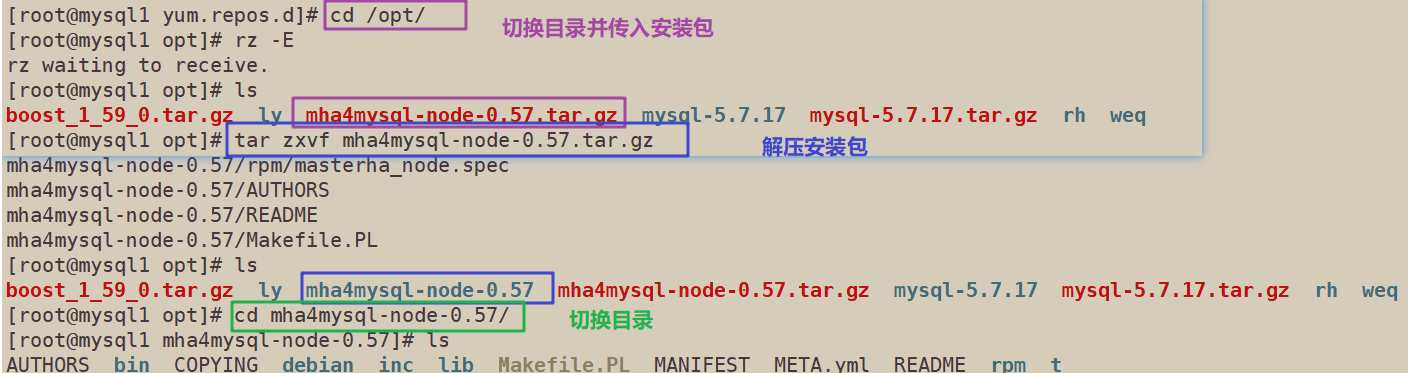

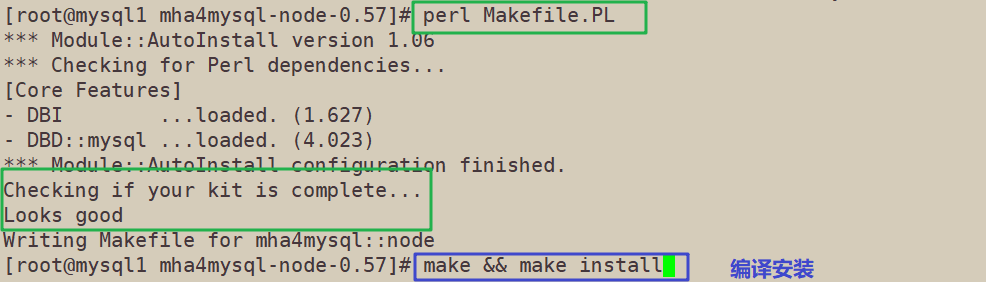

Install the MHA packages: the node component must be installed first on all servers (Master01, Slave01, Slave02)

cd /opt

tar zxvf mha4mysql-node-0.57.tar.gz

cd mha4mysql-node-0.57

perl Makefile.PL

make && make install

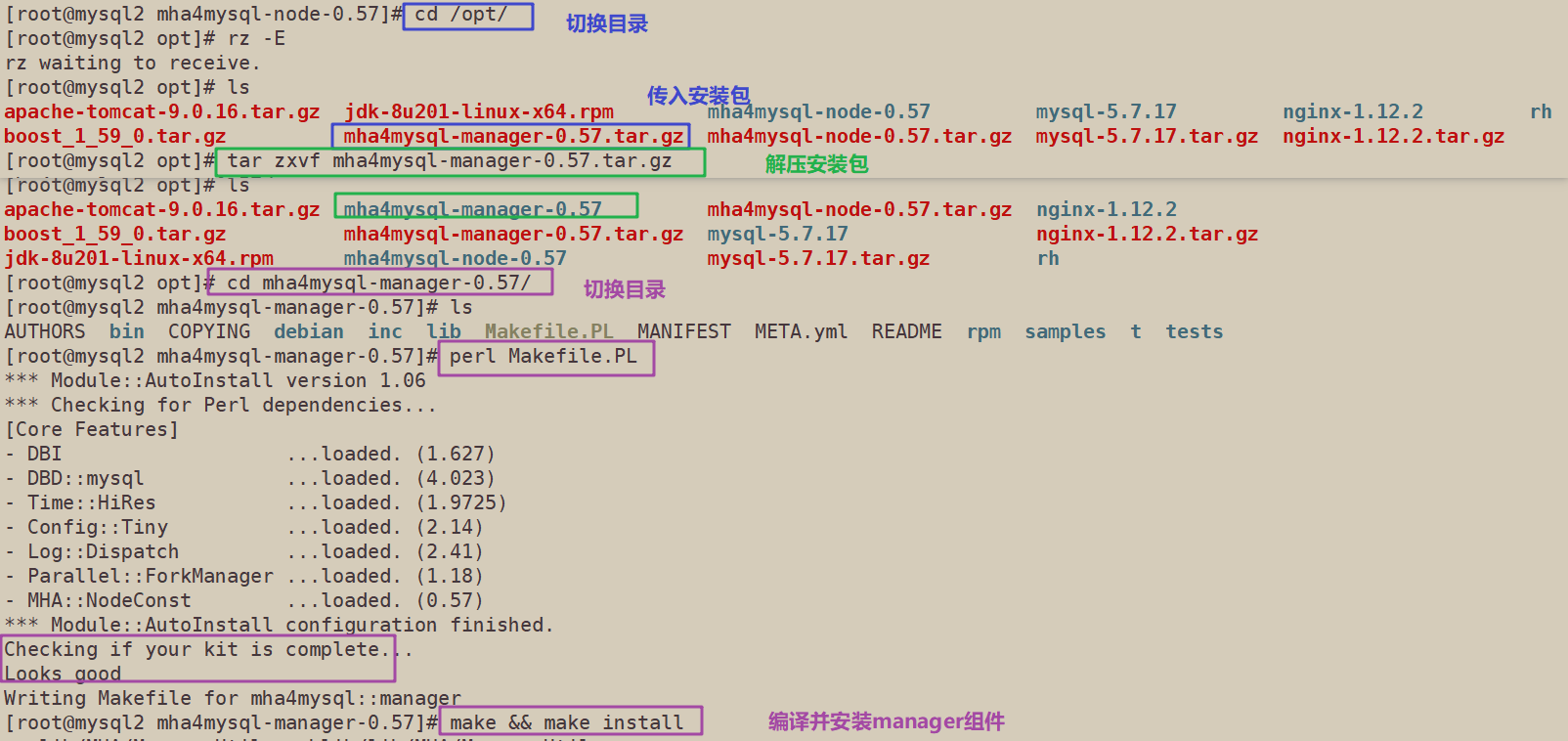

Install the manager component on slave01

cd /opt

tar zxvf mha4mysql-manager-0.57.tar.gz

cd mha4mysql-manager-0.57

perl Makefile.PL

make && make install

Configure passwordless SSH authentication among Master01, Slave01 and Slave02

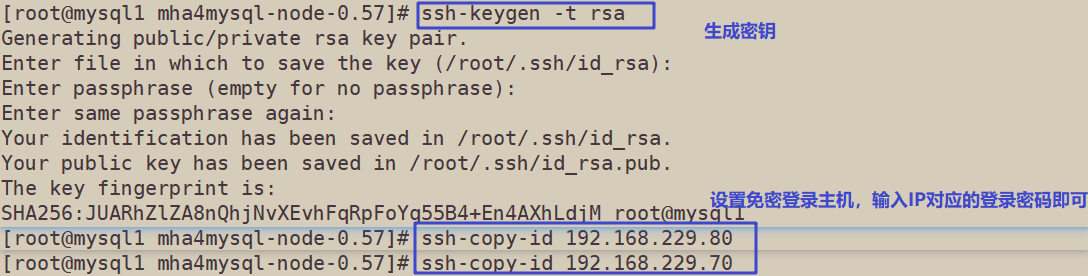

On mysql1, set up passwordless authentication to database nodes mysql2 and mysql3

ssh-keygen -t rsa

ssh-copy-id 192.168.229.80

ssh-copy-id 192.168.229.70

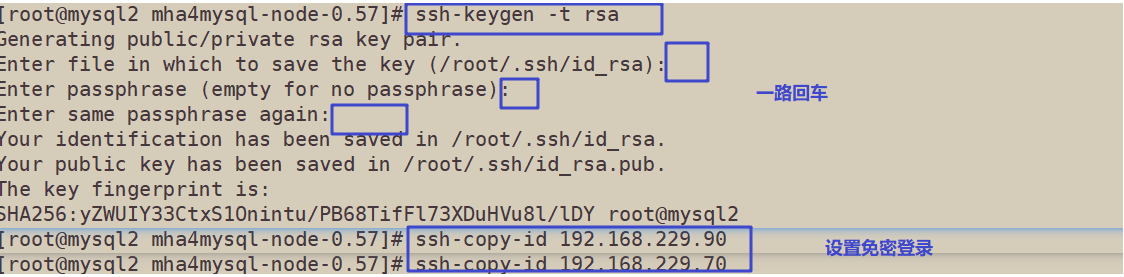

On mysql2, set up passwordless authentication to database nodes mysql1 and mysql3

ssh-keygen -t rsa

ssh-copy-id 192.168.229.90

ssh-copy-id 192.168.229.70

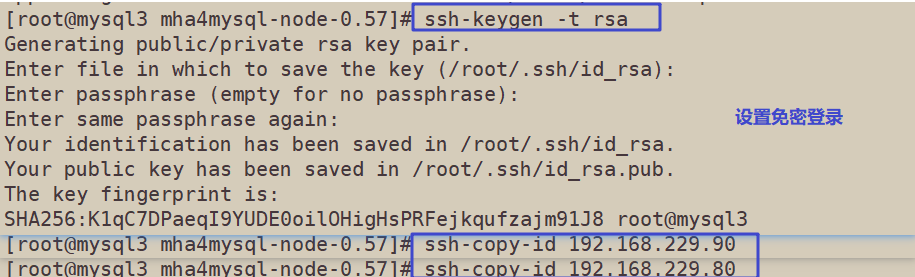

On mysql3, set up passwordless authentication to database nodes mysql1 and mysql2

ssh-keygen -t rsa

ssh-copy-id 192.168.229.90

ssh-copy-id 192.168.229.80
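The pattern in the three steps above is the same on every node: after ssh-keygen, copy the key to every other database node. A sketch that prints those commands for one node (`ssh_trust_cmds` is a hypothetical helper):

```shell
# Hypothetical helper: print ssh-copy-id commands for every node except this one.
# $1 = this node's IP, remaining args = all database node IPs
ssh_trust_cmds() {
    self=$1
    shift
    for host in "$@"; do
        [ "$host" = "$self" ] && continue
        echo "ssh-copy-id $host"
    done
}
```

Usage on Mysql2: `ssh_trust_cmds 192.168.229.80 192.168.229.90 192.168.229.80 192.168.229.70` prints the commands for 192.168.229.90 and 192.168.229.70; pipe the output to `sh` to run them.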

Configure MHA on the manager node (slave01: 192.168.229.80)

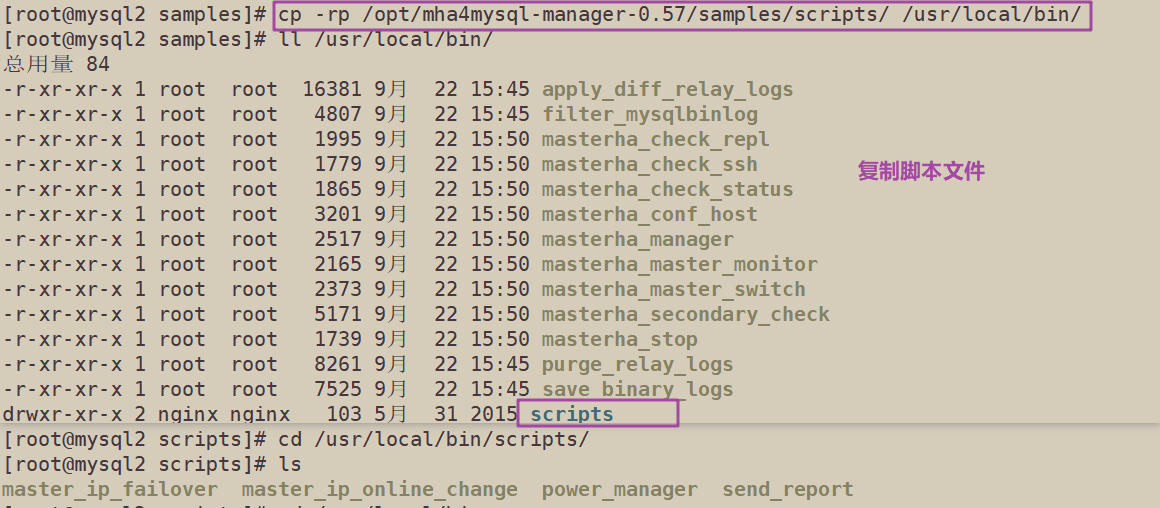

On the manager node, copy the related scripts to /usr/local/bin

[root@mysql2 samples]# cp -rp /opt/mha4mysql-manager-0.57/samples/scripts/ /usr/local/bin/

[root@mysql2 samples]# ll /usr/local/bin/

total 84

-r-xr-xr-x 1 root root 16381 Sep 22 15:45 apply_diff_relay_logs

-r-xr-xr-x 1 root root 4807 Sep 22 15:45 filter_mysqlbinlog

-r-xr-xr-x 1 root root 1995 Sep 22 15:50 masterha_check_repl

-r-xr-xr-x 1 root root 1779 Sep 22 15:50 masterha_check_ssh

-r-xr-xr-x 1 root root 1865 Sep 22 15:50 masterha_check_status

-r-xr-xr-x 1 root root 3201 Sep 22 15:50 masterha_conf_host

-r-xr-xr-x 1 root root 2517 Sep 22 15:50 masterha_manager

-r-xr-xr-x 1 root root 2165 Sep 22 15:50 masterha_master_monitor

-r-xr-xr-x 1 root root 2373 Sep 22 15:50 masterha_master_switch

-r-xr-xr-x 1 root root 5171 Sep 22 15:50 masterha_secondary_check

-r-xr-xr-x 1 root root 1739 Sep 22 15:50 masterha_stop

-r-xr-xr-x 1 root root 8261 Sep 22 15:45 purge_relay_logs

-r-xr-xr-x 1 root root 7525 Sep 22 15:45 save_binary_logs

drwxr-xr-x 2 nginx nginx 103 May 31 2015 scripts

[root@mysql2 scripts]# cd /usr/local/bin/scripts/

[root@mysql2 scripts]# ls

master_ip_failover master_ip_online_change power_manager send_report

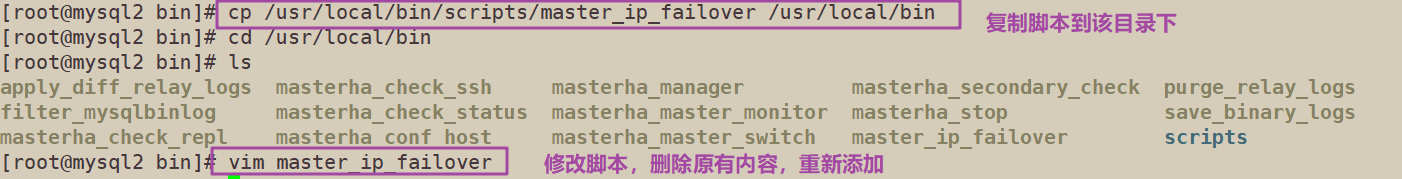

Copy the VIP-management script used during automatic failover to /usr/local/bin; here the master_ip_failover script manages the VIP and the failover

For the script contents, see the blog post "MySQL MHA high-availability configuration and failover"

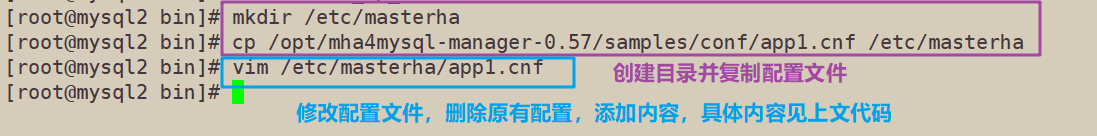

Create the MHA directory and copy the configuration file; app1.cnf is used here to manage the MySQL nodes

mkdir /etc/masterha

cp /opt/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha

vim /etc/masterha/app1.cnf #delete the original content, paste the following and adjust the node IP addresses

[server default]

manager_log=/var/log/masterha/app1/manager.log

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/usr/local/mysql/data

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

password=manager

ping_interval=1

remote_workdir=/tmp

repl_password=12345

repl_user=myslave

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.229.80 -s 192.168.229.70

shutdown_script=""

ssh_user=root

user=mha

[server1]

hostname=192.168.229.90

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.229.80

port=3306

[server3]

hostname=192.168.229.70

port=3306

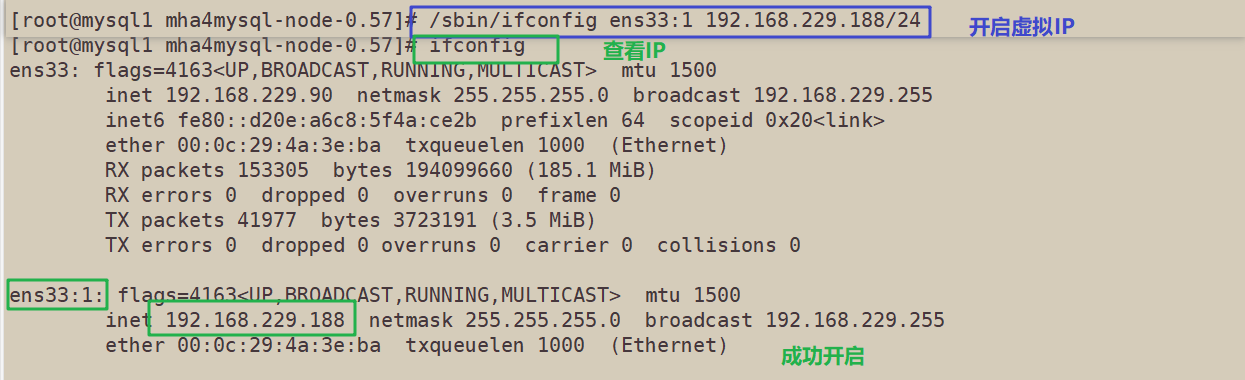

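For the first run, bring up the virtual IP manually on the master node. Note: 192.168.229.188 is also the LVS VIP that keepalived binds on the director, so bringing the same address up on master01 makes two hosts answer ARP for it; in practice the MySQL write VIP should be a separate, unused address, and the same address must be set in master_ip_failover.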

/sbin/ifconfig ens33:1 192.168.229.188/24

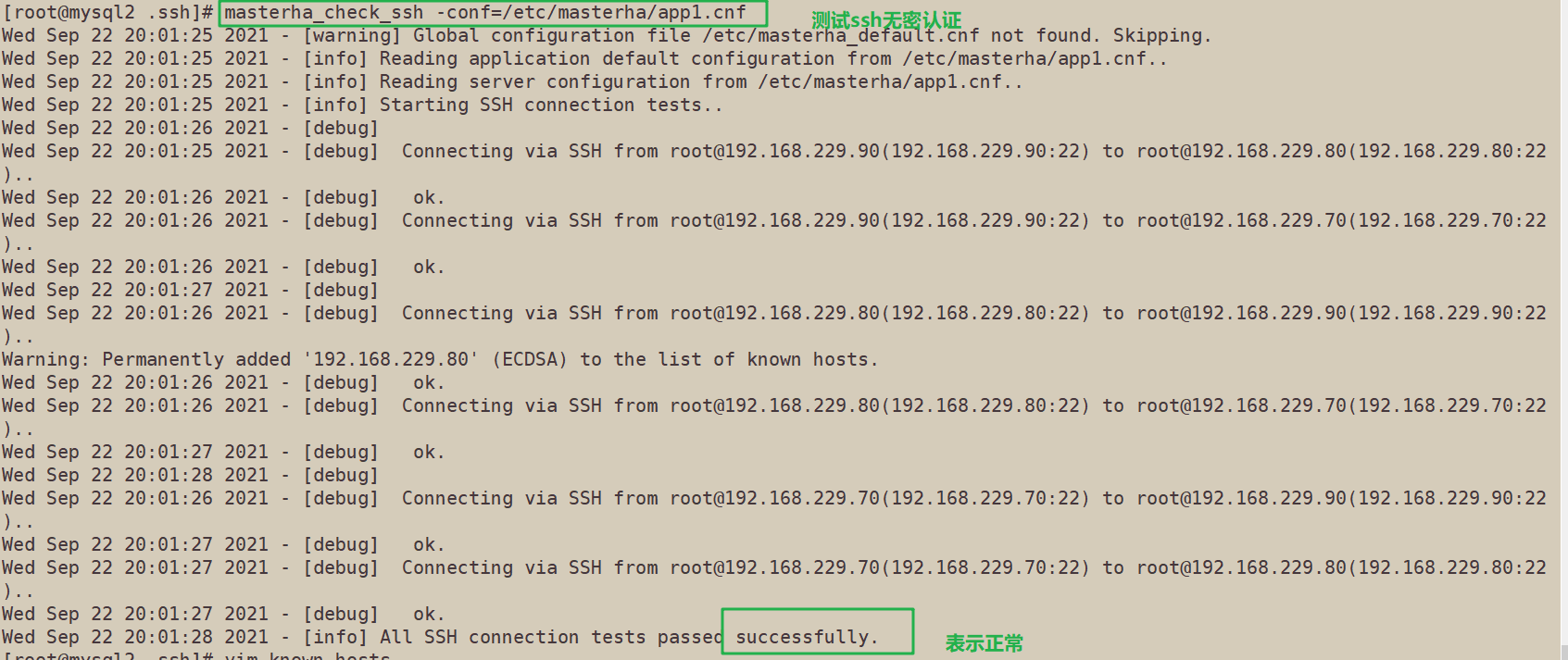

On the manager node, test passwordless SSH; if everything works, the output ends with "successfully"

[root@mysql2 .ssh]# masterha_check_ssh -conf=/etc/masterha/app1.cnf

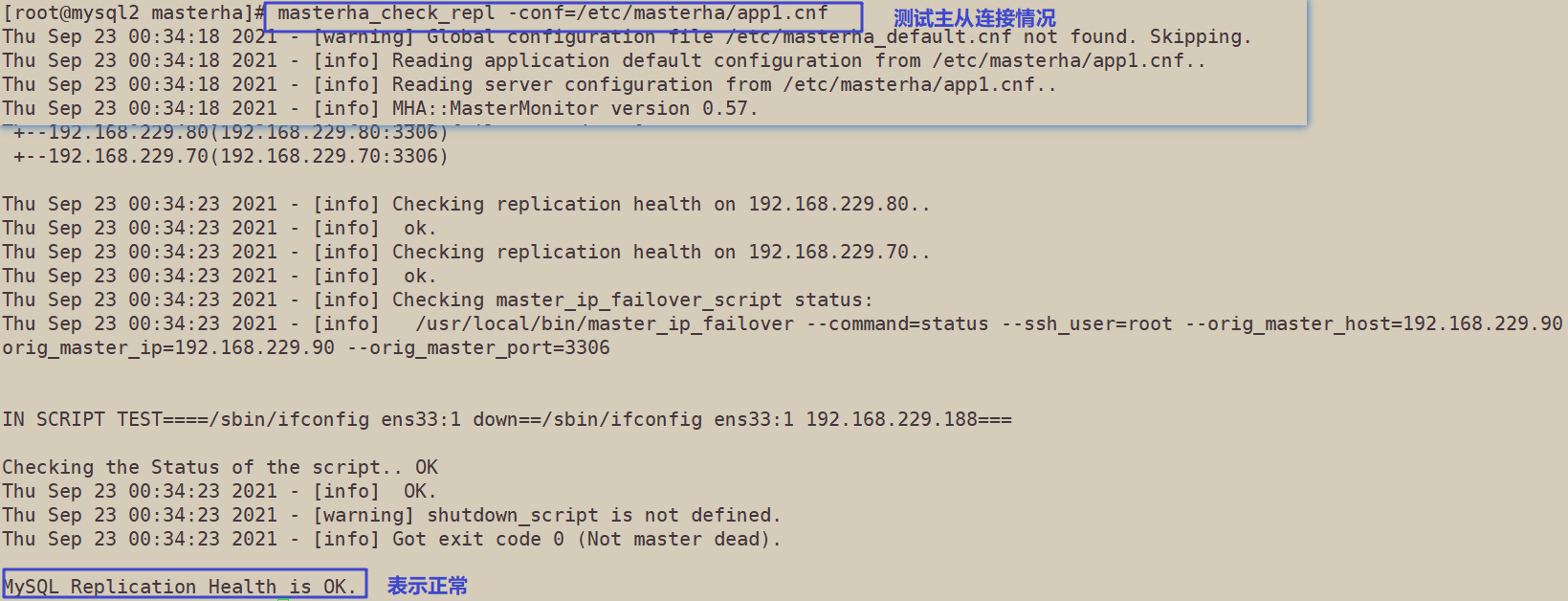

On the manager node, check the MySQL replication setup; "MySQL Replication Health is OK" at the end means it is working

masterha_check_repl -conf=/etc/masterha/app1.cnf

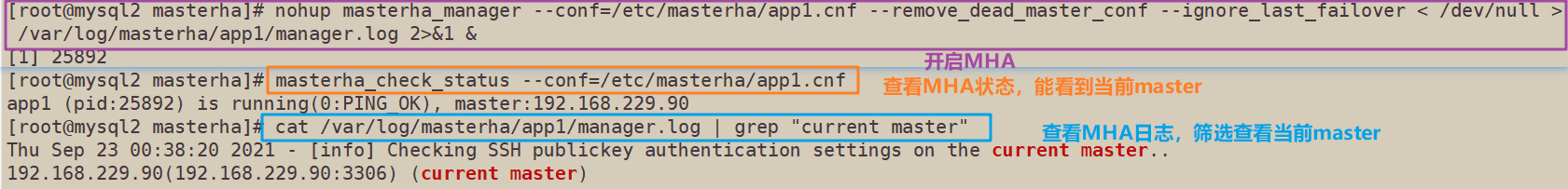

Start MHA on the manager node (slave01), then check its status and log

[root@mysql2 masterha]# nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

[1] 25892

[root@mysql2 masterha]# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:25892) is running(0:PING_OK), master:192.168.229.90

[root@mysql2 masterha]# cat /var/log/masterha/app1/manager.log | grep "current master"

Thu Sep 23 00:38:20 2021 - [info] Checking SSH publickey authentication settings on the current master..

192.168.229.90(192.168.229.90:3306) (current master)

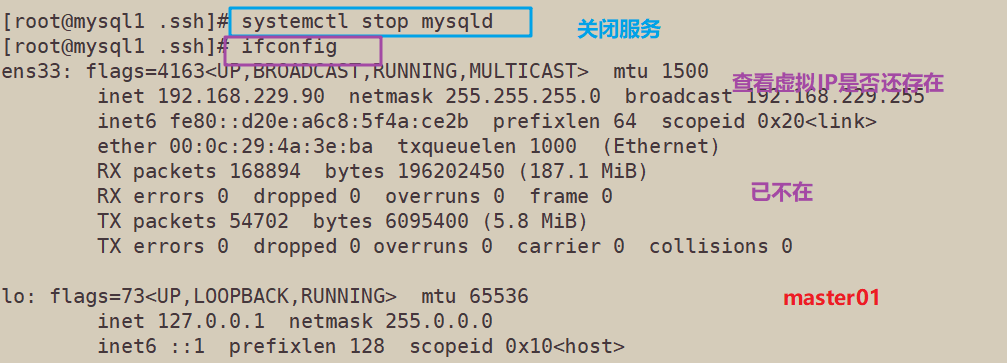

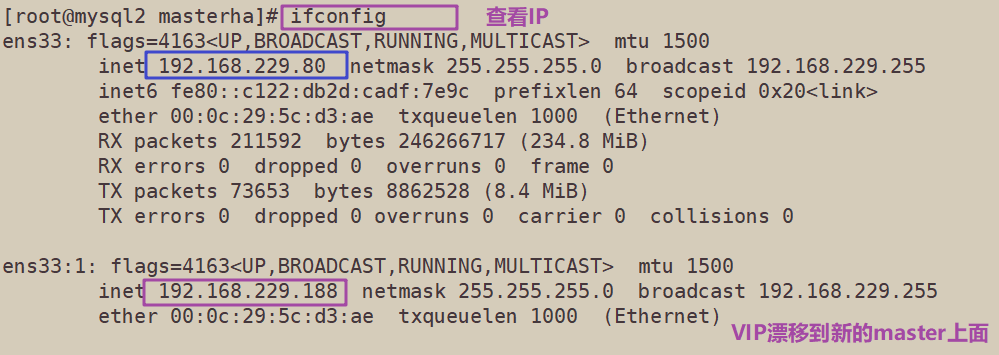
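To script the status check, a small sketch that extracts the current master from masterha_check_status output in the format shown above (`mha_master` is a hypothetical helper). masterha_manager stops after it completes a failover, so restart it with the updated app1.cnf before relying on this check again:

```shell
# Hypothetical helper: print the master IP from masterha_check_status output on
# stdin; print nothing and exit non-zero unless the manager reports 0:PING_OK.
mha_master() {
    sed -n 's/.*(0:PING_OK), master:\([0-9.]*\).*/\1/p' | grep .
}
```

Usage: `masterha_check_status --conf=/etc/masterha/app1.cnf | mha_master`.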

6.3 Failover test

Stop the MySQL service on Master01 (Mysql1): systemctl stop mysqld

On the manager node (slave01), watch the log

tail -f /var/log/masterha/app1/manager.log

This shows that MHA is set up correctly: the master fails over automatically, giving the database high availability

This completes all parts of the lab.