A First Attempt at a Neural Network in C++

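They say the setting sun marks the edge of the world; I gaze to the world's edge and still cannot see home.

I already resent the green hills that stand in the way, and the green hills are themselves veiled by evening clouds. --Li Gou (李覯)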

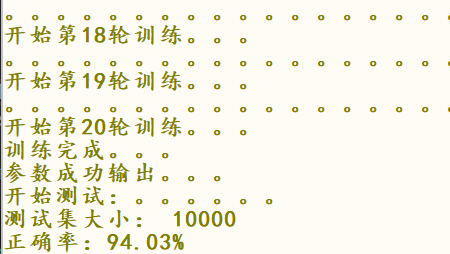

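This is a reimplementation, using the C++ Eigen library, of the Python code from *Neural Networks and Deep Learning*. The best accuracy so far is only around 94%. With finals keeping me busy, I'm archiving what I have for now. There will be plenty of time to come back to it later.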

The main() function shows the basic workflow:

```cpp
// Assumes <iostream>, <string>, <vector>, Eigen, the project's Network and
// loadData declarations, and `using namespace std;` are in scope.
int main()
{
    int b[] = { 784, 60, 10 };             // network layout
    int len = sizeof(b) / sizeof(b[0]);    // number of layers, independent of sizeof(int)

    vector<batch> datas;
    vector<batch> testdatas;

    Network N(b, len);
    N.saveParameters("E:/shujuji/shouxie/y.txt");    // save the initial parameters

    cout << "Loading dataset..." << endl;

    string filename = "E:/shujuji/shouxie/train-images.idx3-ubyte";
    string filename1 = "E:/shujuji/shouxie/train-labels.idx1-ubyte";
    datas = loadData(filename, filename1, -1);

    cout << "Loaded successfully!" << endl;
    cout << "Dataset size: " << datas.size() << endl;
    cout << "Data shape: (" << datas[2].x.rows() << ", " << datas[2].x.cols() << " )" << endl;

    string filename2 = "E:/shujuji/shouxie/t10k-images.idx3-ubyte";
    string filename3 = "E:/shujuji/shouxie/t10k-labels.idx1-ubyte";
    testdatas = loadData(filename2, filename3, 10000);

    cout << "Loaded successfully!" << endl;
    cout << "Dataset size: " << testdatas.size() << endl;
    cout << "Data shape: (" << testdatas[2].x.rows() << ", " << testdatas[2].x.cols() << " )" << endl;

    N.SGD(datas, 20, 10, 3.0);     // train with SGD: 20 epochs, mini-batch size 10, eta = 3
    N.testDatas(testdatas);        // evaluate on the test set

    return 0;
}
```

Each image and its label are defined as follows:

```cpp
struct batch {
    MatrixXd x;    // (784, 1) matrix holding the pixels
    double y;      // label
};
```

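Reading the data was covered in the previous post. After some actual training runs, a few points are worth noting. Pixel values in the dataset are grayscale values from 0 to 255 and need to be normalized:

```cpp
double dpix = pix / 255.0;
```

or inverted:

```cpp
double dpix = (255 - pix) / 255.0;
```

In practice, though, the results were not great: accuracy was only around 70%.

I then tried binarization:

```cpp
double dpix = (pix <= 125) ? 0 : 1;
```

This improved accuracy noticeably.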
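To put the three options side by side, here is a minimal sketch of them as standalone functions. The function names and the `std::uint8_t` input type are assumptions made for illustration; only the formulas and the threshold of 125 come from the snippets above.

```cpp
#include <cstdint>

// Scale a grayscale pixel (0..255) into [0, 1].
double normalize_pixel(std::uint8_t pix) {
    return pix / 255.0;
}

// Same scaling, but inverted so that dark pixels map to values near 1.
double invert_pixel(std::uint8_t pix) {
    return (255 - pix) / 255.0;
}

// Binarize: anything at or below the threshold becomes 0, the rest 1.
// The default threshold of 125 is the value used in the text.
double binarize_pixel(std::uint8_t pix, int threshold = 125) {
    return (pix <= threshold) ? 0.0 : 1.0;
}
```

Keeping the choice behind one function call would make it easy to switch between the variants when comparing accuracy.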

Initializing the network:

```cpp
Network::Network(int size[], int num_lay) {

    for (int i = 0; i < num_lay; i++)
    {
        if (i > 0) {
            // one bias column vector for every layer except the input layer
            MatrixXd biase = Eigen::MatrixXd::Random(size[i], 1);
            Network::biases.push_back(biase);
        }
        if (i < num_lay - 1) {
            // weights connecting layer i to layer i + 1
            MatrixXd weight = Eigen::MatrixXd::Random(size[i+1], size[i]);
            Network::weights.push_back(weight);
        }
    }
}
```
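One difference from the book's Python code is worth noting: `MatrixXd::Random` draws values uniformly from [-1, 1], whereas the book initializes with `np.random.randn`, a standard normal distribution. The sketch below shows one way to draw standard-normal values with the C++ standard library. It fills a plain `std::vector<double>` rather than a `MatrixXd` so that it stands alone, and `gaussian_values` and its `seed` parameter are hypothetical names, not part of the original code. Whether this changes the accuracy reported here has not been tested.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Draw `count` samples from a standard normal distribution N(0, 1).
// A fixed seed makes the draw reproducible between runs.
std::vector<double> gaussian_values(std::size_t count, unsigned seed) {
    std::mt19937 gen(seed);
    std::normal_distribution<double> dist(0.0, 1.0);
    std::vector<double> values(count);
    for (double& v : values) {
        v = dist(gen);
    }
    return values;
}
```

The values could then be copied into a matrix of shape (size[i+1], size[i]) for the weights or (size[i], 1) for the biases.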

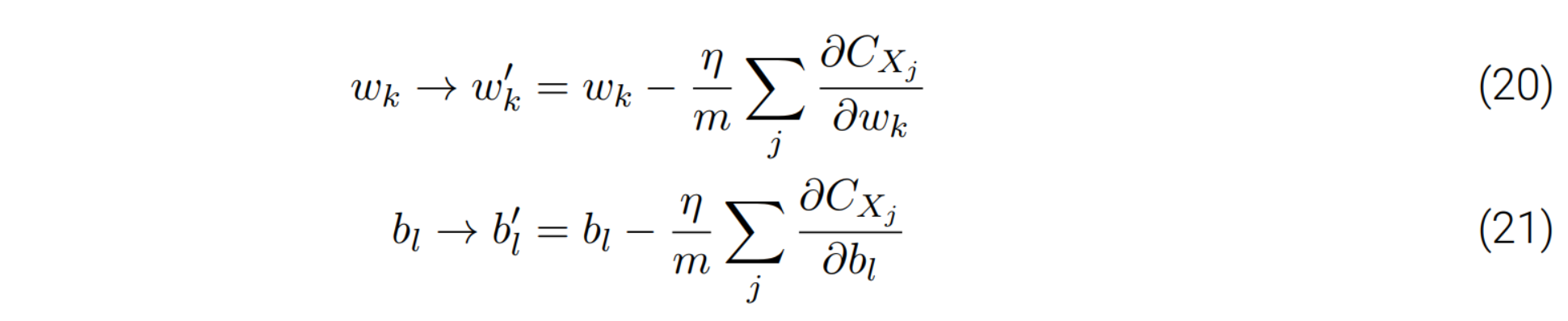

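Stochastic gradient descent: the idea is to estimate the gradient ∇C by computing ∇Cx for a small, randomly chosen sample of training inputs. Averaging over this small sample quickly gives a good estimate of the true gradient ∇C, which speeds up gradient descent and therefore learning.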
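Written out in the book's notation, for a mini-batch of $m$ samples $X_1, \dots, X_m$ and learning rate $\eta$ (the parameter `eta` in the code), the estimate and the resulting update rules are:

$$\nabla C \approx \frac{1}{m} \sum_{j=1}^{m} \nabla C_{X_j}$$

$$w_k \rightarrow w_k - \frac{\eta}{m} \sum_{j=1}^{m} \frac{\partial C_{X_j}}{\partial w_k}, \qquad b_l \rightarrow b_l - \frac{\eta}{m} \sum_{j=1}^{m} \frac{\partial C_{X_j}}{\partial b_l}$$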

SGD( ):

```cpp
// epochs: number of training epochs; mini_batch_size: number of randomly
// chosen training samples per mini-batch; eta: learning rate.
void Network::SGD(vector<batch> traindata, int epochs, int mini_batch_size, double eta)
{
    int len = traindata.size();

    for (int e = 1; e <= epochs; e++)
    {
        cout << ".................." << endl;
        cout << "Starting epoch " << e << "..." << endl;
        randomshuffle(traindata);
        vector<batch> bs;

        for (int i = 0; i < len; i += mini_batch_size) {
            bs.clear();
            for (int j = i; j < i + mini_batch_size; j++) {
                if (j >= len) break;    // stop at the end of the data
                bs.push_back(traindata[j]);
            }
            // pass the actual batch size, since the last batch may be shorter
            update_mini_batch(bs, eta, static_cast<int>(bs.size()));
        }
    }

    string Pfilename = "E:/shujuji/shouxie/p.txt";

    if (Network::saveParameters(Pfilename)) {
        cout << "Training finished." << endl;
        cout << "Parameters written successfully." << endl;
    }
}
```
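The inner loop of SGD() cuts the shuffled data into consecutive mini-batches, and the final batch can be shorter when the dataset size is not a multiple of `mini_batch_size`. The following sketch isolates that step, working on indices instead of copying samples. `make_mini_batches` and its `seed` parameter are hypothetical, and `std::shuffle` stands in for the project's `randomshuffle`, whose implementation is not shown in this post.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Shuffle the indices 0..n-1 and cut them into consecutive mini-batches.
// The last batch is shorter when n is not a multiple of batch_size.
// batch_size must be greater than zero.
std::vector<std::vector<std::size_t>> make_mini_batches(
        std::size_t n, std::size_t batch_size, unsigned seed) {
    std::vector<std::size_t> order(n);
    std::iota(order.begin(), order.end(), std::size_t{0});
    std::mt19937 gen(seed);
    std::shuffle(order.begin(), order.end(), gen);

    std::vector<std::vector<std::size_t>> batches;
    for (std::size_t start = 0; start < n; start += batch_size) {
        std::size_t stop = std::min(start + batch_size, n);
        batches.emplace_back(order.begin() + start, order.begin() + stop);
    }
    return batches;
}
```

With 25 samples and a batch size of 10, this yields batches of 10, 10, and 5 indices, and every index appears exactly once.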

```cpp
void Network::update_mini_batch(vector<batch> minibatch, double eta, int mlength)
{
    vector<MatrixXd> nabla_b;          // gradients summed over the mini-batch
    vector<MatrixXd> nabla_w;
    vector<MatrixXd> delta_nabla_b;    // per-sample gradients, passed to backprop
    vector<MatrixXd> delta_nabla_w;

    for (int i = 0; i < Network::biases.size(); i++)
    {
        MatrixXd bia(Network::biases[i].rows(), Network::biases[i].cols());
        bia.setZero();
        MatrixXd dbia(Network::biases[i].rows(), Network::biases[i].cols());
        dbia.setZero();

        nabla_b.push_back(bia);
        delta_nabla_b.push_back(dbia);
    }

    for (int n = 0; n < Network::weights.size(); n++)
    {
        MatrixXd wei(Network::weights[n].rows(), Network::weights[n].cols());
        wei.setZero();
        MatrixXd dwei(Network::weights[n].rows(), Network::weights[n].cols());
        dwei.setZero();

        nabla_w.push_back(wei);
        delta_nabla_w.push_back(dwei);
    }

    int len = minibatch.size();

    // accumulate the gradient of every sample, never reading past the batch
    for (int i = 0; i < mlength && i < len; i++) {

        MatrixXd x = minibatch[i].x;
        double y = minibatch[i].y;

        Network::backprop(x, y, delta_nabla_b, delta_nabla_w);

        for (int n = 0; n < delta_nabla_b.size(); n++) {
            nabla_b[n] += delta_nabla_b[n];
        }

        for (int j = 0; j < delta_nabla_w.size(); j++) {
            nabla_w[j] += delta_nabla_w[j];
        }
    }

    // gradient-descent step using the gradient averaged over the batch
    for (int i = 0; i < Network::biases.size(); i++)
    {
        Network::biases[i] = Network::biases[i] - (eta / len) * nabla_b[i];
    }

    for (int i = 0; i < Network::weights.size(); i++)
    {
        Network::weights[i] = Network::weights[i] - (eta / len) * nabla_w[i];
    }
}
```
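The last two loops of update_mini_batch() apply the same rule to every parameter: subtract the summed gradient scaled by `eta / len`. As a rough illustration, the rule can be written for a flat list of parameters. `apply_update` is a hypothetical helper using `std::vector<double>` instead of `MatrixXd`, not a function from the original code.

```cpp
#include <cstddef>
#include <vector>

// Apply one gradient-descent step: p <- p - (eta / batch_len) * grad_sum.
// grad_sum holds the per-sample gradients summed over the mini-batch.
// Assumes grad_sum.size() == params.size() and batch_len > 0.
void apply_update(std::vector<double>& params,
                  const std::vector<double>& grad_sum,
                  double eta, std::size_t batch_len) {
    const double scale = eta / static_cast<double>(batch_len);
    for (std::size_t i = 0; i < params.size(); ++i) {
        params[i] -= scale * grad_sum[i];
    }
}
```

Dividing by the batch length is what turns the summed gradient into an average, so the effective step size does not depend on how many samples the batch contains.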

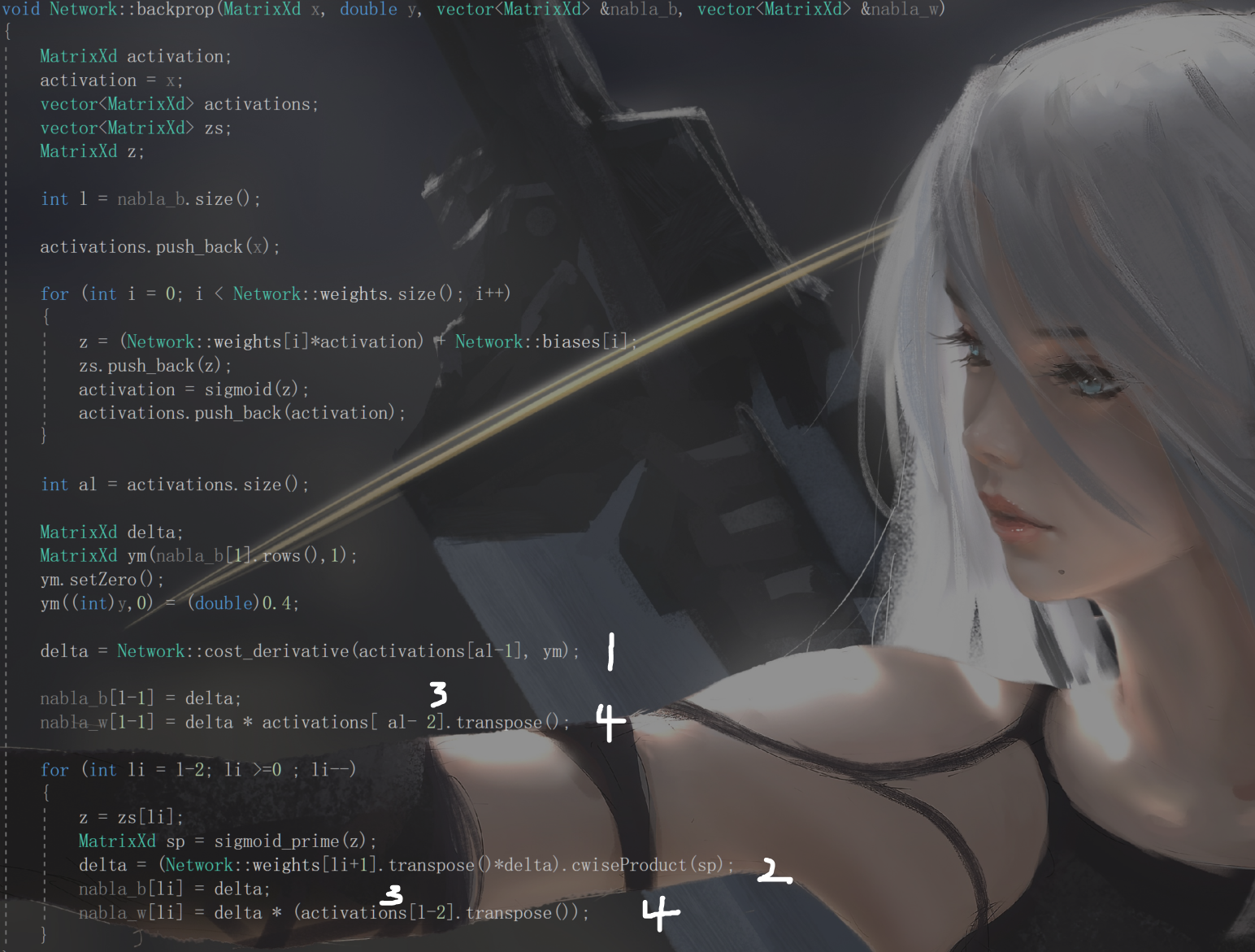

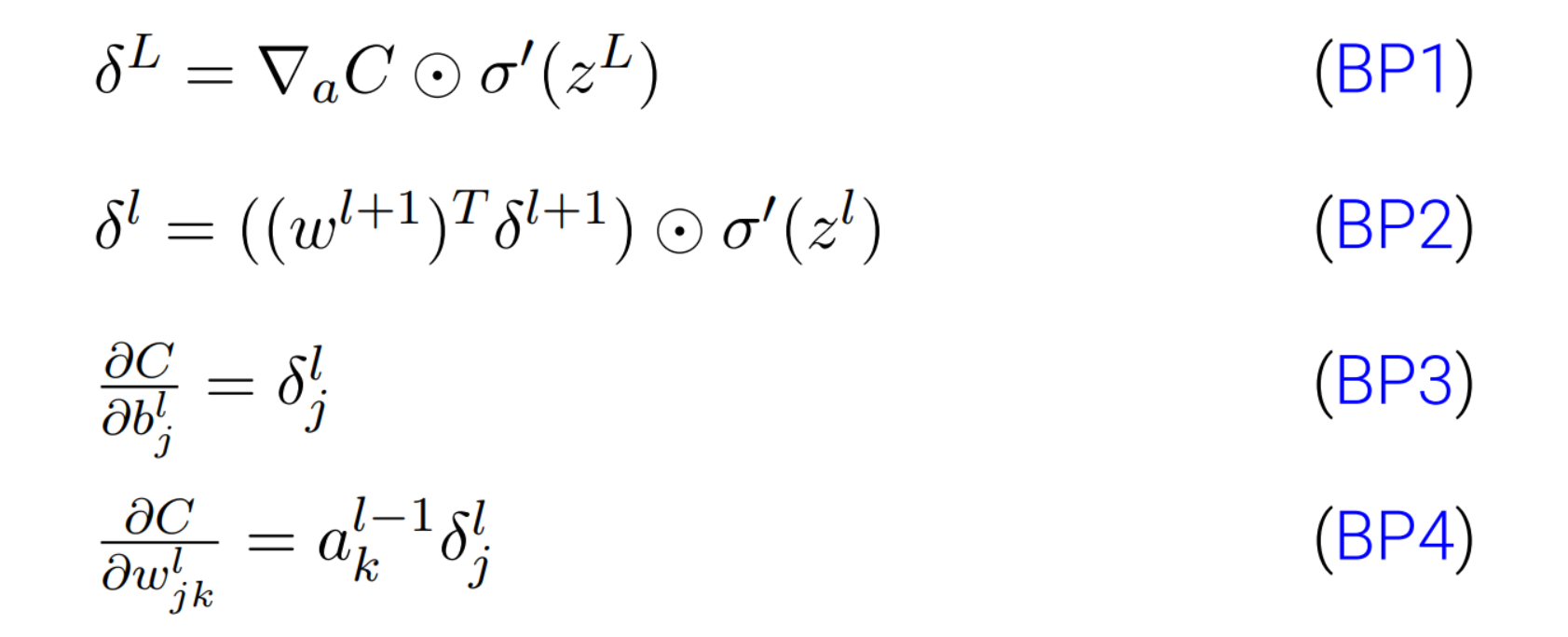

Each label y is defined as a vector such as (0, 0, 0, 0, 1, 0, 0, 0, 0, 0), but in my tests a value of around 0.4 gave the best results.
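A small sketch of this encoding follows. It reads the 0.4 above as the value placed in the nonzero entry, which is an interpretation rather than something the post states outright. `encode_label` and its parameters are hypothetical names, and a `std::vector<double>` is used in place of a (10, 1) `MatrixXd`.

```cpp
#include <cstddef>
#include <vector>

// Build a target vector with `on_value` at position `label` and 0 elsewhere.
std::vector<double> encode_label(std::size_t label,
                                 std::size_t num_classes = 10,
                                 double on_value = 1.0) {
    std::vector<double> target(num_classes, 0.0);
    target.at(label) = on_value;    // at() throws if label is out of range
    return target;
}
```

Calling `encode_label(4)` gives the vector shown above, and `encode_label(4, 10, 0.4)` gives the softened variant.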

This is based on the following formulas:

*** For statement 1: because cross-entropy is used as the loss function, the derivative of the activation function cancels out.
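The cancellation can be checked for a single output neuron with activation $a = \sigma(z)$ and target $y$. This is a short derivation in the book's notation, not taken from the original post. The cross-entropy cost and its derivative with respect to $a$ are

$$C = -\left[\, y \ln a + (1 - y) \ln(1 - a) \,\right], \qquad \frac{\partial C}{\partial a} = \frac{a - y}{a(1 - a)}.$$

Since $\sigma'(z) = a(1 - a)$, the output error becomes

$$\delta = \frac{\partial C}{\partial a}\, \sigma'(z) = a - y,$$

whereas the quadratic cost $C = \tfrac{1}{2}(a - y)^2$ keeps the factor: $\delta = (a - y)\, \sigma'(z)$.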

A supplementary note on using exp() in Eigen:

```cpp
MatrixXd sigmoid(MatrixXd z) {
    MatrixXd zt;
    zt = (-z).array().exp().matrix();    // element-wise e^{-z}
    MatrixXd o(z.rows(), z.cols());
    o.setOnes();
    return (o + zt).cwiseInverse();      // 1 / (1 + e^{-z})
}

MatrixXd sigmoid_prime(MatrixXd z) {

    MatrixXd o(z.rows(), z.cols());
    o.setOnes();
    return sigmoid(z).cwiseProduct(o - sigmoid(z));    // s(z) * (1 - s(z))
}
```
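A scalar version of the same two functions can serve as a reference when checking the matrix code entry by entry. This is only a sketch: the names `sigmoid_scalar` and `sigmoid_prime_scalar` are hypothetical and do not appear in the original project.

```cpp
#include <cmath>

// Logistic function 1 / (1 + e^{-z}) for a single value.
double sigmoid_scalar(double z) {
    return 1.0 / (1.0 + std::exp(-z));
}

// Derivative of the logistic function: s(z) * (1 - s(z)).
double sigmoid_prime_scalar(double z) {
    double s = sigmoid_scalar(z);
    return s * (1.0 - s);
}
```

At z = 0 the function value is 0.5 and the derivative is 0.25, which makes a quick sanity check for either version.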

In Eigen, matrix (MatrixXd) multiplication simply uses `*`, while element-wise multiplication uses cwiseProduct().

Similarly, cwiseInverse() takes the reciprocal of each element.