Learning Multiple Tasks with Multilinear Relationship Networks

After the cross-stitch unit paper, this is the second paper I read on the feature transformation approach.

This paper divides multi-task learning methods into two categories: 1) multi-task feature learning, which learns a shared feature representation;

2) multi-task relationship learning, which models the inherent task relationships.

1. Motivation

How can we exploit the task relatedness underlying the parameter tensors and improve feature transferability in the multiple task-specific layers?

2. Multilinear Relationship Network (MRN)

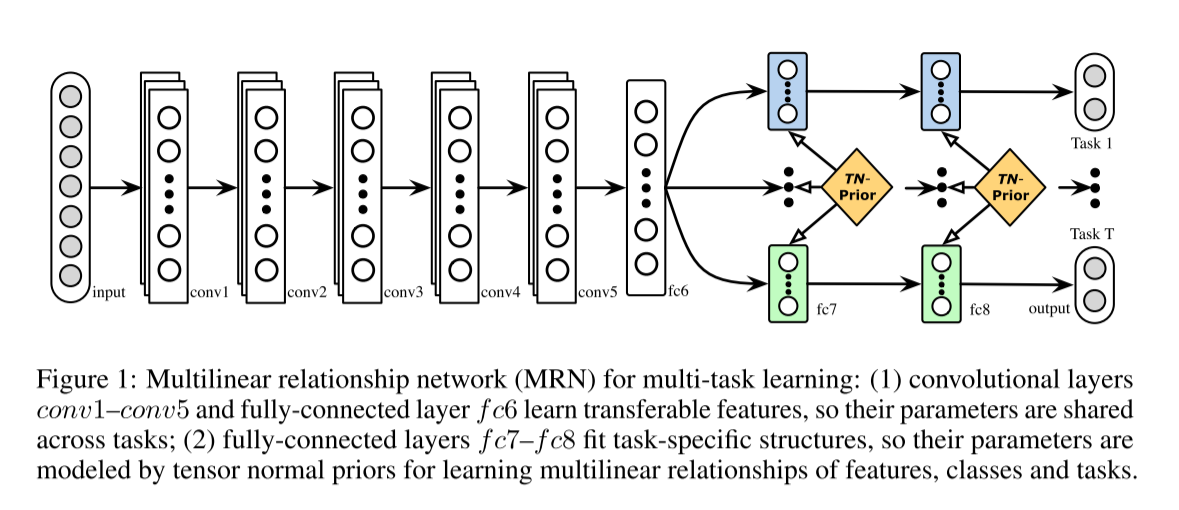

MRN integrates deep neural networks with tensor normal priors over the network parameters of all task-specific layers. These priors model task relatedness through covariance structures over tasks, classes, and features, which enables transfer across related tasks. By jointly learning transferable features and multilinear relationships, MRN is able to circumvent the dilemma of negative transfer in the feature layers and under-transfer in the classifier layer.

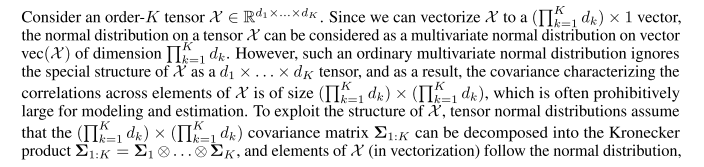

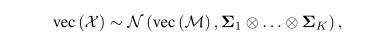

2.1 Tensor Normal Distribution

Probability Density Function

Furthermore:

![]()

![]()

![]()

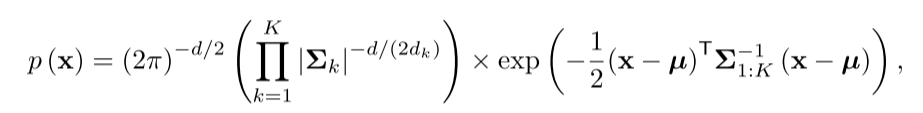
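To make the tensor normal density concrete, here is a minimal NumPy sketch. It assumes the usual form in which the flattened tensor is Gaussian with a Kronecker-structured covariance, and it assumes row-major (C-order) flattening so that the covariance is `kron(covs[0], kron(covs[1], ...))`. The names `mode_product` and `tensor_normal_logpdf` are my own, not from the paper.

```python
import numpy as np

def mode_product(X, A, k):
    """Multiply tensor X by matrix A along axis k."""
    return np.moveaxis(np.tensordot(A, X, axes=(1, k)), 0, k)

def tensor_normal_logpdf(X, M, covs):
    """Log-density of X under a tensor normal with mean M and one covariance per mode.

    Assumption: vec(X) ~ N(vec(M), kron(covs[0], ..., covs[-1])) with C-order vec.
    """
    d = X.size
    R = X - M
    Z = R
    logdet = 0.0
    for k, S in enumerate(covs):
        # Apply the inverse covariance of mode k along axis k.
        Z = mode_product(Z, np.linalg.inv(S), k)
        # log|kron(S_1, ..., S_K)| = sum_k (d / d_k) * log|S_k|
        logdet += (d / S.shape[0]) * np.linalg.slogdet(S)[1]
    quad = float(np.sum(R * Z))  # vec(R)^T Sigma^{-1} vec(R)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + quad)
```

The point of the factorized form is that only the small per-mode covariances are ever inverted; the full Kronecker covariance is never built.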

Maximum Likelihood Estimation

![]()

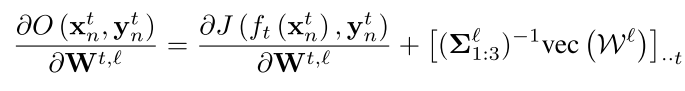
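The maximum likelihood estimate of each mode covariance depends on the other covariances, so it is usually computed by cycling through the modes (often called a flip-flop scheme). The following is a minimal sketch of one such update, assuming a zero mean, a single observed tensor `W`, and C-order unfolding. The function names and the optional ridge term `eps` are my own assumptions for illustration.

```python
import numpy as np

def unfold(X, k):
    """Mode-k unfolding: rows index axis k, columns index all other axes."""
    return np.moveaxis(X, k, 0).reshape(X.shape[k], -1)

def mode_product(X, A, k):
    """Multiply tensor X by matrix A along axis k."""
    return np.moveaxis(np.tensordot(A, X, axes=(1, k)), 0, k)

def update_covariance(W, covs, k, eps=0.0):
    """Update covs[k] with all other mode covariances held fixed.

    Computes W_(k) * inv(kron of the other covariances) * W_(k)^T / d_rest,
    where d_rest is the product of all dimensions except d_k.
    """
    Z = W
    for j, S in enumerate(covs):
        if j != k:
            Z = mode_product(Z, np.linalg.inv(S), j)
    d_rest = W.size // W.shape[k]
    S_new = unfold(W, k) @ unfold(Z, k).T / d_rest
    # eps > 0 adds a small ridge so the estimate stays invertible.
    return S_new + eps * np.eye(W.shape[k])
```

Calling `update_covariance` for each `k` in turn, and repeating, gives the alternating estimate. With a single tensor the raw estimate can be rank-deficient, which is why a small `eps` is often useful.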

2.2 Multilinear Relationship Networks

2.3 Model

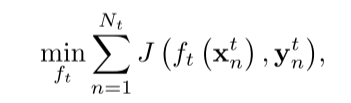

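The empirical error of the CNN on $\{\mathcal{X}_t, \mathcal{Y}_t\}$ is

$$\min_{f_t} \sum_{n=1}^{N_t} J\left(f_t(x_n^t), y_n^t\right)$$

where $J$ is the cross-entropy loss function, $N_t$ is the number of training examples of task $t$, and $f_t(x_n^t)$ is the conditional probability that the CNN assigns $x_n^t$ to label $y_n^t$.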
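As a small illustration of this per-task empirical error, the sketch below sums the cross-entropy loss over one task's examples. It assumes `probs` already holds the network's predicted class probabilities (one row per example) and `labels` holds integer class indices; both names are hypothetical.

```python
import numpy as np

def empirical_error(probs, labels):
    """Sum of cross-entropy losses over one task's training examples."""
    # Probability the model assigns to the true label of each example.
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.sum(np.log(picked)))
```

In the multi-task setting this quantity would be computed once per task and summed over tasks.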

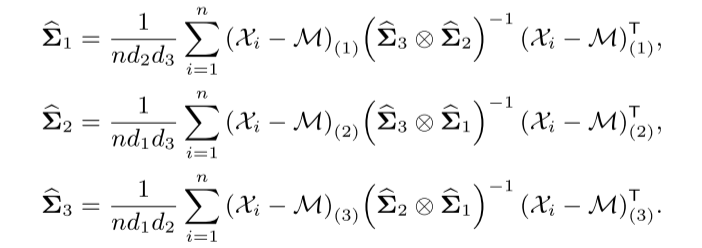

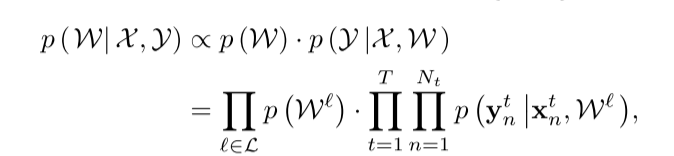

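In order to capture the task relationship in the network parameters of all $T$ tasks, we construct the $l$-th layer parameter tensor as $\mathcal{W}^{l} = [W^{1,l}; \ldots; W^{T,l}] \in \mathbb{R}^{D_1^{l} \times D_2^{l} \times T}$. Let $\mathcal{L} = \{fc7, fc8\}$ denote the set of task-specific layers, and let $\mathcal{W} = \{\mathcal{W}^{l} : l \in \mathcal{L}\}$ be the set of their parameter tensors.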
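The construction of the parameter tensor can be sketched as a simple stacking operation. The sizes `D1`, `D2`, `T` and the random weights below are placeholders chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
D1, D2, T = 5, 3, 4  # hypothetical sizes: features, classes, tasks

# One (D1 x D2) weight matrix per task for a single task-specific layer.
task_weights = [rng.standard_normal((D1, D2)) for _ in range(T)]

# Stack along a new last axis so that W[:, :, t] is the matrix of task t.
W = np.stack(task_weights, axis=-1)
```

The three axes of `W` then correspond to features, classes, and tasks, which are the three modes that receive their own covariance matrix.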

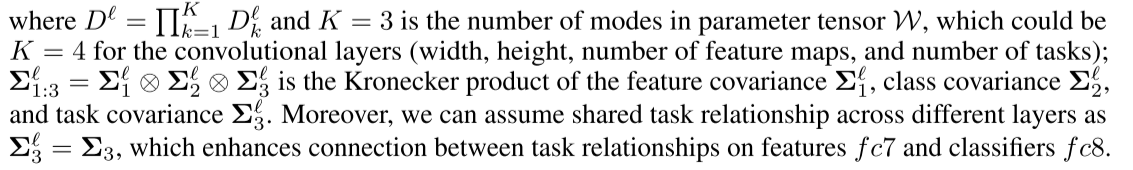

MRN defines the prior for the $l$-th layer parameter tensor by a tensor normal distribution as

![]()

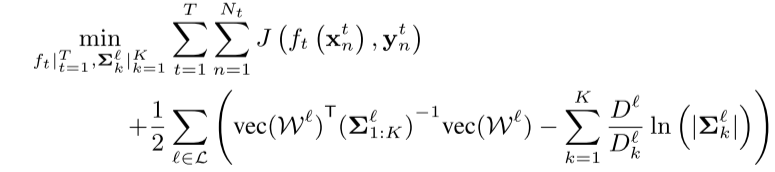
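For reference, a tensor normal prior of this kind and the regularizer it induces can be written as follows. This is my own reconstruction from the generic tensor normal density, assuming a zero mean $O$ and a vec convention under which the covariance is $\Sigma_1^{l} \otimes \Sigma_2^{l} \otimes \Sigma_3^{l}$, with $D^{l} = D_1^{l} D_2^{l} D_3^{l}$ and $D_3^{l} = T$. The paper's own equations may order or scale the terms differently, so check them against the original.

```latex
p(\mathcal{W}^{l}) = \mathcal{TN}_{D_1^{l} \times D_2^{l} \times T}
  \left(O, \Sigma_1^{l}, \Sigma_2^{l}, \Sigma_3^{l}\right)

-\ln p(\mathcal{W}^{l}) =
  \frac{1}{2} \operatorname{vec}(\mathcal{W}^{l})^{\top}
  \left(\Sigma_1^{l} \otimes \Sigma_2^{l} \otimes \Sigma_3^{l}\right)^{-1}
  \operatorname{vec}(\mathcal{W}^{l})
  + \frac{1}{2} \sum_{k=1}^{3} \frac{D^{l}}{D_k^{l}} \ln \left|\Sigma_k^{l}\right|
  + \text{const}
```

Adding this negative log-prior to the empirical error over all tasks gives a maximum a posteriori objective in which the covariances act as learned, structured weight-decay terms.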

The Multilinear Relationship Network (MRN) is formally written as

(1)

3. Algorithm

Problem (1) is jointly non-convex with respect to the parameter tensors $\mathcal{W}$ as well as the feature covariance $\Sigma_1^{l}$, class covariance $\Sigma_2^{l}$, and task covariance $\Sigma_3^{l}$. Thus, we alternately optimize one set of variables with the others fixed.

![]()

![]()
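The step that updates the parameter tensor with the covariances held fixed can be sketched as a gradient step on the data loss plus the quadratic prior penalty. This is only an illustration under assumptions: the penalty is taken to be `0.5 * vec(W)^T inv(kron(covs)) vec(W)` with C-order flattening, `data_grad` stands in for the back-propagated loss gradient, and all function names and the learning rate are hypothetical.

```python
import numpy as np

def mode_product(X, A, k):
    """Multiply tensor X by matrix A along axis k."""
    return np.moveaxis(np.tensordot(A, X, axes=(1, k)), 0, k)

def penalty_grad(W, covs):
    """Gradient of the quadratic penalty: inverse covariances applied along each mode."""
    G = W
    for k, S in enumerate(covs):
        G = mode_product(G, np.linalg.inv(S), k)
    return G

def penalty(W, covs):
    """0.5 * vec(W)^T inv(kron(covs[0], covs[1], covs[2])) vec(W)."""
    return 0.5 * float(np.sum(W * penalty_grad(W, covs)))

def w_step(W, data_grad, covs, lr=0.01):
    """One gradient step on W with the covariances held fixed."""
    return W - lr * (data_grad + penalty_grad(W, covs))
```

After a number of such steps, the covariances would be re-estimated with `W` fixed, and the two phases alternate until convergence.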

4. Discussion

1. Learning with feature covariances can be viewed as a representative formulation of feature-based methods.

This can be viewed as a special case of Equation (1), obtained by setting all covariance matrices except the feature covariance to the identity matrix, i.e. $\Sigma_k = I$ for $k = 2, \ldots, K$.

2. Learning with task relations is the representative formulation of parameter-based methods.

This can be viewed as a special case of Equation (1), obtained by setting all covariance matrices except the task covariance to the identity matrix, i.e. $\Sigma_k = I$ for $k = 1, \ldots, K-1$.

The proposed MRN is more general in the architecture perspective in dealing with parameter tensors in multiple layers of deep neural networks.
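These special cases can be checked numerically on a small example. The sketch below assumes the quadratic part of the prior is `0.5 * vec(W)^T inv(kron(Sigma_1, Sigma_2, Sigma_3)) vec(W)` with C-order flattening; the helper `penalty_dense`, the sizes, and the random covariances are all hypothetical and only meant for illustration.

```python
import numpy as np

def penalty_dense(W, covs):
    """Quadratic prior penalty, built with a dense Kronecker product (small shapes only)."""
    S = covs[0]
    for C in covs[1:]:
        S = np.kron(S, C)
    w = W.reshape(-1)
    return 0.5 * float(w @ np.linalg.solve(S, w))

rng = np.random.default_rng(0)
D1, D2, T = 3, 2, 4
W = rng.standard_normal((D1, D2, T))
I1, I2, I3 = np.eye(D1), np.eye(D2), np.eye(T)

# All covariances identity: plain weight decay, 0.5 * ||W||_F^2.
decay = penalty_dense(W, [I1, I2, I3])

# Only the feature covariance is kept: 0.5 * tr(inv(Sigma_1) W_(1) W_(1)^T).
B = rng.standard_normal((D1, D1))
Sigma1 = B @ B.T + D1 * np.eye(D1)
W1 = W.reshape(D1, -1)                      # mode-1 unfolding
feat_only = penalty_dense(W, [Sigma1, I2, I3])
feat_trace = 0.5 * np.trace(np.linalg.solve(Sigma1, W1 @ W1.T))

# Only the task covariance is kept: 0.5 * tr(inv(Sigma_3) W_(3) W_(3)^T).
A = rng.standard_normal((T, T))
Sigma3 = A @ A.T + T * np.eye(T)
W3 = W.reshape(-1, T).T                     # mode-3 unfolding
task_only = penalty_dense(W, [I1, I2, Sigma3])
task_trace = 0.5 * np.trace(np.linalg.solve(Sigma3, W3 @ W3.T))
```

In each case the Kronecker-structured penalty collapses to a trace regularizer on a single unfolding of `W`, which is the form used by feature-based and task-relationship methods respectively.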

This paper centers on how to exploit the task relatedness underlying parameter tensors and improve feature transferability in the multiple task-specific layers. The method learns task relationships from the multiple task-specific layers and uses these relationships to learn transferable features.

From the tensor normal distribution, one can derive the maximum a posteriori estimate of the task relationship, which is then updated using the training data.
