16. Big Data Platform and Component Installation and Deployment

Lab Task 1: Hadoop Cluster Verification

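Once the distributed cluster has been built, it can be verified through Hadoop's two core components, the HDFS distributed file system and MapReduce. The following steps test the Hadoop cluster:

(1) Initialize the cluster and start it with the Hadoop commands.

(2) Use a Hadoop command to create an HDFS directory.

(3) Use an HDFS command to check whether any files exist under the file system root path "/".

(4) Run the WordCount program that ships with Hadoop to test MapReduce, and check that the console reports the correct word counts.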
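
The four checks above can be sketched as one small shell function. This is only an illustration under assumptions that the original does not state: Hadoop is installed under /usr/local/src/hadoop (the HADOOP_MAPRED_HOME value that appears later in the Sqoop log), the NameNode has already been formatted, and the /input and /output paths, the sample file, and the function name are hypothetical.

```shell
#!/bin/sh
# Sketch of the four verification steps. Assumed (not from the original):
# the HADOOP_HOME location, the /input and /output paths, and the sample file.
HADOOP_HOME="${HADOOP_HOME:-/usr/local/src/hadoop}"

hadoop_smoke_test() {
    # (1) Start HDFS and YARN.
    start-dfs.sh && start-yarn.sh || return 1

    # (2) Create an HDFS directory and upload a small text file.
    hdfs dfs -mkdir -p /input || return 1
    printf 'hello hadoop\nhello hdfs\n' > /tmp/words.txt
    hdfs dfs -put -f /tmp/words.txt /input/ || return 1

    # (3) List the root of the file system.
    hdfs dfs -ls / || return 1

    # (4) Run the bundled WordCount example; /output must not exist yet.
    hadoop jar "$HADOOP_HOME"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar \
        wordcount /input /output || return 1
    hdfs dfs -cat /output/part-r-00000
}
```

Calling hadoop_smoke_test on the master node as the hadoop user should end by printing each word with its count. Remove /output with hdfs dfs -rm -r /output before running the function a second time.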

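Step 1: Test Details

Hadoop provides many built-in monitoring features. They make it possible to judge whether the fully distributed cluster has been set up. The following steps check whether the cluster was built successfully:

(1) Use jps to view the processes started on each node. If every expected process is running, the system started normally.

Processes on master (master node)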

[hadoop@master ~]# jps

5825 NameNode

6021 SecondaryNameNode

6453 Jps

6169 ResourceManager

Processes on slave1 (worker node)

[hadoop@slave1 ~]# jps

2928 Jps

2640 NodeManager

2542 DataNode

Processes on slave2 (worker node)

[hadoop@slave2 ~]# jps

2673 Jps

2771 NodeManager

3036 DataNode
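
Reading three jps listings by eye is easy to get wrong, so the comparison can be scripted. The helper below is a minimal sketch, and its name is hypothetical. It reads jps output on standard input and prints every expected daemon that is missing, matching the process name exactly so that SecondaryNameNode is not mistaken for NameNode.

```shell
#!/bin/sh
# Print every expected daemon that is missing from jps output read on stdin.
# Usage: jps | missing_daemons NameNode SecondaryNameNode ResourceManager
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <main class>"; compare the second field exactly.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            printf '%s\n' "$daemon"
        fi
    done
}
```

On master, run jps | missing_daemons NameNode SecondaryNameNode ResourceManager. On slave1 and slave2, run jps | missing_daemons DataNode NodeManager. Empty output means every expected process is running.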

(2) View the Hadoop web monitoring pages.

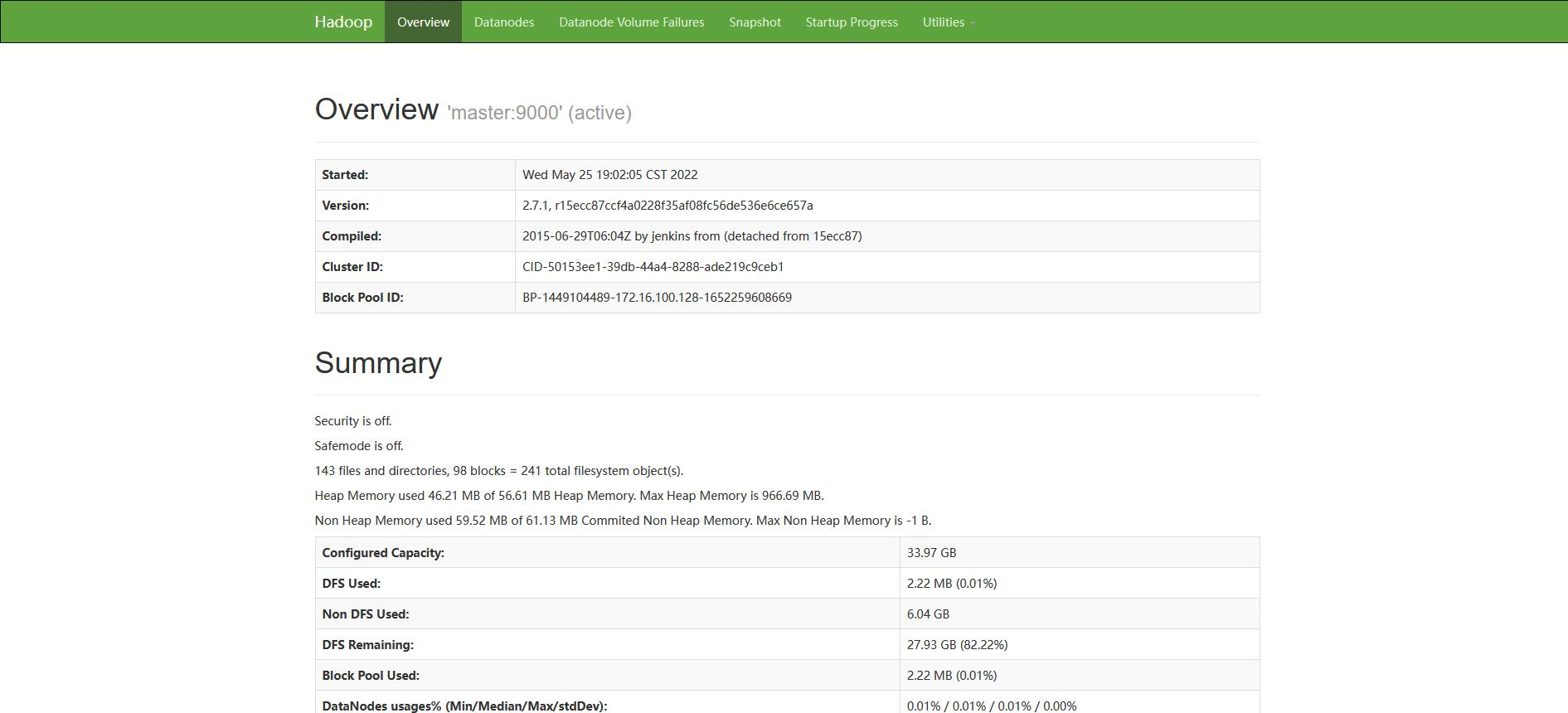

Open http://master:50070 on the master node in a browser and check the NameNode status. If the status page is displayed, the system started normally.

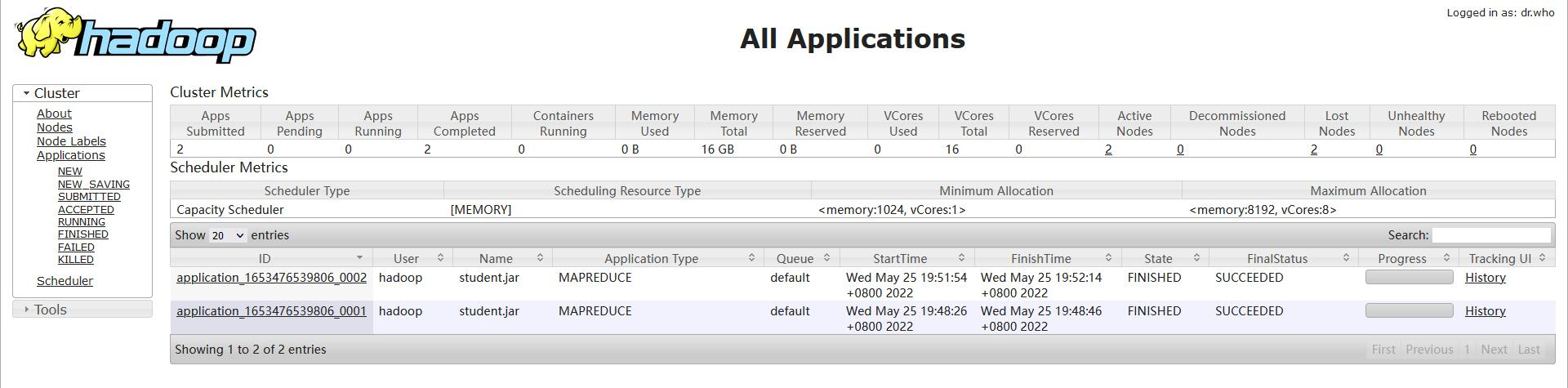

Open http://master:8088 on the master node in a browser and view All Applications. If the page is displayed, the system started normally.

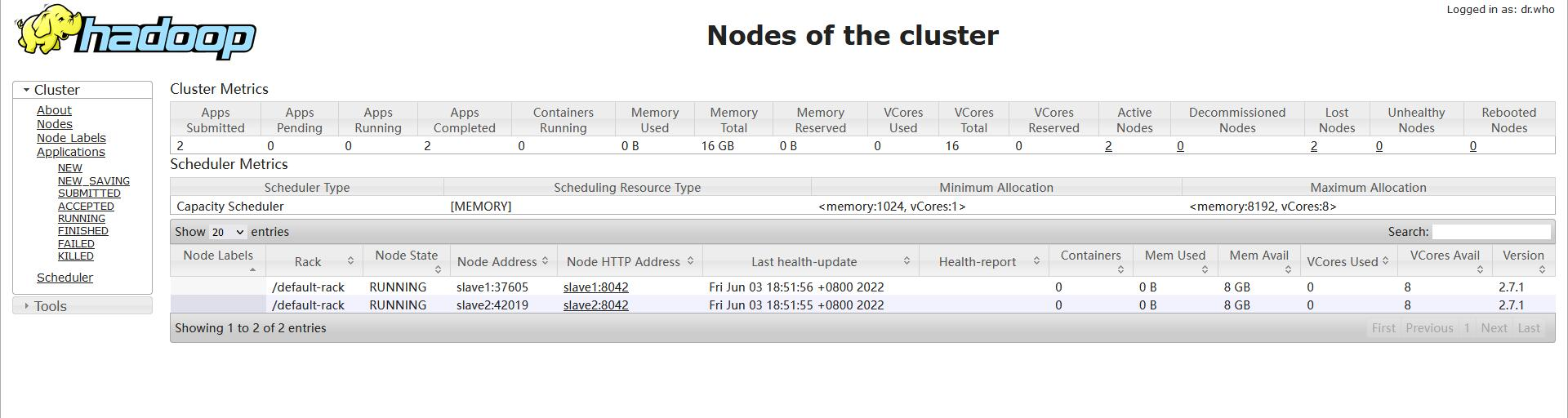

Browse the Nodes page. If the nodes are listed, the system started normally.
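
When no browser is available, the same two pages can be probed from the command line. The following is a sketch that assumes curl is installed and that the host name master resolves; the function names are hypothetical. Port 50070 is the NameNode web port in Hadoop 2.x (Hadoop 3.x moved it to 9870), and /cluster/nodes is the ResourceManager Nodes page.

```shell
#!/bin/sh
# Print the HTTP status code returned by a URL (curl prints 000 on failure).
http_status() {
    curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Check the NameNode and ResourceManager web UIs on the given host.
check_web_ui() {
    host="${1:-master}"
    for url in "http://$host:50070" "http://$host:8088/cluster/nodes"; do
        code=$(http_status "$url") || code=000
        case "$code" in
            # Accept success and redirect codes; the root URL may redirect.
            2??|3??) echo "OK   $url ($code)" ;;
            *)       echo "FAIL $url ($code)"; return 1 ;;
        esac
    done
}
```

Running check_web_ui master prints one line per page and returns a non-zero status at the first page that does not answer.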

(3) Shut down the cluster with the Hadoop command.

Shut down the Hadoop cluster with the following command. Output like the following indicates that the system shut down normally.

[hadoop@master ~]# stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [master]

master: no namenode to stop

202.106.155.58: datanode to stop

202.106.155.59: datanode to stop

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode to stop

stopping yarn daemons

resourcemanager to stop

202.106.155.58: nodemanager to stop

202.106.155.59: nodemanager to stop
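
The console text alone does not prove that the daemons are gone. In Hadoop 2.x the stop scripts typically print "stopping namenode" for a daemon they stop and "no namenode to stop" when that daemon was not running, so it is worth confirming the result with jps. The helper below is a sketch with a hypothetical name. It reads jps output and lists any Hadoop daemons that are still alive.

```shell
#!/bin/sh
# Read jps output on stdin and print any Hadoop daemons that are still running.
# Exit status is 0 when none remain and 1 otherwise.
running_hadoop_daemons() {
    awk '
        $2 ~ /^(NameNode|SecondaryNameNode|DataNode|ResourceManager|NodeManager)$/ {
            print $2
            n++
        }
        END { exit (n > 0) }
    '
}
```

After stop-yarn.sh and stop-dfs.sh (the replacements named in the deprecation notice), running jps | running_hadoop_daemons on each node should print nothing and return 0.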

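Experiment 2: Sqoop Component Deployment

Lab Task 1: Sqoop Data Transfer Verification

Step 1: Check the Sqoop Version

If the Sqoop version command reports version 1.4.7, Sqoop has been deployed successfully. A successful deployment prints the following:

[hadoop@master ~]# sqoop version

Please set $HCAT_HOME to the root of your HCatalog installation.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.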

20/04/30 14:30:16 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017
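
The version check can also be automated by extracting the number from the line of the form "Sqoop 1.4.7". This is a small sketch; the function name is hypothetical.

```shell
#!/bin/sh
# Read `sqoop version` output on stdin and print the version number,
# taken from the line that consists of "Sqoop <version>".
sqoop_version_from_output() {
    sed -n 's/^Sqoop \([0-9][0-9.]*\)$/\1/p'
}
```

For example, [ "$(sqoop version 2>&1 | sqoop_version_from_output)" = "1.4.7" ] && echo "Sqoop deployed" prints the message only when the expected version is installed.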

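Step 2: Connect Sqoop to the MySQL Database

Sqoop requires a running Hadoop cluster. To judge whether the installation succeeded, use Sqoop to connect to MySQL and list its databases, then check that the last lines of the console output are the databases in MySQL.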

[hadoop@master ~]# sqoop list-databases --connect jdbc:mysql://127.0.0.1:3306/ --username root -P

Warning: /opt/sofeware/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/sofeware/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/sofeware/sqoop/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/04/22 18:54:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

19/04/22 18:54:14 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema

sys

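Step 3: Export HDFS Data to MySQL with Sqoop

(1) Use a Sqoop command to export the data under "/user/test" in HDFS into MySQL (the HDFS source data has already been uploaded to the big data platform, and the MySQL database and table were created in Chapter 9).

Delete the existing rows from the student table: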

[hadoop@master hadoop]$ mysql -u root -p

Enter password:

mysql> use sample

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from student;

+--------+----------+

| number | name |

+--------+----------+

| 01 | zhangsan |

| 02 | lisi |

| 03 | wangwu |

+--------+----------+

3 rows in set (0.00 sec)

mysql> delete from student;

Query OK, 3 rows affected (0.00 sec)

mysql> select * from student;

Empty set (0.00 sec)

mysql> exit

Bye

Export the HDFS data to MySQL:

[hadoop@master ~]$ sqoop export --connect "jdbc:mysql://master:3306/sample?useUnicode=true&characterEncoding=utf-8" --username root --password Password123! --table student --input-fields-terminated-by ',' --export-dir /user/test

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

22/06/03 15:46:08 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

22/06/03 15:46:08 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

22/06/03 15:46:08 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

22/06/03 15:46:08 INFO tool.CodeGenTool: Beginning code generation

Fri Jun 03 15:46:08 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

22/06/03 15:46:09 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student` AS t LIMIT 1

22/06/03 15:46:09 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student` AS t LIMIT 1

22/06/03 15:46:09 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/src/hadoop

Note: /tmp/sqoop-hadoop/compile/2baf54fd43010519351abe6800dafe26/student.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

22/06/03 15:46:11 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/2baf54fd43010519351abe6800dafe26/student.jar

22/06/03 15:46:11 INFO mapreduce.ExportJobBase: Beginning export of student

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

22/06/03 15:46:11 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

22/06/03 15:46:11 WARN mapreduce.ExportJobBase: Input path hdfs://master:9000/user/test does not exist

22/06/03 15:46:11 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

22/06/03 15:46:11 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

22/06/03 15:46:11 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

22/06/03 15:46:11 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

22/06/03 15:46:13 INFO mapreduce.JobSubmitter: Cleaning up the staging area /tmp/hadoop-yarn/staging/hadoop/.staging/job_1654240081881_0001

22/06/03 15:46:13 ERROR tool.ExportTool: Encountered IOException running export job:

org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://master:9000/user/test
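
The log captured above ends with InvalidInputException because hdfs://master:9000/user/test did not exist when the command ran, so that particular run exported nothing. A guard along the following lines can catch the problem before the MapReduce job is submitted. It is a sketch rather than the original procedure. The function name is hypothetical, -P replaces --password as the Sqoop warning in the log suggests, and useSSL=false is added to the JDBC URL as one of the two options offered by the SSL warning.

```shell
#!/bin/sh
# Export an HDFS directory to the MySQL table sample.student, but only if
# the directory exists. export_student is a hypothetical helper name.
export_student() {
    export_dir="${1:-/user/test}"

    # `hdfs dfs -test -d` exits 0 only when the path is an existing directory.
    if ! hdfs dfs -test -d "$export_dir"; then
        echo "HDFS path $export_dir does not exist; upload the data first" >&2
        return 1
    fi

    # -P prompts for the password instead of exposing it on the command line.
    sqoop export \
        --connect "jdbc:mysql://master:3306/sample?useUnicode=true&characterEncoding=utf-8&useSSL=false" \
        --username root -P \
        --table student \
        --input-fields-terminated-by ',' \
        --export-dir "$export_dir"
}
```

If the guard fails, upload the comma-separated source file to /user/test with hdfs dfs -mkdir -p and hdfs dfs -put, then call export_student again.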

(2) Use MySQL commands to check whether the data was loaded into MySQL successfully.

[hadoop@master ~]# mysql -u root -pPassword123!

mysql> use sample;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from student;

+--------+----------+

| number | name |

+--------+----------+

| 1 | zhangsan |

| 2 | lisi |

| 3 | wangwu |

| 4 | sss |

| 5 | ss2 |

| 6 | ss3 |

+--------+----------+

6 rows in set (0.00 sec)
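
Because the student table was emptied before the export, a successful run should leave it with exactly as many rows as there are lines under /user/test. The comparison can be scripted as below. This is a sketch under assumptions: the function name is hypothetical, each record is one newline-terminated line, and the MySQL credentials come from an option file such as ~/.my.cnf so that no password appears on the command line.

```shell
#!/bin/sh
# Compare the number of lines under an HDFS directory with the number of
# rows in sample.student. verify_export is a hypothetical helper name.
verify_export() {
    export_dir="${1:-/user/test}"

    # The glob is quoted so that HDFS, not the local shell, expands it.
    hdfs_rows=$(hdfs dfs -cat "$export_dir/*" | wc -l | tr -d ' ')

    # -N suppresses the column header, -B selects plain batch output.
    mysql_rows=$(mysql -u root -N -B -e 'SELECT COUNT(*) FROM sample.student')

    if [ "$hdfs_rows" -eq "$mysql_rows" ]; then
        echo "OK: $mysql_rows rows"
    else
        echo "MISMATCH: HDFS=$hdfs_rows MySQL=$mysql_rows" >&2
        return 1
    fi
}
```

With the six-row result shown above, verify_export /user/test would print "OK: 6 rows" only if the HDFS source also holds six lines.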

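Experiment 3: Hive Component Deployment

Lab Task 1: Hive Component Verification

Step 1: Initialize Hive

With the Hadoop cluster running, run the Hive schema initialization command and review the log output on the console.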

[hadoop@master ~]# schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver: com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.0.0

Initialization script hive-schema-2.0.0.mysql.sql

Initialization script completed

schemaTool completed
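
The line to look for in this log is the final "schemaTool completed". A scripted check could be sketched as follows; the function name is hypothetical.

```shell
#!/bin/sh
# Succeeds when schematool output read on stdin contains the success marker.
schema_init_succeeded() {
    grep -q '^schemaTool completed'
}
```

For example, schematool -dbType mysql -initSchema 2>&1 | tee init.log | schema_init_succeeded keeps the full log in init.log and returns 0 only on success. Initialization is meant to run once, so for a later read-only check schematool -dbType mysql -info prints the recorded schema version instead.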

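Step 2: Start Hive

Because Hive is a component of the Hadoop ecosystem, it is enough to test whether Hive starts normally. With Hadoop running, run the hive command and check the startup messages.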

[hadoop@master ~]# hive

Logging initialized using configuration in jar:file:/opt/hive/lib/hive-common-1.1.0.jar!/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hive/lib/hive-jdbc-1.1.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

hive>
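
Reaching the hive> prompt shows that the CLI starts. Running one statement non-interactively also confirms that it can talk to the metastore. The following is a minimal sketch with a hypothetical function name. It relies on the built-in database named default being present.

```shell
#!/bin/sh
# Run one statement non-interactively and check that the built-in
# "default" database is listed.
hive_smoke_test() {
    # -S (silent) suppresses informational messages; -e runs the quoted HiveQL.
    hive -S -e 'SHOW DATABASES;' 2>/dev/null | grep -qx 'default'
}
```

Running hive_smoke_test && echo "Hive is working" prints the message only when the query returns the default database.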
