1-监控界面sql保存

1, BufferSize_machine

1), template

主要用来监控buffersize的状态的

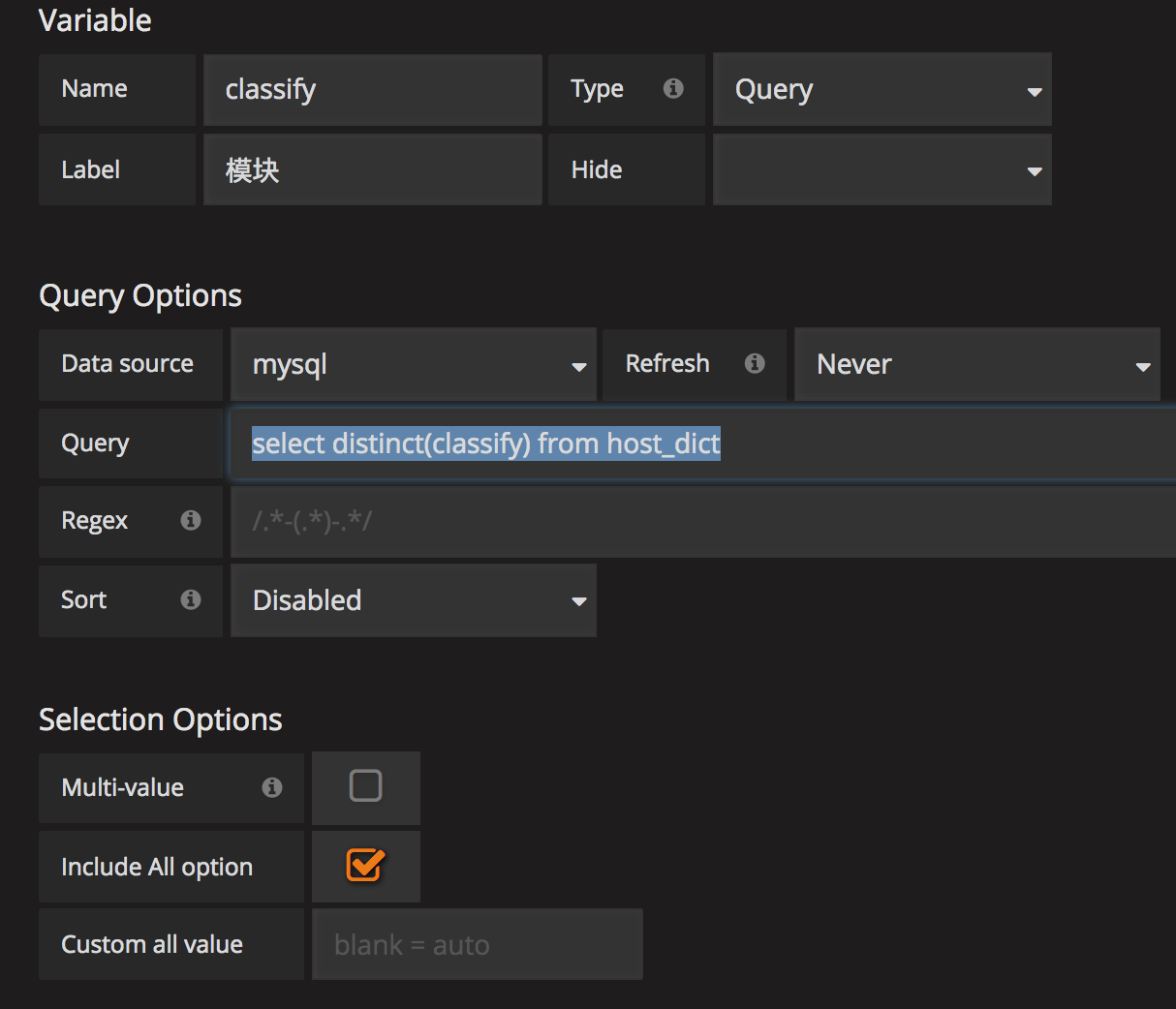

name: 模块名字, 用于后续调取使用,

label: 模块显示名字, 在页面显示的

includeAll: 是否包含 all 按钮

query: 查询的sql语句, 由于模版一致, 所以后续只保留sql

classify:

select distinct(classify) from host_dict

model_name

select distinct(model_name) from host_dict where classify in ($classify)

2) KafkaSinkNetword

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "BufferSize" AND object = 'KafkaSinkNetwork' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, host

3), KafkaSinkFile

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "BufferSize" AND object = 'KafkaSinkFile' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, host

4), FileSink

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "BufferSize" AND object = 'FileSink' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, host

5), MessageCopy

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "BufferSize" AND object = 'MessageCopy' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, host

2, BufferSizeTopic

1) BufferSize长度

SELECT UNIX_TIMESTAMP(time) as time_sec, sum(value) as value, object as metric FROM jmx_status WHERE $__timeFilter(time) AND attribute = 'BufferSize' GROUP BY object, time ORDER BY time ASC, metric

3, Metric_machine

1), template

Classify

select distinct(classify) from host_dict

model_name

select distinct(model_name) from host_dict where classify in ($classify)

source_file

select distinct(component) from topic_count where component like '%Source'

kafka_file

select distinct(component) from topic_count where component like 'Kafka%';

2), 接受消息总量

SELECT UNIX_TIMESTAMP(tc.time) as time_sec, tc.host as metric, SUM(out_num) as value FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic left join host_dict as hd on hd.innet_ip = tc.host WHERE $__timeFilter(time) AND tc.component in ($source_file) AND hd.classify in ($classify) and hd.model_name in ($model_name) GROUP BY tc.host, tc.time ORDER BY tc.time ASC, tc.host

3), 发送kafka消息总量

SELECT UNIX_TIMESTAMP(tc.time) as time_sec, tc.host as metric, SUM(in_num - out_num) as value FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic left join host_dict as hd on hd.innet_ip = tc.host WHERE $__timeFilter(time) AND tc.component in ($kafka_file) AND hd.classify in ($classify) and hd.model_name in ($model_name) GROUP BY tc.host, tc.time ORDER BY tc.time ASC, tc.host

4), 发送kafka消息失败量

SELECT UNIX_TIMESTAMP(tc.time) as time_sec, tc.host as metric, SUM(out_num) as value FROM topic_count as tc left join host_dict as hd on hd.innet_ip = tc.host WHERE $__timeFilter(time) AND (tc.component = 'KafkaSinkNetwork' OR tc.component = 'KafkaSinkFile') AND hd.classify in ($classify) and hd.model_name in ($model_name) GROUP BY tc.host, tc.time ORDER BY tc.time ASC, tc.host

4, Metric_topic

1), template

component_name

select distinct(component_name) from topic_dict

topic

select distinct(topic) from topic_dict where component_name in ($component_name)

source_file

select distinct(component) from topic_count where component like '%Source'

kafka_file

select distinct(component) from topic_count where component like 'Kafka%';

2), iris接收总量

SELECT UNIX_TIMESTAMP(tc.time) as time_sec, tc.topic as metric, SUM(out_num) as value FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic WHERE $__timeFilter(time) AND tc.topic in ($topic) AND tc.component in ($source_file) GROUP BY tc.topic, tc.time ORDER BY tc.time ASC, tc.topic

3), 发送kafka消息总量

SELECT UNIX_TIMESTAMP(time) as time_sec, tc.topic as metric, SUM(in_num - out_num) as value FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic WHERE $__timeFilter(time) AND tc.topic in ($topic) AND tc.component in ($kafka_file) GROUP BY tc.topic, tc.time ORDER BY tc.time ASC, tc.topic

4), 发送kafka消息失败量

SELECT UNIX_TIMESTAMP(time) as time_sec, sum(out_num) as value, topic as metric FROM topic_count WHERE $__timeFilter(time) AND (component = 'KafkaSinkNetwork' OR component = 'KafkaSinkFile') AND topic in ($topic) GROUP BY topic, time ORDER BY time ASC, metric

5), 消息丢失数

SELECT UNIX_TIMESTAMP(time) as time_sec, (temp.value - SUM(tc.in_num - tc.out_num)) as value, tc.topic as metric FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic right join ( SELECT tc2.time as calen, tc2.topic, SUM(out_num) as value FROM topic_count as tc2 left join topic_dict as td2 on tc2.topic = td2.topic WHERE $__timeFilter(tc2.time) AND tc2.topic in ($topic) AND tc2.component in ($source_file) AND tc2.component <> 'FileSource' GROUP BY tc2.topic, tc2.time ) as temp on temp.calen = tc.time and temp.topic = tc.topic WHERE $__timeFilter(time) AND tc.topic in ($topic) AND tc.component in ($kafka_file) GROUP BY tc.time, tc.topic ORDER BY tc.topic, tc.time asc

6), 验平汇总, 此为表格

SELECT date_format(time, '%Y-%m-%d %H:%i:%s') as time, tc.topic, temp.value as iris总接受量, SUM(tc.in_num - tc.out_num) as kafka发送成功, SUM(tc.out_num) as kafka发送失败, (temp.value - SUM(tc.in_num - tc.out_num)) as 消息丢失数 FROM topic_count as tc left join topic_dict as td on tc.topic = td.topic right join ( SELECT tc2.time as calen, tc2.topic, SUM(out_num) as value FROM topic_count as tc2 left join topic_dict as td2 on tc2.topic = td2.topic WHERE $__timeFilter(tc2.time) AND tc2.topic in ($topic) AND tc2.component in ($source_file) AND tc2.component <> 'FileSource' GROUP BY tc2.topic, tc2.time ) as temp on temp.calen = tc.time and temp.topic = tc.topic WHERE $__timeFilter(time) AND tc.topic in ($topic) AND tc.component in ($kafka_file) GROUP BY tc.time, tc.topic ORDER BY tc.topic, tc.time asc

5, QPS_Component

qps

SELECT UNIX_TIMESTAMP(time) as time_sec, sum(value) as value, object as metric FROM jmx_status WHERE $__timeFilter(time) AND attribute = 'QPS' GROUP BY object, time ORDER BY time ASC, metric

6, QPS_machine

1), template

classify

select distinct(classify) from host_dict

model_name

select distinct(model_name) from host_dict where classify in ($classify)

2), topic_source

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "QPS" AND object = 'TcpSource' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC, metric limit 990000

3), js_source

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "QPS" AND object = 'JsSource' AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC limit 990000

4), legency_source

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE object = 'LegacyJsSource' AND attribute = "QPS" AND $__timeFilter(time) AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC limit 990000

5), webSource

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE object = 'WebSource' AND attribute = "QPS" AND $__timeFilter(time) AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC limit 990000

6), zhixinSource

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, concat('KSF-', host) as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE object = 'ZhixinSource' AND attribute = "QPS" AND $__timeFilter(time) AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC limit 990000

7) cdn_httpsource

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE object = 'CdnHttpSource' AND attribute = "QPS" AND $__timeFilter(time) AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time DESC limit 990000

8), qps_everyhost

SELECT UNIX_TIMESTAMP(time) as time_sec, sum(value) as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "QPS" AND hd.classify in ($classify) and hd.model_name in ($model_name) group by host, time ORDER BY time DESC, metric limit 990000

9), qps_hostnum

SELECT UNIX_TIMESTAMP(js.time) as time_sec, count(distinct(js.host)) as value, hd.classify as metric FROM jmx_status as js left join host_dict as hd on js.host = hd.innet_ip WHERE $__timeFilter(time) AND attribute = "QPS" group by time, hd.classify ORDER BY time DESC, metric limit 990000

7, Resource_machine

1), template

classify

select distinct(classify) from host_dict

model_name

select distinct(model_name) from host_dict where classify in ($classify)

2), cpu_used

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "SystemCpuLoad" AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, metric

3), memory_used

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "HeapMemoryUsage.used" AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, metric

4), thread_count

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "ThreadCount" AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, metric

5), openfile_script

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "OpenFileDescriptorCount" AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, metric

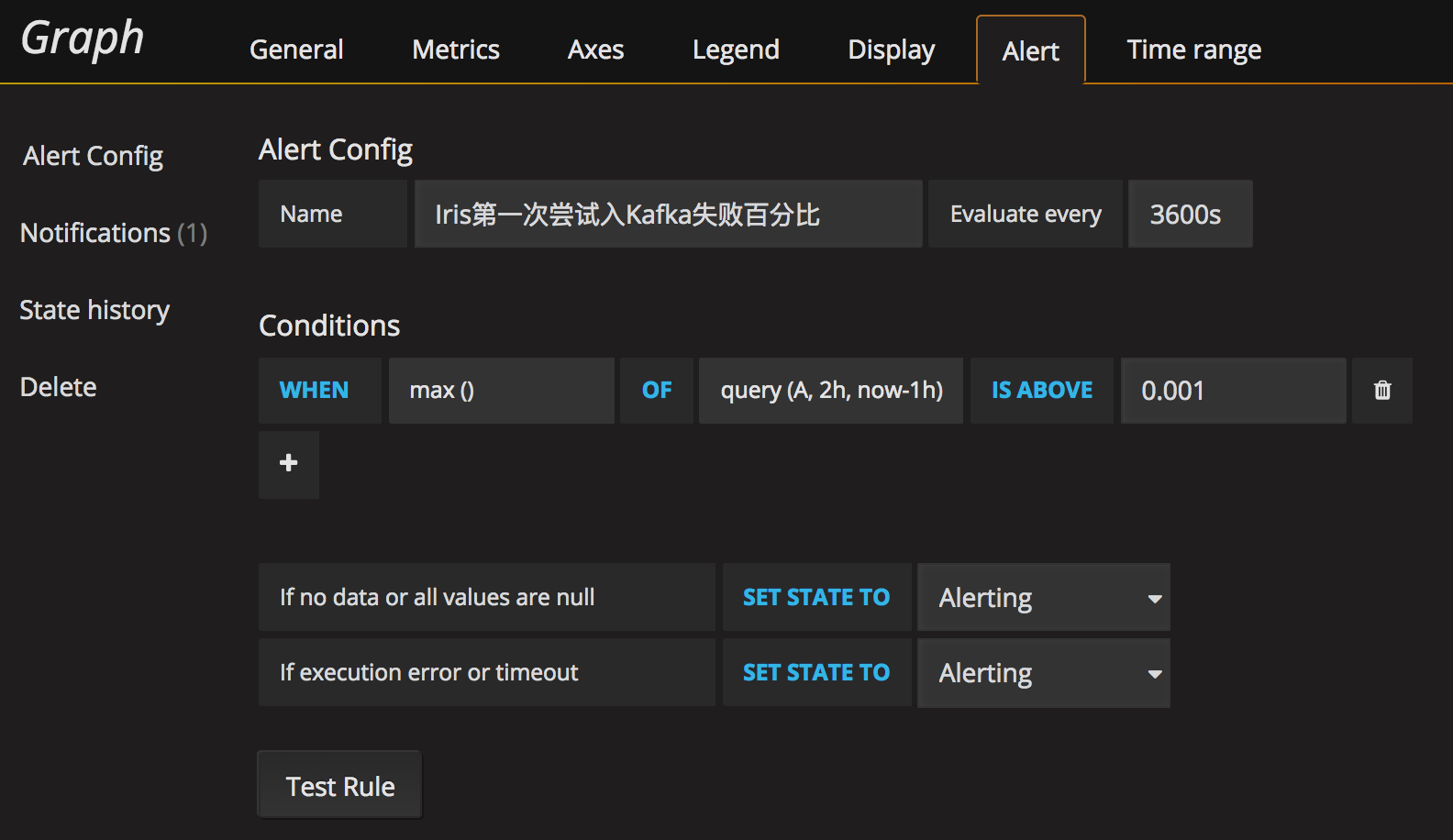

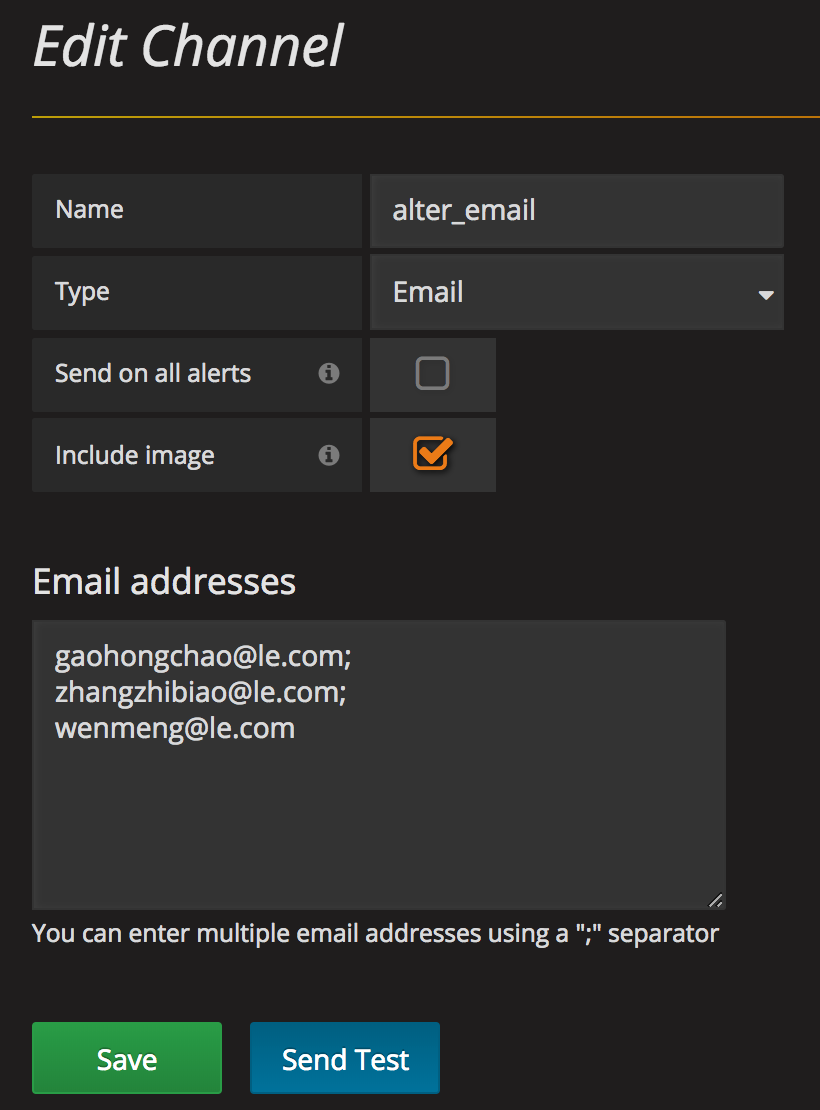

8, alter 报警用, 需要配合设置

iris第一次尝试入kafka失败百分比

SELECT UNIX_TIMESTAMP(time) as time_sec, value as value, host as metric FROM jmx_status as jmx left join host_dict as hd on hd.innet_ip = jmx.host WHERE $__timeFilter(time) AND attribute = "OpenFileDescriptorCount" AND hd.classify in ($classify) and hd.model_name in ($model_name) ORDER BY time ASC, metric

主要在alter标签中

然后在alter标签中进行配置

9, 需要用到的sql保存

1), host_dict

CREATE TABLE `host_dict` ( `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT '主键id', `classify` varchar(20) DEFAULT NULL COMMENT '类型', `model_name` varchar(20) DEFAULT NULL COMMENT '模块名', `innet_ip` varchar(20) DEFAULT NULL COMMENT '内网ip', `outnet_ip` varchar(20) DEFAULT NULL COMMENT '外网ip', `cpu_core` int(11) DEFAULT NULL COMMENT 'cpu核心', `memory_size` int(11) DEFAULT NULL COMMENT '内存', `address` varchar(20) DEFAULT NULL COMMENT '机房', `status` varchar(20) DEFAULT NULL COMMENT '状态', `plan` varchar(20) DEFAULT NULL COMMENT '规划', PRIMARY KEY (`id`), KEY `classify` (`classify`,`model_name`,`innet_ip`), KEY `idx_innet_ip` (`innet_ip`) USING BTREE ) ENGINE=InnoDB AUTO_INCREMENT=49 DEFAULT CHARSET=utf8

2), jmx_status

CREATE TABLE `jmx_status` ( `host` varchar(20) NOT NULL DEFAULT '', `time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, `report` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00', `object` varchar(60) NOT NULL DEFAULT '', `attribute` varchar(60) NOT NULL DEFAULT '', `value` double DEFAULT NULL, PRIMARY KEY (`host`,`time`,`object`,`attribute`), KEY `idx_host_time_attribute_object` (`host`,`time`,`attribute`,`object`) USING BTREE, KEY `idx_time_attribute` (`time`,`attribute`) USING BTREE ) ENGINE=InnoDB DEFAULT CHARSET=utf8 /*!50100 PARTITION BY RANGE (unix_timestamp(time)) (PARTITION p20180811 VALUES LESS THAN (1534003199) ENGINE = InnoDB, PARTITION p20180812 VALUES LESS THAN (1534089599) ENGINE = InnoDB, PARTITION p20180813 VALUES LESS THAN (1534175999) ENGINE = InnoDB, PARTITION p20180814 VALUES LESS THAN (1534262399) ENGINE = InnoDB, PARTITION p20180815 VALUES LESS THAN (1534348799) ENGINE = InnoDB, PARTITION p20180816 VALUES LESS THAN (1534435199) ENGINE = InnoDB, PARTITION p20180817 VALUES LESS THAN (1534521599) ENGINE = InnoDB, PARTITION p20180818 VALUES LESS THAN (1534607999) ENGINE = InnoDB, PARTITION p20180819 VALUES LESS THAN (1534694399) ENGINE = InnoDB, PARTITION p20180820 VALUES LESS THAN (1534780799) ENGINE = InnoDB, PARTITION p20180821 VALUES LESS THAN (1534867199) ENGINE = InnoDB, PARTITION p20180822 VALUES LESS THAN (1534953599) ENGINE = InnoDB) */

3), topic_count

CREATE TABLE `topic_count` ( `host` varchar(20) NOT NULL DEFAULT '', `time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, `report` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00', `component` varchar(32) NOT NULL DEFAULT '', `topic` varchar(32) NOT NULL DEFAULT '', `in_num` bigint(20) DEFAULT NULL, `out_num` bigint(20) DEFAULT NULL, PRIMARY KEY (`host`,`time`,`topic`,`component`), KEY `component` (`component`,`topic`,`time`), KEY `idx_topic` (`topic`) USING BTREE, KEY `idx_time_topic` (`time`,`topic`) USING BTREE, KEY `idx_compnent` (`component`) USING BTREE ) ENGINE=InnoDB DEFAULT CHARSET=utf8

4), topic_dict

CREATE TABLE `topic_dict` ( `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT '主键id', `model` varchar(20) DEFAULT NULL COMMENT '模式', `component` varchar(20) DEFAULT NULL COMMENT '组件', `component_name` varchar(20) DEFAULT NULL COMMENT '组件名称', `component_type` varchar(20) DEFAULT NULL COMMENT '组件类型', `topic` varchar(20) DEFAULT NULL COMMENT 'topic', `topic_type` varchar(20) DEFAULT NULL COMMENT 'topic类型', `status` varchar(20) DEFAULT 'ON' COMMENT '状态', PRIMARY KEY (`id`), KEY `component` (`component`,`topic`), KEY `idx_topic` (`topic`) USING BTREE ) ENGINE=InnoDB AUTO_INCREMENT=40 DEFAULT CHARSET=utf8