23-hadoop-hive的DDL和DML操作

跟mysql类似, hive也有 DDL, 和 DML操作

数据类型: https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL

: primitive_type | array_type | map_type | struct_type | union_type -- (Note: Available in Hive 0.7.0 and later)

primitive_type为基本类型, 包括:

: TINYINT | SMALLINT | INT | BIGINT | BOOLEAN | FLOAT | DOUBLE | DOUBLE PRECISION -- (Note: Available in Hive 2.2.0 and later) | STRING | BINARY -- (Note: Available in Hive 0.8.0 and later) | TIMESTAMP -- (Note: Available in Hive 0.8.0 and later) | DECIMAL -- (Note: Available in Hive 0.11.0 and later) | DECIMAL(precision, scale) -- (Note: Available in Hive 0.13.0 and later) | DATE -- (Note: Available in Hive 0.12.0 and later) | VARCHAR -- (Note: Available in Hive 0.12.0 and later) | CHAR -- (Note: Available in Hive 0.13.0 and later)

DDL:

基本语法:

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name -- (Note: TEMPORARY available in Hive 0.14.0 and later) [(col_name data_type [COMMENT col_comment], ... [constraint_specification])] [COMMENT table_comment] [PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)] [CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS] [SKEWED BY (col_name, col_name, ...) -- (Note: Available in Hive 0.10.0 and later)] ON ((col_value, col_value, ...), (col_value, col_value, ...), ...) [STORED AS DIRECTORIES] [ [ROW FORMAT row_format] [STORED AS file_format] | STORED BY 'storage.handler.class.name' [WITH SERDEPROPERTIES (...)] -- (Note: Available in Hive 0.6.0 and later) ] [LOCATION hdfs_path] [TBLPROPERTIES (property_name=property_value, ...)] -- (Note: Available in Hive 0.6.0 and later) [AS select_statement]; -- (Note: Available in Hive 0.5.0 and later; not supported for external tables)

1, 使用简单数据类型创建表

CREATE TABLE page_view(

viewTime INT,

page_url STRING,

ip STRING COMMENT 'IP Address of the User'

)

COMMENT 'This is the page view table'

ROW FORMAT DELIMITED # 使用 \t 进行分隔, 和下面一行一块用

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE; # 数据从文件中导入

准备数据

1 vini 192.168.208.126 2 bronk 192.168.208.32

导入:

load data local inpath '/opt/data_hive/test.txt' into table page_view;

2, 使用复合数据类型

创建数据表

CREATE TABLE people ( id string, name string, likes array<string>, addr map<string, string> ) row format delimited # 使用 \t 分隔数据 fields terminated by '\t' collection items terminated by ',' # 集合之间使用 , 分隔 map keys terminated by ':' # map的key, values 使用 : 分隔 stored as textfile;

准备数据:

1 vini game,read,play stuAddr:yt,workAddr:bj 2 bronk game,read,play stuAddr:sy,workAddr:bj

数据导入:

load data local inpath '/opt/data_hive/test.txt' into table people;

查看数据:

select addr['stuAddr'] from people where name='vini';

3, 带partition的数据导入

CREATE TABLE people ( id string, name string, likes array<string>, addr map<string, string> ) partitioned by(dt string) # 增加分区字段 row format delimited fields terminated by '\t' collection items terminated by ',' map keys terminated by ':' stored as textfile;

date 为 hive的一个保留字段, 不可使用, 一般使用dt作为代替

准备数据

1 vini game,read,play stuAddr:yt,workAddr:bj 2017-1-1 2 bronk game,read,play stuAddr:sy,workAddr:bj 2017-1-2

数据导入, 指定partition

load data local inpath '/opt/data_hive/test.txt' into table people partition (dt='2017-1-1', dt='2017-1-2');

ps: 创建数据表时, 有一个可选字段为 EXTERNAL, 表示创建的为内表还是外表

1、在导入数据到外部表,数据并没有移动到自己的数据仓库目录下(如果指定了location的话),也就是说外部表中的数据并不是由它自己来管理的!而内部表则不一样; 2、在删除内部表的时候,Hive将会把属于表的元数据和数据全部删掉;而删除外部表的时候,Hive仅仅删除外部表的元数据,数据是不会删除的! 3. 在创建内部表或外部表时加上location 的效果是一样的,只不过表目录的位置不同而已,加上partition用法也一样,只不过表目录下会有分区目录而已,load data local inpath直接把本地文件系统的数据上传到hdfs上,有location上传到location指定的位置上,没有的话上传到hive默认配置的数据仓库中。

详细见: http://blog.csdn.net/u012599619/article/details/50999259

DML

使用最多的是 select 语句和 insert 语句

insert:

INSERT OVERWRITE TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...) [IF NOT EXISTS]] select_statement1 FROM from_statement;

INSERT INTO TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...)] select_statement1 FROM from_statement;

insert使用多的为, 将一张表的数据导入需要的表中

创建表:

CREATE TABLE people_insert ( id string, name string, likes array<string> ) row format delimited fields terminated by '\t' collection items terminated by ',' stored as textfile;

然后将people表中的部分数据, 导入到新的表中:

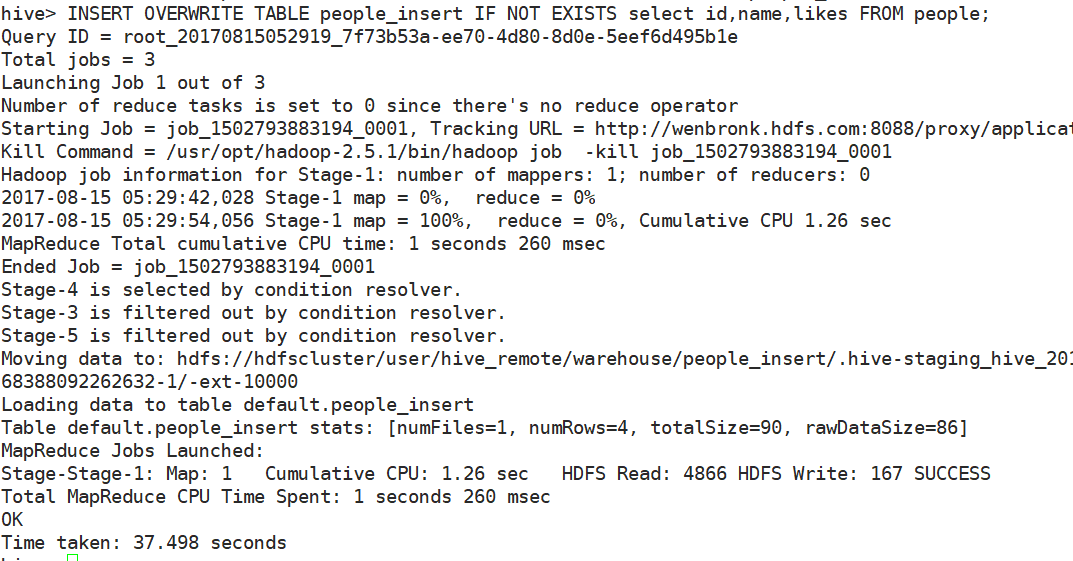

INSERT OVERWRITE TABLE people_insert IF NOT EXISTS select id,name,likes FROM people;

可以看到启动了一个新的mapreduce任务去执行

update 和 delete语句需要额外配置一些东西, 但使用不多, 不做阐述

系列来自 尚学堂极限班视频

浙公网安备 33010602011771号

浙公网安备 33010602011771号