Installing Elasticsearch plugins: the ik Chinese analyzer

/**

* System environment: CentOS 7.2 under vm12

* Installed version: elasticsearch-2.4.0.tar.gz

*/

Elasticsearch ships with many built-in analyzers (standard, english, chinese, and others), but their Chinese word segmentation is poor, so this guide uses ik instead.

Installing the ik analyzer

Download link: https://github.com/medcl/elasticsearch-analysis-ik/releases

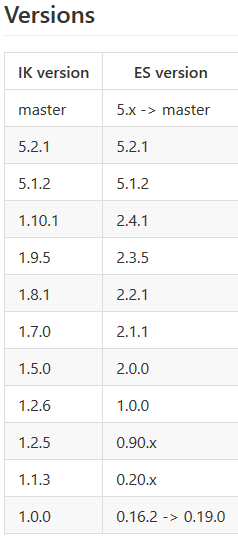

Version compatibility table: https://github.com/medcl/elasticsearch-analysis-ik

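Stop Elasticsearch (elasticsearch.bat on Windows; on the CentOS system used here, the running bin/elasticsearch process), unzip the downloaded archive, create a folder named ik inside the plugins folder of the ES directory, and copy all of the extracted files into ik.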

unzip elasticsearch-analysis-ik-1.10.0.zip
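The manual steps above can also be scripted. The following is a minimal sketch, not an official installer: the function name `install_ik` and its two arguments are hypothetical, and it assumes `unzip` is available and that the release zip holds the plugin files at its top level, as the steps above imply. It creates `plugins/ik` under the given Elasticsearch home and unpacks the zip straight into it, so the zip itself never has to be copied into the plugins directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack the ik release zip into <es_home>/plugins/ik.
# Arguments: $1 = Elasticsearch home directory, $2 = path to the downloaded zip.
install_ik() {
    local es_home="$1" zip_file="$2"
    local target="$es_home/plugins/ik"

    # Refuse to continue if the archive is missing.
    if [ ! -f "$zip_file" ]; then
        echo "zip not found: $zip_file" >&2
        return 1
    fi

    mkdir -p "$target"
    # -o overwrites files left by an earlier attempt, -q keeps the output quiet.
    unzip -o -q "$zip_file" -d "$target"
}
```

For the setup in this post the call might look like `install_ik /usr/work/elasticsearch/elasticsearch-2.4.0 /tmp/elasticsearch-analysis-ik-1.10.0.zip`, where the `/tmp` location of the zip is only an example.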

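Make sure the Elasticsearch version declared in plugin-descriptor.properties matches the installed Elasticsearch version; otherwise Elasticsearch throws an exception on startup.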
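This version check can be done before starting Elasticsearch. The sketch below assumes the descriptor stores the target version under the `elasticsearch.version` key, as Elasticsearch 2.x plugin descriptors do; the function names `descriptor_es_version` and `check_ik_version` are hypothetical helpers written for this post.

```shell
#!/usr/bin/env bash
# Print the value of elasticsearch.version from a plugin-descriptor.properties file.
descriptor_es_version() {
    # Keep only the value after "elasticsearch.version=", then drop a possible
    # carriage return and trailing whitespace; use the first match only.
    sed -n 's/^[[:space:]]*elasticsearch\.version[[:space:]]*=[[:space:]]*//p' "$1" \
        | tr -d '\r' | sed 's/[[:space:]]*$//' | head -n 1
}

# Hypothetical check: succeed only when the descriptor targets the installed version.
# Arguments: $1 = path to plugin-descriptor.properties, $2 = installed version.
check_ik_version() {
    local descriptor="$1" installed="$2" wanted
    wanted="$(descriptor_es_version "$descriptor")"
    if [ "$wanted" = "$installed" ]; then
        echo "OK: plugin targets Elasticsearch $wanted"
    else
        echo "MISMATCH: plugin targets '$wanted', installed is '$installed'" >&2
        return 1
    fi
}
```

A call such as `check_ik_version plugins/ik/plugin-descriptor.properties 2.4.0`, run from the Elasticsearch home directory, returns a non-zero status when the two versions differ.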

Add the ik settings to elasticsearch.yml:

index.analysis.analyzer.ik.type: "ik"

Or add:

    index:
      analysis:
        analyzer:
          ik:
            alias: [ik_analyzer]
            type: org.elasticsearch.index.analysis.IkAnalyzerProvider
          ik_max_word:
            type: ik
            use_smart: false
          ik_smart:
            type: ik
            use_smart: true
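If the single-line form of the setting is applied from a script, it helps to make the edit idempotent so that repeated runs do not duplicate it. A minimal sketch, where `ensure_line` is a hypothetical helper and the path to elasticsearch.yml is whatever your installation uses:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a config file unless that exact line exists.
# Arguments: $1 = config file, $2 = line to add.
ensure_line() {
    local file="$1" line="$2"
    # -x matches whole lines, -F treats the pattern as a fixed string, -q is silent.
    if grep -qxF -- "$line" "$file" 2>/dev/null; then
        return 0
    fi
    # If the file is non-empty and does not end in a newline, add one first so
    # the new setting does not get glued onto the last existing line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}
```

Example: `ensure_line config/elasticsearch.yml 'index.analysis.analyzer.ik.type: "ik"'`, run from the Elasticsearch home directory.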

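Restart Elasticsearch.

Note: do not leave the zip package in the plugins directory next to the ik folder. Elasticsearch then tries to load the zip as a plugin and fails at startup with the following error (不是目录 is the localized system message for "Not a directory"):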

    Exception in thread "main" java.lang.IllegalStateException: Could not load plugin descriptor for existing plugin [elasticsearch-analysis-ik-1.10.0.zip]. Was the plugin built before 2.0?
    Likely root cause: java.nio.file.FileSystemException: /usr/work/elasticsearch/elasticsearch-2.4.0/plugins/elasticsearch-analysis-ik-1.10.0.zip/plugin-descriptor.properties: 不是目录
        at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
        at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
        at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
        at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)
        at java.nio.file.Files.newByteChannel(Files.java:361)
        at java.nio.file.Files.newByteChannel(Files.java:407)
        at java.nio.file.spi.FileSystemProvider.newInputStream(FileSystemProvider.java:384)
        at java.nio.file.Files.newInputStream(Files.java:152)
        at org.elasticsearch.plugins.PluginInfo.readFromProperties(PluginInfo.java:87)
        at org.elasticsearch.plugins.PluginsService.getPluginBundles(PluginsService.java:378)
        at org.elasticsearch.plugins.PluginsService.<init>(PluginsService.java:128)
        at org.elasticsearch.node.Node.<init>(Node.java:158)
        at org.elasticsearch.node.Node.<init>(Node.java:140)
        at org.elasticsearch.node.NodeBuilder.build(NodeBuilder.java:143)
        at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:194)
        at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:286)
        at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:35)
    Refer to the log for complete error details.
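The trace shows Elasticsearch looking for plugin-descriptor.properties inside the zip file as if it were a plugin folder. A quick pre-start check is to list everything directly under plugins that is not a directory. This is a minimal sketch; `stray_plugin_files` is a hypothetical helper, and anything it prints is only a candidate worth inspecting, not a confirmed cause.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print entries directly under the plugins directory that
# are not directories (for example a leftover release zip).
# Argument: $1 = path to the plugins directory.
stray_plugin_files() {
    # -mindepth 1 skips the plugins directory itself, -maxdepth 1 stays at the top level.
    find "$1" -mindepth 1 -maxdepth 1 ! -type d
}
```

For the installation in this post, `stray_plugin_files /usr/work/elasticsearch/elasticsearch-2.4.0/plugins` would print the path of the leftover zip; moving that file out of plugins removes the cause shown in the trace.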

Testing:

First, create an index that uses the ik analyzer:

    curl -XPUT localhost:9200/local -d '{
      "settings": {
        "analysis": {
          "analyzer": {
            "ik": { "tokenizer": "ik" }
          }
        }
      },
      "mappings": {
        "article": {
          "dynamic": true,
          "properties": {
            "title": { "type": "string", "analyzer": "ik" }
          }
        }
      }
    }'

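Then run the analyzer against the index created above:

    curl 'http://localhost:9200/local/_analyze?analyzer=ik&pretty=true' -d '
    {
      "text": "中华人民共和国国歌"
    }
    '

The response:

    {
      "tokens" : [ {
        "token" : "text",
        "start_offset" : 12,
        "end_offset" : 16,
        "type" : "ENGLISH",
        "position" : 1
      }, {
        "token" : "中华人民共和国",
        "start_offset" : 19,
        "end_offset" : 26,
        "type" : "CN_WORD",
        "position" : 2
      }, {
        "token" : "国歌",
        "start_offset" : 26,
        "end_offset" : 28,
        "type" : "CN_WORD",
        "position" : 3
      } ]
    }

The first token, text, and the offsets starting at 12 suggest that in this output the raw request body, JSON key included, was what got analyzed.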
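When checking the segmentation from a script, it is often enough to see the tokens alone. The sketch below pulls the `token` values out of an `_analyze` response with grep and sed; `extract_tokens` is a hypothetical helper, it assumes token values contain no escaped double quotes, and a real JSON parser would be more robust.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an _analyze JSON response on stdin and print one
# token per line.
extract_tokens() {
    # grep -o isolates each "token" : "..." pair; sed keeps only the quoted value.
    grep -o '"token"[[:space:]]*:[[:space:]]*"[^"]*"' \
        | sed 's/^"token"[[:space:]]*:[[:space:]]*"//; s/"$//'
}
```

It can be appended to the analyze request with a pipe, for example `curl -s '<analyze URL>' -d '<text>' | extract_tokens`, with the URL and text placeholders filled in as in the command above.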

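To get the finest-grained results, configure the following in elasticsearch.yml; ik_max_word (use_smart: false) is the analyzer that produces the finest-grained split: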

    index:
      analysis:
        analyzer:
          ik:
            alias: [ik_analyzer]
            type: org.elasticsearch.index.analysis.IkAnalyzerProvider
          ik_smart:
            type: ik
            use_smart: true
          ik_max_word:
            type: ik
            use_smart: false
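With both analyzers configured, their output can be compared on the same text. The helper below is a sketch under assumptions: `analyze_with` is a hypothetical name, it assumes Elasticsearch is reachable at the base URL you pass in, and it sends the analyzer and text as URL-encoded query parameters of the `_analyze` endpoint. The `CURL` override is an addition of this sketch so the command can be printed instead of executed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: call _analyze on an index with a named analyzer.
# Arguments: $1 = base URL, $2 = index, $3 = analyzer name, $4 = text to analyze.
# Set CURL=echo to print the curl arguments instead of sending the request.
analyze_with() {
    local base_url="$1" index="$2" analyzer="$3" text="$4"
    # -G turns the --data-urlencode pairs into a URL-encoded query string,
    # which keeps the Chinese text intact in the request.
    "${CURL:-curl}" -s -G "$base_url/$index/_analyze" \
        --data-urlencode "analyzer=$analyzer" \
        --data-urlencode "text=$text" \
        --data-urlencode "pretty=true"
}
```

Running `analyze_with http://localhost:9200 local ik_smart '中华人民共和国国歌'` and then the same call with `ik_max_word` lets you compare the two token lists side by side.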