Hive与MySQL、Oracle、SqlServer的简单交换

目录

前言

在https://blog.csdn.net/lw18751836671/article/details/119794613?spm=1001.2014.3001.5501中写了关于hive到hive之间的交换,现在就将xml的数据变动一下实现hive和三种数据库简单交换。

这里只是做一个简单模型,复杂的都是从里面延伸出来,复杂的也懒得写,太多了。

Hive与Mysql

hive到mysql

mysql中建表,建立一个目标表

CREATE TABLE `user_info_dest` (

`id` varchar(36) DEFAULT NULL,

`name` varchar(36) DEFAULT NULL

);修改代码中关于目标表的连接,由之前的hive,修改为mysql

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.10.64</server>" +

"<type>MYSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>3306</port>" +

"<username>root</username>" +

"<password>root</password>" +

"</connection>";OK,其他不用改变。

mysql到hive

在mysql中建立源表,并插入一条数据。

CREATE TABLE `user_info_src` (

`id` varchar(36) DEFAULT NULL,

`name` varchar(36) DEFAULT NULL

)然后将代码中源数据库连接的xml改为mysql的,还是上面那个mysql,

//源数据库连接

String mysqL_src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>mysqL_src</name>" +

"<server>192.168.10.64</server>" +

"<type>MYSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>3306</port>" +

"<username>root</username>" +

"<password>root</password>" +

"</connection>";其他不用变,直接运行即可交换成功。

HIve与Oracle

hive到oracle

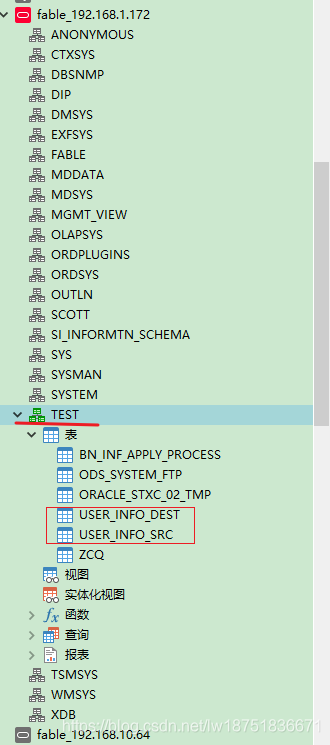

oracle中建立目标表,注意此处的字段名是大写了,因为oracle是大小写敏感,同时也是为了说明一个其他问题。

CREATE TABLE USER_INFO_DEST (

ID varchar2(36) ,

NAME varchar2(36)

);然后目标数据库连接改为oracle的,

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.1.172</server>" +

"<type>ORACLE</type>" +

"<access>Native</access>" +

"<database>fableorcl</database>" +

"<port>1521</port>" +

"<username>fable</username>" +

"<password>fable</password>" +

"</connection>";因为oracle是有schema的概念的,所以需要在代码中tableoutput添加schema,之前是设置为空的,

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName("TEST");

然后交换就成功了。

oracle到hive

在oracle中建立一个源表,并插入数据,

CREATE TABLE USER_INFO_SRC (

ID varchar2(36) ,

NAME varchar2(36)

);然后把源库连接改为oracle,目标库连接的xml改为hive。

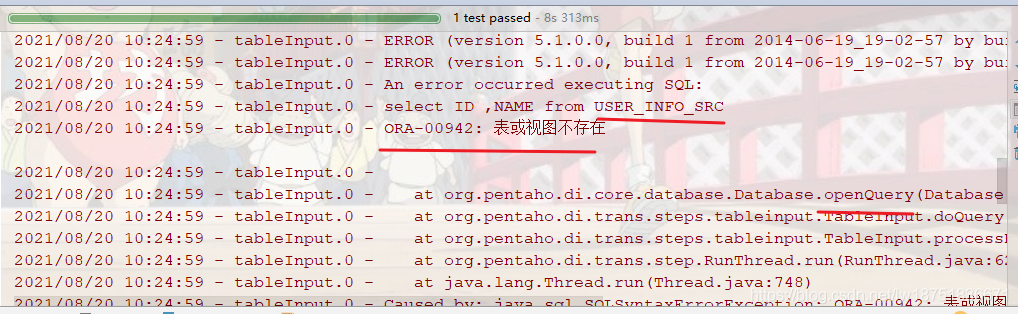

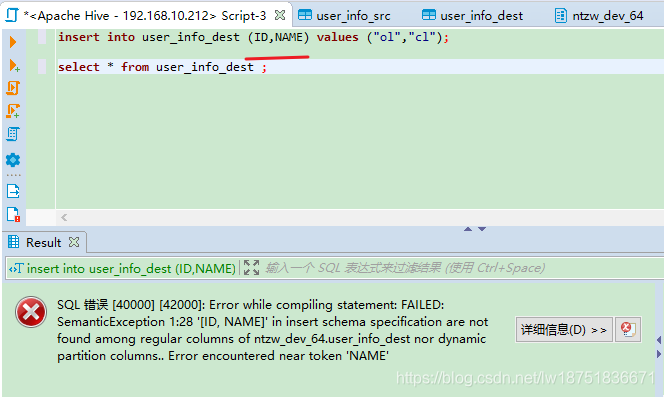

如果这样运行交换会报错,如下图所示,

所以需要在 tableInputMeta.setSQL(selectSql); 设置SQL时指定schema,所以selectSQL要改为如下,

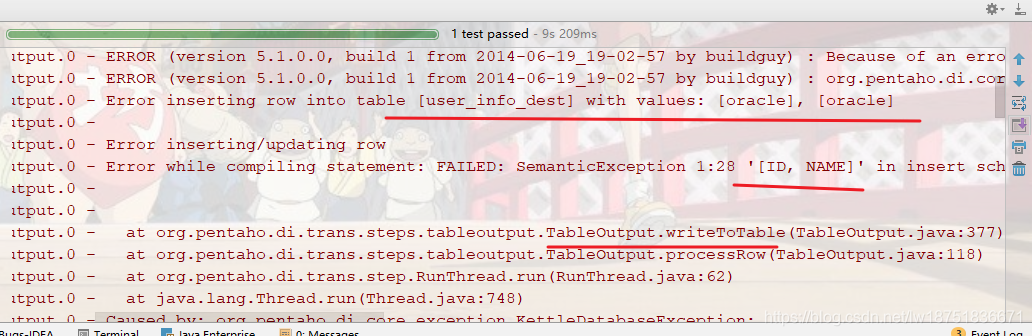

String selectSql = "select ID ,NAME from TEST.USER_INFO_SRC";继续报错,这里是说ID,NAME无法插入执行,

这里贴一下报错信息,当然是简化版,看我加粗的就知道是hive报错了,

2021/08/20 10:27:15 - tableInput.0 - 完成处理 (I=1, O=0, R=0, W=1, U=0, E=0

2021/08/20 10:27:15 - tableOutput.0 - ERROR (version 5.1.0.0, build 1 from 2014-06-19_19-02-57 by buildguy) : Because of an error, this step can't continue:

2021/08/20 10:27:15 - tableOutput.0 - ERROR (version 5.1.0.0, build 1 from 2014-06-19_19-02-57 by buildguy) : org.pentaho.di.core.exception.KettleException:

2021/08/20 10:27:15 - tableOutput.0 - Error inserting row into table [user_info_dest] with values: [oracle], [oracle]

2021/08/20 10:27:15 - tableOutput.0 -

2021/08/20 10:27:15 - tableOutput.0 - Error inserting/updating row

2021/08/20 10:27:15 - tableOutput.0 - Error while compiling statement: FAILED: SemanticException 1:28 '[ID, NAME]' in insert schema specification are not found among regular columns of ntzw_dev_64.user_info_dest nor dynamic partition columns.. Error encountered near token 'NAME'

2021/08/20 10:27:15 - tableOutput.0 -

2021/08/20 10:27:15 - tableOutput.0 -

2021/08/20 10:27:15 - tableOutput.0 - at org.pentaho.di.trans.steps.tableoutput.TableOutput.writeToTable(TableOutput.java:377)

2021/08/20 10:27:15 - tableOutput.0 - at org.pentaho.di.trans.steps.tableoutput.TableOutput.processRow(TableOutput.java:118)

2021/08/20 10:27:15 - tableOutput.0 - at org.pentaho.di.trans.step.RunThread.run(RunThread.java:62)

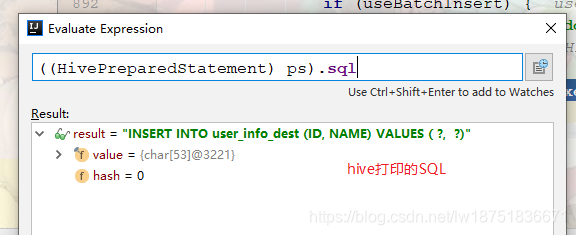

在运行中打印了一下hive的插入SQL语句,如下图,

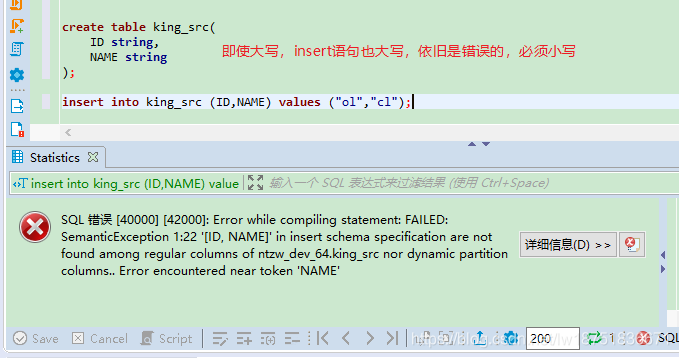

把这个SQL语句放入hive中执行一下,如下图所示报错,

如果id,name是小写,那么是可以成功的,因为Hive的表字段是小写。

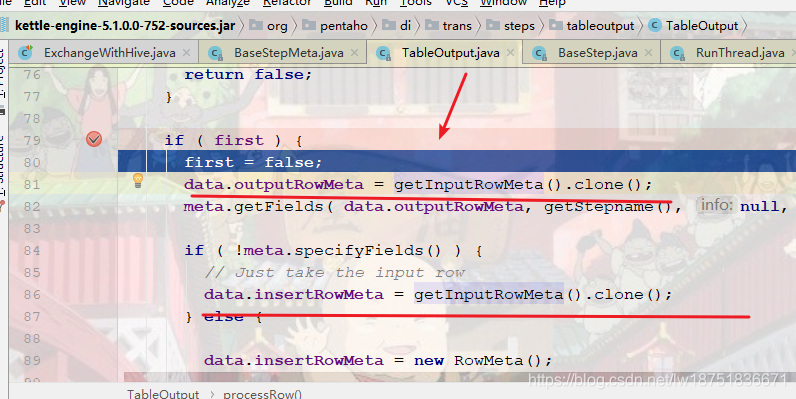

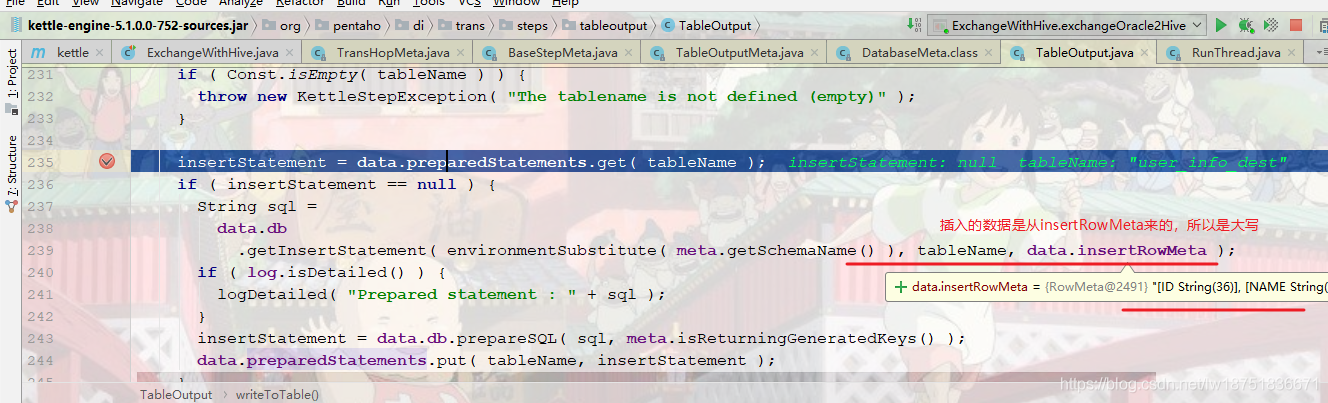

那就是看这个SQL是在哪拼接的,看代码发现是如下图处,获取InputRowMeta,这不就是select语句的列么?

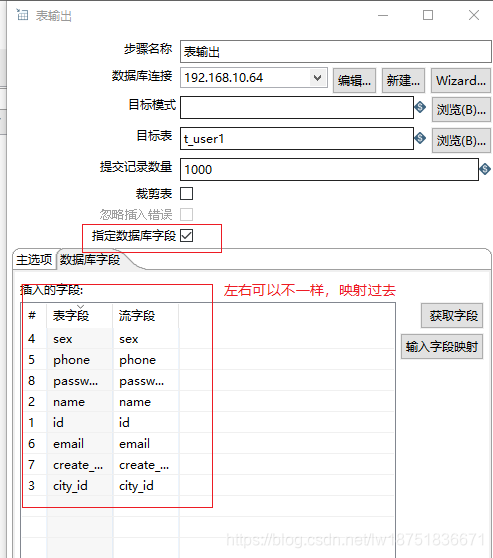

然后那就要做个映射了,和spoon工具一样,

所以那就要用一个SelectValuesMeta,OK,新增的代码如下,

//将ID,NAME列的值映射到列id,name

SelectValuesMeta selectValuesTime = new SelectValuesMeta();

String selectValuesPluginIdTime = registry.getPluginId(StepPluginType.class, selectValuesTime);

selectValuesTime.allocate(2,0,0);

String[] srcColNames = new String[]{"ID","NAME"};

selectValuesTime.setSelectName(srcColNames);

String[] destColNames = new String[]{"id","name"};

selectValuesTime.setSelectRename(destColNames);

StepMeta selectValuesStep = new StepMeta(selectValuesPluginIdTime, "selectValuesTime", (StepMetaInterface)selectValuesTime);

transMeta.addStep(selectValuesStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, selectValuesStep));现在TableInput关联到SelectValues,那么TableOutput的数据当然是从SelectValues中获取了,

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(selectValuesStep, tableOutputStep));运行OK,交换成功。

HIVE与SQLserver

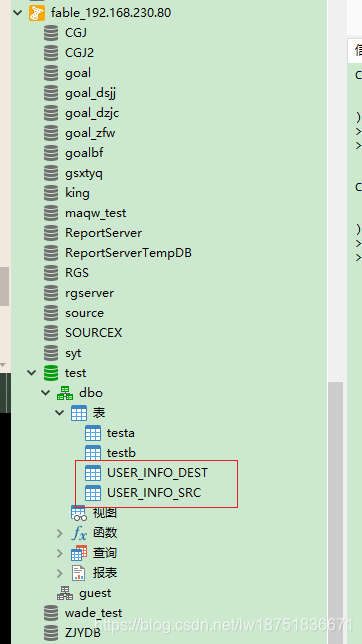

hive到sqlserver

sqlserver中建表,

CREATE TABLE USER_INFO_DEST (

ID varchar(36) ,

NAME varchar(36)

);

修改目标库的xml为SQLserver,

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.230.80</server>" +

"<type>MSSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>1433</port>" +

"<username>sa</username>" +

"<password>123456</password>" +

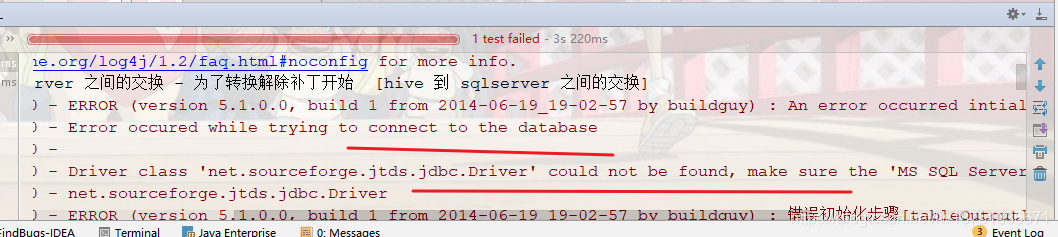

"</connection>";运行报错,连不上数据库?明明Pom里面引入了sqlserver的jdbc包。

<dependency>

<groupId>com.microsoft.sqlserver</groupId>

<artifactId>sqljdbc4</artifactId>

<version>4.0</version>

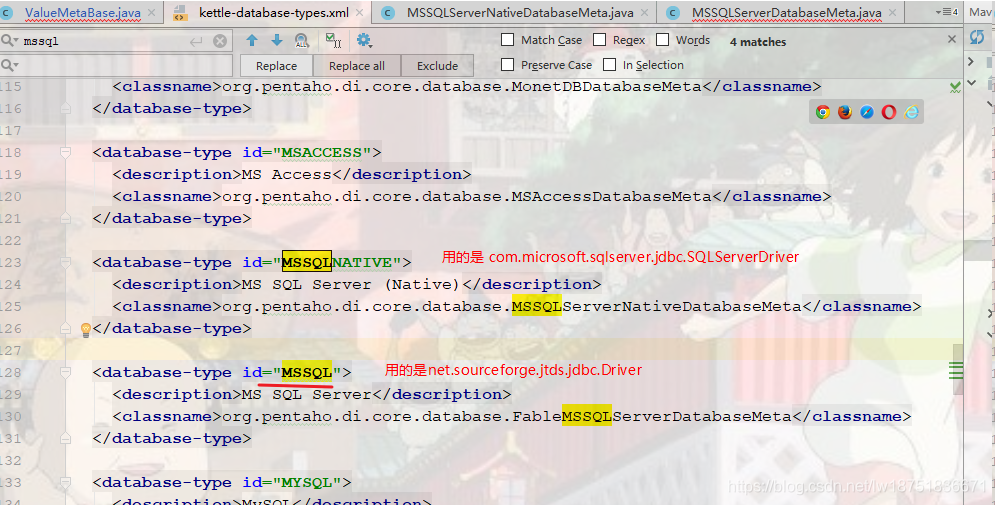

</dependency>但搜了一下,确实没有报错所说的Driver,然后去看5.1的源码,要么就是xml中的type节点改为MSSQLNATIVE,要么就是引包。

FableMSSQLServerDatabaseMeta继承了MSSQLServerDatabaseMeta,是我改写的一个类。

引入如下包,OK,交换成功。

<dependency>

<groupId>net.sourceforge.jtds</groupId>

<artifactId>jtds</artifactId>

<version>1.3.1</version>

</dependency>sqlserver到hive

和前面大致一样,源的xml改为sqlserver,目标是hive即可。

完整代码

package com.lw.kettle;

import org.junit.Before;

import org.junit.Test;

import org.pentaho.di.core.KettleEnvironment;

import org.pentaho.di.core.database.DatabaseMeta;

import org.pentaho.di.core.exception.KettleException;

import org.pentaho.di.core.exception.KettleXMLException;

import org.pentaho.di.core.logging.LogLevel;

import org.pentaho.di.core.plugins.PluginRegistry;

import org.pentaho.di.core.plugins.StepPluginType;

import org.pentaho.di.core.row.RowMetaInterface;

import org.pentaho.di.core.util.EnvUtil;

import org.pentaho.di.trans.Trans;

import org.pentaho.di.trans.TransHopMeta;

import org.pentaho.di.trans.TransMeta;

import org.pentaho.di.trans.step.StepMeta;

import org.pentaho.di.trans.step.StepMetaInterface;

import org.pentaho.di.trans.steps.selectvalues.SelectMetadataChange;

import org.pentaho.di.trans.steps.selectvalues.SelectValuesMeta;

import org.pentaho.di.trans.steps.tableinput.TableInputMeta;

import org.pentaho.di.trans.steps.tableoutput.TableOutputData;

import org.pentaho.di.trans.steps.tableoutput.TableOutputMeta;

import java.util.ArrayList;

import java.util.List;

/**

* 关联hive相关的交换

* @author Administrator

*

*/

public class ExchangeWithHive {

@Before

public void before() {

try {

// 初始化Kettle环境

KettleEnvironment.init();

EnvUtil.environmentInit();

} catch (KettleException e) {

e.printStackTrace();

}

}

/**

* hive之间的交换

* @throws KettleException

*/

@Test

public void exchangeHive2Hive() throws KettleException{

//源数据库连接

String hive_src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>hive_src</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

//目标数据库连接

String hive_dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>hive_dest</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(hive_src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(hive_dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("hive之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* mysql到hive之间的交换

* @throws KettleException

*/

@Test

public void exchangeMySQL2Hive() throws KettleException{

//源数据库连接

String mysqL_src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>mysqL_src</name>" +

"<server>192.168.10.64</server>" +

"<type>MYSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>3306</port>" +

"<username>root</username>" +

"<password>root</password>" +

"</connection>";

//目标数据库连接

String hive_dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>hive_dest</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(mysqL_src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(hive_dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("mysql到hive之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* hive 到 mysql 之间的交换

* @throws KettleException

*/

@Test

public void exchangeHive2MySQL() throws KettleException{

//源数据库连接

String src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>src</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.10.64</server>" +

"<type>MYSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>3306</port>" +

"<username>root</username>" +

"<password>root</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("hive 到 mysql 之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* hive 到 oracle 之间的交换

* @throws KettleException

*/

@Test

public void exchangeHive2Oracle()throws KettleException{

//源数据库连接

String src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>src</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.1.172</server>" +

"<type>ORACLE</type>" +

"<access>Native</access>" +

"<database>fableorcl</database>" +

"<port>1521</port>" +

"<username>fable</username>" +

"<password>fable</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("hive 到 oracle 之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName("TEST");

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* oracle 到 hive 之间的交换

* @throws KettleException

*/

@Test

public void exchangeOracle2Hive()throws KettleException{

//源数据库连接

String src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>src</name>" +

"<server>192.168.1.172</server>" +

"<type>ORACLE</type>" +

"<access>Native</access>" +

"<database>fableorcl</database>" +

"<port>1521</port>" +

"<username>fable</username>" +

"<password>fable</password>" +

"</connection>";

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("oracle 到 hive 之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select ID,NAME from TEST.USER_INFO_SRC";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

//将ID,NAME列的值映射到列id,name

SelectValuesMeta selectValuesTime = new SelectValuesMeta();

String selectValuesPluginIdTime = registry.getPluginId(StepPluginType.class, selectValuesTime);

selectValuesTime.allocate(2,0,0);

String[] srcColNames = new String[]{"ID","NAME"};

selectValuesTime.setSelectName(srcColNames);

String[] destColNames = new String[]{"id","name"};

selectValuesTime.setSelectRename(destColNames);

StepMeta selectValuesStep = new StepMeta(selectValuesPluginIdTime, "selectValuesTime", (StepMetaInterface)selectValuesTime);

transMeta.addStep(selectValuesStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, selectValuesStep));

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(selectValuesStep, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* hive 到 sqlserver 之间的交换

* @throws KettleException

*/

@Test

public void exchangeHive2MSSQL()throws KettleException{

//源数据库连接

String src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>src</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.230.80</server>" +

"<type>MSSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>1433</port>" +

"<username>sa</username>" +

"<password>123456</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("hive 到 sqlserver 之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

/**

* sqlserver 到 hive 之间的交换

* @throws KettleException

*/

@Test

public void exchangeMSSQL2Hive()throws KettleException{

//源数据库连接

String src = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>src</name>" +

"<server>192.168.230.80</server>" +

"<type>MSSQL</type>" +

"<access>Native</access>" +

"<database>test</database>" +

"<port>1433</port>" +

"<username>sa</username>" +

"<password>123456</password>" +

"</connection>";

//目标数据库连接

String dest = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>" +

"<connection>" +

"<name>dest</name>" +

"<server>192.168.10.212</server>" +

"<type>HIVE2</type>" +

"<access>Native</access>" +

"<database>ntzw_dev_64</database>" +

"<port>10000</port>" +

"<username>hadoop</username>" +

"<password>hadoop</password>" +

"</connection>";

DatabaseMeta srcDatabaseMeta = new DatabaseMeta(src);

DatabaseMeta destDatabaseMeta = new DatabaseMeta(dest);

//创建转换元信息

TransMeta transMeta = new TransMeta();

transMeta.setName("sqlserver 到 hive 之间的交换");

//设置源和目标

transMeta.addDatabase(srcDatabaseMeta);

transMeta.addDatabase(destDatabaseMeta);

/*

* 创建 表输入->表输出

* 同时将两个步骤连接起来

*/

PluginRegistry registry = PluginRegistry.getInstance();

TableInputMeta tableInputMeta = new TableInputMeta();

String tableInputPluginId = registry.getPluginId(StepPluginType.class,

tableInputMeta);

tableInputMeta.setDatabaseMeta(srcDatabaseMeta);

//设置查询条件

String selectSql = "select id ,name from user_info_src";

tableInputMeta.setSQL(selectSql);

StepMeta tableInputStepMeta = new StepMeta(tableInputPluginId,

"tableInput", (StepMetaInterface) tableInputMeta);

transMeta.addStep(tableInputStepMeta);

TableOutputMeta tableOutputMeta = new TableOutputMeta();

tableOutputMeta.setDatabaseMeta(destDatabaseMeta);

//设置目标表的 schema和表名

tableOutputMeta.setSchemaName(null);

tableOutputMeta.setTablename("user_info_dest");

String tableOutputPluginId = registry.getPluginId(StepPluginType.class, tableOutputMeta);

StepMeta tableOutputStep = new StepMeta(tableOutputPluginId, "tableOutput" , (StepMetaInterface) tableOutputMeta);

//将步骤添加进去

transMeta.addStep(tableOutputStep);

//将步骤和上一步关联起来

transMeta.addTransHop(new TransHopMeta(tableInputStepMeta, tableOutputStep));

Trans trans = new Trans(transMeta);

//执行转换

trans.execute(null);

//等待完成

trans.waitUntilFinished();

if (trans.getErrors() > 0) {

System.out.println("交换出错.");

return;

}

}

}