Linux (CentOS 8.1) production environment: installing an OpenStack Ussuri cluster on 9 physical machines and integrating it with Ceph (moved to Gitee)

0 Revision history

| No. | Revision | Date |
|---|---|---|
| 1 | Created | 20210429 |
| 2 | Revised | 20210504 |

1 Summary

This article describes how to deploy OpenStack Ussuri on 9 physical machines and integrate it with Ceph Nautilus.

2 Environment

(1) Server information

| Hostname | Brand/Model | Configuration | Qty |
|---|---|---|---|
| procontroller01.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| procontroller02.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| procontroller03.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| procompute01.pro.kxdigit.com | Inspur SA5212M5 | 5218×2/1024G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| procompute02.pro.kxdigit.com | Inspur SA5212M5 | 5218×2/1024G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| procompute03.pro.kxdigit.com | Inspur SA5212M5 | 5218×2/1024G/SSD:240G×2/SAS:2T 7.2K×2/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| proceph01.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2 960G×2/SAS:8T 7.2K×6/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| proceph02.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2 960G×2/SAS:8T 7.2K×6/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |
| proceph03.pro.kxdigit.com | Inspur SA5212M5 | 4210×2/128G/SSD:240G×2 960G×2/SAS:8T 7.2K×6/10G X710×2/1G PHY card×1/RAID card SAS3108 2GB | 1 |

(2) Switch information

Two identically configured switches are stacked.

| Switch name | Brand/Model | Configuration | Qty |
|---|---|---|---|
| A3_1F_DC_openstack_test_jieru_train-irf_b02&b03 | H3C LS-6860-54HF | 10G optical port×48, 40G optical port×6 | 2 |

(3) Software information

2.3.1 Operating system

[root@localhost ~]# cat /etc/centos-release

CentOS Linux release 8.1.1911 (Core)

[root@localhost ~]# uname -a

Linux localhost.localdomain 4.18.0-147.el8.x86_64 #1 SMP Wed Dec 4 21:51:45 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

[root@localhost ~]#

2.3.2 docker

2.3.3 kolla

2.3.4 kolla-ansible

3 Implementation

(1) Deployment planning

3.1.1 Network planning

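The two switches are stacked. Each server has two 10GbE NICs with two ports each; ports from the two NICs are paired crosswise into bond0 and bond1.

10.3.140.0/24: bond0, physical host addresses and API management

10.3.141.0/24: dedicated to storage cluster traffic

10.3.142.0/24 to 10.3.149.0/24: virtual machines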

| Host | Physical port | NIC | Bond mode | IP address | Switch | Switch port | Aggregation | Port mode | VLAN | Notes |
|---|---|---|---|---|---|---|---|---|---|---|
| procontroller01 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.11 | B02.40U | 1 | BAGG1111/LACP | access | 140 | API management |
| procontroller01 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 1 | BAGG1111/LACP | access | 140 | API management |
| procontroller01 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 25 | BAGG25/LACP | trunk | permit vlan 140-149 | overlay |
| procontroller01 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 25 | BAGG25/LACP | trunk | permit vlan 140-149 | overlay |
| procontroller02 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.12 | B02.40U | 2 | BAGG2/LACP | access | 140 | API management |
| procontroller02 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 2 | BAGG2/LACP | access | 140 | API management |
| procontroller02 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 26 | BAGG26/LACP | trunk | permit vlan 140-149 | overlay |
| procontroller02 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 26 | BAGG26/LACP | trunk | permit vlan 140-149 | overlay |
| procontroller03 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.13 | B02.40U | 3 | BAGG3/LACP | access | 140 | API management |
| procontroller03 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 3 | BAGG3/LACP | access | 140 | API management |
| procontroller03 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 27 | BAGG27/LACP | trunk | permit vlan 140-149 | overlay |
| procontroller03 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 27 | BAGG27/LACP | trunk | permit vlan 140-149 | overlay |
| procompute01 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.21 | B02.40U | 4 | BAGG4/LACP | access | 140 | API management |
| procompute01 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 4 | BAGG4/LACP | access | 140 | API management |
| procompute01 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 28 | BAGG28/LACP | trunk | permit vlan 140-149 | overlay |
| procompute01 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 28 | BAGG28/LACP | trunk | permit vlan 140-149 | overlay |
| procompute02 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.22 | B02.40U | 5 | BAGG5/LACP | access | 140 | API management |
| procompute02 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 5 | BAGG5/LACP | access | 140 | API management |
| procompute02 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 29 | BAGG29/LACP | trunk | permit vlan 140-149 | overlay |
| procompute02 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 29 | BAGG29/LACP | trunk | permit vlan 140-149 | overlay |
| procompute03 | 10G port 1 | ens35f1 | mode4 | bond0: 10.3.140.23 | B02.40U | 6 | BAGG6/LACP | access | 140 | API management |
| procompute03 | 10G port 3 | ens47f1 | mode4 | | B03.40U | 6 | BAGG6/LACP | access | 140 | API management |
| procompute03 | 10G port 2 | ens35f0 | mode4 | bond1: no IP address | B02.40U | 30 | BAGG30/LACP | trunk | permit vlan 140-149 | overlay |
| procompute03 | 10G port 4 | ens47f0 | mode4 | | B03.40U | 30 | BAGG30/LACP | trunk | permit vlan 140-149 | overlay |
| proceph01 | 10G port 1 | enp59s0f1 | mode4 | bond0: 10.3.140.31 | B02.40U | 7 | BAGG7/LACP | access | 140 | API management |
| proceph01 | 10G port 3 | enp175s0f1 | mode4 | | B03.40U | 7 | BAGG7/LACP | access | 140 | API management |
| proceph01 | 10G port 2 | enp59s0f0 | mode4 | bond1: 10.3.141.31 | B02.40U | 31 | BAGG31/LACP | access | 141 | Dedicated storage network |
| proceph01 | 10G port 4 | enp175s0f0 | mode4 | | B03.40U | 31 | BAGG31/LACP | access | 141 | Dedicated storage network |
| proceph02 | 10G port 1 | enp59s0f1 | mode4 | bond0: 10.3.140.32 | B02.40U | 8 | BAGG8/LACP | access | 140 | API management |
| proceph02 | 10G port 3 | enp175s0f1 | mode4 | | B03.40U | 8 | BAGG8/LACP | access | 140 | API management |
| proceph02 | 10G port 2 | enp59s0f0 | mode4 | bond1: 10.3.141.32 | B02.40U | 32 | BAGG32/LACP | access | 141 | Dedicated storage network |
| proceph02 | 10G port 4 | enp175s0f0 | mode4 | | B03.40U | 32 | BAGG32/LACP | access | 141 | Dedicated storage network |
| proceph03 | 10G port 1 | enp59s0f1 | mode4 | bond0: 10.3.140.33 | B02.40U | 9 | BAGG9/LACP | access | 140 | API management |
| proceph03 | 10G port 3 | enp175s0f1 | mode4 | | B03.40U | 9 | BAGG9/LACP | access | 140 | API management |
| proceph03 | 10G port 2 | enp59s0f0 | mode4 | bond1: 10.3.141.33 | B02.40U | 33 | BAGG33/LACP | access | 141 | Dedicated storage network |
| proceph03 | 10G port 4 | enp175s0f0 | mode4 | | B03.40U | 33 | BAGG33/LACP | access | 141 | Dedicated storage network |
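On CentOS 8 the mode-4 bonds in the table above can be defined as network-scripts ifcfg files. The function below is a minimal sketch that prints the files for one LACP (802.3ad) bond and its two member ports, each file preceded by a "### path" marker. The /24 prefix comes from the plan; miimon=100 is an assumption, and no gateway or DNS lines are generated because the article does not list them. The function name is hypothetical.

```bash
#!/bin/bash
# Print ifcfg files for one LACP bond (mode 4 = 802.3ad) and its two ports.
# Usage: gen_bond_ifcfg BOND PORT1 PORT2 [IP] [PREFIX]
# Without IP the bond gets no address (bond1 on controllers and computes).
gen_bond_ifcfg() {
    local bond=$1 port1=$2 port2=$3 ip=${4:-} prefix=${5:-24}
    local dir=/etc/sysconfig/network-scripts port
    echo "### $dir/ifcfg-$bond"
    echo "DEVICE=$bond"
    echo "NAME=$bond"
    echo "TYPE=Bond"
    echo "BONDING_MASTER=yes"
    # Assumption: miimon=100 (link check every 100 ms) is not from the article
    echo 'BONDING_OPTS="mode=802.3ad miimon=100"'
    echo "BOOTPROTO=none"
    echo "ONBOOT=yes"
    if [ -n "$ip" ]; then
        echo "IPADDR=$ip"
        echo "PREFIX=$prefix"
    fi
    # Both member ports point at the bond as slaves
    for port in "$port1" "$port2"; do
        echo "### $dir/ifcfg-$port"
        echo "DEVICE=$port"
        echo "NAME=$port"
        echo "TYPE=Ethernet"
        echo "MASTER=$bond"
        echo "SLAVE=yes"
        echo "BOOTPROTO=none"
        echo "ONBOOT=yes"
    done
}
```

For procontroller01 this would be gen_bond_ifcfg bond0 ens35f1 ens47f1 10.3.140.11 and gen_bond_ifcfg bond1 ens35f0 ens47f0; on the Ceph nodes the ports are enp59s0f1/enp175s0f1 and enp59s0f0/enp175s0f0. After saving each section into its file, nmcli connection reload should pick the files up. The switch side must be an LACP aggregation (the BAGG groups above) for mode 4 to come up.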

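3.1.2 Node role planning

| Hostname | NIC | IP | VIP | Roles |
|---|---|---|---|---|
| procontroller01.pro.kxdigit.com | bond0 | 10.3.140.11 | 10.3.140.10 | Deployment server (kolla-ansible, kolla, ansible), control node, network node, storage service, monitoring node |
| procontroller02.pro.kxdigit.com | bond0 | 10.3.140.12 | 10.3.140.10 | Control node, network node, storage service |
| procontroller03.pro.kxdigit.com | bond0 | 10.3.140.13 | 10.3.140.10 | Control node, storage service, network node |
| procompute01.pro.kxdigit.com | bond0 | 10.3.140.21 | Not used | Compute node |
| procompute02.pro.kxdigit.com | bond0 | 10.3.140.22 | Not used | Compute node |
| procompute03.pro.kxdigit.com | bond0 | 10.3.140.23 | Not used | Compute node |
| proceph01.pro.kxdigit.com | bond0 | 10.3.140.31 | Not used | Storage node |
| proceph02.pro.kxdigit.com | bond0 | 10.3.140.32 | Not used | Storage node |
| proceph03.pro.kxdigit.com | bond0 | 10.3.140.33 | Not used | Storage node |

(2) Deploying the Ceph cluster

For the Ceph cluster deployment, see the article "Linux (CentOS 7.6) production environment: installing a Ceph cluster on three physical machines and integrating it with OpenStack".

(3) Deploying the OpenStack Ussuri cluster

This is an offline deployment. For how to download the OpenStack Ussuri packages and their dependencies,

see the article "OpenStack Ussuri: kolla-ansible deployment on a three-node physical cluster in an intranet and integration with a Ceph cluster".

Two kinds of artifacts are downloaded:

1. the container images

2. the dependencies needed to install the deployment node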

3.3.1 Pre-deployment preparation (on both control and compute nodes)

3.3.1.1 Disable the firewall and SELinux

[dev@10-3-170-32 base]$ ansible-playbook closefirewalldandselinux.yml

The machines must then be rebooted for the SELinux change to take effect.
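The playbook closefirewalldandselinux.yml is not reproduced in the article. As a hedged sketch of what such a step typically does on each host (an assumption, not the author's playbook), the same effect in plain shell could look like this. The config path is a parameter so the SELinux edit can be tried on a copy first; both function names are hypothetical.

```bash
#!/bin/bash
# Set SELINUX=disabled in an SELinux config file. The pattern does not touch
# the SELINUXTYPE= line. Takes effect after the reboot mentioned above.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Per-host steps (not executed when this file is sourced)
close_firewalld_and_selinux() {
    systemctl disable --now firewalld   # stop now and keep it off after reboot
    setenforce 0 || true                # permissive until reboot; fails if already disabled
    disable_selinux_config /etc/selinux/config
}
```

Running close_firewalld_and_selinux as root on each node, followed by a reboot, matches the order described above.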

3.3.1.2 Configure DNS and set hostnames

Configure the following records on the DNS server:

| Domain name | IP | Notes |
|---|---|---|
| procontroller01.pro.kxdigit.com | 10.3.140.11 | None |
| procontroller02.pro.kxdigit.com | 10.3.140.12 | None |
| procontroller03.pro.kxdigit.com | 10.3.140.13 | None |
| procompute01.pro.kxdigit.com | 10.3.140.21 | None |
| procompute02.pro.kxdigit.com | 10.3.140.22 | None |
| procompute03.pro.kxdigit.com | 10.3.140.23 | None |
| proceph01.pro.kxdigit.com | 10.3.140.31 | Already configured during the storage cluster deployment |
| proceph02.pro.kxdigit.com | 10.3.140.32 | Already configured during the storage cluster deployment |
| proceph03.pro.kxdigit.com | 10.3.140.33 | Already configured during the storage cluster deployment |
| procloud.kxdigit.com | 10.3.140.10 | Domain name of the VIP |
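If the DNS records are not in place yet, the same name-to-address mapping can be rendered as an /etc/hosts fragment as a temporary fallback. This is a minimal sketch; using /etc/hosts is an assumption and not what the article does, the host list is copied from the table above, and the function name is hypothetical.

```bash
#!/bin/bash
# Print "IP<TAB>FQDN<TAB>shortname" lines for /etc/hosts from the DNS plan.
gen_hosts_fragment() {
    local entry ip fqdn
    for entry in \
        10.3.140.10=procloud.kxdigit.com \
        10.3.140.11=procontroller01.pro.kxdigit.com \
        10.3.140.12=procontroller02.pro.kxdigit.com \
        10.3.140.13=procontroller03.pro.kxdigit.com \
        10.3.140.21=procompute01.pro.kxdigit.com \
        10.3.140.22=procompute02.pro.kxdigit.com \
        10.3.140.23=procompute03.pro.kxdigit.com \
        10.3.140.31=proceph01.pro.kxdigit.com \
        10.3.140.32=proceph02.pro.kxdigit.com \
        10.3.140.33=proceph03.pro.kxdigit.com; do
        ip=${entry%%=*}
        fqdn=${entry#*=}
        # ${fqdn%%.*} keeps everything before the first dot: the short name
        printf '%s\t%s\t%s\n' "$ip" "$fqdn" "${fqdn%%.*}"
    done
}
```

Appending the output with gen_hosts_fragment >> /etc/hosts on each node gives the same resolution until DNS is ready.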

Set the hostname:

[root@localhost ~]# hostnamectl set-hostname procontroller01.pro.kxdigit.com

[root@localhost ~]#

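The above uses one machine as an example. All 9 machines need their hostname set; the storage nodes were already configured during the Ceph installation, so only the remaining 6 need to be set here.

Update the DNS resolver address on the servers: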

[dev@10-3-170-32 base]$ ansible-playbook modifydns.yml

Edit the SSH configuration file /etc/ssh/sshd_config to disable DNS lookups at login, then restart sshd:

#UseDNS yes

UseDNS no

systemctl restart sshd
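The edit above can also be scripted so that it is safe to rerun on every node. In this minimal sketch (function name hypothetical), a commented or existing UseDNS line is rewritten to UseDNS no, or one is appended if the option is missing. Validating with sshd -t before the restart is an added precaution, not a step from the article.

```bash
#!/bin/bash
# Ensure "UseDNS no" in an sshd_config file; safe to run repeatedly.
set_usedns_no() {
    local cfg=${1:-/etc/ssh/sshd_config}
    if grep -qE '^[#[:space:]]*UseDNS[[:space:]]' "$cfg"; then
        # Rewrite "#UseDNS yes", "UseDNS yes" or an existing "UseDNS no"
        sed -i -E 's/^[#[:space:]]*UseDNS[[:space:]].*/UseDNS no/' "$cfg"
    else
        echo 'UseDNS no' >> "$cfg"
    fi
}
```

Typical use: set_usedns_no && sshd -t && systemctl restart sshd, so that a broken config is caught before sshd restarts.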

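3.3.1.3 Update the yum repositories

Update the operating system yum repositories and add the Docker repository (the second playbook adds the Ansible repository):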

[dev@10-3-170-32 base]$ ansible-playbook updateyum.yml

[dev@10-3-170-32 base]$ ansible-playbook updateansible.yml

[root@procontroller01 ~]# ll /etc/yum.repos.d

total 12

-rw-r--r-- 1 root root 501 Apr 29 14:55 CentOS-81.repo

-rw-r--r-- 1 root root 91 Apr 29 15:26 ansible2910forcentos8.repo

-rw-r--r-- 1 root root 104 Apr 29 14:55 docker1803forcentos8.repo

[root@procontroller01 ~]#

3.3.1.4 Configure time synchronization

[dev@10-3-170-32 base]$ ansible-playbook modifychronyclient.yml
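The playbook modifychronyclient.yml is not shown either. Assuming it simply points chronyd at an internal time server, a per-host equivalent could look like the sketch below. The server address is a placeholder because the article does not name it, and the function name is hypothetical.

```bash
#!/bin/bash
# Point chronyd at one NTP server: drop existing "server"/"pool" lines
# (CentOS 8 ships a "pool 2.centos.pool.ntp.org iburst" line) and add one entry.
set_chrony_server() {
    local server=$1 cfg=${2:-/etc/chrony.conf}
    sed -i -E '/^(server|pool)[[:space:]]/d' "$cfg"
    echo "server $server iburst" >> "$cfg"
}
```

After set_chrony_server <NTP_SERVER>, run systemctl restart chronyd and check the result with chronyc sources.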

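3.3.1.5 Install Docker

Install Docker on the control and compute nodes one at a time. Controller 1 is used as the example below. Only three steps are needed:

install, edit the configuration file, and enable the service at boot.

Docker 18.03 is installed here; it is required on every control and compute node.

The Docker yum repository is already configured, so yum install docker-ce is enough. The installation may fail, mainly because of dependency conflicts.

For handling Docker installation errors, see the article "OpenStack Ussuri all-in-one offline deployment".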

[root@procontroller01 ~]# yum install docker-ce

Failed to set locale, defaulting to C.UTF-8

Last metadata expiration check: 0:01:16 ago on Thu Apr 29 14:56:36 2021.

Modular dependency problems:

If this package conflict occurs, remove podman:

yum remove podman

Docker configuration changes

Add /etc/systemd/system/docker.service.d/kolla.conf:

[root@procontroller01 ~]# cd /etc/systemd/system/

[root@procontroller01 system]# mkdir docker.service.d

[root@procontroller01 system]# cd docker.service.d/

[root@procontroller01 docker.service.d]# vi kolla.conf

[root@procontroller01 docker.service.d]#

The file contains:

[Service]

MountFlags=shared
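Instead of creating the file with vi, the same drop-in can be written non-interactively, which is convenient when the step is repeated on all six nodes. This is a minimal sketch with a hypothetical function name. The target directory is a parameter only so it can be tried in a scratch location.

```bash
#!/bin/bash
# Write the kolla drop-in for docker.service with the setting from the article.
write_docker_kolla_dropin() {
    local dir=${1:-/etc/systemd/system/docker.service.d}
    mkdir -p "$dir"
    # MountFlags=shared: shared mount propagation for dockerd
    printf '[Service]\nMountFlags=shared\n' > "$dir/kolla.conf"
}
```

After write_docker_kolla_dropin, the systemctl daemon-reload and restart steps below are still required.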

Enable Docker at boot:

[root@procontroller01 docker.service.d]# systemctl daemon-reload

[root@procontroller01 docker.service.d]# systemctl restart docker && systemctl enable docker && systemctl status docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/docker.service.d

└─kolla.conf

Active: active (running) since Thu 2021-04-29 15:01:14 CST; 126ms ago

Docs: https://docs.docker.com

Main PID: 36902 (dockerd)

Tasks: 39 (limit: 32767)

Memory: 55.6M

CGroup: /system.slice/docker.service

├─36902 /usr/bin/dockerd

└─36914 docker-containerd --config /var/run/docker/containerd/containerd.toml

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.185105356+08:00" level=info msg="Graph>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.185371260+08:00" level=warning msg="Yo>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.185384710+08:00" level=warning msg="Yo>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.185806534+08:00" level=info msg="Loadi>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.272931021+08:00" level=info msg="Defau>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.331678817+08:00" level=info msg="Loadi>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.363650311+08:00" level=info msg="Docke>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.363785574+08:00" level=info msg="Daemo>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com dockerd[36902]: time="2021-04-29T15:01:14.369294430+08:00" level=info msg="API l>

Apr 29 15:01:14 procontroller01.pro.kxdigit.com systemd[1]: Started Docker Application Container Engine.

3.3.1.6 Install pip

CentOS 8.1 ships with Python 3 and installs pip3.6 by default, so only a symlink is needed.

3.3.1.6.1 Create the pip symlink

[root@procontroller01 ~]# whereis pip3.6

pip3: /usr/bin/pip3 /usr/bin/pip3.6 /usr/share/man/man1/pip3.1.gz

[root@procontroller01 ~]# ln -s /usr/bin/pip3.6 /usr/bin/pip

[root@procontroller01 ~]# pip -V

pip 9.0.3 from /usr/lib/python3.6/site-packages (python 3.6)

[root@procontroller01 ~]#

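3.3.1.7 Disable libvirt (compute nodes only; kolla runs libvirt in its own container, so the host libvirtd must not be running)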

[root@procompute03 ~]# systemctl stop libvirtd.service && systemctl disable libvirtd.service && systemctl status libvirtd.service

Removed /etc/systemd/system/multi-user.target.wants/libvirtd.service.

Removed /etc/systemd/system/sockets.target.wants/virtlogd.socket.

Removed /etc/systemd/system/sockets.target.wants/virtlockd.socket.

● libvirtd.service - Virtualization daemon

Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; disabled; vendor preset: enabled)

Active: inactive (dead) since Thu 2021-04-29 16:40:50 CST; 108ms ago

Docs: man:libvirtd(8)

https://libvirt.org

Main PID: 5342 (code=exited, status=0/SUCCESS)

CGroup: /system.slice/libvirtd.service

├─5814 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/>

└─5815 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/>

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq-dhcp[5814]: DHCP, IP range 192.168.122.2 -- 192.168.122.254, lease time 1h

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq-dhcp[5814]: DHCP, sockets bound exclusively to interface virbr0

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq[5814]: no servers found in /etc/resolv.conf, will retry

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq[5814]: read /etc/hosts - 2 addresses

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq[5814]: read /var/lib/libvirt/dnsmasq/default.addnhosts - 0 addresses

Apr 29 14:45:01 procompute03.pro.kxdigit.com dnsmasq-dhcp[5814]: read /var/lib/libvirt/dnsmasq/default.hostsfile

Apr 29 14:49:16 procompute03.pro.kxdigit.com dnsmasq[5814]: reading /etc/resolv.conf

Apr 29 14:49:16 procompute03.pro.kxdigit.com dnsmasq[5814]: using nameserver 10.3.157.201#53

Apr 29 16:40:50 procompute03.pro.kxdigit.com systemd[1]: Stopping Virtualization daemon.

3.3.1.8 Install the Docker Python SDK (required on all nodes)

[root@procompute03 ~]# tar -zxvf /tmp/dockerpython.tgz

dockerpython/

dockerpython/docker-4.2.1-py2.py3-none-any.whl

dockerpython/requests-2.25.1-py2.py3-none-any.whl

dockerpython/websocket_client-0.58.0-py2.py3-none-any.whl

dockerpython/six-1.15.0-py2.py3-none-any.whl

dockerpython/idna-2.10-py2.py3-none-any.whl

dockerpython/chardet-4.0.0-py2.py3-none-any.whl

dockerpython/certifi-2020.12.5-py2.py3-none-any.whl

dockerpython/urllib3-1.26.4-py2.py3-none-any.whl

[root@procompute03 ~]# pip install --no-index --find-links=/root/dockerpython docker==4.2.1

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Collecting docker==4.2.1

Collecting websocket-client>=0.32.0 (from docker==4.2.1)

Requirement already satisfied: requests!=2.18.0,>=2.14.2 in /usr/lib/python3.6/site-packages (from docker==4.2.1)

Requirement already satisfied: six>=1.4.0 in /usr/lib/python3.6/site-packages (from docker==4.2.1)

Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /usr/lib/python3.6/site-packages (from requests!=2.18.0,>=2.14.2->docker==4.2.1)

Requirement already satisfied: idna<2.8,>=2.5 in /usr/lib/python3.6/site-packages (from requests!=2.18.0,>=2.14.2->docker==4.2.1)

Requirement already satisfied: urllib3<1.25,>=1.21.1 in /usr/lib/python3.6/site-packages (from requests!=2.18.0,>=2.14.2->docker==4.2.1)

Installing collected packages: websocket-client, docker

Successfully installed docker-4.2.1 websocket-client-0.58.0

[root@procompute03 ~]#

3.3.2 Install the deployment tools (deployment server)

3.3.2.1 Install Ansible

3.3.2.1.1 Install Ansible

[root@procontroller01 ~]# yum install ansible

Failed to set locale, defaulting to C.UTF-8

ansible

[root@procontroller01 ~]# ansible --version

ansible 2.9.10

config file = /etc/ansible/ansible.cfg

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python3.6/site-packages/ansible

executable location = /usr/bin/ansible

python version = 3.6.8 (default, Nov 21 2019, 19:31:34) [GCC 8.3.1 20190507 (Red Hat 8.3.1-4)]

[root@procontroller01 ~]#

3.3.2.1.2 Edit the Ansible configuration file

Tune the Ansible configuration file /etc/ansible/ansible.cfg.

First back up the original file: cp /etc/ansible/ansible.cfg /etc/ansible/ansible.cfg.bak.orig

Then add the following:

[defaults]

inventory = $HOME/ansible/hosts

host_key_checking=False

pipelining=True

forks=10
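A stock CentOS ansible.cfg already contains a [defaults] header. Appending a second [defaults] section may be rejected as a duplicate section by Python's configparser, which Ansible uses to read the file. The minimal sketch below inserts the four settings under the existing header instead and skips the edit if it was already applied. The function name is hypothetical, and GNU sed is assumed.

```bash
#!/bin/bash
# Insert the tuned settings right after the existing [defaults] header.
# The first run keeps a backup as <cfg>.bak.orig.
tune_ansible_cfg() {
    local cfg=${1:-/etc/ansible/ansible.cfg}
    [ -e "$cfg.bak.orig" ] || cp "$cfg" "$cfg.bak.orig"
    # Already applied? Leave the file alone so keys are not duplicated.
    if grep -q '^pipelining=' "$cfg"; then
        return 0
    fi
    if ! grep -q '^\[defaults\]' "$cfg"; then
        echo '[defaults]' >> "$cfg"
    fi
    # $HOME is written literally, exactly as in the setting above.
    sed -i '/^\[defaults\]/a\
inventory = $HOME/ansible/hosts\
host_key_checking=False\
pipelining=True\
forks=10' "$cfg"
}
```

Running tune_ansible_cfg with no argument edits /etc/ansible/ansible.cfg in place; ansible --version should then still list that file as the config file in use.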

3.3.2.2 Install the base dependencies

CentOS 8 installs RPM packages with dnf. Install the base dependency packages python3-devel libffi-devel gcc openssl-devel python3-libselinux git vim bash-completion net-tools.

3.3.2.2.1 Installation

[root@procontroller01 ussuri]# cd dependencies/

[root@procontroller01 dependencies]# ll

total 31224

-rw-r--r-- 1 root root 280084 Jun 17 2020 bash-completion-2.7-5.el8.noarch.rpm

-rw-r--r-- 1 root root 24564532 Jun 17 2020 gcc-8.3.1-4.5.el8.x86_64.rpm

-rw-r--r-- 1 root root 190956 Jun 17 2020 git-2.18.2-2.el8_1.x86_64.rpm

-rw-r--r-- 1 root root 29396 Jun 17 2020 libffi-devel-3.1-21.el8.i686.rpm

-rw-r--r-- 1 root root 29376 Jun 17 2020 libffi-devel-3.1-21.el8.x86_64.rpm

-rw-r--r-- 1 root root 330916 Jun 17 2020 net-tools-2.0-0.51.20160912git.el8.x86_64.rpm

-rw-r--r-- 1 root root 2395376 Jun 17 2020 openssl-devel-1.1.1c-2.el8_1.1.i686.rpm

-rw-r--r-- 1 root root 2395344 Jun 17 2020 openssl-devel-1.1.1c-2.el8_1.1.x86_64.rpm

-rw-r--r-- 1 root root 290084 Jun 17 2020 python3-libselinux-2.9-2.1.el8.x86_64.rpm

-rw-r--r-- 1 root root 16570 Jun 17 2020 python36-devel-3.6.8-2.module_el8.1.0+245+c39af44f.x86_64.rpm

-rw-r--r-- 1 root root 1427224 Jun 17 2020 vim-enhanced-8.0.1763-13.el8.x86_64.rpm

[root@procontroller01 dependencies]# dnf install python3-devel libffi-devel gcc openssl-devel python3-libselinux git vim bash-completion net-tools

Failed to set locale, defaulting to C.UTF-8

3.3.2.3 Install kolla (deployment node)

[root@procontroller01 ussuri]# unzip kolla-10.1.0.zip

Archive: kolla-10.1.0.zip

fbea3bf26d93e6fd784dbd9967659f549df3ec2d

3.3.2.3.1 Offline installation

[root@procontroller01 ussuri]# cd kolla-10.1.0/

[root@procontroller01 kolla-10.1.0]# git init

Initialized empty Git repository in /root/ussuri/kolla-10.1.0/.git/

[root@procontroller01 kolla-10.1.0]#

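The empty git repository is needed because kolla is packaged with pbr, which reads version information from git and fails on an unpacked zip archive without it; this is also why kolla-build --version later reports 0.0.0.

Install the dependencies: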

[root@procontroller01 ~]# pip install --no-index --find-links=/root/software/ussuri/kollapip -r /root/software/ussuri/kolla-10.1.0/requirements.txt

WARNING: Running pip

Install kolla:

[root@procontroller01 ~]# pip install /root/software/ussuri/kolla-10.1.0/

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Processing ./software/ussuri/kolla-10.1.0

Verify:

[root@procontroller01 ~]# kolla-build --version

0.0.0

[root@procontroller01 ~]#

3.3.2.4 Install kolla-ansible (deployment node)

This article installs kolla-ansible 10.1.0.

3.3.2.4.1 Offline installation

Unpack:

[root@procontroller01 ussuri]# unzip kolla-ansible-10.1.0.zip

Archive: kolla-ansible-10.1.0.zip

6bba8cc52af3a26678da48129856f80c21eb8e38

Initialize git:

[root@procontroller01 ussuri]# cd kolla-ansible-10.1.0/

[root@procontroller01 kolla-ansible-10.1.0]# git init

Initialized empty Git repository in /root/software/ussuri/kolla-ansible-10.1.0/.git/

[root@procontroller01 kolla-ansible-10.1.0]#

Install the kolla-ansible dependencies:

[root@procontroller01 kolla-ansible-10.1.0]# pip install --no-index --find-links=/root/software/ussuri//kollaansiblepip -r /root/software/ussuri/kolla-ansible-10.1.0/requirements.txt

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Requirement already satisfied: pbr!=2.1.0,>=2.0.0 in /usr/local/lib/python3.6/site-packages (from -r /root/software/ussuri/kolla-ansible-10.1.0/requirements.txt (line 1))

Install kolla-ansible:

[root@procontroller01 kolla-ansible-10.1.0]# pip install /root/software/ussuri/kolla-ansible-10.1.0

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Processing /root/software/ussuri/kolla-ansible-10.1.0

Verify:

[root@procontroller01 kolla-ansible-10.1.0]# kolla-ansible -h

Usage: /usr/local/bin/kolla-ansible COMMAND [options]

Options:

--inventory, -i <inventory_path> Specify path to ansible inventory file

--playbook, -p <playbook_path> Specify path to ansible playbook file

--configdir <config_path> Specify path to directory with globals.yml

--key -k <key_path> Specify path to ansible vault keyfile

--help, -h Show this usage information

3.3.2.4.2 Add the kolla-ansible configuration files

[root@procontroller01 kolla-ansible-10.1.0]# mkdir -p /etc/kolla

[root@procontroller01 kolla-ansible-10.1.0]# cp -r /root/software/ussuri/kolla-ansible-10.1.0/etc/kolla/* /etc/kolla

[root@procontroller01 kolla-ansible-10.1.0]# ll /etc/kolla/

total 36

-rw-r--r-- 1 root root 25509 Apr 29 15:55 globals.yml

-rw-r--r-- 1 root root 5037 Apr 29 15:55 passwords.yml

[root@procontroller01 kolla-ansible-10.1.0]#

3.3.2.4.3 Add the Ansible inventory files

[root@procontroller01 kolla-ansible-10.1.0]# mkdir /root/ansible

[root@procontroller01 kolla-ansible-10.1.0]# cp /root/software/ussuri/kolla-ansible-10.1.0/ansible/inventory/* /root/ansible/

[root@procontroller01 kolla-ansible-10.1.0]# ll /root/ansible/

total 24

-rw-r--r-- 1 root root 9584 Apr 29 15:56 all-in-one

-rw-r--r-- 1 root root 10058 Apr 29 15:56 multinode

[root@procontroller01 kolla-ansible-10.1.0]#

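3.3.2.5 Passwordless SSH from the deployment node to all nodes

The deployment node must be able to log in to every control and compute node without a password.

Only controller 1 is shown below; repeat the same steps for the other five nodes.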

[root@procontroller01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Fol6UrRlbAcOreLvCDG3cknIk3+C8a0KeF9wIDuD4sw root@procontroller01.pro.kxdigit.com

The key's randomart image is:

+---[RSA 3072]----+

| oo+. |

| . B+.. |

| . . +o+. |

| o =.+. . |

|o %.*.o S |

|* &.O . |

|oE= B.+ |

| o = *. |

| ..+.. |

+----[SHA256]-----+

[root@procontroller01 ~]# ssh-copy-id root@10.3.140.11

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.3.140.11 (10.3.140.11)' can't be established.

ECDSA key fingerprint is SHA256:j5XQyrGFUqdRSnbrryQo7oD+SjATitiH5MC7wJTW3EQ.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
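To avoid repeating ssh-copy-id by hand, a loop over the six control and compute node addresses from the planning table can handle the remaining nodes. This is a minimal sketch with a hypothetical function name. The SSH_COPY_ID override is also a hypothetical convenience, added only to allow a dry run.

```bash
#!/bin/bash
# Copy the deployment node's public key to every control and compute node.
# Set SSH_COPY_ID=echo to print the commands instead of running them.
distribute_ssh_key() {
    local copy=${SSH_COPY_ID:-ssh-copy-id} host
    for host in 10.3.140.11 10.3.140.12 10.3.140.13 \
                10.3.140.21 10.3.140.22 10.3.140.23; do
        # Report failures but keep going with the remaining hosts
        $copy -i /root/.ssh/id_rsa.pub "root@$host" || echo "failed: $host" >&2
    done
}
```

Each host still asks for its root password once; afterwards ssh root@<host> hostname should return without a prompt.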

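3.3.3 Preparing the kolla-ansible deployment of OpenStack

The images uploaded below are Train images; Ussuri images are uploaded the same way, only the set of images and the image tag differ.

This step covers the initial configuration changes and pushing the images to the internal image registry.

The intranet here has a Docker registry served over HTTPS:

https://registry.kxdigit.com

If the registry is not served over HTTPS, Docker needs extra configuration (for example, listing it under insecure-registries in /etc/docker/daemon.json).

3.3.3.1 Push the images to the internal registry

3.3.3.1.1 Copy the images into the intranet

Create a directory on the deployment node: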

[root@procontroller01 software]# mkdir -p ./openstack/train/images/kolla

[root@procontroller01 software]# cd openstack/train/images/kolla/

[root@procontroller01 kolla]# pwd

/root/software/openstack/train/images/kolla

[root@procontroller01 kolla]#

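Importing the OpenStack images into the intranet:

(this step is omitted here)

The images have been uploaded to the deployment node, 134 images in total: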

centos-source-zun-api.tar.gz 100% 337MB 112.2MB/s 00:03

centos-source-zun-compute.tar.gz 100% 387MB 96.7MB/s 00:04

centos-source-zun-wsproxy.tar.gz 100% 337MB 112.2MB/s 00:03

[dev@10-3-170-32 kolla]$ pwd

/home/dev/software/openstack/train/images/kolla

[dev@10-3-170-32 kolla]$

[dev@10-3-170-32 kolla]$ ls | grep gz |wc -l

134

[dev@10-3-170-32 kolla]$

3.3.3.1.2 Decompress the image archives

Decompression script:

#!/bin/sh
# Decompress every *.tar.gz archive in place; each one becomes a *.tar file
for f in /root/software/openstack/train/images/kolla/*.tar.gz; do
    gzip -d "$f"
done

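3.3.3.1.3 Load the images in bulk

Script:

[root@procontroller01 scripts]# cat dockerload.sh
#!/bin/bash
# Usage: ./dockerload.sh DIR   (DIR holds the decompressed *.tar images)
path=$1
cd "$path" || exit 1
for filename in *.tar
do
    echo "$filename"
    docker load < "$filename"
done
[root@procontroller01 scripts]#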

Run it:

[root@procontroller01 scripts]# ./dockerload.sh /root/software/openstack/train/images/kolla/

centos-source-aodh-api.tar

174f56854903: Loading layer [==================================================>] 211.7MB/211.7MB

1f66661b2a8e: Loading layer [==================================================>] 84.99kB/84.99kB

Check that all images have been loaded on the deployment node:

[root@procontroller01 kolla]# docker images | grep train | wc -l

134

[root@procontroller01 kolla]#

[root@procontroller01 kolla]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kolla/centos-source-nova-compute train bb089a9b5fdf 4 weeks ago 2.08GB

kolla/centos-source-horizon train a4b1597d0c85 4 weeks ago 1.19GB

3.3.3.1.4 Push to the internal Docker registry

[root@procontroller01 scripts]# for i in `docker images|grep -v registry | grep train|awk '{print $1}'`;do docker image tag $i:train registry.kxdigit.com/$i:train;done

[root@procontroller01 scripts]#

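A comparison showed that the command above skipped one image: grep -v registry, meant to skip images already tagged for registry.kxdigit.com, also filters out kolla/centos-source-glance-registry because its name contains "registry". Tag that image separately: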

[root@procontroller01 kolla]# docker images | grep centos-source-glance-registry

kolla/centos-source-glance-registry train f59b22154362 4 weeks ago 1.02GB

[root@procontroller01 kolla]# docker images|grep centos-source-glance-registry | grep train|awk '{print $1}'

kolla/centos-source-glance-registry

[root@procontroller01 kolla]# for i in `docker images|grep centos-source-glance-registry | grep train|awk '{print $1}'`;do docker image tag $i:train registry.kxdigit.com/$i:train;done

[root@procontroller01 kolla]#

Log in to the private registry first:

[root@procontroller01 kolla]# docker login -u admin -p passwordxxx registry.kxdigit.com

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Push all images to the private registry:

for i in ` docker images | grep kxdigit |awk '{print $1}'`;do docker push $i;done
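The tag and push steps above can be combined into one pass that cannot drop glance-registry. Instead of excluding names that contain "registry", the sketch selects references that start with kolla/ and carry the wanted tag. It only prints the docker commands, so the list can be reviewed before anything runs. The function name is hypothetical.

```bash
#!/bin/bash
# Read image references (REPO:TAG, one per line) on stdin and print the
# docker commands that retag them for REGISTRY and push them.
# Images already named REGISTRY/kolla/... do not match "kolla/*" and are skipped.
plan_retag_push() {
    local registry=$1 tag=$2 ref
    while read -r ref; do
        case $ref in
            kolla/*:"$tag")
                echo "docker image tag $ref $registry/$ref"
                echo "docker push $registry/$ref"
                ;;
        esac
    done
}
```

Usage: docker images --format '{{.Repository}}:{{.Tag}}' | plan_retag_push registry.kxdigit.com train > push.sh, review push.sh, then run sh push.sh. For the login itself, the warning above suggests docker login -u admin --password-stdin registry.kxdigit.com, which reads the password from standard input instead of the command line.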

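3.3.3.2 Generate the password file and change the login password (deployment node)

First generate the full set of passwords with kolla-genpwd. Then, to make logging in to the dashboard easier, change keystone_admin_password to Admin_PASS2021: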

[root@procontroller01 scripts]# cd /etc/kolla/

[root@procontroller01 kolla]# ll

total 36

-rw-r--r--. 1 root root 24901 Apr 27 11:07 globals.yml

-rw-r--r--. 1 root root 5217 Apr 27 11:07 passwords.yml

[root@procontroller01 kolla]# kolla-genpwd

[root@procontroller01 kolla]# ll

total 56

-rw-r--r--. 1 root root 24901 Apr 27 11:07 globals.yml

-rw-r--r--. 1 root root 25916 Apr 27 17:42 passwords.yml

[root@procontroller01 kolla]# vim passwords.yml

[root@procontroller01 kolla]#

keepalived_password: 02u493LqhiQ8RXp0Zux4bEeZs0sr0AbxfFjb6CvZ

keystone_admin_password: Admin_PASS2021

keystone_database_password: DjD2mvrS0sgii9Wriz8emSBAekdBNXjDhjwwaa98
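Editing passwords.yml in vim works, but for repeatable runs the keystone_admin_password line can also be replaced from a script. In this minimal sketch (function name hypothetical), the password reaches awk through the environment, so characters such as &, / or \ need no escaping. A password containing YAML-special sequences (for example ": " or a leading #) would still need quoting in the file.

```bash
#!/bin/bash
# Replace the keystone_admin_password value in a kolla passwords.yml.
# Run after kolla-genpwd; every other line is copied unchanged.
set_keystone_admin_password() {
    local new=$1 file=${2:-/etc/kolla/passwords.yml}
    PW=$new awk '
        /^keystone_admin_password:/ { print "keystone_admin_password: " ENVIRON["PW"]; next }
        { print }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Example: set_keystone_admin_password 'Admin_PASS2021', then grep keystone_admin_password /etc/kolla/passwords.yml to confirm.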

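3.3.3.3 Edit the multinode inventory (deployment node)

The main changes are in these groups:

[control]

[network]

[compute]

[monitoring]

[storage]

I have not changed this one for now; I will first test whether it works as is:

[nova-compute-ironic:children]: change to compute

Only the modified parts are shown: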

[root@procontroller01 ansible]# cat multinode

[control]

# These hostname must be resolvable from your deployment host

#control01

#control02

#control03

procontroller01.pro.kxdigit.com

procontroller02.pro.kxdigit.com

procontroller03.pro.kxdigit.com

# The above can also be specified as follows:

#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run

# This can be the same as a host in the control group

[network]

#network01

#network02

procontroller01.pro.kxdigit.com

procontroller02.pro.kxdigit.com

procontroller03.pro.kxdigit.com

[compute]

#compute01

procompute01.pro.kxdigit.com

procompute02.pro.kxdigit.com

procompute03.pro.kxdigit.com

[monitoring]

#monitoring01

procontroller01.pro.kxdigit.com

# When compute nodes and control nodes use different interfaces,

# you need to comment out "api_interface" and other interfaces from the globals.yml

# and specify like below:

#compute01 neutron_external_interface=eth0 api_interface=em1 storage_interface=em1 tunnel_interface=em1

[storage]

#storage01

procontroller01.pro.kxdigit.com

procontroller02.pro.kxdigit.com

procontroller03.pro.kxdigit.com

[deployment]

localhost ansible_connection=local

[nova-compute-ironic:children]

#nova

compute
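The inventory comment notes that hostnames must be resolvable from the deployment host, so it helps to confirm this before running kolla-ansible. This is a minimal sketch (function name hypothetical) that lists the hosts of one group in an INI inventory like the multinode file above, skipping comments and blank lines. Pairing it with getent is an assumption about how to run the check, not a kolla-ansible feature.

```bash
#!/bin/bash
# Print the hosts of one group in an INI-style Ansible inventory:
# lines after "[GROUP]" up to the next header, without comments or blanks.
# Only the first field is printed, so per-host variables are dropped.
inventory_group() {
    local file=$1 group=$2
    awk -v hdr="[$group]" '
        /^\[/ { in_group = ($0 == hdr); next }
        in_group && NF && $1 !~ /^#/ { print $1 }
    ' "$file"
}
```

Example check: for h in $(inventory_group /root/ansible/multinode compute); do getent hosts "$h" >/dev/null || echo "unresolved: $h"; done. A broader connectivity test is ansible -i /root/ansible/multinode all -m ping.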

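3.3.3.4 Edit globals.yml (deployment node)

Skipped for now: this file also has to be changed for the Ceph integration, so both sets of changes are made together in 3.3.4.1.

3.3.4 Integrating OpenStack with Ceph

3.3.4.1 Edit globals.yml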

[root@procontroller01 ansible]# cat /etc/kolla/globals.yml |grep -v "^#" | grep -v ^$

---

kolla_base_distro: "centos"

kolla_install_type: "source"

openstack_release: "ussuri"

kolla_internal_vip_address: "10.3.140.10"

docker_registry: registry.kxdigit.com

network_interface: "bond0"

tunnel_interface: "{{ network_interface }}"

neutron_external_interface: "bond1"

neutron_plugin_agent: "openvswitch"

openstack_logging_debug: "True"

enable_openstack_core: "yes"

enable_haproxy: "yes"

enable_cinder: "yes"

enable_manila_backend_cephfs_native: "yes"

ceph_glance_keyring: "ceph.client.glance.keyring"

ceph_glance_user: "glance"

ceph_glance_pool_name: "images"

ceph_cinder_keyring: "ceph.client.cinder.keyring"

ceph_cinder_user: "cinder"

ceph_cinder_pool_name: "volumes"

ceph_cinder_backup_keyring: "ceph.client.cinder-backup.keyring"

ceph_cinder_backup_user: "cinder-backup"

ceph_cinder_backup_pool_name: "backups"

ceph_nova_keyring: "{{ ceph_cinder_keyring }}"

ceph_nova_user: "cinder"

ceph_nova_pool_name: "vms"

glance_backend_ceph: "yes"

cinder_backend_ceph: "yes"

nova_backend_ceph: "yes"

nova_compute_virt_type: "qemu"

[root@procontroller01 ansible]#
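Because globals.yml is edited by hand, a quick check that the key values listed above are really present, and not still commented out, can prevent a failed deploy. This is a minimal sketch with a hypothetical function name. The expected list is copied from the output above and covers only part of the file.

```bash
#!/bin/bash
# Compare selected key/value pairs with the first uncommented line for each
# key in globals.yml. Prints one line per mismatch; returns 1 if any.
check_globals() {
    local file=${1:-/etc/kolla/globals.yml} rc=0 kv key want have
    for kv in \
        'openstack_release="ussuri"' \
        'kolla_internal_vip_address="10.3.140.10"' \
        'docker_registry=registry.kxdigit.com' \
        'network_interface="bond0"' \
        'neutron_external_interface="bond1"' \
        'glance_backend_ceph="yes"' \
        'cinder_backend_ceph="yes"' \
        'nova_backend_ceph="yes"'; do
        key=${kv%%=*}
        want=${kv#*=}
        # Value after "key:" on an uncommented line, trailing blanks removed
        have=$(sed -n "s/^$key:[[:space:]]*//p" "$file" | head -n 1 | sed 's/[[:space:]]*$//')
        if [ "$have" != "$want" ]; then
            echo "$key: expected $want, found ${have:-<unset>}"
            rc=1
        fi
    done
    return "$rc"
}
```

Running check_globals before kolla-ansible prechecks prints nothing and returns 0 when all listed values match.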

3.3.4.2 Check the Ceph status

[cephadmin@proceph01 ~]$ ceph -s

cluster:

id: ad0bf159-1b6f-472b-94de-83f713c339a3

health: HEALTH_OK

services:

mon: 3 daemons, quorum proceph01,proceph02,proceph03 (age 2d)

mgr: proceph01(active, since 2d), standbys: proceph02, proceph03

osd: 18 osds: 18 up (since 2d), 18 in (since 2d)

data:

pools: 4 pools, 544 pgs

objects: 4 objects, 76 B

usage: 18 GiB used, 131 TiB / 131 TiB avail

pgs: 544 active+clean

[cephadmin@proceph01 ~]$

[cephadmin@proceph01 ~]$ ceph health detail

HEALTH_OK

[cephadmin@proceph01 ~]$

[cephadmin@proceph01 ~]$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 130.98395 root default

-3 43.66132 host proceph01

0 hdd 7.27689 osd.0 up 1.00000 1.00000

1 hdd 7.27689 osd.1 up 1.00000 1.00000

2 hdd 7.27689 osd.2 up 1.00000 1.00000

3 hdd 7.27689 osd.3 up 1.00000 1.00000

4 hdd 7.27689 osd.4 up 1.00000 1.00000

5 hdd 7.27689 osd.5 up 1.00000 1.00000

-5 43.66132 host proceph02

6 hdd 7.27689 osd.6 up 1.00000 1.00000

7 hdd 7.27689 osd.7 up 1.00000 1.00000

8 hdd 7.27689 osd.8 up 1.00000 1.00000

9 hdd 7.27689 osd.9 up 1.00000 1.00000

10 hdd 7.27689 osd.10 up 1.00000 1.00000

11 hdd 7.27689 osd.11 up 1.00000 1.00000

-7 43.66132 host proceph03

12 hdd 7.27689 osd.12 up 1.00000 1.00000

13 hdd 7.27689 osd.13 up 1.00000 1.00000

14 hdd 7.27689 osd.14 up 1.00000 1.00000

15 hdd 7.27689 osd.15 up 1.00000 1.00000

16 hdd 7.27689 osd.16 up 1.00000 1.00000

17 hdd 7.27689 osd.17 up 1.00000 1.00000

[cephadmin@proceph01 ~]$

3.3.4.3 Configure Glance to use Ceph

3.3.4.3.1 Generate ceph.client.glance.keyring

Generate the ceph.client.glance.keyring file on the Ceph deployment node:

[cephadmin@proceph01 ~]$ ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQCYS4VgKKk7MRAAQZkwE3ISG1J+jsN1AcpqRg==

[cephadmin@proceph01 ~]$ ll /etc/ceph/

total 16

-rw-------. 1 cephadmin cephadmin 151 Apr 23 18:01 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Apr 27 19:03 ceph.client.glance.keyring

-rw-r--r-- 1 root root 308 Apr 25 16:57 ceph.conf

-rw-r--r--. 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw-------. 1 cephadmin cephadmin 0 Apr 23 17:58 tmpd8sfbW

[cephadmin@proceph01 ~]$
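The get-or-create command above passes no capabilities. Unless client.glance, client.cinder and client.cinder-backup already received caps during the Ceph deployment (ceph auth get client.glance shows them), the OpenStack services will not be able to use their pools. The sketch below uses the capability strings from the Ceph Nautilus guide on block devices and OpenStack, with the pool names from globals.yml (images, volumes, vms, backups). The function name is hypothetical, and setting CEPH=echo turns it into a dry run.

```bash
#!/bin/bash
# Create the OpenStack client users with RBD capabilities.
# Set CEPH=echo to print the commands instead of running them.
create_openstack_ceph_clients() {
    local ceph=${CEPH:-ceph}
    $ceph auth get-or-create client.glance \
        mon 'profile rbd' \
        osd 'profile rbd pool=images' \
        mgr 'profile rbd pool=images'
    # Cinder's key is also used by nova (ceph_nova_user: cinder), hence vms
    $ceph auth get-or-create client.cinder \
        mon 'profile rbd' \
        osd 'profile rbd pool=volumes, profile rbd pool=vms, profile rbd-read-only pool=images' \
        mgr 'profile rbd pool=volumes, profile rbd pool=vms'
    $ceph auth get-or-create client.cinder-backup \
        mon 'profile rbd' \
        osd 'profile rbd pool=backups' \
        mgr 'profile rbd pool=backups'
}
```

If a user already exists with different caps, get-or-create typically refuses to change them; in that case ceph auth caps client.glance mon 'profile rbd' osd 'profile rbd pool=images' mgr 'profile rbd pool=images' replaces the caps in place. The existing keys stay valid either way.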

3.3.4.3.2 Create /etc/kolla/config/glance on the kolla-ansible deployment node

[root@procontroller01 ~]# mkdir -p /etc/kolla/config/glance

[root@procontroller01 ~]# cd /etc/kolla/config/glance/

[root@procontroller01 glance]# ll

total 0

[root@procontroller01 glance]# pwd

/etc/kolla/config/glance

[root@procontroller01 glance]#

3.3.4.3.3 Copy ceph.conf and ceph.client.glance.keyring from the Ceph deployment node to /etc/kolla/config/glance on the OpenStack deployment node

[cephadmin@proceph01 ceph]$ scp /etc/ceph/ceph.conf root@10.3.140.11:/etc/kolla/config/glance/

root@10.3.140.11's password:

ceph.conf 100% 308 588.9KB/s 00:00

[cephadmin@proceph01 ceph]$

Edit the copied ceph.conf so that only the following remains:

[global]

fsid = ad0bf159-1b6f-472b-94de-83f713c339a3

mon_host = 10.3.140.31,10.3.140.32,10.3.140.33
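Trimming the file by hand works; the same reduction can also be scripted. Below is a minimal sketch (the function name `trim_ceph_conf` is illustrative) that keeps only the `[global]` section's `fsid` and `mon_host` lines. It treats `mon host` and `mon_host` as the same option, since Ceph accepts either spelling.

```shell
#!/bin/sh
# Sketch: keep only the [global] fsid and mon_host settings of a ceph.conf.
# Reads the files given as arguments (or stdin) and prints the trimmed config.
trim_ceph_conf() {
  awk '
    # A section header such as "[global]" switches the current section.
    /^[ \t]*\[/ {
      section = substr($0, index($0, "[") + 1)
      section = substr(section, 1, index(section, "]") - 1)
      next
    }
    section == "global" {
      # Normalise the key: Ceph treats "mon host" and "mon_host" as the same option.
      key = $0
      sub(/=.*/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/[ \t]+/, "_", key)
      if (key == "fsid" || key == "mon_host") print
    }
  ' "$@" | { printf '[global]\n'; cat; }
}
```

For example, `trim_ceph_conf /etc/ceph/ceph.conf > /tmp/ceph.conf` produces the three-line file shown above, ready to copy to /etc/kolla/config/glance/.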

3.3.4.4 Configure cinder to use ceph

3.3.4.4.1 Generate the ceph.client.cinder.keyring and ceph.client.cinder-backup.keyring files on the ceph deploy node

[cephadmin@proceph01 ceph]$ ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQCrS4VgBJhtDhAA5X+b5N7GJMOK/4p12IwRBg==

[cephadmin@proceph01 ceph]$ ceph auth get-or-create client.cinder-backup | tee /etc/ceph/ceph.client.cinder-backup.keyring

[client.cinder-backup]

key = AQC5S4VgmMBDIRAA6xnBwr04Ik0lmn0WIoD20Q==

[cephadmin@proceph01 ceph]$ ll /etc/ceph/

total 24

-rw-------. 1 cephadmin cephadmin 151 Apr 23 18:01 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 71 Apr 27 19:13 ceph.client.cinder-backup.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Apr 27 19:13 ceph.client.cinder.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Apr 27 19:03 ceph.client.glance.keyring

-rw-r--r-- 1 root root 308 Apr 25 16:57 ceph.conf

-rw-r--r--. 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw-------. 1 cephadmin cephadmin 0 Apr 23 17:58 tmpd8sfbW

[cephadmin@proceph01 ceph]$
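The cinder and cinder-backup users above are also created without capabilities. Following the same Ceph RBD/OpenStack guide, `client.cinder` (which this guide also hands to nova in 3.3.4.5.2) needs RBD access to the volume and VM pools plus read-only access to the image pool, and `client.cinder-backup` needs the backup pool. This is a sketch assuming kolla-ansible's default pool names `volumes`, `vms`, `images` and `backups`. The function and environment variable names are illustrative.

```shell
#!/bin/sh
# Sketch: give client.cinder and client.cinder-backup RBD caps in place.
# Assumed pool names (kolla-ansible defaults): volumes, vms, images, backups.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

grant_cinder_caps() {
  local volumes="${VOLUMES_POOL:-volumes}" vms="${VMS_POOL:-vms}"
  local images="${IMAGES_POOL:-images}" backups="${BACKUPS_POOL:-backups}"
  # client.cinder serves cinder-volume and, in this guide, nova: it needs the
  # volume and VM pools, plus read-only access to images for cloning.
  run ceph auth caps client.cinder \
    mon 'profile rbd' \
    osd "profile rbd pool=${volumes}, profile rbd pool=${vms}, profile rbd-read-only pool=${images}" || return 1
  # client.cinder-backup only needs the backup pool.
  run ceph auth caps client.cinder-backup \
    mon 'profile rbd' \
    osd "profile rbd pool=${backups}"
}
```

As with glance, the keys themselves are unchanged, so the keyring files listed above remain valid.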

3.3.4.4.2 Create the cinder configuration directories on the kolla-ansible deploy node

[root@procontroller01 glance]# mkdir -p /etc/kolla/config/cinder/cinder-volume/

[root@procontroller01 glance]# mkdir -p /etc/kolla/config/cinder/cinder-backup

[root@procontroller01 glance]# ll /etc/kolla/config/

total 0

drwxr-xr-x. 4 root root 48 Apr 27 19:14 cinder

drwxr-xr-x. 2 root root 57 Apr 27 19:14 glance

[root@procontroller01 glance]# ll /etc/kolla/config/cinder/

total 0

drwxr-xr-x. 2 root root 6 Apr 27 19:14 cinder-backup

drwxr-xr-x. 2 root root 6 Apr 27 19:14 cinder-volume

[root@procontroller01 glance]#

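3.3.4.4.3 Copy the cinder keyrings to the kolla-ansible deploy node

cinder-backup needs both keyrings, so ceph.client.cinder.keyring goes into both the cinder-volume and the cinder-backup directories.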

[cephadmin@proceph01 ceph]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.140.11:/etc/kolla/config/cinder/cinder-backup/

root@10.3.140.11's password:

ceph.client.cinder.keyring 100% 64 121.4KB/s 00:00

[cephadmin@proceph01 ceph]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.140.11:/etc/kolla/config/cinder/cinder-volume/

root@10.3.140.11's password:

ceph.client.cinder.keyring 100% 64 124.8KB/s 00:00

[cephadmin@proceph01 ceph]$ scp /etc/ceph/ceph.client.cinder-backup.keyring root@10.3.140.11:/etc/kolla/config/cinder/cinder-backup/

root@10.3.140.11's password:

ceph.client.cinder-backup.keyring 100% 71 141.6KB/s 00:00

[cephadmin@proceph01 ceph]$

3.3.4.4.4 Add ceph.conf to the cinder configuration directory

The ceph.conf content is the same as in 3.3.4.3.3, so simply copy the trimmed file over.

[root@procontroller01 glance]# cp /etc/kolla/config/glance/ceph.conf /etc/kolla/config/cinder/

[root@procontroller01 glance]# ll /etc/kolla/config/cinder/

total 4

-rw-r--r--. 1 root root 100 Apr 27 19:19 ceph.conf

drwxr-xr-x. 2 root root 81 Apr 27 19:17 cinder-backup

drwxr-xr-x. 2 root root 40 Apr 27 19:17 cinder-volume

[root@procontroller01 glance]#

3.3.4.5 Configure nova to use ceph

3.3.4.5.1 Create /etc/kolla/config/nova on the kolla-ansible deploy node

[root@procontroller01 glance]# mkdir -p /etc/kolla/config/nova

[root@procontroller01 glance]# ll /etc/kolla/config/

total 0

drwxr-xr-x. 4 root root 65 Apr 27 19:19 cinder

drwxr-xr-x. 2 root root 57 Apr 27 19:14 glance

drwxr-xr-x. 2 root root 6 Apr 27 19:20 nova

[root@procontroller01 glance]#

3.3.4.5.2 Copy ceph.client.cinder.keyring to /etc/kolla/config/nova

[cephadmin@proceph01 ceph]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.140.11:/etc/kolla/config/nova/

root@10.3.140.11's password:

ceph.client.cinder.keyring 100% 64 126.0KB/s 00:00

[cephadmin@proceph01 ceph]$
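The keyring copies in 3.3.4.3.3, 3.3.4.4.3 and 3.3.4.5.2 follow one pattern: each keyring goes to every /etc/kolla/config directory whose service uses it, including ceph.client.glance.keyring for glance. Below is a sketch that does all of them in one loop. It assumes the kolla-ansible deploy node is root@10.3.140.11 as above and that the keyrings live in /etc/ceph. `copy_keyrings`, `KOLLA_HOST`, `CEPH_DIR` and `DRY_RUN` are illustrative names.

```shell
#!/bin/sh
# Sketch: copy each keyring to every /etc/kolla/config directory that needs it,
# following the layout used in this guide. Set DRY_RUN=1 to print the commands.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

copy_keyrings() {
  local target="${KOLLA_HOST:-root@10.3.140.11}" src="${CEPH_DIR:-/etc/ceph}" pair file dest
  # Each entry is <keyring file>:<directory under /etc/kolla/config>.
  for pair in \
      ceph.client.glance.keyring:glance \
      ceph.client.cinder.keyring:cinder/cinder-volume \
      ceph.client.cinder.keyring:cinder/cinder-backup \
      ceph.client.cinder-backup.keyring:cinder/cinder-backup \
      ceph.client.cinder.keyring:nova; do
    file="${pair%%:*}"
    dest="${pair#*:}"
    run scp "${src}/${file}" "${target}:/etc/kolla/config/${dest}/" || return 1
  done
}
```

Each scp still prompts for the root password unless SSH keys are set up between the two nodes.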

3.3.4.5.3 Configure ceph.conf

[root@procontroller01 glance]# cp /etc/kolla/config/glance/ceph.conf /etc/kolla/config/nova/

[root@procontroller01 glance]# ll /etc/kolla/config/nova/

total 8

-rw-r--r--. 1 root root 64 Apr 27 19:21 ceph.client.cinder.keyring

-rw-r--r--. 1 root root 100 Apr 27 19:23 ceph.conf

[root@procontroller01 glance]#
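Before running prechecks, it can help to confirm that every file in this layout is present and non-empty. Below is a minimal sketch. The file list mirrors the layout used in this guide (kolla-ansible's external-Ceph setup also expects the glance keyring next to glance's ceph.conf), and `check_kolla_ceph_files` is an illustrative name.

```shell
#!/bin/sh
# Sketch: report missing or empty Ceph files under the kolla custom config dir.
# Returns non-zero if anything is missing. The list follows this guide's layout.
check_kolla_ceph_files() {
  local root="${1:-/etc/kolla/config}" missing=0 f
  for f in \
      glance/ceph.conf \
      glance/ceph.client.glance.keyring \
      cinder/ceph.conf \
      cinder/cinder-volume/ceph.client.cinder.keyring \
      cinder/cinder-backup/ceph.client.cinder.keyring \
      cinder/cinder-backup/ceph.client.cinder-backup.keyring \
      nova/ceph.conf \
      nova/ceph.client.cinder.keyring; do
    if [ ! -s "${root}/${f}" ]; then
      printf 'missing or empty: %s\n' "${root}/${f}"
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}
```

Running `check_kolla_ceph_files` with no argument checks /etc/kolla/config and prints one line per problem.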

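3.3.4.5.4 Configure nova.conf

hw_disk_discard = unmap enables TRIM (discard) for guest disks.

disk_cachemodes="network=writeback" sets the cache mode of the instances' network (RBD) disks to writeback.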

[root@procontroller01 glance]# vim /etc/kolla/config/nova/nova.conf

[root@procontroller01 glance]#

Its content:

[root@procontroller01 glance]# cat /etc/kolla/config/nova/nova.conf

[libvirt]

hw_disk_discard = unmap

disk_cachemodes="network=writeback"

cpu_mode=host-passthrough

[root@procontroller01 glance]#
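The override can also be written non-interactively. This sketch writes the same three [libvirt] options, with comments, into the nova override directory; kolla-ansible merges /etc/kolla/config/nova/nova.conf into the nova.conf it generates. `write_nova_override` is an illustrative name.

```shell
#!/bin/sh
# Sketch: write this guide's nova override file; kolla-ansible merges it into
# the nova.conf it generates for the nova services.
write_nova_override() {
  local dir="${1:-/etc/kolla/config/nova}"
  mkdir -p "$dir" || return 1
  cat > "${dir}/nova.conf" <<'EOF'
[libvirt]
# Pass guest discard (TRIM) requests down to the RBD image.
hw_disk_discard = unmap
# Writeback cache mode for network-backed (RBD) disks.
disk_cachemodes = "network=writeback"
# Expose the host CPU model and feature flags to guests.
cpu_mode = host-passthrough
EOF
}
```

The Ceph RBD/OpenStack guide notes that discard only reaches RBD when the guest disk is on the virtio-scsi bus, for example by setting image properties with `openstack image set --property hw_scsi_model=virtio-scsi --property hw_disk_bus=scsi <image>`.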

3.3.5 Install OpenStack Ussuri with kolla-ansible

3.3.5.1 Prechecks

[root@procontroller01 kolla]# kolla-ansible -v -i /root/ansible/multinode prechecks

3.3.5.2 Pull the images

[root@procontroller01 kolla]# kolla-ansible -v -i /root/ansible/multinode pull

Pulling Docker images : ansible-playbook -i /root/ansible/multinode -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=pull /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

3.3.5.3 Deploy

[root@procontroller01 kolla]# kolla-ansible -v -i /root/ansible/multinode deploy
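Prechecks, pull, deploy and post-deploy (3.3.5.5) run in this order against the same inventory, so they can be chained so that a failing stage stops the rest. This is a sketch using the inventory path above; `kolla_deploy_all`, `INVENTORY` and `DRY_RUN` are illustrative names.

```shell
#!/bin/sh
# Sketch: run the kolla-ansible stages of 3.3.5 in order; stop at the first failure.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

kolla_deploy_all() {
  local inventory="${INVENTORY:-/root/ansible/multinode}" stage
  for stage in prechecks pull deploy post-deploy; do
    if ! run kolla-ansible -v -i "$inventory" "$stage"; then
      printf 'kolla-ansible %s failed\n' "$stage" >&2
      return 1
    fi
  done
}
```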

3.3.5.4 Install openstackclient (on the controller/compute nodes)

[root@procontroller01 openstackclient]# pip install --no-index --find-links=/root/software/ussuri/openstackclient python_openstackclient==5.2.0

3.3.5.5 Generate the local admin environment file (post-deploy)

[root@procontroller01 openstackclient]# kolla-ansible -v -i /root/ansible/multinode post-deploy

Post-Deploying Playbooks : ansible-playbook -i /root/ansible/multinode -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla /usr/local/share/kolla-ansible/ansible/post-deploy.yml --verbose

[root@procontroller01 openstackclient]# source /etc/kolla/admin-openrc.sh

[root@procontroller01 openstackclient]# openstack server list

[root@procontroller01 openstackclient]# openstack nova list

openstack: 'nova list' is not an openstack command. See 'openstack --help'.

Did you mean one of these?

quota list

quota set

quota show

[root@procontroller01 openstackclient]#
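`nova list` is a command of the legacy nova client; in the unified client the equivalent is `openstack server list`, which is run just above. Below is a sketch of a few post-deploy smoke checks with the unified client, assuming admin-openrc.sh has been sourced. All subcommands are standard `openstack` commands; `smoke_check` and `DRY_RUN` are illustrative names.

```shell
#!/bin/sh
# Sketch: post-deploy smoke checks with the unified openstack client.
# Assumes admin-openrc.sh has already been sourced.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

smoke_check() {
  local sub
  for sub in "server list" "compute service list" "network agent list" \
             "volume service list" "hypervisor list"; do
    # $sub is left unquoted on purpose so it splits into subcommand words.
    run openstack $sub || return 1
  done
}
```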

3.3.6 Implement VLAN networking

3.3.6.1 Before deployment

[root@procontroller01 templates]# pwd

/usr/local/share/kolla-ansible/ansible/roles/neutron/templates

[root@procontroller01 templates]#

[root@procontroller01 templates]# cp ml2_conf.ini.j2 ml2_conf.ini.j2.bak.orig

[root@procontroller01 templates]# vim ml2_conf.ini.j2

Change the [ml2_type_vlan] block to:

[ml2_type_vlan]

{% if enable_ironic | bool %}

network_vlan_ranges = physnet1

{% else %}

network_vlan_ranges = physnet1:140:149

{% endif %}
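Each network_vlan_ranges entry is either `<physnet>:<vlan_min>:<vlan_max>`, which also makes that VLAN range allocatable to tenant networks, or a bare `<physnet>`, usable only for provider networks. Here is a hypothetical helper (`valid_vlan_range`) that checks an entry before it goes into the template, with VLAN IDs limited to 1..4094.

```shell
#!/bin/sh
# Sketch: validate one network_vlan_ranges entry ("physnet1:140:149" or "physnet1").
valid_vlan_range() {
  local physnet rest min max
  case "$1" in
    '') return 1 ;;
    *:*) ;;           # has a range, checked below
    *) return 0 ;;    # bare physnet name: provider networks only
  esac
  physnet="${1%%:*}"
  rest="${1#*:}"
  min="${rest%%:*}"
  max="${rest#*:}"
  [ -n "$physnet" ] || return 1
  # The part after the physnet must be exactly "min:max".
  [ "$min" != "$rest" ] || return 1
  case "$min" in ''|*[!0-9]*) return 1 ;; esac
  case "$max" in ''|*[!0-9]*) return 1 ;; esac
  # VLAN IDs are 1..4094 and the range must be ordered.
  [ "$min" -ge 1 ] && [ "$max" -le 4094 ] && [ "$min" -le "$max" ]
}
```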

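If the cluster was already deployed in the previous step and holds no data yet, you can destroy it and redeploy so that the new configuration files are pushed out.

Run this step with caution: editing the deployed configuration files directly (3.3.6.2) also works.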

[root@procontroller01 ~]# kolla-ansible -v -i /root/ansible/multinode destroy --yes-i-really-really-mean-it

Destroy Kolla containers, volumes and host configuration : ansible-playbook -i /root/ansible/multinode -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla /usr/local/share/kolla-ansible/ansible/destroy.yml --verbose

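3.3.6.2 Edit files after deployment

3.3.6.2.1 Configuration file on the controller (network) nodes

The change from the first step can also be made by hand here instead.

/etc/kolla/neutron-server/ml2_conf.ini

The main change is this line: tenant_network_types = vxlan,vlan,flat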

[root@procontroller01 neutron-server]# cat ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan,vlan,flat

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_vlan]

network_vlan_ranges = physnet1:140:149

[ml2_type_flat]

flat_networks = physnet1

[ml2_type_vxlan]

vni_ranges = 1:1000

Restart the services on the controller node:

docker restart neutron_server neutron_openvswitch_agent
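Files under /etc/kolla/neutron-server/ are regenerated by kolla-ansible on the next deploy or reconfigure, so a manual edit there can be lost. kolla-ansible merges /etc/kolla/config/neutron/ml2_conf.ini into the generated file, which makes it a more durable alternative to both the template edit in 3.3.6.1 and the manual edit here. Below is a sketch that writes such an override with the values used in this guide. `write_ml2_override` is an illustrative name, and it overwrites any existing override file.

```shell
#!/bin/sh
# Sketch: write an ml2_conf.ini override; kolla-ansible merges
# /etc/kolla/config/neutron/ml2_conf.ini into the generated ml2_conf.ini.
# Values default to the ones used in this guide. Overwrites an existing file.
write_ml2_override() {
  local dir="${1:-/etc/kolla/config/neutron}" ranges="${2:-physnet1:140:149}"
  mkdir -p "$dir" || return 1
  cat > "${dir}/ml2_conf.ini" <<EOF
[ml2]
tenant_network_types = vxlan,vlan,flat

[ml2_type_vlan]
network_vlan_ranges = ${ranges}
EOF
}
```

The override is then pushed with `kolla-ansible -i /root/ansible/multinode reconfigure --tags neutron`.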

[root@procontroller03 ~]# docker exec -it -u root neutron_openvswitch_agent /bin/bash

(neutron-openvswitch-agent)[root@procontroller03 /]# ovs-vsctl show

081a24d5-6033-4d2c-ace6-e47e591a8b6b
    Manager "ptcp:6640:127.0.0.1"
        is_connected: true
    Bridge br-ex
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port phy-br-ex
            Interface phy-br-ex
                type: patch
                options: {peer=int-br-ex}
        Port br-ex
            Interface br-ex
                type: internal
        Port bond1
            Interface bond1
    Bridge br-tun
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port br-tun
            Interface br-tun
                type: internal
        Port patch-int
            Interface patch-int
                type: patch
                options: {peer=patch-tun}
    Bridge br-int
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port patch-tun
            Interface patch-tun
                type: patch
                options: {peer=patch-int}
        Port br-int
            Interface br-int
                type: internal
        Port int-br-ex
            Interface int-br-ex
                type: patch
                options: {peer=phy-br-ex}

(neutron-openvswitch-agent)[root@procontroller03 /]#
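On a live node, `ovs-vsctl list-ports br-ex` answers directly whether bond1 is attached to br-ex. When only saved `ovs-vsctl show` output is at hand, a small awk filter can do the same check. This is a sketch; `bridge_has_port` is an illustrative name, and double quotes around names are stripped in case an OVS version prints them quoted.

```shell
#!/bin/sh
# Sketch: read "ovs-vsctl show" output on stdin; succeed if bridge $1 has port $2.
bridge_has_port() {
  awk -v br="$1" -v port="$2" '
    # Bridge and Port lines are matched by their first field, so indentation does not matter.
    $1 == "Bridge" { cur = $2; gsub(/"/, "", cur); next }
    $1 == "Port" && cur == br {
      p = $2
      gsub(/"/, "", p)
      if (p == port) found = 1
    }
    END { exit(found ? 0 : 1) }
  '
}
```

For example: `docker exec neutron_openvswitch_agent ovs-vsctl show | bridge_has_port br-ex bond1 && echo ok`.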

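3.3.6.2.2 Compute nodes

On the compute nodes, the main change is to the Open vSwitch configuration inside the neutron_openvswitch_agent container: add a bridge and attach it to the external physical NIC (bond1).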

[root@procompute01 neutron-openvswitch-agent]# docker exec -it -u root neutron_openvswitch_agent /bin/bash

(neutron-openvswitch-agent)[root@procompute01 /]# ovs-vsctl add-br br-ex

(neutron-openvswitch-agent)[root@procompute01 /]# ovs-vsctl add-port br-ex bond1

(neutron-openvswitch-agent)[root@procompute01 /]# exit

exit
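Plain `add-br` and `add-port` fail if the bridge or port already exists, for example when the step is repeated on a node; `ovs-vsctl --may-exist` makes both commands idempotent. Below is a sketch that runs them through the agent container used above. `ensure_ext_bridge`, `OVS_CONTAINER` and `DRY_RUN` are illustrative names, and bond1 is assumed to be the external NIC, as in this guide.

```shell
#!/bin/sh
# Sketch: create br-ex and attach the external NIC; safe to re-run.
# Assumptions: the agent container is neutron_openvswitch_agent and the NIC is bond1.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

ensure_ext_bridge() {
  local bridge="${1:-br-ex}" nic="${2:-bond1}" c="${OVS_CONTAINER:-neutron_openvswitch_agent}"
  # --may-exist turns "already exists" into a no-op instead of an error.
  run docker exec -u root "$c" ovs-vsctl --may-exist add-br "$bridge" || return 1
  run docker exec -u root "$c" ovs-vsctl --may-exist add-port "$bridge" "$nic"
}
```

kolla-ansible also has an `enable_neutron_provider_networks` option in globals.yml that is intended to give compute nodes the external bridge and bridge mapping automatically. Check the Ussuri kolla-ansible documentation before relying on it.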

Add bridge_mappings = physnet1:br-ex to the [ovs] section of openvswitch_agent.ini:

[root@procompute01 neutron-openvswitch-agent]# cp openvswitch_agent.ini openvswitch_agent.ini.bak.orig

[root@procompute01 neutron-openvswitch-agent]# vim openvswitch_agent.ini

[root@procompute01 neutron-openvswitch-agent]#

[agent]

tunnel_types = vxlan

l2_population = true

arp_responder = true

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

bridge_mappings = physnet1:br-ex

datapath_type = system

ovsdb_connection = tcp:127.0.0.1:6640

local_ip = 10.3.140.21
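The bridge_mappings line can also be added without an editor. Below is a sketch of a small INI setter (`ini_set` is an illustrative name). It replaces the key if it already exists in the section, appends it at the end of the section otherwise, and creates the section if it is missing. It prints the result instead of editing in place. As with ml2_conf.ini, kolla-ansible rewrites /etc/kolla/neutron-openvswitch-agent/openvswitch_agent.ini on redeploy, so /etc/kolla/config/neutron/openvswitch_agent.ini is the more durable place for the same line.

```shell
#!/bin/sh
# Sketch: print FILE with "KEY = VALUE" set inside [SECTION].
# An existing KEY in that section is replaced; otherwise the line is appended at
# the end of the section, and the section is created if it does not exist.
ini_set() {
  awk -v sec="[$2]" -v key="$3" -v val="$4" '
    function emit() { print key " = " val; done = 1 }
    /^[ \t]*\[/ {
      # Leaving the target section without having seen the key: append it here.
      if (insec && !done) emit()
      insec = ($0 == sec)
      if (insec) seen = 1
      print
      next
    }
    insec {
      k = $0
      sub(/[ \t]*=.*/, "", k)
      sub(/^[ \t]+/, "", k)
      if (k == key) {
        if (!done) emit()
        next
      }
    }
    { print }
    END {
      if (insec && !done) emit()
      if (!seen) { print ""; print sec; emit() }
    }
  ' "$1"
}
```

For example: `ini_set openvswitch_agent.ini ovs bridge_mappings physnet1:br-ex > openvswitch_agent.ini.new && mv openvswitch_agent.ini.new openvswitch_agent.ini`.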

With the bridge and port added on the compute node and br-ex bound to bond1, restart the agent and check the result:

[root@procompute01 neutron-openvswitch-agent]# docker restart neutron_openvswitch_agent

neutron_openvswitch_agent

[root@procompute01 neutron-openvswitch-agent]# docker exec -it -u root neutron_openvswitch_agent /bin/bash

(neutron-openvswitch-agent)[root@procompute01 /]# ovs-vsctl show

d90e91de-9445-47d3-b3c8-04937855be2a
    Manager "ptcp:6640:127.0.0.1"
        is_connected: true
    Bridge br-ex
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port phy-br-ex
            Interface phy-br-ex
                type: patch
                options: {peer=int-br-ex}
        Port br-ex
            Interface br-ex
                type: internal
        Port bond1
            Interface bond1
    Bridge br-tun
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port br-tun
            Interface br-tun
                type: internal
        Port patch-int
            Interface patch-int
                type: patch
                options: {peer=patch-tun}
    Bridge br-int
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        datapath_type: system
        Port br-int
            Interface br-int
                type: internal
        Port patch-tun
            Interface patch-tun
                type: patch
                options: {peer=patch-int}
        Port int-br-ex
            Interface int-br-ex
                type: patch
                options: {peer=phy-br-ex}

(neutron-openvswitch-agent)[root@procompute01 /]#

Restart the network agent on the compute node:

[root@procompute01 ~]# docker restart neutron_openvswitch_agent

neutron_openvswitch_agent

[root@procompute01 ~]#

3.3.6.3 Run init-runonce

In the stock init-runonce script, only the image location and the network parameters need to change:

#IMAGE_PATH=/opt/cache/files/

IMAGE_PATH=/usr/local/share/kolla-ansible/

IMAGE_URL=https://github.com/cirros-dev/cirros/releases/download/0.5.1/

#IMAGE=cirros-0.5.1-${ARCH}-disk.img

IMAGE=cirros-0.3.4-x86_64-disk.img

IMAGE_NAME=cirros

IMAGE_TYPE=linux

# This EXT_NET_CIDR is your public network,that you want to connect to the internet via.

ENABLE_EXT_NET=${ENABLE_EXT_NET:-1}

EXT_NET_CIDR=${EXT_NET_CIDR:-'10.3.140.0/24'}

EXT_NET_RANGE=${EXT_NET_RANGE:-'start=10.3.140.150,end=10.3.140.199'}

EXT_NET_GATEWAY=${EXT_NET_GATEWAY:-'10.3.140.1'}

$KOLLA_OPENSTACK_COMMAND network create demo-net

$KOLLA_OPENSTACK_COMMAND subnet create --subnet-range 172.31.164.0/24 --network demo-net \
    --gateway 172.31.164.1 --dns-nameserver 8.8.8.8 demo-subnet

Run it:

[root@procontroller01 kolla-ansible]# source /etc/kolla/admin-openrc.sh

[root@procontroller01 kolla-ansible]# source /usr/local/share/kolla-ansible/init-runonce

Checking for locally available cirros image.

Using cached cirros image from the nodepool node.

Creating glance image.

3.3.6.4 Create networks manually

There are two ways: from the command line or from the dashboard.

3.3.6.4.1 Create a network from the command line

Create VLAN 142:

[root@procontroller01 kolla-ansible]# openstack network create provider_vlan_net142 --project admin --provider-network-type vlan --external --provider-physical-network physnet1 --provider-segment 142 --share

[root@procontroller01 kolla-ansible]# openstack subnet create vlan_subnet142 --project admin --network provider_vlan_net142 --subnet-range 10.3.142.0/24 --allocation-pool start=10.3.142.10,end=10.3.142.250

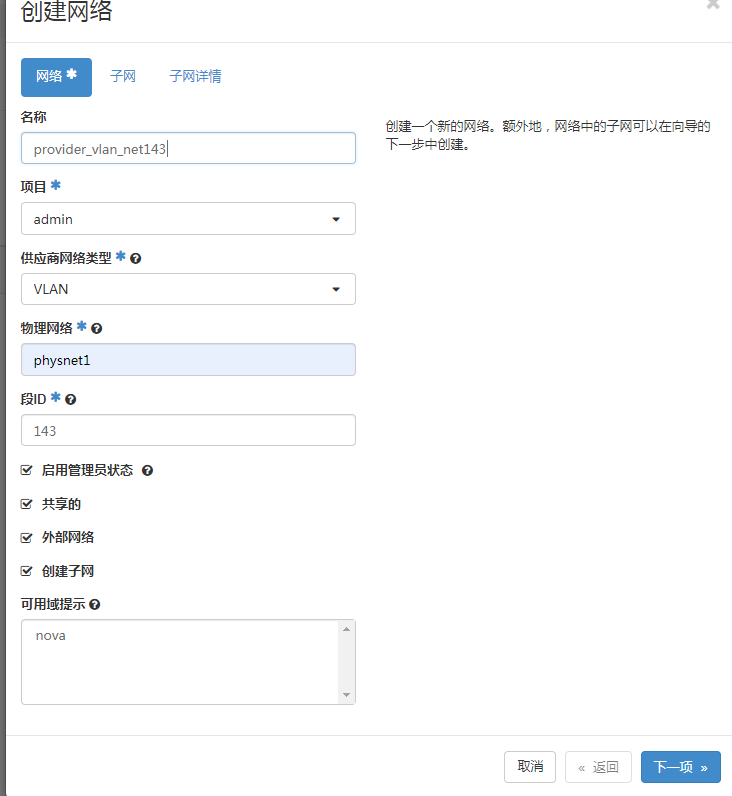
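Additional VLANs can follow the same naming as VLAN 142. Below is a sketch that issues both commands for a given VLAN ID. It assumes this site's scheme maps VLAN N to 10.3.N.0/24 with the pool .10 to .250, which is inferred from the VLAN 142 example, so it only works for IDs up to 255. `create_vlan_net` and `DRY_RUN` are illustrative names.

```shell
#!/bin/sh
# Sketch: create a shared external VLAN provider network plus subnet for one VLAN ID.
# Assumption: VLAN N uses 10.3.N.0/24 with pool .10-.250 (inferred from VLAN 142).
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

create_vlan_net() {
  local vid="$1"
  # Digits only, no leading zero, and small enough to be an IPv4 octet.
  case "$vid" in ''|0*|*[!0-9]*) return 1 ;; esac
  [ "$vid" -le 255 ] || return 1
  run openstack network create "provider_vlan_net${vid}" --project admin \
    --provider-network-type vlan --external \
    --provider-physical-network physnet1 --provider-segment "$vid" --share || return 1
  run openstack subnet create "vlan_subnet${vid}" --project admin \
    --network "provider_vlan_net${vid}" --subnet-range "10.3.${vid}.0/24" \
    --allocation-pool "start=10.3.${vid}.10,end=10.3.${vid}.250"
}
```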

3.3.6.4.2 Create a network in the dashboard

Log in to the dashboard and go to Admin > Network > Create Network.

[root@procontroller01 kolla-ansible]# openstack network list

+--------------------------------------+----------------------+--------------------------------------+
| ID                                   | Name                 | Subnets                              |
+--------------------------------------+----------------------+--------------------------------------+
| 7536754e-f9b1-4939-8900-3bbed9d706ad | provider_vlan_net143 | a1e3da64-b633-42c0-b455-e44e0656571c |
| a14f90bc-2094-41a1-bf23-1daa4618ad59 | provider_vlan_net142 | a524df0d-5ccb-4817-a5e0-3b45b11eef8b |
+--------------------------------------+----------------------+--------------------------------------+

[root@procontroller01 kolla-ansible]#

3.3.6.5 Testing and verification

posted on 2021-04-29 16:43 weiwei2021