Deploying OpenStack Ussuri with kolla-ansible on a three-node bare-metal cluster in an internal network, and integrating it with a Ceph cluster

0 Revision history

| No. | Change | Date |
|---|---|---|
| 1 | Changed the domain kxxxxx.com to chouniu.fun | 20210120 |

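1 Abstract

This article describes how to deploy OpenStack Ussuri on physical machines with kolla-ansible and how to integrate it with a Ceph cluster.

For the Ceph cluster deployment, see: "Ceph Nautilus three-node bare-metal cluster deployment" and "Ceph Nautilus three-VM cluster deployment".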

2 Environment

(1) Software environment

2.1.1 Operating system version

[root@kolla ~]# cat /etc/centos-release

CentOS Linux release 8.1.1911 (Core)

[root@kolla ~]# uname -a

Linux kolla 4.18.0-147.el8.x86_64 #1 SMP Wed Dec 4 21:51:45 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

[root@kolla ~]#

2.1.2 Docker version

2.1.3 Ansible version

2.1.4 kolla-ansible version

2.1.5 OpenStack version

2.1.6 Ceph version
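The version sub-sections above carry no output in the original. As a minimal sketch of how those values could be collected on the deployment host, the helper below prints the first line of each tool's version output. The function names `show_version` and `collect_versions` are hypothetical, and looking up kolla-ansible through `pip3` assumes it was installed with pip.

```shell
#!/usr/bin/env sh
# Print "<label>: <first line of the command's output>",
# or "<label>: not installed" when the command is not on PATH.
# Usage: show_version <label> <command> [args...]
show_version() {
    label="$1"
    shift
    if command -v "$1" >/dev/null 2>&1; then
        # Some tools (for example ansible) print several lines; keep the first.
        printf '%s: %s\n' "$label" "$("$@" 2>&1 | head -n 1)"
    else
        printf '%s: not installed\n' "$label"
    fi
}

# Collect the versions that sections 2.1.2 to 2.1.6 are meant to record.
collect_versions() {
    show_version docker docker --version
    show_version ansible ansible --version
    # Assumption: kolla-ansible was installed with pip3.
    if command -v pip3 >/dev/null 2>&1; then
        pip3 show kolla-ansible 2>/dev/null | grep '^Version:' | sed 's/^Version:/kolla-ansible:/'
    fi
    show_version ceph ceph --version
}
```

Running `collect_versions` on each node gives one line per tool. The OpenStack release itself is whatever `openstack_release` is set to in globals.yml.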

(2) Hardware environment

2.2.1 Servers

| Brand | Model | NIC | IP | Configuration |
|---|---|---|---|---|
| Huawei | RH1288V3 | eth0 | 10.3.176.2 | Intel(R) Xeon(R) CPU E5-2603 v4 @ 1.70GHz; memory: 8G; disk: 2TB SATA × 1 |
| Sugon | Sugon I620-G30 | eth0 | 10.3.176.35 | Intel(R) Xeon(R) Gold 5118 CPU @ 2.30GHz × 2; memory: 320G (32G × 10); disks: 12 disks of unspecified individual size, 223.1G + 9.6T in total |
| Inspur | Inspur NF5270M3 | eth0 | 10.3.176.37 | Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz × 2; memory: 320G (16G × 20); disks: 3.638T SAS × 8 |

| Brand | Physical machine configuration |
|---|---|
| Huashan Technologies R4900 G2 | Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz × 2; memory: 128G; disks: 5 disks of unspecified individual size, 4.4T in total |
| Sugon I620-G20 | Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz × 2; memory: 224G; disks: 7 disks of unspecified individual size, 4.4T + 558.4G in total |
| Inspur SA5212M5 | Intel Silver 4210 2.2G × 2; memory: 256G in total (32G × 8); disks: 240G SSD × 2, 8T SATA 7.2K × 6; NIC: X710 10G × 1 |

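3 Deployment plan

This deployment runs OpenStack on three physical machines. Each machine carries the control, compute, and network roles, and storage is provided by the Ceph cluster.

10.3.176.8 is the virtual IP (VIP) of the deployed cluster.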

| Hostname | NIC | IP | VIP | Roles |
|---|---|---|---|---|
| ussuritest001.cloud.chouniu.fun | eth0 | 10.3.176.2 | 10.3.176.8 | kolla-ansible deployment server, control, compute, network, storage services |
| ussuritest002.cloud.chouniu.fun | eth0 | 10.3.176.35 | 10.3.176.8 | control, compute, network, storage services |
| ussuritest003.cloud.chouniu.fun | eth0 | 10.3.176.37 | 10.3.176.8 | control, compute, network, storage services |

| Hostname | IP | Disks | Roles |
|---|---|---|---|
| cephtest001.ceph.chouniu.fun | 10.3.176.10 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd | ceph-deploy, monitor, mgr, mds, osd |
| cephtest002.ceph.chouniu.fun | 10.3.176.16 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf | monitor, mgr, mds, osd |
| cephtest003.ceph.chouniu.fun | 10.3.176.44 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg | monitor, mgr, mds, osd |
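The two tables above translate directly into name-resolution entries. The sketch below prints them in `/etc/hosts` format; it assumes the hosts resolve each other through `/etc/hosts` rather than an internal DNS server, which the original does not state, and the function name `hosts_entries` is hypothetical.

```shell
#!/usr/bin/env sh
# Print /etc/hosts lines for the three OpenStack nodes and the three Ceph nodes.
# The addresses and host names are copied from the planning tables.
hosts_entries() {
    cat <<'EOF'
10.3.176.2   ussuritest001.cloud.chouniu.fun
10.3.176.35  ussuritest002.cloud.chouniu.fun
10.3.176.37  ussuritest003.cloud.chouniu.fun
10.3.176.10  cephtest001.ceph.chouniu.fun
10.3.176.16  cephtest002.ceph.chouniu.fun
10.3.176.44  cephtest003.ceph.chouniu.fun
EOF
}
```

On each node, `hosts_entries >> /etc/hosts` would append the entries. The VIP 10.3.176.8 is deliberately left out because it floats between the nodes and has no host name in the tables.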

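4 Deployment preparation

Our physical machines sit on an internal network. We first prepare a VM on the external network (with Internet access), download all OpenStack Ussuri images and the software that the kolla-ansible installation needs, and then transfer everything to the internal network by hand.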
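The original does not say how the images were moved to the internal network. One common approach, shown here only as a sketch under that assumption, is to export the pulled images with `docker save` on the external VM and import them with `docker load` on the internal hosts. The function names and the one-archive-per-image layout are hypothetical.

```shell
#!/usr/bin/env sh
# Turn an image reference into a safe archive file name, for example
# kolla/centos-source-nova-api:ussuri -> kolla_centos-source-nova-api_ussuri.tar
archive_name() {
    printf '%s.tar\n' "$(printf '%s' "$1" | tr '/:' '__')"
}

# On the Internet-facing VM: save every local image tagged "ussuri" into out_dir.
save_images() {
    out_dir="$1"
    mkdir -p "$out_dir"
    docker images --format '{{.Repository}}:{{.Tag}}' | grep ':ussuri$' |
    while read -r image; do
        docker save -o "$out_dir/$(archive_name "$image")" "$image"
    done
}

# On an internal host: load every archive copied over from the external VM.
load_images() {
    for archive in "$1"/*.tar; do
        [ -e "$archive" ] || continue   # directory holds no archives
        docker load -i "$archive"
    done
}
```

For example, `save_images /data/ussuri-images` on the external VM, a manual copy of that directory, and `load_images /data/ussuri-images` on the internal side. The directory path is a placeholder.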

(1) Download all OpenStack Ussuri images

4.1.1 Edit globals.yml

Enable as many services as possible so that as many images as possible are downloaded.

---

# You can use this file to override _any_ variable throughout Kolla.

# Additional options can be found in the

# 'kolla-ansible/ansible/group_vars/all.yml' file. Default value of all the

# commented parameters are shown here, To override the default value uncomment

# the parameter and change its value.

###############

# Kolla options

###############

# Valid options are [ COPY_ONCE, COPY_ALWAYS ]

#config_strategy: "COPY_ALWAYS"

# Valid options are ['centos', 'debian', 'rhel', 'ubuntu']

kolla_base_distro: "centos"

# Valid options are [ binary, source ]

kolla_install_type: "source"

# Do not override this unless you know what you are doing.

openstack_release: "ussuri"

# Docker image tag used by default.

#openstack_tag: "{{ openstack_release ~ openstack_tag_suffix }}"

# Suffix applied to openstack_release to generate openstack_tag.

#openstack_tag_suffix: ""

# Location of configuration overrides

#node_custom_config: "/etc/kolla/config"

# This should be a VIP, an unused IP on your network that will float between

# the hosts running keepalived for high-availability. If you want to run an

# All-In-One without haproxy and keepalived, you can set enable_haproxy to no

# in "OpenStack options" section, and set this value to the IP of your

# 'network_interface' as set in the Networking section below.

kolla_internal_vip_address: "172.31.185.160"

# This is the DNS name that maps to the kolla_internal_vip_address VIP. By

# default it is the same as kolla_internal_vip_address.

#kolla_internal_fqdn: "{{ kolla_internal_vip_address }}"

# This should be a VIP, an unused IP on your network that will float between

# the hosts running keepalived for high-availability. It defaults to the

# kolla_internal_vip_address, allowing internal and external communication to

# share the same address. Specify a kolla_external_vip_address to separate

# internal and external requests between two VIPs.

#kolla_external_vip_address: "{{ kolla_internal_vip_address }}"

# The Public address used to communicate with OpenStack as set in the public_url

# for the endpoints that will be created. This DNS name should map to

# kolla_external_vip_address.

#kolla_external_fqdn: "{{ kolla_external_vip_address }}"

################

# Docker options

################

# Custom docker registry settings:

#docker_registry:

#docker_registry_insecure: "{{ 'yes' if docker_registry else 'no' }}"

#docker_registry_username:

# docker_registry_password is set in the passwords.yml file.

# Namespace of images:

#docker_namespace: "kolla"

# Docker client timeout in seconds.

#docker_client_timeout: 120

#docker_configure_for_zun: "no"

#containerd_configure_for_zun: "no"

#containerd_grpc_gid: 42463

###################

# Messaging options

###################

# Below is an example of an separate backend that provides brokerless

# messaging for oslo.messaging RPC communications

#om_rpc_transport: "amqp"

#om_rpc_user: "{{ qdrouterd_user }}"

#om_rpc_password: "{{ qdrouterd_password }}"

#om_rpc_port: "{{ qdrouterd_port }}"

#om_rpc_group: "qdrouterd"

##############################

# Neutron - Networking Options

##############################

# This interface is what all your api services will be bound to by default.

# Additionally, all vxlan/tunnel and storage network traffic will go over this

# interface by default. This interface must contain an IP address.

# It is possible for hosts to have non-matching names of interfaces - these can

# be set in an inventory file per host or per group or stored separately, see

# http://docs.ansible.com/ansible/intro_inventory.html

# Yet another way to workaround the naming problem is to create a bond for the

# interface on all hosts and give the bond name here. Similar strategy can be

# followed for other types of interfaces.

network_interface: "ens3"

# These can be adjusted for even more customization. The default is the same as

# the 'network_interface'. These interfaces must contain an IP address.

#kolla_external_vip_interface: "{{ network_interface }}"

#api_interface: "{{ network_interface }}"

#storage_interface: "{{ network_interface }}"

#cluster_interface: "{{ network_interface }}"

#swift_storage_interface: "{{ storage_interface }}"

#swift_replication_interface: "{{ swift_storage_interface }}"

#tunnel_interface: "{{ network_interface }}"

#dns_interface: "{{ network_interface }}"

#octavia_network_interface: "{{ api_interface }}"

# Configure the address family (AF) per network.

# Valid options are [ ipv4, ipv6 ]

#network_address_family: "ipv4"

#api_address_family: "{{ network_address_family }}"

#storage_address_family: "{{ network_address_family }}"

#cluster_address_family: "{{ network_address_family }}"

#swift_storage_address_family: "{{ storage_address_family }}"

#swift_replication_address_family: "{{ swift_storage_address_family }}"

#migration_address_family: "{{ api_address_family }}"

#tunnel_address_family: "{{ network_address_family }}"

#octavia_network_address_family: "{{ api_address_family }}"

#bifrost_network_address_family: "{{ network_address_family }}"

#dns_address_family: "{{ network_address_family }}"

# This is the raw interface given to neutron as its external network port. Even

# though an IP address can exist on this interface, it will be unusable in most

# configurations. It is recommended this interface not be configured with any IP

# addresses for that reason.

neutron_external_interface: "eth0"

# Valid options are [ openvswitch, linuxbridge, vmware_nsxv, vmware_nsxv3, vmware_dvs ]

# if vmware_nsxv3 is selected, enable_openvswitch MUST be set to "no" (default is yes)

neutron_plugin_agent: "openvswitch"

# Valid options are [ internal, infoblox ]

#neutron_ipam_driver: "internal"

# Configure Neutron upgrade option, currently Kolla support

# two upgrade ways for Neutron: legacy_upgrade and rolling_upgrade

# The variable "neutron_enable_rolling_upgrade: yes" is meaning rolling_upgrade

# were enabled and opposite

# Neutron rolling upgrade were enable by default

#neutron_enable_rolling_upgrade: "yes"

####################

# keepalived options

####################

# Arbitrary unique number from 0..255

# This should be changed from the default in the event of a multi-region deployment

# where the VIPs of different regions reside on a common subnet.

#keepalived_virtual_router_id: "51"

###################

# Dimension options

###################

# This is to provide an extra option to deploy containers with Resource constraints.

# We call it dimensions here.

# The dimensions for each container are defined by a mapping, where each dimension value should be a

# string.

# Reference_Docs

# https://docs.docker.com/config/containers/resource_constraints/

# eg:

# <container_name>_dimensions:

# blkio_weight:

# cpu_period:

# cpu_quota:

# cpu_shares:

# cpuset_cpus:

# cpuset_mems:

# mem_limit:

# mem_reservation:

# memswap_limit:

# kernel_memory:

# ulimits:

#############

# TLS options

#############

# To provide encryption and authentication on the kolla_external_vip_interface,

# TLS can be enabled. When TLS is enabled, certificates must be provided to

# allow clients to perform authentication.

#kolla_enable_tls_internal: "no"

#kolla_enable_tls_external: "{{ kolla_enable_tls_internal if kolla_same_external_internal_vip | bool else 'no' }}"

#kolla_certificates_dir: "{{ node_config }}/certificates"

#kolla_external_fqdn_cert: "{{ kolla_certificates_dir }}/haproxy.pem"

#kolla_internal_fqdn_cert: "{{ kolla_certificates_dir }}/haproxy-internal.pem"

#kolla_admin_openrc_cacert: ""

#kolla_copy_ca_into_containers: "no"

#kolla_verify_tls_backend: "yes"

#haproxy_backend_cacert: "{{ 'ca-certificates.crt' if kolla_base_distro in ['debian', 'ubuntu'] else 'ca-bundle.trust.crt' }}"

#haproxy_backend_cacert_dir: "/etc/ssl/certs"

#kolla_enable_tls_backend: "no"

#kolla_tls_backend_cert: "{{ kolla_certificates_dir }}/backend-cert.pem"

#kolla_tls_backend_key: "{{ kolla_certificates_dir }}/backend-key.pem"

################

# Region options

################

# Use this option to change the name of this region.

#openstack_region_name: "RegionOne"

# Use this option to define a list of region names - only needs to be configured

# in a multi-region deployment, and then only in the *first* region.

#multiple_regions_names: ["{{ openstack_region_name }}"]

###################

# OpenStack options

###################

# Use these options to set the various log levels across all OpenStack projects

# Valid options are [ True, False ]

#openstack_logging_debug: "False"

# Enable core OpenStack services. This includes:

# glance, keystone, neutron, nova, heat, and horizon.

enable_openstack_core: "yes"

# These roles are required for Kolla to be operation, however a savvy deployer

# could disable some of these required roles and run their own services.

#enable_glance: "{{ enable_openstack_core | bool }}"

enable_haproxy: "yes"

#enable_keepalived: "{{ enable_haproxy | bool }}"

#enable_keystone: "{{ enable_openstack_core | bool }}"

#enable_mariadb: "yes"

#enable_memcached: "yes"

#enable_neutron: "{{ enable_openstack_core | bool }}"

#enable_nova: "{{ enable_openstack_core | bool }}"

#enable_rabbitmq: "{{ 'yes' if om_rpc_transport == 'rabbit' or om_notify_transport == 'rabbit' else 'no' }}"

#enable_outward_rabbitmq: "{{ enable_murano | bool }}"

# OpenStack services can be enabled or disabled with these options

enable_aodh: "yes"

enable_barbican: "yes"

enable_blazar: "yes"

enable_ceilometer: "yes"

enable_ceilometer_ipmi: "yes"

enable_cells: "no"

enable_central_logging: "yes"

enable_chrony: "yes"

enable_cinder: "yes"

enable_cinder_backup: "yes"

enable_cinder_backend_hnas_nfs: "no"

enable_cinder_backend_iscsi: "{{ enable_cinder_backend_lvm | bool or enable_cinder_backend_zfssa_iscsi | bool }}"

enable_cinder_backend_lvm: "yes"

enable_cinder_backend_nfs: "no"

enable_cinder_backend_zfssa_iscsi: "no"

enable_cinder_backend_quobyte: "no"

enable_cloudkitty: "yes"

enable_collectd: "yes"

enable_congress: "yes"

enable_cyborg: "yes"

enable_designate: "yes"

enable_destroy_images: "yes"

enable_elasticsearch: "{{ 'yes' if enable_central_logging | bool or enable_osprofiler | bool or enable_skydive | bool or enable_monasca | bool else 'no' }}"

enable_elasticsearch_curator: "yes"

enable_etcd: "yes"

enable_fluentd: "yes"

enable_freezer: "yes"

enable_gnocchi: "yes"

enable_gnocchi_statsd: "yes"

enable_grafana: "yes"

enable_heat: "{{ enable_openstack_core | bool }}"

enable_horizon: "{{ enable_openstack_core | bool }}"

enable_horizon_blazar: "{{ enable_blazar | bool }}"

enable_horizon_cloudkitty: "{{ enable_cloudkitty | bool }}"

enable_horizon_congress: "{{ enable_congress | bool }}"

enable_horizon_designate: "{{ enable_designate | bool }}"

enable_horizon_fwaas: "{{ enable_neutron_fwaas | bool }}"

enable_horizon_freezer: "{{ enable_freezer | bool }}"

enable_horizon_heat: "{{ enable_heat | bool }}"

enable_horizon_ironic: "{{ enable_ironic | bool }}"

enable_horizon_karbor: "{{ enable_karbor | bool }}"

enable_horizon_magnum: "{{ enable_magnum | bool }}"

enable_horizon_manila: "{{ enable_manila | bool }}"

enable_horizon_masakari: "{{ enable_masakari | bool }}"

enable_horizon_mistral: "{{ enable_mistral | bool }}"

enable_horizon_monasca: "{{ enable_monasca | bool }}"

enable_horizon_murano: "{{ enable_murano | bool }}"

enable_horizon_neutron_vpnaas: "{{ enable_neutron_vpnaas | bool }}"

enable_horizon_octavia: "{{ enable_octavia | bool }}"

enable_horizon_qinling: "{{ enable_qinling | bool }}"

enable_horizon_sahara: "{{ enable_sahara | bool }}"

enable_horizon_searchlight: "{{ enable_searchlight | bool }}"

enable_horizon_senlin: "{{ enable_senlin | bool }}"

enable_horizon_solum: "{{ enable_solum | bool }}"

enable_horizon_tacker: "{{ enable_tacker | bool }}"

enable_horizon_trove: "{{ enable_trove | bool }}"

enable_horizon_vitrage: "{{ enable_vitrage | bool }}"

enable_horizon_watcher: "{{ enable_watcher | bool }}"

enable_horizon_zun: "{{ enable_zun | bool }}"

enable_hyperv: "yes"

enable_influxdb: "{{ enable_monasca | bool or (enable_cloudkitty | bool and cloudkitty_storage_backend == 'influxdb') }}"

enable_ironic: "yes"

enable_ironic_ipxe: "yes"

#enable_ironic_neutron_agent: "{{ enable_neutron | bool and enable_ironic | bool }}"

enable_ironic_pxe_uefi: "yes"

enable_iscsid: "{{ (enable_cinder | bool and enable_cinder_backend_iscsi | bool) or enable_ironic | bool }}"

enable_karbor: "yes"

enable_kafka: "{{ enable_monasca | bool }}"

enable_kibana: "{{ 'yes' if enable_central_logging | bool or enable_monasca | bool else 'no' }}"

enable_kuryr: "yes"

enable_magnum: "yes"

enable_manila: "yes"

enable_manila_backend_generic: "yes"

enable_manila_backend_hnas: "yes"

enable_manila_backend_cephfs_native: "yes"

enable_manila_backend_cephfs_nfs: "yes"

enable_mariabackup: "yes"

enable_masakari: "yes"

enable_mistral: "yes"

enable_monasca: "yes"

enable_mongodb: "yes"

enable_multipathd: "yes"

enable_murano: "yes"

enable_neutron_vpnaas: "yes"

enable_neutron_sriov: "yes"

enable_neutron_dvr: "yes"

enable_neutron_fwaas: "yes"

enable_neutron_qos: "yes"

enable_neutron_agent_ha: "yes"

enable_neutron_bgp_dragent: "yes"

enable_neutron_provider_networks: "yes"

enable_neutron_segments: "yes"

enable_neutron_sfc: "yes"

enable_neutron_metering: "yes"

enable_neutron_infoblox_ipam_agent: "yes"

enable_neutron_port_forwarding: "yes"

enable_nova_serialconsole_proxy: "yes"

enable_nova_ssh: "yes"

enable_octavia: "yes"

enable_openvswitch: "{{ enable_neutron | bool and neutron_plugin_agent != 'linuxbridge' }}"

enable_ovn: "{{ enable_neutron | bool and neutron_plugin_agent == 'ovn' }}"

enable_ovs_dpdk: "yes"

enable_osprofiler: "yes"

enable_panko: "yes"

enable_placement: "{{ enable_nova | bool or enable_zun | bool }}"

enable_prometheus: "yes"

enable_qdrouterd: "{{ 'yes' if om_rpc_transport == 'amqp' else 'no' }}"

enable_qinling: "yes"

enable_rally: "yes"

enable_redis: "yes"

enable_sahara: "yes"

enable_searchlight: "yes"

enable_senlin: "yes"

enable_skydive: "yes"

enable_solum: "yes"

enable_storm: "{{ enable_monasca | bool }}"

enable_swift: "yes"

enable_swift_s3api: "yes"

enable_tacker: "yes"

enable_telegraf: "yes"

enable_tempest: "yes"

enable_trove: "yes"

enable_trove_singletenant: "yes"

enable_vitrage: "yes"

enable_vmtp: "yes"

enable_watcher: "yes"

enable_zookeeper: "{{ enable_kafka | bool or enable_storm | bool }}"

enable_zun: "yes"

##################

# RabbitMQ options

##################

# Options passed to RabbitMQ server startup script via the

# RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS environment var.

# See Kolla Ansible docs RabbitMQ section for details.

# These are appended to args already provided by Kolla Ansible

# to configure IPv6 in RabbitMQ server.

#rabbitmq_server_additional_erl_args: ""

#################

# MariaDB options

#################

# List of additional WSREP options

#mariadb_wsrep_extra_provider_options: []

#######################

# External Ceph options

#######################

# External Ceph - cephx auth enabled (this is the standard nowadays, defaults to yes)

#external_ceph_cephx_enabled: "yes"

# Glance

ceph_glance_keyring: "ceph.client.glance.keyring"

ceph_glance_user: "glance"

ceph_glance_pool_name: "images"

# Cinder

ceph_cinder_keyring: "ceph.client.cinder.keyring"

ceph_cinder_user: "cinder"

ceph_cinder_pool_name: "volumes"

ceph_cinder_backup_keyring: "ceph.client.cinder-backup.keyring"

ceph_cinder_backup_user: "cinder-backup"

ceph_cinder_backup_pool_name: "backups"

# Nova

ceph_nova_keyring: "{{ ceph_cinder_keyring }}"

ceph_nova_user: "cinder"

ceph_nova_pool_name: "vms"

# Gnocchi

#ceph_gnocchi_keyring: "ceph.client.gnocchi.keyring"

#ceph_gnocchi_user: "gnocchi"

#ceph_gnocchi_pool_name: "gnocchi"

# Manila

#ceph_manila_keyring: "ceph.client.manila.keyring"

#ceph_manila_user: "manila"

#############################

# Keystone - Identity Options

#############################

# Valid options are [ fernet ]

#keystone_token_provider: 'fernet'

#keystone_admin_user: "admin"

#keystone_admin_project: "admin"

# Interval to rotate fernet keys by (in seconds). Must be an interval of

# 60(1 min), 120(2 min), 180(3 min), 240(4 min), 300(5 min), 360(6 min),

# 600(10 min), 720(12 min), 900(15 min), 1200(20 min), 1800(30 min),

# 3600(1 hour), 7200(2 hour), 10800(3 hour), 14400(4 hour), 21600(6 hour),

# 28800(8 hour), 43200(12 hour), 86400(1 day), 604800(1 week).

#fernet_token_expiry: 86400

########################

# Glance - Image Options

########################

# Configure image backend.

glance_backend_ceph: "yes"

#glance_backend_file: "yes"

#glance_backend_swift: "no"

#glance_backend_vmware: "no"

#enable_glance_image_cache: "no"

# Configure glance upgrade option.

# Due to this feature being experimental in glance,

# the default value is "no".

#glance_enable_rolling_upgrade: "no"

####################

# Osprofiler options

####################

# valid values: ["elasticsearch", "redis"]

#osprofiler_backend: "elasticsearch"

##################

# Barbican options

##################

# Valid options are [ simple_crypto, p11_crypto ]

#barbican_crypto_plugin: "simple_crypto"

#barbican_library_path: "/usr/lib/libCryptoki2_64.so"

################

## Panko options

################

# Valid options are [ mongodb, mysql ]

#panko_database_type: "mysql"

#################

# Gnocchi options

#################

# Valid options are [ file, ceph, swift ]

#gnocchi_backend_storage: "{% if enable_swift | bool %}swift{% else %}file{% endif %}"

#gnocchi_backend_storage: "ceph"

# Valid options are [redis, '']

#gnocchi_incoming_storage: "{{ 'redis' if enable_redis | bool else '' }}"

################################

# Cinder - Block Storage Options

################################

# Enable / disable Cinder backends

cinder_backend_ceph: "yes"

#cinder_backend_vmwarevc_vmdk: "no"

#cinder_volume_group: "cinder-volumes"

# Valid options are [ '', redis, etcd ]

#cinder_coordination_backend: "{{ 'redis' if enable_redis|bool else 'etcd' if enable_etcd|bool else '' }}"

# Valid options are [ nfs, swift, ceph ]

#cinder_backup_driver: "ceph"

#cinder_backup_share: ""

#cinder_backup_mount_options_nfs: ""

#######################

# Cloudkitty options

#######################

# Valid option is gnocchi

#cloudkitty_collector_backend: "gnocchi"

# Valid options are 'sqlalchemy' or 'influxdb'. The default value is

# 'influxdb', which matches the default in Cloudkitty since the Stein release.

# When the backend is "influxdb", we also enable Influxdb.

# Also, when using 'influxdb' as the backend, we trigger the configuration/use

# of Cloudkitty storage backend version 2.

#cloudkitty_storage_backend: "influxdb"

###################

# Designate options

###################

# Valid options are [ bind9 ]

#designate_backend: "bind9"

#designate_ns_record: "sample.openstack.org"

# Valid options are [ '', redis ]

#designate_coordination_backend: "{{ 'redis' if enable_redis|bool else '' }}"

########################

# Nova - Compute Options

########################

nova_backend_ceph: "yes"

# Valid options are [ qemu, kvm, vmware, xenapi ]

nova_compute_virt_type: "qemu"

# The number of fake driver per compute node

#num_nova_fake_per_node: 5

# The flag "nova_safety_upgrade" need to be consider when

# "nova_enable_rolling_upgrade" is enabled. The "nova_safety_upgrade"

# controls whether the nova services are all stopped before rolling

# upgrade to the new version, for the safety and availability.

# If "nova_safety_upgrade" is "yes", that will stop all nova services (except

# nova-compute) for no failed API operations before upgrade to the

# new version. And opposite.

#nova_safety_upgrade: "no"

# Valid options are [ none, novnc, spice, rdp ]

#nova_console: "novnc"

##############################

# Neutron - networking options

##############################

# Enable distributed floating ip for OVN deployments

#neutron_ovn_distributed_fip: "no"

#################

# Hyper-V options

#################

# NOTE: Hyper-V support has been deprecated and will be removed in the Victoria cycle.

# Hyper-V can be used as hypervisor

#hyperv_username: "user"

#hyperv_password: "password"

#vswitch_name: "vswitch"

# URL from which Nova Hyper-V MSI is downloaded

#nova_msi_url: "https://www.cloudbase.it/downloads/HyperVNovaCompute_Beta.msi"

#############################

# Horizon - Dashboard Options

#############################

#horizon_backend_database: "{{ enable_murano | bool }}"

#############################

# Ironic options

#############################

# dnsmasq bind interface for Ironic Inspector, by default is network_interface

#ironic_dnsmasq_interface: "{{ network_interface }}"

# The following value must be set when enabling ironic,

# the value format is "192.168.0.10,192.168.0.100".

#ironic_dnsmasq_dhcp_range:

# PXE bootloader file for Ironic Inspector, relative to /tftpboot.

#ironic_dnsmasq_boot_file: "pxelinux.0"

# Configure ironic upgrade option, due to currently kolla support

# two upgrade ways for ironic: legacy_upgrade and rolling_upgrade

# The variable "ironic_enable_rolling_upgrade: yes" is meaning rolling_upgrade

# were enabled and opposite

# Rolling upgrade were enable by default

#ironic_enable_rolling_upgrade: "yes"

# List of extra kernel parameters passed to the kernel used during inspection

#ironic_inspector_kernel_cmdline_extras: []

######################################

# Manila - Shared File Systems Options

######################################

# HNAS backend configuration

#hnas_ip:

#hnas_user:

#hnas_password:

#hnas_evs_id:

#hnas_evs_ip:

#hnas_file_system_name:

################################

# Swift - Object Storage Options

################################

# Swift expects block devices to be available for storage. Two types of storage

# are supported: 1 - storage device with a special partition name and filesystem

# label, 2 - unpartitioned disk with a filesystem. The label of this filesystem

# is used to detect the disk which Swift will be using.

# Swift support two matching modes, valid options are [ prefix, strict ]

#swift_devices_match_mode: "strict"

# This parameter defines matching pattern: if "strict" mode was selected,

# for swift_devices_match_mode then swift_device_name should specify the name of

# the special swift partition for example: "KOLLA_SWIFT_DATA", if "prefix" mode was

# selected then swift_devices_name should specify a pattern which would match to

# filesystems' labels prepared for swift.

#swift_devices_name: "KOLLA_SWIFT_DATA"

# Configure swift upgrade option, due to currently kolla support

# two upgrade ways for swift: legacy_upgrade and rolling_upgrade

# The variable "swift_enable_rolling_upgrade: yes" is meaning rolling_upgrade

# were enabled and opposite

# Rolling upgrade were enable by default

#swift_enable_rolling_upgrade: "yes"

################################################

# Tempest - The OpenStack Integration Test Suite

################################################

# The following values must be set when enabling tempest

#tempest_image_id:

#tempest_flavor_ref_id:

#tempest_public_network_id:

#tempest_floating_network_name:

# tempest_image_alt_id: "{{ tempest_image_id }}"

# tempest_flavor_ref_alt_id: "{{ tempest_flavor_ref_id }}"

###################################

# VMware - OpenStack VMware support

###################################

# NOTE: VMware support has been deprecated and will be removed in the Victoria cycle.

#vmware_vcenter_host_ip:

#vmware_vcenter_host_username:

#vmware_vcenter_host_password:

#vmware_datastore_name:

#vmware_vcenter_name:

#vmware_vcenter_cluster_name:

#######################################

# XenAPI - Support XenAPI for XenServer

#######################################

# NOTE: XenAPI support has been deprecated and will be removed in the Victoria cycle.

# XenAPI driver use HIMN(Host Internal Management Network)

# to communicate with XenServer host.

#xenserver_himn_ip:

#xenserver_username:

#xenserver_connect_protocol:

############

# Prometheus

############

#enable_prometheus_server: "{{ enable_prometheus | bool }}"

#enable_prometheus_haproxy_exporter: "{{ enable_haproxy | bool }}"

#enable_prometheus_mysqld_exporter: "{{ enable_mariadb | bool }}"

#enable_prometheus_node_exporter: "{{ enable_prometheus | bool }}"

#enable_prometheus_cadvisor: "{{ enable_prometheus | bool }}"

#enable_prometheus_memcached: "{{ enable_prometheus | bool }}"

#enable_prometheus_alertmanager: "{{ enable_prometheus | bool }}"

#enable_prometheus_ceph_mgr_exporter: "no"

#enable_prometheus_openstack_exporter: "{{ enable_prometheus | bool }}"

#enable_prometheus_elasticsearch_exporter: "{{ enable_prometheus | bool and enable_elasticsearch | bool }}"

#enable_prometheus_blackbox_exporter: "{{ enable_prometheus | bool }}"

# List of extra parameters passed to prometheus. You can add as many to the list.

#prometheus_cmdline_extras:

# Example of setting endpoints for prometheus ceph mgr exporter.

# You should add all ceph mgr's in your external ceph deployment.

#prometheus_ceph_mgr_exporter_endpoints:

# - host1:port1

# - host2:port2

#########

# Freezer

#########

# Freezer can utilize two different database backends, elasticsearch or mariadb.

# Elasticsearch is preferred, however it is not compatible with the version deployed

# by kolla-ansible. You must first setup an external elasticsearch with 2.3.0.

# By default, kolla-ansible deployed mariadb is the used database backend.

#freezer_database_backend: "mariadb"

##########

# Telegraf

##########

# Configure telegraf to use the docker daemon itself as an input for

# telemetry data.

#telegraf_enable_docker_input: "no"
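Because the file above is mostly commented-out defaults, it can help to view only the lines that are actually in effect. The following is a small sketch, not part of the original procedure; the function names are hypothetical, and `enabled_services` only recognizes options set to a literal "yes", not those derived from Jinja2 expressions.

```shell
#!/usr/bin/env sh
# Print only the active (uncommented, non-blank) lines of a YAML file.
active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# List the service names whose enable_<name> option is set to a literal "yes".
enabled_services() {
    active_settings "$1" | sed -n 's/^enable_\([a-z0-9_]*\): *"yes".*/\1/p'
}
```

For example, `active_settings /etc/kolla/globals.yml` shows the overrides, and `enabled_services /etc/kolla/globals.yml` lists the explicitly enabled services.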

4.1.2 Prechecks and download

Precheck command

[root@controller1 kolla]# kolla-ansible -i /root/ansible/all-in-one prechecks

Pre-deployment checking : ansible-playbook -i /root/ansible/all-in-one -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=precheck /usr/local/share/kolla-ansible/ansible/site.yml

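The directories listed below must exist when the prechecks run; otherwise the image download may be affected. My setup lacks the swift folder, so the prechecks reported one error, but it is harmless because my Ceph cluster is not integrated with Swift.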

[root@controller1 config]# cd cinder/

[root@controller1 cinder]# ll

total 4

-rw-r--r-- 1 root root 110 Dec 14 16:26 ceph.conf

drwxr-xr-x 2 root root 81 Dec 14 16:23 cinder-backup

drwxr-xr-x 2 root root 40 Dec 14 16:23 cinder-volume

[root@controller1 cinder]# cd ../

[root@controller1 config]# ll

total 0

drwxr-xr-x 4 root root 65 Dec 14 16:26 cinder

drwxr-xr-x 2 root root 57 Dec 14 15:58 glance

drwxr-xr-x 2 root root 74 Dec 14 16:37 nova

[root@controller1 config]# pwd

/etc/kolla/config

[root@controller1 config]#
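The layout in the listing above can be checked before running the prechecks. The sketch below reports which of the directories shown there are missing. The function name `missing_config_dirs` is hypothetical, and the directory list is taken only from the listing, so it does not cover the swift folder or the files inside each directory.

```shell
#!/usr/bin/env sh
# Print each expected sub-directory that is missing under the given base
# directory (default: /etc/kolla/config). Prints nothing when all exist.
missing_config_dirs() {
    base="${1:-/etc/kolla/config}"
    for dir in cinder cinder/cinder-backup cinder/cinder-volume glance nova; do
        [ -d "$base/$dir" ] || printf '%s\n' "$base/$dir"
    done
}
```

Calling `missing_config_dirs` with no argument checks `/etc/kolla/config`; empty output means every directory from the listing is present.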

Image download command

nohup kolla-ansible -v -i /root/ansible/all-in-one pull &
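Since the pull runs in the background under `nohup`, its output goes to `nohup.out` in the current directory unless redirected, and `tail -f nohup.out` follows the progress. As a rough sketch for checking the result afterwards, the helper below scans that log for Ansible's PLAY RECAP and treats any non-zero `failed=` or `unreachable=` counter as a failure. The function name `pull_succeeded` is hypothetical.

```shell
#!/usr/bin/env sh
# Succeed only if the log contains a PLAY RECAP section and no host
# reports a non-zero "failed" or "unreachable" counter.
pull_succeeded() {
    log="${1:-nohup.out}"
    grep -q 'PLAY RECAP' "$log" || return 1
    ! grep -Eq '(failed|unreachable)=[1-9]' "$log"
}
```

For example, `pull_succeeded nohup.out && echo "all images pulled"`. A run that is still in progress has no PLAY RECAP yet, so the check fails until the playbook finishes.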

4.1.3 Download images with a script

The following script can also pull the vast majority of the images.

#!/usr/bin/env sh

FILE="

centos-source-aodh-api

centos-source-aodh-base

centos-source-aodh-evaluator

centos-source-aodh-expirer

centos-source-aodh-listener

centos-source-aodh-notifier

centos-source-barbican-api

centos-source-barbican-base

centos-source-barbican-keystone-listener

centos-source-barbican-worker

centos-source-base

centos-source-bifrost-base

centos-source-bifrost-deploy

centos-source-ceilometer-api

centos-source-ceilometer-base

centos-source-ceilometer-central

centos-source-ceilometer-collector

centos-source-ceilometer-compute

centos-source-ceilometer-notification

centos-source-ceph-base

centos-source-cephfs-fuse

centos-source-ceph-mds

centos-source-ceph-mon

centos-source-ceph-osd

centos-source-ceph-rgw

centos-source-chrony

centos-source-cinder-api

centos-source-cinder-backup

centos-source-cinder-base

centos-source-cinder-scheduler

centos-source-cinder-volume

centos-source-cloudkitty-api

centos-source-cloudkitty-base

centos-source-cloudkitty-processor

centos-source-collectd

centos-source-congress-api

centos-source-congress-base

centos-source-congress-datasource

centos-source-congress-policy-engine

centos-source-cron

centos-source-designate-api

centos-source-designate-backend-bind9

centos-source-designate-base

centos-source-designate-central

centos-source-designate-mdns

centos-source-designate-pool-manager

centos-source-designate-sink

centos-source-designate-worker

centos-source-dind

centos-source-dnsmasq

centos-source-elasticsearch

centos-source-etcd

centos-source-fluentd

centos-source-freezer-api

centos-source-freezer-base

centos-source-glance-api

centos-source-glance-base

centos-source-glance-registry

centos-source-gnocchi-api

centos-source-gnocchi-base

centos-source-gnocchi-metricd

centos-source-gnocchi-statsd

centos-source-grafana

centos-source-haproxy

centos-source-heat-api

centos-source-heat-api-cfn

centos-source-heat-base

centos-source-heat-engine

centos-source-heka

centos-source-helm-repository

centos-source-horizon

centos-source-influxdb

centos-source-ironic-api

centos-source-ironic-base

centos-source-ironic-conductor

centos-source-ironic-inspector

centos-source-ironic-pxe

centos-source-iscsid

centos-source-karbor-api

centos-source-karbor-base

centos-source-karbor-operationengine

centos-source-karbor-protection

centos-source-keepalived

centos-source-keystone

centos-source-keystone-base

centos-source-keystone-fernet

centos-source-keystone-ssh

centos-source-kibana

centos-source-kolla-toolbox

centos-source-kube-apiserver-amd64

centos-source-kube-base

centos-source-kube-controller-manager-amd64

centos-source-kube-discovery-amd64

centos-source-kube-proxy-amd64

centos-source-kubernetes-entrypoint

centos-source-kube-scheduler-amd64

centos-source-kubetoolbox

centos-source-kuryr-base

centos-source-kuryr-libnetwork

centos-source-magnum-api

centos-source-magnum-base

centos-source-magnum-conductor

centos-source-manila-api

centos-source-manila-base

centos-source-manila-data

centos-source-manila-scheduler

centos-source-manila-share

centos-source-mariadb

centos-source-memcached

centos-source-mistral-api

centos-source-mistral-base

centos-source-mistral-engine

centos-source-mistral-executor

centos-source-monasca-api

centos-source-monasca-base

centos-source-monasca-log-api

centos-source-monasca-notification

centos-source-monasca-persister

centos-source-monasca-statsd

centos-source-mongodb

centos-source-multipathd

centos-source-murano-api

centos-source-murano-base

centos-source-murano-engine

centos-source-neutron-base

centos-source-neutron-dhcp-agent

centos-source-neutron-l3-agent

centos-source-neutron-lbaas-agent

centos-source-neutron-linuxbridge-agent

centos-source-neutron-metadata-agent

centos-source-neutron-metering-agent

centos-source-neutron-openvswitch-agent

centos-source-neutron-server

centos-source-neutron-sfc-agent

centos-source-neutron-vpnaas-agent

centos-source-nova-api

centos-source-nova-base

centos-source-nova-compute

centos-source-nova-compute-ironic

centos-source-nova-conductor

centos-source-nova-consoleauth

centos-source-nova-libvirt

centos-source-nova-novncproxy

centos-source-nova-placement-api

centos-source-nova-scheduler

centos-source-nova-serialproxy

centos-source-nova-spicehtml5proxy

centos-source-nova-ssh

centos-source-octavia-api

centos-source-octavia-base

centos-source-octavia-health-manager

centos-source-octavia-housekeeping

centos-source-octavia-worker

centos-source-openstack-base

centos-source-openvswitch-base

centos-source-openvswitch-db-server

centos-source-openvswitch-vswitchd

centos-source-panko-api

centos-source-panko-base

centos-source-rabbitmq

centos-source-rally

centos-source-redis

centos-source-sahara-api

centos-source-sahara-base

centos-source-sahara-engine

centos-source-searchlight-api

centos-source-searchlight-base

centos-source-searchlight-listener

centos-source-senlin-api

centos-source-senlin-base

centos-source-senlin-engine

centos-source-solum-api

centos-source-solum-base

centos-source-solum-conductor

centos-source-solum-deployer

centos-source-solum-worker

centos-source-swift-account

centos-source-swift-base

centos-source-swift-container

centos-source-swift-object

centos-source-swift-object-expirer

centos-source-swift-proxy-server

centos-source-swift-rsyncd

centos-source-tacker

centos-source-telegraf

centos-source-tempest

centos-source-tgtd

centos-source-trove-api

centos-source-trove-base

centos-source-trove-conductor

centos-source-trove-guestagent

centos-source-trove-taskmanager

centos-source-vitrage-api

centos-source-vitrage-base

centos-source-vitrage-graph

centos-source-vitrage-notifier

centos-source-vmtp

centos-source-watcher-api

centos-source-watcher-applier

centos-source-watcher-base

centos-source-watcher-engine

centos-source-zaqar

centos-source-zookeeper

centos-source-zun-api

centos-source-zun-base

centos-source-zun-compute

"

for Name in $FILE

do

echo docker pull kolla/$Name:ussuri

sleep 1

docker pull kolla/$Name:ussuri

done

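(2) Transferring all OpenStack Ussuri images to the intranet

4.2.1 Saving the images on the internet-facing machine

As shown below, 194 images have been downloaded.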

[root@controller1 scripts]# docker images | grep ussuri | wc -l

194

Create the target directory, then run the save command:

[root@controller1 openstackussuriimages]# mkdir -p /data/openstackussuriimages/kolla

[root@controller1 openstackussuriimages]# for i in ` docker images | grep ussuri|awk '{print $1}'`;do docker save $i:ussuri >/data/openstackussuriimages/$i.tar; gzip /data/openstackussuriimages/$i.tar; done
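The loop above writes an uncompressed tar file and compresses it in a second pass. A possible variant, sketched below, pipes `docker save` straight into `gzip` so that no intermediate tar file is written. The helper name `save_images` and its two arguments are illustrative assumptions and are not part of the original procedure.

```shell
#!/bin/bash
# Sketch: save every local image carrying a given tag to <outdir>/<repository>.tar.gz.
# save_images is a hypothetical helper; tag and outdir are its assumed arguments.
save_images() {
    local tag=$1 outdir=$2 repo
    # List "repository tag" pairs and keep the repositories whose tag matches.
    docker images --format '{{.Repository}} {{.Tag}}' |
        awk -v t="$tag" '$2 == t {print $1}' |
        while read -r repo; do
            # Repositories look like kolla/centos-source-xxx, so create the subdirectory first.
            mkdir -p "$outdir/$(dirname "$repo")"
            docker save "$repo:$tag" | gzip > "$outdir/$repo.tar.gz"
        done
}

# Example (not run here): save_images ussuri /data/openstackussuriimages
```

`docker load` can read gzip-compressed archives directly, so the files do not have to be decompressed on the intranet side.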

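4.2.2 Uploading to the intranet image registry

Upload these image archives to the internal image registry.

4.2.2.1 Loading the images into Docker on the intranet host

All tar archives have been copied to the intranet machine, into the following directory: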

-rw-r--r-- 1 root root 982262784 Dec 30 19:56 centos-source-zun-base.tar

-rw-r--r-- 1 root root 1152483840 Dec 30 19:56 centos-source-zun-compute.tar

[root@kolla 20201230]# pwd

/root/software/ussuriimages/20201230

[root@kolla 20201230]#

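Use the script below to load the images in bulk.

#!/bin/bash

# Load every image archive in the directory passed as the first argument.

path=$1

cd "$path" || exit 1

for filename in *

do

echo "$filename"

docker load < "$filename"

done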

[root@kolla scripts]# ./dockerload.sh /root/software/ussuriimages/20201230/

centos-source-aodh-api.tar

2653d992f4ef: Loading layer [==================================================>] 216.5MB/216.5MB

5b147a2d1ba3: Loading layer [==================================================>] 84.48kB/84.48kB

ce23142c7aea: Loading layer [==================================================>] 4.608kB/4.608kB

All images are now loaded into Docker:

kolla/centos-source-prometheus-cadvisor ussuri 2b21f36d3022 2 weeks ago 330MB

kolla/centos-source-prometheus-openstack-exporter ussuri caeb686cb764 2 weeks ago 311MB

kolla/centos-source-storm ussuri 72f5cb7701e5 2 weeks ago 651MB

kolla/centos-source-grafana ussuri 38fe1e81d92c 2 weeks ago 516MB

[root@kolla scripts]# docker images | grep ussuri |wc -l

194

[root@kolla scripts]#

Next, push these images to the local image registry. Its address is registry.chouniu.fun, and HTTPS is enabled.

First, retag the images with the registry.chouniu.fun prefix:

for i in $(docker images | grep -v registry | grep ussuri | awk '{print $1}'); do docker image tag $i:ussuri registry.chouniu.fun/$i:ussuri; done

[root@kolla scripts]# for i in `docker images|grep -v registry | grep ussuri|awk '{print $1}'`;do docker image tag $i:ussuri registry.chouniu.fun/$i:ussuri;done

[root@kolla scripts]# docker images | grep registry.chouniu.fun

registry.chouniu.fun/kolla/centos-source-octavia-api ussuri a80665d35290 3 days ago 875MB

Then push the images to the registry:

for i in $(docker images | grep registry.chouniu.fun | grep ussuri | awk '{print $1}'); do docker push $i:ussuri; done

5 Deployment

(1) Setting up the three-node Ceph cluster

| Hostname | IP | Disks | Roles |
| ---- | ---- | ---- | ---- |
| cephtest001.ceph.chouniu.fun | 10.3.176.10 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd | ceph-deploy, monitor, mgr, mds, osd |
| cephtest002.ceph.chouniu.fun | 10.3.176.16 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf | monitor, mgr, mds, osd |
| cephtest003.ceph.chouniu.fun | 10.3.176.44 | System disk: /dev/sda; data disks: /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg | monitor, mgr, mds, osd |

For details, see the following blog post:

Installing Ceph on three physical machines

Current state of the Ceph cluster:

[dev@10-3-170-32 ~]$ ssh root@10.3.176.10

Last login: Fri Dec 25 15:11:31 2020

[root@cephtest001 ~]# su - cephadmin

Last login: Fri Dec 25 15:12:17 CST 2020 on pts/0

[cephadmin@cephtest001 ~]$ ceph -s

cluster:

id: 6cd05235-66dd-4929-b697-1562d308d5c3

health: HEALTH_OK

services:

mon: 3 daemons, quorum cephtest001,cephtest002,cephtest003 (age 6h)

mgr: cephtest001(active, since 6d), standbys: cephtest002, cephtest003

osd: 14 osds: 14 up (since 6h), 14 in (since 6h)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 14 GiB used, 52 TiB / 52 TiB avail

pgs:

[cephadmin@cephtest001 ~]$

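(2) Integrating Ceph block devices with OpenStack: Ceph-side configuration

This section integrates Ceph 14.2.15 with OpenStack Ussuri, following the official Ceph documentation, [Block Devices and OpenStack](https://docs.ceph.com/en/nautilus/rbd/rbd-openstack/#).

5.2.1 Creating the pools required on the Ceph cluster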

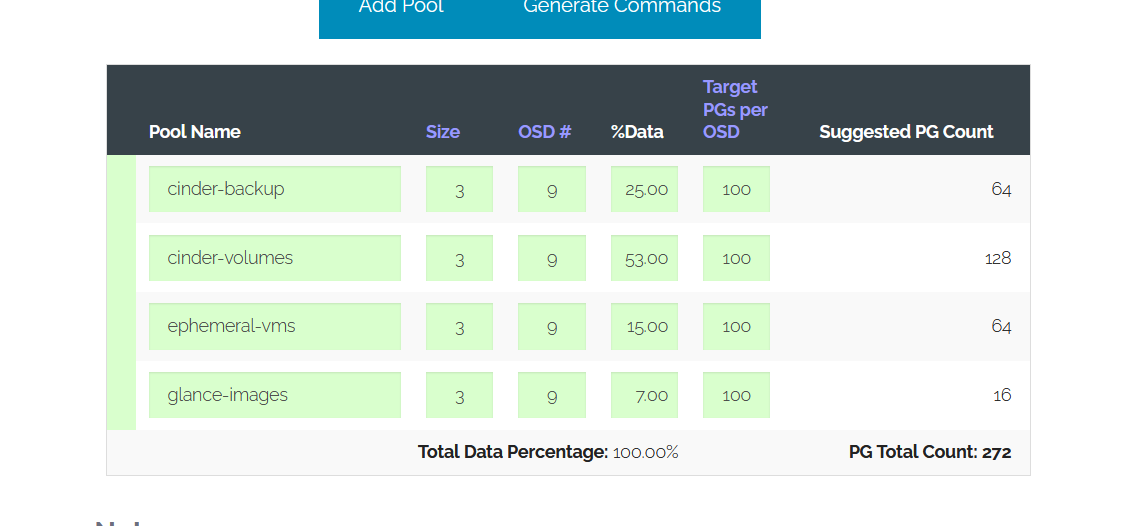

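This cluster has 14 OSDs in total, unevenly distributed across the three physical machines (3 + 5 + 6). I used 3 × 3 = 9 OSDs as the basis for the PG count calculation.

First, work out the PG counts with the PG calculator on the official Ceph website.

Run the following commands on the Ceph deployment node: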
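As a rough cross-check of the calculator, the common sizing rule can be sketched in shell: total PGs ≈ OSDs × target PGs per OSD ÷ replica count, rounded to a power of two. The target of 100 PGs per OSD and the replica count of 3 are assumptions here (the text does not state which values were entered), and `pg_total` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch of the usual sizing rule: total PGs ~= OSDs * target PGs per OSD / replicas,
# rounded to a power of two. pg_total is a hypothetical helper name.
pg_total() {
    local osds=$1 target_per_osd=$2 replicas=$3
    local raw=$(( osds * target_per_osd / replicas ))
    local pow=1
    # Find the largest power of two that does not exceed the raw value.
    while (( pow * 2 <= raw )); do
        pow=$(( pow * 2 ))
    done
    # If that power of two is more than 25% below the raw value, step up to the next one.
    if (( pow * 4 < raw * 3 )); then
        pow=$(( pow * 2 ))
    fi
    echo "$pow"
}

pg_total 9 100 3   # 9 OSDs, assumed 100 PGs per OSD, assumed 3 replicas
```

Under these assumptions the budget for all pools together comes out at 256 PGs, which is in the same range as the 128 + 64 + 64 + 16 = 272 PGs used for the four pools in this section.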

[cephadmin@cephtest001 cephcluster]$ ceph osd pool create volumes 128

pool 'volumes' created

[cephadmin@cephtest001 cephcluster]$ ceph osd pool create backups 64

pool 'backups' created

[cephadmin@cephtest001 cephcluster]$ ceph osd pool create vms 64

pool 'vms' created

[cephadmin@cephtest001 cephcluster]$ ceph osd pool create images 16

pool 'images' created

[cephadmin@cephtest001 cephcluster]$

Verify that the pools have been created:

[cephadmin@cephtest001 cephcluster]$ ceph osd lspools

1 volumes

2 backups

3 vms

4 images

[cephadmin@cephtest001 cephcluster]$

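5.2.2 Initializing the pools

The official documentation notes: "Newly created pools must be initialized prior to use. Use the rbd tool to initialize the pools:"

Some blog posts covering Nautilus skip this step; for now I follow the official documentation.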

[cephadmin@cephtest001 cephcluster]$ rbd pool init volumes

[cephadmin@cephtest001 cephcluster]$ rbd pool init images

[cephadmin@cephtest001 cephcluster]$ rbd pool init backups

[cephadmin@cephtest001 cephcluster]$ rbd pool init vms

[cephadmin@cephtest001 cephcluster]$

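5.2.3 Creating the authorization entries

The official documentation states: "If you have cephx authentication enabled, create a new user for Nova/Cinder and Glance. Execute the following:"

We grant OpenStack only the minimum capabilities it needs to use the Ceph cluster.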

[cephadmin@cephtest001 cephcluster]$ pwd

/home/cephadmin/cephcluster

[cephadmin@cephtest001 cephcluster]$ ceph auth get-or-create client.glance mon 'profile rbd' osd 'profile rbd pool=images' mgr 'profile rbd pool=images'

[client.glance]

key = AQDdiu1frWDzBBAA8PHskef3x4515c3qHn7rWA==

[cephadmin@cephtest001 cephcluster]$ ceph auth get-or-create client.cinder mon 'profile rbd' osd 'profile rbd pool=volumes, profile rbd pool=vms, profile rbd-read-only pool=images' mgr 'profile rbd pool=volumes, profile rbd pool=vms'

[client.cinder]

key = AQDpiu1f5ARcORAAKoM0AHYwm8lvDg0zAGwfLA==

[cephadmin@cephtest001 cephcluster]$ ceph auth get-or-create client.cinder-backup mon 'profile rbd' osd 'profile rbd pool=backups' mgr 'profile rbd pool=backups'

[client.cinder-backup]

key = AQD1iu1fPaVZMhAA5aPqEsG/rkSyn/r+rrxacw==

[cephadmin@cephtest001 cephcluster]$ ls

List the configured authorization entries with ceph auth list:

[cephadmin@cephtest001 cephcluster]$ ceph auth list

installed auth entries:

osd.0

key: AQDwOOVfdLOTAhAAhdiOWlejKFkfpSj4bahzRg==

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.1

key: AQD7OOVfiydyGhAAZsCYkAdaFCP+VZm2z7b1xg==

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.10

key: AQBjQOVfg0c0GBAAlr+fyCCMhetVCszhjXBsTw==

caps: [mgr] allow profile osd

[cephadmin@cephtest001 cephcluster]$ ceph auth list | grep cinder

installed auth entries:

client.cinder

client.cinder-backup

[cephadmin@cephtest001 cephcluster]$

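(3) Deploying OpenStack on three nodes

The deployment follows this article: Offline deployment of an OpenStack Ussuri cluster (1 controller node + 1 compute node).

5.3.1 Base installation

All three nodes need DNS configuration, updated yum repositories, Docker, the firewall and SELinux disabled, and time synchronization.

5.3.1.1 Configuring DNS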

[dev@10-3-170-32 base]$ ll

total 20

-rw-rw-r-- 1 dev dev 1417 Dec 24 08:46 2_installzabbixagentbyrpm.yml

-rw-rw-r-- 1 dev dev 176 Dec 24 10:10 closefirewalldandselinux.yml

-rw-rw-r-- 1 dev dev 786 Dec 24 16:12 modifychronyclient.yml

-rw-rw-r-- 1 dev dev 2178 Dec 24 08:56 modifydns.yml

-rw-rw-r-- 1 dev dev 3509 Dec 24 15:59 upateyum.yml

[dev@10-3-170-32 base]$ ansible-playbook modifydns.yml

5.3.1.2 Updating the yum repositories

[dev@10-3-170-32 base]$ ansible-playbook upateyum.yml

[DEPRECATION WARNING]: The TRANSFORM_INVALID_GROUP_CHARS settings is set to allow bad characters in group names by default, this will change,

but still be user configurable on deprecation. This feature will be removed in version 2.10. Deprecation warnings can be disabled by setting

deprecation_warnings=False in ansible.cfg.

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [00base] *********************************************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

ok: [10.3.176.2]

ok: [10.3.176.35]

ok: [10.3.176.37]

5.3.1.3 Time synchronization

[dev@10-3-170-32 base]$ ansible-playbook modifychronyclient.yml

[DEPRECATION WARNING]: The TRANSFORM_INVALID_GROUP_CHARS settings is set to allow bad characters in group names by default, this will change,

but still be user configurable on deprecation. This feature will be removed in version 2.10. Deprecation warnings can be disabled by setting

deprecation_warnings=False in ansible.cfg.

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [00base] *********************************************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

ok: [10.3.176.2]

ok: [10.3.176.37]

ok: [10.3.176.35]

5.3.1.4 Disabling the firewall and SELinux

[dev@10-3-170-32 base]$ ansible-playbook closefirewalldandselinux.yml

[DEPRECATION WARNING]: The TRANSFORM_INVALID_GROUP_CHARS settings is set to allow bad characters in group names by default, this will change,

but still be user configurable on deprecation. This feature will be removed in version 2.10. Deprecation warnings can be disabled by setting

deprecation_warnings=False in ansible.cfg.

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [00base] *********************************************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

ok: [10.3.176.2]

ok: [10.3.176.37]

ok: [10.3.176.35]

5.3.1.5 Configuring name resolution and setting the hostnames

If the intranet has a DNS service, add the following records to it:

ussuritest001.cloud.chouniu.fun 10.3.176.2

ussuritest002.cloud.chouniu.fun 10.3.176.35

ussuritest003.cloud.chouniu.fun 10.3.176.37

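If there is no DNS service, add the corresponding entries to the hosts file on all three machines.

Setting the hostname makes it easy to tell which machine you are on. Do this on all three machines: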
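For the hosts-file route, a small idempotent helper avoids duplicate entries when the step is repeated. This is only a sketch: `add_host_entry` is a hypothetical function name, and the example calls assume the three name/IP pairs listed above.

```shell
#!/bin/bash
# Sketch: append "IP hostname" to a hosts file unless the hostname is already present.
# add_host_entry is a hypothetical helper name.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # -w matches whole words only, -F treats the hostname as a fixed string.
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example (not run here), as root on each of the three machines:
#   add_host_entry /etc/hosts 10.3.176.2  ussuritest001.cloud.chouniu.fun
#   add_host_entry /etc/hosts 10.3.176.35 ussuritest002.cloud.chouniu.fun
#   add_host_entry /etc/hosts 10.3.176.37 ussuritest003.cloud.chouniu.fun
```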

[root@kolla docker]# hostnamectl set-hostname ussuritest001.cloud.chouniu.fun

[root@kolla docker]# reboot

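5.3.1.6 Renaming the network interfaces

To simplify later configuration, I renamed the network interfaces to eth0 and eth1. For details, see: Renaming network interfaces on CentOS 7 and CentOS 8.

5.3.1.7 Installing Docker

I currently have Docker 18.03 installed; it is required on all three nodes.

The Docker yum repository is already configured here, so yum install docker-ce is enough. The installation may fail, mainly because of dependency compatibility issues.

For handling Docker installation errors, see: Offline all-in-one deployment of OpenStack Ussuri.

The Docker service configuration also needs a change.

Create /etc/systemd/system/docker.service.d/kolla.conf: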

[root@ussuricontroller1 ~]# cd /etc/systemd/system/

[root@ussuricontroller1 system]# mkdir docker.service.d

[root@ussuricontroller1 system]# cd docker.service.d/

[root@ussuricontroller1 docker.service.d]# vi kolla.conf

[root@ussuricontroller1 docker.service.d]# ll

File contents:

[Service]

MountFlags=shared

Restart Docker:

[root@ussuricontroller1 docker.service.d]# systemctl daemon-reload

[root@ussuricontroller1 docker.service.d]# systemctl restart docker

[root@ussuricontroller1 docker.service.d]#
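Since this drop-in is needed on all three nodes, writing it without an interactive editor may be convenient. The sketch below produces the same two-line file; `write_kolla_dropin` is a hypothetical helper name, and the optional directory argument exists only so the function can be tried out somewhere other than /etc.

```shell
#!/bin/bash
# Sketch: create the systemd drop-in that sets MountFlags=shared for Docker.
# write_kolla_dropin is a hypothetical helper; the directory argument is optional.
write_kolla_dropin() {
    local dir=${1:-/etc/systemd/system/docker.service.d}
    mkdir -p "$dir"
    printf '[Service]\nMountFlags=shared\n' > "$dir/kolla.conf"
}

# Example (not run here), as root on each node:
#   write_kolla_dropin && systemctl daemon-reload && systemctl restart docker
```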

5.3.1.8 Disabling libvirt

[root@ussuritest001 ussuri]# systemctl stop libvirtd.service && systemctl disable libvirtd.service && systemctl status libvirtd.service

● libvirtd.service - Virtualization daemon

Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:libvirtd(8)

https://libvirt.org

[root@ussuritest001 ussuri]#

5.3.1.9 Installing the base dependency packages

CentOS 8 uses dnf to install RPM packages. Install the base dependencies: python3-devel libffi-devel gcc openssl-devel python3-libselinux git vim bash-completion net-tools.

[root@ussuritest001 ussuri]# cd dependencies/

[root@ussuritest001 dependencies]# ll

total 31224

-rw-r--r-- 1 root root 280084 Jun 17 2020 bash-completion-2.7-5.el8.noarch.rpm

-rw-r--r-- 1 root root 24564532 Jun 17 2020 gcc-8.3.1-4.5.el8.x86_64.rpm

-rw-r--r-- 1 root root 190956 Jun 17 2020 git-2.18.2-2.el8_1.x86_64.rpm

-rw-r--r-- 1 root root 29396 Jun 17 2020 libffi-devel-3.1-21.el8.i686.rpm

-rw-r--r-- 1 root root 29376 Jun 17 2020 libffi-devel-3.1-21.el8.x86_64.rpm

-rw-r--r-- 1 root root 330916 Jun 17 2020 net-tools-2.0-0.51.20160912git.el8.x86_64.rpm

-rw-r--r-- 1 root root 2395376 Jun 17 2020 openssl-devel-1.1.1c-2.el8_1.1.i686.rpm

-rw-r--r-- 1 root root 2395344 Jun 17 2020 openssl-devel-1.1.1c-2.el8_1.1.x86_64.rpm

-rw-r--r-- 1 root root 290084 Jun 17 2020 python3-libselinux-2.9-2.1.el8.x86_64.rpm

-rw-r--r-- 1 root root 16570 Jun 17 2020 python36-devel-3.6.8-2.module_el8.1.0+245+c39af44f.x86_64.rpm

-rw-r--r-- 1 root root 1427224 Jun 17 2020 vim-enhanced-8.0.1763-13.el8.x86_64.rpm

[root@ussuritest001 dependencies]# dnf install python3-devel libffi-devel gcc openssl-devel python3-libselinux git vim bash-completion net-tools

5.3.1.10 Installing pip

CentOS 8.1 ships with Python 3, and pip3.6 is installed by default, so we only need to create a symlink.

[root@ussuricontroller1 ~]# whereis pip3.6

pip3: /usr/bin/pip3 /usr/bin/pip3.6 /usr/share/man/man1/pip3.1.gz

[root@ussuricontroller1 ~]# ln -s /usr/bin/pip3.6 /usr/bin/pip

[root@ussuricontroller1 ~]# pip -V

pip 9.0.3 from /usr/lib/python3.6/site-packages (python 3.6)

[root@ussuricontroller1 ~]#

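5.3.2 Base installation on the deployment server

The deployment server needs Ansible, kolla, kolla-ansible, and related tools installed.

5.3.2.1 Installing Ansible

Install it directly from the intranet yum repository.

Kolla Ansible has requirements on the Ansible version: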

https://docs.openstack.org/kolla-ansible/ussuri/user/quickstart.html

Install Ansible. Kolla Ansible requires at least Ansible 2.8 and supports up to 2.9.

[root@ussuritest001 ussuri]# ansible --version

ansible 2.9.10

config file = /etc/ansible/ansible.cfg

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python3.6/site-packages/ansible

executable location = /usr/bin/ansible

python version = 3.6.8 (default, Nov 21 2019, 19:31:34) [GCC 8.3.1 20190507 (Red Hat 8.3.1-4)]

[root@ussuritest001 ussuri]#

Tuning the configuration file

I tuned the Ansible configuration file /etc/ansible/ansible.cfg.

Back up the original file first: cp /etc/ansible/ansible.cfg /etc/ansible/ansible.cfg.bak.orig

Then add the following:

[defaults]

inventory = $HOME/ansible/hosts

host_key_checking=False

pipelining=True

forks=10

5.3.2.2 Installing kolla

Extract the source archive:

[root@ussuritest001 ussuri]# unzip kolla-10.1.0.zip

Initialize a Git repository in the source tree:

[root@ussuritest001 kolla-10.1.0]# git init

Initialized empty Git repository in /root/software/ussuri/kolla-10.1.0/.git/

[root@ussuritest001 kolla-10.1.0]# pwd

/root/software/ussuri/kolla-10.1.0

[root@ussuritest001 kolla-10.1.0]#

Install the dependencies:

[root@ussuritest001 kollapip]# pip install --no-index --find-links=/root/software/ussuri/kollapip -r /root/software/ussuri/kolla-10.1.0/requirements.txt

Install kolla:

[root@ussuritest001 kolla-10.1.0]# pip install /root/software/ussuri/kolla-10.1.0/

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Processing /root/software/ussuri/kolla-10.1.0

Verify the installation:

[root@ussuritest001 kolla-10.1.0]# kolla-build --version

0.0.0

[root@ussuritest001 kolla-10.1.0]#

5.3.2.3 Installing kolla-ansible

This article installs kolla-ansible version 10.1.0.

Extract the source archive:

[root@ussuritest001 ussuri]# unzip kolla-ansible-10.1.0.zip

Archive: kolla-ansible-10.1.0.zip

6bba8cc52af3a26678da48129856f80c21eb8e38

creating: kolla-ansible-10.1.0/

Initialize a Git repository in the source tree:

[root@ussuritest001 ussuri]# cd kolla-ansible-10.1.0/

[root@ussuritest001 kolla-ansible-10.1.0]# pwd

/root/software/ussuri/kolla-ansible-10.1.0

[root@ussuritest001 kolla-ansible-10.1.0]# git init

Initialized empty Git repository in /root/software/ussuri/kolla-ansible-10.1.0/.git/

[root@ussuritest001 kolla-ansible-10.1.0]#

Install the kolla-ansible dependencies:

[root@ussuritest001 kolla-ansible-10.1.0]# pip install --no-index --find-links=/root/software/ussuri//kollaansiblepip -r /root/software/ussuri/kolla-ansible-10.1.0/requirements.txt

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Requirement already satisfied: pbr!=2.1.0,>=2.0.0 in /usr/local/lib/python3.6/site-packages (from -r /root/software/ussuri/kolla-ansible-10.1.0/requirements.txt (line 1))

Install kolla-ansible:

[root@ussuritest001 kolla-ansible-10.1.0]# pip install /root/software/ussuri/kolla-ansible-10.1.0

WARNING: Running pip install with root privileges is generally not a good idea. Try `pip install --user` instead.

Processing /root/software/ussuri/kolla-ansible-10.1.0

Verify the installation:

[root@ussuritest001 kolla-ansible-10.1.0]# kolla-ansible -h

Usage: /usr/local/bin/kolla-ansible COMMAND [options]

5.3.2.4 Adding the Ansible inventory files

[root@ussuritest001 ~]# mkdir /root/ansible

[root@ussuritest001 ~]# cp /root/software/ussuri/kolla-ansible-10.1.0/ansible/inventory/* /root/ansible/

[root@ussuritest001 ~]# ll /root/ansible/

total 24

-rw-r--r-- 1 root root 9584 Jan 4 16:43 all-in-one

-rw-r--r-- 1 root root 10058 Jan 4 16:43 multinode

[root@ussuritest001 ~]#

5.3.2.5 Adding the kolla-ansible configuration files

[root@ussuritest001 etc]# mkdir -p /etc/kolla

[root@ussuritest001 etc]# cp -r /root/software/ussuri/kolla-ansible-10.1.0/etc/kolla/* /etc/kolla

[root@ussuritest001 etc]# ll /etc/kolla/

total 36

-rw-r--r-- 1 root root 25509 Jan 4 16:45 globals.yml

-rw-r--r-- 1 root root 5037 Jan 4 16:45 passwords.yml

[root@ussuritest001 etc]#

5.3.2.6 Passwordless SSH login to every node

The deployment node must be able to log in to all nodes without a password.

[root@ussuritest001 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:C+oL6hCgaUVLHJvcPy0FO5NNd/z4n+8lLzXsN0ECv3o root@ussuritest001.cloud.chouniu.fun

The key's randomart image is:

+---[RSA 3072]----+

| .+. . . ... |

| +.= * .... |

|. * . = o o o |

|o.. . = + o |

|+. .+S. * |

|.. . .o. . =.|

|. . . . . o.*|

|.. o . E.*+|

|o. o. . oB|

+----[SHA256]-----+

[root@ussuritest001 ~]# ssh-copy-id root@10.3.176.2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.3.176.2 (10.3.176.2)' can't be established.

ECDSA key fingerprint is SHA256:+Ry1/nN1i7gWLYNx32WqBgoN9LRNr5sBfFOr4K7yapU.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@10.3.176.2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@10.3.176.2'"

and check to make sure that only the key(s) you wanted were added.

[root@ussuritest001 ~]# ssh-copy-id root@10.3.176.35

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.3.176.35 (10.3.176.35)' can't be established.

ECDSA key fingerprint is SHA256:UYEJB1fQOIdRa358S1SM+/hECNy1fo50JgNvG74snKc.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@10.3.176.35's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@10.3.176.35'"

and check to make sure that only the key(s) you wanted were added.
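The transcript above shows the key being copied to two of the nodes; the same step is needed for every node in the inventory. A loop over all three addresses could look like the sketch below. `copy_key_to_nodes` is a hypothetical helper, and the `SSH_COPY_ID` variable is an assumption added only so that the command can be swapped out for a dry run.

```shell
#!/bin/bash
# Sketch: run ssh-copy-id for root on each given node, stopping at the first failure.
# copy_key_to_nodes and the SSH_COPY_ID override are hypothetical, added for illustration.
copy_key_to_nodes() {
    local copy_cmd=${SSH_COPY_ID:-ssh-copy-id} node
    for node in "$@"; do
        "$copy_cmd" "root@$node" || return 1
    done
}

# Example (not run here): copy_key_to_nodes 10.3.176.2 10.3.176.35 10.3.176.37
# Dry run that only prints the targets: SSH_COPY_ID=echo copy_key_to_nodes 10.3.176.2
```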

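5.3.2.7 Generating the password file and setting the login password

First generate the full set of passwords with kolla-genpwd. Then, to make logging in to the dashboard easier, change keystone_admin_password to Admin_PASS.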

[root@ussuritest001 ~]# cd /etc/kolla/

[root@ussuritest001 kolla]# ll

total 36

-rw-r--r-- 1 root root 25509 Jan 4 16:45 globals.yml

-rw-r--r-- 1 root root 5037 Jan 4 16:45 passwords.yml

[root@ussuritest001 kolla]# kolla-genpwd

[root@ussuritest001 kolla]# ll

total 56

-rw-r--r-- 1 root root 25509 Jan 4 16:45 globals.yml

-rw-r--r-- 1 root root 25675 Jan 5 08:55 passwords.yml

[root@ussuritest001 kolla]# vim passwords.yml

[root@ussuritest001 kolla]#

keepalived_password: fGMyr5TVe7LSQ7aN1FZJFpNVo8c7VPf2j1JKjDcA

keystone_admin_password: Admin_PASS

keystone_database_password: H7EjpJjhIeYxgxwfD3uQI2gs7DSYJEEoWDnj5mzu
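The edit made in vim above can also be scripted. The following is a minimal sketch using GNU `sed`; `set_kolla_password` is a hypothetical helper name, and it assumes the new value contains none of the characters `|`, `&`, or `\`.

```shell
#!/bin/bash
# Sketch: replace the value of one top-level key in passwords.yml in place (GNU sed).
# set_kolla_password is a hypothetical helper; the value must not contain "|", "&" or "\".
set_kolla_password() {
    local file=$1 key=$2 value=$3
    # Anchoring on "^key:" leaves similarly named keys untouched.
    sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
}

# Example (not run here):
#   set_kolla_password /etc/kolla/passwords.yml keystone_admin_password Admin_PASS
```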

5.3.2.8 Editing the multinode inventory

The main groups to edit are:

[control]

[network]

[compute]

[monitoring]

[storage]

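The following change I have not made for now; I will first see whether the deployment works without it:

[nova-compute-ironic:children] changed to compute

Only the following changes were made to /root/ansible/multinode: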

[root@ussuritest001 ansible]# cat multinode

# These initial groups are the only groups required to be modified. The

# additional groups are for more control of the environment.

[control]

# These hostname must be resolvable from your deployment host

#control01

#control02

#control03

ussuritest001.cloud.chouniu.fun

ussuritest002.cloud.chouniu.fun

ussuritest003.cloud.chouniu.fun

# The above can also be specified as follows:

#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run

# This can be the same as a host in the control group

[network]

#network01

#network02

ussuritest001.cloud.chouniu.fun

ussuritest002.cloud.chouniu.fun

ussuritest003.cloud.chouniu.fun

[compute]

#compute01

ussuritest001.cloud.chouniu.fun

ussuritest002.cloud.chouniu.fun

ussuritest003.cloud.chouniu.fun

[monitoring]

#monitoring01

ussuritest001.cloud.chouniu.fun

ussuritest002.cloud.chouniu.fun

ussuritest003.cloud.chouniu.fun

# When compute nodes and control nodes use different interfaces,

# you need to comment out "api_interface" and other interfaces from the globals.yml

# and specify like below:

#compute01 neutron_external_interface=eth0 api_interface=em1 storage_interface=em1 tunnel_interface=em1

[storage]

#storage01

ussuritest001.cloud.chouniu.fun

ussuritest002.cloud.chouniu.fun

ussuritest003.cloud.chouniu.fun
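Before deploying, it can help to confirm that each edited group really contains the three hosts. The sketch below lists the hosts of one group from an INI-style inventory with `awk`; `group_hosts` is a hypothetical helper name, and it only understands the simple layout used in this file (one host per line, `#` comments).

```shell
#!/bin/bash
# Sketch: print the hosts listed under one [group] of an INI-style Ansible inventory.
# group_hosts is a hypothetical helper; it handles one host per line and "#" comments only.
group_hosts() {
    local inventory=$1 group=$2
    awk -v g="[$group]" '
        /^\[/ { in_group = ($0 == g); next }          # every section header switches the state
        in_group && NF && $1 !~ /^#/ { print $1 }     # skip blank lines and comments
    ' "$inventory"
}

# Example (not run here): group_hosts /root/ansible/multinode control
```

With the inventory shown above, each of the groups control, network, compute, monitoring, and storage should list the same three ussuritest hosts.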

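5.3.2.9 Editing globals.yml

This step is skipped for now. The file also has to be edited for the Ceph integration, so all changes are made together there.

(4) Integrating OpenStack with Ceph

5.4.1 Editing globals.yml

Back up the original file, then make the changes below. The effective (non-comment) settings afterwards are: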

cat globals.yml |grep -v "^#" | grep -v ^$

kolla_base_distro: "centos"

kolla_install_type: "source"

openstack_release: "ussuri"

kolla_internal_vip_address: "10.3.176.8"

docker_registry: registry.chouniu.fun

network_interface: "eth0"

neutron_external_interface: "eth1"

neutron_plugin_agent: "openvswitch"

enable_openstack_core: "yes"

enable_haproxy: "yes"

enable_cinder: "yes"

enable_manila_backend_cephfs_native: "yes"

ceph_glance_keyring: "ceph.client.glance.keyring"

ceph_glance_user: "glance"

ceph_glance_pool_name: "images"

ceph_cinder_keyring: "ceph.client.cinder.keyring"

ceph_cinder_user: "cinder"

ceph_cinder_pool_name: "volumes"

ceph_cinder_backup_keyring: "ceph.client.cinder-backup.keyring"

ceph_cinder_backup_user: "cinder-backup"

ceph_cinder_backup_pool_name: "backups"

ceph_nova_keyring: "{{ ceph_cinder_keyring }}"

ceph_nova_user: "cinder"

ceph_nova_pool_name: "vms"

glance_backend_ceph: "yes"

cinder_backend_ceph: "yes"

nova_backend_ceph: "yes"

nova_compute_virt_type: "qemu"

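5.4.2 Checking the Ceph status

First check the Ceph status. It reports "Degraded data redundancy: 15 pgs undersized". I am not fixing this for now; from what I found, it means these PGs currently hold fewer than three replicas (each acting set listed below contains only two OSDs).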

[cephadmin@cephtest001 ~]$ ceph -s

cluster:

id: 6cd05235-66dd-4929-b697-1562d308d5c3

health: HEALTH_WARN

Degraded data redundancy: 15 pgs undersized

services:

mon: 3 daemons, quorum cephtest001,cephtest002,cephtest003 (age 5d)

mgr: cephtest001(active, since 10d), standbys: cephtest002, cephtest003

osd: 14 osds: 14 up (since 5d), 14 in (since 5d)

data:

pools: 4 pools, 272 pgs

objects: 4 objects, 76 B

usage: 14 GiB used, 52 TiB / 52 TiB avail

pgs: 257 active+clean

15 active+undersized

[cephadmin@cephtest001 ~]$ ceph health detail

HEALTH_WARN Degraded data redundancy: 15 pgs undersized

PG_DEGRADED Degraded data redundancy: 15 pgs undersized

pg 1.18 is stuck undersized for 413285.779806, current state active+undersized, last acting [5,10]

pg 1.1a is stuck undersized for 413285.780676, current state active+undersized, last acting [10,5]

pg 1.3d is stuck undersized for 413285.777493, current state active+undersized, last acting [11,4]

pg 1.49 is stuck undersized for 413285.774841, current state active+undersized, last acting [13,4]

pg 1.6f is stuck undersized for 413285.777027, current state active+undersized, last acting [12,6]

pg 1.7c is stuck undersized for 413285.776398, current state active+undersized, last acting [8,3]

pg 1.7f is stuck undersized for 413285.777565, current state active+undersized, last acting [13,4]

pg 2.7 is stuck undersized for 413277.821636, current state active+undersized, last acting [8,7]

pg 2.29 is stuck undersized for 413277.823859, current state active+undersized, last acting [6,10]

pg 2.31 is stuck undersized for 413277.824248, current state active+undersized, last acting [9,4]

pg 2.38 is stuck undersized for 413277.821487, current state active+undersized, last acting [8,4]

pg 3.1 is stuck undersized for 413269.576784, current state active+undersized, last acting [13,4]

pg 3.4 is stuck undersized for 413269.579048, current state active+undersized, last acting [1,13]

pg 3.33 is stuck undersized for 413269.574585, current state active+undersized, last acting [8,4]

pg 3.3e is stuck undersized for 413269.574513, current state active+undersized, last acting [11,4]

[cephadmin@cephtest001 ~]$
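To see which pools the undersized PGs belong to, the `ceph health detail` output can be grouped by pool ID, since a PG ID has the form `<pool-id>.<suffix>`. The following `awk` filter is a sketch; `undersized_by_pool` is a hypothetical helper name and it relies on the line format shown above.

```shell
#!/bin/bash
# Sketch: count stuck-undersized PGs per pool ID from `ceph health detail` output on stdin.
# undersized_by_pool is a hypothetical helper name.
undersized_by_pool() {
    awk '$1 == "pg" && /undersized/ {
             split($2, id, ".")      # PG IDs have the form <pool-id>.<suffix>
             count[id[1]]++
         }
         END { for (p in count) print p, count[p] }' | sort -n
}

# Example (not run here): ceph health detail | undersized_by_pool
```

For the output above this gives 7 PGs in pool 1 (volumes), 4 in pool 2 (backups), and 4 in pool 3 (vms), which adds up to the 15 reported.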

[cephadmin@cephtest001 ~]$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 52.38348 root default

-3 3.26669 host cephtest001

0 hdd 1.08890 osd.0 up 1.00000 1.00000

1 hdd 1.08890 osd.1 up 1.00000 1.00000

2 hdd 1.08890 osd.2 up 1.00000 1.00000

-5 5.45547 host cephtest002

3 hdd 1.09109 osd.3 up 1.00000 1.00000

4 hdd 1.09109 osd.4 up 1.00000 1.00000

5 hdd 1.09109 osd.5 up 1.00000 1.00000

6 hdd 1.09109 osd.6 up 1.00000 1.00000

7 hdd 1.09109 osd.7 up 1.00000 1.00000

-7 43.66132 host cephtest003

8 hdd 7.27689 osd.8 up 1.00000 1.00000

9 hdd 7.27689 osd.9 up 1.00000 1.00000

10 hdd 7.27689 osd.10 up 1.00000 1.00000

11 hdd 7.27689 osd.11 up 1.00000 1.00000

12 hdd 7.27689 osd.12 up 1.00000 1.00000

13 hdd 7.27689 osd.13 up 1.00000 1.00000

[cephadmin@cephtest001 ~]$

5.4.3 Configuring Glance to use Ceph

5.4.3.1 Generating ceph.client.glance.keyring

On the Ceph deployment node, generate the ceph.client.glance.keyring file:

[cephadmin@cephtest001 ~]$ ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQDdiu1frWDzBBAA8PHskef3x4515c3qHn7rWA==

[cephadmin@cephtest001 ~]$ ll /etc/ceph/

total 16

-rw-------. 1 cephadmin cephadmin 151 Dec 24 17:16 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Jan 5 11:06 ceph.client.glance.keyring

-rw-r--r--. 1 cephadmin cephadmin 316 Dec 25 09:36 ceph.conf

-rw-r--r--. 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw-------. 1 cephadmin cephadmin 0 Dec 24 17:13 tmpCzgIPJ

[cephadmin@cephtest001 ~]$

5.4.3.2 Creating /etc/kolla/config/glance on the kolla-ansible deployment node

[root@ussuritest001 ~]# mkdir -p /etc/kolla/config/glance

[root@ussuritest001 ~]# cd /etc/kolla/config/glance/

[root@ussuritest001 glance]# ll

total 0

[root@ussuritest001 glance]# pwd

/etc/kolla/config/glance

[root@ussuritest001 glance]#

5.4.3.3 Copying ceph.client.glance.keyring from the Ceph deployment node to /etc/kolla/config/glance on the OpenStack deployment node

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.client.glance.keyring root@10.3.176.2:/etc/kolla/config/glance/

The authenticity of host '10.3.176.2 (10.3.176.2)' can't be established.

ECDSA key fingerprint is SHA256:+Ry1/nN1i7gWLYNx32WqBgoN9LRNr5sBfFOr4K7yapU.

ECDSA key fingerprint is MD5:86:42:86:9f:b3:ce:37:2c:fe:35:59:69:14:aa:17:bc.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.3.176.2' (ECDSA) to the list of known hosts.

root@10.3.176.2's password:

ceph.client.glance.keyring 100% 64 63.7KB/s 00:00

[cephadmin@cephtest001 ~]$

5.4.3.4 Adding ceph.conf

Add ceph.conf to the /etc/kolla/config/glance/ directory:

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.conf root@10.3.176.2:/etc/kolla/config/glance/

root@10.3.176.2's password:

ceph.conf 100% 316 200.3KB/s 00:00

[cephadmin@cephtest001 ~]$

Keep only the following content:

[global]

fsid = 6cd05235-66dd-4929-b697-1562d308d5c3

mon_host = 10.3.176.10,10.3.176.16,10.3.176.44
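Trimming the copied file by hand works; a scripted alternative is sketched below. It keeps only the `[global]` header and the `fsid` and `mon_host` lines and also strips leading whitespace. `trim_ceph_conf` is a hypothetical helper name, and the pattern assumes the `key = value` layout shown above.

```shell
#!/bin/bash
# Sketch: reduce a full ceph.conf to the [global] header plus the fsid and mon_host lines.
# trim_ceph_conf is a hypothetical helper; it also removes leading spaces and tabs.
trim_ceph_conf() {
    local in=$1 out=$2
    sed -n -E 's/^[[:space:]]*(\[global\].*|fsid[[:space:]]*=.*|mon[_ ]host[[:space:]]*=.*)$/\1/p' \
        "$in" > "$out"
}

# Example (not run here), writing to a temporary name first:
#   trim_ceph_conf /etc/kolla/config/glance/ceph.conf /tmp/ceph.conf.min
#   mv /tmp/ceph.conf.min /etc/kolla/config/glance/ceph.conf
```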


5.4.4 Configuring Cinder to use Ceph

5.4.4.1 Generating the ceph.client.cinder.keyring and ceph.client.cinder-backup.keyring key files on the Ceph deployment node

[cephadmin@cephtest001 ~]$ ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQDpiu1f5ARcORAAKoM0AHYwm8lvDg0zAGwfLA==

[cephadmin@cephtest001 ~]$ ceph auth get-or-create client.cinder-backup | tee /etc/ceph/ceph.client.cinder-backup.keyring

[client.cinder-backup]

key = AQD1iu1fPaVZMhAA5aPqEsG/rkSyn/r+rrxacw==

[cephadmin@cephtest001 ~]$ ll /etc/ceph/

total 24

-rw-------. 1 cephadmin cephadmin 151 Dec 24 17:16 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 71 Jan 5 14:15 ceph.client.cinder-backup.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Jan 5 14:15 ceph.client.cinder.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Jan 5 11:06 ceph.client.glance.keyring

-rw-r--r--. 1 cephadmin cephadmin 316 Dec 25 09:36 ceph.conf

-rw-r--r--. 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw-------. 1 cephadmin cephadmin 0 Dec 24 17:13 tmpCzgIPJ

[cephadmin@cephtest001 ~]$
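Before copying the keyrings anywhere, it can help to confirm that each file really contains the expected client section and a key. The following is a minimal sketch; check_keyring is a hypothetical helper name introduced here for illustration.

```shell
# Sketch: sanity-check a ceph keyring file.
# check_keyring is a hypothetical helper, not a ceph command.
# Usage: check_keyring FILE CLIENT_NAME
check_keyring() {
    file="$1"
    name="$2"
    # The file must contain the section header for the expected client.
    grep -qF "[client.$name]" "$file" || { echo "missing [client.$name] in $file"; return 1; }
    # A non-empty key line must be present.
    grep -Eq '^[[:space:]]*key[[:space:]]*=[[:space:]]*[^[:space:]]+' "$file" || { echo "missing key in $file"; return 1; }
    echo "ok: $file"
}

# Example (commented out; paths as used in this guide):
# check_keyring /etc/ceph/ceph.client.cinder.keyring cinder
# check_keyring /etc/ceph/ceph.client.cinder-backup.keyring cinder-backup
```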

5.4.4.2 On the kolla-ansible deployment node, create the cinder configuration directories

[root@ussuritest001 config]# mkdir -p /etc/kolla/config/cinder/cinder-volume/

[root@ussuritest001 config]# mkdir -p /etc/kolla/config/cinder/cinder-backup

[root@ussuritest001 config]# ll /etc/kolla/config/

total 0

drwxr-xr-x 4 root root 48 Jan 5 14:18 cinder

drwxr-xr-x 2 root root 57 Jan 5 13:29 glance

[root@ussuritest001 config]# ll /etc/kolla/config/cinder/

total 0

drwxr-xr-x 2 root root 6 Jan 5 14:18 cinder-backup

drwxr-xr-x 2 root root 6 Jan 5 14:18 cinder-volume

[root@ussuritest001 config]#

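5.4.4.3 Copy the cinder key files to the kolla-ansible deployment node. cinder-volume needs ceph.client.cinder.keyring; cinder-backup needs both ceph.client.cinder.keyring and ceph.client.cinder-backup.keyring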

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.176.2:/etc/kolla/config/cinder/cinder-backup/

root@10.3.176.2's password:

ceph.client.cinder.keyring 100% 64 62.8KB/s 00:00

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.176.2:/etc/kolla/config/cinder/cinder-volume/

root@10.3.176.2's password:

ceph.client.cinder.keyring

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.client.cinder-backup.keyring root@10.3.176.2:/etc/kolla/config/cinder/cinder-backup/

root@10.3.176.2's password:

ceph.client.cinder-backup.keyring 100% 71 73.2KB/s 00:00

[cephadmin@cephtest001 ~]$
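The three copies above follow a fixed keyring-to-directory mapping, so they can be generated in a loop. The following is a minimal sketch that only prints the scp commands for review; plan_keyring_copies is a hypothetical helper name, and the paths are the ones used in this guide.

```shell
# Sketch: print the scp commands that distribute the cinder keyrings.
# plan_keyring_copies is a hypothetical helper; it prints commands and copies nothing.
# Usage: plan_keyring_copies DEPLOY_HOST
plan_keyring_copies() {
    host="$1"
    base=/etc/kolla/config/cinder
    # keyring:target-directory pairs, matching the manual steps in this section
    for pair in \
        "ceph.client.cinder.keyring:cinder-volume" \
        "ceph.client.cinder.keyring:cinder-backup" \
        "ceph.client.cinder-backup.keyring:cinder-backup"
    do
        keyring=${pair%%:*}   # part before the colon
        target=${pair##*:}    # part after the colon
        echo "scp /etc/ceph/$keyring root@$host:$base/$target/"
    done
}

# Example (commented out): review the plan first, then pipe it to sh to run it.
# plan_keyring_copies 10.3.176.2
# plan_keyring_copies 10.3.176.2 | sh
```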

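5.4.4.4 Add ceph.conf to the cinder configuration directory

The ceph.conf content is the same as in 5.4.3.4, so the file can be copied over from the glance directory directly.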

[root@ussuritest001 config]# ll /etc/kolla/config/cinder/

total 0

drwxr-xr-x 2 root root 6 Jan 5 14:18 cinder-backup

drwxr-xr-x 2 root root 6 Jan 5 14:18 cinder-volume

[root@ussuritest001 config]# cd /etc/kolla/config/cinder/

[root@ussuritest001 cinder]# cp ../glance/ceph.conf ./

[root@ussuritest001 cinder]# ll

total 4

-rw-r--r-- 1 root root 100 Jan 5 14:28 ceph.conf

drwxr-xr-x 2 root root 81 Jan 5 14:26 cinder-backup

drwxr-xr-x 2 root root 40 Jan 5 14:25 cinder-volume

[root@ussuritest001 cinder]#

5.4.5 Configure nova to use ceph

5.4.5.1 On the kolla-ansible deployment node, create the /etc/kolla/config/nova directory

[root@ussuritest001 config]# mkdir -p /etc/kolla/config/nova

[root@ussuritest001 config]# ll /etc/kolla/config/

total 0

drwxr-xr-x 4 root root 65 Jan 5 14:28 cinder

drwxr-xr-x 2 root root 57 Jan 5 13:29 glance

drwxr-xr-x 2 root root 6 Jan 5 14:35 nova

[root@ussuritest001 config]#

5.4.5.2 Copy ceph.client.cinder.keyring to /etc/kolla/config/nova

[cephadmin@cephtest001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@10.3.176.2:/etc/kolla/config/nova/

root@10.3.176.2's password:

ceph.client.cinder.keyring 100% 64 116.0KB/s 00:00

[cephadmin@cephtest001 ~]$

5.4.5.3 Configure ceph.conf

The file content is the same as for glance, so copy it directly.

[root@ussuritest001 config]# cd nova/

[root@ussuritest001 nova]# ll

total 4

-rw-r--r-- 1 root root 64 Jan 5 14:36 ceph.client.cinder.keyring

[root@ussuritest001 nova]# cp ../glance/ceph.conf ./

[root@ussuritest001 nova]# ll

total 8

-rw-r--r-- 1 root root 64 Jan 5 14:36 ceph.client.cinder.keyring

-rw-r--r-- 1 root root 100 Jan 5 14:40 ceph.conf

[root@ussuritest001 nova]#
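At this point every ceph.conf and keyring from sections 5.4.3 to 5.4.5 should be in place. The following is a minimal sketch that checks for them in one pass; check_kolla_ceph_layout is a hypothetical helper name, and the file list is taken from the steps in this guide.

```shell
# Sketch: verify that the ceph-related files exist under the kolla config root.
# check_kolla_ceph_layout is a hypothetical helper, not a kolla-ansible command.
# Usage: check_kolla_ceph_layout [CONFIG_ROOT]   (default: /etc/kolla/config)
check_kolla_ceph_layout() {
    root="${1:-/etc/kolla/config}"
    missing=0
    for f in \
        glance/ceph.conf \
        glance/ceph.client.glance.keyring \
        cinder/ceph.conf \
        cinder/cinder-volume/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder-backup.keyring \
        nova/ceph.conf \
        nova/ceph.client.cinder.keyring
    do
        # -s is true only for files that exist and are not empty.
        if [ ! -s "$root/$f" ]; then
            echo "missing or empty: $root/$f"
            missing=1
        fi
    done
    return "$missing"
}

# Example (commented out):
# check_kolla_ceph_layout && echo "ceph config layout looks complete"
```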

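5.4.5.4 Configure nova.conf

hw_disk_discard = unmap enables TRIM (discard) for instance disks.

disk_cachemodes="network=writeback" sets the disk cache mode of network-backed instance disks to writeback.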

[root@ussuritest001 nova]# vim /etc/kolla/config/nova/nova.conf

[root@ussuritest001 nova]#

The content is as follows:

[libvirt]

hw_disk_discard = unmap

disk_cachemodes="network=writeback"

cpu_mode=host-passthrough

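The same override can be written non-interactively, which is easier to repeat than editing in vim. The following is a minimal sketch using a here-document; write_nova_ceph_conf is a hypothetical helper name, and note that it overwrites any existing nova.conf in the target directory.

```shell
# Sketch: write the nova.conf override for ceph-backed instance disks.
# write_nova_ceph_conf is a hypothetical helper, not a kolla-ansible command.
# Usage: write_nova_ceph_conf [TARGET_DIR]   (default: /etc/kolla/config/nova)
write_nova_ceph_conf() {
    dir="${1:-/etc/kolla/config/nova}"
    mkdir -p "$dir"
    # Quoted 'EOF' keeps the content literal (no variable expansion).
    cat > "$dir/nova.conf" <<'EOF'
[libvirt]
hw_disk_discard = unmap
disk_cachemodes="network=writeback"
cpu_mode=host-passthrough
EOF
}

# Example (commented out):
# write_nova_ceph_conf
# cat /etc/kolla/config/nova/nova.conf
```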

(5) Install OpenStack Ussuri with kolla-ansible

5.5.1 Prechecks

Run the precheck command on the kolla-ansible deployment node:

[root@ussuritest001 nova]# kolla-ansible -v -i /root/ansible/multinode prechecks

Pre-deployment checking : ansible-playbook -i /root/ansible/multinode -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=precheck /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

[WARNING]: Could not match supplied host pattern, ignoring: enable_nova_True

PLAY [Gather facts for all hosts] *************************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

ok: [ussuritest002.cloud.chouniu.fun]

ok: [localhost]

ok: [ussuritest001.cloud.chouniu.fun]

ok: [ussuritest003.cloud.chouniu.fun]

The prechecks pass: the recap shows unreachable=0 and failed=0 for every host.

PLAY RECAP ************************************************************************************************************************************

localhost : ok=15 changed=0 unreachable=0 failed=0 skipped=11 rescued=0 ignored=0

ussuritest001.cloud.chouniu.fun : ok=101 changed=0 unreachable=0 failed=0 skipped=194 rescued=0 ignored=0

ussuritest002.cloud.chouniu.fun : ok=99 changed=0 unreachable=0 failed=0 skipped=182 rescued=0 ignored=0

ussuritest003.cloud.chouniu.fun : ok=99 changed=0 unreachable=0 failed=0 skipped=182 rescued=0 ignored=0

[root@ussuritest001 nova]#
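With several hosts and long output, the recap is easy to misread. The following is a minimal sketch that scans saved ansible output and reports any host whose recap line shows a non-zero failed or unreachable count; recap_ok is a hypothetical helper name, and the log file name in the usage comment is an assumption.

```shell
# Sketch: check an ansible PLAY RECAP for failed or unreachable hosts.
# recap_ok is a hypothetical helper; it reads ansible output on stdin.
# Exit status 0 means every recap line has unreachable=0 and failed=0.
recap_ok() {
    awk '
        # Recap lines are the ones carrying both counters.
        /unreachable=[0-9]+/ && /failed=[0-9]+/ {
            hosts++
            if ($0 !~ /unreachable=0([^0-9]|$)/ || $0 !~ /failed=0([^0-9]|$)/) {
                print "problem: " $1   # $1 is the host name
                bad = 1
            }
        }
        # Fail when a host has problems or when no recap was found at all.
        END { if (bad || hosts == 0) exit 1 }
    '
}

# Example (commented out; prechecks.log is an assumed file name):
# kolla-ansible -i /root/ansible/multinode prechecks | tee prechecks.log
# recap_ok < prechecks.log && echo "prechecks clean"
```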

5.5.2 Pull the required Docker images

[root@ussuritest001 nova]# kolla-ansible -v -i /root/ansible/multinode pull

Pulling Docker images : ansible-playbook -i /root/ansible/multinode -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=pull /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

[WARNING]: Could not match supplied host pattern, ignoring: enable_nova_True

PLAY [Gather facts for all hosts] *************************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

ok: [ussuritest003.cloud.chouniu.fun]

ok: [ussuritest002.cloud.chouniu.fun]

ok: [localhost]

ok: [ussuritest001.cloud.chouniu.fun]

localhost : ok=4 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ussuritest001.cloud.chouniu.fun : ok=37 changed=16 unreachable=0 failed=0 skipped=97 rescued=0 ignored=0

ussuritest002.cloud.chouniu.fun : ok=37 changed=16 unreachable=0 failed=0 skipped=96 rescued=0 ignored=0

ussuritest003.cloud.chouniu.fun : ok=37 changed=16 unreachable=0 failed=0 skipped=96 rescued=0 ignored=0

To verify, run docker images on each node:

[root@ussuritest001 nova]# docker images | grep ussuri

registry.chouniu.fun/kolla/centos-source-nova-compute ussuri b41fd82a0eed 8 days ago 2.02GB

registry.chouniu.fun/kolla/centos-source-keystone-ssh ussuri 4764304c9eb6 8 days ago 925MB

registry.chouniu.fun/kolla/centos-source-keystone-fernet ussuri 1b7b33cc80d4 8 days ago 924MB

registry.chouniu.fun/kolla/centos-source-glance-api ussuri 925b18f9e467 8 days ago 901MB

registry.chouniu.fun/kolla/centos-source-keystone ussuri 7ff5731b31d2 8 days ago 923MB

registry.chouniu.fun/kolla/centos-source-cinder-volume ussuri 0f622aa35925 8 days ago 1.32GB

registry.chouniu.fun/kolla/centos-source-placement-api ussuri b9761c66b3f9 8 days ago 893MB

registry.chouniu.fun/kolla/centos-source-nova-api ussuri cde0885e5cde 8 days ago 1.16GB

registry.chouniu.fun/kolla/centos-source-nova-ssh ussuri a7107a92c35c 8 days ago 1.15GB

registry.chouniu.fun/kolla/centos-source-nova-novncproxy ussuri 1db6ac636426 8 days ago 1.2GB

registry.chouniu.fun/kolla/centos-source-nova-scheduler ussuri c4c743c5f767 8 days ago 1.11GB

registry.chouniu.fun/kolla/centos-source-neutron-server ussuri d75b2a55dc62 8 days ago 1.08GB