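Integrating Ceph Nautilus with OpenStack Ussuri

1 Abstract

This post mainly covers the Ceph-side configuration that prepares the cluster for integration with OpenStack Ussuri. Building the Ceph cluster itself is covered separately.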

2 Environment

(1) Ceph information

2.1.1 Cluster information

| Hostname | IP | Disks | Roles |
| ---- | ---- | ---- | ---- |
| ceph001 | 172.31.185.127 | System disk: /dev/vda; data disk: /dev/vdb | Time server, ceph-deploy, monitor, mgr, mds, osd |
| ceph002 | 172.31.185.198 | System disk: /dev/vda; data disk: /dev/vdb | monitor, mgr, mds, osd |
| ceph003 | 172.31.185.203 | System disk: /dev/vda; data disk: /dev/vdb | monitor, mgr, mds, osd |

2.1.2 Ceph version

[cephadmin@ceph001 ~]$ ceph -s

cluster:

id: 8ed6d371-0553-4d62-a6b6-1d7359589655

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 7m)

mgr: ceph001(active, since 39h), standbys: ceph003, ceph002

osd: 3 osds: 3 up (since 39h), 3 in (since 39h)

rgw: 3 daemons active (ceph001, ceph002, ceph003)

task status:

data:

pools: 4 pools, 128 pgs

objects: 221 objects, 2.3 KiB

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs: 128 active+clean

[cephadmin@ceph001 ~]$ ceph -v

ceph version 14.2.15 (afdd217ae5fb1ed3f60e16bd62357ca58cc650e5) nautilus (stable)

[cephadmin@ceph001 ~]$

Current Ceph status

[root@ceph001 ~]# su - cephadmin

Last login: Fri Dec 4 08:54:11 CST 2020 on pts/0

[cephadmin@ceph001 ~]$ ceph -s

cluster:

id: 8ed6d371-0553-4d62-a6b6-1d7359589655

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 2h)

mgr: ceph001(active, since 47h), standbys: ceph003, ceph002

osd: 3 osds: 3 up (since 2d), 3 in (since 2d)

rgw: 3 daemons active (ceph001, ceph002, ceph003)

task status:

data:

pools: 4 pools, 128 pgs

objects: 221 objects, 2.3 KiB

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs: 128 active+clean

[cephadmin@ceph001 ~]$ ceph osd lspools

1 .rgw.root

2 default.rgw.control

3 default.rgw.meta

4 default.rgw.log

[cephadmin@ceph001 ~]$

(2) Operating system information

[cephadmin@ceph001 ~]$ cat /etc/centos-release

CentOS Linux release 7.6.1810 (Core)

[cephadmin@ceph001 ~]$ uname -a

Linux ceph001 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

[cephadmin@ceph001 ~]$

3 Integrating Ceph block devices with OpenStack

This post integrates Ceph 14.2.15 with OpenStack Ussuri, following the official Ceph documentation: [Block Devices and OpenStack](https://docs.ceph.com/en/nautilus/rbd/rbd-openstack/#)

(1) Ceph-side configuration

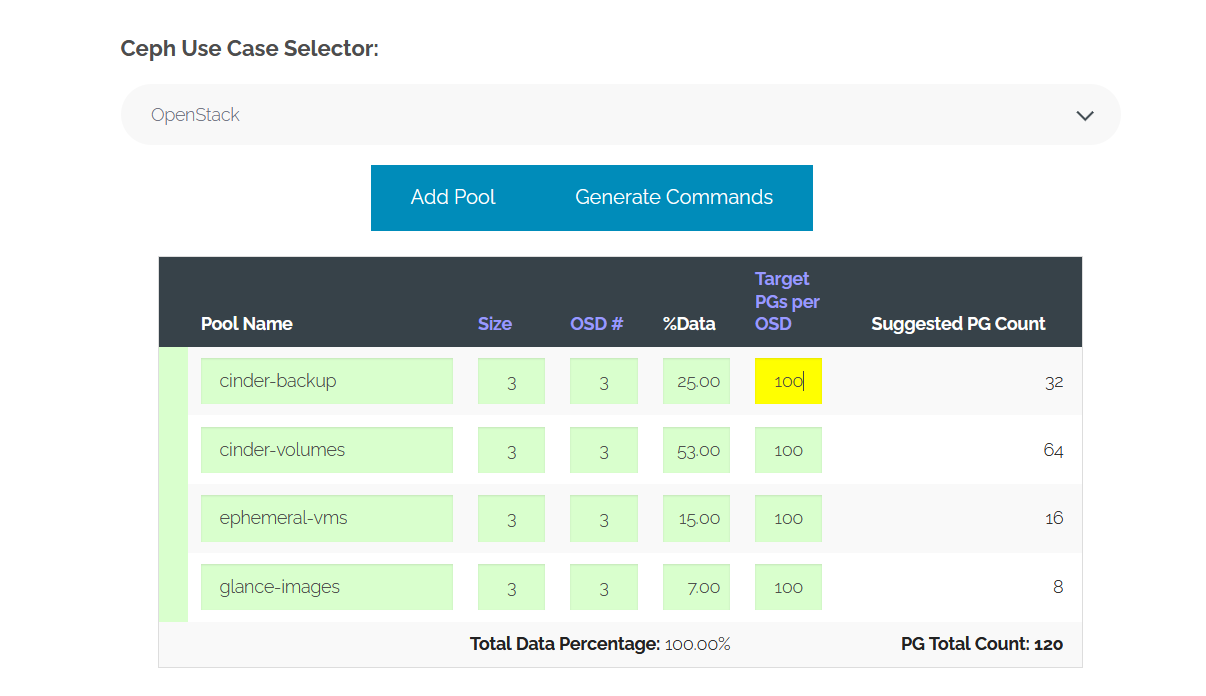

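3.1.1 Create the pools required by OpenStack

Choose pg_num with care when creating pools. This cluster has only 3 OSDs, and creating the first pool with pg_num 128 failed because the total would exceed the per-OSD placement group limit: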

[cephadmin@ceph001 ~]$ ceph osd pool create volumes 128

Error ERANGE: pg_num 128 size 3 would mean 768 total pgs, which exceeds max 750 (mon_max_pg_per_osd 250 * num_in_osds 3)

[cephadmin@ceph001 ~]$

[cephadmin@ceph001 ~]$ ceph osd pool create volumes 64

pool 'volumes' created

[cephadmin@ceph001 ~]$ ceph osd pool create backups 32

pool 'backups' created

[cephadmin@ceph001 ~]$ ceph osd pool create vms 16

pool 'vms' created

[cephadmin@ceph001 ~]$ ceph osd pool create images 8

pool 'images' created

[cephadmin@ceph001 ~]$

Confirm that the pools were created:

[cephadmin@ceph001 ~]$ ceph osd lspools

1 .rgw.root

2 default.rgw.control

3 default.rgw.meta

4 default.rgw.log

5 volumes

6 backups

7 vms

8 images

[cephadmin@ceph001 ~]$
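The ERANGE error earlier in this section comes down to simple arithmetic: every placement group is counted once per replica, and the total across all pools must stay within mon_max_pg_per_osd multiplied by the number of "in" OSDs. The sketch below reproduces that check. The function name `pg_budget_ok` is hypothetical, and it assumes every pool uses replica size 3, as the error message reports for this cluster.

```shell
#!/bin/sh
# Hypothetical helper: would adding a pool exceed the monitor's PG limit?
# Arguments: existing_pgs new_pg_num replica_size mon_max_pg_per_osd num_in_osds
# Assumption: all pools share the same replica size.
pg_budget_ok() {
    existing_pgs=$1; new_pg_num=$2; size=$3; max_per_osd=$4; osds=$5
    total=$(( (existing_pgs + new_pg_num) * size ))
    limit=$(( max_per_osd * osds ))
    echo "total=$total limit=$limit"
    [ "$total" -le "$limit" ]
}

# Values from this cluster: 128 existing PGs, size 3, 250 PGs per OSD, 3 OSDs.
pg_budget_ok 128 128 3 250 3 || echo "pg_num 128 is rejected"
pg_budget_ok 128 64 3 250 3 && echo "pg_num 64 fits"
```

With the same numbers, the four pools created here (64 + 32 + 16 + 8 = 120 new PGs) bring the total to (128 + 120) * 3 = 744, which is just under the limit of 750.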

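3.1.2 Initialize the pools

The official documentation notes: "Newly created pools must be initialized prior to use. Use the rbd tool to initialize the pools:"

Some blog posts covering Nautilus skip this step; for now I follow the official documentation.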

[cephadmin@ceph001 ~]$ rbd pool init volumes

[cephadmin@ceph001 ~]$ rbd pool init images

[cephadmin@ceph001 ~]$ rbd pool init backups

[cephadmin@ceph001 ~]$ rbd pool init vms

[cephadmin@ceph001 ~]$
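The four `rbd pool init` commands above can also be written as a loop. This is only a sketch: the function name `init_openstack_pools` and the `RBD_BIN` override are hypothetical conveniences, added so the loop can be dry-run before touching the cluster.

```shell
#!/bin/sh
# Initialize each OpenStack pool for RBD use.
# RBD_BIN is a hypothetical override; set it to "echo rbd" for a dry run.
init_openstack_pools() {
    for pool in volumes images backups vms; do
        # Left unquoted on purpose so "echo rbd" splits into two words.
        ${RBD_BIN:-rbd} pool init "$pool" || return 1
    done
}

# Dry run: print the commands instead of executing them.
RBD_BIN="echo rbd" init_openstack_pools
```

Calling `init_openstack_pools` without the override runs the real commands. Afterwards, `ceph osd pool application get volumes` should list the rbd application on the pool.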

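3.1.3 Create the Ceph auth credentials

The official documentation says: "If you have cephx authentication enabled, create a new user for Nova/Cinder and Glance. Execute the following:"

The users below are granted only the minimum capabilities that OpenStack needs to use the Ceph cluster.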

[cephadmin@ceph001 cephcluster]$ pwd

/home/cephadmin/cephcluster

[cephadmin@ceph001 cephcluster]$ ceph auth get-or-create client.glance mon 'profile rbd' osd 'profile rbd pool=images' mgr 'profile rbd pool=images'

[client.glance]

key = AQBWf81fSIkvJRAA7T39Cc1qzlor1aaSegQcHw==

[cephadmin@ceph001 cephcluster]$ ceph auth get-or-create client.cinder mon 'profile rbd' osd 'profile rbd pool=volumes, profile rbd pool=vms, profile rbd-read-only pool=images' mgr 'profile rbd pool=volumes, profile rbd pool=vms'

[client.cinder]

key = AQBjf81f0UwJLBAAjn8xyY2Dmx6LhQM+7Uya3g==

[cephadmin@ceph001 cephcluster]$ ceph auth get-or-create client.cinder-backup mon 'profile rbd' osd 'profile rbd pool=backups' mgr 'profile rbd pool=backups'

[client.cinder-backup]

key = AQBsf81f++flORAA+Fu1Ok0hlQfBiPN6zypNPQ==

[cephadmin@ceph001 cephcluster]$ ls

List the configured auth entries with ceph auth list:

[cephadmin@ceph001 cephcluster]$ ceph auth list | grep cinder

installed auth entries:

client.cinder

client.cinder-backup

[cephadmin@ceph001 cephcluster]$ ceph auth list | grep glance

installed auth entries:

client.glance

[cephadmin@ceph001 cephcluster]$

These credentials are copied to the corresponding OpenStack nodes later, as keyring files.

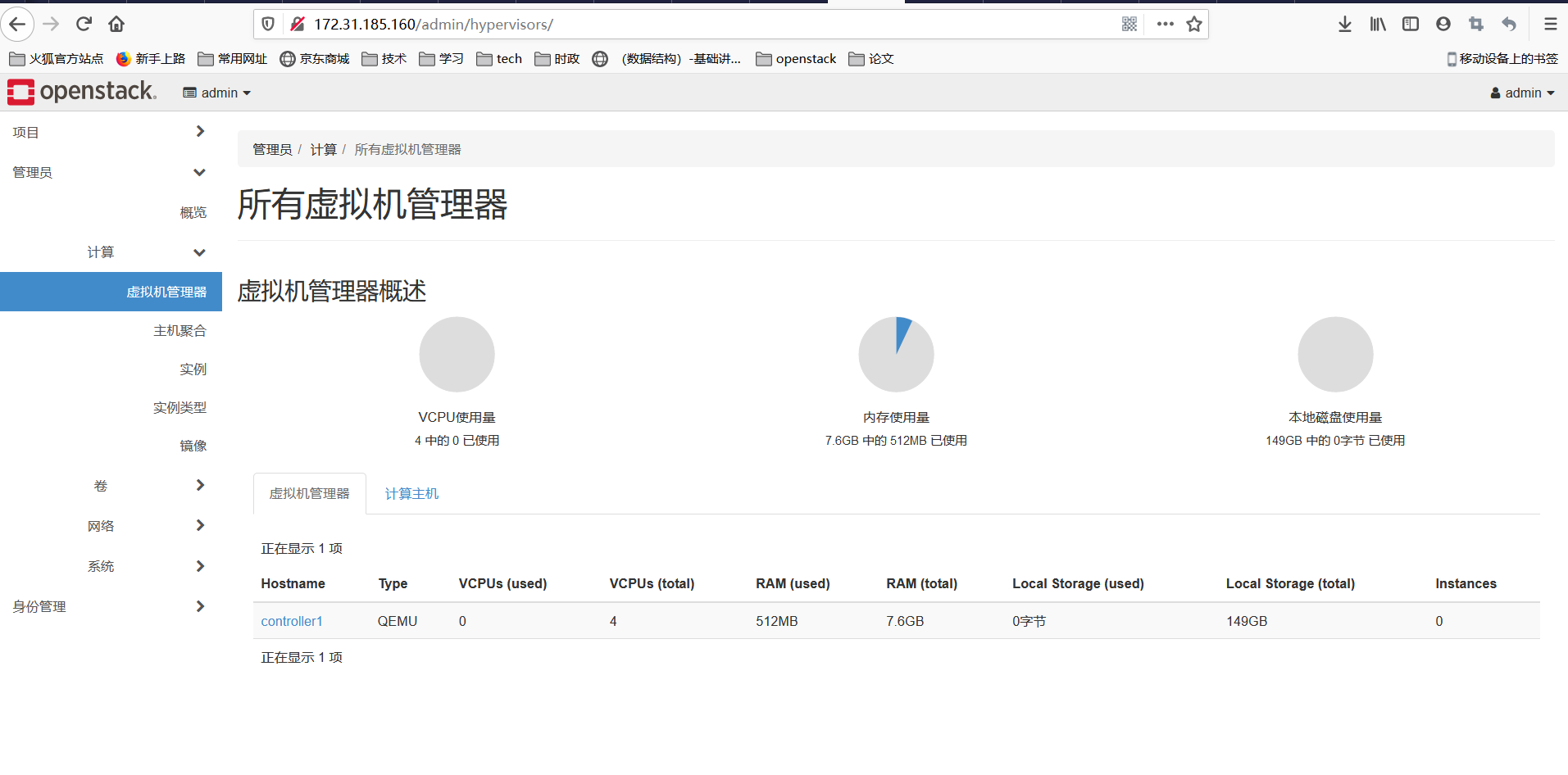

4 OpenStack deployment and configuration

OpenStack Ussuri is deployed as [all-in-one](https://i.cnblogs.com/posts/edit;postId=13919486)

| Host | IP | Roles |
|---|---|---|
| controller1 | 172.31.185.211 | Controller node, compute node, network node |

(1) Configure globals.yml

kolla_base_distro: "centos"

kolla_install_type: "source"

openstack_release: "ussuri"

kolla_internal_vip_address: "172.31.185.140"

network_interface: "eth0"

neutron_external_interface: "ens8"

neutron_plugin_agent: "openvswitch"

enable_openstack_core: "yes"

enable_haproxy: "yes"

enable_cinder: "yes"

enable_manila_backend_cephfs_native: "yes"

ceph_glance_keyring: "ceph.client.glance.keyring"

ceph_glance_user: "glance"

ceph_glance_pool_name: "images"

ceph_cinder_keyring: "ceph.client.cinder.keyring"

ceph_cinder_user: "cinder"

ceph_cinder_pool_name: "volumes"

ceph_cinder_backup_keyring: "ceph.client.cinder-backup.keyring"

ceph_cinder_backup_user: "cinder-backup"

ceph_cinder_backup_pool_name: "backups"

ceph_nova_keyring: "{{ ceph_cinder_keyring }}"

ceph_nova_user: "cinder"

ceph_nova_pool_name: "vms"

glance_backend_ceph: "yes"

cinder_backend_ceph: "yes"

nova_backend_ceph: "yes"

nova_compute_virt_type: "qemu"

(2) Configuration on the OpenStack nodes

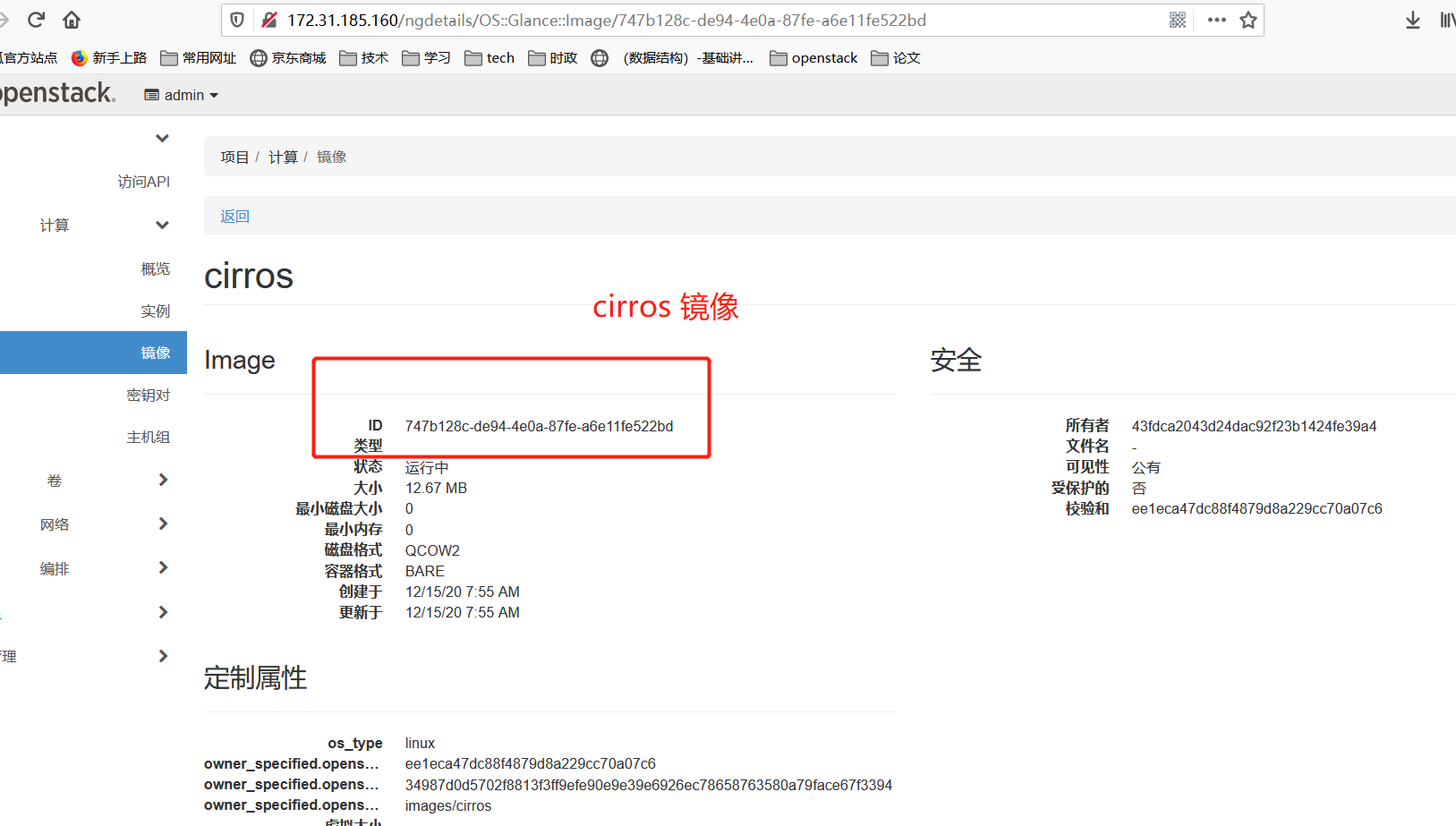

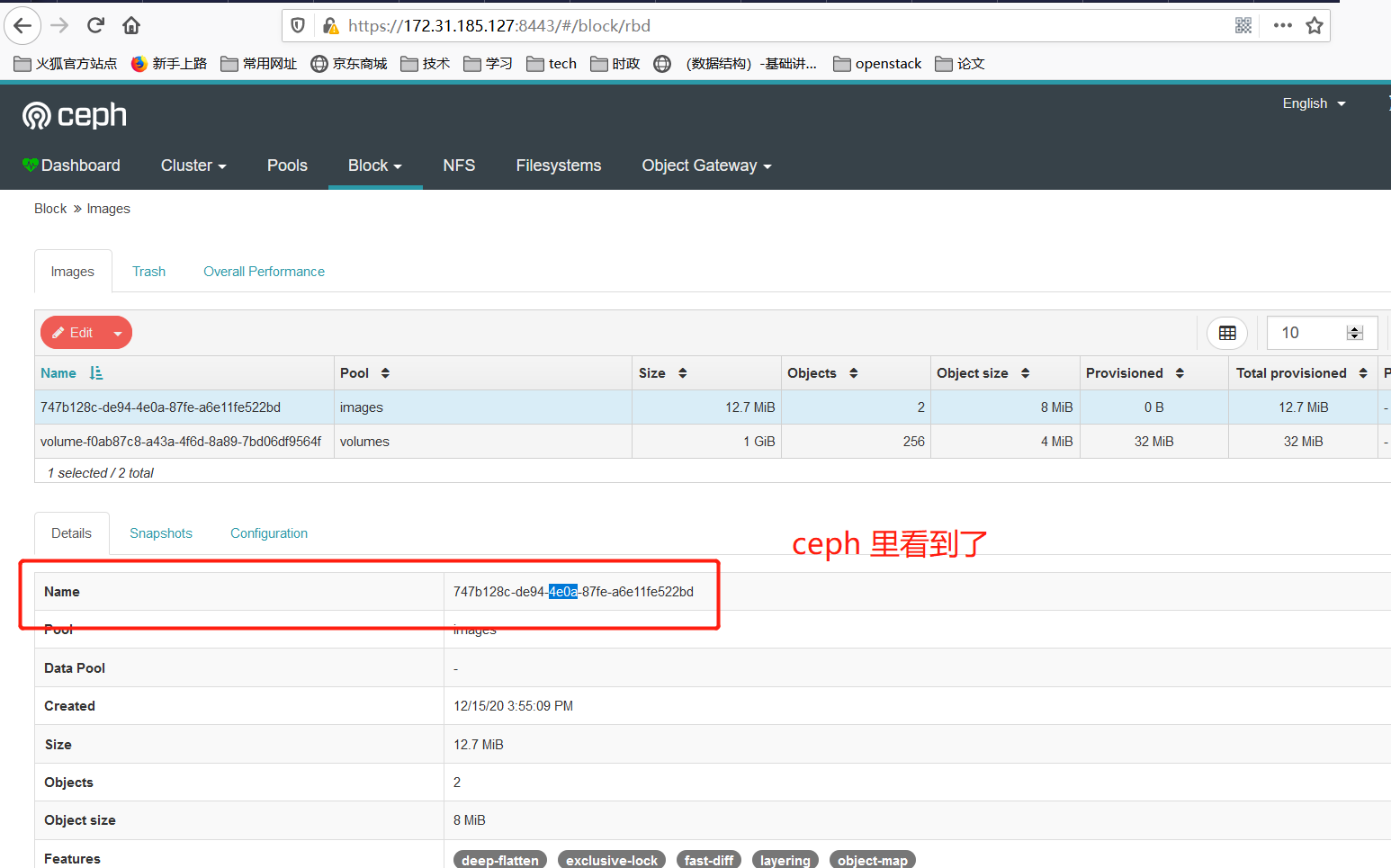

4.2.1 Configure Glance to use Ceph

4.2.1.1 Generate ceph.client.glance.keyring

Generate the ceph.client.glance.keyring file on the Ceph deployment node:

[root@ceph001 ceph]# su - cephadmin

Last login: Mon Dec 7 08:57:51 CST 2020 on pts/0

[cephadmin@ceph001 ~]$ ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQBWf81fSIkvJRAA7T39Cc1qzlor1aaSegQcHw==

[cephadmin@ceph001 ~]$ ll /etc/ceph/

total 16

-rw------- 1 cephadmin cephadmin 151 Dec 2 17:03 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Dec 14 15:40 ceph.client.glance.keyring

-rw-r--r-- 1 cephadmin cephadmin 313 Dec 2 17:17 ceph.conf

-rw-r--r-- 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw------- 1 cephadmin cephadmin 0 Dec 2 17:02 tmp1UDpGt

[cephadmin@ceph001 ~]$

4.2.1.2 Create /etc/kolla/config/glance on the kolla-ansible deployment node

[root@controller1 config]# mkdir -p /etc/kolla/config/glance

[root@controller1 config]# cd glance/

[root@controller1 glance]# pwd

/etc/kolla/config/glance

[root@controller1 glance]#

4.2.1.3 Copy ceph.client.glance.keyring from the Ceph deployment node to /etc/kolla/config/glance on the OpenStack deployment node

[cephadmin@ceph001 ~]$ scp /etc/ceph/ceph.client.glance.keyring root@172.31.185.211:/etc/kolla/config/glance/

ceph.client.glance.keyring 100% 64 33.0KB/s 00:00

[cephadmin@ceph001 ~]$

4.2.1.4 Add ceph.conf

Create ceph.conf in /etc/kolla/config/glance/:

[root@controller1 glance]# vi ceph.conf

[root@controller1 glance]# cat ceph.conf

[global]

fsid = 8ed6d371-0553-4d62-a6b6-1d7359589655

mon_host = 172.31.185.127,172.31.185.198,172.31.185.203

[root@controller1 glance]# pwd

/etc/kolla/config/glance

[root@controller1 glance]#
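The client-side ceph.conf shown above needs only the cluster fsid and the monitor addresses. As a sketch, the file can be generated instead of typed by hand. The function name `write_min_ceph_conf` and the scratch path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal client ceph.conf.
# Arguments: output_path fsid comma_separated_mon_hosts
write_min_ceph_conf() {
    cat > "$1" <<EOF
[global]
fsid = $2
mon_host = $3
EOF
}

# Example with this cluster's values, written to a scratch file for review.
write_min_ceph_conf "${TMPDIR:-/tmp}/ceph.conf.example" \
    8ed6d371-0553-4d62-a6b6-1d7359589655 \
    172.31.185.127,172.31.185.198,172.31.185.203
cat "${TMPDIR:-/tmp}/ceph.conf.example"
```

On a node that holds the admin keyring, `ceph fsid` prints the fsid, so the value does not have to be copied from `ceph -s`.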

4.2.2 Configure Cinder to use Ceph

4.2.2.1 Generate the ceph.client.cinder.keyring and ceph.client.cinder-backup.keyring files on the Ceph deployment node

[cephadmin@ceph001 ~]$ ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQBjf81f0UwJLBAAjn8xyY2Dmx6LhQM+7Uya3g==

[cephadmin@ceph001 ~]$ ceph auth get-or-create client.cinder-backup | tee /etc/ceph/ceph.client.cinder-backup.keyring

[client.cinder-backup]

key = AQBsf81f++flORAA+Fu1Ok0hlQfBiPN6zypNPQ==

[cephadmin@ceph001 ~]$ ll /etc/ceph/

total 24

-rw------- 1 cephadmin cephadmin 151 Dec 2 17:03 ceph.client.admin.keyring

-rw-rw-r-- 1 cephadmin cephadmin 71 Dec 14 16:15 ceph.client.cinder-backup.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Dec 14 16:15 ceph.client.cinder.keyring

-rw-rw-r-- 1 cephadmin cephadmin 64 Dec 14 15:40 ceph.client.glance.keyring

-rw-r--r-- 1 cephadmin cephadmin 313 Dec 2 17:17 ceph.conf

-rw-r--r-- 1 cephadmin cephadmin 92 Nov 24 03:33 rbdmap

-rw------- 1 cephadmin cephadmin 0 Dec 2 17:02 tmp1UDpGt

[cephadmin@ceph001 ~]$

4.2.2.2 Create the Cinder config directories on the kolla-ansible deployment node

[root@controller1 ~]# mkdir -p /etc/kolla/config/cinder/cinder-volume/

[root@controller1 ~]# mkdir -p /etc/kolla/config/cinder/cinder-backup

[root@controller1 ~]# ll /etc/kolla/config/cinder/

total 0

drwxr-xr-x 2 root root 6 Dec 14 16:18 cinder-backup

drwxr-xr-x 2 root root 6 Dec 14 16:18 cinder-volume

[root@controller1 ~]#

4.2.2.3 Copy the Cinder keyring files to the kolla-ansible deployment node

[cephadmin@ceph001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@172.31.185.211:/etc/kolla/config/cinder/cinder-backup/

ceph.client.cinder.keyring 100% 64 15.2KB/s 00:00

[cephadmin@ceph001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@172.31.185.211:/etc/kolla/config/cinder/cinder-volume/

ceph.client.cinder.keyring 100% 64 33.3KB/s 00:00

[cephadmin@ceph001 ~]$ scp /etc/ceph/ceph.client.cinder-backup.keyring root@172.31.185.211:/etc/kolla/config/cinder/cinder-backup/

ceph.client.cinder-backup.keyring 100% 71 37.5KB/s 00:00

[cephadmin@ceph001 ~]$

4.2.2.4 Add ceph.conf to the Cinder config directory

The ceph.conf content is identical to the one in 4.2.1.4, so the file can simply be copied.

[root@controller1 ~]# cd /etc/kolla/config/cinder/

[root@controller1 cinder]# pwd

/etc/kolla/config/cinder

[root@controller1 cinder]# ll

total 0

drwxr-xr-x 2 root root 81 Dec 14 16:23 cinder-backup

drwxr-xr-x 2 root root 40 Dec 14 16:23 cinder-volume

[root@controller1 cinder]# cp ../glance/ceph.conf ./

[root@controller1 cinder]# ll

total 4

-rw-r--r-- 1 root root 110 Dec 14 16:26 ceph.conf

drwxr-xr-x 2 root root 81 Dec 14 16:23 cinder-backup

drwxr-xr-x 2 root root 40 Dec 14 16:23 cinder-volume

[root@controller1 cinder]#

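4.2.3 Configure Nova to use Ceph

4.2.3.1 Copy ceph.client.cinder.keyring to /etc/kolla/config/nova

The /etc/kolla/config/nova directory must already exist on the deployment node before the copy.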

[cephadmin@ceph001 ~]$ scp /etc/ceph/ceph.client.cinder.keyring root@172.31.185.211:/etc/kolla/config/nova/

ceph.client.cinder.keyring 100% 64 1.8KB/s 00:00

[cephadmin@ceph001 ~]$

4.2.3.2 Configure ceph.conf

The ceph.conf content is identical to the one in 4.2.1.4, so the file can simply be copied.

[root@controller1 nova]# cp ../glance/ceph.conf ./

[root@controller1 nova]# ll

total 8

-rw-r--r-- 1 root root 64 Dec 14 16:35 ceph.client.cinder.keyring

-rw-r--r-- 1 root root 110 Dec 14 16:36 ceph.conf

[root@controller1 nova]# pwd

/etc/kolla/config/nova

[root@controller1 nova]#
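At this point the Glance, Cinder and Nova config directories should each hold a ceph.conf and the keyrings copied in the steps above. The sketch below checks that layout before running kolla-ansible. The function name `check_kolla_ceph_files` is hypothetical, and the file list is taken only from the copy commands in this walkthrough.

```shell
#!/bin/sh
# Hypothetical helper: report any file from this walkthrough that is missing
# under the kolla config directory.
# Argument: config root (defaults to /etc/kolla/config).
check_kolla_ceph_files() {
    root=${1:-/etc/kolla/config}
    missing=0
    for f in \
        glance/ceph.conf \
        glance/ceph.client.glance.keyring \
        cinder/ceph.conf \
        cinder/cinder-volume/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder-backup.keyring \
        nova/ceph.conf \
        nova/ceph.client.cinder.keyring
    do
        if [ ! -f "$root/$f" ]; then
            echo "missing: $root/$f"
            missing=1
        fi
    done
    return "$missing"
}

# Returns non-zero and lists the gaps if anything is absent.
check_kolla_ceph_files /etc/kolla/config || echo "fix the paths listed above first"
```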

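4.2.3.3 Configure nova.conf

hw_disk_discard = unmap enables discard (TRIM) on the instance disks.

disk_cachemodes = "network=writeback" sets the cache mode of network-backed instance disks to writeback.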

[root@controller1 nova]# pwd

/etc/kolla/config/nova

[root@controller1 nova]# cat <<EOF>>/etc/kolla/config/nova/nova.conf

> [libvirt]

> hw_disk_discard = unmap

> disk_cachemodes="network=writeback"

> cpu_mode=host-passthrough

> EOF

[root@controller1 nova]# cat /etc/kolla/config/nova/nova.conf

[libvirt]

hw_disk_discard = unmap

disk_cachemodes="network=writeback"

cpu_mode=host-passthrough

[root@controller1 nova]#
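The heredoc above appends with `>>`, so running it a second time would leave two [libvirt] sections in the file. A sketch of a rerunnable variant follows. It overwrites the file instead, which assumes the override file holds nothing except these three settings. The function name `write_nova_libvirt_override` and the scratch path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write the nova.conf override so that reruns are safe.
# Argument: output path. The file is overwritten, not appended to.
write_nova_libvirt_override() {
    cat > "$1" <<'EOF'
[libvirt]
hw_disk_discard = unmap
disk_cachemodes = "network=writeback"
cpu_mode = host-passthrough
EOF
}

# Example: write to a scratch file twice; the content is not duplicated.
write_nova_libvirt_override "${TMPDIR:-/tmp}/nova.conf.example"
write_nova_libvirt_override "${TMPDIR:-/tmp}/nova.conf.example"
grep -c '^\[libvirt\]' "${TMPDIR:-/tmp}/nova.conf.example"
```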

4.2.4 Install OpenStack Ussuri with kolla-ansible

4.2.4.1 Prechecks

[root@controller1 ~]# kolla-ansible -v -i /root/ansible/all-in-one prechecks

Pre-deployment checking : ansible-playbook -i /root/ansible/all-in-one -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=precheck /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

The prechecks passed:

PLAY [Apply role masakari] ********************************************************************************************************************

skipping: no hosts matched

PLAY RECAP ************************************************************************************************************************************

localhost : ok=100 changed=0 unreachable=0 failed=0 skipped=192 rescued=0 ignored=0

4.2.4.2 Pull the container images

[root@controller1 ~]# kolla-ansible -v -i /root/ansible/all-in-one pull

Pulling Docker images : ansible-playbook -i /root/ansible/all-in-one -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=pull /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

The pull succeeded:

PLAY RECAP ************************************************************************************************************************************

localhost : ok=37 changed=0 unreachable=0 failed=0 skipped=97 rescued=0 ignored=0

[root@controller1 ~]#

[root@controller1 ~]# docker images | grep ussuri

kolla/centos-source-nova-compute ussuri fab9bca9bae5 43 hours ago 1.91GB

kolla/centos-source-neutron-server ussuri d9d0cd4e1f65 43 hours ago 973MB

kolla/centos-source-neutron-l3-agent ussuri 8a1bbfe1b4ae 43 hours ago 998MB

kolla/centos-source-neutron-openvswitch-agent ussuri 7b7c9fee7b56 43 hours ago 953MB

kolla/centos-source-neutron-dhcp-agent ussuri b8cfc374e4a4 43 hours ago 953MB

kolla/centos-source-neutron-metadata-agent ussuri 685a3868b3a7 43 hours ago 953MB

kolla/centos-source-placement-api ussuri 641d0e316d6e 43 hours ago 893MB

kolla/centos-source-heat-api ussuri 89fa2791cc89 43 hours ago 878MB

kolla/centos-source-heat-engine ussuri 9290e1d2c92b 43 hours ago 878MB

kolla/centos-source-nova-novncproxy ussuri f136f8373623 43 hours ago 1.08GB

kolla/centos-source-heat-api-cfn ussuri 6e423823435b 43 hours ago 878MB

kolla/centos-source-nova-ssh ussuri 3c74f44d8a01 43 hours ago 1.03GB

kolla/centos-source-nova-api ussuri 203474c3acf1 43 hours ago 1.05GB

kolla/centos-source-nova-conductor ussuri ebbd9f83391f 43 hours ago 1GB

kolla/centos-source-nova-scheduler ussuri 3258ca26b032 43 hours ago 1GB

kolla/centos-source-cinder-volume ussuri 392b8bb9e713 43 hours ago 1.32GB

kolla/centos-source-glance-api ussuri 9b18c72555e0 43 hours ago 901MB

kolla/centos-source-cinder-api ussuri 2f1a8c77512c 43 hours ago 1.07GB

kolla/centos-source-cinder-backup ussuri 3732e4475380 43 hours ago 1.06GB

kolla/centos-source-cinder-scheduler ussuri 603801f67e22 43 hours ago 1.02GB

kolla/centos-source-keystone-ssh ussuri 4bf8ced56b77 43 hours ago 924MB

kolla/centos-source-keystone-fernet ussuri 09742c6f9d1b 43 hours ago 923MB

kolla/centos-source-keystone ussuri e3c2d0a07c65 43 hours ago 922MB

kolla/centos-source-horizon ussuri dc5c27f4599c 43 hours ago 998MB

kolla/centos-source-kolla-toolbox ussuri d143b65b0d59 43 hours ago 810MB

kolla/centos-source-openvswitch-db-server ussuri b7eaf724051e 43 hours ago 335MB

kolla/centos-source-nova-libvirt ussuri c89f3f71340a 43 hours ago 1.21GB

kolla/centos-source-openvswitch-vswitchd ussuri 4b263b000674 43 hours ago 335MB

kolla/centos-source-mariadb-clustercheck ussuri 049e5d1ba83f 43 hours ago 523MB

kolla/centos-source-rabbitmq ussuri b440b8076d4e 43 hours ago 393MB

kolla/centos-source-cron ussuri e04a8ecb4834 43 hours ago 312MB

kolla/centos-source-fluentd ussuri d77a23a0aa14 43 hours ago 636MB

kolla/centos-source-mariadb ussuri 894044f4d0cf 43 hours ago 554MB

kolla/centos-source-chrony ussuri 149aeba25faa 43 hours ago 313MB

kolla/centos-source-haproxy ussuri a24980fada86 43 hours ago 330MB

kolla/centos-source-memcached ussuri 73232e567d12 43 hours ago 332MB

kolla/centos-source-keepalived ussuri 274359685b05 43 hours ago 326MB

[root@controller1 ~]# docker images | grep ussuri | wc -l

37

[root@controller1 ~]#

4.2.4.3 Deploy OpenStack

[root@controller1 ~]# kolla-ansible -v -i /root/ansible/all-in-one deploy

Deploying Playbooks : ansible-playbook -i /root/ansible/all-in-one -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla -e kolla_action=deploy /usr/local/share/kolla-ansible/ansible/site.yml --verbose

Using /etc/ansible/ansible.cfg as config file

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

[WARNING]: Could not match supplied host pattern, ignoring: enable_nova_True

PLAY [Apply role masakari] ********************************************************************************************************************

skipping: no hosts matched

PLAY RECAP ************************************************************************************************************************************

localhost : ok=420 changed=234 unreachable=0 failed=0 skipped=239 rescued=0 ignored=1

[root@controller1 kolla]#

4.2.4.4 Generate the local environment file (admin-openrc.sh)

[root@controller1 ~]# kolla-ansible -i /root/ansible/all-in-one post-deploy

Post-Deploying Playbooks : ansible-playbook -i /root/ansible/all-in-one -e @/etc/kolla/globals.yml -e @/etc/kolla/passwords.yml -e CONFIG_DIR=/etc/kolla /usr/local/share/kolla-ansible/ansible/post-deploy.yml

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

PLAY [Creating admin openrc file on the deploy node] ******************************************************************************************

TASK [Gathering Facts] ************************************************************************************************************************

[DEPRECATION WARNING]: Distribution centos 8.1.1911 on host localhost should use /usr/libexec/platform-python, but is using /usr/bin/python

for backward compatibility with prior Ansible releases. A future Ansible release will default to using the discovered platform python for this

host. See https://docs.ansible.com/ansible/2.9/reference_appendices/interpreter_discovery.html for more information. This feature will be

removed in version 2.12. Deprecation warnings can be disabled by setting deprecation_warnings=False in ansible.cfg.

ok: [localhost]

TASK [Template out admin-openrc.sh] ***********************************************************************************************************

changed: [localhost]

PLAY RECAP ************************************************************************************************************************************

localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

[root@controller1 ~]# ll /etc/kolla/

total 60

-rw-r--r-- 1 root root 521 Dec 15 15:22 admin-openrc.sh

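4.2.4.5 Initialize the network and create an image

4.2.5 Verify that Ceph storage is in effect

A first look suggests that the Ceph and OpenStack integration took effect, but detailed verification is left until the setup is rebuilt in another environment. The current deployment runs on virtual machines, and a test instance did not come up completely.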
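One way to check the integration from the Ceph side is to list the RBD images in each pool after uploading an image to Glance, creating a Cinder volume and booting an instance. If the backends are wired up, the pools should no longer be empty. Typically Glance stores an RBD image named after the image ID, Cinder one named volume-<id>, and Nova one named <instance-id>_disk. The sketch below is not taken from the original post: the function name `list_openstack_rbd_images` and the `RBD_BIN` override are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list the RBD images in each OpenStack pool.
# RBD_BIN can be overridden, for example with "echo rbd" for a dry run.
list_openstack_rbd_images() {
    for pool in images volumes vms backups; do
        echo "== $pool =="
        # Left unquoted on purpose so "echo rbd" splits into two words.
        ${RBD_BIN:-rbd} ls "$pool" || return 1
    done
}

# Dry run: show the commands that would be executed.
RBD_BIN="echo rbd" list_openstack_rbd_images
```

Run `list_openstack_rbd_images` without the override on a Ceph node that holds a keyring allowed to read these pools. An empty pool after the corresponding OpenStack operation suggests that service is still using a different backend.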

Posted on 2020-12-07 09:13 by weiwei2021
