Installing Ceph on Linux (CentOS 7) with ceph-deploy

1 Summary

This article walks through installing Ceph Nautilus with the ceph-deploy tool on CentOS 7.6.

2 Environment

(1) Operating system version

[root@ceph ~]# cat /etc/centos-release

CentOS Linux release 7.6.1810 (Core)

[root@ceph ~]# uname -a

Linux ceph.novalocal 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

[root@ceph ~]#

(2) Ceph version

(3) Deployment plan

| Hostname | IP | Disks | Roles |
| ---- | ---- | ---- | ---- |
| ceph001 | 172.31.185.127 | System disk: /dev/vda; data disk: /dev/vdb | time server, ceph-deploy, monitor, mgr, mds, osd |
| ceph002 | 172.31.185.198 | System disk: /dev/vda; data disk: /dev/vdb | monitor, mgr, mds, osd |
| ceph003 | 172.31.185.203 | System disk: /dev/vda; data disk: /dev/vdb | monitor, mgr, mds, osd |

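3 Deployment

(1) Base installation

Unless stated otherwise, the following steps must be performed on all three nodes.

3.1.1 Set the hostname

Using 172.31.185.127 as an example: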

[root@ceph ~]# hostnamectl set-hostname ceph001

[root@ceph ~]#

Note: on the other two nodes, run:

On 172.31.185.198: hostnamectl set-hostname ceph002

On 172.31.185.203: hostnamectl set-hostname ceph003

3.1.2 Disable the firewall

[root@ceph ~]# systemctl stop firewalld && systemctl disable firewalld; systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@ceph ~]#

3.1.3 Disable SELinux

[root@ceph001 ~]# cp /etc/selinux/config /etc/selinux/config.bak.orig

[root@ceph001 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

[root@ceph001 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@ceph001 ~]#

3.1.4 Edit the hosts file

[root@ceph001 ~]# cp /etc/hosts /etc/hosts.bak.orig

[root@ceph001 ~]# vi /etc/hosts

Append the following entries:

172.31.185.127 ceph001

172.31.185.198 ceph002

172.31.185.203 ceph003
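Editing /etc/hosts by hand on three nodes is easy to get wrong, so here is a minimal sketch of an idempotent alternative. The function name add_host_entry and the HOSTS_FILE variable are illustrative and not part of the original procedure. By default the sketch writes to a scratch file so it can be tried safely; set HOSTS_FILE=/etc/hosts and run it as root to apply it for real.

```shell
#!/usr/bin/env bash
# Sketch: append "IP hostname" lines only if the hostname is not present yet.
# HOSTS_FILE and add_host_entry are illustrative names (assumptions).
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
  local ip="$1" name="$2"
  # -w matches the hostname as a whole word, -q keeps grep silent.
  if ! grep -qw -- "$name" "$HOSTS_FILE" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

add_host_entry 172.31.185.127 ceph001
add_host_entry 172.31.185.198 ceph002
add_host_entry 172.31.185.203 ceph003
# Calling it again for an existing name must not create a duplicate.
add_host_entry 172.31.185.127 ceph001

cat "$HOSTS_FILE"
```

Because the check is per hostname, the same script can be run on every node, and re-run later, without piling up repeated lines.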

3.1.5 Reboot the VM

[root@ceph001 ~]# reboot

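3.1.6 Configure the time service

CentOS 7 usually ships with chrony installed by default; if it is missing, install it with yum install chrony.

3.1.6.1 Configure the time server

172.31.185.127 acts as the time server.

3.1.6.1.1 Edit /etc/chrony.conf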

[root@ceph001 ~]# cp /etc/chrony.conf /etc/chrony.conf.bak.orig
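The original does not show the actual edits to chrony.conf. As a sketch only, and assuming the cluster subnet 172.31.185.0/24 from the deployment plan, a chrony server typically needs an allow directive for its clients and, on an isolated network, a local stratum line. The function name chrony_server_directives is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (assumption, not the author's file): directives typically added to
# /etc/chrony.conf on the node that serves time to the cluster.
chrony_server_directives() {
  local subnet="$1"
  # Permit NTP clients from the cluster subnet.
  printf 'allow %s\n' "$subnet"
  # Keep serving time even when no upstream source is reachable.
  printf 'local stratum 10\n'
}

chrony_server_directives 172.31.185.0/24
# To apply on ceph001 (as root), something like:
#   chrony_server_directives 172.31.185.0/24 >> /etc/chrony.conf
#   systemctl enable chronyd && systemctl restart chronyd
```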

3.1.6.2 Configure the time clients
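The client-side changes are not shown in the original either. One plausible approach, sketched here under the assumption that ceph002 and ceph003 should sync only from ceph001, is to comment out the default server lines and add a single server entry. The helper chrony_client_conf is an illustrative name; it filters a chrony.conf from stdin to stdout and does not touch the real file.

```shell
#!/usr/bin/env bash
# Sketch (assumption): point a client at ceph001 instead of the default pool.
# Reads a chrony.conf on stdin, writes the modified version to stdout.
chrony_client_conf() {
  local timeserver="$1"
  # Comment out every existing "server ..." line, then add our own.
  sed -E 's/^(server[[:space:]].*)$/#\1/'
  printf 'server %s iburst\n' "$timeserver"
}

# Demo on a two-line sample instead of the real file.
printf 'server 0.centos.pool.ntp.org iburst\ndriftfile /var/lib/chrony/drift\n' \
  | chrony_client_conf ceph001
```

After writing the result to /etc/chrony.conf and restarting chronyd, chronyc sources should list ceph001 as the time source on each client.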

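3.1.7 Configure the Ceph repository

Configure the Ceph repository to match the operating system version and the Ceph release to be installed.

vim /etc/yum.repos.d/cephcentos7.repo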

[Ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

Copy the repo file to the other two machines as well:

[root@ceph001 yum.repos.d]# scp /etc/yum.repos.d/cephcentos7.repo root@ceph002:/etc/yum.repos.d/

The authenticity of host 'ceph002 (172.31.185.198)' can't be established.

ECDSA key fingerprint is SHA256:UaP5H/xJyWWjNZhVTvkDnGHmrmbjbunj0aoTHFmaQOE.

ECDSA key fingerprint is MD5:b2:0c:2f:54:62:11:0e:32:98:44:1f:a8:d0:1e:16:eb.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'ceph002,172.31.185.198' (ECDSA) to the list of known hosts.

root@ceph002's password:

cephcentos7.repo 100% 611 335.1KB/s 00:00

[root@ceph001 yum.repos.d]# scp /etc/yum.repos.d/cephcentos7.repo root@ceph003:/etc/yum.repos.d/

The authenticity of host 'ceph003 (172.31.185.203)' can't be established.

ECDSA key fingerprint is SHA256:UaP5H/xJyWWjNZhVTvkDnGHmrmbjbunj0aoTHFmaQOE.

ECDSA key fingerprint is MD5:b2:0c:2f:54:62:11:0e:32:98:44:1f:a8:d0:1e:16:eb.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'ceph003,172.31.185.203' (ECDSA) to the list of known hosts.

root@ceph003's password:

cephcentos7.repo 100% 611 167.0KB/s 00:00

[root@ceph001 yum.repos.d]#

3.1.8 Create the deployment user cephadmin

Create this user on all three nodes and grant it passwordless sudo.

[root@ceph001 yum.repos.d]# useradd cephadmin

[root@ceph001 ~]# echo "cephnau@2020" | passwd --stdin cephadmin

Changing password for user cephadmin.

passwd: all authentication tokens updated successfully.

[root@ceph001 ~]#

[root@ceph001 yum.repos.d]# echo "cephadmin ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephadmin

cephadmin ALL = (root) NOPASSWD:ALL

[root@ceph001 yum.repos.d]# chmod 0440 /etc/sudoers.d/cephadmin

[root@ceph001 yum.repos.d]#

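(2) Base setup on the deployment node

The deployment node here is ceph001.

3.2.1 Configure passwordless SSH for cephadmin

The cephadmin user on the deployment node must be able to log in without a password to all three cluster nodes: ceph001, ceph002, and ceph003.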

[root@ceph001 ~]# su - cephadmin

Last login: Mon Nov 30 11:35:15 CST 2020 from 172.31.185.198 on pts/1

[cephadmin@ceph001 ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/cephadmin/.ssh/id_rsa):

Created directory '/home/cephadmin/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/cephadmin/.ssh/id_rsa.

Your public key has been saved in /home/cephadmin/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:sw1pd339wzApgtVCwDQV4fGq8/oT7hug7P5WK2ifbpE cephadmin@ceph001

The key's randomart image is:

+---[RSA 3072]----+

| o+o*o |

| .+ + |

| + o |

| o.o .. .|

| oSo...+. o|

| . .EoO... +..|

| o.o=.+ o.|

| .o +++. .|

| oo**==o |

+----[SHA256]-----+

[cephadmin@ceph001 ~]$ ssh-copy-id cephadmin@ceph001

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadmin/.ssh/id_rsa.pub"

The authenticity of host 'ceph001 (172.31.185.127)' can't be established.

ECDSA key fingerprint is SHA256:ES6ytBX1siYV4WMG2CF3/21VKaDd5y27lbWQggeqRWM.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadmin@ceph001's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadmin@ceph001'"

and check to make sure that only the key(s) you wanted were added.

[cephadmin@ceph001 ~]$ ssh-copy-id cephadmin@ceph002

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadmin/.ssh/id_rsa.pub"

The authenticity of host 'ceph002 (172.31.185.198)' can't be established.

ECDSA key fingerprint is SHA256:ES6ytBX1siYV4WMG2CF3/21VKaDd5y27lbWQggeqRWM.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadmin@ceph002's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadmin@ceph002'"

and check to make sure that only the key(s) you wanted were added.

[cephadmin@ceph001 ~]$ ssh-copy-id cephadmin@ceph003
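Once the keys are copied, it can help to pin the login user in ~/.ssh/config so that a plain ssh ceph002 always connects as cephadmin. This is optional here, since ceph-deploy is run as cephadmin anyway. The sketch below only prints the entries; ssh_config_entries is an illustrative name, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: emit ~/.ssh/config entries so that "ssh ceph002" (and therefore
# ceph-deploy) logs in as cephadmin without extra options.
# ssh_config_entries is an illustrative name (assumption).
ssh_config_entries() {
  local user="$1"; shift
  local host
  for host in "$@"; do
    printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
  done
}

ssh_config_entries cephadmin ceph001 ceph002 ceph003
# To use it for real (as cephadmin on ceph001):
#   ssh_config_entries cephadmin ceph001 ceph002 ceph003 >> ~/.ssh/config
#   chmod 600 ~/.ssh/config
#   ssh -o BatchMode=yes ceph002 hostname   # should succeed with no prompt
```

The BatchMode check is a quick way to confirm that key-based login works: with that option ssh fails instead of asking for a password.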

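(3) Deploy Ceph

3.3.1 Install ceph-deploy on the deployment node

Install ceph-deploy as the cephadmin user on the deployment node ceph001.

3.3.1.1 Download the packages locally

Download the packages to a local directory in preparation for later offline installs.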

sudo yum -y install --downloadonly --downloaddir=/home/cephadmin/software/cephcentos7/ceph-deploy/ ceph-deploy python-pip

[cephadmin@ceph001 cephcentos7]$ cd ceph-deploy/

[cephadmin@ceph001 ceph-deploy]$ pwd

/home/cephadmin/software/cephcentos7/ceph-deploy

[cephadmin@ceph001 ceph-deploy]$ ll

total 2000

-rw-r--r-- 1 root root 292428 Apr 10 2020 ceph-deploy-2.0.1-0.noarch.rpm

-rw-r--r-- 1 root root 1752207 Jan 29 2020 python2-pip-8.1.2-12.el7.noarch.rpm

[cephadmin@ceph001 ceph-deploy]$

3.3.1.2 Install ceph-deploy and python-pip

[cephadmin@ceph001 ceph-deploy]$ pwd

/home/cephadmin/software/cephcentos7/ceph-deploy

[cephadmin@ceph001 ceph-deploy]$ ll

total 2000

-rw-r--r-- 1 root root 292428 Apr 10 2020 ceph-deploy-2.0.1-0.noarch.rpm

-rw-r--r-- 1 root root 1752207 Jan 29 2020 python2-pip-8.1.2-12.el7.noarch.rpm

[cephadmin@ceph001 ceph-deploy]$ sudo yum localinstall *.rpm

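3.3.2 Install the Ceph packages

This is required on every Ceph cluster node.

Install the Ceph packages with yum, downloading all dependencies first.

3.3.2.1 Download the packages locally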

sudo yum -y install --downloadonly --downloaddir=/home/cephadmin/software/cephcentos7/ceph/ ceph ceph-radosgw

3.3.2.2 Install the Ceph packages

Install Ceph on all three nodes:

[cephadmin@ceph001 ceph]$ sudo yum localinstall *.rpm

3.3.3 Create the cluster

Run these steps on the ceph-deploy deployment node.

3.3.3.1 Create the initial cluster configuration

On the deployment node:

[cephadmin@ceph001 ~]$ mkdir /home/cephadmin/cephcluster

[cephadmin@ceph001 ~]$ ll

total 0

drwxrwxr-x 2 cephadmin cephadmin 6 Nov 30 16:48 cephcluster

drwxr-xr-x 4 root root 58 Nov 30 16:45 software

[cephadmin@ceph001 ~]$ cd cephcluster/

[cephadmin@ceph001 cephcluster]$ pwd

/home/cephadmin/cephcluster

[cephadmin@ceph001 cephcluster]$ ceph-deploy new ceph001 ceph002 ceph003

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy new ceph001 ceph002 ceph003

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x7f296c15bd70>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f296bae7950>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph001', 'ceph002', 'ceph003']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

...

The output above shows that the cluster is named ceph.

The following files are generated:

[cephadmin@ceph001 cephcluster]$ ll

total 16

-rw-rw-r-- 1 cephadmin cephadmin 247 Nov 30 16:50 ceph.conf

-rw-rw-r-- 1 cephadmin cephadmin 5231 Nov 30 16:50 ceph-deploy-ceph.log

-rw------- 1 cephadmin cephadmin 73 Nov 30 16:50 ceph.mon.keyring

[cephadmin@ceph001 cephcluster]$

Note:

ceph-deploy --cluster {cluster-name} new node1 node2 creates a Ceph cluster with a custom name; the default name is ceph.

Edit ceph.conf and add the network settings:

[global]

fsid = 69002794-cf45-49fa-8849-faadae48544f

mon_initial_members = ceph001, ceph002, ceph003

mon_host = 172.31.185.127,172.31.185.198,172.31.185.203

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 172.31.185.0/24

cluster network = 172.31.185.0/24

Ideally, public network and cluster network should be on two different subnets (for example, one on eth0 and one on eth1). They are the same here because this machine has only one NIC.
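To make the single-NIC versus dual-NIC difference concrete, here is a small sketch that prints the two ceph.conf lines for either case. The function name ceph_network_lines is illustrative, and 192.168.100.0/24 is a made-up cluster subnet, not one used in this deployment.

```shell
#!/usr/bin/env bash
# Sketch: print the two network lines for the [global] section of ceph.conf.
# ceph_network_lines is an illustrative name; 192.168.100.0/24 is a
# hypothetical cluster subnet, not one from this deployment.
ceph_network_lines() {
  # If no cluster subnet is given, fall back to the public one.
  local public_net="$1" cluster_net="${2:-$1}"
  printf 'public network = %s\n' "$public_net"
  printf 'cluster network = %s\n' "$cluster_net"
}

# Single NIC, as in this article: both networks share one subnet.
ceph_network_lines 172.31.185.0/24
# Two NICs: replication traffic moves to the second (hypothetical) subnet.
ceph_network_lines 172.31.185.0/24 192.168.100.0/24
```

The public network carries client and monitor traffic, while the cluster network carries OSD replication and recovery traffic, which is why separating them helps on busy clusters.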

3.3.3.2 Initialize the cluster configuration and generate all keys

[cephadmin@ceph001 cephcluster]$ ceph-deploy mon create-initial # deploy the initial monitor(s) and gather all keys

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f0a8fa7c0e0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f0a8fcdf398>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph001 ceph002 ceph003

The generated keyrings:

[cephadmin@ceph001 cephcluster]$ ls -l *.keyring

-rw------- 1 cephadmin cephadmin 113 Nov 30 17:17 ceph.bootstrap-mds.keyring

-rw------- 1 cephadmin cephadmin 113 Nov 30 17:17 ceph.bootstrap-mgr.keyring

-rw------- 1 cephadmin cephadmin 113 Nov 30 17:17 ceph.bootstrap-osd.keyring

-rw------- 1 cephadmin cephadmin 113 Nov 30 17:17 ceph.bootstrap-rgw.keyring

-rw------- 1 cephadmin cephadmin 151 Nov 30 17:17 ceph.client.admin.keyring

-rw------- 1 cephadmin cephadmin 73 Nov 30 16:50 ceph.mon.keyring

[cephadmin@ceph001 cephcluster]$ pwd

/home/cephadmin/cephcluster

[cephadmin@ceph001 cephcluster]$

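3.3.3.3 Distribute the configuration to all nodes

The configuration is copied to the /etc/ceph directory on each node.

[cephadmin@ceph001 cephcluster]$ ceph-deploy admin ceph001 ceph002 ceph003 # copy the configuration to all three nodes

Verify that it works.

Switch to the root account: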

[root@ceph001 ~]# ceph -s

cluster:

id: 69002794-cf45-49fa-8849-faadae48544f

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 10m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[root@ceph001 ~]#

[root@ceph002 ~]# ceph -s

cluster:

id: 69002794-cf45-49fa-8849-faadae48544f

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 12m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[root@ceph002 ~]#

To run ceph -s as the cephadmin user, change the ownership of the /etc/ceph directory:

[root@ceph001 ~]# su - cephadmin

Last login: Mon Nov 30 16:42:45 CST 2020 on pts/0

[cephadmin@ceph001 ~]$ ceph -s

[errno 2] error connecting to the cluster

[cephadmin@ceph001 ~]$ ll /etc/ceph/

total 12

-rw------- 1 root root 151 Nov 30 17:25 ceph.client.admin.keyring

-rw-r--r-- 1 root root 313 Nov 30 17:25 ceph.conf

-rw-r--r-- 1 root root 92 Nov 24 03:33 rbdmap

-rw------- 1 root root 0 Nov 30 17:16 tmp10a3zI

[cephadmin@ceph001 ~]$ sudo chown -R cephadmin:cephadmin /etc/ceph

[cephadmin@ceph001 ~]$ ceph -s

cluster:

id: 69002794-cf45-49fa-8849-faadae48544f

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 14m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[cephadmin@ceph001 ~]$

Run sudo chown -R cephadmin:cephadmin /etc/ceph on all three nodes.

3.3.3.4 Configure the OSDs

First check the name of the data disk on all three nodes:

[root@ceph001 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 478K 0 rom

vda 253:0 0 50G 0 disk

├─vda1 253:1 0 200M 0 part /boot

└─vda2 253:2 0 49.8G 0 part /

vdb 253:16 0 50G 0 disk

The data disk here is vdb.

I wrote a small script for this step; it must be run from the /home/cephadmin/cephcluster directory:

for dev in /dev/vdb

do

ceph-deploy disk zap ceph001 $dev

ceph-deploy osd create ceph001 --data $dev

ceph-deploy disk zap ceph002 $dev

ceph-deploy osd create ceph002 --data $dev

ceph-deploy disk zap ceph003 $dev

ceph-deploy osd create ceph003 --data $dev

done
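The script above repeats the same two commands for each host. A slightly more general sketch loops over both hosts and devices and prints the commands first, so the list can be reviewed before anything is wiped. DRY_RUN, run, and deploy_osds are illustrative names, not ceph-deploy features; the ceph-deploy invocations themselves are the same ones used above.

```shell
#!/usr/bin/env bash
# Sketch: same zap + create sequence, looping over hosts and devices.
# DRY_RUN, run and deploy_osds are illustrative names (assumptions).
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

deploy_osds() {
  local hosts="$1" devices="$2" host dev
  for host in $hosts; do
    for dev in $devices; do
      # zap destroys all data on the device, so keep the list explicit.
      run ceph-deploy disk zap "$host" "$dev" || return 1
      run ceph-deploy osd create "$host" --data "$dev" || return 1
    done
  done
}

deploy_osds "ceph001 ceph002 ceph003" "/dev/vdb"
```

With DRY_RUN left at 1 nothing is executed; running it with DRY_RUN=0 from /home/cephadmin/cephcluster performs the same steps as the original loop and stops at the first failing command.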

[cephadmin@ceph001 cephcluster]$ for dev in /dev/vdb

> do

> ceph-deploy disk zap ceph001 $dev

> ceph-deploy osd create ceph001 --data $dev

> ceph-deploy disk zap ceph002 $dev

> ceph-deploy osd create ceph002 --data $dev

> ceph-deploy disk zap ceph003 $dev

> ceph-deploy osd create ceph003 --data $dev

> done

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph001 /dev/vdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fcd19fcd8c0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph001

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fcd1a21c8c0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/vdb']

[ceph_deploy.osd][DEBUG ] zapping /dev/vdb on ceph001

[ceph001][DEBUG ] connection detected need for sudo

Check that the commands succeeded:

[cephadmin@ceph001 cephcluster]$ ceph -s

cluster:

id: 69002794-cf45-49fa-8849-faadae48544f

health: HEALTH_WARN

no active mgr

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 26m)

mgr: no daemons active

osd: 3 osds: 3 up (since 4m), 3 in (since 4m)

## There are three nodes with one data disk each, so 3 OSDs up and in confirms success

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[cephadmin@ceph001 cephcluster]$

3.3.3.5 Deploy mgr

[cephadmin@ceph001 cephcluster]$ ceph-deploy mgr create ceph001 ceph002 ceph003

[cephadmin@ceph001 cephcluster]$ ceph -s

cluster:

id: 69002794-cf45-49fa-8849-faadae48544f

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 30m)

mgr: ceph002(active, since 83s), standbys: ceph003, ceph001

# mgr has been deployed successfully

osd: 3 osds: 3 up (since 8m), 3 in (since 8m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs:

[cephadmin@ceph001 cephcluster]$
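Right after adding OSDs or mgr daemons the cluster can briefly report HEALTH_WARN, as seen earlier with "no active mgr". Instead of re-running ceph -s by hand, a small polling loop around ceph health can wait for HEALTH_OK. This is a sketch; is_health_ok and wait_for_health_ok are illustrative names, and the retry count and delay are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Sketch: poll "ceph health" until the cluster reports HEALTH_OK.
# is_health_ok and wait_for_health_ok are illustrative names (assumptions).
is_health_ok() {
  case "$1" in
    HEALTH_OK*) return 0 ;;
    *)          return 1 ;;
  esac
}

wait_for_health_ok() {
  local tries="${1:-30}" delay="${2:-10}" status
  while [ "$tries" -gt 0 ]; do
    status="$(ceph health 2>/dev/null)"
    if is_health_ok "$status"; then
      echo "cluster is healthy"
      return 0
    fi
    echo "waiting: ${status:-no answer from cluster}"
    tries=$((tries - 1))
    sleep "$delay"
  done
  return 1
}

# On a node with a working admin keyring:
#   wait_for_health_ok 30 10
```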

3.3.3.6 Install mgr-dashboard (on all three nodes)

In Nautilus the dashboard has to be installed as a separate package.

[cephadmin@ceph001 cephcluster]$ sudo yum -y install --downloadonly --downloaddir=/home/cephadmin/software/cephcentos7/cephmgrdashboard/ ceph-mgr-dashboard

[cephadmin@ceph001 cephcluster]$ cd /home/cephadmin/software/cephcentos7/cephmgrdashboard/

[cephadmin@ceph001 cephmgrdashboard]$ ll

total 5468

-rw-r--r-- 1 root root 20944 Nov 24 05:47 ceph-grafana-dashboards-14.2.15-0.el7.noarch.rpm

-rw-r--r-- 1 root root 4143192 Nov 24 05:47 ceph-mgr-dashboard-14.2.15-0.el7.noarch.rpm

-rw-r--r-- 1 root root 514504 Apr 25 2018 python2-cryptography-1.7.2-2.el7.x86_64.rpm

-rw-r--r-- 1 root root 102132 Nov 21 2016 python2-pyasn1-0.1.9-7.el7.noarch.rpm

-rw-r--r-- 1 root root 95952 Aug 11 2017 python-idna-2.4-1.el7.noarch.rpm

-rw-r--r-- 1 root root 37064 Apr 25 2018 python-jwt-1.5.3-1.el7.noarch.rpm

-rw-r--r-- 1 root root 13028 Jan 10 2014 python-repoze-lru-0.4-3.el7.noarch.rpm

-rw-r--r-- 1 root root 655332 Jan 11 2014 python-routes-1.13-2.el7.noarch.rpm

[cephadmin@ceph001 cephmgrdashboard]$ sudo yum localinstall *.rpm

3.3.3.7 Enable mgr-dashboard (on the active mgr node)

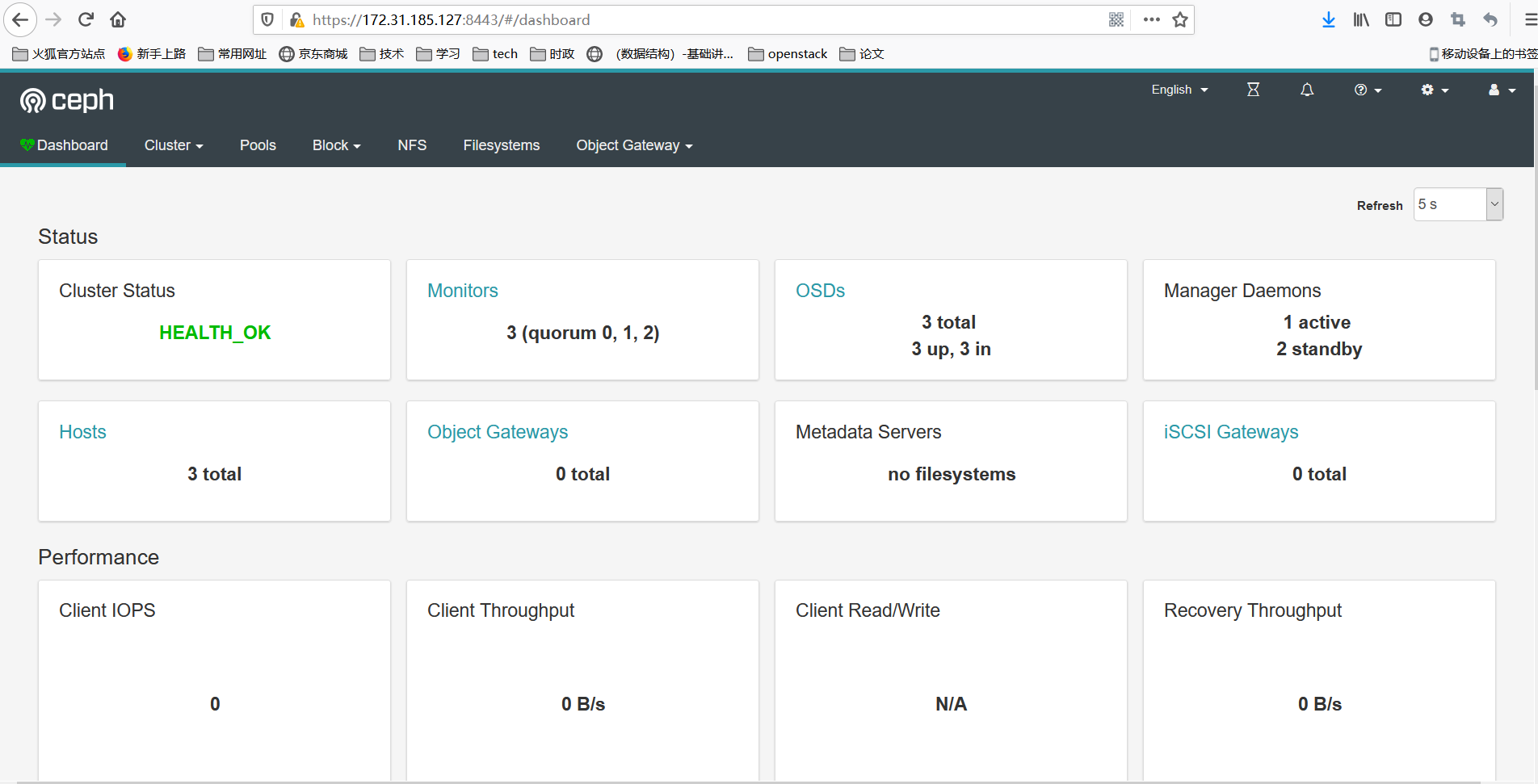

[cephadmin@ceph001 cephmgrdashboard]$ ceph -s

cluster:

id: 8ed6d371-0553-4d62-a6b6-1d7359589655

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph001,ceph002,ceph003 (age 9m)

mgr: ceph001(active, since 60s), standbys: ceph003, ceph002 # the active mgr is on ceph001

osd: 3 osds: 3 up (since 4m), 3 in (since 4m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs:

[cephadmin@ceph001 cephmgrdashboard]$ ceph mgr module enable dashboard

[cephadmin@ceph001 cephmgrdashboard]$ ceph dashboard create-self-signed-cert

Self-signed certificate created

[cephadmin@ceph001 cephmgrdashboard]$ ceph dashboard set-login-credentials admin admin

******************************************************************

*** WARNING: this command is deprecated. ***

*** Please use the ac-user-* related commands to manage users. ***

******************************************************************

Username and password updated

[cephadmin@ceph001 cephmgrdashboard]$ ceph mgr services

{

"dashboard": "https://ceph001:8443/"

}

[cephadmin@ceph001 cephmgrdashboard]$
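To check from a script that the dashboard is actually reachable, the URL can be pulled out of the ceph mgr services output and probed with curl. The sketch below uses sed for the extraction so it has no extra dependencies; dashboard_url is an illustrative name, and the demo feeds it the sample output shown above rather than a live cluster.

```shell
#!/usr/bin/env bash
# Sketch: pull the dashboard URL out of the JSON printed by "ceph mgr services".
# dashboard_url is an illustrative name (assumption); it reads JSON on stdin.
dashboard_url() {
  sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Demo with the output shown above instead of a live cluster.
printf '{\n "dashboard": "https://ceph001:8443/"\n}\n' | dashboard_url

# Against a live cluster, something like:
#   url="$(ceph mgr services | dashboard_url)"
#   curl -k -s -o /dev/null -w '%{http_code}\n' "$url"   # -k: self-signed cert
```

The -k flag is needed because the certificate created by create-self-signed-cert is not signed by a trusted CA.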

posted on 2020-12-01 08:42 weiwei2021
