Scrapy框架

Scrapy

Scrapy是一个为了爬取网站数据,提取结构性数据而编写的应用框架。 其可以应用在数据挖掘,信息处理或存储历史数据等一系列的程序中。

Scrapy囊括了爬取网站数据几乎所有的功能,是一个扩展性很强的一个框架,Scrapy在爬虫界里相当于web的Django

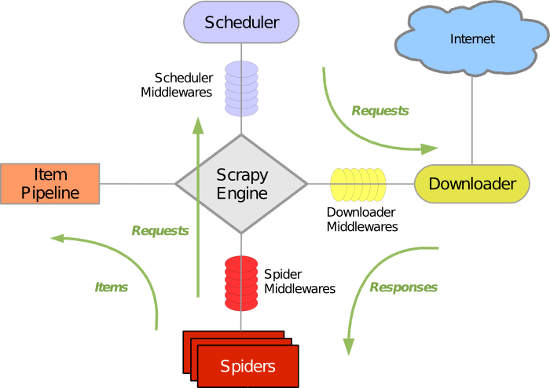

Scrapy 使用了 Twisted异步网络库来处理网络通讯。整体架构大致如下

Scrapy主要包括了以下组件:

- 引擎(Scrapy)

用来处理整个系统的数据流处理, 触发事务(框架核心) - 调度器(Scheduler)

用来接受引擎发过来的请求, 压入队列中, 并在引擎再次请求的时候返回. 可以想像成一个URL(抓取网页的网址或者说是链接)的优先队列, 由它来决定下一个要抓取的网址是什么, 同时去除重复的网址 - 下载器(Downloader)

用于下载网页内容, 并将网页内容返回给蜘蛛(Scrapy下载器是建立在twisted这个高效的异步模型上的) - 爬虫(Spiders)

爬虫是主要干活的, 用于从特定的网页中提取自己需要的信息, 即所谓的实体(Item)。用户也可以从中提取出链接,让Scrapy继续抓取下一个页面 - 项目管道(Pipeline)

负责处理爬虫从网页中抽取的实体,主要的功能是持久化实体、验证实体的有效性、清除不需要的信息。当页面被爬虫解析后,将被发送到项目管道,并经过几个特定的次序处理数据。 - 下载器中间件(Downloader Middlewares)

位于Scrapy引擎和下载器之间的框架,主要是处理Scrapy引擎与下载器之间的请求及响应。 -

下载中间件

下载中间件class DownMiddleware1(object): def process_request(self, request, spider): """ 请求需要被下载时,经过所有下载器中间件的process_request调用 :param request: :param spider: :return: None,继续后续中间件去下载; Response对象,停止process_request的执行,开始执行process_response Request对象,停止中间件的执行,将Request重新调度器 raise IgnoreRequest异常,停止process_request的执行,开始执行process_exception """ pass def process_response(self, request, response, spider): """ spider处理完成,返回时调用 :param response: :param result: :param spider: :return: Response 对象:转交给其他中间件process_response Request 对象:停止中间件,request会被重新调度下载 raise IgnoreRequest 异常:调用Request.errback """ print('response1') return response def process_exception(self, request, exception, spider): """ 当下载处理器(download handler)或 process_request() (下载中间件)抛出异常 :param response: :param exception: :param spider: :return: None:继续交给后续中间件处理异常; Response对象:停止后续process_exception方法 Request对象:停止中间件,request将会被重新调用下载 """ return None 下载器中间件

- 爬虫中间件(Spider Middlewares)

介于Scrapy引擎和爬虫之间的框架,主要工作是处理蜘蛛的响应输入和请求输出。 -

爬虫中间件

爬虫中间件class SpiderMiddleware(object): def process_spider_input(self,response, spider): """ 下载完成,执行,然后交给parse处理 :param response: :param spider: :return: """ pass def process_spider_output(self,response, result, spider): """ spider处理完成,返回时调用 :param response: :param result: :param spider: :return: 必须返回包含 Request 或 Item 对象的可迭代对象(iterable) """ return result def process_spider_exception(self,response, exception, spider): """ 异常调用 :param response: :param exception: :param spider: :return: None,继续交给后续中间件处理异常;含 Response 或 Item 的可迭代对象(iterable),交给调度器或pipeline """ return None def process_start_requests(self,start_requests, spider): """ 爬虫启动时调用 :param start_requests: :param spider: :return: 包含 Request 对象的可迭代对象 """ return start_requests 爬虫中间件

- 调度中间件(Scheduler Middewares)

介于Scrapy引擎和调度之间的中间件,从Scrapy引擎发送到调度的请求和响应。

Scrapy运行流程大概如下:

- 引擎从调度器中取出一个链接(URL)用于接下来的抓取

- 引擎把URL封装成一个请求(Request)传给下载器

- 下载器把资源下载下来,并封装成应答包(Response)

- 爬虫解析Response

- 解析出实体(Item),则交给实体管道进行进一步的处理

- 解析出的是链接(URL),则把URL交给调度器等待抓取

-

Scrapy的安装

Windows

a. pip3 install wheel(使pip能够安装.whl文件)

b. 下载twisted http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

c. 进入下载目录,执行 pip3 install Twisted‑17.1.0‑cp35‑cp35m‑win_amd64.whl

d. pip3 install scrapy

e. 下载并安装pywin32:https://sourceforge.net/projects/pywin32/files/

Linux

pip install scrapy

1、基本命令

scrapy startproject xdb 创建项目

cd xdb

scrapy genspider chouti chouti.com 创建爬虫

scrapy crawl chouti --nolog 启动爬虫

2、爬虫目录结构

project_name/

scrapy.cfg

project_name/

__init__.py

items.py

pipelines.py

settings.py

spiders/

__init__.py

爬虫1.py

爬虫2.py

爬虫3.py

爬取抽屉的新闻链接

spider

# -*- coding: utf-8 -*-

import scrapy

from scrapy.http import Request

from ..items import FirstspiderItem

class ChoutiSpider(scrapy.Spider):

name = 'chouti'

allowed_domains = ['chouti.com']

start_urls = ['http://chouti.com/']

def parse(self, response):

# print('response.request.url',response.request.url)

# print(response,type(response)) # 对象

# print(response.text.strip())

"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text,'html.parser')

content_list = soup.find('div',attrs={'id':'content-list'})

"""

# 去子孙中找div并且id=content-list

# f = open('news.log', mode='a+')

item_list = response.xpath('//div[@id="content-list"]/div[@class="item"]')

for item in item_list:

text = item.xpath('.//a/text()').extract_first()

href = item.xpath('.//a/@href').extract_first()

# f.write(href+'\n')

yield FirstspiderItem(href=href)

# f.close()

page_list = response.xpath('//div[@id="dig_lcpage"]//a/@href').extract()

for page in page_list:

url_1 = "https://dig.chouti.com" + page

yield Request(url=url_1,callback=self.parse,) # https://dig.chouti.com/all/hot/recent/2

items

import scrapy

class FirstspiderItem(scrapy.Item):

# define the fields for your item here like:

href = scrapy.Field()

pipelines

class FirstspiderPipeline(object):

def __init__(self,path):

self.f = None

self.path = path

@classmethod

def from_crawler(cls,crawler):

path = crawler.settings.get('HREF_FILE_PATH')

return cls(path)

# print('File.from_crawler')

# path = crawler.settings.get('HREF_FILE_PATH')

# return cls(path)

def open_spider(self,spider):

print('开始爬虫')

self.f =open(self.path, mode='a+')

def process_item(self, item, spider):

print(item['href'])

self.f.write(item['href']+'\n')

return item

def close_spider(self,spider):

print('结束爬虫')

self.f.close()

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html """ """ """ 源码内容: 1. 判断当前XdbPipeline类中是否有from_crawler 有: obj = XdbPipeline.from_crawler(....) 否: obj = XdbPipeline() 2. obj.open_spider() 3. obj.process_item()/obj.process_item()/obj.process_item()/obj.process_item()/obj.process_item() 4. obj.close_spider() """ from scrapy.exceptions import DropItem class FilePipeline(object): def __init__(self,path): self.f = None self.path = path @classmethod def from_crawler(cls, crawler): """ 初始化时候,用于创建pipeline对象 :param crawler: :return: """ print('File.from_crawler') path = crawler.settings.get('HREF_FILE_PATH') return cls(path) def open_spider(self,spider): """ 爬虫开始执行时,调用 :param spider: :return: """ # if spider.name == 'chouti': print('File.open_spider') self.f = open(self.path,'a+') def process_item(self, item, spider): # f = open('xx.log','a+') # f.write(item['href']+'\n') # f.close() print('File',item['href']) self.f.write(item['href']+'\n') # return item raise DropItem() def close_spider(self,spider): """ 爬虫关闭时,被调用 :param spider: :return: """ print('File.close_spider') self.f.close() class DbPipeline(object): def __init__(self,path): self.f = None self.path = path @classmethod def from_crawler(cls, crawler): """ 初始化时候,用于创建pipeline对象 :param crawler: :return: """ print('DB.from_crawler') path = crawler.settings.get('HREF_DB_PATH') return cls(path) def open_spider(self,spider): """ 爬虫开始执行时,调用 :param spider: :return: """ print('Db.open_spider') self.f = open(self.path,'a+') def process_item(self, item, spider): # f = open('xx.log','a+') # f.write(item['href']+'\n') # f.close() print('Db',item) # self.f.write(item['href']+'\n') return item def close_spider(self,spider): """ 爬虫关闭时,被调用 :param spider: :return: """ print('Db.close_spider') self.f.close()

settings中引擎配置

ROBOTSTXT_OBEY = False

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'

pipelines在settings的配置

ITEM_PIPELINES={

'Firstspider.pipelines.FirstspiderPipeline': 300

}

# 每行后面的整型值,确定了他们运行的顺序,item按数字从低到高的顺序,通过pipeline,通常将这些数字定义在0-1000范围内。

HREF_FILE_PATH='new.log'

关于window编码

import sys,os

sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding='gb18030')

抽屉爬虫点赞

cookie的使用

yield Request(url=url, callback=self.login, meta={'cookiejar': True})

#-*- coding: utf-8 -*-

import os,io,sys

sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding='gb18030')

import scrapy

from scrapy.http import Request

from scrapy.http.cookies import CookieJar

class ChoutizanSpider(scrapy.Spider):

name = 'choutizan'

# allowed_domains = ['chouti.com']

start_urls = ['http://chouti.com/']

cookie_dict = {}

def parse(self, response):

print('parse开始')

cookie_jar = CookieJar()

cookie_jar.extract_cookies(response,response.request)

for k,v in cookie_jar._cookies.items():

for i,j in v.items():

for m,n in j.items():

self.cookie_dict[m] = n.value

yield Request(url='http://chouti.com/',callback=self.login)

def login(self,response):

print('qwert')

yield Request(

url='https://dig.chouti.com/login',

method='POST',

headers={

'Content-Type':'application/x-www-form-urlencoded; charset=UTF-8'

},

cookies=self.cookie_dict,

body="phone=8615339953615&password=w11111111&oneMonth=1",

callback=self.check_login

)

def check_login(self,response):

print(response.text)

yield Request(

url='https://dig.chouti.com/all/hot/recent/1',

cookies=self.cookie_dict,

callback=self.dianzan

)

def dianzan(self,response):

print('执行dianzan')

response_list = response.xpath('//div[@id="content-list"]/div[@class="item"]/div[@class="news-content"]/div[@class="part2"]/@share-linkid').extract()

# for new in response_list:

# new.xpath('/')

# print(response_list)

for new in response_list:

url1 ='https://dig.chouti.com/link/vote?linksId='+new

print(url1)

yield Request(

url=url1,

method='POST',

cookies=self.cookie_dict,

callback=self.check_dianzan

)

url_list = response.xpath('//div[@id="dig_lcpage"]//a/@href').extract()

for url_1 in url_list:

page = 'https://dig.chouti.com'+url_1

yield Request(

url=page,

callback=self.dianzan

)

def check_dianzan(self,response):

print(response.text)

# page_list = response.xpath('//div[@id="dig_lcpage"]//a/@href').extract()

# for page in page_list:

#

# url_1 = "https://dig.chouti.com" + page

# print(url_1)

# yield Request(url=url_1,callback=self.dianzan,) # https://dig.chouti.com/all/hot/recent/2

爬虫去重控制

dupefilters.py 中request_seen编写正确的逻辑,dont_filter=False

from scrapy.dupefilter import BaseDupeFilter

from scrapy.utils.request import request_fingerprint

class XdbDupeFilter(BaseDupeFilter):

def __init__(self):

self.visited_fd = set()

@classmethod

def from_settings(cls, settings):

return cls()

def request_seen(self, request):

fd = request_fingerprint(request=request)

if fd in self.visited_fd:

return True

self.visited_fd.add(fd)

def open(self): # can return deferred

print('开始')

def close(self, reason): # can return a deferred

print('结束')

# def log(self, request, spider): # log that a request has been filtered

# print('日志')

dupefilters.py的settings中:

# 修改默认的去重规则 # DUPEFILTER_CLASS = 'scrapy.dupefilter.RFPDupeFilter' DUPEFILTER_CLASS = 'xdb.dupefilters.XdbDupeFilter'

配置文件中限制深度

# 限制深度 DEPTH_LIMIT = 3

取url的深度:print(response.request.url, response.meta.get('depth',0))

scrapy解析器

from scrapy.http import HtmlResponse

from scrapy.selector import Selector

response = HtmlResponse(url='http://example.com', body=html,encoding='utf-8')

# hxs = Selector(response)

# hxs.xpath()

response.xpath('')

起始请求定制 start_requests

内置代理

自定义代理 proxy.py中

# by luffycity.com

import base64

import random

from six.moves.urllib.parse import unquote

try:

from urllib2 import _parse_proxy

except ImportError:

from urllib.request import _parse_proxy

from six.moves.urllib.parse import urlunparse

from scrapy.utils.python import to_bytes

class XdbProxyMiddleware(object):

def _basic_auth_header(self, username, password):

user_pass = to_bytes(

'%s:%s' % (unquote(username), unquote(password)),

encoding='latin-1')

return base64.b64encode(user_pass).strip()

def process_request(self, request, spider):

PROXIES = [

"http://root:woshiniba@192.168.11.11:9999/",

"http://root:woshiniba@192.168.11.12:9999/",

"http://root:woshiniba@192.168.11.13:9999/",

"http://root:woshiniba@192.168.11.14:9999/",

"http://root:woshiniba@192.168.11.15:9999/",

"http://root:woshiniba@192.168.11.16:9999/",

]

url = random.choice(PROXIES)

orig_type = ""

proxy_type, user, password, hostport = _parse_proxy(url)

proxy_url = urlunparse((proxy_type or orig_type, hostport, '', '', '', ''))

if user:

creds = self._basic_auth_header(user, password)

else:

creds = None

request.meta['proxy'] = proxy_url

if creds:

request.headers['Proxy-Authorization'] = b'Basic ' + creds

class DdbProxyMiddleware(object):

def process_request(self, request, spider):

PROXIES = [

{'ip_port': '111.11.228.75:80', 'user_pass': ''},

{'ip_port': '120.198.243.22:80', 'user_pass': ''},

{'ip_port': '111.8.60.9:8123', 'user_pass': ''},

{'ip_port': '101.71.27.120:80', 'user_pass': ''},

{'ip_port': '122.96.59.104:80', 'user_pass': ''},

{'ip_port': '122.224.249.122:8088', 'user_pass': ''},

]

proxy = random.choice(PROXIES)

if proxy['user_pass'] is not None:

request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port'])

encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))

request.headers['Proxy-Authorization'] = to_bytes('Basic ' + encoded_user_pass)

else:

request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port'])

settings的配置

DOWNLOADER_MIDDLEWARES = {

#'xdb.middlewares.XdbDownloaderMiddleware': 543,

'xdb.proxy.XdbProxyMiddleware':751,

}

自定制命令

单个爬虫

import sys

from scrapy.cmdline import execute

if __name__ == '__main__':

execute(["scrapy","crawl",“chouti”,"--nolog"])

多个爬虫

- 在spiders同级创建任意目录,如:commands

- 在其中创建 crawlall.py 文件 (此处文件名就是自定义的命令)

from scrapy.commands import ScrapyCommand

from scrapy.utils.project import get_project_settings

class Command(ScrapyCommand):

requires_project = True

def syntax(self):

return '[options]'

def short_desc(self):

return 'Runs all of the spiders'

def run(self, args, opts):

spider_list = self.crawler_process.spiders.list()

for name in spider_list:

self.crawler_process.crawl(name, **opts.__dict__)

self.crawler_process.start()

- 在settings.py 中添加配置 COMMANDS_MODULE = '项目名称.目录名称'

- 在项目目录执行命令:scrapy crawlall

爬虫信号

# by luffycity.com

from scrapy import signals

class MyExtend(object):

def __init__(self):

pass

@classmethod

def from_crawler(cls, crawler):

self = cls()

crawler.signals.connect(self.x1, signal=signals.spider_opened)

crawler.signals.connect(self.x2, signal=signals.spider_closed)

return self

def x1(self, spider):

print('open')

def x2(self, spider):

print('close')

信号中settings的配置

EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

'xdb.ext.MyExtend':666,

}

爬虫基于scrapy-redis的去重

settings的配置

# ############### scrapy redis连接 ####################

REDIS_HOST = '140.143.227.206' # 主机名

REDIS_PORT = 8888 # 端口

REDIS_PARAMS = {'password':'beta'} # Redis连接参数 默认:REDIS_PARAMS = {'socket_timeout': 30,'socket_connect_timeout': 30,'retry_on_timeout': True,'encoding': REDIS_ENCODING,})

REDIS_ENCODING = "utf-8" # redis编码类型 默认:'utf-8'

# REDIS_URL = 'redis://user:pass@hostname:9001' # 连接URL(优先于以上配置)

# ############### scrapy redis去重 ####################

DUPEFILTER_KEY = 'dupefilter:%(timestamp)s'

# DUPEFILTER_CLASS = 'scrapy_redis.dupefilter.RFPDupeFilter'

DUPEFILTER_CLASS = 'dbd.xxx.RedisDupeFilter'

from scrapy.dupefilter import BaseDupeFilter

import redis

from scrapy.utils.request import request_fingerprint

import scrapy_redis

class DupFilter(BaseDupeFilter):

def __init__(self):

self.conn = redis.Redis(host='140.143.227.206',port=8888,password='beta')

def request_seen(self, request):

"""

检测当前请求是否已经被访问过

:param request:

:return: True表示已经访问过;False表示未访问过

"""

fid = request_fingerprint(request)

result = self.conn.sadd('visited_urls', fid)

if result == 1:

return False

return True

###############以上为完全自定义的去重########################

###############以下为继承scrapy-redis的去重########################

from scrapy_redis.dupefilter import RFPDupeFilter

from scrapy_redis.connection import get_redis_from_settings

from scrapy_redis import defaults

class RedisDupeFilter(RFPDupeFilter):

@classmethod

def from_settings(cls, settings):

"""Returns an instance from given settings.

This uses by default the key ``dupefilter:<timestamp>``. When using the

``scrapy_redis.scheduler.Scheduler`` class, this method is not used as

it needs to pass the spider name in the key.

Parameters

----------

settings : scrapy.settings.Settings

Returns

-------

RFPDupeFilter

A RFPDupeFilter instance.

"""

server = get_redis_from_settings(settings)

# XXX: This creates one-time key. needed to support to use this

# class as standalone dupefilter with scrapy's default scheduler

# if scrapy passes spider on open() method this wouldn't be needed

# TODO: Use SCRAPY_JOB env as default and fallback to timestamp.

key = defaults.DUPEFILTER_KEY % {'timestamp': 'xiaodongbei'}

debug = settings.getbool('DUPEFILTER_DEBUG')

return cls(server, key=key, debug=debug)

I can feel you forgetting me。。 有一种默契叫做我不理你,你就不理我