Deploying Kubernetes with kubeadm and an underlay network

#Run on all nodes:

#Set the hostname (use each node's own name)

hostnamectl set-hostname k8s-master-101

#Configure /etc/hosts

[root@k8s-master-101 ~]#cat >> /etc/hosts <<EOF

10.0.0.101 k8s-master-101

10.0.0.102 k8s-master-102

10.0.0.103 k8s-master-103

10.0.0.104 k8s-node

EOF
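Because cat >> appends unconditionally, running the step above twice duplicates the entries. A minimal sketch of an idempotent variant follows. The function name add_host_entry is hypothetical, and the file path is a parameter so it can be tried on a copy before touching /etc/hosts.

```shell
# Append "ip name" to a hosts-style file unless some line already lists that name.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Compare the name against every hostname field (field 2 onward) exactly.
  if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (run as root):
#   add_host_entry /etc/hosts 10.0.0.101 k8s-master-101
```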

#Disable swap: comment out the swap entry in /etc/fstab, then turn swap off for the running system

[root@k8s-master-101 ~]#vim /etc/fstab

swapoff -a
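The manual vim edit amounts to commenting out the swap line in /etc/fstab. A non-interactive sketch of that edit is shown here. The function name disable_swap_in_fstab is hypothetical, and it assumes swap entries have "swap" as a whitespace-separated field.

```shell
# Comment out every active swap entry in an fstab-style file.
# The path defaults to /etc/fstab but can point at a copy for a dry run.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp="$(mktemp)"
  # Prefix '#' to lines that are not already comments and contain a "swap" field.
  sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Example (run as root):
#   disable_swap_in_fstab /etc/fstab && swapoff -a
```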

2. Install Docker

#Install prerequisite packages

apt update

apt -y install apt-transport-https ca-certificates curl software-properties-common

#Add the repository GPG key

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

#Add the package repository

add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

#Refresh the package index

apt update

#List the available Docker versions

apt-cache madison docker-ce docker-ce-cli

#Install Docker

apt install docker-ce docker-ce-cli -y

systemctl start docker && systemctl enable docker

#Configure a registry mirror and switch Docker to the systemd cgroup driver

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload && systemctl restart docker
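kubeadm configures the kubelet for the systemd cgroup driver by default, so it can be worth confirming that Docker picked up the setting after the restart. The sketch below is one way to check. The function name uses_systemd_cgroup is hypothetical, and the optional argument exists only so the check can be exercised without a running daemon.

```shell
# Succeed when the cgroup driver is "systemd".
# With no argument, the value is read from the running Docker daemon.
uses_systemd_cgroup() {
  local driver="${1:-$(docker info --format '{{.CgroupDriver}}')}"
  [ "$driver" = "systemd" ]
}

# Example:
#   uses_systemd_cgroup && echo "cgroup driver OK"
```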

Install cri-dockerd

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd-0.2.6.amd64.tgz

tar xf cri-dockerd-0.2.6.amd64.tgz

cp cri-dockerd/cri-dockerd /usr/local/bin/

#Create cri-docker.service (the heredoc delimiter is quoted so that $MAINPID is written literally)

cat > /lib/systemd/system/cri-docker.service <<'EOF'

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF
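One detail worth checking when a unit file is written through a heredoc: with an unquoted delimiter the shell expands $MAINPID before the file is written, so ExecReload ends up without a PID. A minimal sketch of the difference, using two hypothetical helper functions and a one-line fragment rather than the full unit:

```shell
# Quoted delimiter: the body is written literally, so $MAINPID survives
# and systemd can substitute it at runtime.
write_literal() {
  cat > "$1" <<'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
}

# Unquoted delimiter: the shell substitutes the variable first.
# ${MAINPID:-} expands like $MAINPID here but stays safe under "set -u".
write_expanded() {
  cat > "$1" <<EOF
ExecReload=/bin/kill -s HUP ${MAINPID:-}
EOF
}
```

After writing the real unit, grep MAINPID /lib/systemd/system/cri-docker.service should print the ExecReload line with the variable intact.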

#Create cri-docker.socket

cat > /etc/systemd/system/cri-docker.socket <<EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

systemctl enable --now cri-docker cri-docker.socket

3. Install Kubernetes

apt-get install -y apt-transport-https

#Configure the Kubernetes package repository from the Aliyun mirror

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

#Install kubelet, kubeadm, and kubectl

apt update

apt-cache madison kubeadm

apt-get install -y kubelet=1.25.3-00 kubeadm=1.25.3-00 kubectl=1.25.3-00

#List the required images

kubeadm config images list --kubernetes-version v1.25.3

registry.k8s.io/kube-apiserver:v1.25.3

registry.k8s.io/kube-controller-manager:v1.25.3

registry.k8s.io/kube-scheduler:v1.25.3

registry.k8s.io/kube-proxy:v1.25.3

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.4-0

registry.k8s.io/coredns/coredns:v1.9.3

#Create a script that pulls the images from the Aliyun mirror

cat > download-k8s-images.sh <<EOF

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.25.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.25.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.25.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.25.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3

EOF

#Run the script

bash download-k8s-images.sh

#Retag the Aliyun mirror images with their registry.k8s.io names

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.25.3 registry.k8s.io/kube-apiserver:v1.25.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.25.3 registry.k8s.io/kube-controller-manager:v1.25.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.25.3 registry.k8s.io/kube-scheduler:v1.25.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.25.3 registry.k8s.io/kube-proxy:v1.25.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8 registry.k8s.io/pause:3.8

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0 registry.k8s.io/etcd:3.5.4-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3 registry.k8s.io/coredns/coredns:v1.9.3
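The pull and tag lists above follow one pattern: take the last path component of each upstream image and look it up under the mirror's google_containers namespace. The sketch below generates both commands from that pattern. The function name mirror_commands is hypothetical, and it only prints commands so the output can be reviewed before anything is executed.

```shell
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Print the docker pull and docker tag commands for one upstream image reference.
mirror_commands() {
  local upstream="$1"
  # Keep only the last path component, e.g.
  # registry.k8s.io/coredns/coredns:v1.9.3 -> coredns:v1.9.3
  local name="${upstream##*/}"
  echo "docker pull ${MIRROR}/${name}"
  echo "docker tag ${MIRROR}/${name} ${upstream}"
}

# Example: print the commands for every image kubeadm needs.
#   kubeadm config images list --kubernetes-version v1.25.3 |
#     while read -r img; do mirror_commands "$img"; done
```

Piping the printed commands into bash would run them; reviewing the output first is the safer default.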

#Initialize the cluster (run on k8s-master-101 only)

kubeadm init --control-plane-endpoint "10.0.0.101" \

--upload-certs \

--apiserver-advertise-address=10.0.0.101 \

--apiserver-bind-port=6443 \

--kubernetes-version=v1.25.3 \

--pod-network-cidr=10.200.0.0/16 \

--service-cidr=10.100.0.0/16 \

--service-dns-domain=cluster.local \

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \

--cri-socket unix:///var/run/cri-dockerd.sock

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.0.0.101:6443 --token kr7s59.5dkj81fg5wo47z58 \

--discovery-token-ca-cert-hash sha256:40511f956a5ac5744f42b58f13d1925abd1355185bae2628bdb2b2f8d0d9846a \

--control-plane --certificate-key 824675e469c7ec128ee541dccb44cd947d34c0c7b1874f05039b419a847ac826

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.101:6443 --token kr7s59.5dkj81fg5wo47z58 \

--discovery-token-ca-cert-hash sha256:40511f956a5ac5744f42b58f13d1925abd1355185bae2628bdb2b2f8d0d9846a

#Join the other two masters to the cluster

kubeadm join 10.0.0.101:6443 --token kr7s59.5dkj81fg5wo47z58 --discovery-token-ca-cert-hash sha256:40511f956a5ac5744f42b58f13d1925abd1355185bae2628bdb2b2f8d0d9846a --control-plane --certificate-key 824675e469c7ec128ee541dccb44cd947d34c0c7b1874f05039b419a847ac826 --cri-socket unix:///var/run/cri-dockerd.sock

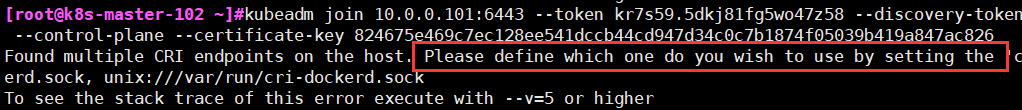

Note: if --cri-socket unix:///var/run/cri-dockerd.sock is omitted, the join fails with the following error:

kubeadm join 10.0.0.101:6443 --token kr7s59.5dkj81fg5wo47z58 --discovery-token-ca-cert-hash sha256:4051 --control-plane --certificate-key 824675e469c7ec128ee541dccb44cd947d34c0c7b1874f05039b419a847ac826

Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'crerd.sock, unix:///var/run/cri-dockerd.sock

To see the stack trace of this error execute with --v=5 or higher
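Since the join command printed by kubeadm never includes the CRI socket, it has to be appended by hand on hosts with more than one runtime endpoint. A small sketch of that step follows. The function name with_cri_socket is hypothetical; kubeadm token create --print-join-command is the standard way to print a fresh worker join command once the original token has expired.

```shell
CRI_SOCKET="unix:///var/run/cri-dockerd.sock"

# Print a join command with --cri-socket appended, unless it already has one.
with_cri_socket() {
  case "$1" in
    *--cri-socket*) printf '%s\n' "$1" ;;
    *) printf '%s --cri-socket %s\n' "$1" "$CRI_SOCKET" ;;
  esac
}

# Example (on a control-plane node):
#   with_cri_socket "$(kubeadm token create --print-join-command)"
```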

#Join the worker node to the cluster:

kubeadm join 10.0.0.101:6443 --token kr7s59.5dkj81fg5wo47z58 \

--discovery-token-ca-cert-hash sha256:40511f956a5ac5744f42b58f13d1925abd1355185bae2628bdb2b2f8d0d9846a --cri-socket unix:///var/run/cri-dockerd.sock

[root@k8s-master-101 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-101 NotReady control-plane 27m v1.25.3

k8s-master-102 NotReady control-plane 2m25s v1.25.3

k8s-master-103 NotReady control-plane 59s v1.25.3

k8s-node NotReady <none> 30s v1.25.3
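All nodes report NotReady at this point because no pod network has been deployed yet. To re-check after the network component is installed, a filter like the following can list whichever nodes are still not Ready. The function name not_ready_nodes is hypothetical, and it treats any status other than exactly "Ready" (including "Ready,SchedulingDisabled") as not ready.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes
```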

[root@k8s-master-101 ~]#kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f8cbcb969-6hbx5 0/1 Pending 0 28m

kube-system coredns-7f8cbcb969-r4sg4 0/1 Pending 0 28m

kube-system etcd-k8s-master-101 1/1 Running 0 28m

kube-system etcd-k8s-master-102 1/1 Running 0 2m59s

kube-system etcd-k8s-master-103 1/1 Running 0 84s

kube-system kube-apiserver-k8s-master-101 1/1 Running 0 28m

kube-system kube-apiserver-k8s-master-102 1/1 Running 0 3m

kube-system kube-apiserver-k8s-master-103 1/1 Running 0 72s

kube-system kube-controller-manager-k8s-master-101 1/1 Running 1 (2m48s ago) 28m

kube-system kube-controller-manager-k8s-master-102 1/1 Running 0 3m

kube-system kube-controller-manager-k8s-master-103 1/1 Running 0 83s

kube-system kube-proxy-9bpzk 1/1 Running 0 3m1s

kube-system kube-proxy-gc6gr 1/1 Running 0 28m

kube-system kube-proxy-qbfql 1/1 Running 0 95s

kube-system kube-proxy-z9jt9 1/1 Running 0 66s

kube-system kube-scheduler-k8s-master-101 1/1 Running 1 (2m48s ago) 28m

kube-system kube-scheduler-k8s-master-102 1/1 Running 0 3m

kube-system kube-scheduler-k8s-master-103 1/1 Running 0 80s

4. Install the underlay network component

4-1. Prepare Helm

Helm releases: https://github.com/helm/helm/releases

tar xvf helm-v3.9.0-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/

helm version

4-2. Deploy hybridnet

[root@k8s-master-101 ~]#helm repo add hybridnet https://alibaba.github.io/hybridnet

"hybridnet" has been added to your repositories

[root@k8s-master-101 ~]#helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "hybridnet" chart repository

Update Complete. ⎈Happy Helming!⎈

#Configure the overlay pod network. If --set init.cidr=10.200.0.0/16 is not specified, the default 100.64.0.0/16 is used

[root@k8s-master-101 ~]#helm install hybridnet hybridnet/hybridnet -n kube-system --set init.cidr=10.200.0.0/16

NAME: hybridnet

LAST DEPLOYED: Fri Nov 4 13:54:02 2022

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@k8s-master-101 ~]#kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master-101 Ready control-plane 15h v1.25.3 10.0.0.101 <none> Ubuntu 20.04.4 LTS 5.4.0-125-generic docker://20.10.21

k8s-master-102 Ready control-plane 14h v1.25.3 10.0.0.102 <none> Ubuntu 20.04.4 LTS 5.4.0-126-generic docker://20.10.21

k8s-master-103 Ready control-plane 14h v1.25.3 10.0.0.103 <none> Ubuntu 20.04.4 LTS 5.4.0-124-generic docker://20.10.21

k8s-node Ready <none> 14h v1.25.3 10.0.0.104 <none> Ubuntu 20.04.4 LTS 5.4.0-124-generic docker://20.10.21

[root@k8s-master-101 ~]#kubectl label node k8s-master-101 node-role.kubernetes.io/master=

[root@k8s-master-101 ~]#kubectl label node k8s-master-102 node-role.kubernetes.io/master=

[root@k8s-master-101 ~]#kubectl label node k8s-master-103 node-role.kubernetes.io/master=

[root@k8s-master-101 ~]#mkdir /root/hybridnet

[root@k8s-master-101 ~]#cd hybridnet/

[root@k8s-master-101 hybridnet]#kubectl label node k8s-master-101 network=underlay-nethost

node/k8s-master-101 labeled

[root@k8s-master-101 hybridnet]#kubectl label node k8s-master-102 network=underlay-nethost

node/k8s-master-102 labeled

[root@k8s-master-101 hybridnet]#kubectl label node k8s-master-103 network=underlay-nethost

node/k8s-master-103 labeled

[root@k8s-master-101 hybridnet]#kubectl label node k8s-node network=underlay-nethost

[root@k8s-master-101 hybridnet]#kubectl get node --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master-101 Ready control-plane,master 20h v1.25.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master-101,kubernetes.io/os=linux,network=underlay-nethost,networking.alibaba.com/overlay-network-attachment=true,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-master-102 Ready control-plane,master 20h v1.25.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master-102,kubernetes.io/os=linux,network=underlay-nethost,networking.alibaba.com/overlay-network-attachment=true,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-master-103 Ready control-plane,master 20h v1.25.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master-103,kubernetes.io/os=linux,network=underlay-nethost,networking.alibaba.com/overlay-network-attachment=true,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-node Ready <none> 20h v1.25.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node,kubernetes.io/os=linux,network=underlay-nethost,networking.alibaba.com/overlay-network-attachment=true

[root@k8s-master-101 hybridnet]#vim 1.create-underlay-network.yaml

[root@k8s-master-101 hybridnet]#kubectl apply -f 1.create-underlay-network.yaml

[root@k8s-master-101 hybridnet]#kubectl get network

[root@k8s-master-101 hybridnet]#kubectl get subnet

[root@k8s-master-101 hybridnet]#kubectl describe node k8s-master-101

[root@k8s-master-101 hybridnet]#vim 2.tomcat-app1-overlay.yaml

[root@k8s-master-101 hybridnet]#kubectl create ns myserver

namespace/myserver created

[root@k8s-master-101 hybridnet]#kubectl apply -f 2.tomcat-app1-overlay.yaml

deployment.apps/myserver-tomcat-app1-deployment-overlay created

service/myserver-tomcat-app1-service-overlay created

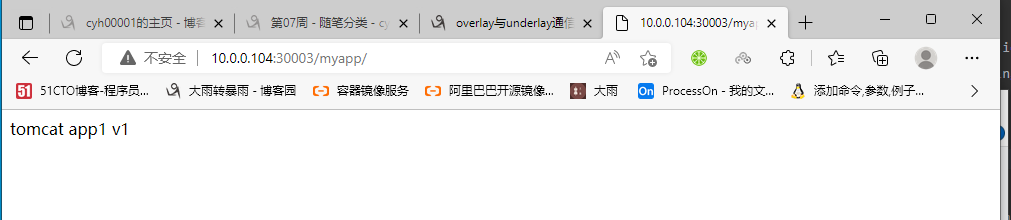

[root@k8s-master-101 hybridnet]#kubectl get pod -n myserver -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myserver-tomcat-app1-deployment-overlay-596784cdc7-xjnv6 1/1 Running 0 2m25s 10.200.0.3 k8s-node <none> <none>

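#Open the node IP plus the service port in a browser:

This article is from cnblogs (博客园), author: 大雨转暴雨. Please cite the original link when reposting: https://www.cnblogs.com/wdy001/p/16859001.html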