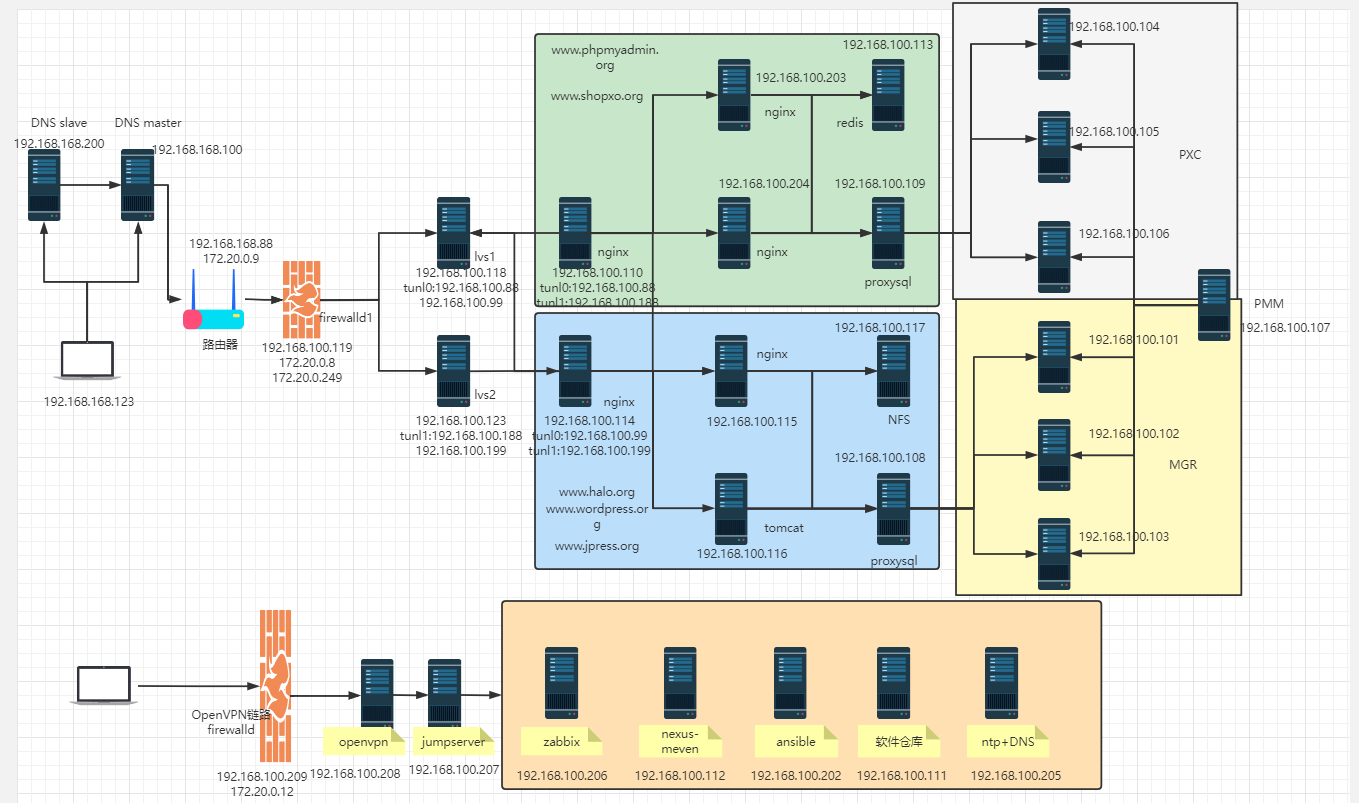

Comprehensive Lab: Small-to-Medium Architecture

| Server | IP address | Role | OS version |
|---|---|---|---|
| MGR database | 192.168.100.101 | MGR cluster database, master node | Rocky 8.6 |
| MGR database | 192.168.100.102 | MGR cluster database, slave node | Rocky 8.6 |
| MGR database | 192.168.100.103 | MGR cluster database, slave node | Rocky 8.6 |
| PXC database | 192.168.100.104 | PXC cluster database 1 | Rocky 8.6 |
| PXC database | 192.168.100.105 | PXC cluster database 2 | Rocky 8.6 |
| PXC database | 192.168.100.106 | PXC cluster database 3 | Rocky 8.6 |
| PMM | 192.168.100.107 | PMM database monitoring | Rocky 8.6 |
| proxysql | 192.168.100.108 | ProxySQL read/write splitting 1 | Rocky 8.6 |
| proxysql | 192.168.100.109 | ProxySQL read/write splitting 2 | Rocky 8.6 |
| Nginx reverse proxy | 192.168.100.110 | Nginx reverse-proxy load balancer | Rocky 8.6 |
| repo software repository | 192.168.100.111 | repo software repository | Rocky 8.6 |
| nexus | 192.168.100.112 | Nexus software repository | Rocky 8.6 |
| redis | 192.168.100.113 | Redis session persistence | Rocky 8.6 |
| Nginx reverse proxy | 192.168.100.114 | Nginx reverse-proxy load balancer 2 | Rocky 8.6 |
| Web server | 192.168.100.115 | Web server: JPress + WordPress | Rocky 8.6 |
| Web server | 192.168.100.116 | Web server: JPress + WordPress | Rocky 8.6 |
| NFS | 192.168.100.117 | NFS | Rocky 8.6 |
| LVS1 | 192.168.100.118 | LVS layer-4 load balancing | Rocky 8.6 |
| Firewall 1 | 192.168.100.119 | Firewall | Rocky 8.6 |
| ansible | 192.168.100.202 | Ansible control node | Ubuntu 20.04 |
| Web server | 192.168.100.203 | Web server: ShopXO + phpMyAdmin | Ubuntu 20.04 |
| Web server | 192.168.100.204 | Web server: ShopXO + phpMyAdmin | Ubuntu 20.04 |
| ntp+DNS | 192.168.100.205 | NTP time server + DNS server | Ubuntu 20.04 |
| zabbix | 192.168.100.206 | Zabbix monitoring server | Ubuntu 20.04 |
| jumpserver | 192.168.100.207 | JumpServer bastion host | Ubuntu 20.04 |
| openvpn | 192.168.100.208 | OpenVPN server | Ubuntu 20.04 |
| Firewall 2 | 192.168.100.209 | Firewall for the OpenVPN link | Ubuntu 20.04 |
| Client router | 192.168.168.88 | Client router | Rocky 8.6 |
| Client DNS primary | 192.168.168.100 | Client DNS primary | Rocky 8.6 |
| Client DNS secondary | 192.168.168.200 | Client DNS secondary | Rocky 8.6 |
| Client | 192.168.168.123 | Testing | Rocky 8.6 |

2. Configure MGR

# All MGR cluster nodes (192.168.100.101, 192.168.100.102, 192.168.100.103):

# On Ubuntu, also change the bind address to 0.0.0.0 in the configuration file

[root@node101 ~]# vim /etc/my.cnf.d/mysql-server.cnf

[mysqld]

server-id=101 # must be unique per node (e.g. 102 and 103 on the other two nodes)

gtid_mode=ON

enforce_gtid_consistency=ON

default_authentication_plugin=mysql_native_password

binlog_checksum=NONE

loose-group_replication_group_name="89c0ed5c-fd34-4a77-94da-e94a90ea4932"

loose-group_replication_start_on_boot=OFF

loose-group_replication_local_address="192.168.100.101:24901" # use each node's own IP address

loose-group_replication_group_seeds="192.168.100.101:24901,192.168.100.102:24901,192.168.100.103:24901"

loose-group_replication_bootstrap_group=OFF

loose-group_replication_recovery_use_ssl=ON
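The my.cnf above shows the values for node 101. A minimal sketch of deriving the node-specific entries for the other members follows; the function name mgr_node_settings is hypothetical, and it assumes server-id is simply the last octet of the node IP, as in this lab.

```shell
# Hypothetical helper: print the MGR settings that carry a node-specific value.
# Assumption: server-id is the last octet of the node IP (101, 102, 103).
mgr_node_settings() {
    ip="$1"
    last_octet="${ip##*.}"          # strip everything up to the last dot
    printf 'server-id=%s\n' "$last_octet"
    printf 'loose-group_replication_local_address="%s:24901"\n' "$ip"
}

# Print the two lines to change on each member; the rest of [mysqld] stays identical.
for node in 192.168.100.101 192.168.100.102 192.168.100.103; do
    printf '# %s\n' "$node"
    mgr_node_settings "$node"
done
```

The group name and the seed list are deliberately left out, since they are the same on every member.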

# All MGR cluster nodes (192.168.100.101, 192.168.100.102, 192.168.100.103):

mysql> set sql_log_bin=0;

mysql> create user repluser@'192.168.100.%' identified by '123456';

mysql> grant replication slave on *.* to repluser@'192.168.100.%';

mysql> flush privileges;

mysql> set sql_log_bin=1;

mysql> install plugin group_replication soname 'group_replication.so';

mysql> select * from information_schema.plugins where plugin_name='group_replication'\G

# First node (bootstraps the group), 192.168.100.101:

mysql> set global group_replication_bootstrap_group=ON;

mysql> start group_replication;

mysql> set global group_replication_bootstrap_group=OFF;

mysql> select * from performance_schema.replication_group_members;

# Remaining nodes (192.168.100.102, 192.168.100.103):

mysql> change master to master_user='repluser',master_password='123456' for channel 'group_replication_recovery';

mysql> start group_replication;

mysql> select * from performance_schema.replication_group_members;

3. Install Percona XtraDB Cluster

# All PXC cluster nodes (192.168.100.104, 192.168.100.105, 192.168.100.106): install from the Percona repository

[root@node105 ~]# dnf module disable mysql # first disable the default MySQL module

[root@node105 ~]# yum install https://repo.percona.com/yum/percona-release-latest.noarch.rpm # set up the yum repository

[root@node105 ~]# percona-release setup pxc80 # enable the pxc80 repository; this sets the PXC repository URL to http://repo.percona.com/pxc-80/yum/release/

[root@node105 ~]# yum install percona-xtradb-cluster # install Percona XtraDB Cluster

# First node (192.168.100.104): change the root password on the first node

[root@node104 ~]# systemctl start mysqld # start the Percona XtraDB Cluster service first

[root@node104 ~]# grep 'temporary password' /var/log/mysqld.log # find the temporary root password generated during installation

[root@node104 ~]# mysql -u root -p # log in to MySQL with the temporary password

mysql> alter user root@'localhost' identified by '123456'; # change the root password, then exit

mysql> exit

[root@node104 ~]# systemctl stop mysqld # stop the MySQL service

# Note

-- The password change above is needed only on the first node.

-- Once the second and third nodes are configured and started, the first node's data (including this password) is copied to them.

## 192.168.100.104: configure my.cnf (a separate configuration file can be added instead); reference: https://blog.51cto.com/dayu/5653272

[root@node104 ~]# vim /etc/my.cnf

# Template my.cnf for PXC

# Edit to your requirements.

[client]

socket=/var/lib/mysql/mysql.sock

[mysqld]

server-id=104 # change the id on each node

default_authentication_plugin=mysql_native_password

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

# Binary log expiration period is 604800 seconds, which equals 7 days

binlog_expire_logs_seconds=604800

######## wsrep ###############

# Path to Galera library

wsrep_provider=/usr/lib64/galera4/libgalera_smm.so

# Cluster connection URL contains IPs of nodes

#If no IP is found, this implies that a new cluster needs to be created,

#in order to do that you need to bootstrap this node

wsrep_cluster_address=gcomm://192.168.100.104,192.168.100.105,192.168.100.106 # set to the IP addresses of the PXC cluster nodes

# In order for Galera to work correctly binlog format should be ROW

binlog_format=ROW

# Slave thread to use

wsrep_slave_threads=8

wsrep_log_conflicts

# This changes how InnoDB autoincrement locks are managed and is a requirement for Galera

innodb_autoinc_lock_mode=2

# Node IP address

wsrep_node_address=192.168.100.104 # uncomment and set to this node's IP address

# Cluster name

wsrep_cluster_name=pxc-cluster # the cluster name is arbitrary but must be identical on all nodes

#If wsrep_node_name is not specified, then system hostname will be used

wsrep_node_name=pxc-node-104 # name of this node

#pxc_strict_mode allowed values: DISABLED,PERMISSIVE,ENFORCING,MASTER

pxc_strict_mode=ENFORCING

# SST method

wsrep_sst_method=xtrabackup-v2

pxc-encrypt-cluster-traffic=OFF # disable encrypted cluster traffic; set this according to your situation

===============================================================

## The main settings that must be changed are:

# Cluster communication addresses

wsrep_cluster_address=gcomm://192.168.100.104,192.168.100.105,192.168.100.106

# Address of this node

wsrep_node_address=192.168.100.104

# Name of this node

wsrep_node_name=pxc-node-104

# Disable encryption

pxc-encrypt-cluster-traffic=OFF

====================================================

# Apply the same settings to pxc2 and pxc3; only server-id, wsrep_node_name, and wsrep_node_address take different values

====================================================

## 192.168.100.105: configure my.cnf

[root@node105 ~]# vim /etc/my.cnf

server-id=105

default_authentication_plugin=mysql_native_password

wsrep_cluster_address=gcomm://192.168.100.104,192.168.100.105,192.168.100.106

wsrep_node_address=192.168.100.105

wsrep_node_name=pxc-node-105

pxc-encrypt-cluster-traffic=OFF

## 192.168.100.106: configure my.cnf

[root@node106 ~]# vim /etc/my.cnf

server-id=106

default_authentication_plugin=mysql_native_password

wsrep_cluster_address=gcomm://192.168.100.104,192.168.100.105,192.168.100.106

wsrep_node_address=192.168.100.106

wsrep_node_name=pxc-node-106

pxc-encrypt-cluster-traffic=OFF

# Start the first node

[root@node104 ~]# systemctl start mysql@bootstrap.service

[root@node104 ~]# ss -ntlp # check that the default port 4567 is listening

[root@node104 ~]# mysql -uroot -p # log in to MySQL

mysql> show status like 'wsrep%'; # check the cluster status

.....

wsrep_local_state_uuid | 8a8418bf-4171-11ed-82db-3bef4e0b8be8

......

wsrep_local_state_comment | Synced

......

wsrep_incoming_addresses | 192.168.100.104:3306

......

wsrep_cluster_size | 1

......

wsrep_cluster_state_uuid | 8a8418bf-4171-11ed-82db-3bef4e0b8be8

......

wsrep_cluster_status | Primary

wsrep_connected | ON

......

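wsrep_ready | ON

# Add the other nodes (192.168.100.105, 192.168.100.106):

# Note

-- The data and configuration on every other node are overwritten by the first node's data

-- Do not join several nodes at the same time, to avoid excessive data transfer and network load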

[root@node105 ~]# systemctl start mysqld

[root@node105 ~]# mysql -uroot -p

mysql> show status like 'wsrep%';

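A wsrep_cluster_size of 2 shows that the cluster now has two nodes, and a wsrep_local_state_comment of Synced shows that the node has finished synchronizing.

# Note

-- If wsrep_local_state_comment is Joiner, the node is still synchronizing; do not start the service on the third node yet.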

==========================================
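The "wait for Synced before starting the next node" rule can be checked from a script. The sketch below is only an illustration: pxc_is_synced is a hypothetical helper that reads rows of `show status like 'wsrep%'` on standard input (either the boxed `|` layout or the tab-separated batch layout) and succeeds only when wsrep_local_state_comment is Synced.

```shell
# Hypothetical helper: exit 0 only if the status rows on stdin report Synced.
pxc_is_synced() {
    awk '
        {
            gsub(/\|/, " ")                    # drop table borders, if any
            for (i = 1; i < NF; i++)
                if ($i == "wsrep_local_state_comment") state = $(i + 1)
        }
        END { if (state == "Synced") exit 0; exit 1 }
    '
}

# Example (not run here; assumes a local mysql client and the root password):
# mysql -uroot -p -e "show status like 'wsrep_local_state_comment'" | pxc_is_synced \
#     && echo "safe to start the next node"
```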

[root@node106 ~]# systemctl start mysqld

[root@node106 ~]# mysql -uroot -p123456

mysql> show status like 'wsrep%';

# References:

- https://blog.51cto.com/dayu/5653272

- https://qizhanming.com/blog/2020/06/22/how-to-install-percona-xtradb-cluster-on-centos-8

4. ProxySQL read/write splitting for the MGR cluster

# proxy1 server (192.168.100.108):

[root@node108 ~]# yum -y localinstall proxysql-2.2.0-1-centos8.x86_64.rpm

# install the previously downloaded ProxySQL package

[root@node108 ~]# systemctl enable --now proxysql

[root@node108 ~]# yum install -y mysql

[root@node108 ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032 # connect to the ProxySQL admin port; the default admin user and password are both admin, and the admin interface listens on 6032

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.101',3306);

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.102',3306);

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.103',3306);

# Database master node (192.168.100.101): create the monitor and proxysql accounts

mysql> create user monitor@'192.168.100.%' identified by '123456';

mysql> create user proxysql@'192.168.100.%' identified by '123456';

mysql> grant all privileges on *.* to monitor@'192.168.100.%';

mysql> grant all privileges on *.* to proxysql@'192.168.100.%';

# proxy server (192.168.100.108): add the monitoring account and password

mysql> set mysql-monitor_username='monitor';

mysql> set mysql-monitor_password='123456';

mysql> insert into mysql_users(username,password,active,default_hostgroup,transaction_persistent) values ('proxysql','123456',1,10,1);

# Database master node (192.168.100.101): import the ProxySQL monitoring SQL

[root@node101 ~]# vim proxysql-monitor.sql

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id)

where performance_schema.replication_group_members.member_host=@@hostname);

END$$

CREATE VIEW gr_member_routing_candidate_status AS

SELECT

sys.gr_member_in_primary_partition() AS viable_candidate,

IF((SELECT

(SELECT

GROUP_CONCAT(variable_value)

FROM

performance_schema.global_variables

WHERE

variable_name IN ('read_only' , 'super_read_only')) != 'OFF,OFF'

),

'YES',

'NO') AS read_only,

sys.gr_applier_queue_length() AS transactions_behind,

Count_Transactions_in_queue AS 'transactions_to_cert'

FROM

performance_schema.replication_group_member_stats a

JOIN

performance_schema.replication_group_members b ON a.member_id = b.member_id

WHERE

b.member_host IN (SELECT

variable_value

FROM

performance_schema.global_variables

WHERE

variable_name = 'hostname')$$

DELIMITER ;

[root@node101 ~]# mysql < proxysql-monitor.sql

# proxy server (192.168.100.108): configure the read/write hostgroups

mysql> insert into mysql_group_replication_hostgroups (writer_hostgroup,backup_writer_hostgroup,reader_hostgroup, offline_hostgroup,active,max_writers,writer_is_also_reader,max_transactions_behind) values (10,20,30,40,1,1,0,100);

Query OK, 1 row affected (0.00 sec)

mysql> load mysql servers to runtime;

Query OK, 0 rows affected (0.02 sec)

mysql> save mysql servers to disk;

Query OK, 0 rows affected (0.04 sec)

mysql> load mysql users to runtime;

Query OK, 0 rows affected (0.00 sec)

mysql> save mysql users to disk;

Query OK, 0 rows affected (0.01 sec)

mysql> load mysql variables to runtime;

Query OK, 0 rows affected (0.00 sec)

mysql> save mysql variables to disk;

Query OK, 140 rows affected (0.01 sec)
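To read the hostgroup row above (writer 10, backup writer 20, reader 30, offline 40, max_transactions_behind 100), it can help to see how one row of sys.gr_member_routing_candidate_status would be classified. The sketch below is a simplified mental model and an assumption, not ProxySQL's implementation: classify_member is a hypothetical function, backup writers are ignored, and lagging readers are simply reported as shunned.

```shell
# Simplified model (assumption): map viable_candidate, read_only and
# transactions_behind from the monitoring view to a hostgroup.
classify_member() {
    viable="$1"; read_only="$2"; behind="$3"
    max_behind=100                      # max_transactions_behind from the row above
    if [ "$viable" != "YES" ]; then
        echo "40 offline"               # not in the primary partition
    elif [ "$read_only" = "NO" ]; then
        echo "10 writer"                # writable member
    elif [ "$behind" -gt "$max_behind" ]; then
        echo "shunned"                  # reader too far behind to serve reads
    else
        echo "30 reader"
    fi
}

classify_member YES NO 0      # the primary
classify_member YES YES 3     # a healthy secondary
```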

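# proxy server (192.168.100.108): configure the read/write query rules

mysql> insert into mysql_query_rules(rule_id,active,match_digest,destination_hostgroup,apply) VALUES (1,1,'^SELECT.*FOR UPDATE$',10,1),(2,1,'^SELECT',30,1);

Query OK, 2 rows affected (0.00 sec)

mysql> load mysql query rules to runtime;

mysql> save mysql query rules to disk;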
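The two rules are evaluated in rule_id order, and apply=1 stops at the first match. A rough sketch of the resulting routing follows; route_query is a hypothetical function that matches the raw statement with grep, whereas ProxySQL matches match_digest against the query digest. It assumes case-insensitive matching (the default re_modifiers value is CASELESS) and that unmatched statements fall back to the user's default_hostgroup, 10 in this lab.

```shell
# Hypothetical first-match router for the two rules defined above.
route_query() {
    query="$1"
    if printf '%s\n' "$query" | grep -Eiq '^SELECT.*FOR UPDATE$'; then
        echo 10                         # rule 1: locking reads go to the writer
    elif printf '%s\n' "$query" | grep -Eiq '^SELECT'; then
        echo 30                         # rule 2: plain reads go to the readers
    else
        echo 10                         # no rule matched: default_hostgroup
    fi
}

route_query "SELECT * FROM t WHERE id = 1 FOR UPDATE"
route_query "select * from t"
route_query "INSERT INTO t VALUES (1)"
```

Rule order matters here: if the plain ^SELECT rule had the lower rule_id, locking reads would be sent to the reader hostgroup.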

mysql> load mysql servers to runtime;

Query OK, 0 rows affected (0.01 sec)

mysql> save mysql servers to disk;

Query OK, 0 rows affected (0.02 sec)

mysql> load mysql users to runtime;

Query OK, 0 rows affected (0.00 sec)

mysql> save mysql users to disk;

Query OK, 0 rows affected (0.00 sec)

mysql> load mysql variables to runtime;

Query OK, 0 rows affected (0.00 sec)

mysql> save mysql variables to disk;

Query OK, 140 rows affected (0.02 sec)

5. ProxySQL read/write splitting for the PXC cluster

# proxy2 server (192.168.100.109):

[root@node109 ~]# yum -y localinstall proxysql-2.2.0-1-centos8.x86_64.rpm

[root@node109 ~]# systemctl enable --now proxysql

[root@node109 ~]# yum install -y mysql

[root@node109 ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.104',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.105',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.106',3306);

Query OK, 1 row affected (0.00 sec)

# Database master node (192.168.100.104): create the monitor and proxysql accounts

mysql> create user monitor@'192.168.100.%' identified by '123456';

mysql> create user proxysql@'192.168.100.%' identified by '123456';

mysql> grant all privileges on *.* to monitor@'192.168.100.%';

mysql> grant all privileges on *.* to proxysql@'192.168.100.%';

# proxy server (192.168.100.109): add the monitoring account and password

mysql> set mysql-monitor_username='monitor';

mysql> set mysql-monitor_password='123456';

mysql> insert into mysql_users(username,password,active,default_hostgroup,transaction_persistent) values ('proxysql','123456',1,10,1);

[root@node104 ~]# vim proxysql-monitor.sql

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id)

where performance_schema.replication_group_members.member_host=@@hostname);

END$$

CREATE VIEW gr_member_routing_candidate_status AS

SELECT

sys.gr_member_in_primary_partition() AS viable_candidate,

IF((SELECT

(SELECT

GROUP_CONCAT(variable_value)

FROM

performance_schema.global_variables

WHERE

variable_name IN ('read_only' , 'super_read_only')) != 'OFF,OFF'

),

'YES',

'NO') AS read_only,

sys.gr_applier_queue_length() AS transactions_behind,

Count_Transactions_in_queue AS 'transactions_to_cert'

FROM

performance_schema.replication_group_member_stats a

JOIN

performance_schema.replication_group_members b ON a.member_id = b.member_id

WHERE

b.member_host IN (SELECT

variable_value

FROM

performance_schema.global_variables

WHERE

variable_name = 'hostname')$$

DELIMITER ;

[root@node104 ~]# mysql -uroot -p123456 < proxysql-monitor.sql
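The GTID_COUNT function in proxysql-monitor.sql walks a GTID set and adds up the transactions in it: an interval a-b contributes b-a+1, a single number contributes 1. The same arithmetic can be sketched outside MySQL; gtid_count below is a hypothetical helper that assumes a single-line, untagged GTID set such as uuid:1-5:7,uuid:1-3.

```shell
# Hypothetical re-implementation of the counting logic, for illustration only.
gtid_count() {
    printf '%s\n' "$1" | awk '
    {
        total = 0
        n = split($0, sets, ",")              # one entry per server UUID
        for (i = 1; i <= n; i++) {
            m = split(sets[i], parts, ":")    # parts[1] is the UUID itself
            for (j = 2; j <= m; j++) {
                if (split(parts[j], r, "-") == 2)
                    total += r[2] - r[1] + 1  # interval a-b
                else
                    total += 1                # single transaction number
            }
        }
        print total
    }'
}

# 1-5 is five transactions, 7 is one, 1-3 is three
gtid_count "89c0ed5c-fd34-4a77-94da-e94a90ea4932:1-5:7,8a8418bf-4171-11ed-82db-3bef4e0b8be8:1-3"
```

gr_applier_queue_length applies this count to the difference between the received and the executed GTID sets, which is the value the view exposes as transactions_behind.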

Appendix: query the monitored databases:

Log in to the ProxySQL admin interface:

mysql -uadmin -padmin -h127.0.0.1 -P6032

mysql> use monitor

mysql> select * from runtime_mysql_servers;

6. Deploy phpMyAdmin

# phpMyAdmin (192.168.100.203, 192.168.100.204):

[root@node203 ~]# apt install nginx -y

[root@node203 ~]# apt -y install php7.4-fpm php7.4-mysql php7.4-json php7.4-xml php7.4-mbstring php7.4-zip php7.4-gd php7.4-curl php-redis

[root@node203 ~]# vim /etc/php/7.4/fpm/pool.d/www.conf

listen = 127.0.0.1:9000

php_value[session.save_handler] = redis

php_value[session.save_path] = "tcp://192.168.100.113:6379" # Redis server

[root@node203 ~]# systemctl restart php7.4-fpm.service

[root@node203 ~]# unzip phpMyAdmin-5.2.0-all-languages.zip

[root@node203 ~]# mv phpMyAdmin-5.2.0-all-languages/* /data/phpmyadmin/

[root@node203 ~]# cd /data/phpmyadmin/

[root@node203 phpmyadmin]# cp config.sample.inc.php config.inc.php

[root@node203 phpmyadmin]# vim config.inc.php

$cfg['Servers'][$i]['host'] = '192.168.100.104'; # connect to the database directly; connecting through ProxySQL did not work

[root@node203 phpmyadmin]# chown -R www-data. /data/phpmyadmin/

[root@node203 phpmyadmin]# vim /etc/nginx/conf.d/phpmyadmin.wang.org.conf

server {

listen 80;

server_name phpmyadmin.wang.org;

root /data/phpmyadmin;

index index.php index.html index.htm;

location ~ \.php$|ping|php-status {

fastcgi_pass 127.0.0.1:9000;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

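[root@node203 phpmyadmin]# systemctl restart nginx.service

# Make the domain name resolve on the browser's machine, open phpmyadmin.wang.org, and log in with the user name and password created in the database.

7. Deploy redis-phpmyadmin

# redis-phpmyadmin (192.168.100.113):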

[root@node113 ~]# vim /etc/redis.conf

bind 0.0.0.0

[root@node113 ~]# systemctl restart redis

[root@node113 ~]# redis-cli

127.0.0.1:6379> keys *

1) "PHPREDIS_SESSION:mn2v1alcjegeb7r86kjqnn9p2j" # a session key/value pair appears as soon as a browser logs in

2) "PHPREDIS_SESSION:v6nqh2nto2msb8n7uc8tajqkol"

8. Deploy the phpMyAdmin reverse proxy

# phpMyAdmin Nginx proxy (192.168.100.110):

[root@node110 ~]# vim /apps/nginx/conf/nginx.conf

include /apps/nginx/conf/conf.d/*.conf;

[root@node110 ~]# mkdir /apps/nginx/conf/conf.d

[root@node110 ~]# vim /apps/nginx/conf/conf.d/phpmyadmin.wang.org.conf

upstream phpmyadmin {

server 192.168.100.203;

server 192.168.100.204;

}

server {

listen 80;

server_name phpmyadmin.wang.org;

location / {

proxy_pass http://phpmyadmin;

proxy_set_header host $http_host;

}

}

[root@node110 ~]# nginx -s reload

Additional: deploy ShopXO:

# 192.168.100.203, 192.168.100.204:

[root@node203 ~]# vim /etc/nginx/conf.d/shopxo.wang.org.conf

server {

listen 80;

server_name shopxo.wang.org;

location / {

root /data/shopxo;

index index.php index.html index.htm;

}

location ~ \.php$|status|ping {

root /data/shopxo;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

[root@node203 ~]# mkdir /data/shopxo

[root@node203 ~]# unzip shopxov2.3.0.zip

[root@node203 ~]# chown -R www-data. /data/shopxo

[root@node203 ~]# systemctl restart php7.4-fpm.service

[root@node203 ~]# systemctl restart nginx.service

[root@node203 ~]# rsync -av /data/shopxo/public/static/upload/* root@192.168.100.113:/data/shopxo/ # copy the existing site files to the NFS server; run on one server only

# Once NFS is set up, mount it

[root@node203 ~]# vim /etc/fstab

192.168.100.113:/data/shopxo /data/shopxo/public/static/upload/ nfs _netdev 0 0

[root@node203 ~]# apt install -y nfs-common

[root@node203 ~]# mount -a

# Deploy the reverse-proxy load balancer for ShopXO (192.168.100.110):

[root@node110 ~]# vim /apps/nginx/conf/conf.d/shopxo.wang.org.conf

upstream shopxo {

server 192.168.100.203;

server 192.168.100.204;

}

server {

listen 80;

server_name shopxo.wang.org;

location / {

proxy_pass http://shopxo;

proxy_set_header host $http_host;

}

}

9. Deploy NFS

# Set up NFS (192.168.100.117):

[root@node117 ~]# vim /etc/exports

/data/jpress 192.168.100.0/24(rw,all_squash,anonuid=994,anongid=991)

/data/wordpress 192.168.100.0/24(rw,all_squash,anonuid=994,anongid=991)

/data/halo 192.168.100.0/24(rw,all_squash,anonuid=994,anongid=991)

[root@node117 ~]# yum install -y nfs-utils

[root@node117 ~]# exportfs -r

[root@node117 ~]# exportfs -v

[root@node117 ~]# systemctl restart nfs-server.service

===================================================================

# Set up NFS (192.168.100.113):

[root@node113 ~]# mkdir /data/shopxo -p

[root@node113 ~]# yum install -y nfs-utils

[root@node113 ~]# vim /etc/exports

/data/shopxo 192.168.100.0/24(rw,all_squash,anonuid=33,anongid=33)

[root@node113 ~]# groupadd -g 33 www-data

[root@node113 ~]# useradd -u33 -g 33 www-data

[root@node113 ~]# chown -R www-data. /data/shopxo/

[root@node113 ~]# exportfs -r

[root@node113 ~]# exportfs -v

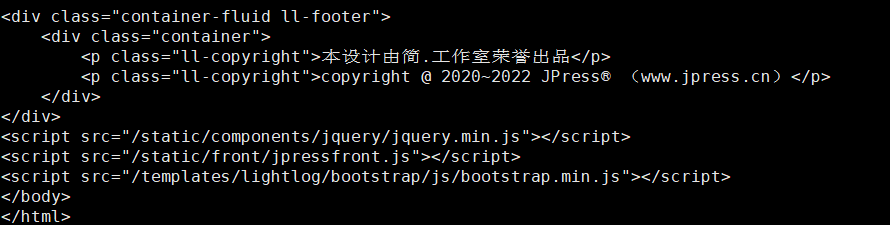

[root@node113 ~]# systemctl restart nfs-server

10. Deploy JPress

# All JPress server nodes (192.168.100.115, 192.168.100.116):

[root@node115 ~]# vim /usr/local/tomcat/conf/server.xml

......

<Host name="jpress.wang.org" appBase="/data/jpress" unpackWARs="true" autoDeploy="true">

</Host>

......

[root@node115 ~]# cp jpress-v5.0.2.war /data/jpress/

[root@node115 ~]# cd /data/jpress/

[root@node115 jpress]# mv jpress-v5.0.2.war ROOT.war

[root@node115 jpress]# systemctl restart tomcat.service

[root@node115 ~]# vim /apps/nginx/conf/conf.d/jpress.wang.org.conf

server {

listen 81;

server_name jpress.wang.org;

location / {

proxy_pass http://127.0.0.1:8080;

proxy_set_header host $http_host;

}

}

[root@node115 ~]# systemctl restart nginx.service

[root@node115 ~]# yum install -y rsync

[root@node115 ~]# rsync -av /data/jpress/ROOT/* root@192.168.100.117:/data/jpress/ # copy the existing site files to the NFS server; run on one server only

[root@node115 ~]# yum install -y nfs-utils

[root@node115 ~]# showmount -e 192.168.100.117

[root@node115 ~]# vim /etc/fstab

192.168.100.117:/data/jpress /data/jpress/ROOT nfs _netdev 0 0

[root@node115 ~]# mount -a

11. Deploy the Nginx reverse-proxy load balancer for JPress

# JPress Nginx proxy (192.168.100.114):

[root@node114 ~]# vim /apps/nginx/conf/conf.d/jpress.wang.org.conf

upstream jpress {

hash $remote_addr;

server 192.168.100.115:81;

server 192.168.100.116:81;

}

server {

listen 80;

server_name jpress.wang.org;

location / {

proxy_pass http://jpress;

proxy_set_header host $http_host;

}

}

[root@node114 ~]# systemctl restart nginx.service

Additional: WordPress

# 192.168.100.115, 192.168.100.116:

[root@node115 ~]# vim /apps/nginx/conf/conf.d/wordpress.conf

server {

listen 82;

server_name wordpress.wang.org;

location / {

index index.php index.html index.htm;

root /data/wordpress;

}

location ~ \.php$|status|ping {

root /data/wordpress;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

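[root@node115 ~]# wget https://mirrors.tuna.tsinghua.edu.cn/remi/enterprise/remi-release-8.rpm

[root@node115 ~]# yum install -y ./remi-release-8.rpm # install the downloaded repository package so that the php74 packages below can be found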

[root@node115 ~]# yum install php74-php-fpm php74 php74-php-json php74-php-mysqlnd

[root@node115 ~]# vim /etc/opt/remi/php74/php-fpm.d/www.conf

[www]

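user = nginx # preferably the user that nginx runs as

group = nginx # preferably the group that nginx runs as

listen = 127.0.0.1:9000 # listen address and port (use the NIC's IP address for access across the network)

pm.status_path = /wordpress_status # uncomment

ping.path = /ping # uncomment

ping.response = pong # uncomment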

[root@node115 ~]# systemctl restart php74-php-fpm.service

[root@node115 ~]# tar xf wordpress-6.0.2-zh_CN.tar.gz

[root@node115 ~]# mv wordpress/* /data/wordpress/

[root@node115 ~]# chown -R nginx. /data/wordpress/

[root@node115 ~]# nginx -t

[root@node115 ~]# nginx -s reload

[root@node115 ~]# rsync -av /data/wordpress/* root@192.168.100.117:/data/wordpress/ # run on one server only

# Once NFS is set up, mount it

[root@node115 ~]# vim /etc/fstab

192.168.100.117:/data/wordpress /data/wordpress nfs _netdev 0 0

[root@node115 ~]# mount -a

# Deploy the reverse-proxy load balancer for WordPress (192.168.100.114):

[root@node114 conf.d]# vim wordpress.conf

upstream wordpress {

server 192.168.100.115:82;

server 192.168.100.116:82;

}

server {

listen 80;

server_name wordpress.wang.org;

location / {

proxy_pass http://wordpress;

proxy_set_header host $http_host;

}

}

[root@node114 ~]# nginx -s reload

# Let every Nginx reverse proxy serve all four sites:

[root@node114 ~]# scp jpress.wang.org.conf wordpress.conf root@192.168.100.110:/apps/nginx/conf/conf.d/

[root@node110 ~]# scp phpmyadmin.wang.org.conf shopxo.wang.org.conf root@192.168.100.114:/apps/nginx/conf/conf.d/
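Since the four reverse-proxy files share one shape (an upstream plus a server block on port 80), they could also be generated rather than copied by hand. A minimal sketch follows, assuming the upstream name equals the host part of the site name under wang.org; render_proxy_conf is a hypothetical helper, and it omits the `hash $remote_addr;` line that the JPress upstream uses.

```shell
# Hypothetical generator: first argument is the site/upstream name,
# the remaining arguments are the back-end addresses.
render_proxy_conf() {
    name="$1"; shift
    printf 'upstream %s {\n' "$name"
    for backend in "$@"; do
        printf '    server %s;\n' "$backend"
    done
    printf '}\n'
    printf 'server {\n'
    printf '    listen 80;\n'
    printf '    server_name %s.wang.org;\n' "$name"
    printf '    location / {\n'
    printf '        proxy_pass http://%s;\n' "$name"
    printf '        proxy_set_header host $http_host;\n'
    printf '    }\n'
    printf '}\n'
}

# Prints the WordPress proxy configuration to standard output.
render_proxy_conf wordpress 192.168.100.115:82 192.168.100.116:82
```

Redirecting the output into /apps/nginx/conf/conf.d/ on both proxies, followed by nginx -t and nginx -s reload, would give the same result as the scp commands.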

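12. Deploy Keepalived + LVS

12-1. Preparation

12-1-1. Time synchronization

# Synchronize time on all nodes:

[root@node118 ~]# yum -y install chrony

[root@node118 ~]# systemctl enable --now chronyd

[root@node118 ~]# chronyc sources

12-1-2. Hostname-based communication between the nodes

# Same steps on both nodes: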

[root@node118 ~]# vim /etc/hosts

192.168.100.118 node118.wang.org

192.168.100.123 node123.wang.org

[root@node118 ~]# vim /etc/hostname

node118.wang.org

12-1-3. Set up mutual SSH trust

[root@node118 ~]# ssh-keygen

[root@node118 ~]# ssh-copy-id node123.wang.org

[root@node123 ~]# ssh-keygen

[root@node123 ~]# ssh-copy-id node118.wang.org

12-1-4. Install the packages

# Install on both nodes:

[root@node118 ~]# yum install -y keepalived ipvsadm

12-2. Configure keepalived

[root@node118 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@wang.org

}

notification_email_from root@wang.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node118

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

# vrrp_mcast_group4 224.0.0.18 # multicast; if multicast is enabled, comment out the unicast settings

}

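vrrp_instance VI_1 { # define a VRRP instance; the instance name is arbitrary

state MASTER # Keepalived role: MASTER is the primary server, BACKUP the standby

interface eth0 # interface monitored for HA

virtual_router_id 51 # virtual router ID (1-255); must be identical on master and backup within one VRRP instance

priority 100 # priority; the higher number wins, and the master's must be higher than the backup's

advert_int 1 # advertisement interval between master and backup, in seconds

authentication { # authentication type and password

auth_type PASS # authentication type

auth_pass 1111 # password; must be identical on master and backup within one instance

}

virtual_ipaddress { # virtual IP address

192.168.100.88 dev eth0 label eth0:1

}

unicast_src_ip 192.168.100.118 # unicast source address (this node)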

unicast_peer {

192.168.100.123

}

}

vrrp_instance VI_2 {

state BACKUP

interface eth0

virtual_router_id 61

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.100.99 dev eth0 label eth0:2

}

unicast_src_ip 192.168.100.118

unicast_peer {

192.168.100.123

}

}

virtual_server 192.168.100.88 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.100.110 80 {

weight 1

HTTP_GET {

url {

path /

# digest 640205b7b0fc66c1ea91c463fac6334d

status_code 200

}

connect_timeout 2

retry 3

delay_before_retry 1

}

}

real_server 192.168.100.114 80 {

weight 1

# HTTP_GET {

# url {

# path /

# digest 640205b7b0fc66c1ea91c463fac6334d

# status_code 200

# }

TCP_CHECK {

connect_timeout 2

retry 3

delay_before_retry 1

connect_port 80

}

}

}

virtual_server 192.168.100.99 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.100.110 80 {

weight 1

HTTP_GET {

url {

path /

# digest 640205b7b0fc66c1ea91c463fac6334d

status_code 200

}

connect_timeout 2

retry 3

delay_before_retry 1

}

}

real_server 192.168.100.114 80 {

weight 1

# HTTP_GET {

# url {

# path /

# digest 640205b7b0fc66c1ea91c463fac6334d

# status_code 200

# }

TCP_CHECK {

connect_timeout 2

retry 3

delay_before_retry 1

connect_port 80

}

}

}
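The two vrrp_instance blocks differ between the nodes only in state, priority, and the unicast addresses, so they can be produced from a template. The sketch below is one possible way to do that, not the method used in this lab: render_vrrp_instance is a hypothetical helper, the interface is assumed to be eth0, and the VIPs are the 192.168.100.88 and 192.168.100.99 addresses that appear in the `ip a` output of 12-6.

```shell
# Hypothetical generator for one vrrp_instance block. Arguments:
# name state virtual_router_id priority auth_pass vip label local_ip peer_ip
render_vrrp_instance() {
    cat <<EOF
vrrp_instance $1 {
    state $2
    interface eth0
    virtual_router_id $3
    priority $4
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $5
    }
    virtual_ipaddress {
        $6 dev eth0 label $7
    }
    unicast_src_ip $8
    unicast_peer {
        $9
    }
}
EOF
}

# node118: master for VI_1, backup for VI_2; unicast_src_ip is always the local address
render_vrrp_instance VI_1 MASTER 51 100 1111 192.168.100.88 eth0:1 192.168.100.118 192.168.100.123
render_vrrp_instance VI_2 BACKUP 61 80 2222 192.168.100.99 eth0:2 192.168.100.118 192.168.100.123
```

For node123 the last two arguments are swapped and the roles reversed (VI_1 BACKUP with priority 80, VI_2 MASTER with priority 200).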

12-3. Copy the configuration file to the other node

[root@node118 ~]# scp /etc/keepalived/keepalived.conf node123.wang.org:/etc/keepalived/keepalived.conf

12-4. Modify the configuration file on the other node

global_defs {

notification_email {

root@wang.org

}

notification_email_from root@wang.org

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node123

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

# vrrp_mcast_group4 224.0.0.18

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.88 dev eth0 label eth0:1

}

unicast_src_ip 192.168.100.123

unicast_peer {

192.168.100.118

}

}

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id 61

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.100.99 dev eth0 label eth0:2

}

unicast_src_ip 192.168.100.123

unicast_peer {

192.168.100.118

}

}

# The rest of the configuration is unchanged

12-5. Back-end server configuration

# Run on all back-end server nodes:

[root@node110 ~]# echo 1 > /proc/sys/net/ipv4/conf/eth0/arp_ignore

[root@node110 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@node110 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@node110 ~]# echo 2 > /proc/sys/net/ipv4/conf/eth0/arp_announce

[root@node110 ~]# ifconfig lo:0 192.168.100.88 netmask 255.255.255.255 broadcast 192.168.100.88 up

[root@node110 ~]# ifconfig lo:1 192.168.100.99 netmask 255.255.255.255 broadcast 192.168.100.99 up

[root@node110 ~]# route add -host 192.168.100.88 dev lo:0

[root@node110 ~]# route add -host 192.168.100.99 dev lo:1
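Values written to /proc with echo are lost at reboot. One way to keep the ARP settings is a sysctl.d drop-in; the sketch below only renders the lines, and both the helper name render_dr_sysctl and the file name in the example are assumptions.

```shell
# Hypothetical helper: print sysctl.d lines equivalent to the echo commands
# above for the "all" scope and one interface.
render_dr_sysctl() {
    iface="$1"
    for scope in all "$iface"; do
        printf 'net.ipv4.conf.%s.arp_ignore = 1\n' "$scope"
        printf 'net.ipv4.conf.%s.arp_announce = 2\n' "$scope"
    done
}

render_dr_sysctl eth0

# Example (as root, not run here):
# render_dr_sysctl eth0 > /etc/sysctl.d/90-lvs-dr.conf && sysctl --system
```

The lo:0 and lo:1 addresses and the host routes are not covered by this and would still need their own persistent configuration.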

[root@node110 ~]# systemctl restart nginx.service

12-6. Check the IP addresses and IPVS rules on both nodes

[root@node118 ~]# systemctl restart keepalived.service ;ssh node123.wang.org 'systemctl restart keepalived' # start keepalived

[root@node118 ~]# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:d6:eb:f3 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.118/24 brd 192.168.100.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.100.88/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fed6:ebf3/64 scope link

valid_lft forever preferred_lft forever

[root@node118 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.88:80 rr

-> 192.168.100.110:80 Route 1 0 0

-> 192.168.100.114:80 Route 1 0 0

TCP 192.168.100.99:80 rr persistent 50

-> 192.168.100.110:80 Route 1 0 0

-> 192.168.100.114:80 Route 1 0 0

[root@node123 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:02:b1:c5 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.123/24 brd 192.168.100.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.100.99/32 scope global eth0:2

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe02:b1c5/64 scope link

valid_lft forever preferred_lft forever

[root@node123 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.88:80 rr persistent 50

-> 192.168.100.110:80 Route 1 0 0

-> 192.168.100.114:80 Route 1 0 0

TCP 192.168.100.99:80 rr persistent 50

-> 192.168.100.110:80 Route 1 0 0

-> 192.168.100.114:80 Route 1 0 0

12-7. Client test

[root@wdy software]#while :;do curl 192.168.100.88;sleep 1;done

192.168.100.110

192.168.100.114

192.168.100.110

192.168.100.114

192.168.100.110

192.168.100.114

192.168.100.110

192.168.100.114

192.168.100.110
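The alternating output above is what round-robin scheduling should produce. A minimal sketch for tallying the responses instead of reading them by eye; the helper name `count_backends` is an assumption for illustration, not part of the original setup:

```shell
# count_backends: read one backend identifier per line on stdin and
# print "<count> <backend>" for each distinct value.
count_backends() {
    sort | uniq -c | awk '{ print $1, $2 }'
}

# Possible usage against the VIP tested above (20 requests):
#   for i in $(seq 20); do curl -s 192.168.100.88; done | count_backends
```

With `rr` scheduling and equal weights, the two counts should differ by at most one.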

13. Deploy the firewall

# Firewall (192.168.100.119): add a physical NIC (172.20.0.8), then add a second IP address: ip a a 172.20.0.249/24 dev eth1 label eth1:0

[root@node119 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@node119 ~]# iptables -t nat -A PREROUTING -d 172.20.0.8 -p tcp --dport 80 -j DNAT --to-destination 192.168.100.88:80

[root@node119 ~]# iptables -t nat -A PREROUTING -d 172.20.0.249 -p tcp --dport 80 -j DNAT --to-destination 192.168.100.99:80
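The two DNAT rules follow the same pattern: an external address and port mapped to an internal VIP. A small sketch that prints the rules from a mapping table so they can be reviewed before being run; `dnat_rule` is a hypothetical helper and only prints the command, it does not touch iptables:

```shell
# dnat_rule EXT_IP PORT DEST_IP: print (do not execute) the DNAT rule that
# forwards EXT_IP:PORT to DEST_IP:PORT.
dnat_rule() {
    printf 'iptables -t nat -A PREROUTING -d %s -p tcp --dport %s -j DNAT --to-destination %s:%s\n' \
        "$1" "$2" "$3" "$2"
}

# Mapping used in this section: external address, port, internal VIP.
while read -r ext port dest; do
    dnat_rule "$ext" "$port" "$dest"
done <<'EOF'
172.20.0.8 80 192.168.100.88
172.20.0.249 80 192.168.100.99
EOF
```

Piping the output to `sh` would apply the rules; keeping the print step separate makes the mapping easier to audit.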

[root@node119 ~]# iptables -t nat -nvL

14. Deploy the client router

# Client router (192.168.168.88): two NICs, one at 172.20.0.9 and one at 192.168.168.88

[root@rocky8 ~]#echo 1 > /proc/sys/net/ipv4/ip_forward

[root@rocky8 ~]#iptables -t nat -A POSTROUTING -s 192.168.168.0/24 -j MASQUERADE

15. Configure the primary DNS server

# Primary DNS (192.168.168.100): the gateway points to 192.168.168.88

[root@rocky8 ~]# vim /etc/named.conf

......

// listen-on port 53 { 127.0.0.1; };

// listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

secroots-file "/var/named/data/named.secroots";

recursing-file "/var/named/data/named.recursing";

// allow-query { localhost; };

.....

[root@rocky8 ~]# vim /etc/named.rfc1912.zones

......

zone "wang.org" IN {

type master;

file "wang.org.zone";

};

[root@rocky8 ~]# cd /var/named/

[root@rocky8 named]# cp -p named.localhost wang.org.zone

[root@rocky8 named]# vim wang.org.zone

$TTL 1D

@ IN SOA admin admin.wang.org. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS admin

NS slave

slave A 192.168.168.200

admin A 192.168.168.100

jpress A 172.20.0.249

phpmyadmin A 172.20.0.8

shopxo A 172.20.0.8

wordpress A 172.20.0.249

[root@rocky8 named]# systemctl start named

16. Configure the secondary DNS server

# Secondary DNS (192.168.168.200): the gateway points to 192.168.168.88

[root@rocky8 ~]#vim /etc/named.conf

......

// listen-on port 53 { 127.0.0.1; };

// listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

secroots-file "/var/named/data/named.secroots";

recursing-file "/var/named/data/named.recursing";

// allow-query { localhost; };

.....

[root@rocky8 ~]#vim /etc/named.rfc1912.zones

zone "wang.org" IN {

type slave;

masters { 192.168.168.100; };

file "slaves/wang.org.zone";

};

[root@rocky8 ~]#systemctl restart named

17. Client connection test

[root@rocky8 ~]# vim /etc/resolv.conf

# Generated by NetworkManager

nameserver 192.168.168.100

nameserver 192.168.168.200

[root@rocky8 ~]# curl jpress.wang.org

[root@rocky8 ~]# curl phpmyadmin.wang.org

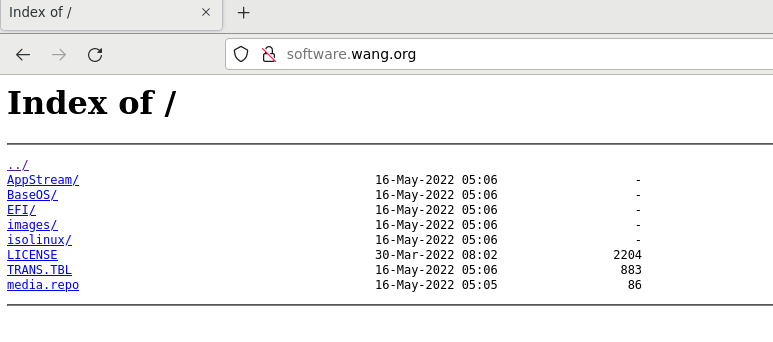
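When a name does not resolve as expected, it can help to compare what the DNS server answers with what the zone file actually contains. A minimal sketch, assuming the simple one-record-per-line layout used in wang.org.zone above; `zone_a_record` is a hypothetical helper, not a BIND tool:

```shell
# zone_a_record ZONEFILE NAME: print the address of the first A record
# whose owner name is NAME (lines of the form "name A address").
zone_a_record() {
    awk -v name="$2" '$1 == name && $2 == "A" { print $3; exit }' "$1"
}

# Possible usage on the primary DNS server:
#   zone_a_record /var/named/wang.org.zone jpress
# The result can then be compared with the live answer, for example from
#   dig +short jpress.wang.org @192.168.168.100
# (dig comes from the bind-utils package, assumed to be installed).
```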

18. Deploy an internal LAN yum repository with NGINX

# 192.168.100.111:

[root@node111 ~]# vim /etc/nginx/conf.d/software.wang.org.conf

server {

listen 80;

server_name software.wang.org;

root /data/software;

location / {

autoindex on;

autoindex_exact_size on;

autoindex_localtime on;

charset utf-8;

limit_rate 2048k;

root /data/software;

}

}

[root@node111 ~]# systemctl restart nginx.service
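Clients still need a repository definition that points at this server. A minimal sketch that prints one; the helper name `make_repo_file`, the repository id, and the `rocky8/BaseOS` path are assumptions, since the layout under /data/software is not shown in this article:

```shell
# make_repo_file ID BASEURL: print a yum/dnf repository definition.
# gpgcheck is disabled here only because this is a lab-internal mirror.
make_repo_file() {
    cat <<EOF
[$1]
name=$1
baseurl=$2
enabled=1
gpgcheck=0
EOF
}

# Hypothetical usage on a client (the path below is an assumption):
#   make_repo_file local-baseos http://software.wang.org/rocky8/BaseOS/ \
#       > /etc/yum.repos.d/local.repo
```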

19. Set up Nexus

# 192.168.100.112:

[root@node112 ~]# tar xf apache-maven-3.8.6-bin.tar.gz -C /usr/local

[root@node112 ~]# cd /usr/local

[root@node112 local]# mv apache-maven-3.8.6/ maven # /usr/local/maven must not already exist, otherwise the directory is moved inside it

[root@node112 local]# echo 'PATH=/usr/local/maven/bin:$PATH' > /etc/profile.d/maven.sh

[root@node112 local]# . /etc/profile.d/maven.sh

[root@node112 local]# mvn -v

[root@node112 local]# vim /usr/local/maven/conf/settings.xml # use the Aliyun mirror for faster downloads

<!-- Aliyun mirror -->

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>*</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

[root@node112 ~]# tar xf nexus-3.41.1-01-unix.tar.gz -C /usr/local

[root@node112 ~]# cd /usr/local

[root@node112 local]# mv nexus-3.41.1-01/ nexus

[root@node112 local]# echo 'PATH=/usr/local/nexus/bin:$PATH' > /etc/profile.d/nexus.sh

[root@node112 local]# . /etc/profile.d/nexus.sh

[root@node112 local]# vim nexus/bin/nexus.vmoptions

-Xms1024m

-Xmx1024m

-XX:MaxDirectMemorySize=1024m

[root@node112 local]# nexus run

[root@node112 local]# vim /usr/lib/systemd/system/nexus.service

[Unit]

Description=nexus service

After=network.target

[Service]

Type=forking

LimitNOFILE=65535

ExecStart=/usr/local/nexus/bin/nexus start

ExecStop=/usr/local/nexus/bin/nexus stop

User=root

Restart=on-abort

[Install]

WantedBy=multi-user.target

[root@node112 local]# systemctl daemon-reload

[root@node112 local]# systemctl start nexus

[root@node112 local]# systemctl status nexus
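Nexus can take a while to finish its first start, and the initial admin password file is only written once that start completes. A minimal polling sketch; `wait_for_file` is a hypothetical helper and the 300-second timeout in the usage line is an arbitrary assumption:

```shell
# wait_for_file PATH [TIMEOUT]: poll once per second until PATH exists and
# is non-empty. Returns 0 on success, 1 after TIMEOUT seconds (default 120).
wait_for_file() {
    wf_path=$1
    wf_timeout=${2:-120}
    wf_waited=0
    while [ ! -s "$wf_path" ]; do
        if [ "$wf_waited" -ge "$wf_timeout" ]; then
            return 1
        fi
        sleep 1
        wf_waited=$((wf_waited + 1))
    done
    return 0
}

# Possible usage before reading the initial password:
#   wait_for_file /usr/local/sonatype-work/nexus3/admin.password 300 \
#       && cat /usr/local/sonatype-work/nexus3/admin.password
```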

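[root@node112 local]# cat sonatype-work/nexus3/admin.password # the default admin password is stored here

2d620554-5031-4444-87a6-3cf2bdb6a81c

[root@node112 local]# mkdir /data/nexus/maven -p # create the repository directory

# Open 192.168.100.112:8081 in a browser, log in, and create the repository. For the procedure, see: https://blog.51cto.com/dayu/5729927

20. Set up the Zabbix monitoring server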

#zabbix(192.168.100.206):

[root@zabbix ~]# wget https://repo.zabbix.com/zabbix/6.0/ubuntu/pool/main/z/zabbix-release/zabbix-release_6.0-4%2Bubuntu20.04_all.deb

[root@zabbix ~]#dpkg -i zabbix-release_6.0-4+ubuntu20.04_all.deb

[root@zabbix ~]#apt update

[root@zabbix ~]#apt install zabbix-server-mysql zabbix-frontend-php zabbix-nginx-conf zabbix-sql-scripts zabbix-agent # install the Zabbix server, web frontend, and agent

[root@zabbix ~]#scp /usr/share/zabbix-sql-scripts/mysql/server.sql.gz root@192.168.100.101: # copy the database schema file to the database server

# Create the database user and grant privileges (192.168.100.101); in testing, PXC was not supported

mysql> create database zabbix character set utf8mb4 collate utf8mb4_bin;

mysql> create user zabbix@'192.168.100.%' identified by '123456';

mysql> grant all on zabbix.* to zabbix@'192.168.100.%';

mysql> quit

[root@node101 ~]# zcat server.sql.gz |mysql --default-character-set=utf8mb4 -h192.168.100.101 -uzabbix -p zabbix # import the Zabbix schema; -u names the user, -p prompts for the password, and the trailing zabbix is the database name

Enter password:

# zabbix (192.168.100.206):

[root@zabbix ~]#vim /etc/zabbix/zabbix_server.conf

DBHost=192.168.100.101

DBUser=zabbix

DBPassword=123456
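The same three settings can be applied without opening an editor, which is handy when the server is rebuilt. A minimal sketch, assuming a plain `KEY=VALUE` file in which commented-out sample lines should be left alone; `set_conf` is a hypothetical helper, not a Zabbix tool:

```shell
# set_conf FILE KEY VALUE: make FILE contain exactly one active "KEY=VALUE"
# line. An existing uncommented KEY= line is replaced in place (later
# duplicates are dropped); if none exists, the line is appended.
# Commented-out lines such as "# DBHost=localhost" are not touched.
set_conf() {
    sc_file=$1
    sc_key=$2
    sc_value=$3
    sc_tmp=$(mktemp)
    awk -v k="$sc_key" -v v="$sc_value" '
        $0 ~ "^" k "=" { if (!seen) print k "=" v; seen = 1; next }
        { print }
        END { if (!seen) print k "=" v }
    ' "$sc_file" > "$sc_tmp" && cat "$sc_tmp" > "$sc_file"
    rm -f "$sc_tmp"
}

# Possible usage with the values from this section:
#   set_conf /etc/zabbix/zabbix_server.conf DBHost 192.168.100.101
#   set_conf /etc/zabbix/zabbix_server.conf DBUser zabbix
#   set_conf /etc/zabbix/zabbix_server.conf DBPassword 123456
```

Writing back with `cat` rather than `mv` keeps the ownership and permissions of the original file.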

[root@zabbix ~]#vim /etc/zabbix/nginx.conf

server {

listen 8888; # uncomment and change the port

server_name zabbix.wang.org; # uncomment and change the domain name

......

[root@zabbix ~]#systemctl restart zabbix-server.service zabbix-agent.service nginx.service php7.4-fpm.service

[root@zabbix ~]#systemctl enable zabbix-server zabbix-agent nginx php7.4-fpm

[root@zabbix ~]#apt -y install language-pack-zh-hans # install the Chinese language pack

[root@zabbix ~]#cp simfang.ttf /usr/share/zabbix/assets/fonts/ # copy the simfang.ttf (FangSong) font from Windows into the Zabbix fonts directory

[root@zabbix ~]#cd /usr/share/zabbix/assets/fonts/

[root@zabbix fonts]#ls

graphfont.ttf simfang.ttf

[root@zabbix fonts]#mv graphfont.ttf graphfont.ttf.bak

[root@zabbix fonts]#mv simfang.ttf graphfont.ttf

# Once the domain name resolves, open zabbix.wang.org:8888 in a browser; the default Zabbix username is Admin and the password is zabbix

21. Install Ansible

[root@ansible ~]# apt install ansible

[root@ansible ~]# mkdir /data/ansible -p

[root@ansible ~]# cd /data/ansible/

[root@ansible ansible]# cp /etc/ansible/ansible.cfg .

[root@ansible ansible]# vim ansible.cfg

[defaults]

inventory = /data/ansible/hosts

[root@ansible ansible]# vim hosts

[mgr]

192.168.100.[101:103]

[pxc]

192.168.100.[104:106]

[proxysql]

192.168.100.108

192.168.100.109

[phpmyadmin]

192.168.100.[203:204]

[jpress]

192.168.100.[115:116]

[nginx]

192.168.100.110

192.168.100.114

[redis]

192.168.100.117

192.168.100.113

[lvs]

192.168.100.118

[firewalld]

192.168.100.119

192.168.100.209

[nexus]

192.168.100.112

[repo]

192.168.100.111

[ntp]

192.168.100.205

[ansible]

192.168.100.202

[jumpserver]

192.168.100.207

[openserver]

192.168.100.208
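The inventory uses Ansible's numeric range pattern, for example `192.168.100.[101:103]`. To see which addresses a pattern covers without running Ansible, a small sketch follows; `expand_range` is a hypothetical helper that handles only a single plain `[start:end]` range, with no zero padding or step:

```shell
# expand_range PATTERN: expand one inventory pattern such as
# 192.168.100.[101:103] into one address per line. Patterns without a
# range are printed unchanged.
expand_range() {
    case $1 in
        *\[*:*\]*)
            er_prefix=${1%%\[*}
            er_range=${1#*\[}
            er_range=${er_range%%\]*}
            er_suffix=${1#*\]}
            seq "${er_range%%:*}" "${er_range##*:}" | while read -r er_n; do
                printf '%s%s%s\n' "$er_prefix" "$er_n" "$er_suffix"
            done
            ;;
        *)
            printf '%s\n' "$1"
            ;;
    esac
}

# Possible usage:
#   expand_range '192.168.100.[101:103]'
```

On the control node itself, `ansible all --list-hosts` gives the authoritative expansion.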

[root@ansible ansible]# ssh-keygen

[root@ansible ansible]# for i in {101..120} {202..212}; do sshpass -predhat ssh-copy-id -o StrictHostKeyChecking=no root@192.168.100.$i; done

[root@ansible ansible]# ansible all -m ping # run once the key has been distributed to the managed hosts

[root@ansible ansible]# vim zabbix_test.yml

---
- name: install agent
  hosts: all
  vars:
    server_host: "192.168.100.206"
  tasks:
    - shell: "rpm -Uvh https://repo.zabbix.com/zabbix/6.0/rhel/8/x86_64/zabbix-release-6.0-4.el8.noarch.rpm ; dnf clean all"
      when: ansible_distribution_file_variety == "RedHat" and ansible_distribution_major_version == "8"
    - shell: "rpm -Uvh https://repo.zabbix.com/zabbix/6.0/rhel/7/x86_64/zabbix-release-6.0-4.el7.noarch.rpm ; yum clean all"
      when: ansible_distribution_file_variety == "RedHat" and ansible_distribution_major_version == "7"
    - shell: "wget https://repo.zabbix.com/zabbix/6.0/ubuntu/pool/main/z/zabbix-release/zabbix-release_6.0-4%2Bubuntu20.04_all.deb; dpkg -i zabbix-release_6.0-4+ubuntu20.04_all.deb "
      when: ansible_distribution_file_variety == "Debian" and ansible_distribution_major_version == "20"
    - yum:
        name:
          - "zabbix-agent2"
          - "zabbix-agent2-plugin-mongodb"
        disable_gpg_check: yes
        state: present
      when: ansible_distribution_file_variety == "RedHat"
    - apt:
        name:
          - "zabbix-agent2"
          - "zabbix-agent2-plugin-mongodb"
        update_cache: yes
        state: present
      when: ansible_os_family == "Debian"
    - replace:
        path: /etc/zabbix/zabbix_agent2.conf
        regexp: 'Server=127.0.0.1'
        replace: "Server={{ server_host }}"
    - service:
        name: zabbix-agent2
        state: restarted
        enabled: yes

[root@ansible ansible]# ansible-playbook zabbix_test.yml
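The three `when:` conditions in the playbook amount to a lookup from distribution family and major version to a release package. The same mapping written as a shell function makes it easy to see which hosts are covered; `zabbix_release_url` is a hypothetical helper and the URLs are the ones used in the playbook:

```shell
# zabbix_release_url FAMILY MAJOR: print the Zabbix 6.0 release package URL
# for the given distribution family and major version, mirroring the
# playbook's conditions. Returns 1 for combinations the playbook skips.
zabbix_release_url() {
    zr_base=https://repo.zabbix.com/zabbix/6.0
    case "$1:$2" in
        RedHat:8)
            echo "$zr_base/rhel/8/x86_64/zabbix-release-6.0-4.el8.noarch.rpm" ;;
        RedHat:7)
            echo "$zr_base/rhel/7/x86_64/zabbix-release-6.0-4.el7.noarch.rpm" ;;
        Debian:20)
            echo "$zr_base/ubuntu/pool/main/z/zabbix-release/zabbix-release_6.0-4%2Bubuntu20.04_all.deb" ;;
        *)
            return 1 ;;
    esac
}

# Possible usage:
#   zabbix_release_url RedHat 8
```

Any host outside these three combinations gets no repository from the playbook, so the later package task would fail there.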

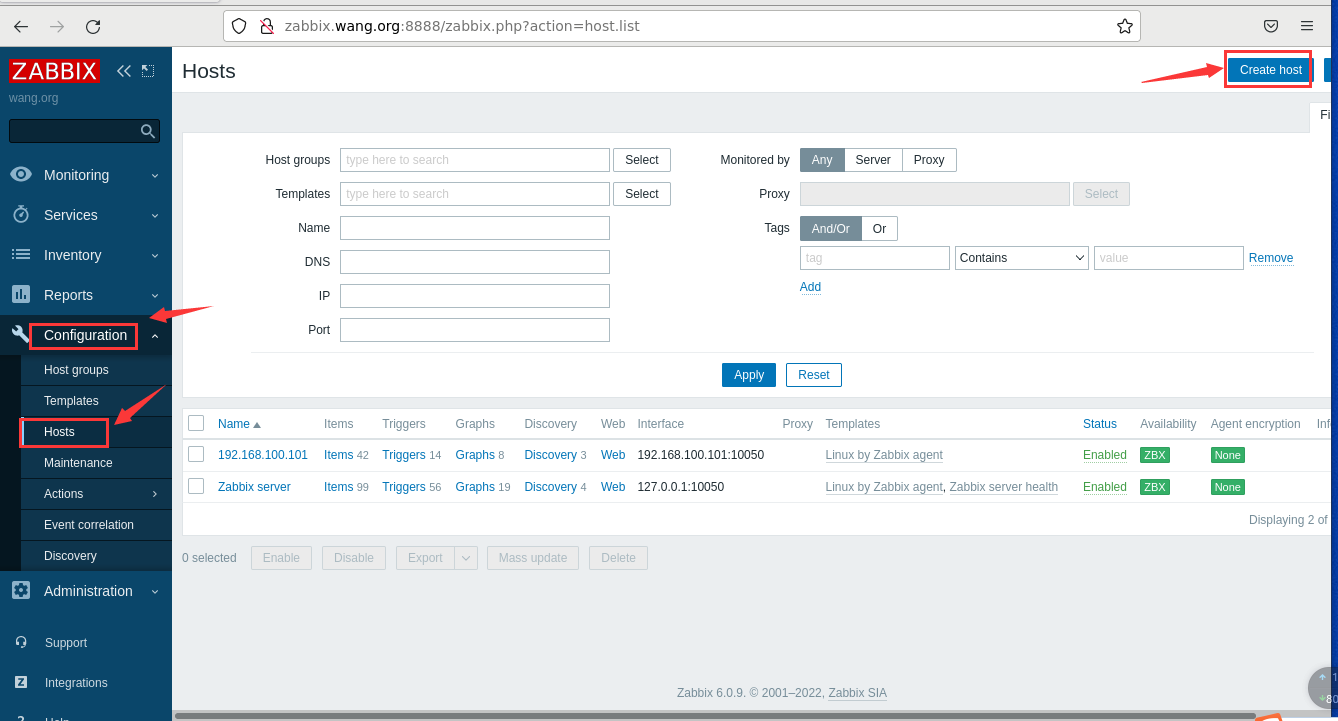

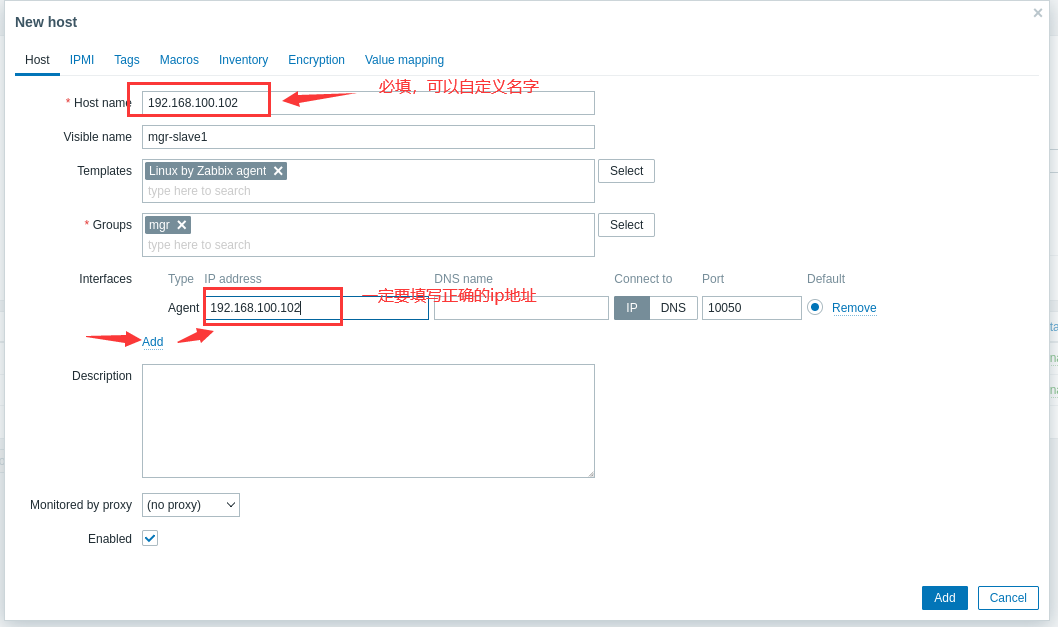

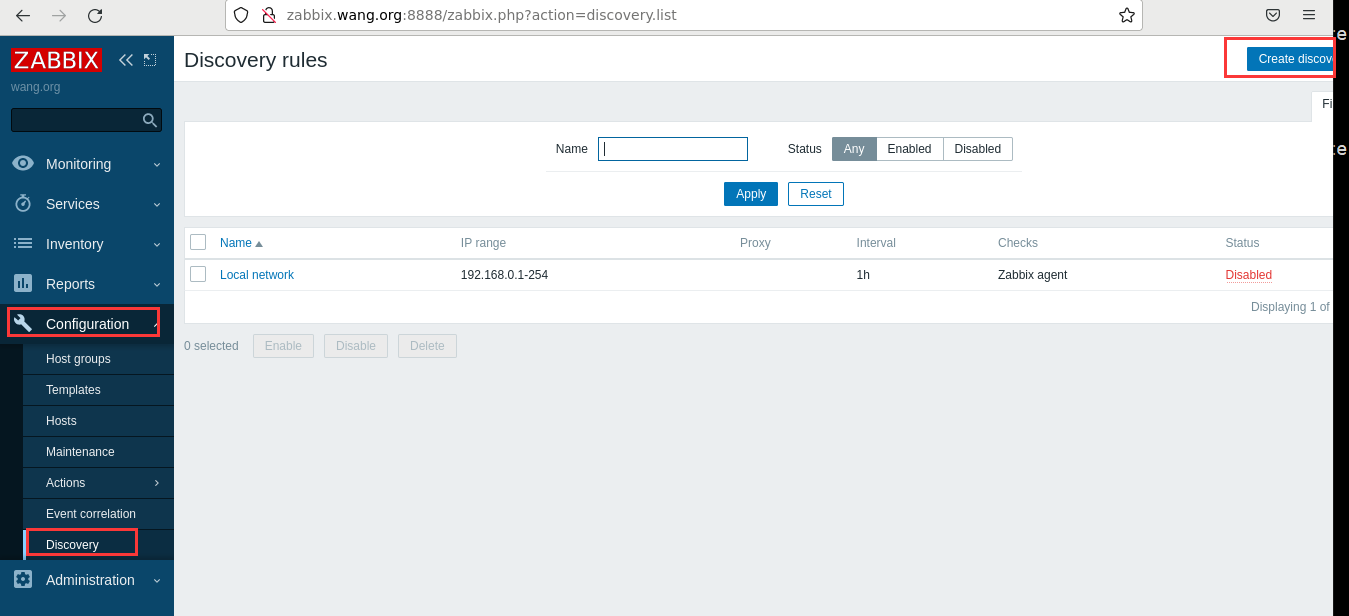

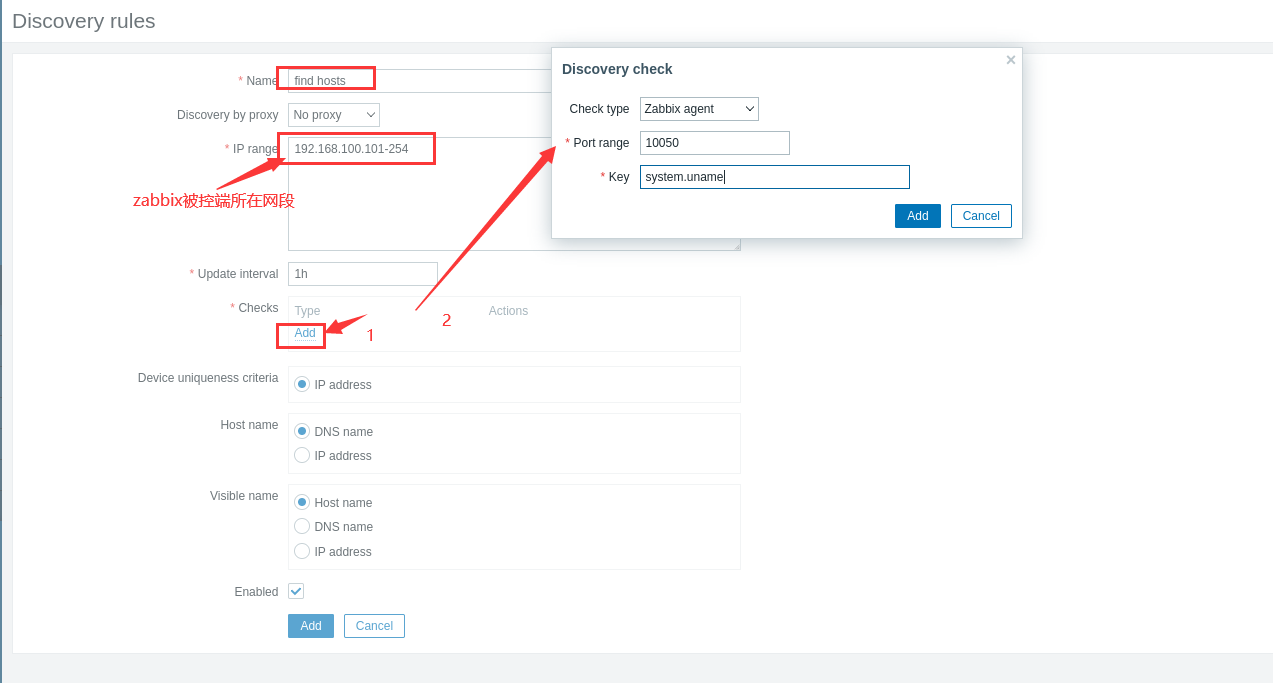

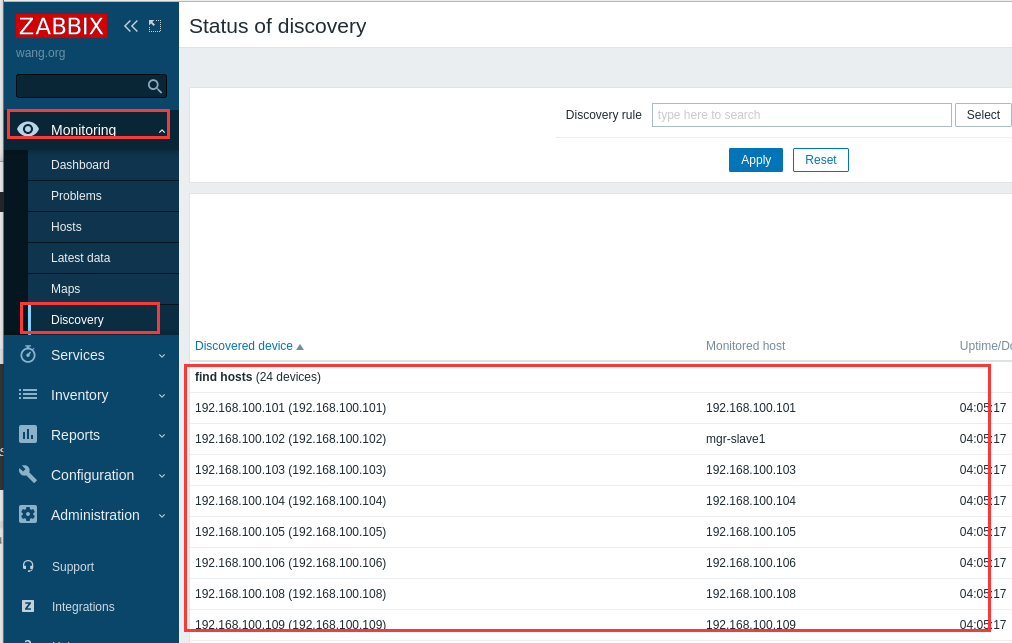

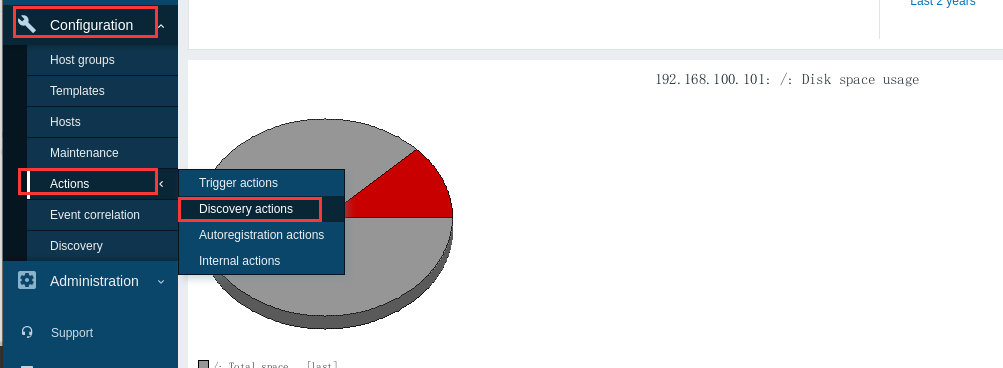

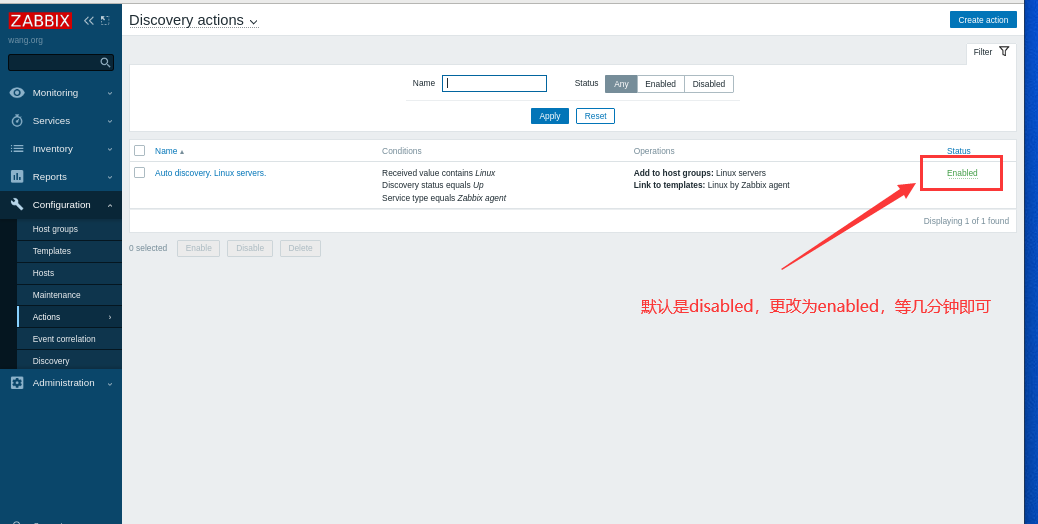

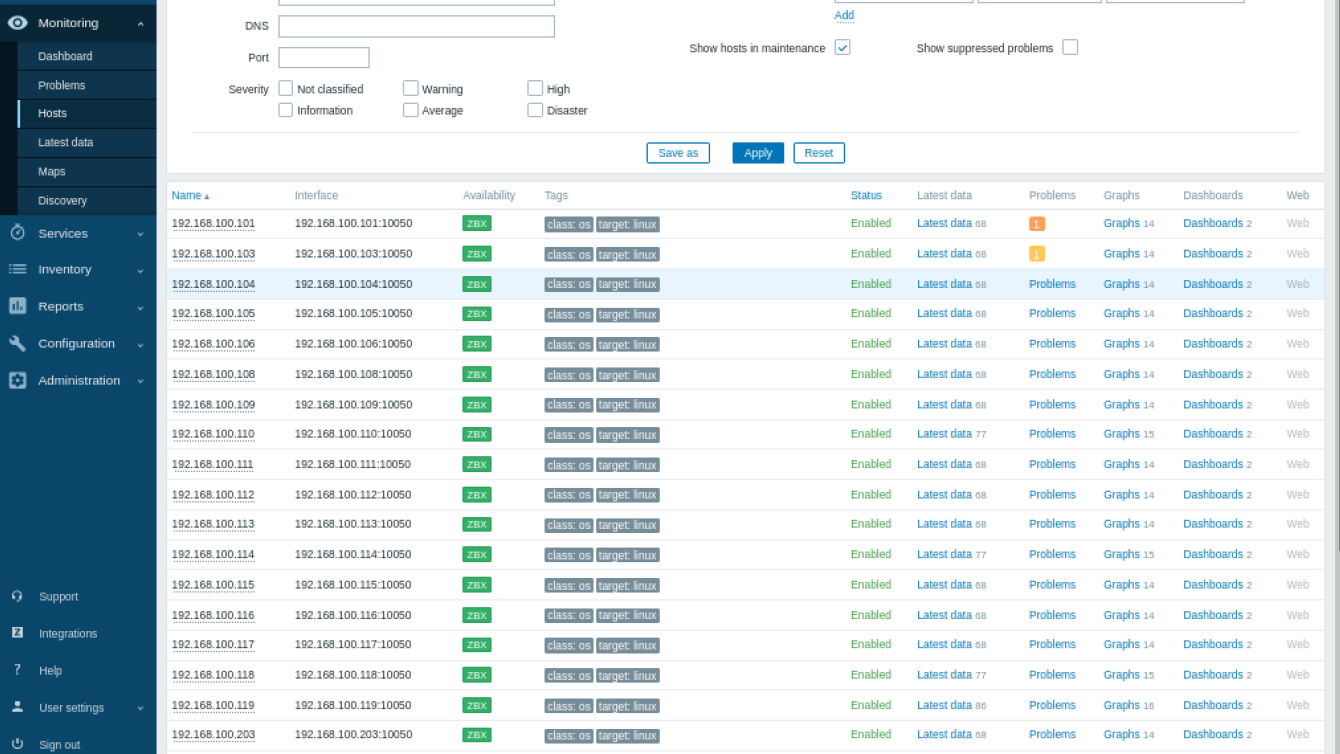

22. Add monitored hosts to Zabbix

22-1. Bulk discovery:

# Configuration on the monitored hosts:

vim /etc/zabbix/zabbix_agent2.conf

Server=192.168.100.206

ServerActive=192.168.100.206

systemctl restart zabbix-agent2.service
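Before relying on discovery, it is worth confirming that both settings really point at the Zabbix server on every host. A minimal check, with `check_agent_conf` as a hypothetical helper name:

```shell
# check_agent_conf FILE SERVER: succeed only if FILE contains the exact
# lines "Server=SERVER" and "ServerActive=SERVER".
check_agent_conf() {
    for ca_key in Server ServerActive; do
        if ! grep -qxF "${ca_key}=$2" "$1"; then
            echo "missing or different: ${ca_key}" >&2
            return 1
        fi
    done
    return 0
}

# Possible usage on a monitored host:
#   check_agent_conf /etc/zabbix/zabbix_agent2.conf 192.168.100.206
```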

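23. Deploy JumpServer

Method 1: after installing this way, users connecting to asset nodes sometimes get a 502 error; restarting Docker fixes it in some cases but not in others.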

#jumpserver(192.168.100.207):

[root@jumpserver ~]#mkdir -p /etc/mysql/mysql.conf.d

[root@jumpserver ~]#mkdir -p /etc/mysql/conf.d

[root@jumpserver ~]#tee /etc/mysql/mysql.conf.d/mysqld.cnf <<EOF

[mysqld]

pid-file= /var/run/mysqld/mysqld.pid

socket= /var/run/mysqld/mysqld.sock

datadir= /var/lib/mysql

symbolic-links=0

character-set-server=utf8

EOF

[root@jumpserver ~]#tee /etc/mysql/conf.d/mysql.cnf <<EOF

[mysql]

default-character-set=utf8

EOF

[root@jumpserver ~]#apt -y install docker.io

[root@jumpserver ~]#systemctl start docker.service

[root@jumpserver ~]#docker run -d -p 3306:3306 --name mysql --restart always \

-e MYSQL_ROOT_PASSWORD=123456 \

-e MYSQL_DATABASE=jumpserver \

-e MYSQL_USER=jumpserver \

-e MYSQL_PASSWORD=123456 \

-v /data/mysql:/var/lib/mysql \

-v /etc/mysql/mysql.conf.d/mysqld.cnf:/etc/mysql/mysql.conf.d/mysqld.cnf \

-v /etc/mysql/conf.d/mysql.cnf:/etc/mysql/conf.d/mysql.cnf mysql:5.7.30

[root@jumpserver ~]#docker run -d -p 6379:6379 --name redis --restart always redis:6.2.7

[root@jumpserver ~]#vim key.sh

#!/bin/bash

#

#********************************************************************

#Author: shuhong

#QQ: 985347841

#Date: 2022-10-03

#FileName: key.sh

#URL: hhhhh

#Description: The test script

#Copyright (C): 2022 All rights reserved

#********************************************************************

if [ ! "$SECRET_KEY" ]; then
    SECRET_KEY=$(cat /dev/urandom | tr -dc A-Za-z0-9 | head -c 50)
    echo "SECRET_KEY=$SECRET_KEY" >> ~/.bashrc
    echo "SECRET_KEY=$SECRET_KEY"
else
    echo "SECRET_KEY=$SECRET_KEY"
fi

if [ ! "$BOOTSTRAP_TOKEN" ]; then
    BOOTSTRAP_TOKEN=$(cat /dev/urandom | tr -dc A-Za-z0-9 | head -c 16)
    echo "BOOTSTRAP_TOKEN=$BOOTSTRAP_TOKEN" >> ~/.bashrc
    echo "BOOTSTRAP_TOKEN=$BOOTSTRAP_TOKEN"
else
    echo "BOOTSTRAP_TOKEN=$BOOTSTRAP_TOKEN"
fi

[root@jumpserver ~]#bash key.sh

SECRET_KEY=bKlLNPvgYV0fBl7FIyZCXJjHv47hdO82ucXOXdsYx2PnrDxRmm

BOOTSTRAP_TOKEN=U10N3nT70jX9zvmA
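Both values come from the same recipe: random bytes filtered down to letters and digits, then cut to a fixed length. A minimal sketch of that recipe as a single reusable function; `gen_token` is a hypothetical name and is not part of key.sh:

```shell
# gen_token LENGTH: print LENGTH random characters drawn from A-Za-z0-9.
# Random bytes are read in 64-byte chunks until enough alphanumeric
# characters have been collected.
gen_token() {
    gt_want=$1
    gt_token=""
    while [ "${#gt_token}" -lt "$gt_want" ]; do
        gt_token=$gt_token$(head -c 64 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')
    done
    printf '%s\n' "$gt_token" | cut -c "1-$gt_want"
}

# Possible usage, matching the lengths used by key.sh:
#   SECRET_KEY=$(gen_token 50)
#   BOOTSTRAP_TOKEN=$(gen_token 16)
```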

[root@jumpserver ~]#docker run --name jms_all -d \

-v /opt/jumpserver/core/data:/opt/jumpserver/data \

-v /opt/jumpserver/koko/data:/opt/koko/data \

-v /opt/jumpserver/lion/data:/opt/lion/data \

-p 80:80 \

-p 2222:2222 \

-e SECRET_KEY=bKlLNPvgYV0fBl7FIyZCXJjHv47hdO82ucXOXdsYx2PnrDxRmm \

-e BOOTSTRAP_TOKEN=U10N3nT70jX9zvmA \

-e LOG_LEVEL=ERROR \

-e DB_HOST=192.168.100.101 \

-e DB_PORT=3306 \

-e DB_USER=jumpserver \

-e DB_PASSWORD=123456 \

-e DB_NAME=jumpserver \

-e REDIS_HOST=192.168.100.207 \

-e REDIS_PORT=6379 \

-e REDIS_PASSWORD='' \

--privileged=true \

--restart always \

jumpserver/jms_all:v2.25.5

# Open 192.168.100.207 in a browser, set a password, then log in and configure.

Method 2: quick installation. This method is recommended.

[root@node122 ~]# curl -sSL https://github.com/jumpserver/jumpserver/releases/download/v2.26.1/quick_start.sh | bash

[root@node122 ~]# ssh-keygen

[root@node122 ~]# for i in {101..120} {202..212}; do sshpass -predhat ssh-copy-id -o StrictHostKeyChecking=no root@192.168.100.$i; done

# Open 192.168.100.122 in a browser; the username and password are admin:admin. Log in to configure.

24. Deploy OpenVPN

[root@openvpn ~]#apt install -y openvpn easy-rsa

[root@openvpn ~]#dpkg -L openvpn

[root@openvpn ~]#dpkg -L easy-rsa

[root@openvpn ~]#cp -r /usr/share/easy-rsa /etc/openvpn/

[root@openvpn ~]#cd /etc/openvpn/easy-rsa

[root@openvpn easy-rsa]#cp vars.example vars

[root@openvpn easy-rsa]#vim vars

.....

set_var EASYRSA_CA_EXPIRE 36500

set_var EASYRSA_CERT_EXPIRE 3650

......

[root@openvpn easy-rsa]# ./easyrsa init-pki # initialize; creates the pki directory and related files in the current directory

[root@openvpn easy-rsa]# ./easyrsa build-ca nopass # create the CA certificate

[root@openvpn easy-rsa]# openssl x509 -in pki/ca.crt -noout -text # view the CA certificate details

[root@openvpn easy-rsa]# ./easyrsa gen-req www.wang.org nopass # create the server certificate request

[root@openvpn easy-rsa]# ./easyrsa sign server www.wang.org # "server" is the certificate type; www.wang.org is the prefix of the request file name

[root@openvpn easy-rsa]# ./easyrsa gen-dh # generate the Diffie-Hellman parameters; this takes a while

[root@openvpn easy-rsa]# vim /etc/openvpn/easy-rsa/vars

set_var EASYRSA_CERT_EXPIRE 365 # adjust the validity period for client certificates as appropriate

[root@openvpn easy-rsa]# ./easyrsa gen-req dayu nopass # generate the client user's certificate request

[root@openvpn easy-rsa]# ./easyrsa sign client dayu # issue the client certificate

[root@openvpn easy-rsa]# cp /etc/openvpn/easy-rsa/pki/ca.crt /etc/openvpn/server/ # copy the CA and server certificate files to the server directory

[root@openvpn easy-rsa]# cp /etc/openvpn/easy-rsa/pki/issued/www.wang.org.crt /etc/openvpn/server/

[root@openvpn easy-rsa]# cp /etc/openvpn/easy-rsa/pki/private/www.wang.org.key /etc/openvpn/server/

[root@openvpn easy-rsa]# cp /etc/openvpn/easy-rsa/pki/dh.pem /etc/openvpn/server/

[root@openvpn easy-rsa]# ll /etc/openvpn/server/

-rw------- 1 root root 1192 Oct 3 17:13 ca.crt

-rw------- 1 root root 424 Oct 3 17:14 dh.pem

-rw------- 1 root root 4622 Oct 3 17:13 www.wang.org.crt

-rw------- 1 root root 1704 Oct 3 17:14 www.wang.org.key

[root@openvpn easy-rsa]# mkdir /etc/openvpn/client/dayu

[root@openvpn easy-rsa]# find /etc/openvpn/easy-rsa/ -name "*dayu*" -exec cp {} /etc/openvpn/client/dayu/ \; # copy the client private key and certificate files to the client directory on the server

[root@openvpn easy-rsa]# cp pki/ca.crt ../client/dayu/

[root@openvpn easy-rsa]#ll /etc/openvpn/client/dayu/

-rw------- 1 root root 1192 Oct 3 17:16 ca.crt

-rw------- 1 root root 4472 Oct 3 17:16 dayu.crt

-rw------- 1 root root 1704 Oct 3 17:16 dayu.key

-rw------- 1 root root 883 Oct 3 17:16 dayu.req

[root@openvpn easy-rsa]# cp /usr/share/doc/openvpn/examples/sample-config-files/server.conf.gz /etc/openvpn/

[root@openvpn easy-rsa]# cd ..

[root@openvpn openvpn]# gzip -d server.conf.gz

[root@openvpn openvpn]# vim server.conf

port 1194

proto tcp

dev tun

ca /etc/openvpn/server/ca.crt

cert /etc/openvpn/server/www.wang.org.crt

key /etc/openvpn/server/www.wang.org.key

dh /etc/openvpn/server/dh.pem

server 10.8.0.0 255.255.255.0

push "route 192.168.100.0 255.255.255.0"

keepalive 10 120

cipher AES-256-CBC

compress lz4-v2

push "compress lz4-v2"

max-clients 1000

tls-auth /etc/openvpn/server/ta.key 0 # 0 on the server, 1 on the client


user openvpn

group openvpn

status /var/log/openvpn/openvpn-status.log

log-append /var/log/openvpn/openvpn.log

verb 3

mute 20

#script-security 3

#auth-user-pass-verify /etc/openvpn/checkpsw.sh via-env

#username-as-common-name

[root@openvpn openvpn]# openvpn --genkey --secret /etc/openvpn/server/ta.key

[root@openvpn openvpn]# useradd -r openvpn

[root@openvpn openvpn]# mkdir /var/log/openvpn

[root@openvpn openvpn]# chown -R openvpn:openvpn /var/log/openvpn/

[root@openvpn openvpn]# vim /usr/lib/systemd/system/openvpn@.service # modify the service file

[Unit]

Description=OpenVPN Robust And Highly Flexible Tunneling Application On %I

After=network.target

[Service]

Type=notify

PrivateTmp=true

ExecStart=/usr/sbin/openvpn --cd /etc/openvpn/ --config %i.conf

[Install]

WantedBy=multi-user.target


[root@openvpn openvpn]# systemctl daemon-reload

[root@openvpn openvpn]# systemctl enable --now openvpn@server

[root@openvpn openvpn]# systemctl status openvpn@server.service

[root@openvpn openvpn]# ss -ntl # check that port 1194 is listening

[root@openvpn openvpn]# ifconfig tun0 # check that the tun0 interface was created

# If clients need to reach other hosts on the OpenVPN server's internal network, run the following on the OpenVPN server:

[root@openvpn openvpn]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@openvpn openvpn]# iptables -t nat -A POSTROUTING -s 10.8.0.0/24 ! -d 10.8.0.0/24 -j MASQUERADE

# Configure the client file:

[root@openvpn easy-rsa]#cp /usr/share/doc/openvpn/examples/sample-config-files/client.conf /etc/openvpn/client/dayu/client.ovpn

[root@openvpn easy-rsa]#vim /etc/openvpn/client/dayu/client.ovpn

client

dev tun

proto tcp

remote 172.20.0.248 1194 # in production, use the FQDN or public IP of the OpenVPN server

resolv-retry infinite

nobind

#persist-key

#persist-tun

ca ca.crt

cert dayu.crt

key dayu.key

remote-cert-tls server

tls-auth ta.key 1

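cipher AES-256-CBC # must match the server; with a mismatched value the two ends cannot communicate

verb 3

compress lz4-v2 # used with OpenVPN 2.4.x and must match the server side; if omitted, comp-lzo compression is used by default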

#auth-user-pass

[root@openvpn easy-rsa]#cp /etc/openvpn/server/ta.key ../client/dayu/

[root@openvpn easy-rsa]#ll ../client/dayu/

total 28

drwxr-xr-x 2 root root 101 Oct 3 19:30 ./

drwxr-xr-x 3 root root 18 Oct 3 17:14 ../

-rw------- 1 root root 1192 Oct 3 17:16 ca.crt

-rw-r--r-- 1 root root 490 Oct 3 19:30 client.ovpn

-rw------- 1 root root 4472 Oct 3 17:16 dayu.crt

-rw------- 1 root root 1704 Oct 3 17:16 dayu.key

-rw------- 1 root root 883 Oct 3 17:16 dayu.req

-rw------- 1 root root 636 Oct 3 19:30 ta.key
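Before the files are packaged, it is worth checking that every file named in client.ovpn is actually present in the directory, since a mismatched certificate or key name only shows up later as a failed connection. A minimal sketch; `check_ovpn_files` is a hypothetical helper and inspects only the `ca`, `cert`, `key` and `tls-auth` directives:

```shell
# check_ovpn_files CONFIG: verify that each file referenced by the ca, cert,
# key and tls-auth directives in CONFIG exists. Relative paths are resolved
# against the directory containing CONFIG.
check_ovpn_files() {
    co_dir=$(dirname "$1")
    co_status=0
    for co_f in $(awk '$1 == "ca" || $1 == "cert" || $1 == "key" || $1 == "tls-auth" { print $2 }' "$1"); do
        case $co_f in
            /*) co_path=$co_f ;;
            *)  co_path=$co_dir/$co_f ;;
        esac
        if [ ! -f "$co_path" ]; then
            echo "missing: $co_f" >&2
            co_status=1
        fi
    done
    return "$co_status"
}

# Possible usage:
#   check_ovpn_files /etc/openvpn/client/dayu/client.ovpn
```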

[root@openvpn easy-rsa]#cd ../client/dayu/

[root@openvpn dayu]#zip dayu.zip * # package all client files

[root@openvpn dayu]#scp dayu.zip 172.20.0.250: # send the archive to the client

25. Configure the firewall on the OpenVPN link

# (192.168.100.121): add an external NIC, 172.20.0.12

[root@node121 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

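[root@node121 ~]# iptables -t nat -A PREROUTING -d 172.20.0.12 -p tcp --dport 1194 -j DNAT --to-destination 192.168.100.208:1194

26. Client connection test

# Windows client: download the OpenVPN client

https://openvpn.net/community-downloads/

Extract the archive sent to the client and copy its contents into the config folder of the OpenVPN client installation directory.

# Linux client: copy the certificate, private key, and configuration files to /etc/openvpn: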

[root@rocky8 openvpn]# unzip dayu.zip

[root@rocky8 openvpn]# openvpn --daemon --cd /etc/openvpn --config client.ovpn --log-append /var/log/openvpn.log

[root@rocky8 openvpn]# cat /var/log/openvpn.log # the log shows that the connection is established

Tue Oct 4 09:17:19 2022 Outgoing Data Channel: Cipher 'AES-256-GCM' initialized with 256 bit key

Tue Oct 4 09:17:19 2022 Incoming Data Channel: Cipher 'AES-256-GCM' initialized with 256 bit key

Tue Oct 4 09:17:19 2022 ROUTE_GATEWAY 10.0.0.2/255.255.255.0 IFACE=eth0 HWADDR=00:0c:29:72:06:54

Tue Oct 4 09:17:19 2022 TUN/TAP device tun0 opened

Tue Oct 4 09:17:19 2022 TUN/TAP TX queue length set to 100

Tue Oct 4 09:17:19 2022 /sbin/ip link set dev tun0 up mtu 1500

Tue Oct 4 09:17:19 2022 /sbin/ip addr add dev tun0 local 10.8.0.6 peer 10.8.0.5

Tue Oct 4 09:17:19 2022 /sbin/ip route add 192.168.100.0/24 via 10.8.0.5

Tue Oct 4 09:17:19 2022 /sbin/ip route add 10.8.0.1/32 via 10.8.0.5
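The last lines of the log show the routes the client installed, including the pushed 192.168.100.0/24 network. A small sketch that extracts just those routes from a client log; `vpn_routes` is a hypothetical helper and assumes the `/sbin/ip route add ... via ...` line format seen above:

```shell
# vpn_routes: read an OpenVPN client log on stdin and print
# "<network> <gateway>" for every route the client added.
vpn_routes() {
    sed -n 's|.*/sbin/ip route add \([^ ]*\) via \([^ ]*\).*|\1 \2|p'
}

# Possible usage on the Linux client:
#   vpn_routes < /var/log/openvpn.log
```

If 192.168.100.0/24 is missing from the output, the `push "route ..."` line on the server side is the first place to look.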

27. Set up internal DNS and NTP

#dns (192.168.100.205):

[root@dns ~]# apt install bind9 bind9-utils chrony

[root@dns ~]# vim /etc/chrony/chrony.conf

server ntp.aliyun.com iburst

allow 192.168.100.0/24

[root@dns ~]# cd /etc/bind/

[root@dns bind]# vim named.conf.options

// dnssec-validation auto;

[root@dns bind]# vim named.conf.default-zones

zone "wang.org" {

type master;

file "/etc/bind/wang.org.local";

};

[root@dns bind]# cp db.local wang.org.local

[root@dns bind]# chown -R root:bind wang.org.local

[root@dns bind]# vim wang.org.local

;

; BIND data file for local loopback interface

;

$TTL 604800

@ IN SOA dayu.wang.org. wang.dayu.org. (

2 ; Serial

604800 ; Refresh

86400 ; Retry

2419200 ; Expire

604800 ) ; Negative Cache TTL

;

@ IN NS dayu.wang.org.

dayu A 192.168.100.205

jpress A 192.168.100.114

phpmyadmin A 192.168.100.110
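Whenever records are added to a zone file later, the SOA serial should be increased so that any secondary server (such as the one configured in section 16) picks up the change. A minimal sketch that bumps the serial in place; `bump_serial` is a hypothetical helper and assumes the serial sits on its own line followed by a `; Serial` comment, as in the zone files in this article:

```shell
# bump_serial ZONEFILE: add 1 to the number on the first line whose comment
# reads "; Serial" (case-insensitive). Other lines are left unchanged.
bump_serial() {
    bs_tmp=$(mktemp)
    awk '
        !bumped && tolower($0) ~ /;[ \t]*serial/ {
            sub(/[0-9]+/, $1 + 1)   # replace the first number on the line
            bumped = 1
        }
        { print }
    ' "$1" > "$bs_tmp" && cat "$bs_tmp" > "$1"
    rm -f "$bs_tmp"
}

# Possible usage after editing the zone:
#   bump_serial /etc/bind/wang.org.local && systemctl reload named
```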

# Reference: https://blog.51cto.com/dayu/5687167

This article is from cnblogs (博客园), author: 大雨转暴雨. When reposting, please credit the original link: https://www.cnblogs.com/wdy001/p/16757203.html