ClickHouse 副本

ClickHouse 副本的目的主要是保障数据的高可用性,即使一台ClickHouse节点宕机,那么也可以从其他服务器获得相同的数据。官网地址如下:

https://clickhouse.com/docs/en/engines/table-engines/mergetree-family/replication/

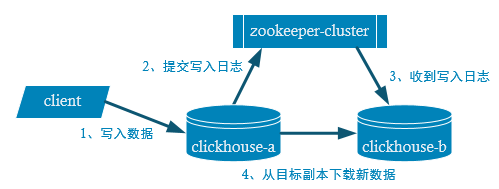

1、副本写入流程

2、配置步骤

启动zk集群

[root@hadoop201 hui]# jps.sh -----------hadoop201--------------- 2885 Jps 2247 QuorumPeerMain -----------hadoop202--------------- 1559 QuorumPeerMain 2124 Jps -----------hadoop203--------------- 1561 QuorumPeerMain 2123 Jps

在hadoop201的/etc/clickhouse-server/config.d目录下创建一个名为metrika.xml的配置文件,内容如下:

[root@hadoop201 hui]# vim /etc/clickhouse-server/config.d/metrika.xml <?xml version="1.0"?> <yandex> <zookeeper-servers> <node index="1"> <host>hadoop201</host> <port>2181</port> </node> <node index="2"> <host>hadoop202</host> <port>2181</port> </node> <node index="3"> <host>hadoop203</host> <port>2181</port> </node> </zookeeper-servers> </yandex>

修改文件所属组

[root@hadoop201 config.d]# chown clickhouse:clickhouse metrika.xml

分发

[root@hadoop201 hui]# sudo /home/hui/bin/sxync.sh /etc/clickhouse-server/config.d/metrika.xml

在 hadoop201的/etc/clickhouse-server/config.xml中增加 大约719行左右

<include_from>/etc/clickhouse-server/config.d/metrika.xml</include_from>

分发 config.xml

[root@hadoop201 hui]# /home/hui/bin/sxync.sh /etc/clickhouse-server/config.xml

分别在hadoop201 hadoop202和hadoop203 上启动ClickHouse服务

[hui@hadoop201 ~]$ chkall.sh sudo systemctl start clickhouse-server

在hadoop201和hadoop202上分别建表,副本只能同步数据,不能同步表结构,所以我们需要在每台机器上自己手动建表

hadoop201 :) ccreate table t_order_rep ( :-] id UInt32, :-] sku_id String, :-] total_amount Decimal(16,2), :-] create_time Datetime :-] ) engine =ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep','rep_201') :-] partition by toYYYYMMDD(create_time) :-] primary key (id) :-] order by (id,sku_id); CREATE TABLE t_order_rep ( `id` UInt32, `sku_id` String, `total_amount` Decimal(16, 2), `create_time` Datetime ) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep', 'rep_202') PARTITION BY toYYYYMMDD(create_time) PRIMARY KEY id ORDER BY (id, sku_id) Ok. 0 rows in set. Elapsed: 0.173 sec.

hadoop202 建表

hadoop202 :) ccreate table t_order_rep ( :-] id UInt32, :-] sku_id String, :-] total_amount Decimal(16,2), :-] create_time Datetime :-] ) engine =ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep','rep_202') :-] partition by toYYYYMMDD(create_time) :-] primary key (id) :-] order by (id,sku_id); CREATE TABLE t_order_rep ( `id` UInt32, `sku_id` String, `total_amount` Decimal(16, 2), `create_time` Datetime ) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep', 'rep_203') PARTITION BY toYYYYMMDD(create_time) PRIMARY KEY id ORDER BY (id, sku_id)

ReplicatedMergeTree 中,第一个参数是分片的zk_path一般按照: /clickhouse/table/{shard}/{table_name} 的格式写,如果只有一个分片就写01即可。第二个参数是副本名称,相同的分片副本名称不能相同

hadoop201 :) ((102,'sku_002',2000.00,'2022-05-18 13:00:00'), :-] ((102,'sku_002',12000.00,'2022-05-18 13:00:00'), :-] ((102,'sku_002',600.00,'2022-05-19 12:00:00'); Syntax error: failed at position 2: (102,'sku_002',2000.00,'2022-05-18 13:00:00'), (102,'sku_002',12000.00,'2022-05-18 13:00:00'), (102,'sku_002',600.00,'2022-05-19 12:00:00'); Expected one of: SELECT subquery, SELECT query, SELECT, list of elements, WITH, SELECT query, possibly with UNION, SELECT query, subquery, possibly with UNION hadoop201 :) hadoop201 :) iinsert into t_order_rep values :-] ((101,'sku_001',1000.00,'2022-05-18 12:00:00'), :-] ((102,'sku_002',2000.00,'2022-05-18 12:00:00'), :-] ((103,'sku_004',2500.00,'2022-05-18 12:00:00'), :-] ((104,'sku_002',2000.00,'2022-05-18 12:00:00'), :-] ((105,'sku_003',600.00,'2022-05-19 12:00:00');(105,'sku_003',600.00,'2022-05-19 12:00:00'); INSERT INTO t_order_rep VALUES Ok. 5 rows in set. Elapsed: 0.289 sec.

在hadoop202上执行select,可以查询出结果,说明副本配置正确

hadoop202 :) select * from t_order_rep; SELECT * FROM t_order_rep ┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐ │ 105 │ sku_003 │ 600.00 │ 2022-05-19 12:00:00 │ └─────┴─────────┴──────────────┴─────────────────────┘ ┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐ │ 101 │ sku_001 │ 1000.00 │ 2022-05-18 12:00:00 │ │ 102 │ sku_002 │ 2000.00 │ 2022-05-18 12:00:00 │ │ 103 │ sku_004 │ 2500.00 │ 2022-05-18 12:00:00 │ │ 104 │ sku_002 │ 2000.00 │ 2022-05-18 12:00:00 │ └─────┴─────────┴──────────────┴─────────────────────┘ 5 rows in set. Elapsed: 0.010 sec.

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· 一文读懂知识蒸馏

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下